An Intelligent Self-Service Vending System for Smart Retail

Abstract

1. Introduction

- We propose an intelligent self-service vending system that detects multiple unlabeled products in real time. The system integrates the weighing, identification, and settlement of non-barcoded items.

- We design a multi-device management platform based on IoT technology that exchanges information among embedded devices, WeChat mini programs, Alipay, and database platforms.

- We propose a flexible weighing structure. The touch screen and camera are rotatable so that the system can recognize fruits from multiple angles and realize ACO for people of different heights.

- We apply target detection to the sale of unlabeled products, and we produce and share 5637 labeled images of 16 unlabeled products.

- We carry out a comprehensive application-scenario analysis showing that the system copes effectively with a variety of sales situations.
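
The integration of weighing, identification, and settlement named in the first contribution can be illustrated with a minimal sketch. It is not the authors' implementation: the `Detection` type, the `settle` function, the confidence threshold, and the unit prices are all hypothetical, and the sketch handles only the simplified case in which a single product variety sits on the pan.

```python
from dataclasses import dataclass

# Hypothetical unit prices in yuan per kilogram (illustrative, not from the paper).
UNIT_PRICE_PER_KG = {"apple": 12.0, "banana": 6.5}


@dataclass
class Detection:
    """One detector output: a class label and its confidence score."""
    label: str
    confidence: float


def settle(detections, weight_kg, min_confidence=0.5):
    """Combine detector output and measured weight into an amount due.

    Returns (label, amount). Raises ValueError when the confident detections
    do not agree on exactly one variety, or when the weight is not positive.
    """
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    # Keep only labels the detector is reasonably sure about.
    labels = {d.label for d in detections if d.confidence >= min_confidence}
    if len(labels) != 1:
        raise ValueError(f"expected one variety on the pan, got {sorted(labels)}")
    label = labels.pop()
    if label not in UNIT_PRICE_PER_KG:
        raise ValueError(f"no price configured for {label!r}")
    # Amount due = unit price x weight, rounded to two decimals.
    amount = round(UNIT_PRICE_PER_KG[label] * weight_kg, 2)
    return label, amount


if __name__ == "__main__":
    seen = [Detection("apple", 0.93), Detection("apple", 0.81)]
    print(settle(seen, 1.5))
```

Rejecting ambiguous detections rather than guessing is a design choice of this sketch; a multi-variety scene would need per-variety weighing or a different pricing rule.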

2. Related Work

2.1. Image Processing

2.2. Target Detection

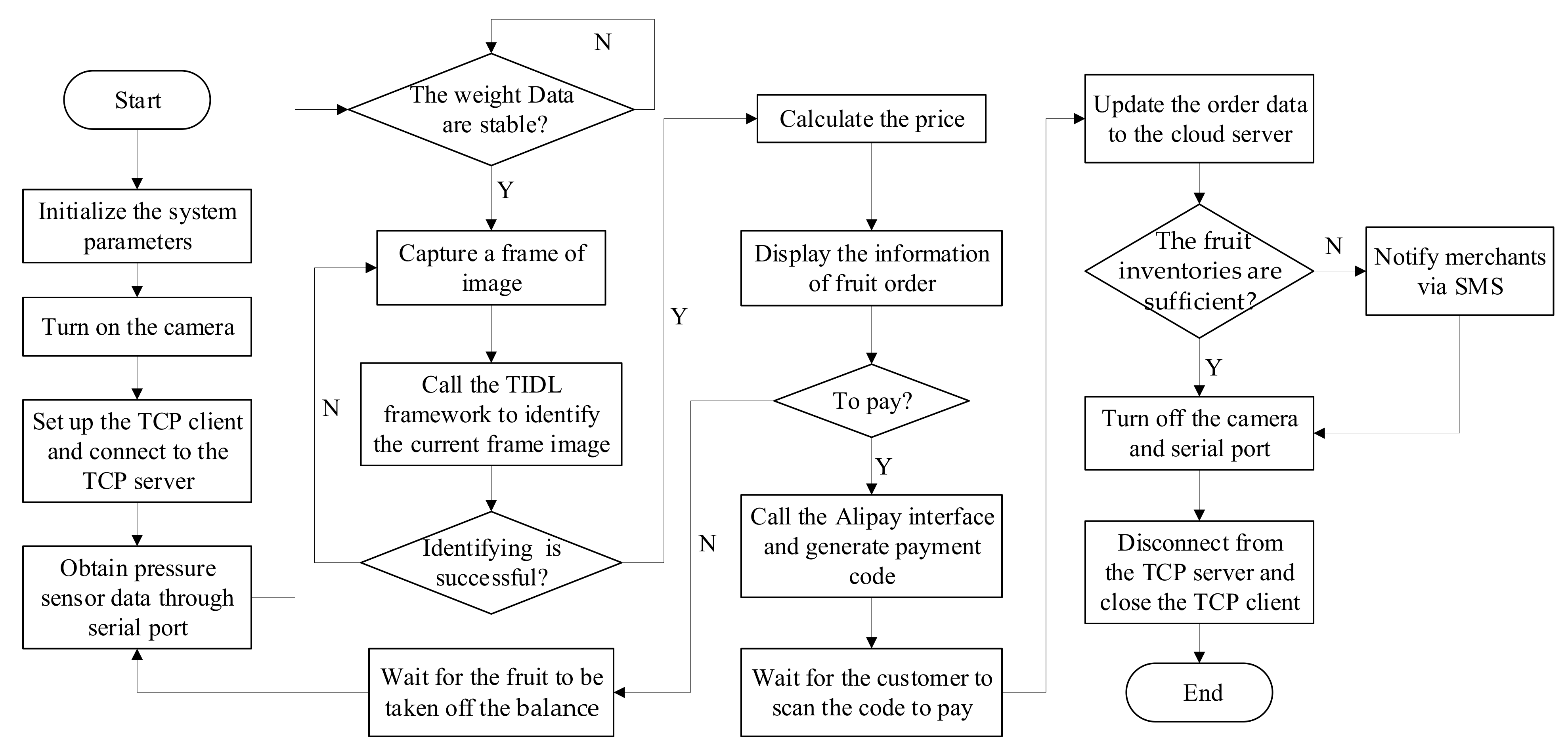

3. System Architecture

3.1. System Overview

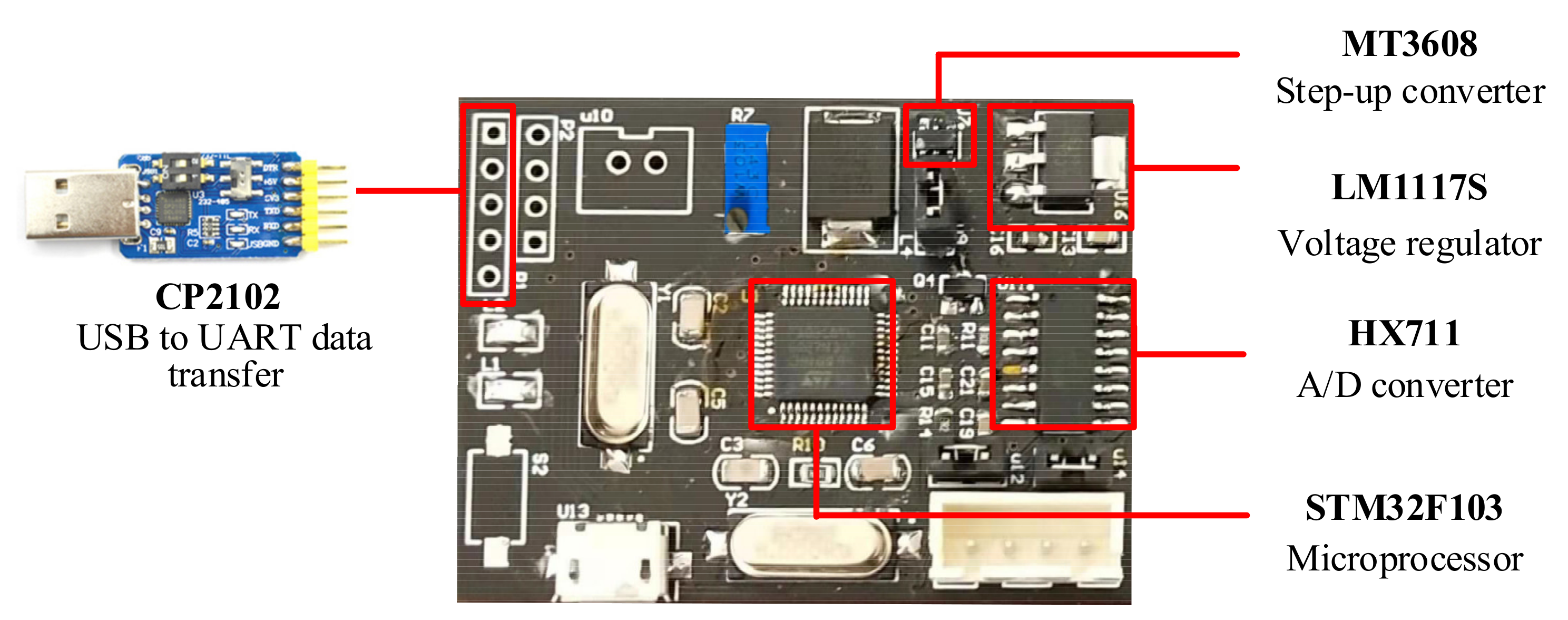

3.2. Main Control Part

3.3. Weight Data Acquisition Part

3.4. Vision-Based Object Identification

3.4.1. Data Collection

3.4.2. Data Labeling

3.4.3. Object Detection

3.4.4. Texas Instruments Deep Learning

3.5. Mechanical Structure

4. IoT Applications

4.1. Multi-Device Data Management Platform

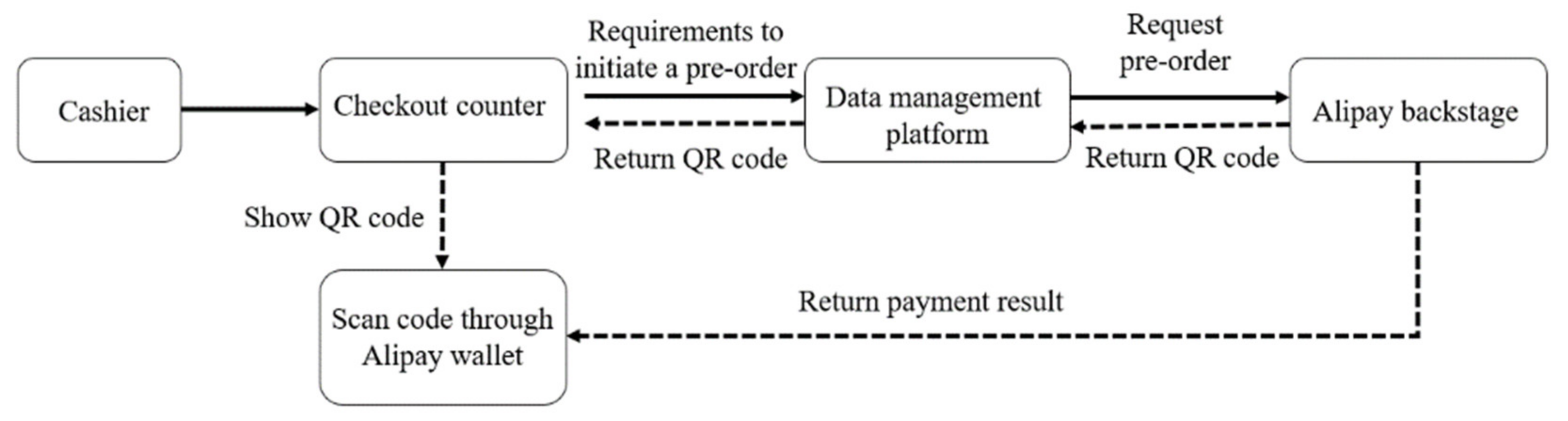

4.2. Payment Interface

4.3. WeChat Mini Program

5. Experiments and Results

5.1. Measurement Experiments

5.2. Image Recognition Experiments

5.2.1. Implementation Details and Parameter Settings

5.2.2. Accuracy Verification of Single Variety Products

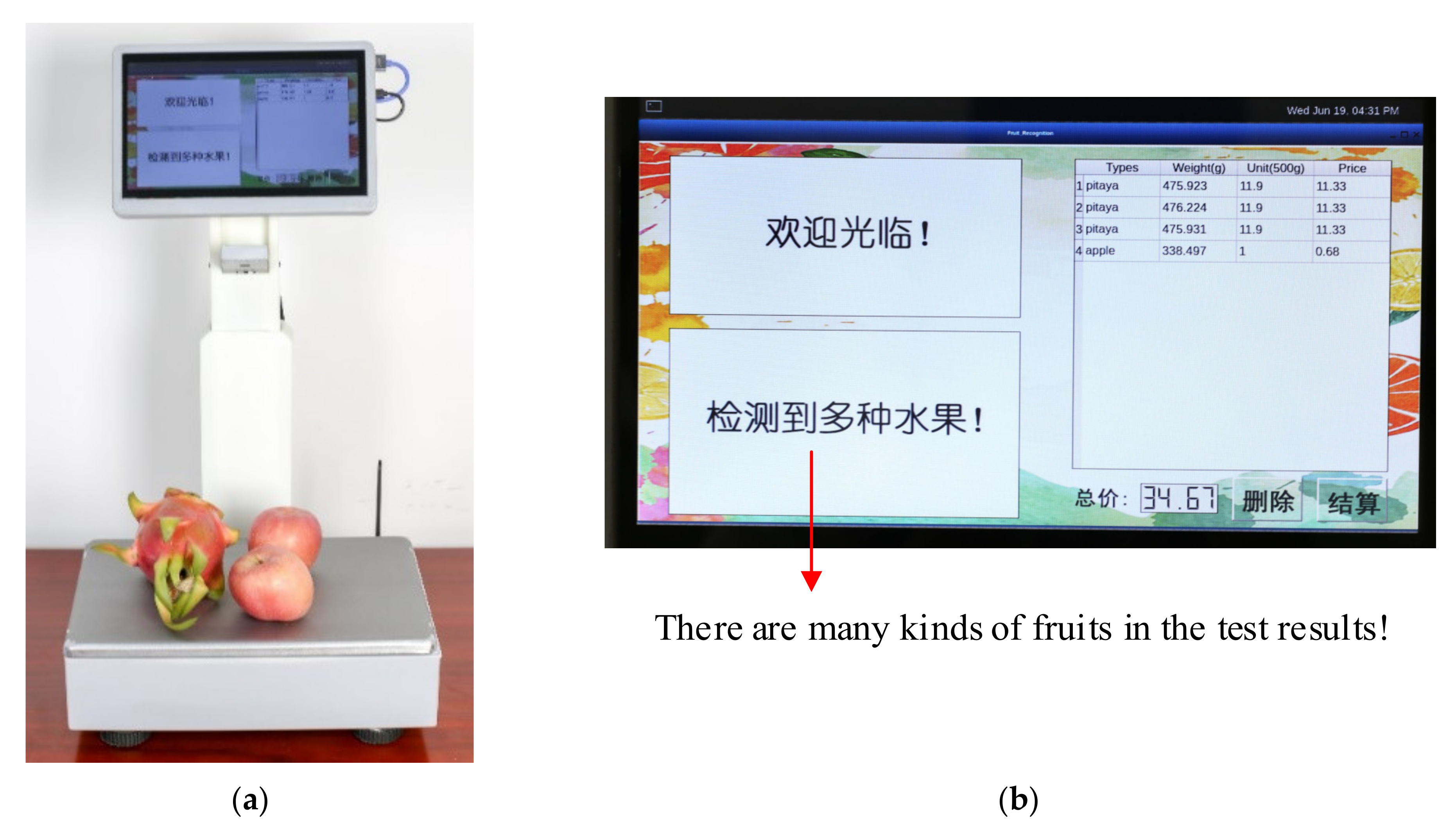

5.2.3. Running State Verification of Multi-Variety Product

5.3. Real-Sales Experiments

5.3.1. Assumptions

- The detection model has already been trained on the products to be detected, so that their features have been extracted;

- The total weight of a single test product is within the range of the load cell;

- Alipay’s merchant account is an experimental account.

5.3.2. Experiment Settings

5.3.3. Experiment Results

6. Conclusions

7. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Composite Error (%FS) | 0.05 | Input Resistance (Ω) | 1000 ± 50 |
| FSO (mV/V) | 1.0 ± 0.1 | Output Resistance (Ω) | 1000 ± 50 |
| Non-Linearity (%FS) | 0.05 | Insulation Resistance (Ω) | ≥2000 (100 VDC) |
| Repeatability (%FS) | 0.05 | Operating Temp Range (°C) | −10~+50 |
| Hysteresis (%FS) | 0.05 | Safe Overload (%FS) | 150 |
| Creep (%FS/3 min) | 0.05 | Excitation (V) | 3~12 DC |
| Zero Balance (%FS) | ±0.1 | Maximum excitation (V) | 15 |
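
To show how the FSO, excitation, and composite-error rows of the load cell table relate to a weight reading, the following sketch converts a bridge output voltage into kilograms. It assumes an ideal linear response with the zero offset already removed. The 5 V excitation and the 10 kg capacity used in the example are assumptions chosen for illustration, and the function names are hypothetical.

```python
def full_scale_output_mv(fso_mv_per_v, excitation_v):
    """Bridge output at full load: sensitivity (mV/V) times excitation (V)."""
    return fso_mv_per_v * excitation_v


def bridge_mv_to_kg(signal_mv, excitation_v, capacity_kg, fso_mv_per_v=1.0):
    """Convert a bridge signal (mV) to weight, assuming a linear load cell."""
    # The table gives 3~12 V DC as the recommended excitation range.
    if not 3.0 <= excitation_v <= 12.0:
        raise ValueError("excitation outside the 3-12 V DC range")
    span_mv = full_scale_output_mv(fso_mv_per_v, excitation_v)
    return signal_mv / span_mv * capacity_kg


def composite_error_kg(capacity_kg, composite_error_pct_fs=0.05):
    """Worst-case composite error expressed in kilograms (%FS of capacity)."""
    return capacity_kg * composite_error_pct_fs / 100.0


if __name__ == "__main__":
    excitation = 5.0   # assumed excitation voltage, V
    capacity = 10.0    # assumed load cell capacity, kg
    print(full_scale_output_mv(1.0, excitation))        # full-scale signal, mV
    print(bridge_mv_to_kg(2.5, excitation, capacity))   # half-scale signal, kg
    print(composite_error_kg(capacity))                 # error bound, kg
```

Under these assumptions the full-scale signal is only a few millivolts, which is why a load cell output is normally amplified before analog-to-digital conversion.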

| Range of Height | Maximum Width | Size of Screen | Screen Resolution | Size of Pan |
|---|---|---|---|---|
| 44–54 mm | 310 mm | 250 × 170 mm | 1024 × 600 pixels | 340 × 234 mm |

| Parameter | Value |
|---|---|
| Weighing Capacity (kg) | 10 |
| Readability (mg) | 1 |
| Repeatability (≤mg) | ±2 |
| Linearity (≤mg) | ±0.1 |
| Pan Size (mm) | 280 × 200 |

| Name | Accuracy (%) | Name | Accuracy (%) |
|---|---|---|---|
| Watermelon | 89.52 | Pitaya | 91.23 |
| Muskmelon | 87.41 | Hami melon | 95.91 |
| Coconut | 92.92 | Apple | 89.36 |
| Pineapple | 95.87 | Orange | 96.19 |
| Mango | 95.78 | Lemon | 96.27 |
| Kiwi | 93.35 | Carrot | 96.34 |
| Avocado | 91.74 | Potato | 95.12 |
| Banana | 96.35 | Tomato | 96.38 |
| Average Accuracy | 93.73 | | |
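
The average in the last row of the accuracy table can be reproduced from the sixteen per-product values. The short sketch below does this, assuming the reported figure is the unweighted mean over the 16 products; the variable and function names are illustrative.

```python
# Per-product recognition accuracy (%) copied from the table above.
ACCURACY = {
    "Watermelon": 89.52, "Muskmelon": 87.41, "Coconut": 92.92,
    "Pineapple": 95.87, "Mango": 95.78, "Kiwi": 93.35,
    "Avocado": 91.74, "Banana": 96.35, "Pitaya": 91.23,
    "Hami melon": 95.91, "Apple": 89.36, "Orange": 96.19,
    "Lemon": 96.27, "Carrot": 96.34, "Potato": 95.12, "Tomato": 96.38,
}


def mean_accuracy(accuracy):
    """Unweighted mean over all product classes."""
    return sum(accuracy.values()) / len(accuracy)


if __name__ == "__main__":
    print(f"average: {mean_accuracy(ACCURACY):.2f} %")   # average: 93.73 %
    print("lowest: ", min(ACCURACY, key=ACCURACY.get))   # Muskmelon
    print("highest:", max(ACCURACY, key=ACCURACY.get))   # Tomato
```

The unweighted mean matches the reported 93.73%, which suggests that each product counts equally regardless of how many test images it has.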

| Category | Self-service Vending System | Manual Service System |
|---|---|---|
| Average Weighing and Recognition Time (s) | 3 | 2 |
| Average Alipay Callback Time (s) | 1 | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xia, K.; Fan, H.; Huang, J.; Wang, H.; Ren, J.; Jian, Q.; Wei, D. An Intelligent Self-Service Vending System for Smart Retail. Sensors 2021, 21, 3560. https://doi.org/10.3390/s21103560
