AirSign: Smartphone Authentication by Signing in the Air

Abstract

1. Introduction

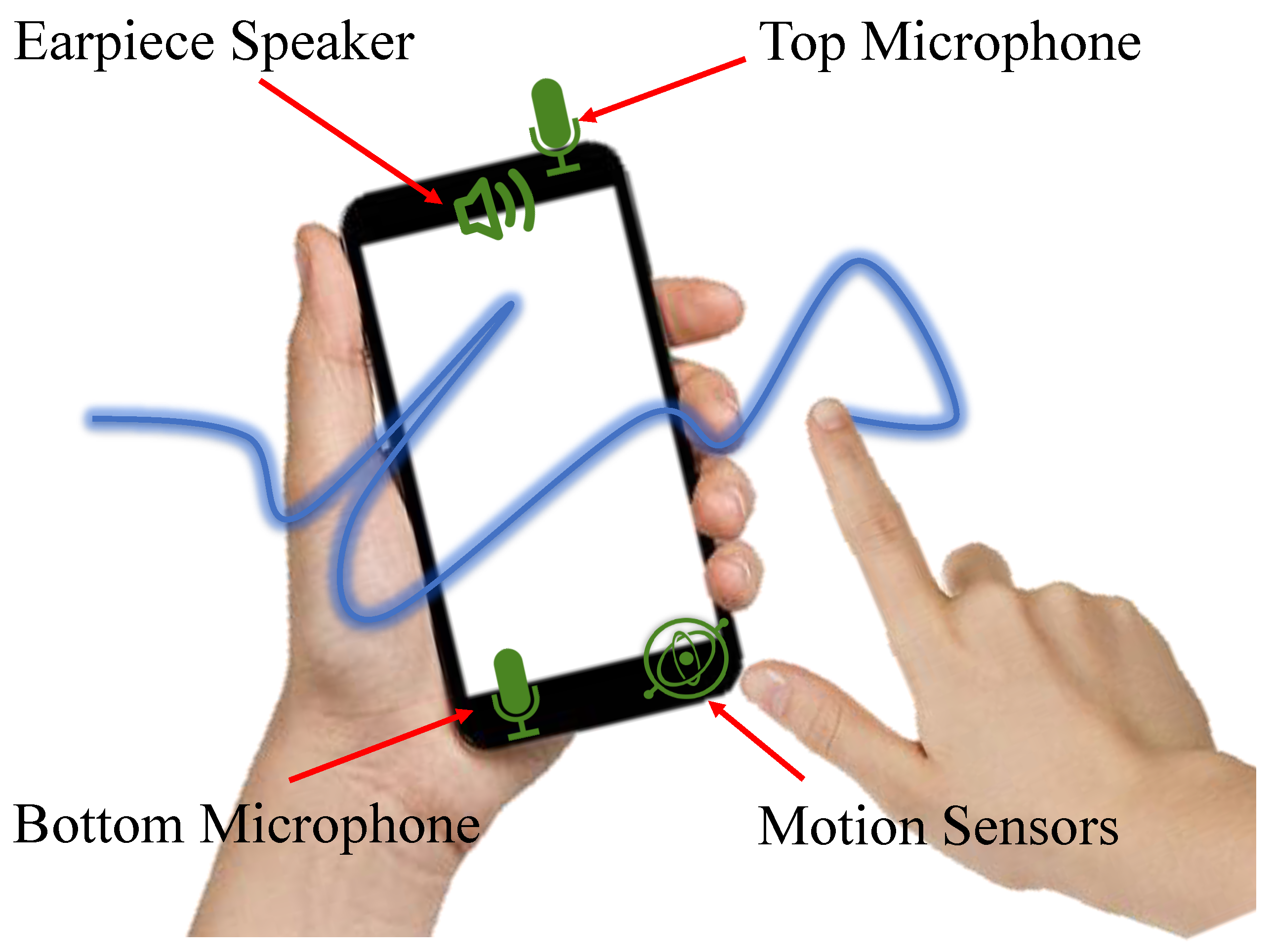

- We designed a smartphone signature authentication system that lets users sign in the air, which makes signature authentication more convenient and flexible.

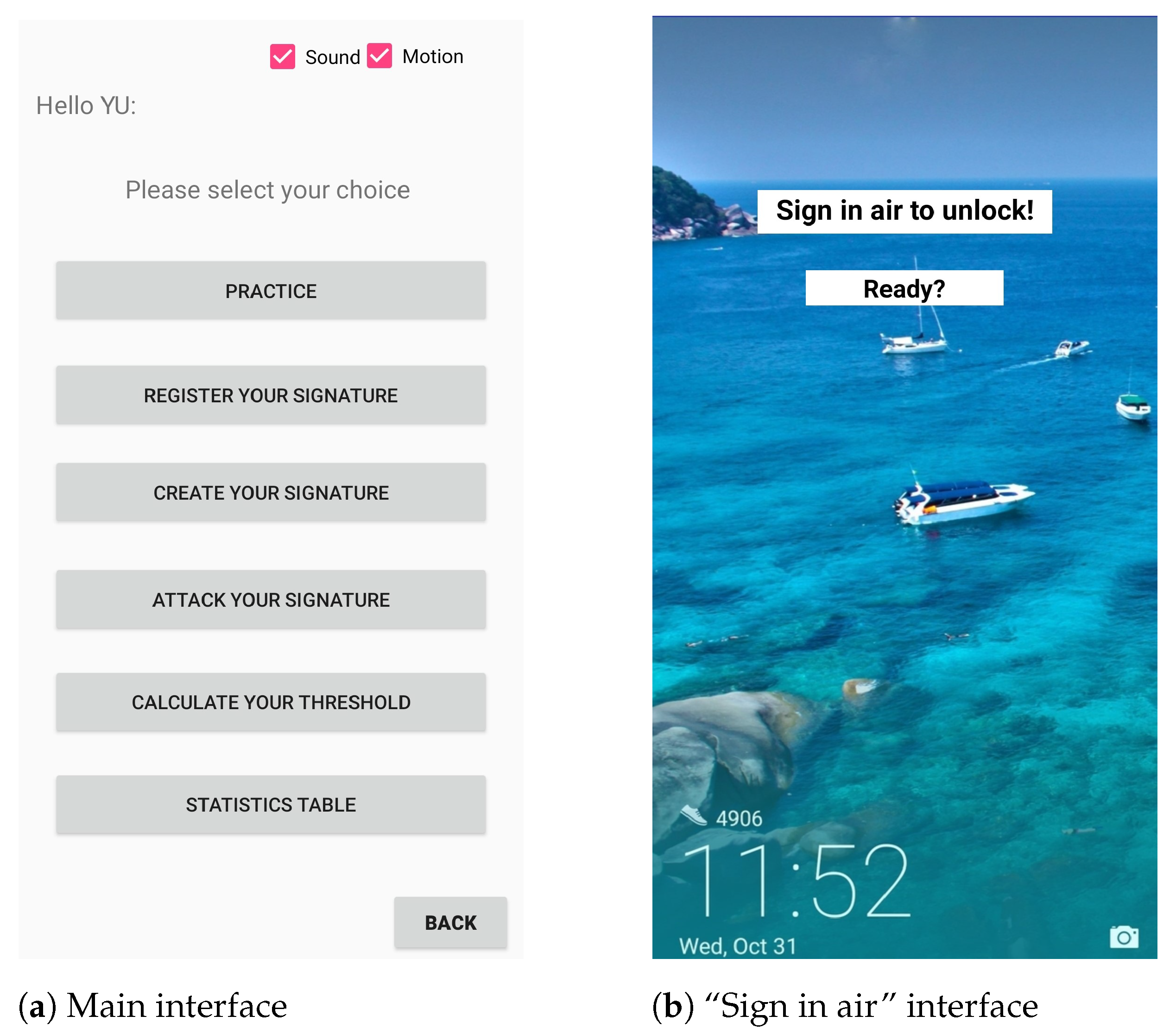

- For the first time, we leveraged both the acoustic sensors and the motion sensors on a smartphone to detect users’ hand geometries, trace their signing motions in the air, and extract the features needed to verify their identities.

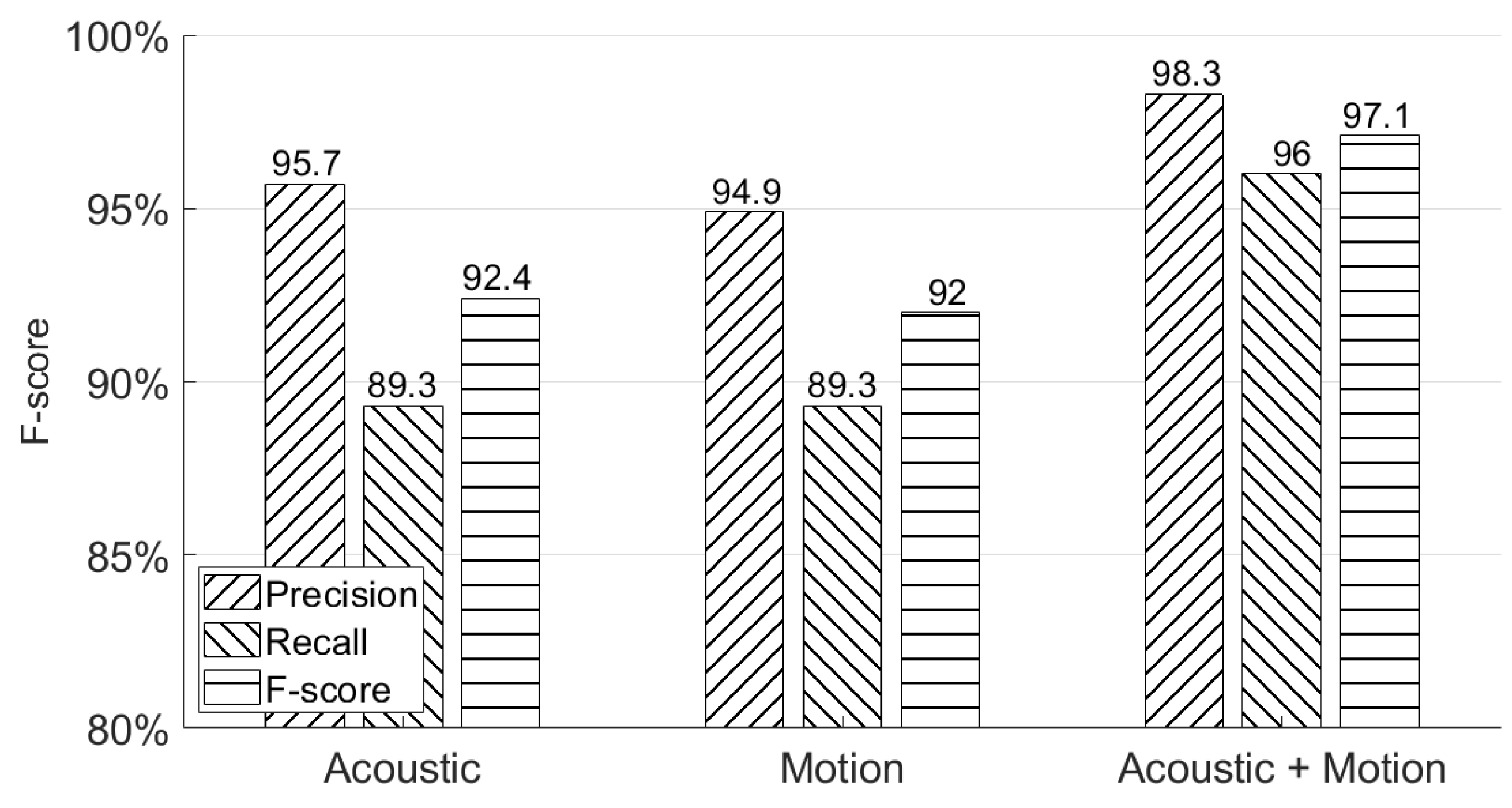

- We implemented a smartphone prototype application and collected user surveys to evaluate our AirSign system. The evaluation demonstrated that our system can authenticate users with an F-score of 97.1%.

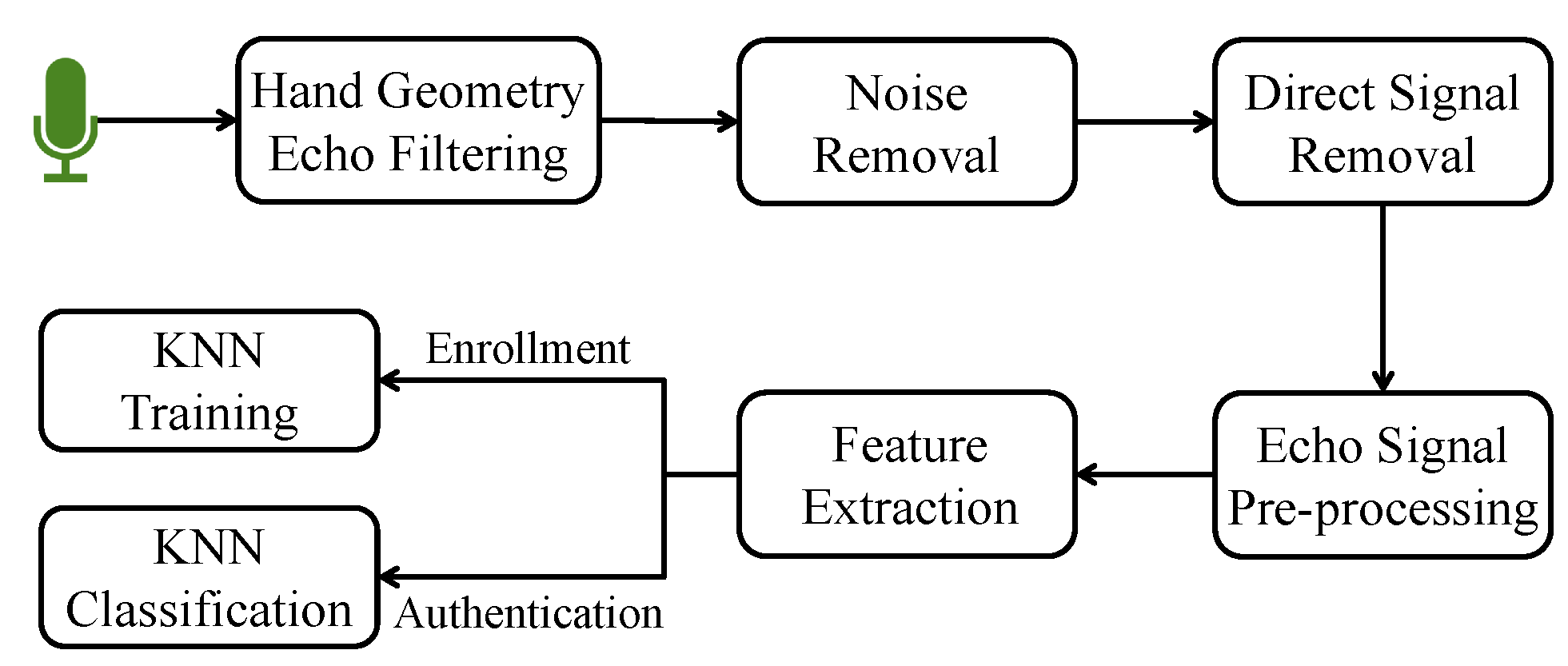

2. Overview

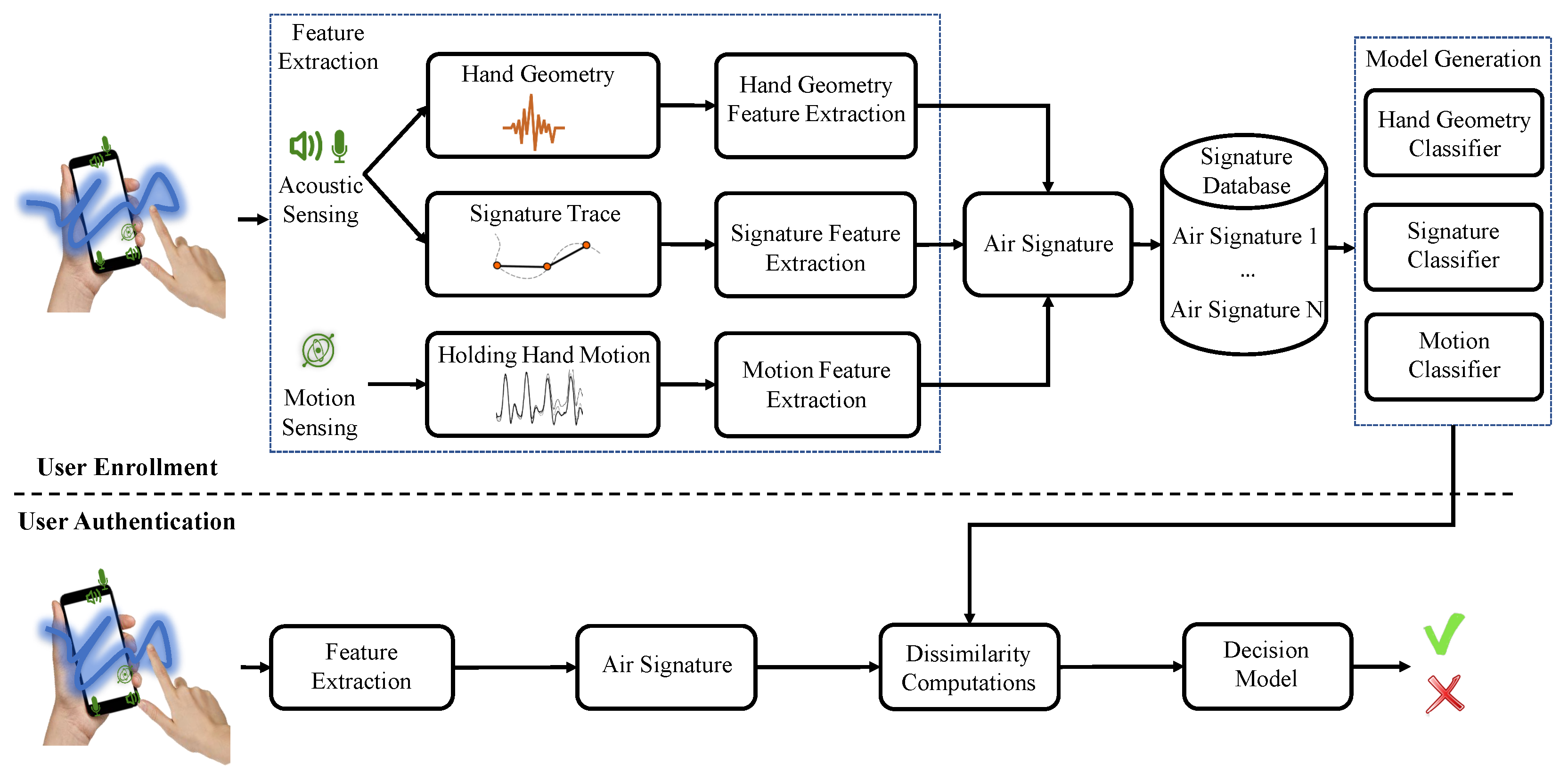

2.1. User Enrollment Phase

2.1.1. Acoustic Sensing

2.1.2. Motion Sensing

2.2. User Authentication Phase

3. System Design

3.1. Sound Signal Design

3.1.1. Selection of Acoustic Sensors

3.1.2. Overview of Sound Signal Design

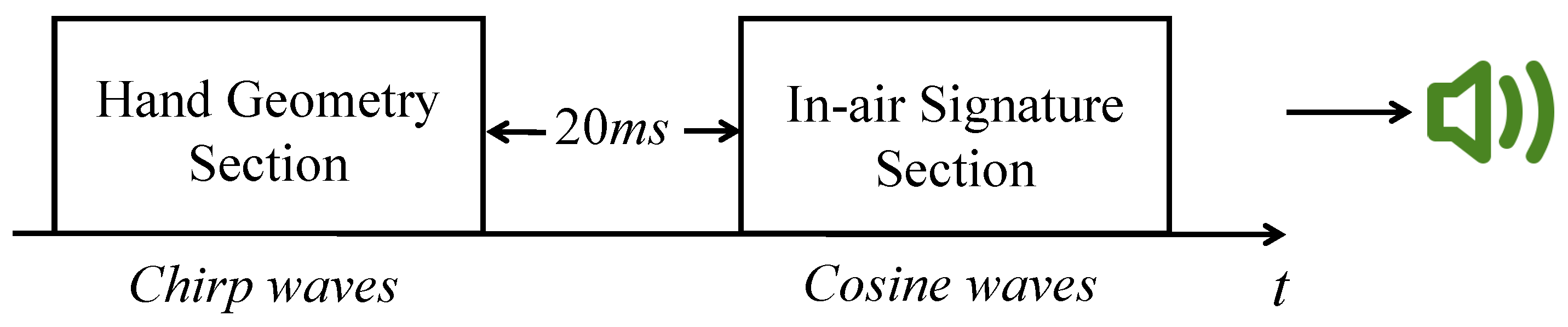

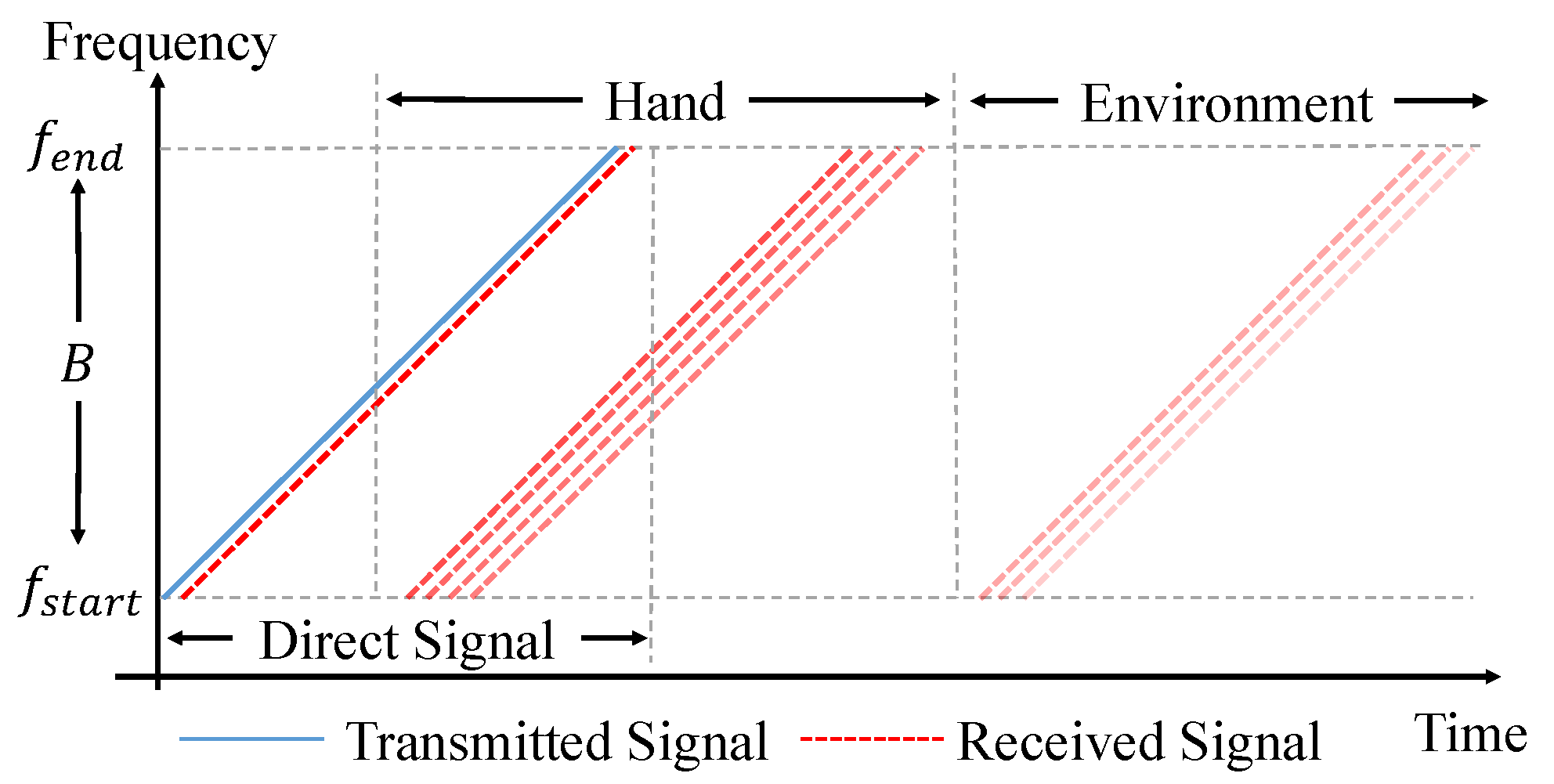

- To avoid disturbing other people, the acoustic signals should be inaudible. According to [13], sound above 17 kHz is difficult to hear. On the other hand, the highest sampling rate on a typical smartphone is 48 kHz, so by the Nyquist criterion the highest frequency of the sound wave must not exceed 48 kHz / 2 = 24 kHz to avoid aliasing. After extensive experiments on different frequency intervals, we chose 20–23 kHz as the frequency interval for both the hand geometry phase and the signature phase.

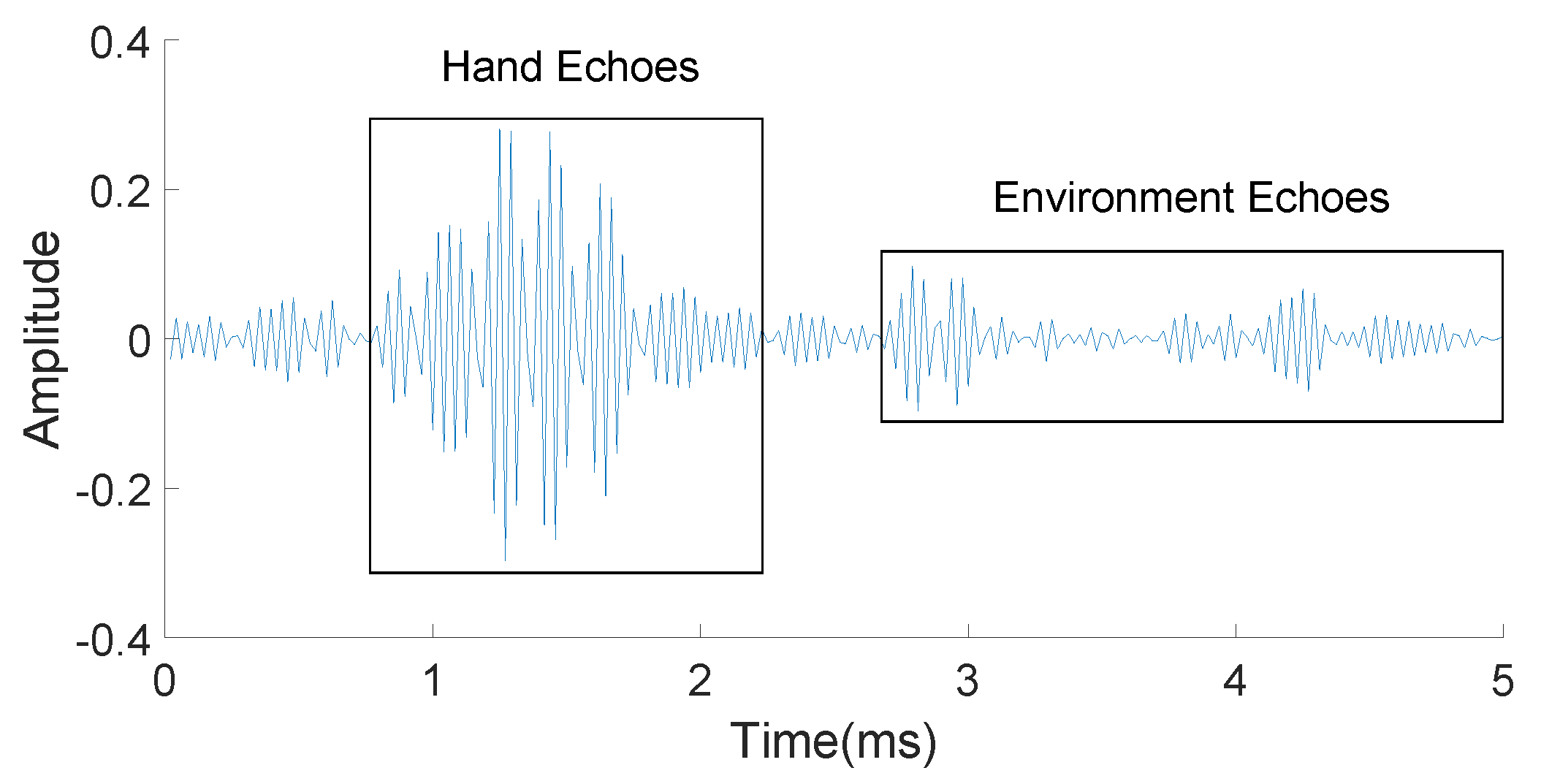

- To ensure that echoes from the hand geometry section do not overlap with the transmitted signal of the in-air signature section, we need a gap between the two sections. After testing different distance ranges for the above signal, we found that echoes are very weak when the reflecting object is more than 3 m from the smartphone. The maximum round-trip delay is therefore (2 × 3 m) / (343 m/s) ≈ 17.5 ms. In AirSign, we add a small buffer and set the time interval between the hand geometry section and the in-air signature section to 20 ms. An illustrative example of the acoustic signals is shown in Figure 3.

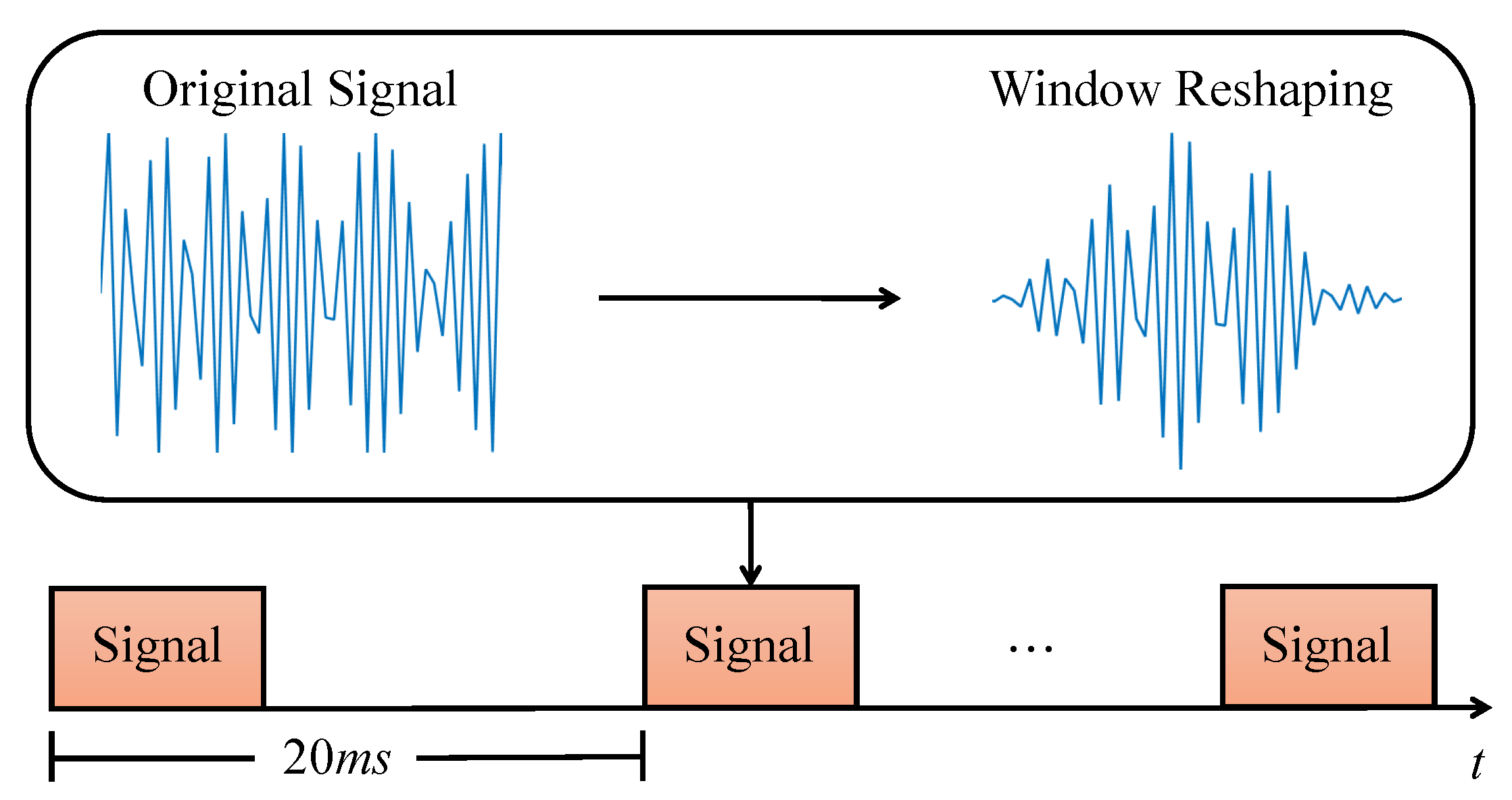

- To increase the signal-to-noise ratio (SNR) and prevent frequency leakage in both the hand geometry and in-air signature sections, a Hanning window [14] is applied to shape all emitted sound waves.
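
As a minimal sketch of the three constraints above, the following Python snippet checks the band against the Nyquist limit, computes the round-trip echo delay, and assembles two Hanning-windowed sections separated by a 20 ms guard interval. The linear chirp waveform, the section durations, and all function names are assumptions made for illustration; the constraints above fix only the frequency band, the sampling rate, the gap, and the window.

```python
import numpy as np

FS = 48_000                    # sampling rate in Hz (from the text)
F_LO, F_HI = 20_000, 23_000    # inaudible band in Hz (from the text)
SPEED_OF_SOUND = 343.0         # m/s (from the text)
MAX_RANGE_M = 3.0              # echoes from farther away are treated as negligible


def max_echo_delay(max_range_m=MAX_RANGE_M, c=SPEED_OF_SOUND):
    """Round-trip delay in seconds of an echo from the farthest reflector."""
    return 2.0 * max_range_m / c


def windowed_chirp(duration_s, fs=FS, f_lo=F_LO, f_hi=F_HI):
    """Linear chirp from f_lo to f_hi, shaped by a Hanning window.

    The chirp waveform is an assumption; any band-limited signal could be
    substituted here.
    """
    if f_hi > fs / 2:
        raise ValueError("f_hi exceeds the Nyquist frequency fs / 2")
    n = int(round(duration_s * fs))
    t = np.arange(n) / fs
    sweep_rate = (f_hi - f_lo) / duration_s              # Hz per second
    phase = 2.0 * np.pi * (f_lo * t + 0.5 * sweep_rate * t**2)
    return np.hanning(n) * np.sin(phase)


def two_section_signal(section1, section2, gap_s=0.020, fs=FS):
    """Concatenate two sections with a silent guard interval in between."""
    gap = np.zeros(int(round(gap_s * fs)))
    return np.concatenate([section1, gap, section2])
```

With the default values, `max_echo_delay()` evaluates to about 17.5 ms, which stays below the 20 ms gap used between the two sections.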

3.1.3. Sound Signal for Hand Geometry

3.1.4. Sound Signal for Signature

3.2. Feature Extraction

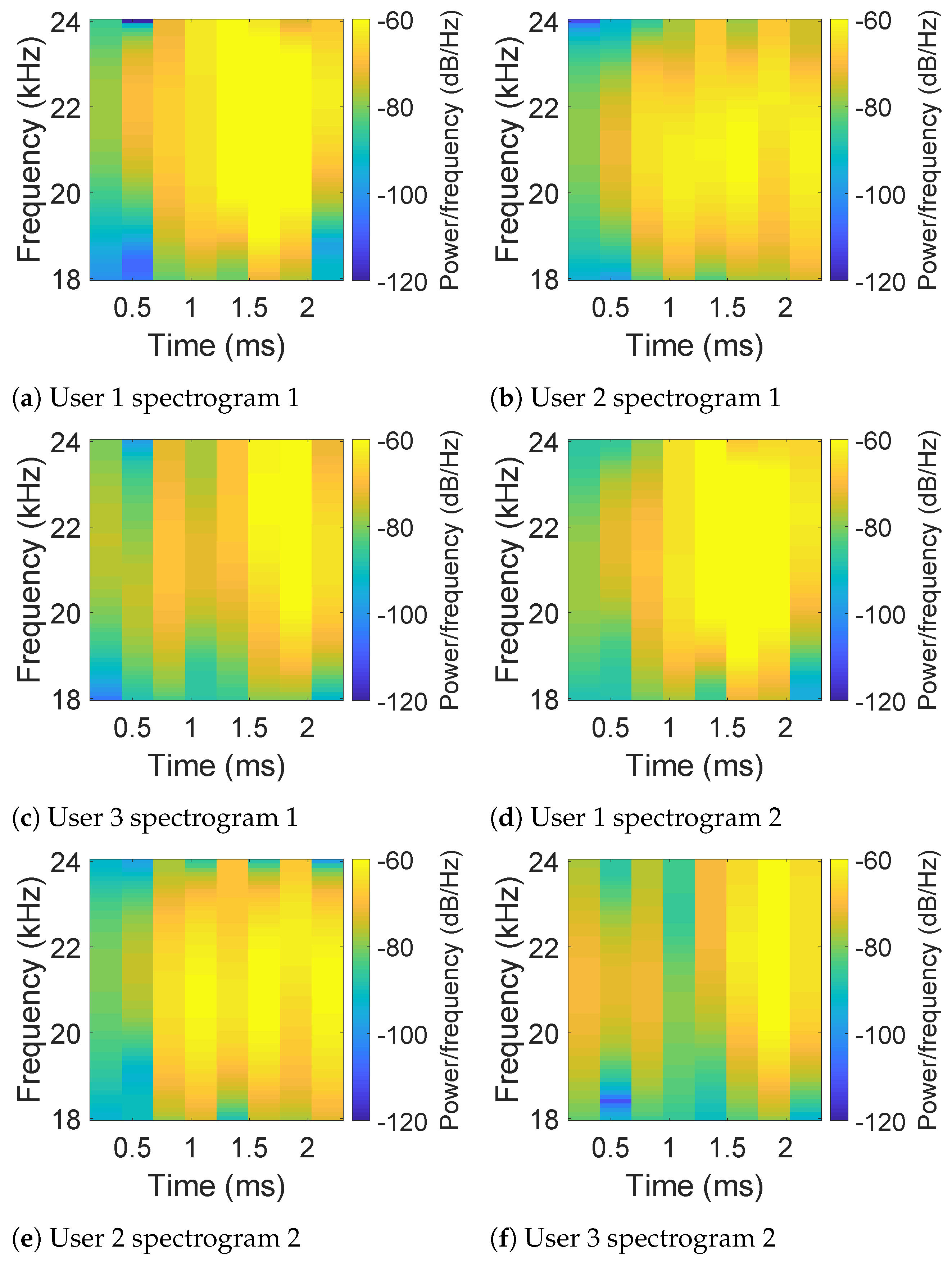

3.2.1. Hand Geometry Feature Extraction

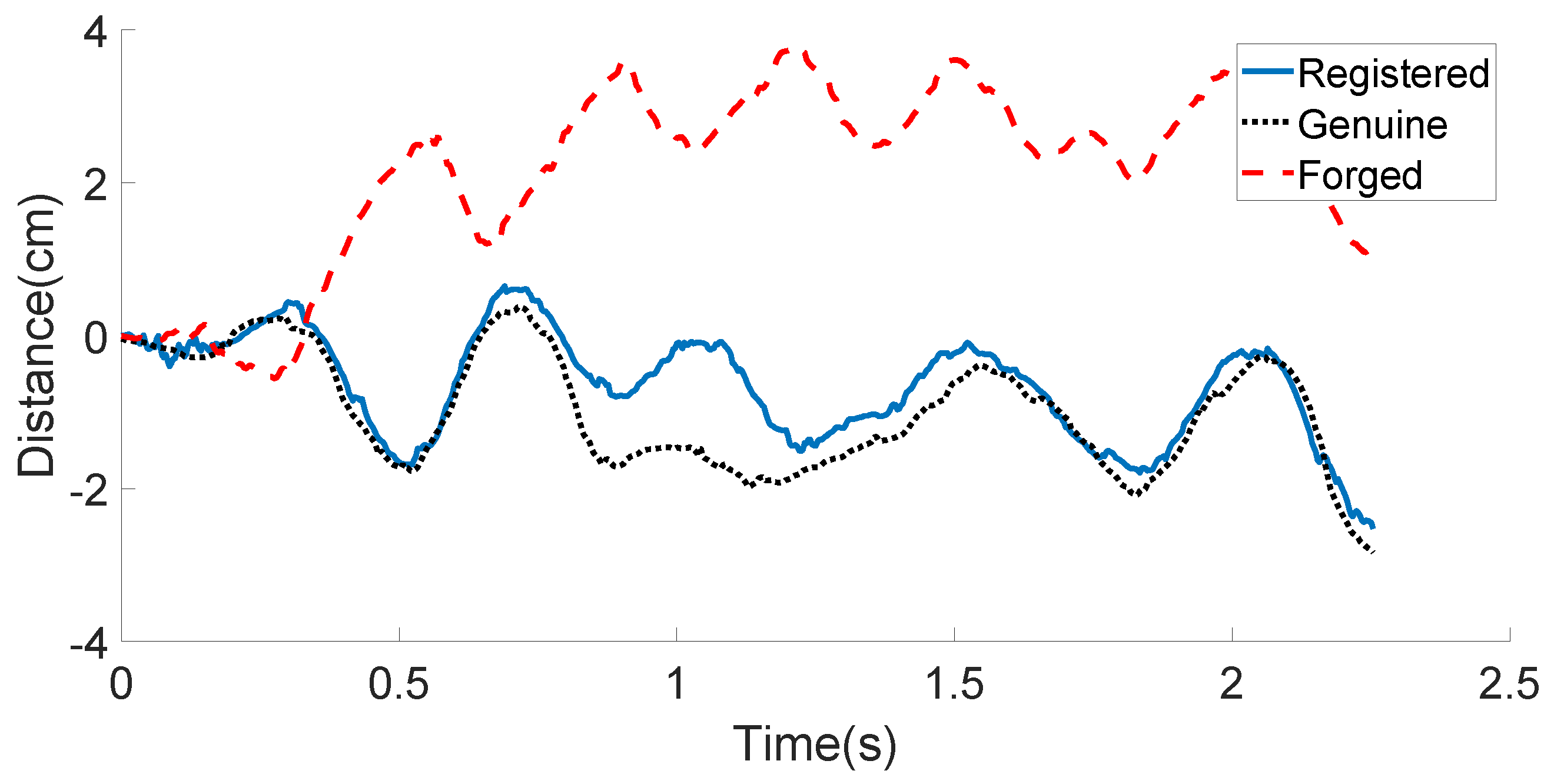

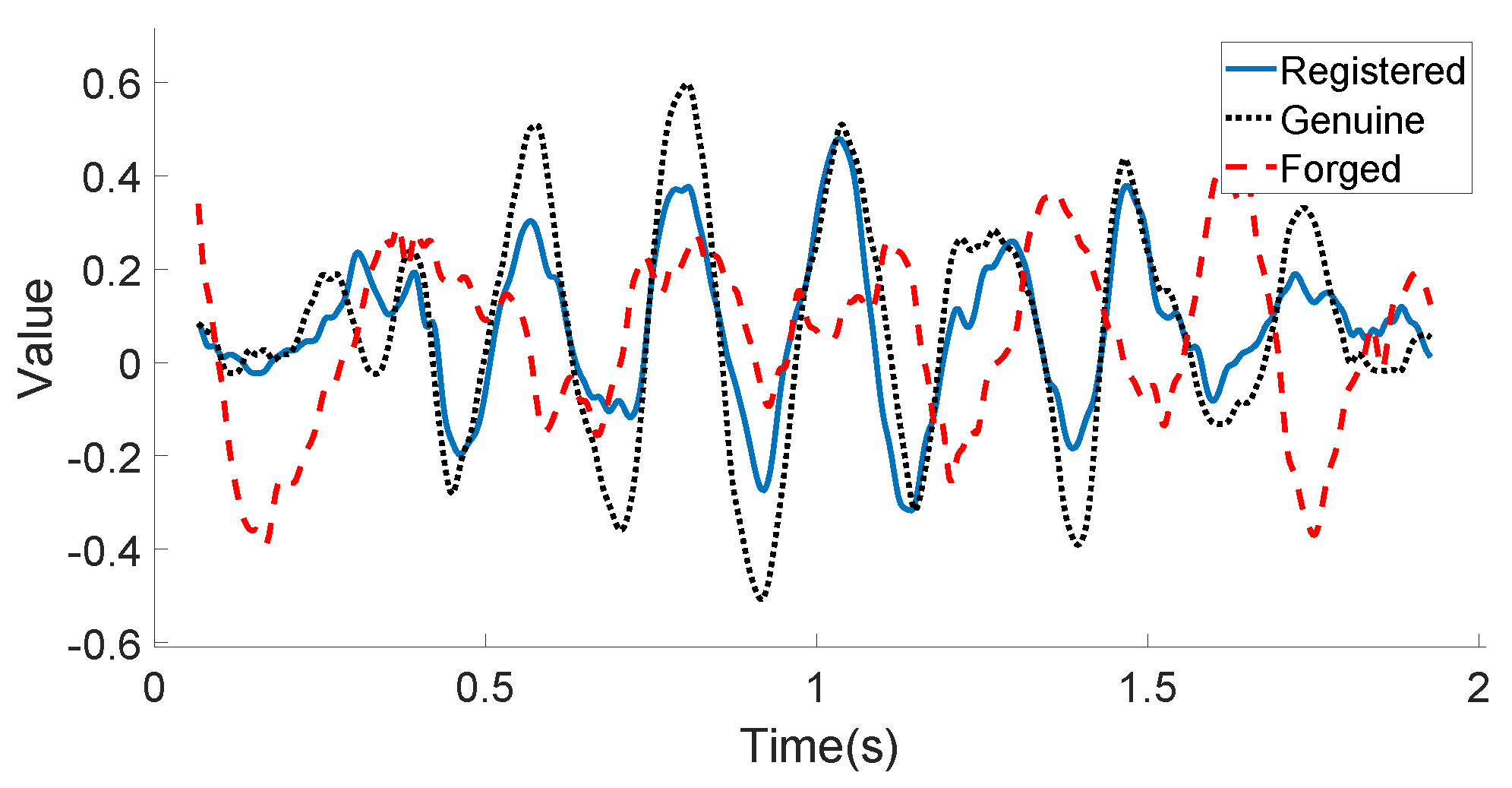

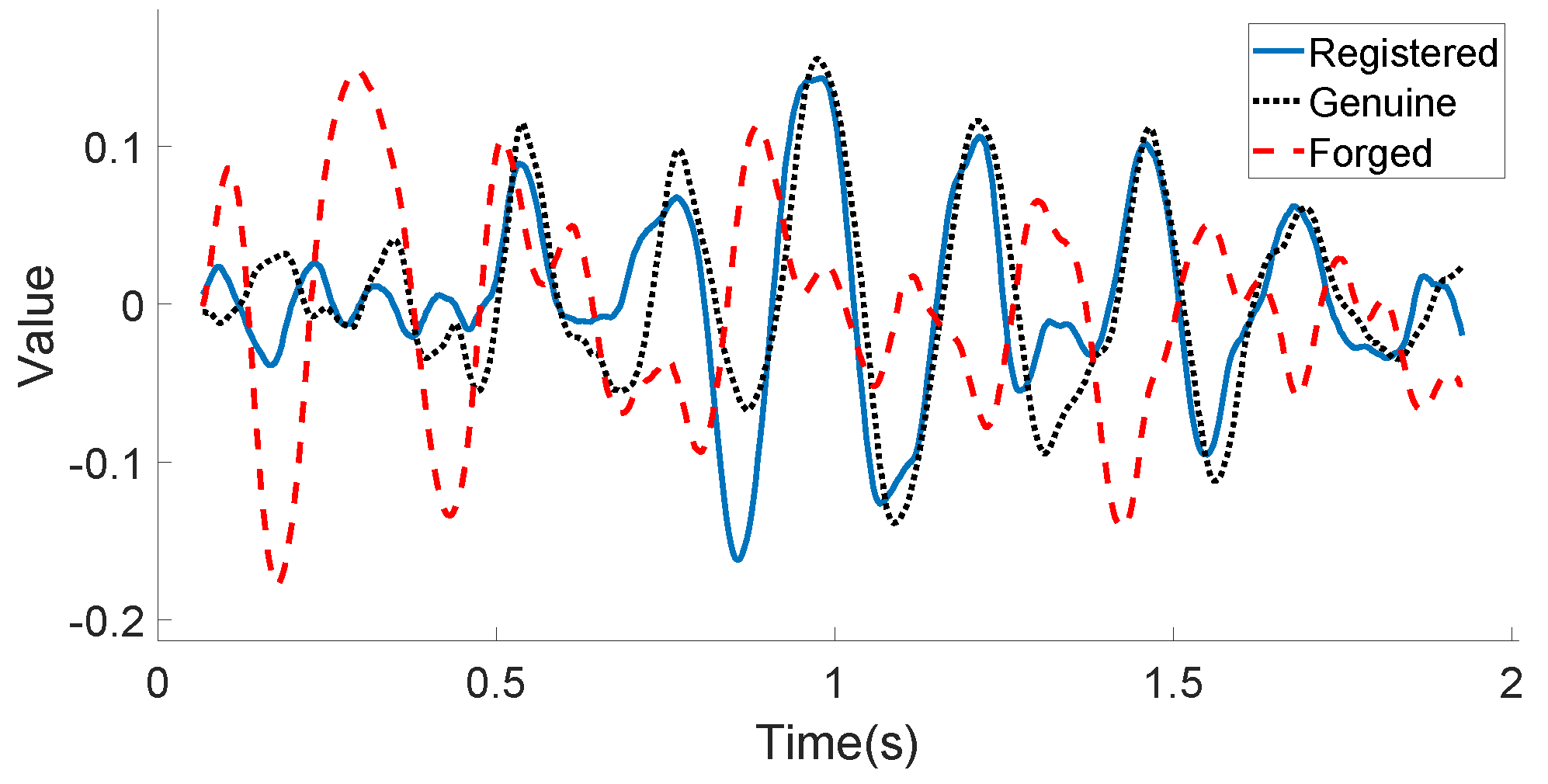

3.2.2. Signature Feature Extraction

- First-order differences:

- Second-order differences:

- Sine and cosine features:

- Length-based features:
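
The exact definitions of these four feature groups are not reproduced in this text. As a hedged illustration only, the sketch below computes them the way they are commonly defined in online signature verification, assuming the traced signature is available as a sampled 2D trajectory. The function name `trajectory_features`, the 2D input, and the dictionary keys are hypothetical and are not taken from the AirSign implementation.

```python
import numpy as np


def trajectory_features(x, y):
    """Common per-sample features of a sampled 2D trajectory (illustrative)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.ndim != 1 or x.shape != y.shape or x.size < 3:
        raise ValueError("x and y must be 1-D, equal length, with >= 3 samples")

    # First-order differences: displacement between consecutive samples.
    dx, dy = np.diff(x), np.diff(y)
    # Second-order differences: change of displacement between steps.
    ddx, ddy = np.diff(dx), np.diff(dy)

    # Length of each step; zero-length steps get sine = cosine = 0.
    step = np.hypot(dx, dy)
    safe = np.where(step > 0, step, 1.0)
    cos_theta = np.where(step > 0, dx / safe, 0.0)
    sin_theta = np.where(step > 0, dy / safe, 0.0)

    return {
        "dx": dx, "dy": dy,
        "ddx": ddx, "ddy": ddy,
        "cos": cos_theta, "sin": sin_theta,
        "step_length": step,
        "path_length": float(step.sum()),
    }
```

For a trajectory with n samples, the first-order, sine, cosine, and step-length arrays have n - 1 entries and the second-order arrays have n - 2 entries, so a downstream classifier would need to align or pad them.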

3.2.3. Motion Feature Extraction

3.3. Decision Model

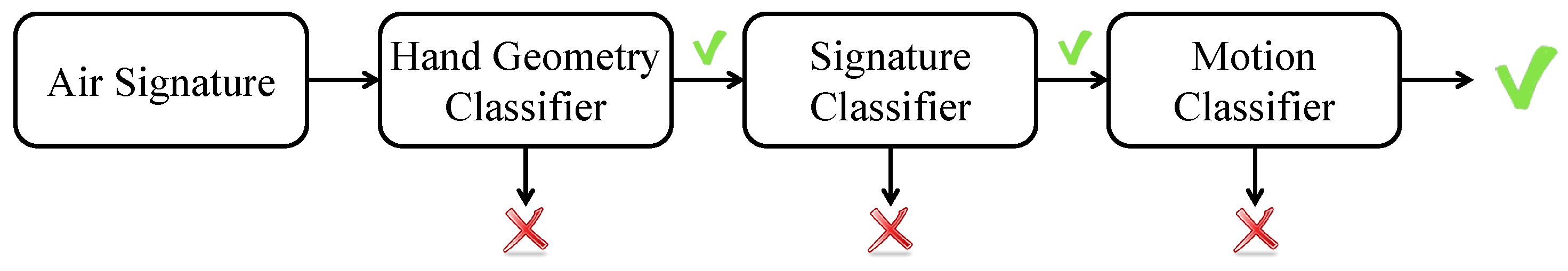

3.3.1. Architecture of the Decision Model

3.3.2. Hand Geometry Classifier

3.3.3. Motion and Signature Classifiers

4. Data Collection

4.1. Data Collection System

4.2. Data Collection Experiments

5. Evaluation

5.1. How Well Does AirSign Perform Overall?

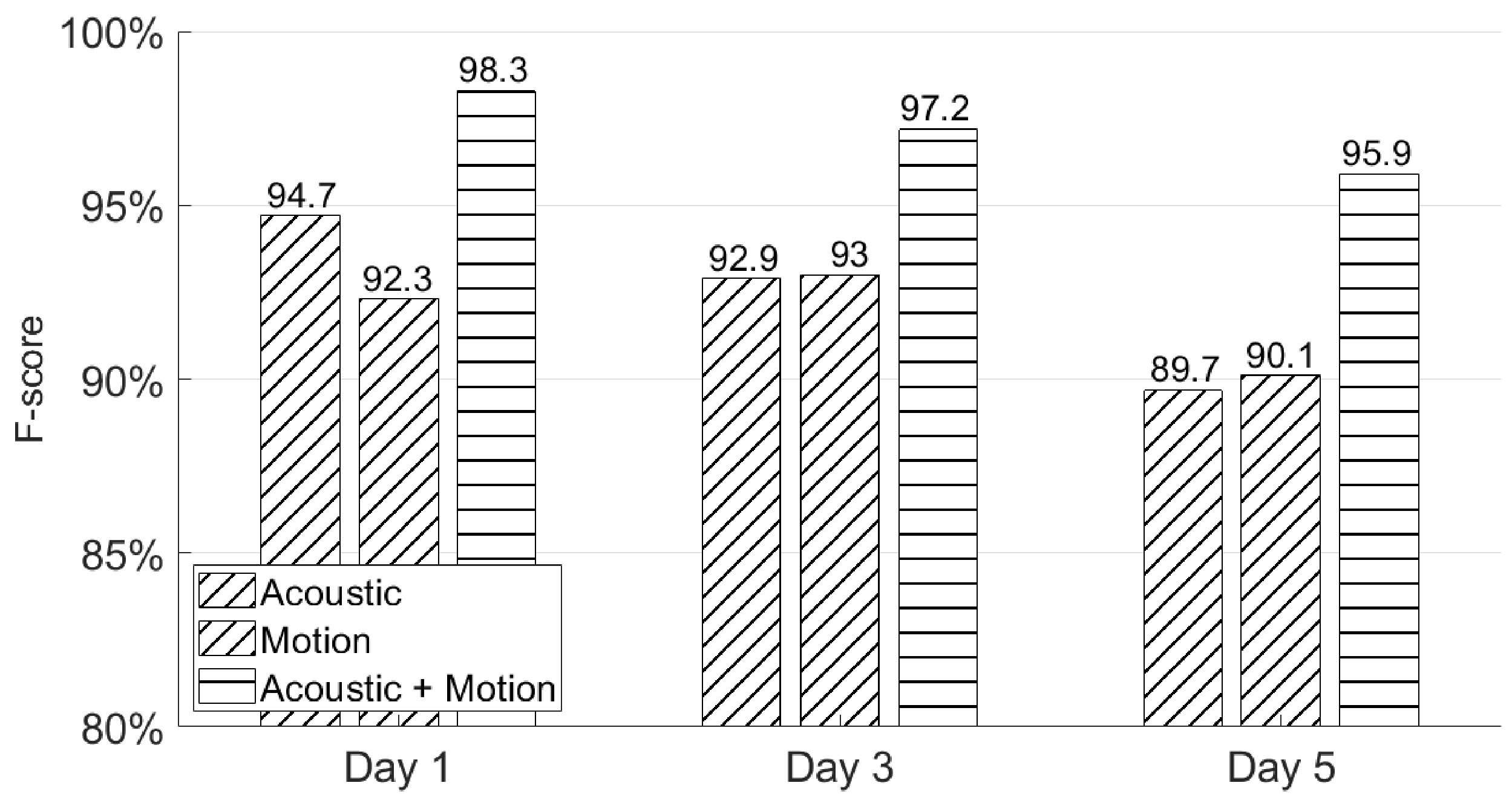

5.2. Will Users Be Able to Recall Their Signatures after a Few Days?

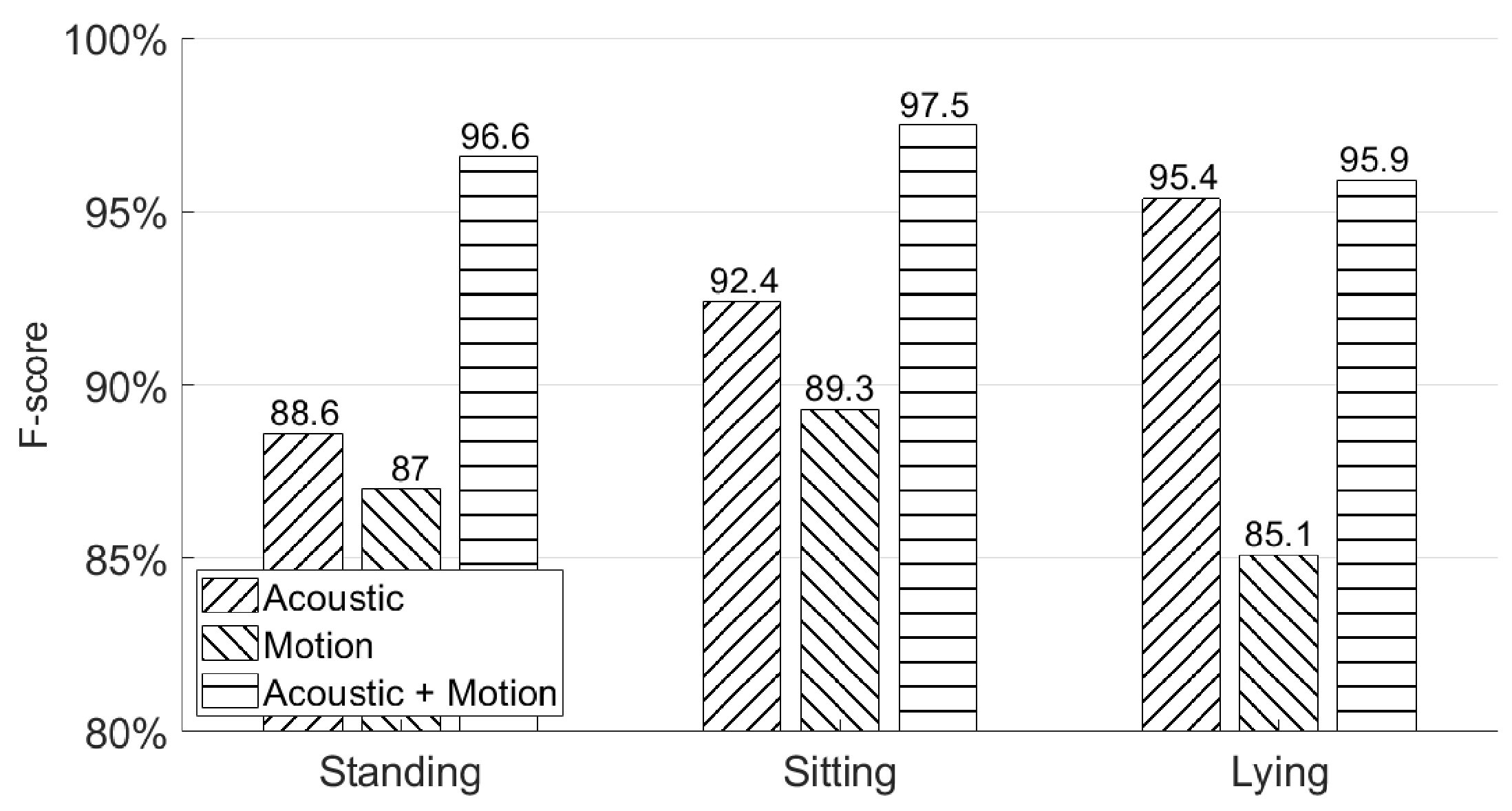

5.3. Will Different Poses Affect the Authentication Accuracy?

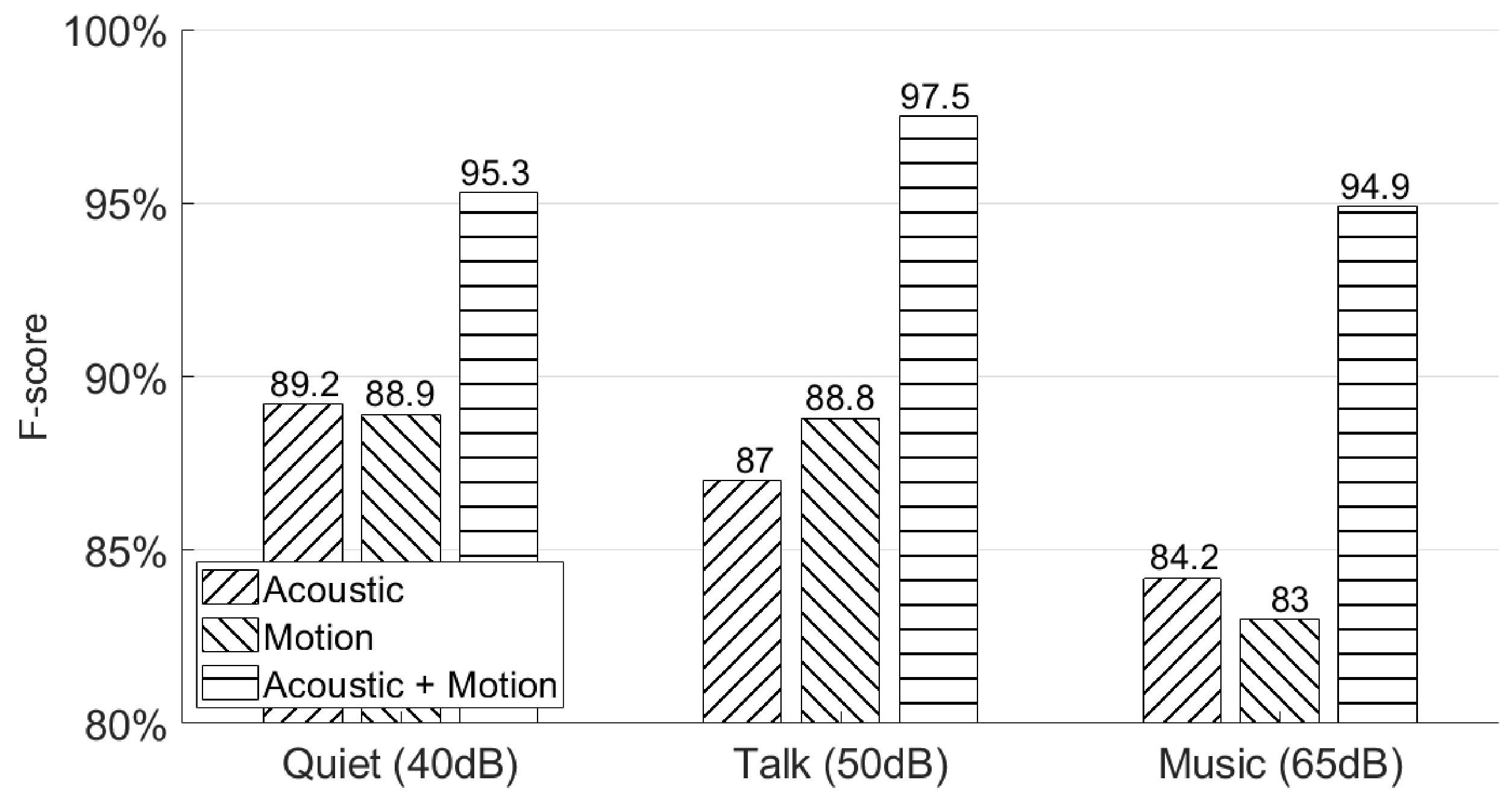

5.4. Will Different Environments Affect the Authentication Accuracy?

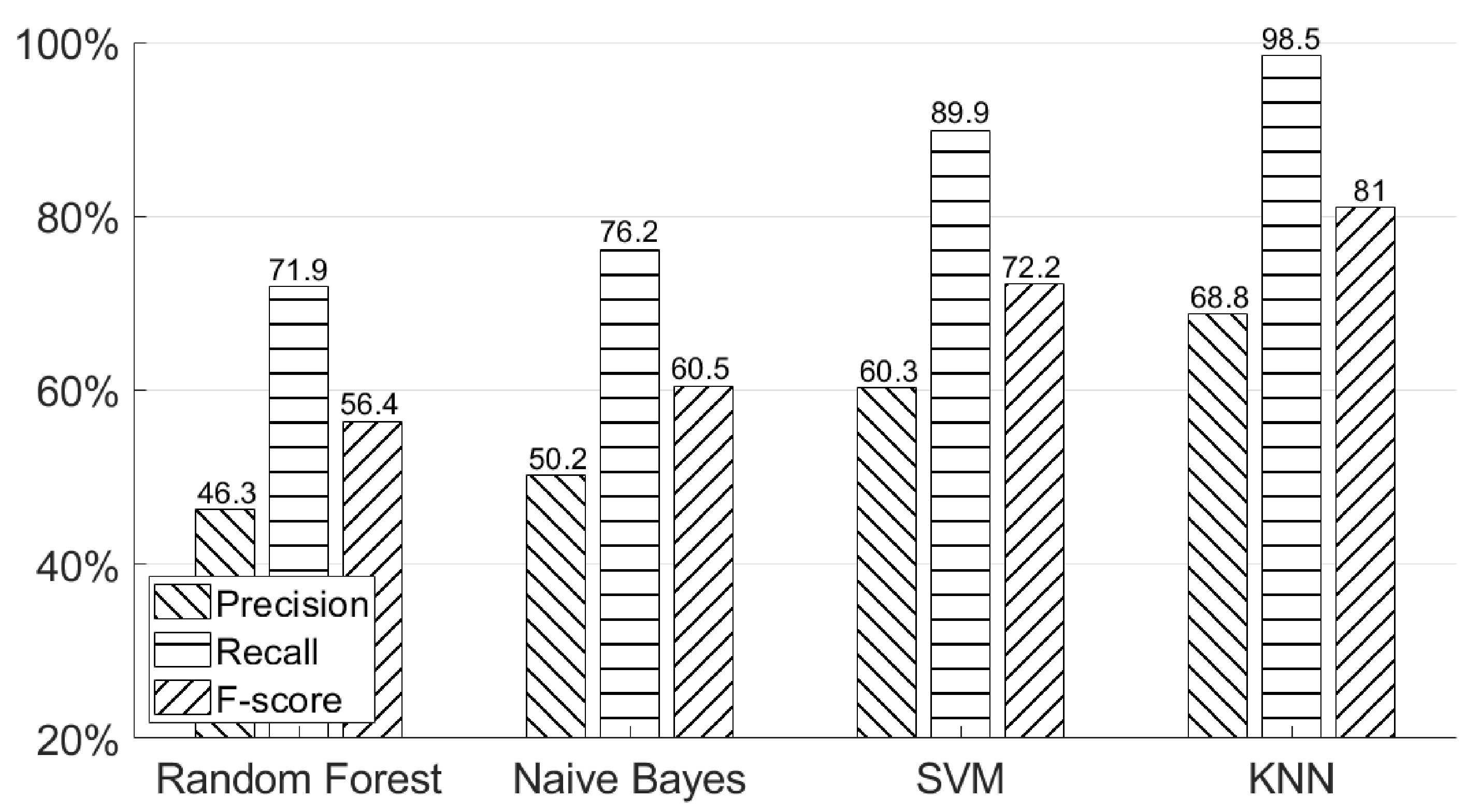

5.5. Why Was a KNN-Based Model Chosen for Classifying Hand Geometry?

5.6. How Do Hand Geometry, Signature, and Motion Classifiers Work for the Overall System?

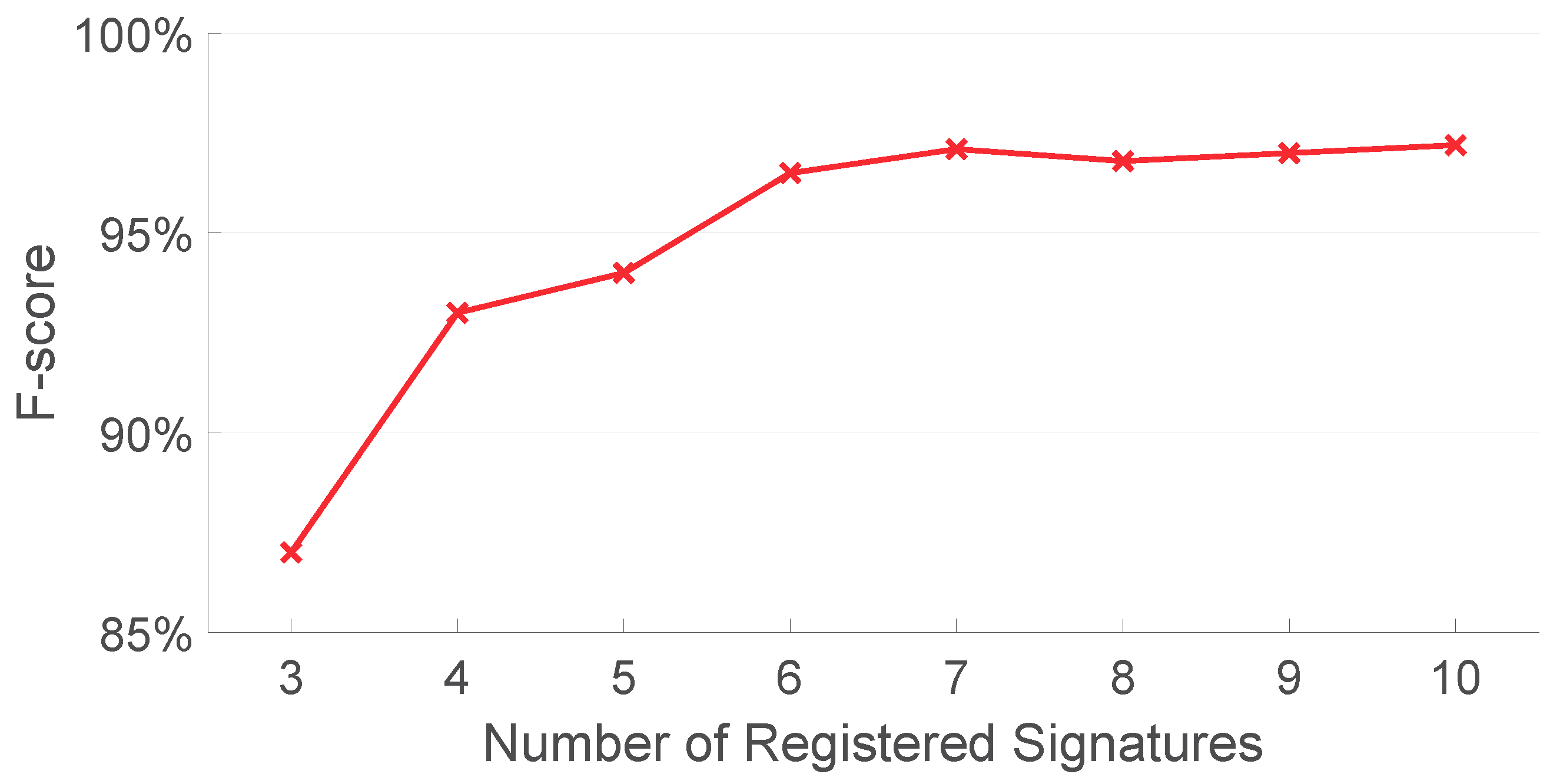

5.7. How Many Registered Signatures Are Needed?

5.8. How Do Participants Respond to AirSign?

5.9. How Does AirSign Compare to the Other Smartphone Authentication Systems?

6. Related Work

6.1. Smartphone Authentication

6.2. Signature Authentication

6.3. Acoustic Sensing on Smartphones

7. Discussion and Future Work

7.1. Users’ Active Motion

7.2. Multiple Users

7.3. Privacy Concerns and Memory Concerns

7.4. Large-Scale Experiment

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Apple. Apple Pay. Available online: https://www.apple.com/apple-pay/ (accessed on 25 December 2020).

2. Google. Android Pay. Available online: https://www.android.com/pay/ (accessed on 25 December 2020).

3. Killoran, J. 4 Password Authentication Vulnerabilities & How to Avoid Them. Available online: https://swoopnow.com/password-authentication/ (accessed on 25 December 2020).

4. Tari, F.; Ozok, A.A.; Holden, S.H. A Comparison of Perceived and Real Shoulder-Surfing Risks Between Alphanumeric and Graphical Passwords; SOUPS ’06; ACM: New York, NY, USA, 2006; pp. 56–66.

5. Apple. Use Touch ID on iPhone and iPad. Available online: https://support.apple.com/en-us/HT201371/ (accessed on 25 December 2020).

6. Samsung. What is the Ultrasonic Fingerprint scanner on Galaxy S10, Galaxy Note10, and Galaxy Note10+? Available online: https://www.samsung.com/global/galaxy/what-is/ultrasonic-fingerprint/ (accessed on 25 December 2020).

7. Thakkar, D. How to Clean Your Fingerprint Scanner Device & How to Avoid Them. Available online: https://www.bayometric.com/how-to-clean-your-fingerprint-scanner-device/ (accessed on 25 December 2020).

8. Apple. About Face ID advanced technology. Available online: https://support.apple.com/en-us/HT208108/ (accessed on 25 December 2020).

9. Corballis, M.C. Left Brain, Right Brain: Facts and Fantasies. PLoS Biol. 2014, 12, e1001767.

10. Bundy, D.T.; Szrama, N.; Pahwa, M.; Leuthardt, E.C. Unilateral, Three-dimensional Arm Movement Kinematics are Encoded in Ipsilateral Human Cortex. J. Neurosci. 2018, 38, 10042–10056.

11. Sanguansat, P. Multiple Multidimensional Sequence Alignment Using Generalized Dynamic Time Warping. WSEAS Trans. Math. 2012, 11, 668–678.

12. Wang, W.; Liu, A.X.; Sun, K. Device-free Gesture Tracking Using Acoustic Signals. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, MobiCom’16, New York, NY, USA, 3–7 October 2016; ACM: New York, NY, USA, 2016; pp. 82–94.

13. Valiente, A.R.; Trinidad, A.; Berrocal, J.R.G.; Górriz, C.; Camacho, R.R. Extended high-frequency (9–20 kHz) audiometry reference thresholds in 645 healthy subjects. Int. J. Audiol. 2014, 53, 531–545.

14. Ifeachor, E.C.; Jervis, B.W. Digital Signal Processing: A Practical Approach; Addison-Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 1993.

15. Zhou, B.; Elbadry, M.; Gao, R.; Ye, F. BatMapper: Acoustic Sensing Based Indoor Floor Plan Construction Using Smartphones. In Proceedings of the 15th Annual International Conference on Mobile Systems, Applications, and Services, Niagara Falls, NY, USA, 19–23 June 2017; ACM: New York, NY, USA, 2017; pp. 42–55.

16. Android Developers. SystemClock. Available online: https://developer.android.com/reference/android/os/SystemClock (accessed on 25 December 2020).

17. Harrington, P. Machine Learning in Action; Manning Publications Co.: Greenwich, CT, USA, 2012.

18. Diaz, J. Beware: Galaxy S10’s Facial Recognition Easily Fooled with a Photo. Available online: https://www.tomsguide.com/us/galaxy-s10-facial-recognition,news-29606.html (accessed on 25 December 2020).

19. Winder, D. Samsung Galaxy S10 Fingerprint Scanner Hacked—Here’s What You Need To Know. Available online: https://www.forbes.com/sites/daveywinder/2019/04/06/samsung-galaxy-s10-fingerprint-scanner-hacked-heres-what-you-need-to-know/#32156535d423 (accessed on 25 December 2020).

20. Fameli, J. Testing Apple’s Touch ID with Fake Fingerprints. Available online: https://www.tested.com/tech/ios/486967-testing-apples-touch-id-fake-fingerprints/ (accessed on 25 December 2020).

21. Aumi, M.T.I.; Kratz, S.G. AirAuth: Evaluating In-Air Hand Gestures for Authentication. In Proceedings of the Mobile HCI, Toronto, ON, Canada, 23–26 September 2014.

22. Zhou, B.; Lohokare, J.; Gao, R.; Ye, F. EchoPrint: Two-Factor Authentication Using Acoustics and Vision on Smartphones. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, MobiCom ’18, New Delhi, India, 29 October–2 November 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 321–336.

23. Sitová, Z.; Šeděnka, J.; Yang, Q.; Peng, G.; Zhou, G.; Gasti, P.; Balagani, K.S. HMOG: New Behavioral Biometric Features for Continuous Authentication of Smartphone Users. IEEE Trans. Inf. Forensics Secur. 2016, 11, 877–892.

24. Chen, M.; Lin, J.; Zou, Y.; Ruby, R.; Wu, K. SilentSign: Device-free Handwritten Signature Verification through Acoustic Sensing. In Proceedings of the 2020 IEEE International Conference on Pervasive Computing and Communications (PerCom), Austin, TX, USA, 23–27 March 2020; IEEE Computer Society: Los Alamitos, CA, USA, 2020; pp. 1–10.

25. Ding, F.; Wang, D.; Zhang, Q.; Zhao, R. ASSV: Handwritten signature verification using acoustic signals. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, September 2019; pp. 274–277.

26. Ong, T. This $150 mask beat Face ID on the iPhone X. Available online: https://www.theverge.com/2017/11/13/16642690/bkav-iphone-x-faceid-mask/ (accessed on 25 December 2020).

27. Samsung. Use Facial Recognition Security on Your Galaxy Phone. Available online: https://www.samsung.com/us/support/answer/ANS00062630/ (accessed on 25 December 2020).

28. Tolosana, R.; Vera-Rodriguez, R.; Fierrez, J.; Ortega-Garcia, J. DeepSign: Deep On-Line Signature Verification. arXiv 2020, arXiv:2002.10119.

29. Tycoyoken. How to Fool a Fingerprint Security System As Easy As ABC. Available online: https://www.instructables.com/id/How-To-Fool-a-Fingerprint-Security-System-As-Easy-/ (accessed on 25 December 2020).

30. Alzubaidi, A.; Kalita, J. Authentication of Smartphone Users Using Behavioral Biometrics. IEEE Commun. Surv. Tutorials 2016, 18, 1998–2024.

31. Chauhan, J.; Hu, Y.; Seneviratne, S.; Misra, A.; Seneviratne, A.; Lee, Y. BreathPrint: Breathing Acoustics-based User Authentication. In Proceedings of the 15th Annual International Conference on Mobile Systems, Applications, and Services, MobiSys ’17, Niagara Falls, NY, USA, 19–23 June 2017; ACM: New York, NY, USA, 2017; pp. 278–291.

32. Zheng, N.; Bai, K.; Huang, H.; Wang, H. You Are How You Touch: User Verification on Smartphones via Tapping Behaviors. In Proceedings of the 2014 IEEE 22nd International Conference on Network Protocols, Raleigh, NC, USA, 21–24 October 2014; pp. 221–232.

33. Karakaya, N.; Alptekin, G.I.; İncel, Ö.D. Using behavioral biometric sensors of mobile phones for user authentication. Procedia Comput. Sci. 2019, 159, 475–484.

34. Yang, Q.; Peng, G.; Nguyen, D.T.; Qi, X.; Zhou, G.; Sitová, Z.; Gasti, P.; Balagani, K.S. A Multimodal Data Set for Evaluating Continuous Authentication Performance in Smartphones. In Proceedings of the 12th ACM Conference on Embedded Network Sensor Systems, SenSys ’14, Memphis, TN, USA, 3–6 November 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 358–359.

35. Fenu, G.; Marras, M. Leveraging Continuous Multi-modal Authentication for Access Control in Mobile Cloud Environments. In New Trends in Image Analysis and Processing—ICIAP 2017; Battiato, S., Farinella, G.M., Leo, M., Gallo, G., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 331–342.

36. Fenu, G.; Marras, M. Controlling User Access to Cloud-Connected Mobile Applications by Means of Biometrics. IEEE Cloud Comput. 2018, 5, 47–57.

37. Alvarez, G.; Sheffer, B.; Bryant, M. Offline Signature Verification with Convolutional Neural Networks; Stanford University: Stanford, CA, USA, 2016.

38. Almayyan, W.; Own, H.; Zedan, H. Information fusion in biometrics: A case study in online signature. In Proceedings of the International Multi-Conference on Complexity, Informatics and Cybernetics: IMCIC 2010, Orlando, FL, USA, 6–9 April 2010.

39. Levy, A.; Nassi, B.; Elovici, Y.; Shmueli, E. Handwritten Signature Verification Using Wrist-Worn Devices. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 119, 1–26.

40. Sae-Bae, N.; Memon, N. Online Signature Verification on Mobile Devices. IEEE Trans. Inf. Forensics Secur. 2014, 9, 933–947.

41. Iranmanesh, V.; Ahmad, S. Online Handwritten Signature Verification Using Neural Network Classifier Based on Principal Component Analysis. Sci. World J. 2014, 2014, 1–9.

42. Gomez-Barrero, M.; Fierrez, J.; Galbally, J. Variable-length template protection based on homomorphic encryption with application to signature biometrics. In Proceedings of the 2016 4th International Conference on Biometrics and Forensics (IWBF), Limassol, Cyprus, 3–4 March 2016; pp. 1–6.

43. Fischer, A.; Diaz, M.; Plamondon, R.; Ferrer, M.A. Robust score normalization for DTW-based on-line signature verification. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Nancy, France, 23–26 August 2015; pp. 241–245.

44. Fierrez, J.; Ortega-Garcia, J.; Ramos, D.; Gonzalez-Rodriguez, J. HMM-based on-line signature verification: Feature extraction and signature modeling. Pattern Recognit. Lett. 2007, 28, 2325–2334.

45. Fang, Y.; Kang, W.; Wu, Q.; Tang, L. A Novel Video-based System for In-air Signature Verification. Comput. Electr. Eng. 2017, 57, 1–14.

46. Peng, C.; Shen, G.; Zhang, Y.; Li, Y.; Tan, K. BeepBeep: A High Accuracy Acoustic Ranging System Using COTS Mobile Devices. In Proceedings of the 5th International Conference on Embedded Networked Sensor Systems, SenSys ’07, Sydney, Australia, 6–9 November 2007; ACM: New York, NY, USA, 2007; pp. 1–14.

47. Zhang, Z.; Chu, D.; Chen, X.; Moscibroda, T. SwordFight: Enabling a New Class of Phone-to-Phone Action Games on Commodity Phones. In Proceedings of the 10th International Conference on Mobile Systems, Applications, and Services, Lake District, UK, 26–29 June 2012; ACM: New York, NY, USA, 2012.

48. Smith, A.; Balakrishnan, H.; Goraczko, M.; Priyantha, N. Tracking moving devices with the cricket location system. In Proceedings of the 2nd International Conference on Mobile Systems, Applications, and Services, Boston, MA, USA, 6–9 June 2004; ACM: New York, NY, USA, 2004; pp. 190–202.

49. Huang, W.; Xiong, Y.; Li, X.Y.; Lin, H.; Mao, X.; Yang, P.; Liu, Y. Shake and walk: Acoustic direction finding and fine-grained indoor localization using smartphones. In Proceedings of the INFOCOM, Toronto, ON, Canada, 27 April–2 May 2014; pp. 370–378.

50. Yun, S.; Chen, Y.C.; Qiu, L. Turning a mobile device into a mouse in the air. In Proceedings of the 13th Annual International Conference on Mobile Systems, Applications, and Services, Florence, Italy, 19–22 May 2015; ACM: New York, NY, USA, 2015; pp. 15–29.

51. Mao, W.; He, J.; Zheng, H.; Zhang, Z.; Qiu, L. High-precision Acoustic Motion Tracking: Demo. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, MobiCom’16, New York, NY, USA, 3–7 October 2016; ACM: New York, NY, USA, 2016; pp. 491–492.

52. Nandakumar, R.; Gollakota, S.; Watson, N. Contactless sleep apnea detection on smartphones. In Proceedings of the 13th Annual International Conference on Mobile Systems, Applications, and Services, Florence, Italy, 19–22 May 2015; ACM: New York, NY, USA, 2015; pp. 45–57.

53. Nandakumar, R.; Iyer, V.; Tan, D.; Gollakota, S. Fingerio: Using active sonar for fine-grained finger tracking. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; ACM: New York, NY, USA, 2016; pp. 1515–1525.

| | Unsure (%) | FRR (%) | TAR (%) |
|---|---|---|---|
| Input | 100 | 0 | 0 |
| Hand Geometry Classifier | 98.2 | 1.8 | 0 |
| Signature Classifier | 97.3 | 2.7 | 0 |
| Motion Classifier | 0 | 3.4 | 96.6 |

| | Unsure (%) | FAR (%) | TRR (%) |
|---|---|---|---|
| Input | 100 | 0 | 0 |
| Hand Geometry Classifier | 46.4 | 0 | 53.6 |
| Signature Classifier | 34.1 | 0 | 65.9 |
| Motion Classifier | 0 | 1.6 | 98.4 |
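
Read as cumulative outcomes after each stage, the two tables above suggest a cascade: the hand geometry and signature classifiers either reject a sample or pass it on as unsure, and the motion classifier makes the final accept or reject decision. A minimal sketch of that control flow follows. The score names, the thresholds, and the helper functions are hypothetical stand-ins for the actual classifiers; only the stage order and the three possible outcomes are taken from the tables.

```python
ACCEPT, REJECT, UNSURE = "accept", "reject", "unsure"


def reject_below(key, threshold):
    """Early stage: reject a low-scoring sample, otherwise stay unsure."""
    def stage(sample):
        return REJECT if sample[key] < threshold else UNSURE
    return stage


def accept_at_or_above(key, threshold):
    """Final stage: every remaining sample is accepted or rejected."""
    def stage(sample):
        return ACCEPT if sample[key] >= threshold else REJECT
    return stage


def run_cascade(sample, early_stages, final_stage):
    """Run the early stages in order and stop at the first rejection."""
    for stage in early_stages:
        if stage(sample) == REJECT:
            return REJECT
    return final_stage(sample)


# Hypothetical similarity scores in [0, 1] and hypothetical thresholds.
early = [reject_below("hand_geometry", 0.5), reject_below("signature", 0.5)]
final = accept_at_or_above("motion", 0.5)
decision = run_cascade(
    {"hand_geometry": 0.9, "signature": 0.8, "motion": 0.7}, early, final
)
```

Under this reading, a false rejection can occur at any stage, while a false acceptance can only occur at the final stage, which matches the zero FAR entries in the first two classifier rows.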

| Technique | Hardware | Screen Space Occupied | Limitation | Accuracy |
|---|---|---|---|---|
| FaceID [8] | TrueDepth camera | Large | 3D head mask attack [26] | >99.9% |
| Samsung FR [27] | RGB camera | Medium | Image attack [18] | - |
| TouchID [5] | Fingerprint sensor | Large | Finger masks [20] | >99.9% |
| Samsung FP [6] | Ultrasonic fingerprint sensor | None | Finger masks [19] | >99.9% |
| PIN [3] | Smartphone screen | None | Shoulder-surfing attack [4] | - |
| AirAuth [21] | Depth camera | Large | Additional hardware | EER 3.4% |
| Z. Sitová et al. [23] | Motion sensors and touch screen | Large | Low accuracy | EER 7.16% (walking), EER 10.05% (sitting) |
| EchoPrint [22] | Acoustic sensors and camera | Medium | Low accuracy in low illumination | 93.5% |
| SilentSign [24] | Acoustic sensors | Small | Handwritten signature by pen | EER 1.25% |
| ASSV [25] | Acoustic sensors | Small | Handwritten signature by pen | EER 5.5% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shao, Y.; Yang, T.; Wang, H.; Ma, J. AirSign: Smartphone Authentication by Signing in the Air. Sensors 2021, 21, 104. https://doi.org/10.3390/s21010104
