A PCB Alignment System Using RST Template Matching with CUDA on Embedded GPU Board

Abstract

1. Introduction

2. Related Work

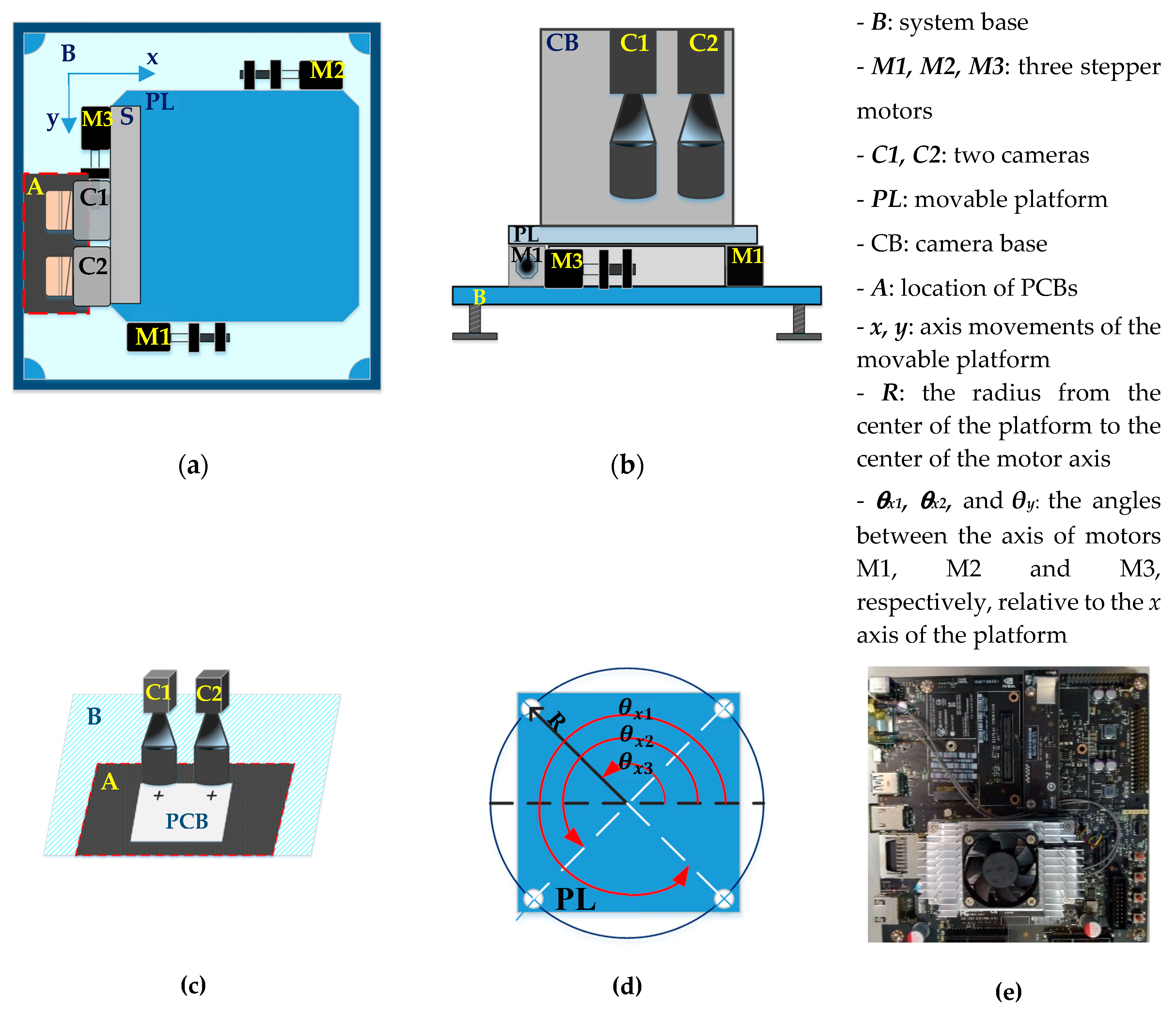

3. Proposed Embedded PCB Alignment System

3.1. System Hardware Consists of Two Subsystems

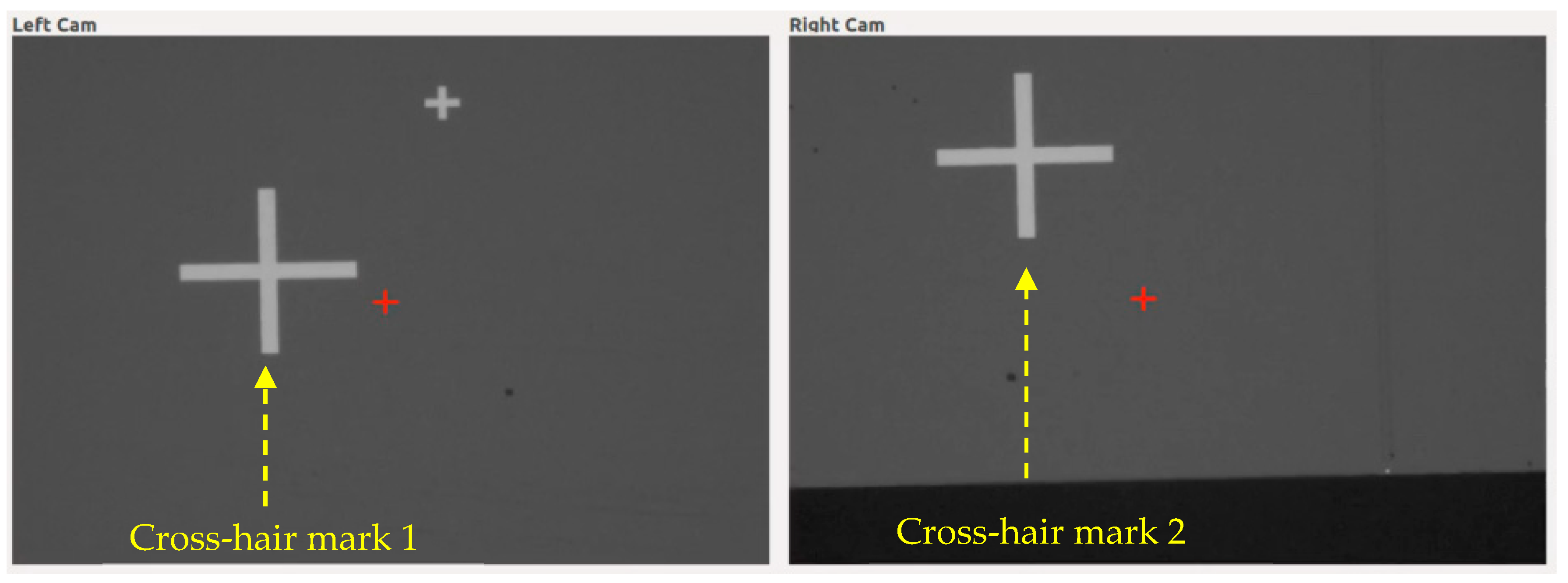

3.2. The Pixel-to-Metric Units Conversion Based on Four Reference Points of Cross-hair Marks

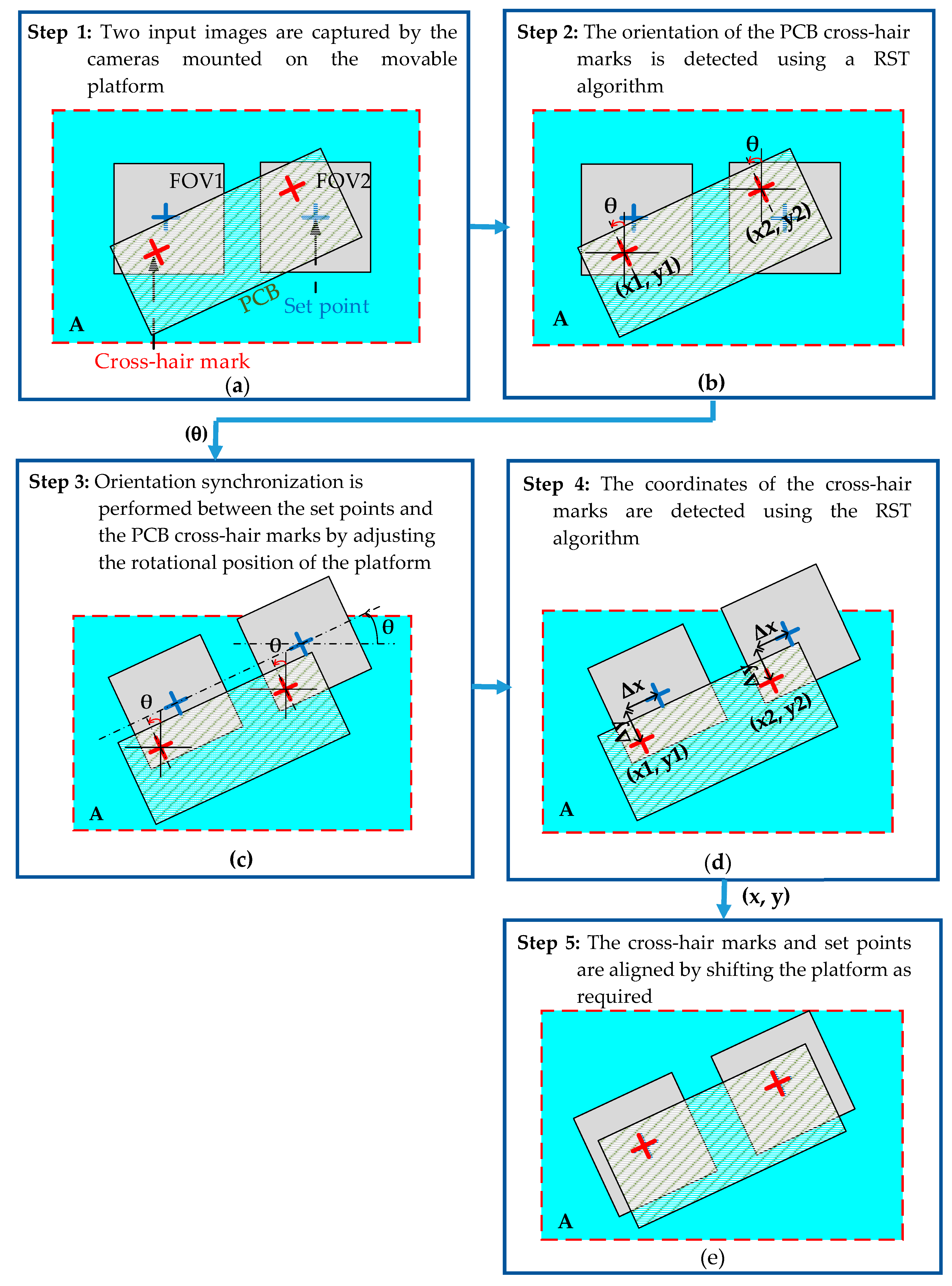

3.3. Procedure of Alignment between Marks on PCB Surface and Set Points in Field of View of Cameras

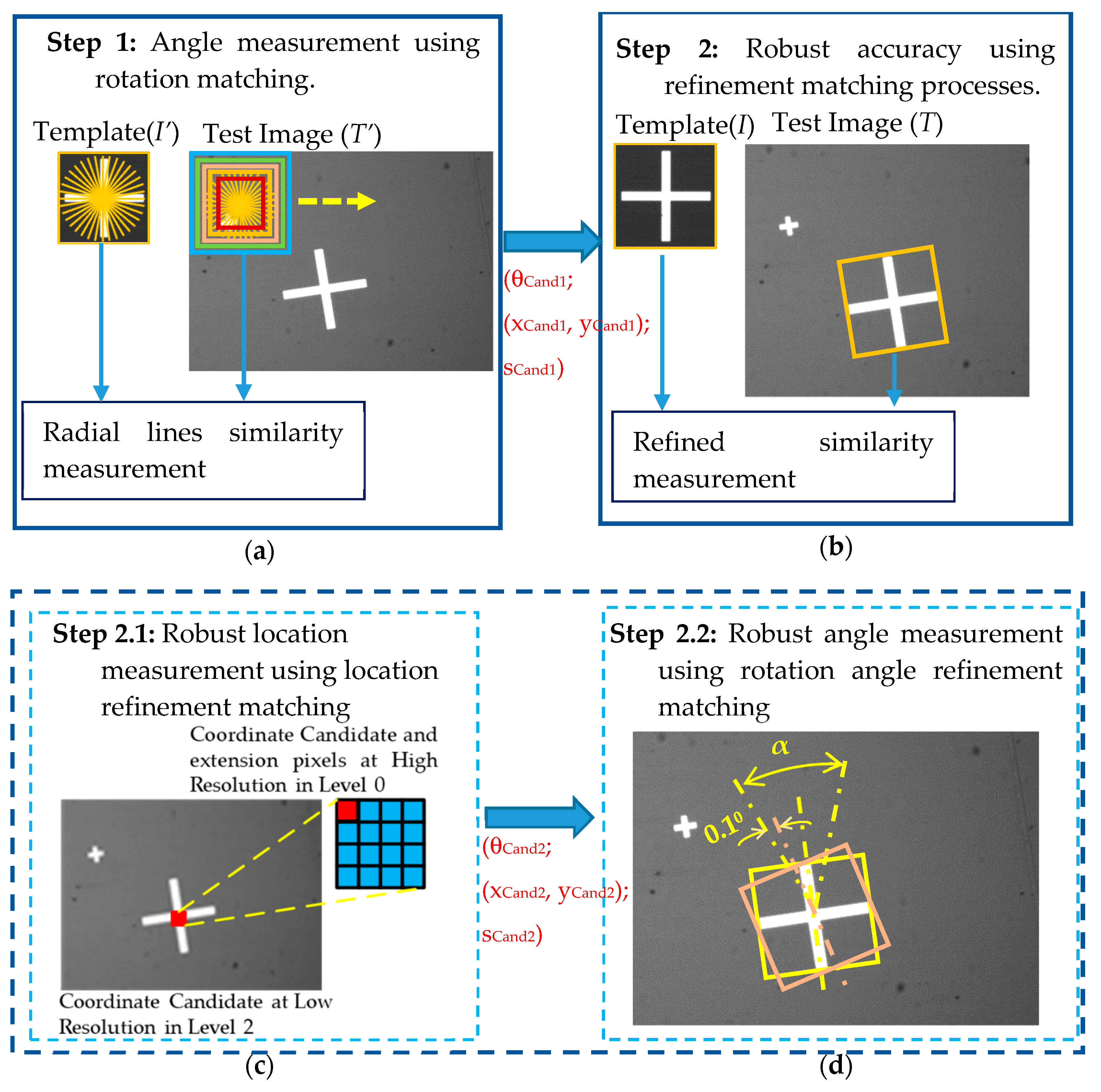

4. Refinement Algorithm of the Rotation, Scale and Translation (RST) Template Matching

5. Acceleration of the RST Template Matching Refinement Algorithm

5.1. Acceleration of Rotation Matching Using Quickly Rejecting Weak Features and Converting NCC Formula to Sum of Products

5.1.1. Quickly Rejecting Weak Features

5.1.2. Converting NCC Formula to Sum of Products

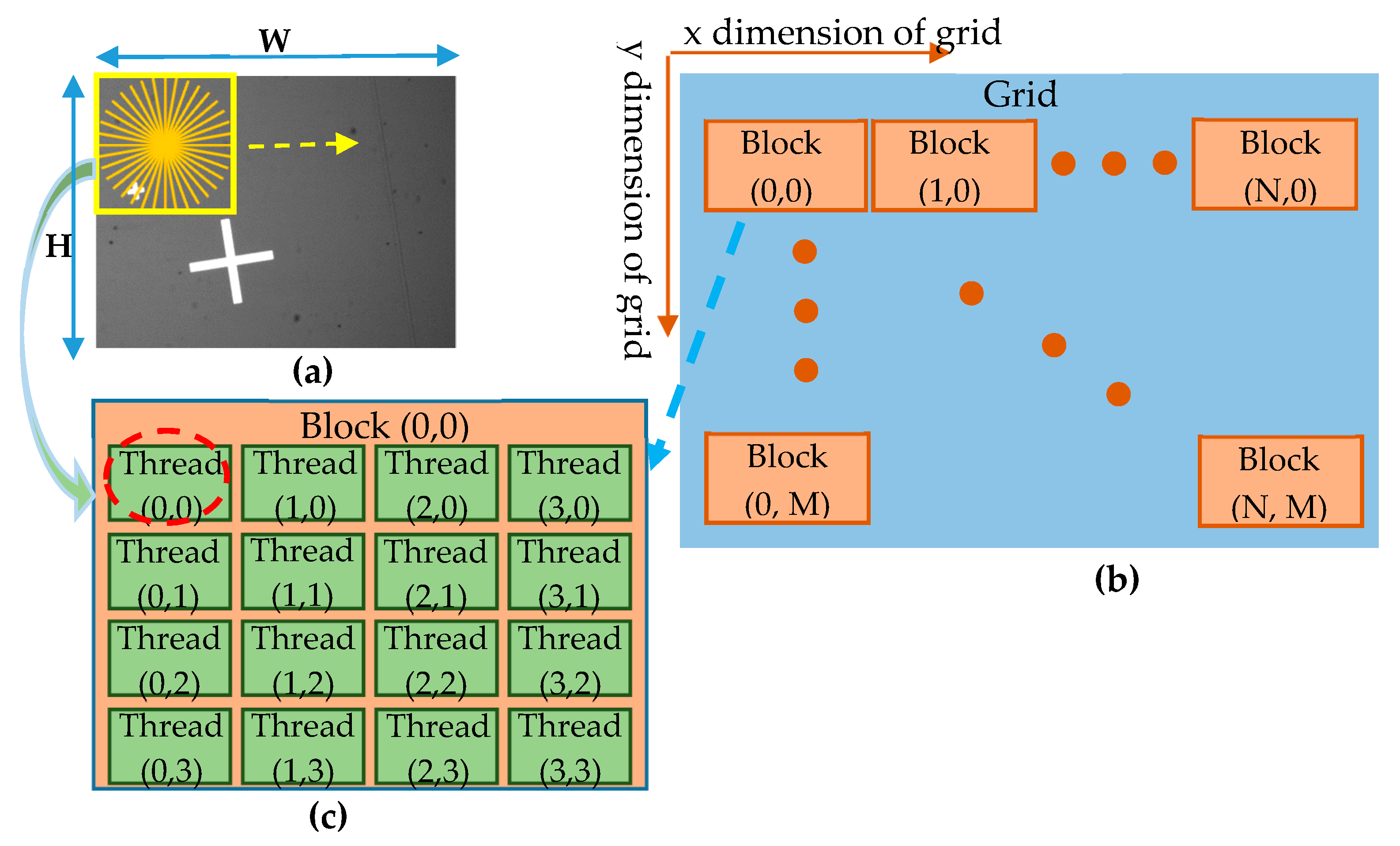

5.2. Acceleration of RST Refinement Template Matching Algorithm by Running on Parallel Threads of GPU with Hash Tables

5.2.1. Acceleration of Rotation Matching Using CUDA

5.2.2. Location Refinement Matching Algorithm Using CUDA

5.2.3. Rotation Angle Refinement Matching Algorithm Using CUDA

| Algorithm 1 Pseudo-code of location refinement matching | |
|---|---|
| 1: | Inputs: Test image I, size of template w × h, coordinate candidate (, ), angle candidates , scale candidates , pyramid level , number of candidates |
| 2: | Outputs: Correlation coefficient |
| 3: | X index: idxX ← blockDim.x ∗ blockIdx.x + threadIdx.x //Number of extension pixels |
| 4: | Y index: idxY ← blockDim.y ∗ blockIdx.y + threadIdx.y //Number of candidates |
| 5: | if (idxX < ) and (idxY < ) then |
| 6: | Coordinate X: (idxX mod ) + ( + ) //Refinement coordinate x |
| 7: | Coordinate Y: (idxY div ) + ( + ) //Refinement coordinate y |
| 8: | Scale s: s [idxY] |
| 9: | Angle : [idxY] |
| 10: | for j in h do |
| 11: | for i in w do |
| 12: | Collect intensity pixel values inside a search window with a center point at (, ), an orientation: , and a scale: s |
| 13: | end |
| 14: | end |
| 15: | Calculate the NCC score between the template and the search window |
| 16: | end |
| 17: | Return |
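The thread-to-work mapping in Algorithm 1 can be emulated on the CPU to check the indexing logic before writing a CUDA kernel. The Python sketch below is not the authors' implementation. It assumes a square refinement neighbourhood with a hypothetical half-width `radius`, derives both the column and the row offset from the X index, and samples the window with nearest-neighbour lookup. The names `refine_location`, `sample_window`, and `ncc` are illustrative only.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equal-length intensity lists."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    num = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    den = math.sqrt(sum((p - mean_a) ** 2 for p in a)
                    * sum((q - mean_b) ** 2 for q in b))
    return num / den if den > 0 else 0.0


def sample_window(image, cx, cy, angle_deg, scale, w, h):
    """Collect w*h nearest-neighbour samples from a rotated, scaled window
    centred at (cx, cy). Coordinates are clamped to the image border."""
    rows, cols = len(image), len(image[0])
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    out = []
    for j in range(h):
        for i in range(w):
            u = (i - (w - 1) / 2) * scale
            v = (j - (h - 1) / 2) * scale
            x = int(round(cx + c * u - s * v))
            y = int(round(cy + s * u + c * v))
            x = min(max(x, 0), cols - 1)
            y = min(max(y, 0), rows - 1)
            out.append(image[y][x])
    return out


def refine_location(image, template, candidates, radius):
    """Score every offset in a (2*radius+1)^2 neighbourhood of each candidate.

    candidates: list of (x, y, angle_deg, scale) tuples.
    Returns scores[idx_y][idx_x]; each (idx_y, idx_x) pair stands for the
    work one GPU thread would do. The offset layout is an assumption.
    """
    h, w = len(template), len(template[0])
    flat_t = [p for row in template for p in row]
    side = 2 * radius + 1
    n_offsets = side * side
    scores = [[0.0] * n_offsets for _ in candidates]
    for idx_y, (x0, y0, angle, scale) in enumerate(candidates):
        for idx_x in range(n_offsets):
            x = x0 + (idx_x % side) - radius   # refinement coordinate x
            y = y0 + (idx_x // side) - radius  # refinement coordinate y
            window = sample_window(image, x, y, angle, scale, w, h)
            scores[idx_y][idx_x] = ncc(flat_t, window)
    return scores
```

For each candidate, the refined location is the offset with the highest score in its row of `scores`. On a GPU, the two nested loops would be replaced by a 2D grid launch, with the bounds test in step 5 guarding threads that fall outside the offset and candidate ranges.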

| Algorithm 2 Pseudo-code of rotation angle refinement matching | |
|---|---|
| 1: | Inputs: Test image I, size of template w × h, coordinate candidate (, ), angle candidates , scale candidates , angular resolution α, number of candidates |
| 2: | Outputs: Correlation coefficient |
| 3: | X index: idxX ← blockDim.x ∗ blockIdx.x + threadIdx.x //Angular resolution |
| 4: | Y index: idxY ← blockDim.y ∗ blockIdx.y + threadIdx.y //Number of candidates |
| 5: | if (idxX < ) and (idxY < ) then |
| 6: | Angle : [idxY] //Refined angle |
| 7: | Coordinate X: [idxY] |
| 8: | Coordinate Y: [idxY] |
| 9: | Scale s: s [idxY] |
| 10: | for j in h do |
| 11: | for i in w do |
| 12: | Collect intensity pixel values inside a search window using a bilinear interpolation approach with a center point at (x, y), an orientation: , and a scale: s |
| 13: | end |
| 14: | end |
| 15: | Calculate the NCC score between the template and the search window |
| 16: | end |
| 17: | Return |
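Algorithm 2 follows the same pattern, but the X index walks over angular steps instead of pixel offsets and the window is sampled with bilinear interpolation. The Python sketch below is a CPU emulation under stated assumptions, not the authors' kernel: the refined angle is taken to be the candidate angle plus `(idx_x - n_steps // 2) * alpha_deg`, where `n_steps` is a hypothetical number of angular steps, and all function names are illustrative.

```python
import math


def bilinear(image, x, y):
    """Bilinearly interpolated intensity at a real-valued (x, y), clamped to the image."""
    rows, cols = len(image), len(image[0])
    x = min(max(x, 0.0), cols - 1.0)
    y = min(max(y, 0.0), rows - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def sample_window_bilinear(image, cx, cy, angle_deg, scale, w, h):
    """Collect w*h interpolated samples from a rotated, scaled window centred at (cx, cy)."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    out = []
    for j in range(h):
        for i in range(w):
            u = (i - (w - 1) / 2) * scale
            v = (j - (h - 1) / 2) * scale
            out.append(bilinear(image, cx + c * u - s * v, cy + s * u + c * v))
    return out


def ncc(a, b):
    """Normalized cross-correlation of two equal-length intensity lists."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    num = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    den = math.sqrt(sum((p - mean_a) ** 2 for p in a)
                    * sum((q - mean_b) ** 2 for q in b))
    return num / den if den > 0 else 0.0


def refine_angle(image, template, candidates, alpha_deg, n_steps):
    """Score n_steps angles spaced alpha_deg apart around each candidate angle.

    candidates: list of (x, y, angle_deg, scale) tuples.
    Returns scores[idx_y][idx_x]; the angle layout is an assumption.
    """
    h, w = len(template), len(template[0])
    flat_t = [p for row in template for p in row]
    scores = [[0.0] * n_steps for _ in candidates]
    for idx_y, (x, y, angle0, scale) in enumerate(candidates):
        for idx_x in range(n_steps):
            angle = angle0 + (idx_x - n_steps // 2) * alpha_deg  # refined angle
            window = sample_window_bilinear(image, x, y, angle, scale, w, h)
            scores[idx_y][idx_x] = ncc(flat_t, window)
    return scores
```

The refined angle for a candidate is the step with the highest score in its row. Because the location and scale stay fixed per candidate, only the rotation of the sampling grid changes between threads in the same row.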

6. Experimental Results and Analysis

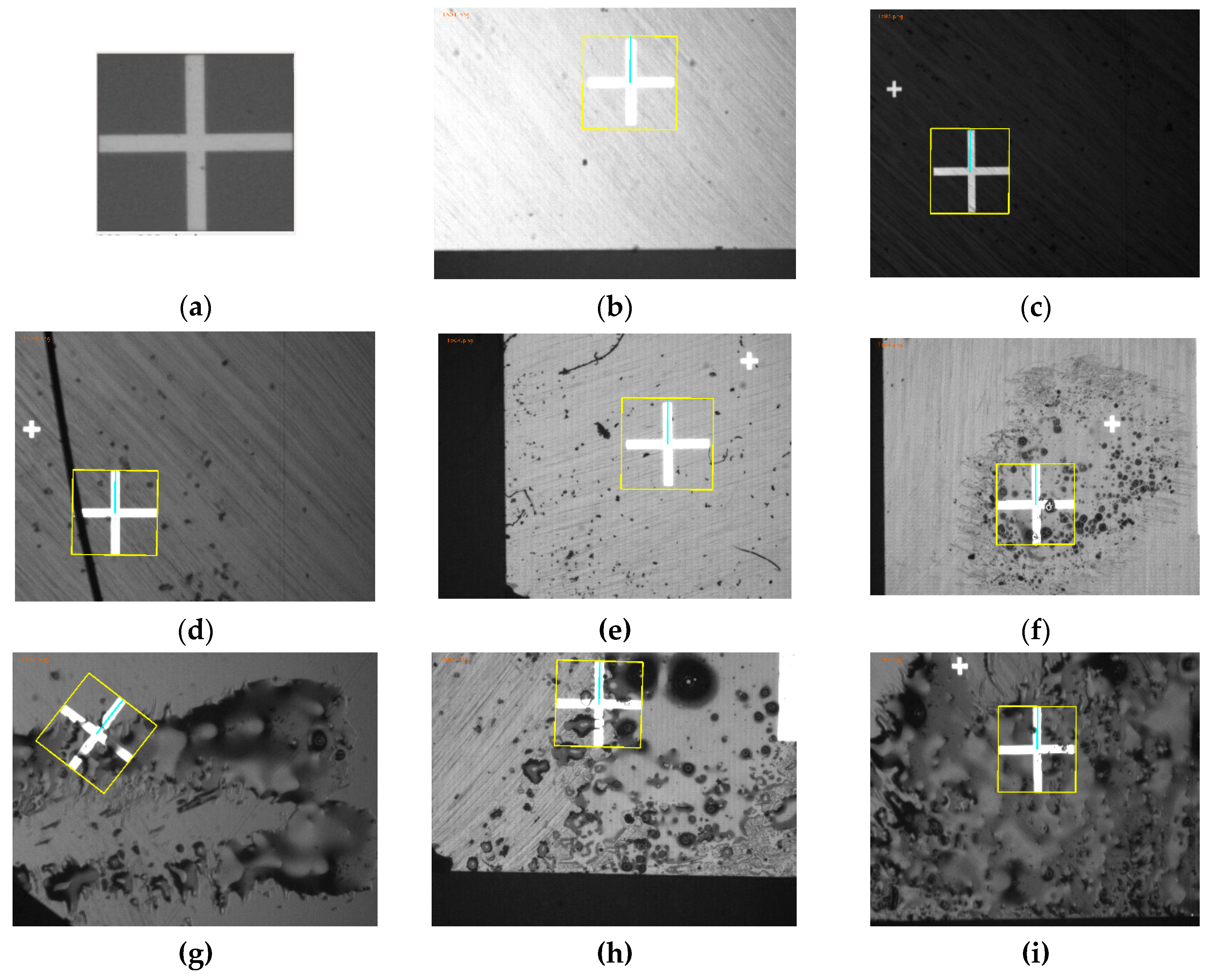

6.1. Data Collection

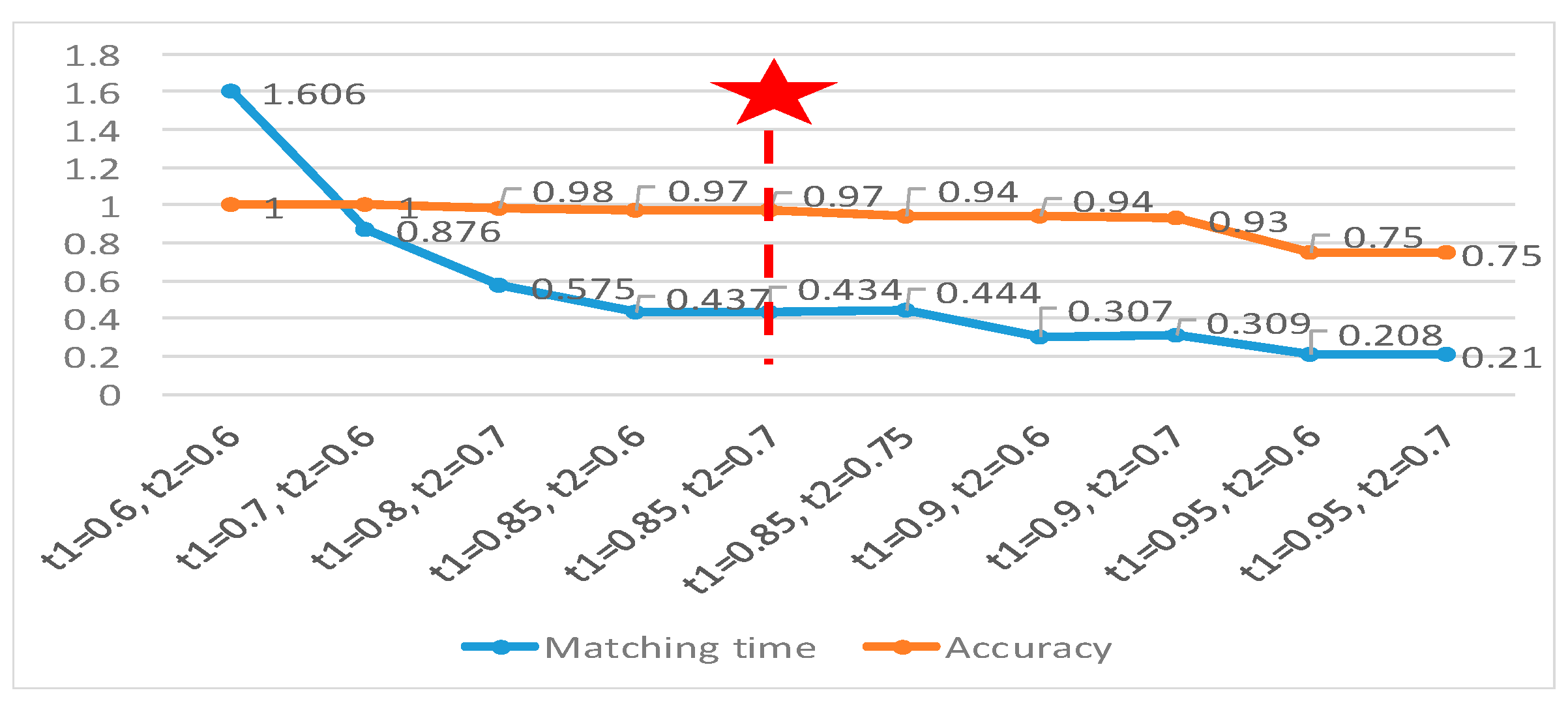

6.2. Results and Analysis

6.2.1. Comparative Evaluation Experiments Using Fiducial Marks Dataset

6.2.2. Comparative Evaluation Experiments Using PCB Component Dataset

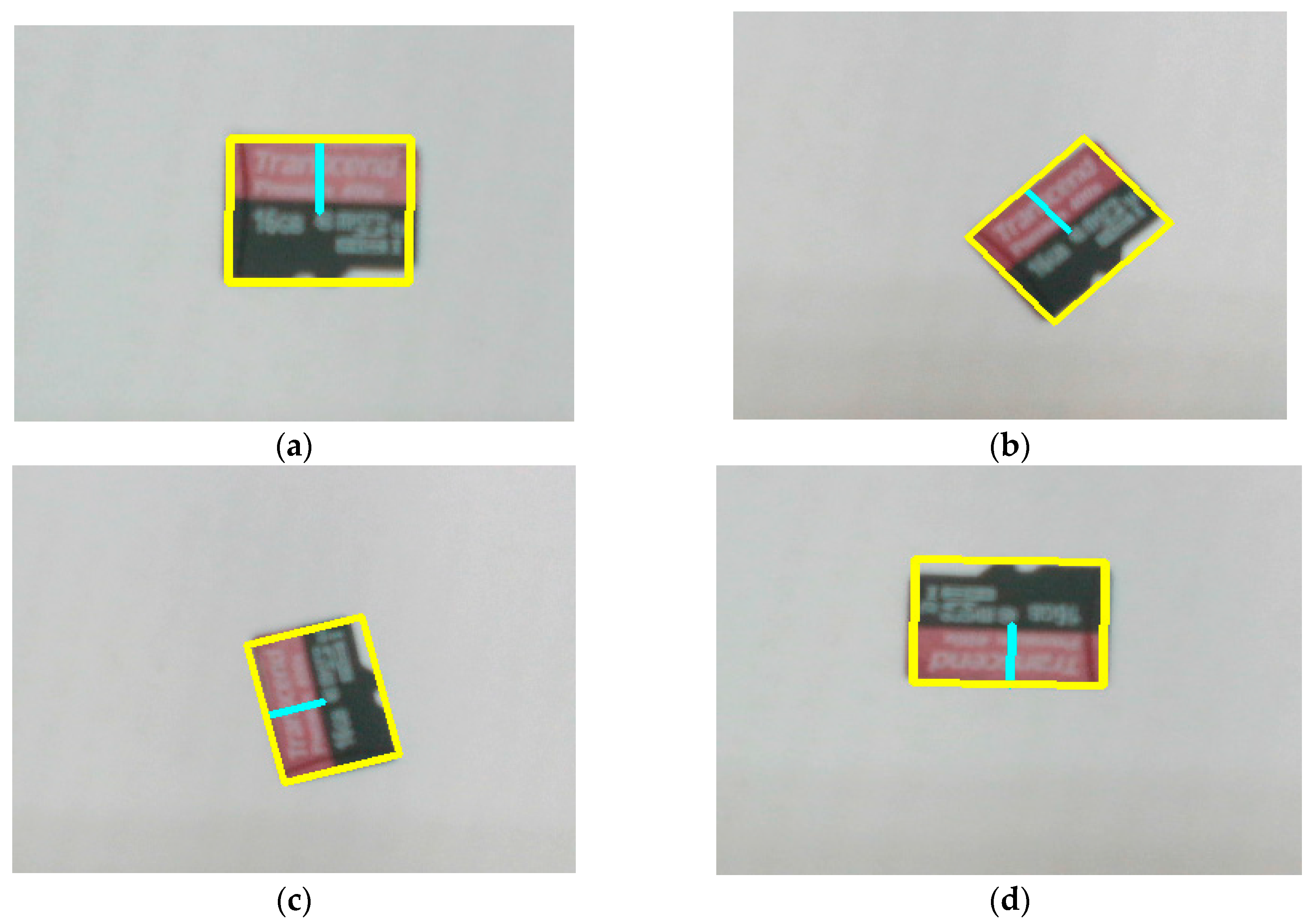

6.2.3. Comparative Evaluation Experiments Using SD Cards Dataset

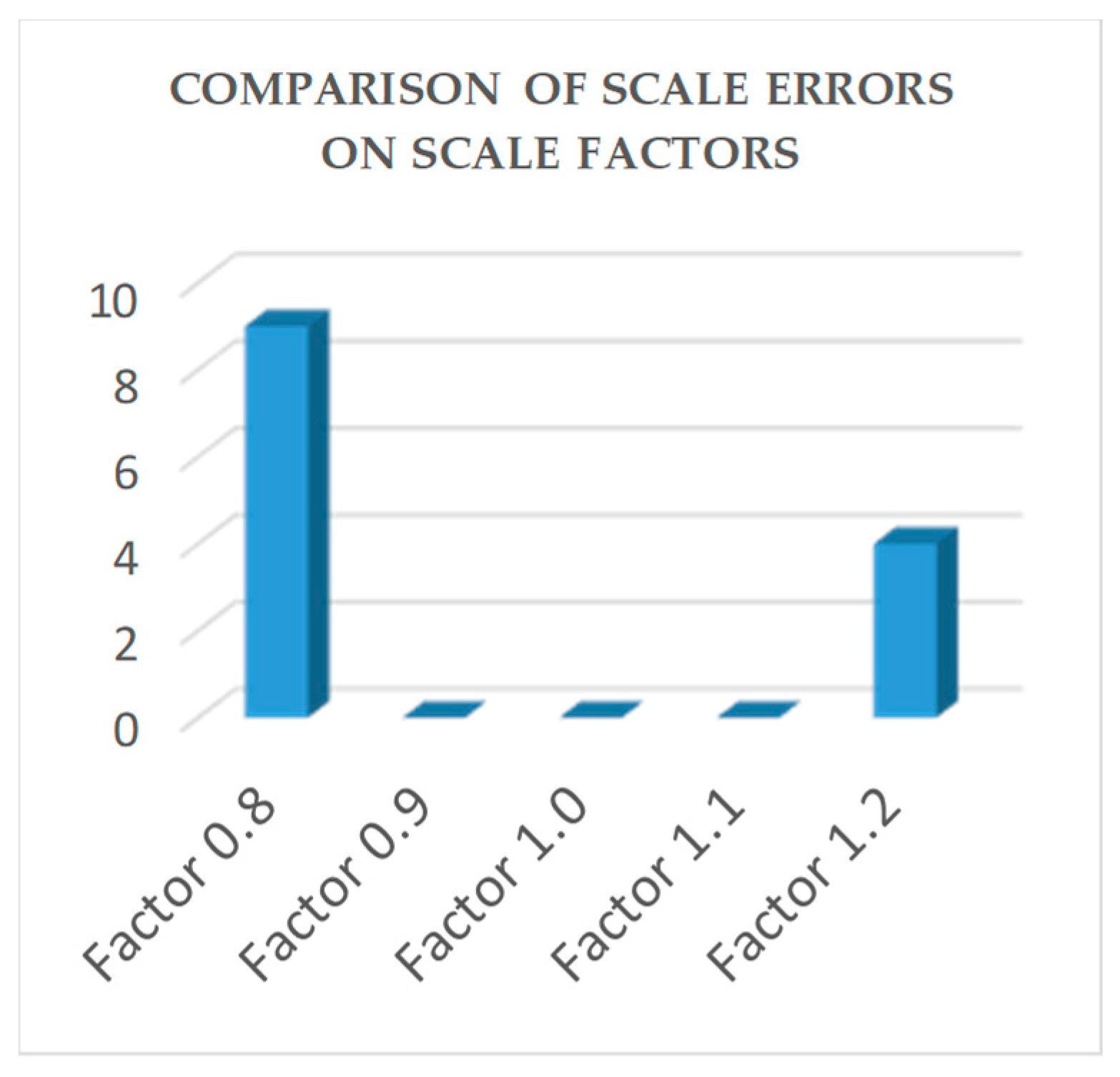

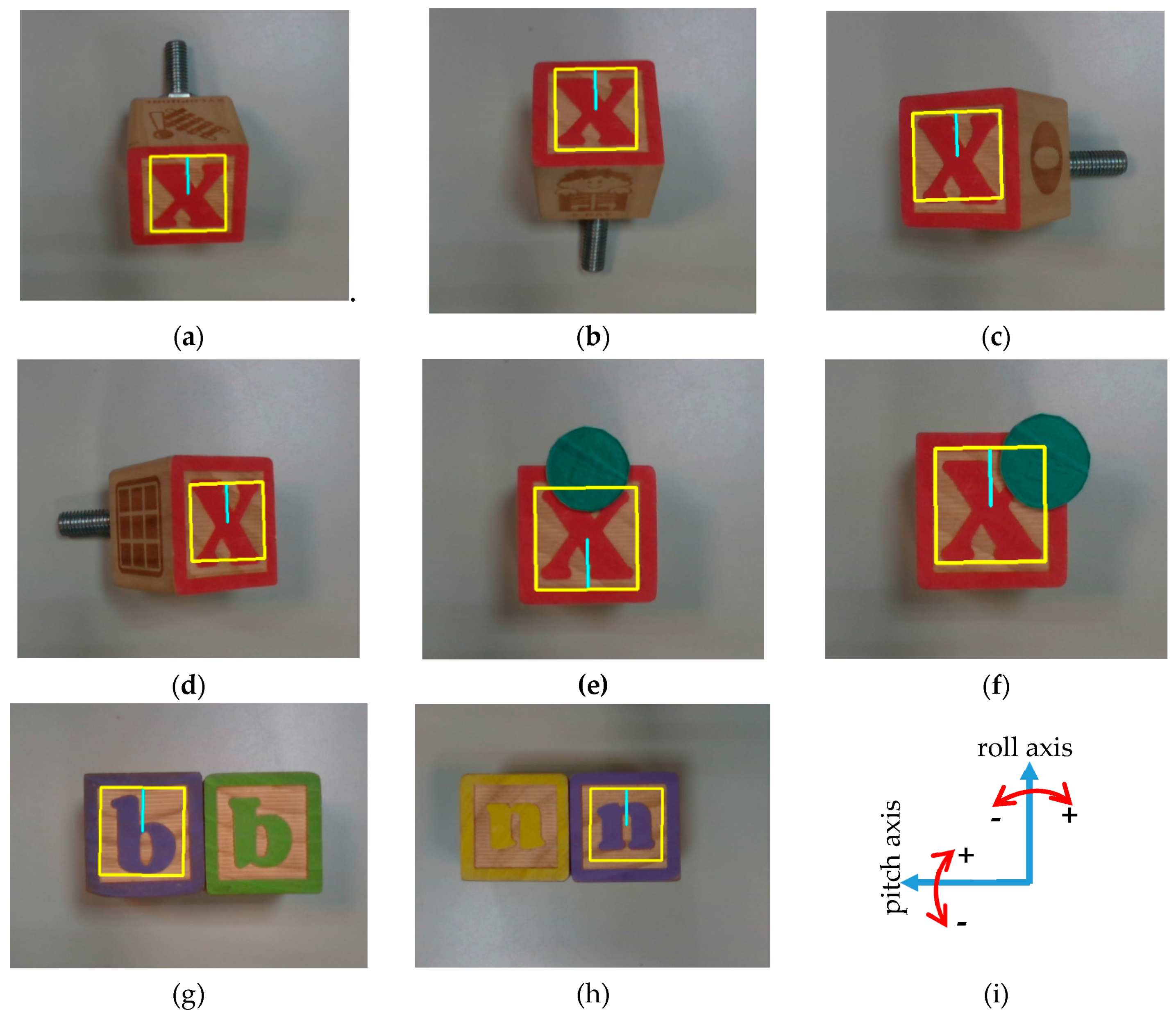

6.2.4. Performance Evaluation Experiments Using Alphabet Blocks Dataset

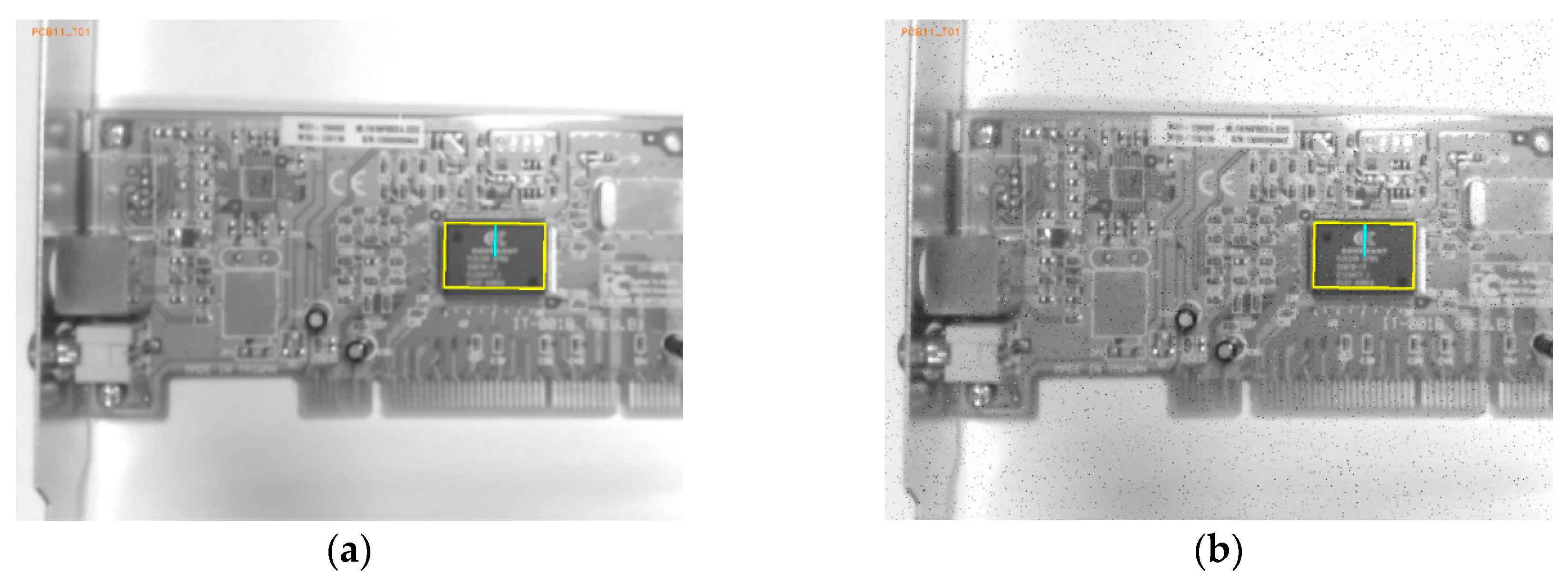

6.2.5. Cross-Hair Mark Detection Experiments Using emGPU-Improved RST

6.2.6. PCB Alignment Experiments Using emGPU-Improved RST

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Nguyen, H.D. Camera System for Aligning Components of a PCB. US Patent 9,265,186 B2, 16 February 2016.

2. Kuo, C.-F.J.; Tsai, C.-H.; Wang, W.-R.; Wu, H.-C. Automatic marking point positioning of printed circuit boards based on template matching technique. J. Intell. Manuf. 2016, 30, 671–685.

3. Tsai, D.-M.; Hsieh, Y.-C. Machine Vision-Based Positioning and Inspection Using Expectation–Maximization Technique. IEEE Trans. Instrum. Meas. 2017, 66, 2858–2868.

4. Zhong, F.; He, S.; Li, B. Blob analyzation-based template matching algorithm for LED chip localization. Int. J. Adv. Manuf. Technol. 2015, 93, 55–63.

5. Opromolla, R.; Fasano, G.; Accardo, D. A Vision-Based Approach to UAV Detection and Tracking in Cooperative Applications. Sensors 2018, 18, 3391.

6. Dybedal, J.; Aalerud, A.; Hovland, G. Embedded Processing and Compression of 3D Sensor Data for Large Scale Industrial Environments. Sensors 2019, 19, 636.

7. Lee, S.-H.; Yang, C.-S. A Real Time Object Recognition and Counting System for Smart Industrial Camera Sensor. IEEE Sens. J. 2017, 17, 2516–2523.

8. Sassi, P.; Tripicchio, P.; Avizzano, C.A. A Smart Monitoring System for Automatic Welding Defect Detection. IEEE Trans. Ind. Electron. 2019, 66, 9641–9650.

9. Avizzano, C.A.; Tripicchio, P.; Ruffaldi, E.; Filippeschi, A.; Jacinto-Villegas, J.M. Real-Time Embedded Vision System for the Watchfulness Analysis of Train Drivers. IEEE Trans. Intell. Transp. Syst. 2019, 1–11.

10. Le, M.-T.; Li, C.-H.G.; Guo, S.-M.; Lien, J.-J.J. Embedded-Based Object Matching and Robot Arm Control. In Proceedings of the 2019 IEEE 15th International Conference on Automation Science and Engineering (CASE), Vancouver, BC, Canada, 22–26 August 2019; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2019; pp. 1296–1301.

11. Zhao, M.; Hu, C.; Wei, F.; Wang, K.; Wang, C.; Jiang, Y. Real-Time Underwater Image Recognition with FPGA Embedded System for Convolutional Neural Network. Sensors 2019, 19, 350.

12. Wofk, D.; Ma, F.; Yang, T.-J.; Karaman, S.; Sze, V. FastDepth: Fast Monocular Depth Estimation on Embedded Systems. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2019; pp. 6101–6108.

13. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2018; pp. 4510–4520.

14. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2018; pp. 6848–6856.

15. Wang, R.J.; Li, X.; Ao, S.; Ling, C.X. Pelee: A Real-Time Object Detection System on Mobile Devices. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8 December 2018; pp. 1963–1972.

16. Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. MnasNet: Platform-Aware Neural Architecture Search for Mobile. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2019; pp. 2815–2823.

17. Jeon, D.; Kim, D.-H.; Ha, Y.-G.; Tyan, V. Image processing acceleration for intelligent unmanned aerial vehicle on mobile GPU. Soft Comput. 2015, 20, 1713–1720.

18. Zhang, J.; Li, J. Improving the Performance of OpenCL-based FPGA Accelerator for Convolutional Neural Network. In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays-FPGA ’17, Monterey, CA, USA, 22–24 February 2017; Association for Computing Machinery (ACM): New York, NY, USA, 2017; pp. 25–34.

19. Gong, T.; Fan, T.; Guo, J.; Cai, Z. GPU-based parallel optimization of immune convolutional neural network and embedded system. Eng. Appl. Artif. Intell. 2017, 62, 384–395.

20. Juárez, D.H.; Chacón, A.; Espinosa, A.; Vázquez, D.; Moure, J.C.; López, A.M. Embedded Real-time Stereo Estimation via Semi-global Matching on the GPU. Procedia Comput. Sci. 2016, 80, 143–153.

21. Amert, T.; Otterness, N.; Yang, M.; Anderson, J.H.; Smith, F.D. GPU Scheduling on the NVIDIA TX2: Hidden Details Revealed. In Proceedings of the 2017 IEEE Real-Time Systems Symposium (RTSS), Paris, France, 5–8 December 2017; pp. 104–115.

22. Hossain, S.; Lee, D.-J. Deep Learning-Based Real-Time Multiple-Object Detection and Tracking from Aerial Imagery via a Flying Robot with GPU-Based Embedded Devices. Sensors 2019, 19, 3371.

23. Kim, H.Y.; De Araújo, S.A. Grayscale Template-Matching Invariant to Rotation, Scale, Translation, Brightness and Contrast. In Proceedings of the 2nd Pacific Rim Symposium on Video and Image Technology (PSIVT 2007), Santiago, Chile, 17–19 December 2007; pp. 100–113.

24. Kim, H.Y. Rotation-discriminating template matching based on Fourier coefficients of radial projections with robustness to scaling and partial occlusion. Pattern Recognit. 2010, 43, 859–872.

25. Hsu, F.-H.; Shen, C.-A. The Design and Implementation of an Embedded Real-Time Automated IC Marking Inspection System. IEEE Trans. Semicond. Manuf. 2018, 32, 112–120.

26. Annaby, M.H.; Fouda, Y.; Rushdi, M.A. Improved Normalized Cross-Correlation for Defect Detection in Printed-Circuit Boards. IEEE Trans. Semicond. Manuf. 2019, 32, 199–211.

27. Shih, H.-C.; Yu, K.-C. SPiraL Aggregation Map (SPLAM): A new descriptor for robust template matching with fast algorithm. Pattern Recognit. 2015, 48, 1707–1723.

28. Lai, J.; Lei, L.; Deng, K.; Yan, R.; Ruan, Y.; Jinyun, Z. Fast and robust template matching with majority neighbour similarity and annulus projection transformation. Pattern Recognit. 2020, 98, 107029.

29. Chen, F.; Ye, X.; Yin, S.; Ye, Q.; Huang, S.; Tang, Q. Automated vision positioning system for dicing semiconductor chips using improved template matching method. Int. J. Adv. Manuf. Technol. 2018, 100, 2669–2678.

30. Wu, T.; Toet, A. Speed-up template matching through integral image based weak classifiers. J. Pattern Recognit. Res. 2014, 1, 1–12.

31. Cai, J.; Huang, P.; Zhang, B.; Wang, D. A TSR Visual Servoing System Based on a Novel Dynamic Template Matching Method †. Sensors 2015, 15, 32152–32167.

32. Liu, B.; Shu, X.; Wu, X. Fast Screening Algorithm for Rotation Invariant Template Matching. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2018; pp. 3708–3712.

33. Rakvic, R.; Broussard, R.; Ngo, H. Energy Efficient Iris Recognition With Graphics Processing Units. IEEE Access 2016, 4, 2831–2839.

34. Mu, D.; Lee, E.-J.; Chen, P. Rapid earthquake detection through GPU-Based template matching. Comput. Geosci. 2017, 109, 305–314.

35. Beaucé, E.; Frank, W.B.; Romanenko, A. Fast Matched Filter (FMF): An Efficient Seismic Matched-Filter Search for Both CPU and GPU Architectures. Seism. Res. Lett. 2017, 89, 165–172.

36. Yan, B.; Xiao, L.; Hang, Z.; Xu, D.; Ruan, L.; Wang, Z.; Zhang, Y. An adaptive template matching-based single object tracking algorithm with parallel acceleration. J. Vis. Commun. Image Represent. 2019, 64, 102603.

37. Li, J.; Pan, Y. GPU-based parallel optimization for real-time scale-invariant feature transform in binocular visual registration. Pers. Ubiquitous Comput. 2019, 23, 465–474.

38. Intel. Intel Packaging Databook. 2010. Available online: http://intel-vintage-developer.eu5.org/DESIGN/FLCOMP/PACKDATA/PACKBOOK.HTM (accessed on 8 May 2020).

39. James Lien, J.J. Automatic Recognition of Facial Expressions Using Hidden Markov Models and Estimation of Expression Intensity. Ph.D. Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, April 1998.

| Dataset | Size of Test Images (pixels) | No. of Templates | No. of Test Images |
|---|---|---|---|
| Fiducial Marks | 640 × 480 | 10 | 200 |
| Fiducial Marks | 800 × 600 | 10 | 200 |
| Fiducial Marks | 1280 × 960 | 10 | 200 |
| PCB Component | 1280 × 960 | 20 | 400 |
| Alphabet Blocks | 1280 × 720 | 10 | 400 |
| Secure Digital (SD) Cards | 640 × 480 | 5 | 50 |
| Cross-hair Marks | 1280 × 960 | 1 | 100 |

| Platform | Method | 640 × 480 pixels: Time (s) | 640 × 480 pixels: Accuracy | 800 × 600 pixels: Time (s) | 800 × 600 pixels: Accuracy | 1280 × 960 pixels: Time (s) | 1280 × 960 pixels: Accuracy |
|---|---|---|---|---|---|---|---|
| PC-based | PC-RST [23] | 0.618 | 98.0% | 1.075 | 98.0% | 1.326 | 97.5% |
| PC-based | FAsT-Match [32] | 0.1 | 99.9% | - | - | 0.4 | 99.8% |
| PC-based | PC-Improved RST | 0.099 | 97.5% | 0.186 | 95.5% | 0.291 | 97.0% |
| Embedded system-based | em-RST | 1.914 | 97.0% | 5.668 | 96.5% | 9.517 | 95.0% |
| Embedded system-based | emCPU-Improved RST | 0.568 | 92.0% | 1.633 | 95.0% | 3.664 | 98.0% |
| Embedded system-based | emGPU-Improved RST | 0.197 | 96.5% | 0.342 | 95.5% | 0.301 | 96.0% |

| Method | Average Matching Time (s) | Accuracy |
|---|---|---|
| emGPU-Improved RST | 0.382 | 94.0% |

| Test Images | Ground Truth (°) | 0.0 | 0.2 | 0.4 | 0.6 | 0.8 | 1.0 | 1.2 | 1.4 | 1.6 | 1.8 | Max. Error (°) | Mean Error (°) | StD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PCBR01 | Predict | 0.0 | 0.1 | 0.3 | 0.5 | 0.8 | 1.0 | 1.2 | 1.3 | 1.5 | 1.7 | | | |
| | Error | 0.0 | 0.1 | 0.1 | 0.1 | 0.0 | 0.0 | 0.0 | 0.1 | 0.1 | 0.1 | 0.10 | 0.06 | 0.052 |
| PCBR02 | Predict | 0.0 | 0.2 | 0.4 | 0.6 | 0.8 | 1.0 | 1.2 | 1.4 | 1.6 | 1.7 | | | |
| | Error | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.1 | 0.10 | 0.01 | 0.032 |
| PCBR03 | Predict | 0.0 | 0.1 | 0.4 | 0.5 | 0.7 | 1.0 | 1.2 | 1.4 | 1.6 | 1.8 | | | |
| | Error | 0.0 | 0.1 | 0.0 | 0.1 | 0.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.10 | 0.03 | 0.048 |
| PCBR04 | Predict | 0.2 | 0.3 | 0.5 | 0.7 | 0.9 | 1.1 | 1.3 | 1.5 | 1.7 | 1.9 | | | |
| | Error | 0.2 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.20 | 0.11 | 0.032 |
| PCBR05 | Predict | 0.0 | 0.1 | 0.3 | 0.5 | 0.7 | 1.0 | 1.2 | 1.3 | 1.5 | 1.7 | | | |
| | Error | 0.0 | 0.1 | 0.1 | 0.1 | 0.1 | 0.0 | 0.0 | 0.1 | 0.1 | 0.1 | 0.10 | 0.07 | 0.048 |
| PCBR06 | Predict | 0.0 | 0.1 | 0.3 | 0.5 | 0.8 | 1.0 | 1.2 | 1.3 | 1.5 | 1.8 | | | |
| | Error | 0.0 | 0.1 | 0.1 | 0.1 | 0.0 | 0.0 | 0.0 | 0.1 | 0.1 | 0.0 | 0.10 | 0.05 | 0.053 |
| PCBR07 | Predict | 0.0 | 0.0 | 0.2 | 0.5 | 0.8 | 1.1 | 1.2 | 1.3 | 1.5 | 1.7 | | | |
| | Error | 0.0 | 0.2 | 0.2 | 0.1 | 0.0 | 0.1 | 0.0 | 0.1 | 0.1 | 0.1 | 0.20 | 0.09 | 0.074 |
| PCBR08 | Predict | 359.7 | 359.9 | 0.1 | 0.3 | 0.5 | 0.7 | 0.9 | 1.1 | 1.3 | 1.5 | | | |
| | Error | 0.3 | 0.3 | 0.3 | 0.3 | 0.3 | 0.3 | 0.3 | 0.3 | 0.3 | 0.3 | 0.30 | 0.30 | 0.00 |
| PCBR09 | Predict | 0.2 | 0.5 | 0.6 | 0.9 | 1.0 | 1.3 | 1.4 | 1.7 | 1.9 | 2.1 | | | |
| | Error | 0.2 | 0.3 | 0.2 | 0.3 | 0.2 | 0.3 | 0.2 | 0.3 | 0.3 | 0.3 | 0.30 | 0.26 | 0.052 |
| PCBR10 | Predict | 359.9 | 0.1 | 0.3 | 0.6 | 0.7 | 0.9 | 1.1 | 1.3 | 1.5 | 1.7 | | | |
| | Error | 0.1 | 0.1 | 0.1 | 0.0 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.10 | 0.09 | 0.032 |
| Average | | | | | | | | | | | | 0.16 | 0.107 | 0.04 |

| Methods | Avg. Matching Time on SD1 (s) | Avg. Matching Time on SD2 (s) | Avg. Matching Time on SD3 (s) | Avg. Matching Time on SD4 (s) | Avg. Matching Time on SD5 (s) | Average Matching Time (s) |
|---|---|---|---|---|---|---|
| [25] | - | - | - | - | - | 0.031 |
| emGPU-Improved RST | 0.025 | 0.024 | 0.025 | 0.043 | 0.023 | 0.028 |

| Experiment (Exp.) | Initial Coordinates, Cross-Mark 1 (pixels) | Initial Coordinates, Cross-Mark 2 (pixels) | Post-Alignment Coordinates, Cross-Mark 1 (pixels) | Post-Alignment Coordinates, Cross-Mark 2 (pixels) | Distance Error, Cross-Mark 1 (μm) | Distance Error, Cross-Mark 2 (μm) |
|---|---|---|---|---|---|---|
| Exp. #1 | x1 = 427, y1 = 604 | x2 = 308, y2 = 700 | x1 = 608, y1 = 488 | x2 = 308, y2 = 700 | 38.8 | 26.8 |
| Exp. #2 | x1 = 814, y1 = 580 | x2 = 694, y2 = 697 | x1 = 605, y1 = 471 | x2 = 694, y2 = 697 | 43.5 | 58.2 |
| Exp. #3 | x1 = 426, y1 = 288 | x2 = 301, y2 = 416 | x1 = 608, y1 = 471 | x2 = 301, y2 = 416 | 49.5 | 28.6 |
| Exp. #4 | x1 = 783, y1 = 295 | x2 = 661, y2 = 408 | x1 = 608, y1 = 478 | x2 = 661, y2 = 408 | 35.9 | 21.1 |
| Exp. #5 | x1 = 755, y1 = 541 | x2 = 632, y2 = 420 | x1 = 602, y1 = 488 | x2 = 632, y2 = 420 | 24.0 | 3.3 |
| Exp. #6 | x1 = 408, y1 = 283 | x2 = 286, y2 = 432 | x1 = 605, y1 = 478 | x2 = 286, y2 = 432 | 26.8 | 23.6 |
| Exp. #7 | x1 = 500, y1 = 341 | x2 = 378, y2 = 572 | x1 = 595, y1 = 476 | x2 = 378, y2 = 572 | 22.4 | 30.1 |
| Exp. #8 | x1 = 781, y1 = 551 | x2 = 659, y2 = 666 | x1 = 606, y1 = 482 | x2 = 659, y2 = 666 | 26.7 | 18.8 |
| Exp. #9 | x1 = 644, y1 = 682 | x2 = 524, y2 = 754 | x1 = 611, y1 = 488 | x2 = 524, y2 = 754 | 47.7 | 31.6 |
| Exp. #10 | x1 = 574, y1 = 227 | x2 = 449, y2 = 325 | x1 = 612, y1 = 478 | x2 = 449, y2 = 325 | 48.5 | 34.3 |
| Average Distance Error (μm) | | | | | 36.4 | 27.6 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Le, M.-T.; Tu, C.-T.; Guo, S.-M.; Lien, J.-J.J. A PCB Alignment System Using RST Template Matching with CUDA on Embedded GPU Board. Sensors 2020, 20, 2736. https://doi.org/10.3390/s20092736
