A Doorway Detection and Direction (3Ds) System for Social Robots via a Monocular Camera

Abstract

1. Introduction

2. Related Research

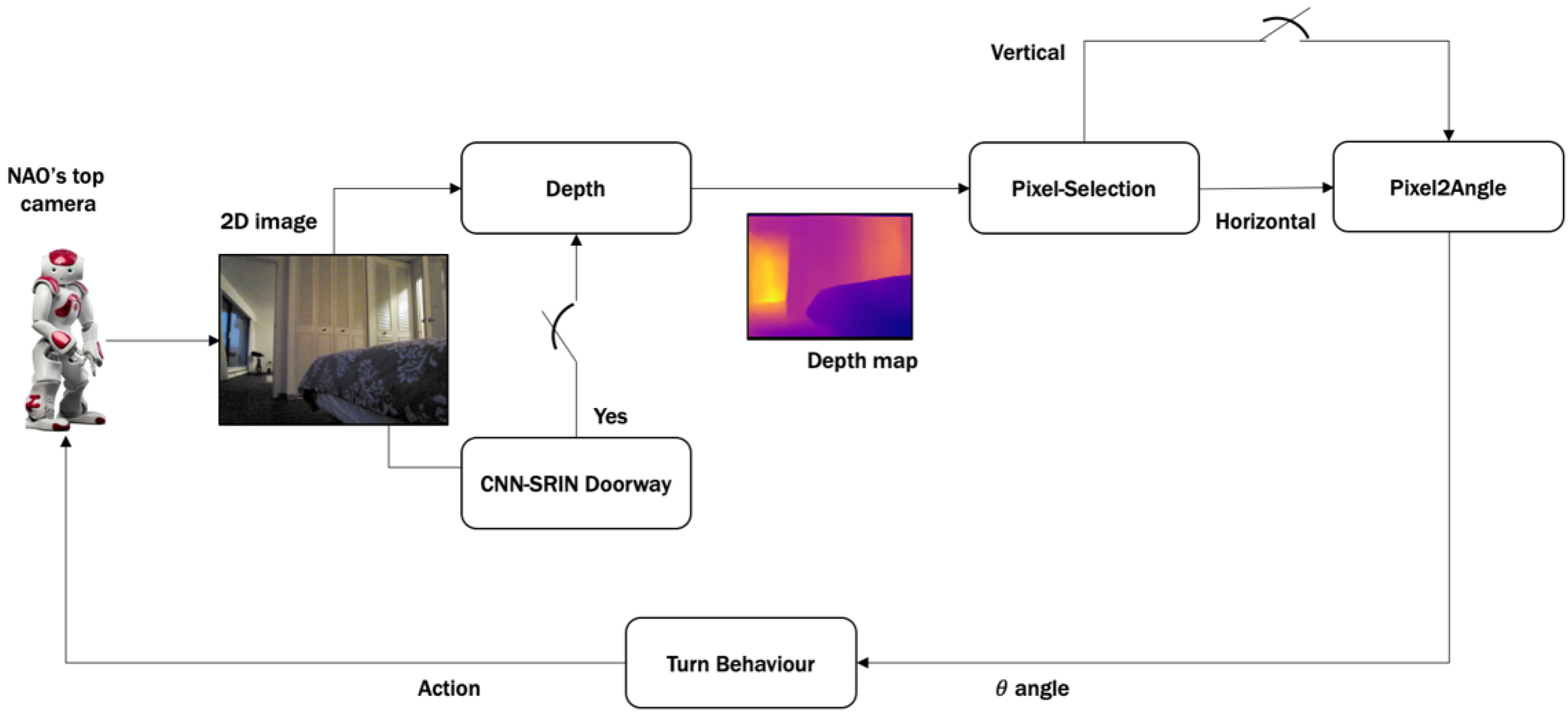

3. Proposed System and Methodology

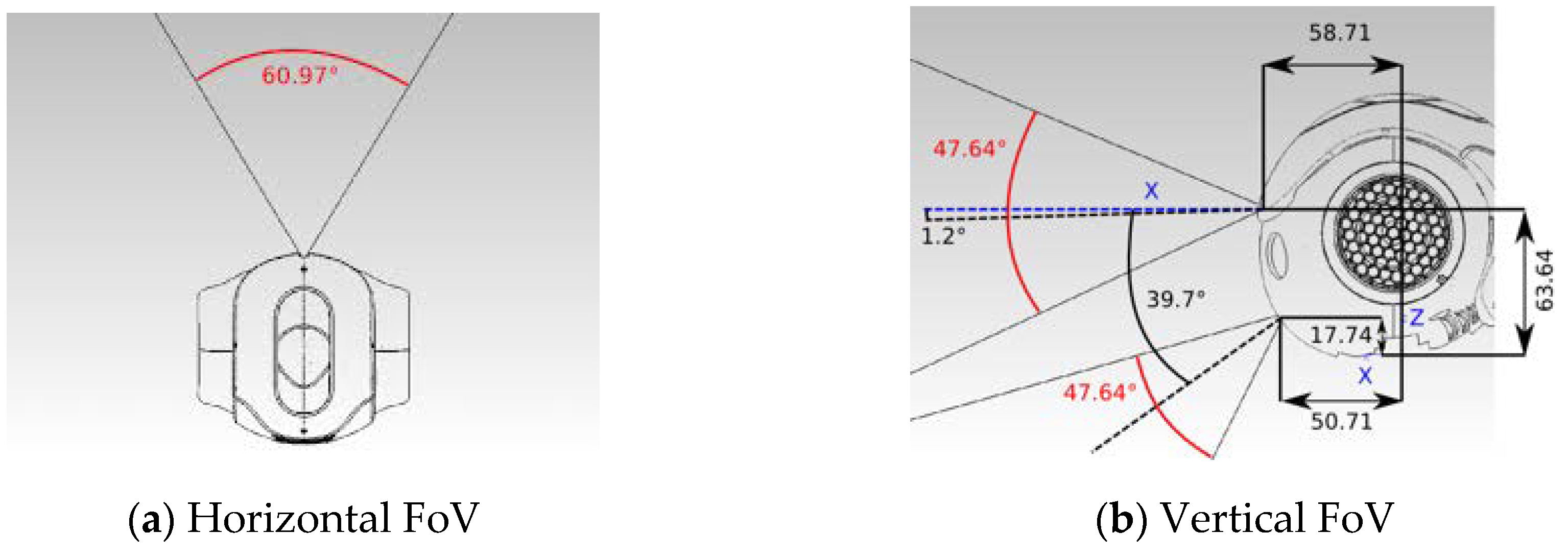

3.1. 2D Image from Nao Monocular Camera

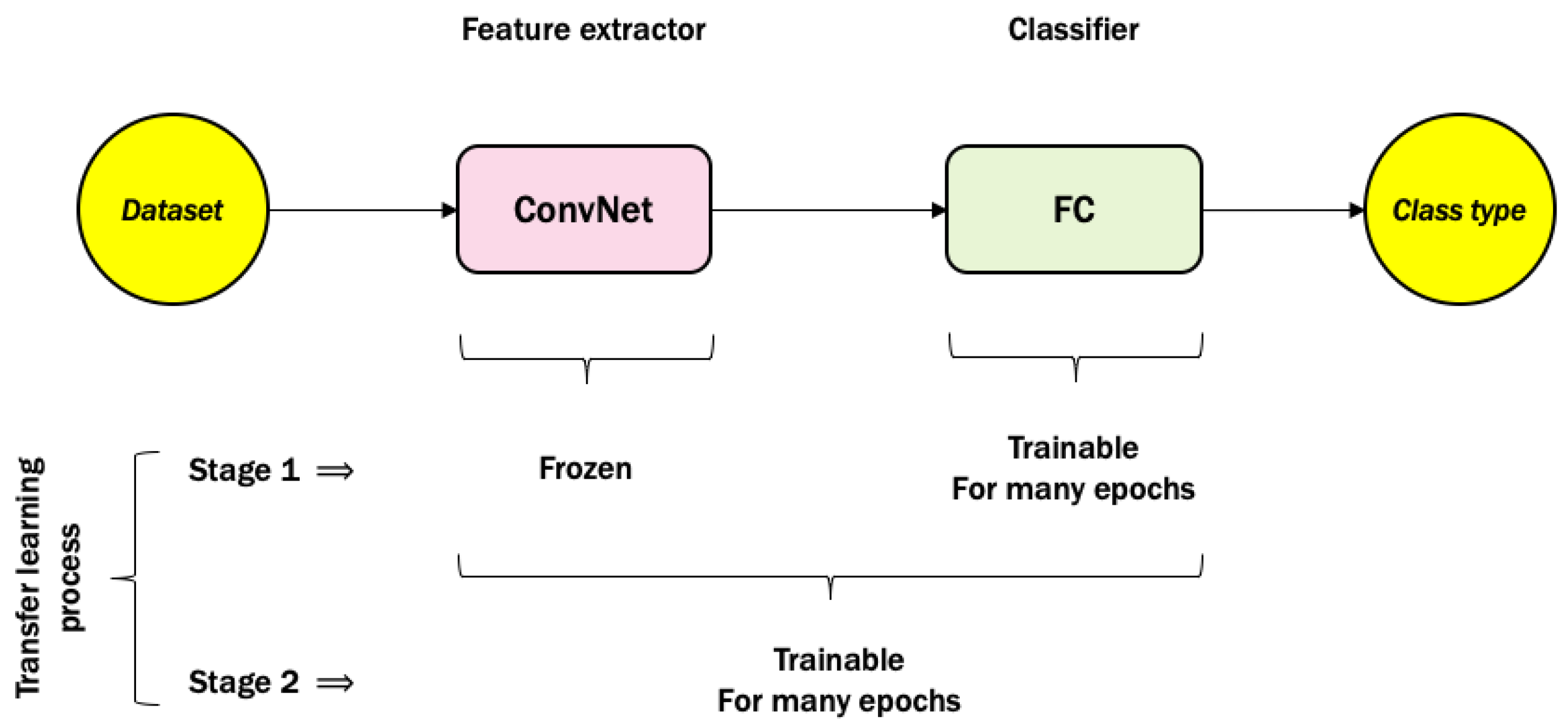

3.2. CNN-SRIN Doorway Module

3.3. Depth Module

3.4. Pixel-Selection Module

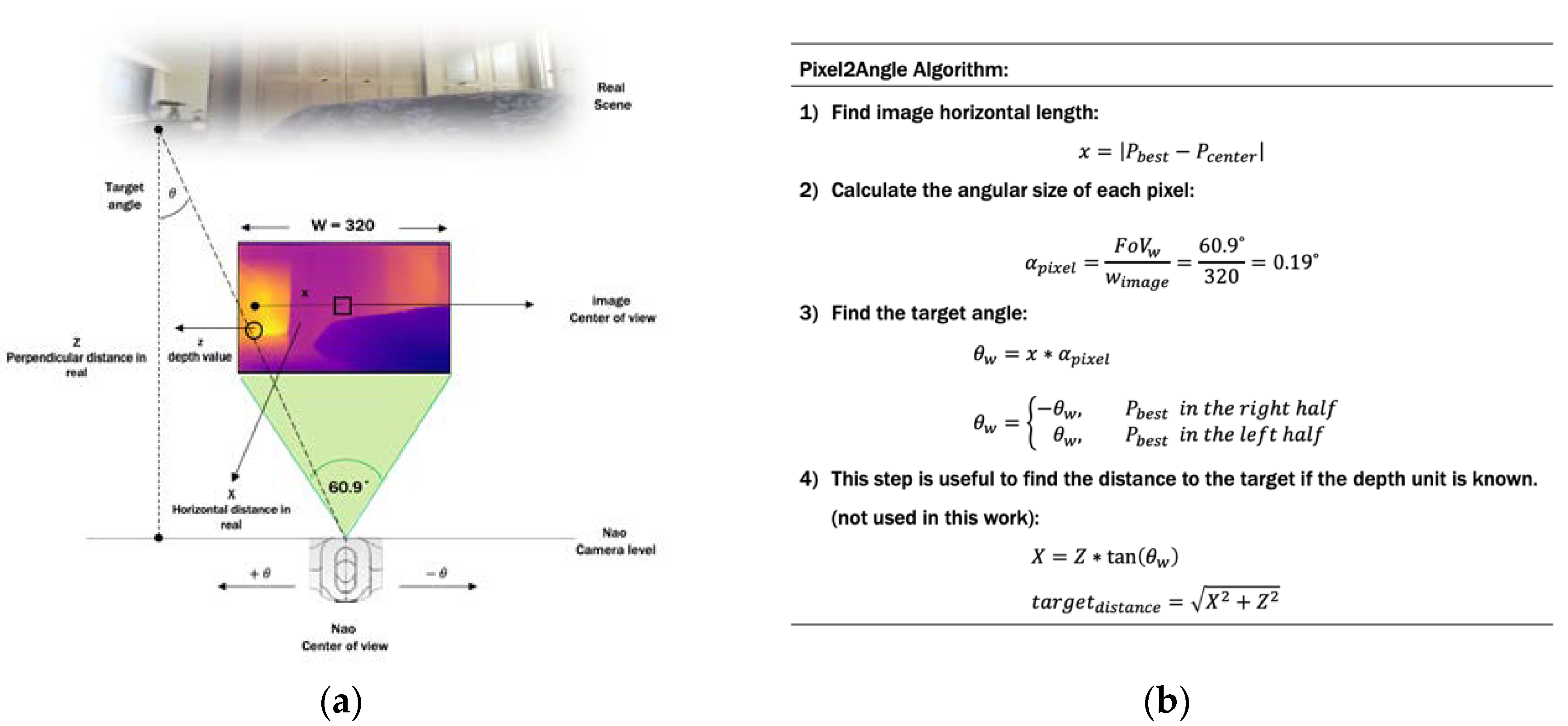

3.5. Pixel2Angle Module

4. Experiments and Results

4.1. Stage 1: CNN-SRIN for Doorway Detection

4.2. Stage 2: Angle Extraction from 2D Images Based on Depth Map and Pixel Selection

4.3. Validating the Overall Performance of 3Ds-System in Real-Time Experiments with Nao Humanoid Robot

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Othman, K.M.; Rad, A.B. An Indoor Room Classification System for Social Robots via Integration of CNN and ECOC. Appl. Sci. 2019, 9, 470.

- Othman, K.M.; Rad, A.B. SRIN: A New Dataset for Social Robot Indoor Navigation. Glob. J. Eng. Sci. 2020, 4.

- Brooks, R. A robust layered control system for a mobile robot. IEEE J. Robot. Autom. 1986, 2, 14–23.

- Anguelov, D.; Koller, D.; Parker, E.; Thrun, S. Detecting and modeling doors with mobile robots. In Proceedings of the IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004.

- Lee, J.-S.; Doh, N.L.; Chung, W.K.; You, B.-J.; Youm, Y. Il Door Detection Algorithm of Mobile Robot in Hallway Using PC-Camera. In Proceedings of the 21st International Symposium on Automation and Robotics in Construction, Taipei, Taiwan, 28 June–1 July 2017.

- Tian, Y.; Yang, X.; Arditi, A. Computer vision-based door detection for accessibility of unfamiliar environments to blind persons. In Lecture Notes in Computer Science; Including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics; Springer: Berlin/Heidelberg, Germany, 2010.

- Derry, M.; Argall, B. Automated doorway detection for assistive shared-control wheelchairs. In Proceedings of the IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013.

- Kakillioglu, B.; Ozcan, K.; Velipasalar, S. Doorway detection for autonomous indoor navigation of unmanned vehicles. In Proceedings of the International Conference on Image Processing, ICIP, Phoenix, AZ, USA, 25–28 September 2016.

- Fernández-Caramés, C.; Moreno, V.; Curto, B.; Rodríguez-Aragón, J.F.; Serrano, F.J. A Real-time Door Detection System for Domestic Robotic Navigation. J. Intell. Robot. Syst. Theory Appl. 2014, 76, 119–136.

- Hensler, J.; Blaich, M.; Bittel, O. Real-time door detection based on AdaBoost learning algorithm. In Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2010.

- Chen, W.; Qu, T.; Zhou, Y.; Weng, K.; Wang, G.; Fu, G. Door recognition and deep learning algorithm for visual based robot navigation. In Proceedings of the 2014 IEEE International Conference on Robotics and Biomimetics, IEEE ROBIO 2014, Bali, Indonesia, 5–10 December 2014.

- Jin, R.; Andonovski, B.; Tu, Z.; Wang, J.; Yuan, J.; Tham, D.M. A framework based on deep learning and mathematical morphology for cabin door detection in an automated aerobridge docking system. In Proceedings of the 2017 Asian Control Conference, ASCC 2017, Gold Coast, Australia, 17–20 December 2017.

- Zhang, H.; Dou, L.; Fang, H.; Chen, J. Autonomous indoor exploration of mobile robots based on door-guidance and improved dynamic window approach. In Proceedings of the 2009 IEEE International Conference on Robotics and Biomimetics, ROBIO 2009, Guilin, China, 18–22 December 2009.

- Meeussen, W.; Wise, M.; Glaser, S.; Chitta, S.; McGann, C.; Mihelich, P.; Marder-Eppstein, E.; Muja, M.; Eruhimov, V.; Foote, T.; et al. Autonomous door opening and plugging in with a personal robot. In Proceedings of the IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 4–8 May 2010.

- Nieuwenhuisen, M.; Stückler, J.; Behnke, S. Improving indoor navigation of autonomous robots by an explicit representation of doors. In Proceedings of the IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 4–8 May 2010.

- Goil, A.; Derry, M.; Argall, B.D. Using machine learning to blend human and robot controls for assisted wheelchair navigation. In Proceedings of the IEEE International Conference on Rehabilitation Robotics, Seattle, WA, USA, 24–26 June 2013.

- Dai, D.; Jiang, G.; Xin, J.; Gao, X.; Cui, L.; Ou, Y.; Fu, G. Detecting, locating and crossing a door for a wide indoor surveillance robot. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics, ROBIO 2013, Shenzhen, China, 12–14 December 2013.

- Pasteau, F.; Narayanan, V.K.; Babel, M.; Chaumette, F. A visual servoing approach for autonomous corridor following and doorway passing in a wheelchair. Rob. Auton. Syst. 2016, 75, 28–40.

- Criminisi, A.; Reid, I.; Zisserman, A. Single view metrology. Int. J. Comput. Vis. 2000, 40, 123–148.

- Furukawa, Y.; Curless, B.; Seitz, S.M.; Szeliski, R. Reconstructing building interiors from images. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2010.

- Fuhrmann, S.; Langguth, F.; Goesele, M. MVE—A Multi-View Reconstruction Environment. In Proceedings of the Eurographics Workshop on Graphics and Cultural Heritage, Darmstadt, Germany, 6–8 October 2014.

- Fuhrmann, S.; Langguth, F.; Moehrle, N.; Waechter, M.; Goesele, M. MVE—An image-based reconstruction environment. Comput. Graph. 2015, 53, 44–53.

- Saxena, A.; Chung, S.H.; Ng, A.Y. 3-D depth reconstruction from a single still image. Int. J. Comput. Vis. 2008, 76, 53–69.

- Alhashim, I.; Wonka, P. High Quality Monocular Depth Estimation via Transfer Learning. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Yu, T.; Zou, J.-H.; Song, Q.-B. 3D Reconstruction from a Single Still Image Based on Monocular Vision of an Uncalibrated Camera. In Proceedings of the ITM Web of Conferences, Lublin, Poland, 23–25 November 2017; Volume 12, p. 1018.

- Aslantas, V. A depth estimation algorithm with a single image. Opt. Express 2007, 15, 5024–5029.

- Tang, C.; Hou, C.; Song, Z. Depth recovery and refinement from a single image using defocus cues. J. Mod. Opt. 2015, 62, 204–211.

- Khanna, M.T.; Rai, K.; Chaudhury, S.; Lall, B. Perceptual depth preserving saliency based image compression. In Proceedings of the 2nd International Conference on Perception and Machine Intelligence, Kolkata, West Bengal, India, 26–27 February 2015.

- Nao Documentation. Available online: http://doc.aldebaran.com/2-1/home_nao.html (accessed on 1 December 2019).

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor Segmentation and Support Inference from RGBD Images. In Proceedings of the Computer Vision—ECCV, Florence, Italy, 7–13 October 2012; Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 746–760.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Rob. Res. 2013, 32, 1231–1237.

- Chollet, F. Keras Documentation. Keras.Io. 2015. Available online: https://keras.io (accessed on 30 January 2020).

- Compute Canada. Available online: https://www.computecanada.ca (accessed on 1 December 2019).

| Nao image (no-door) | CNN-SRIN prediction | Nao image (open-door) | CNN-SRIN prediction |
|---|---|---|---|
|  | No-door |  | Open-door |
|  | Open-door (false) |  | No-door (false) |
|  | No-door |  | Open-door |
|  | No-door |  | Open-door |
|  | No-door |  | Open-door |
|  | No-door |  | Open-door |

| 12 images |  | Predicted: No-door | Predicted: Open-door |
|---|---|---|---|
| Actual | No-door = 6 | TN = 5 | FP = 1 |
|  | Open-door = 6 | FN = 1 | TP = 5 |
| Percentage |  | Correct (TN + TP): 83.3% | Incorrect (FP + FN): 16.7% |
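As a quick check of the percentages in the table above, the short sketch below recomputes them from the four counts. The variable names are illustrative only, and open-door is assumed to be the positive class.

```python
# Counts taken from the 12-image confusion matrix.
# Assumption: "open-door" is treated as the positive class.
tn, fp = 5, 1  # actual no-door images: correctly / wrongly classified
tp, fn = 5, 1  # actual open-door images: correctly / wrongly classified

total = tn + fp + tp + fn
accuracy = (tp + tn) / total    # share of correct predictions
error_rate = (fp + fn) / total  # share of wrong predictions

print(f"accuracy = {accuracy:.1%}, error rate = {error_rate:.1%}")
```

With these counts the two shares are 10/12 and 2/12, which match the 83.3% and 16.7% reported in the table.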

| No. | Nao 2D Image | CNN-SRIN Trigger | Depth Map (240 × 320) | Max Pixel | Max Depth | Best Pixel | Best Depth | Vertical Trigger | Angle (degrees) |
|---|---|---|---|---|---|---|---|---|---|
| 1 |  | Yes |  | [185, 194] | 0.24 | [185, 255] | 0.23 | True [238, 255] | −18.1 |
| 2 |  | Yes |  | [145, 157] | 0.46 | [145, 201] | 0.40 | False [185, 201] | n/a |
| 3 |  | Yes |  | [120, 130] | 0.50 | [120, 135] | 0.50 | True [238, 135] | 4.8 |
| 4 |  | Yes |  | [183, 73] | 0.37 | [183, 42] | 0.32 | True [238, 42] | 22.5 |
| 5 |  | Yes |  | [166, 41] | 0.52 | [166, 39] | 0.52 | True [238, 39] | 23.0 |
| 6 |  | No | - | - | - | - | - | - | - |
| 7 |  | Yes (False) |  | [188, 0] | 0.27 | [188, 19] | 0.23 | True [238, 19] | 26.8 |
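The angle values in the table above are consistent with a simple linear mapping from the column of the best pixel to a heading angle. The sketch below illustrates that reading under stated assumptions and is not necessarily the authors' exact Pixel2Angle implementation: it assumes a 320-pixel-wide image, a horizontal field of view of 60.9° (a value that reproduces the tabulated angles), and a sign convention in which pixels to the right of the image centre give negative angles. The function name `pixel_to_angle` and its parameters are hypothetical.

```python
def pixel_to_angle(col, width=320, hfov_deg=60.9):
    """Map a pixel column to a heading angle in degrees.

    Assumed linear model: the image centre maps to 0 degrees and each
    pixel column spans hfov_deg / width degrees. Columns to the right
    of the centre yield negative angles.
    """
    centre = width / 2
    return (centre - col) * hfov_deg / width


# Best-pixel columns of the rows in the table that report an angle.
for col in (255, 135, 42, 39, 19):
    print(col, round(pixel_to_angle(col), 1))
```

Under these assumptions the five columns map to −18.1, 4.8, 22.5, 23.0 and 26.8 degrees, the same values as in the table.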

| Scenario | Experiment | Input: Nao Perception | Module Outputs: Nao Decision | Module Outputs: Depth Perception | Module Outputs: Important Values | Turning Action: Nao Perception After Turning |
|---|---|---|---|---|---|---|
| Doorway | 1 |  | Open door |  | Best pixel = [143, 246]; Z = 0.54; Vertical trigger: True; Turn right |  |
|  | 2 |  | Open door |  | Best pixel = [152, 283]; Z = 0.78; Vertical trigger: True; Turn right |  |
|  | 3 |  | Open door |  | Best pixel = [182, 36]; Z = 0.58; Vertical trigger: True; Turn left |  |
|  | 4 |  | Open door |  | Best pixel = [177, 291]; Z = 0.64; Vertical trigger: True; Turn right |  |
| No door | 5 |  | No door | Other modules prohibited |  |  |
|  | 6 |  | No door | Other modules prohibited |  |  |
| Door with an obstacle | 7 |  | Open door |  | Best pixel = [131, 237]; Z = 0.41; Vertical trigger: False; No turn | Turning prohibited |
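The scenarios in the table above suggest a simple reactive rule: nothing further runs unless a doorway is detected, no turn is made unless the vertical trigger is true, and the turn direction follows the side of the image on which the best pixel lies. The sketch below encodes that reading of the table. The function name, the returned labels, the use of the image centre (column 160 of 320) as the left/right boundary, and the "go straight" case for a best pixel exactly at the centre are assumptions for illustration.

```python
def turning_action(door_detected, vertical_trigger, best_col, width=320):
    """Return a turning decision from the module outputs (illustrative sketch)."""
    if not door_detected:
        # No doorway detected: the remaining modules are not run.
        return "no action"
    if not vertical_trigger:
        # Doorway detected but the vertical trigger is false (e.g. an obstacle).
        return "no turn"
    centre = width / 2
    if best_col > centre:
        return "turn right"
    if best_col < centre:
        return "turn left"
    return "go straight"  # hypothetical case, not covered by the table


# Experiments 1, 3 and 7 from the table, using the column of the best pixel.
print(turning_action(True, True, 246))   # experiment 1
print(turning_action(True, True, 36))    # experiment 3
print(turning_action(True, False, 237))  # experiment 7
```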

| Objectives | Papers | Main Methods | Hardware/Data Type | Required Information | Computational Cost/Robustness | Environments | Output Information |
|---|---|---|---|---|---|---|---|
| Extracting depth from a 2D image | [19] | Image geometry | Simulation work/2D still image | Vanishing point and line, reference plane | High/High | Static | Dimensions |
|  | [20,21,22] | SfM | Simulation work/2D sequenced and overlapped images | N/A | High/High | Static | Feature detection and matching for 3D reconstruction |
|  | [23,24,25] | CNN-based supervised learning | Simulation work/2D images with associated depth | Dataset | High/High | Dynamic | Predicting depth values |
| Only door detection | [4] | (EM) probabilistic | Camera and laser/images and laser polar readings | Pre-map | Medium/Medium | Static/Corridor | Segmentation with assumption of only dynamic door |
|  | [5] | Graphical Bayesian network | Camera and sonars/images and sonar polar readings | N/A | Medium/Medium | Static/Corridor | Differentiating doors from walls to build GVG-map |
|  | [6] | Image geometry | Camera/2D still image | N/A | Medium/Medium | Static/Corridor | Extracting the concave and convex information |
|  | [8] | RANSAC and ACF detector | Project Tango Tablet/3D point cloud data | Dataset | High/High | Static | Differentiating doors from walls |
|  | [9] | Sensor fusion | Camera and laser/sequenced images and laser polar readings | N/A | High/High | Static | Detecting the wall and then extracting door edges |
|  | [10] | AdaBoost supervised learning | Camera and laser/images and laser polar readings | Extracted features and dataset | High/Medium | Static | Accuracy of extracting features of doors |
|  | [11,12] | CNN-based supervised learning/image processing | Camera/[Images], [Videos] | Dataset | High/Medium | Static closed door | Discrete door direction/extracting certain features |
| Door detection and navigation | [13] | Image geometry + DWA and A* | Stereo camera/overlapped images | Pre-map | High/Medium | Static | Obstacle avoidance and path planning |
|  | [14] | Image processing + probabilistic method | Stereo camera and laser/3D data points | Pre-map | Medium/Medium | Static | Demonstration of opening doors by manipulator |
|  | [15] | Probabilistic method | Laser/continuous laser readings | Pre-map | Medium/Medium | Static with assumption of moving doors | Enhancing the map with an explicit door representation |
|  | [17,18] | Sensor-based + conventional controller | Kinect/3D overlapped images | Extracted features | High/High | Static | Passing through door |
| This study |  | CNN-based + reactive approach | Camera/2D still images | Dataset and FoV | High/Medium | Dynamic/any indoor environment | Extracting angle direction toward the door |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Othman, K.M.; Rad, A.B. A Doorway Detection and Direction (3Ds) System for Social Robots via a Monocular Camera. Sensors 2020, 20, 2477. https://doi.org/10.3390/s20092477