A Double-Branch Surface Detection System for Armatures in Vibration Motors with Miniature Volume Based on ResNet-101 and FPN

Abstract

1. Introduction

2. Related Works

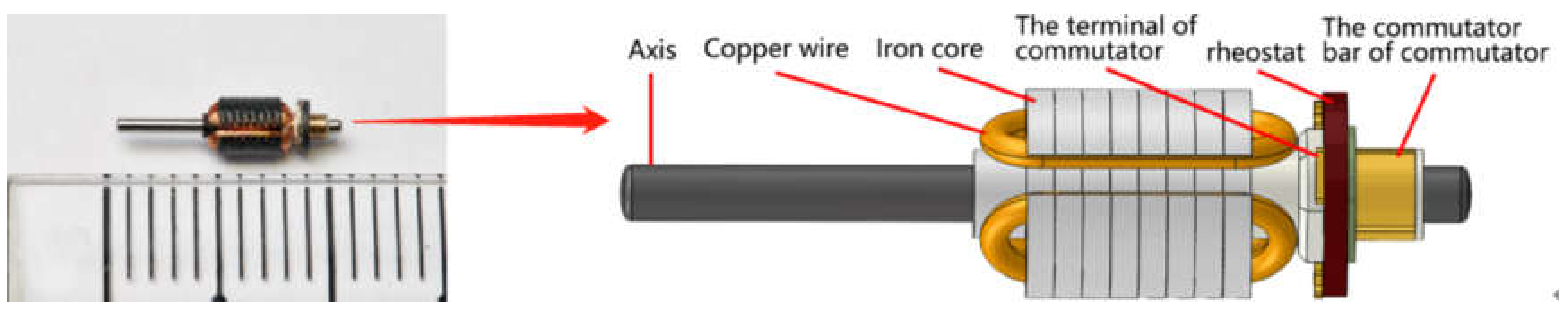

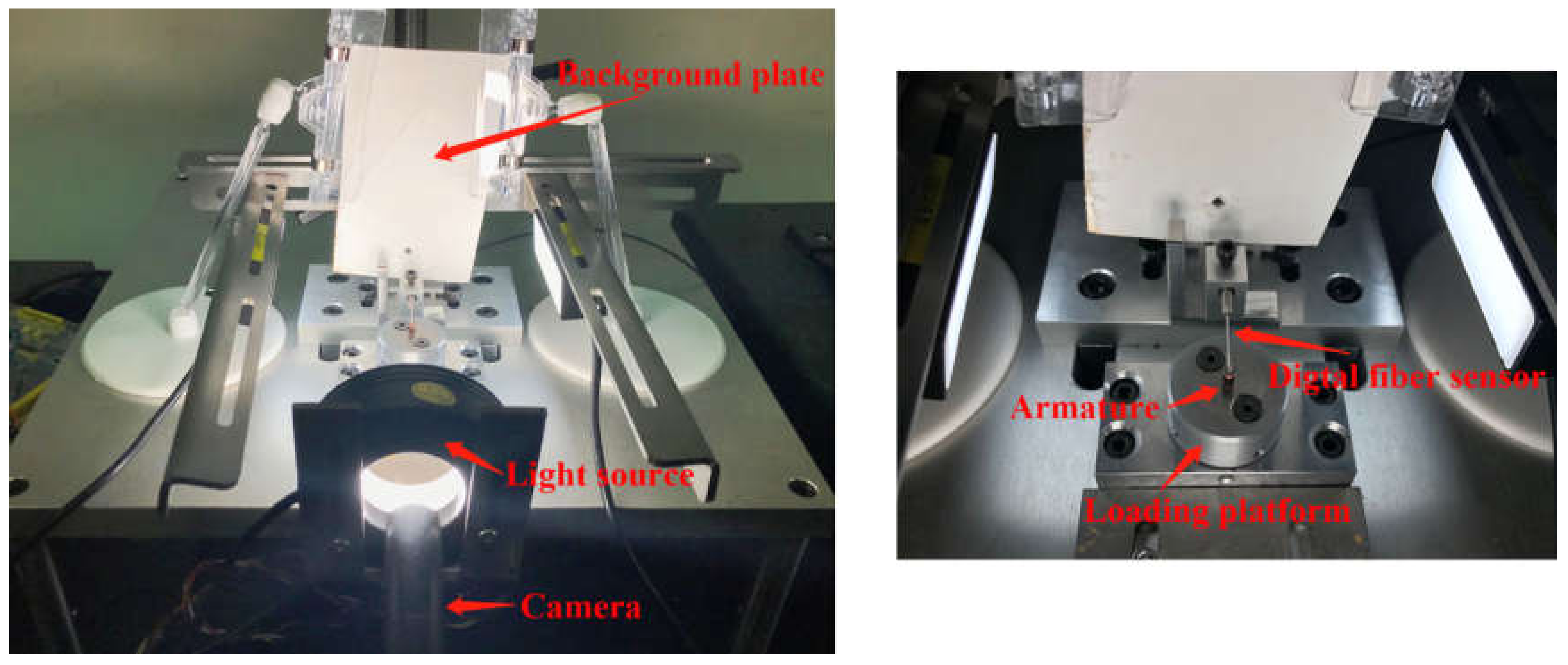

2.1. Image Acquisition

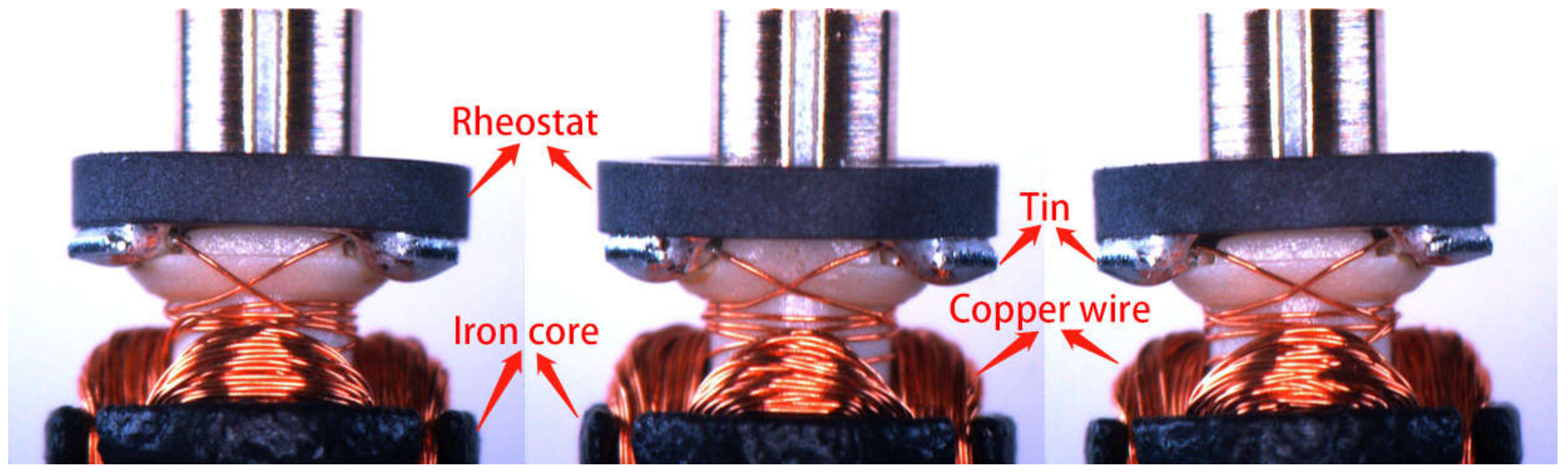

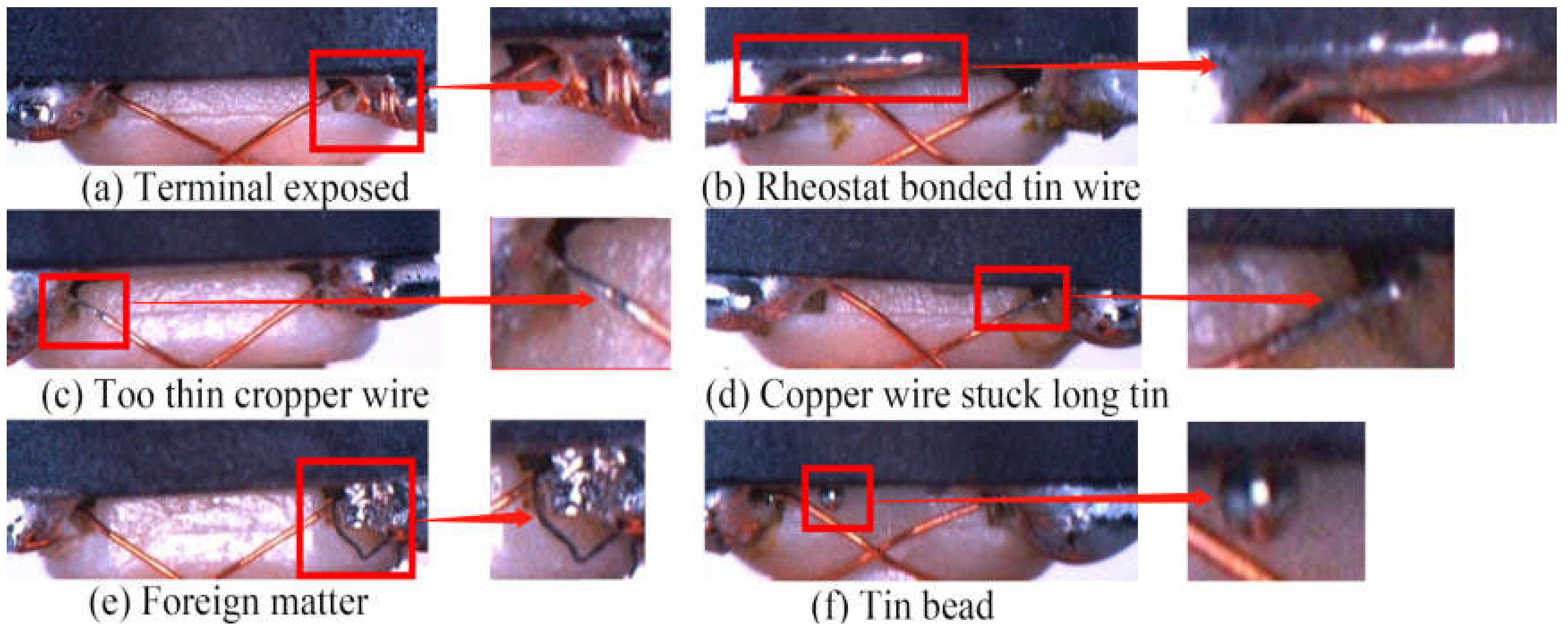

2.2. Surface Defects on the Armatures

2.3. Data Calibration and Manual Observation

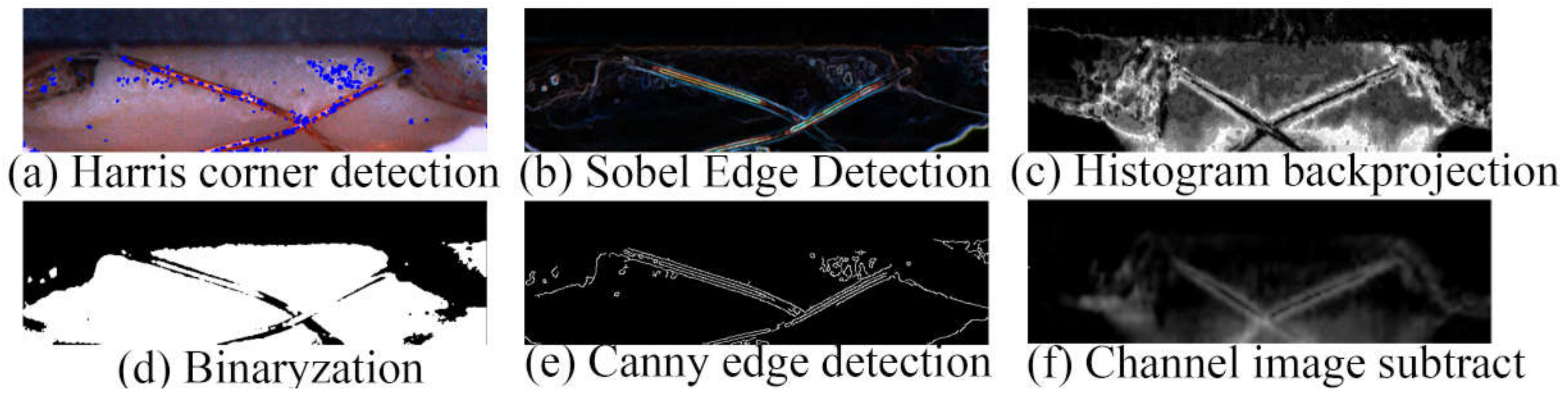

2.4. Traditional Computer Vision Method

3. Methodology

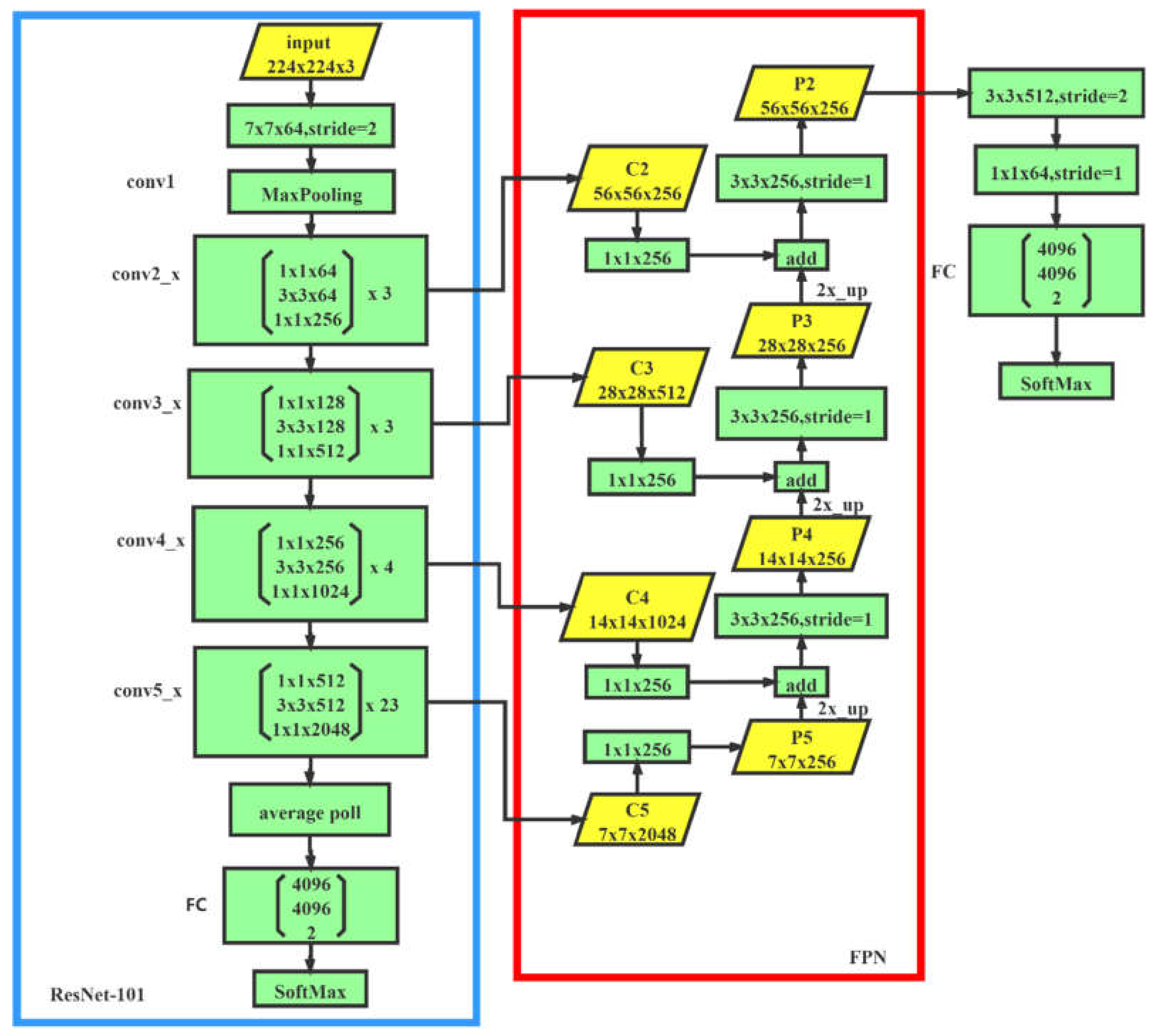

3.1. ResNet-101 + FPN Network

3.2. Focal Loss
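Focal loss (Lin et al.) scales the cross-entropy of each example by a factor that shrinks as the prediction for the true class becomes more confident. As a result, the many easy examples contribute little and training concentrates on the hard ones. The snippet below is a minimal single-prediction sketch of the standard binary form `FL(p_t) = -alpha_t * (1 - p_t)^gamma * log(p_t)`. The defaults `alpha = 0.25` and `gamma = 2` are the values reported to work best in the original focal-loss paper. They are assumptions here, not the settings used in this work. The function names `focal_loss` and `cross_entropy` are hypothetical.

```python
import math


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0,
               eps: float = 1e-7) -> float:
    """Binary focal loss FL(p_t) = -alpha_t * (1 - p_t)**gamma * log(p_t).

    p     -- predicted probability of the positive class
    y     -- ground-truth label: 1 (positive) or 0 (negative)
    alpha -- weight of the positive class; the negative class gets 1 - alpha
    gamma -- focusing parameter; larger values suppress easy examples more
    (alpha = 0.25 and gamma = 2.0 are assumed defaults, not this paper's values)
    """
    p = min(max(p, eps), 1.0 - eps)          # clamp so log() never sees 0
    p_t = p if y == 1 else 1.0 - p           # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p: float, y: int, eps: float = 1e-7) -> float:
    """Plain binary cross-entropy, for comparison."""
    p = min(max(p, eps), 1.0 - eps)
    return -math.log(p if y == 1 else 1.0 - p)


if __name__ == "__main__":
    # An easy (confident, correct) and a hard (uncertain) positive example.
    for label, p in (("easy", 0.95), ("hard", 0.30)):
        print(f"{label}: BCE={cross_entropy(p, 1):.4f}  FL={focal_loss(p, 1):.6f}")
```

With `gamma = 2`, the easy example's loss is multiplied by 0.25 * 0.05^2 relative to cross-entropy, a 1600-fold reduction. The hard example's loss is multiplied by only 0.25 * 0.7^2 ≈ 0.12.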

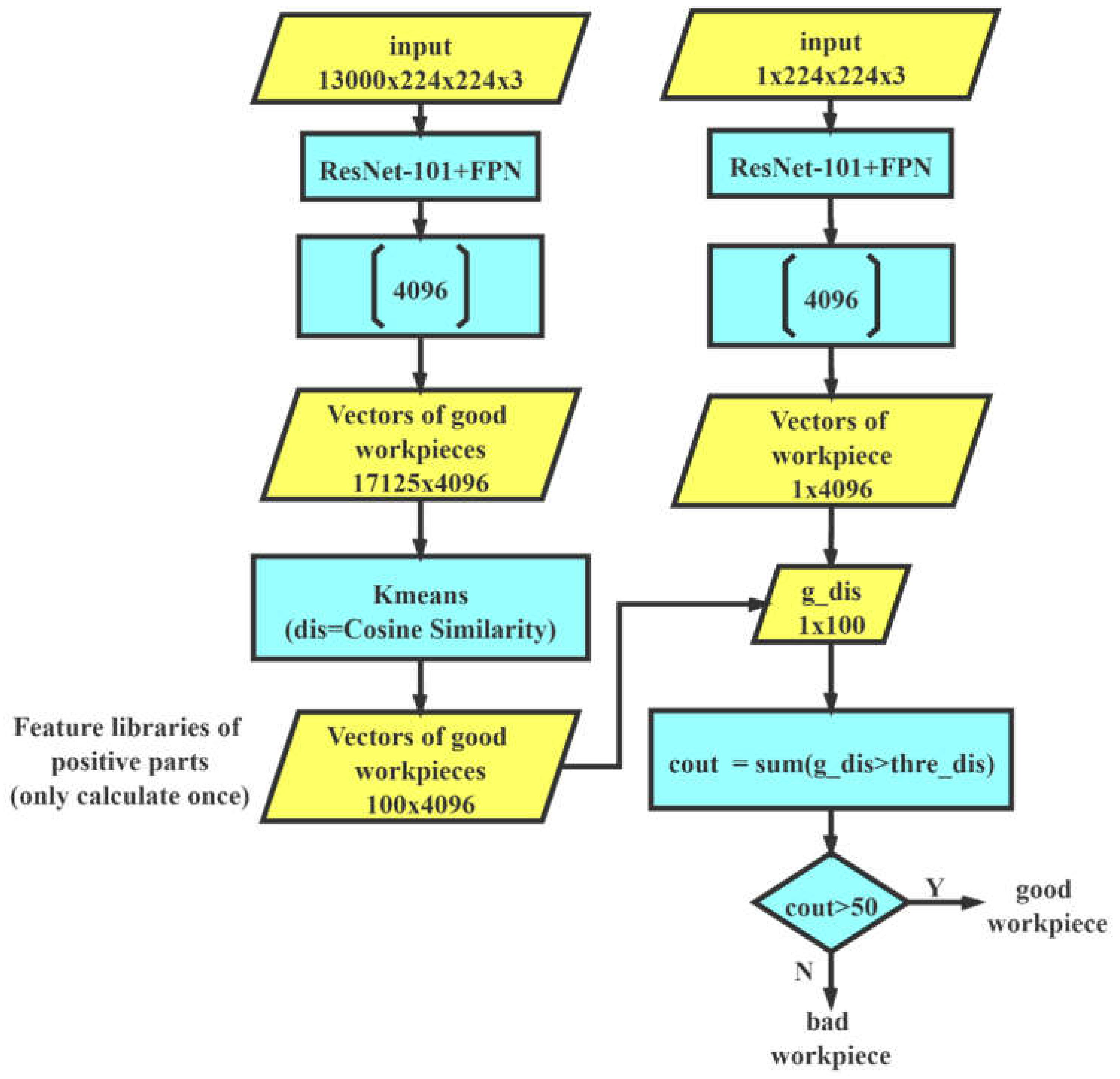

3.3. Feature Library Matching

3.4. The Double-Branch Discrimination Mechanism

4. Experiment

4.1. Dataset

4.2. Implementation Details

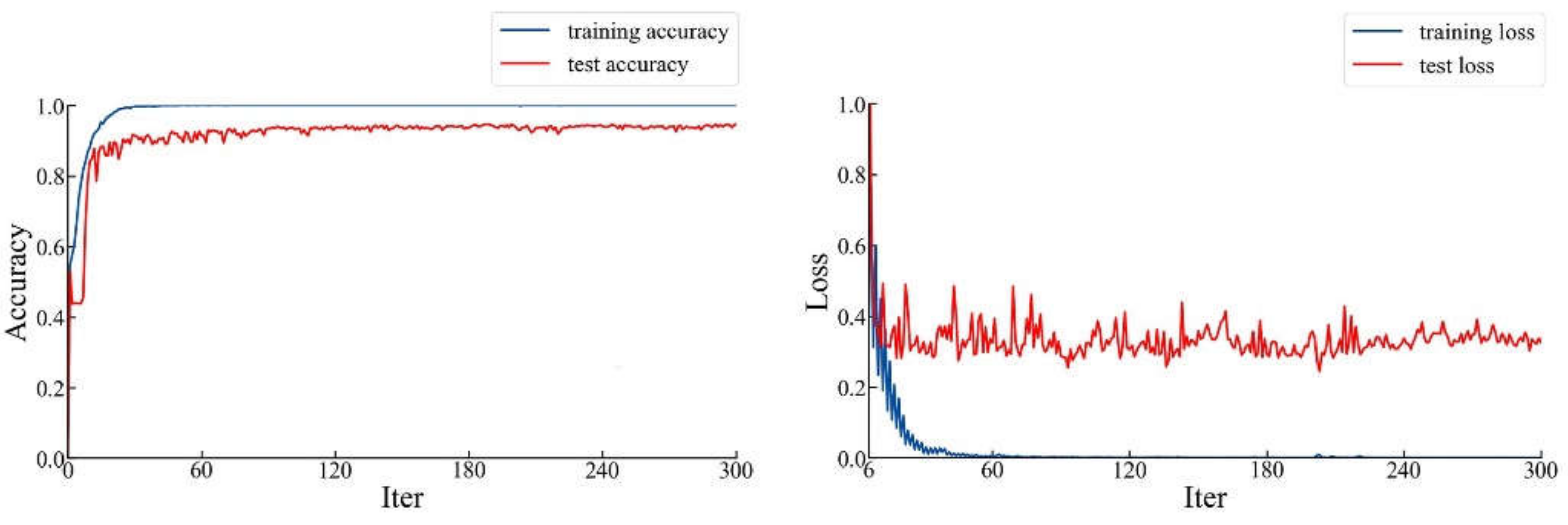

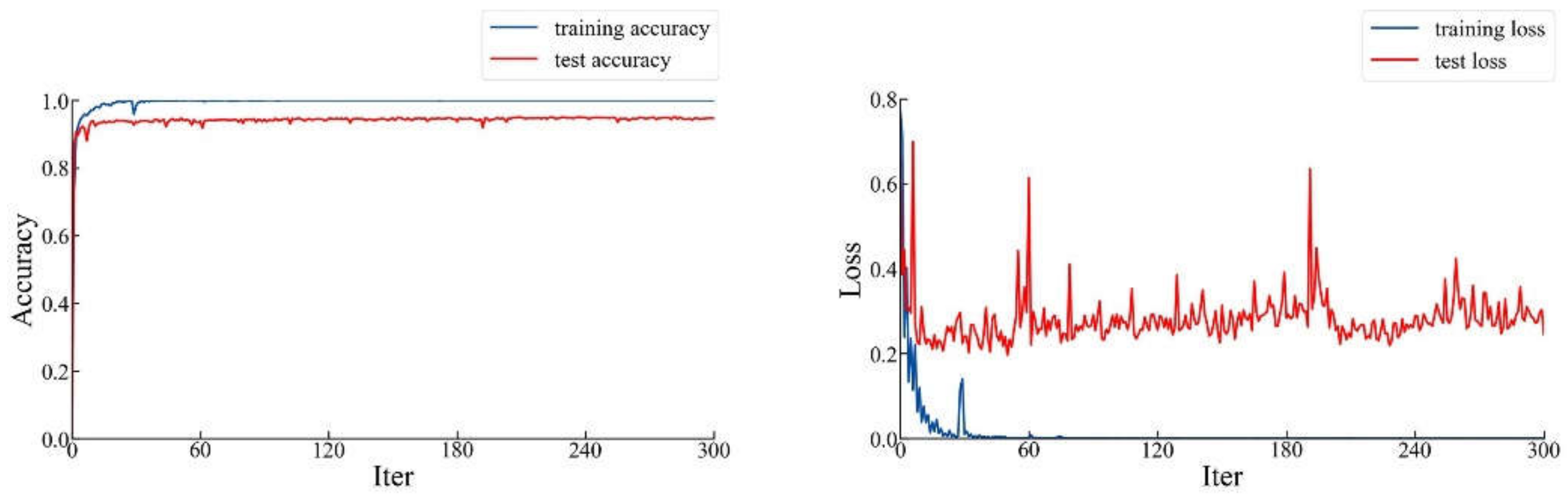

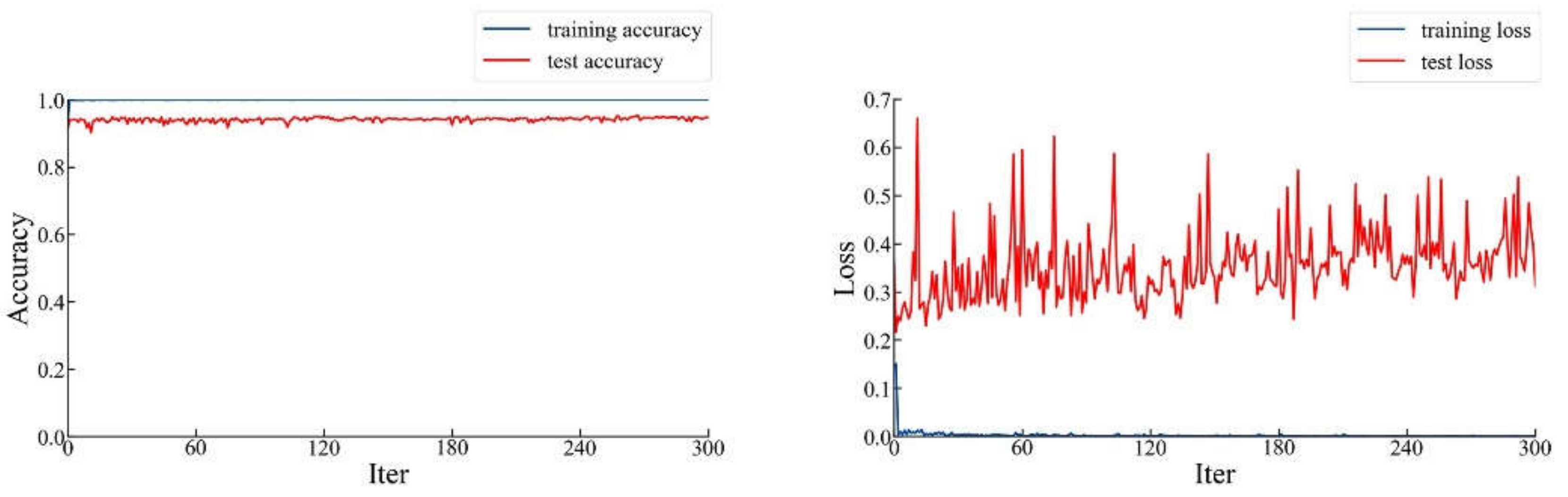

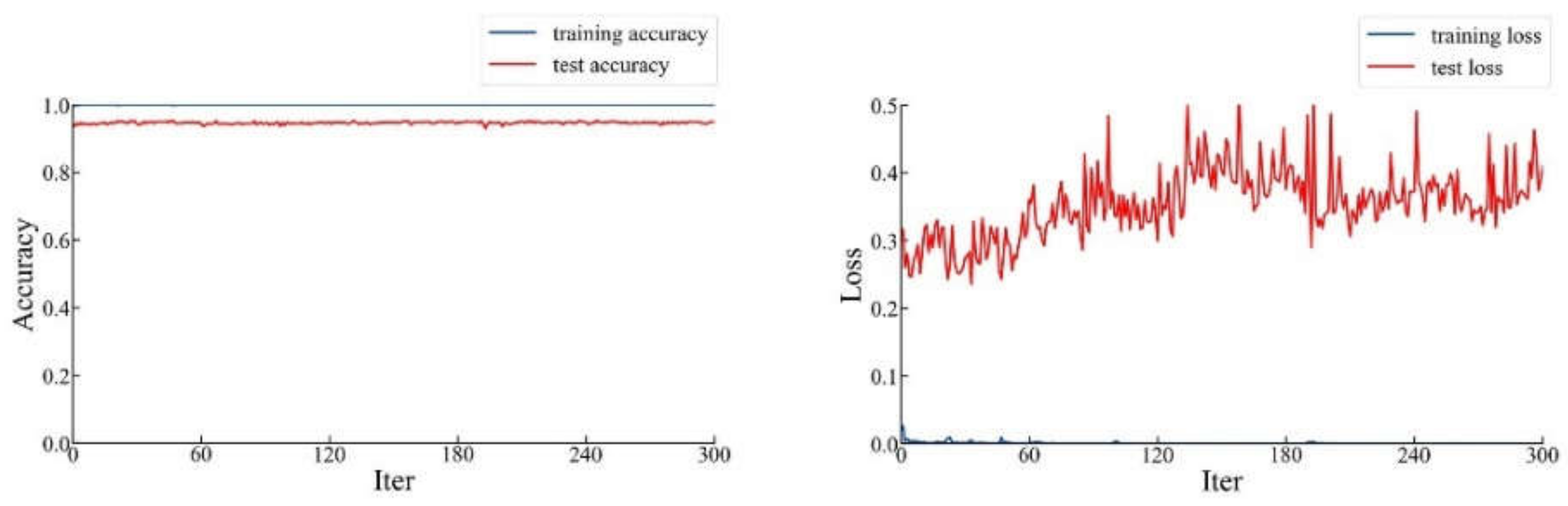

4.3. Training and Results

4.4. Establishing the Double-Branch Discrimination System and Results

4.5. Comparison and Discussions

4.6. Validation in Actual Production

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Defect Type | Description |
|---|---|
| Terminal exposed (Figure 4a) | The terminal connecting the copper wire and the resistor is not covered with tin, so the joint is not strong enough to ensure the required service life. |
| Rheostat bonded tin (Figure 4b) | A long piece of tin adheres to the rheostat, which impairs the operation of the motor. |
| Too thin copper wire (Figure 4c) | High temperature has thinned the copper wire connecting the terminals, making the wire prone to breaking. |
| Copper wire stuck, long tin (Figure 4d) | The tin attached to the copper wire is too long; because tin is softer than copper, the wire becomes prone to breaking. |
| Foreign matter (Figure 4e) | Foreign bodies stuck in this area cause noise during rotation. |
| Tin bead (Figure 4f) | Tin beads easily fall off during rotation, which seriously shortens the service life. |

| Observed Value | Predicted Positive | Predicted Negative | Total |
|---|---|---|---|
| Positive | 6788 | 153 | 6941 |
| Negative | 221 | 3944 | 4165 |

| Observed Value | Predicted Positive | Predicted Negative | Total |
|---|---|---|---|
| Positive | 6823 | 118 | 6941 |
| Negative | 209 | 3956 | 4165 |

| Observed Value | Predicted Positive | Predicted Negative | Total |
|---|---|---|---|
| Positive | 6835 | 106 | 6941 |
| Negative | 196 | 3969 | 4165 |

| Observed Value | Predicted Positive | Predicted Negative | Total |
|---|---|---|---|
| Positive | 6797 | 144 | 6941 |
| Negative | 162 | 4003 | 4165 |
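The Accuracy, Recall, Precision and F1-Score columns of the comparison table follow from binary confusion matrices such as the four given here. In each matrix, rows are observed values and columns are predicted values. The sketch below computes the four metrics with their textbook definitions. The `ConfusionMatrix` type and `metrics` function are hypothetical names introduced for illustration. The sketch simply treats the "Positive" row and column as the positive class, because which physical class (qualified or defective armature) counts as positive is not stated in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Hypothetical container for a binary confusion matrix."""
    tp: int  # observed positive, predicted positive
    fn: int  # observed positive, predicted negative
    fp: int  # observed negative, predicted positive
    tn: int  # observed negative, predicted negative


def metrics(cm: ConfusionMatrix) -> dict[str, float]:
    """Return accuracy, recall, precision and F1 as fractions in [0, 1]."""
    total = cm.tp + cm.fn + cm.fp + cm.tn
    accuracy = (cm.tp + cm.tn) / total
    recall = cm.tp / (cm.tp + cm.fn)        # share of observed positives found
    precision = cm.tp / (cm.tp + cm.fp)     # share of predicted positives correct
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return {"accuracy": accuracy, "recall": recall,
            "precision": precision, "f1": f1}


if __name__ == "__main__":
    # First confusion matrix: 6788 / 153 in the observed-positive row,
    # 221 / 3944 in the observed-negative row.
    cm = ConfusionMatrix(tp=6788, fn=153, fp=221, tn=3944)
    for name, value in metrics(cm).items():
        print(f"{name}: {value:.1%}")
```

Applied to the four matrices in order, these definitions give accuracy, recall and precision values that agree, after rounding to one decimal, with the ResNet-101+FPN (trained directly), ResNet-101+FPN (two-stage trained), feature library matching and double-branch discrimination rows of the comparison table.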

| Method | Accuracy | Recall | Precision | F1-Score | Time per Image (s) |
|---|---|---|---|---|---|
| SVM | 83.2% | 85.3% | 87.5% | 86.4% | 0.574 |
| VGG-16 | 92.4% | 93.3% | 94.6% | 93.9% | 0.035 |
| ResNet-101 | 93.6% | 95.1% | 95.0% | 95.0% | 0.084 |
| ResNet-101+FPN (trained directly) | 96.6% | 97.8% | 96.8% | 97.3% | 0.095 |
| ResNet-101+FPN (two-stage trained) | 97.1% | 98.3% | 97.0% | 97.6% | 0.095 |
| Feature library matching | 97.3% | 98.5% | 97.2% | 97.8% | 0.186 |
| Double-branch discrimination mechanism | 97.2% | 97.9% | 97.7% | 97.7% | 0.205 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Feng, T.; Liu, J.; Fang, X.; Wang, J.; Zhou, L. A Double-Branch Surface Detection System for Armatures in Vibration Motors with Miniature Volume Based on ResNet-101 and FPN. Sensors 2020, 20, 2360. https://doi.org/10.3390/s20082360