1. Introduction

The emerging Internet of Things (IoT) promises to create a world in which all the objects around us are connected to the Internet and communicate with each other with minimal human intervention. The crucial goal is to create a better world for human beings, in which the objects around us are context-aware, allowing them to respond to questions such as what we want, what we need and where we are. Smart homes, one of the main application domains of IoT, have received particular attention from researchers [1].

Smart homes provide a safe, secure environment for dependent people. They offer the ability (1) to track residents' activities without interfering in their daily lives and (2) to track residents' behaviors and monitor their health using sensors embedded in their living spaces [2]. The data collected from smart homes need to be analyzed in depth in order to extract useful information about residents' daily routines, in particular regarding specific activities of daily living. According to Reference [3], approaches can be distinguished by their training process into trained, training-free and trained-once approaches. This paper focuses on the trained approach.

Activity recognition [4], a core feature of the smart home, consists of classifying data recorded by the different integrated environmental and/or wearable sensors into well-defined, known movements. However, dependent persons are often exposed to problems of various types that may cause them to perform activities of daily living incorrectly. Detecting abnormal behaviors is thus of great importance for dependent people, in order to ensure that activities are performed correctly [5]. This also helps ensure their safety and well-being.

Detecting an anomaly in a person's activities of daily living (ADL) is usually done by detecting nonconformities with their usual ADL patterns. Various authors have used classical machine learning algorithms to achieve this [6,7]. Tele-health care requires systems with high accuracy, low computational time and minimal user intervention, because the data are becoming larger and more complex [8]. Deep learning architectures can automatically extract useful and meaningful spatial and temporal features from raw data, without the need for manual feature engineering, which is time consuming, complex and error prone [9,10]. This makes deep learning models easy to generalize to different contexts. LSTM is a powerful deep learning model for sequence prediction and anomaly detection in sequential data [11]; it can extract temporal features with long-term dependencies. CNN has powerful spatial feature extraction capabilities and can detect abnormalities when abnormality detection is framed as a classification problem. Combining CNN with LSTM brings together their spatial and temporal feature extraction capabilities. An autoencoder learns a compressed representation of its input, which makes it well suited to the high-dimensional data collected in smart homes. We selected these deep learning models because of their high accuracy in abnormal behavior detection in different research areas [12,13].

This capability is of great importance in smart homes for understanding people's behaviors, which change over time, and in particular for detecting any deviation from the normal execution of activities of daily living.

In this paper, we investigate a variety of deep learning models, namely LSTM, CNN, CNN-LSTM and Autoencoder-CNN-LSTM, to identify and predict elderly people's abnormal behaviors. The rationale for using deep learning models is fourfold: (1) the models are capable of handling multivariate sequential time-series data; (2) they can identify and accurately predict abnormal behavior in time-series data [14,15]; (3) they can automatically extract temporal and spatial features from massive time-series data, which makes them easily generalizable to other types of data; and (4) they minimize computation time.

Therefore, the contributions of our paper can be summarized as follows:

Investigating a variety of deep learning models even models hybridization for automatic prediction of abnormal behaviors in smart homes.

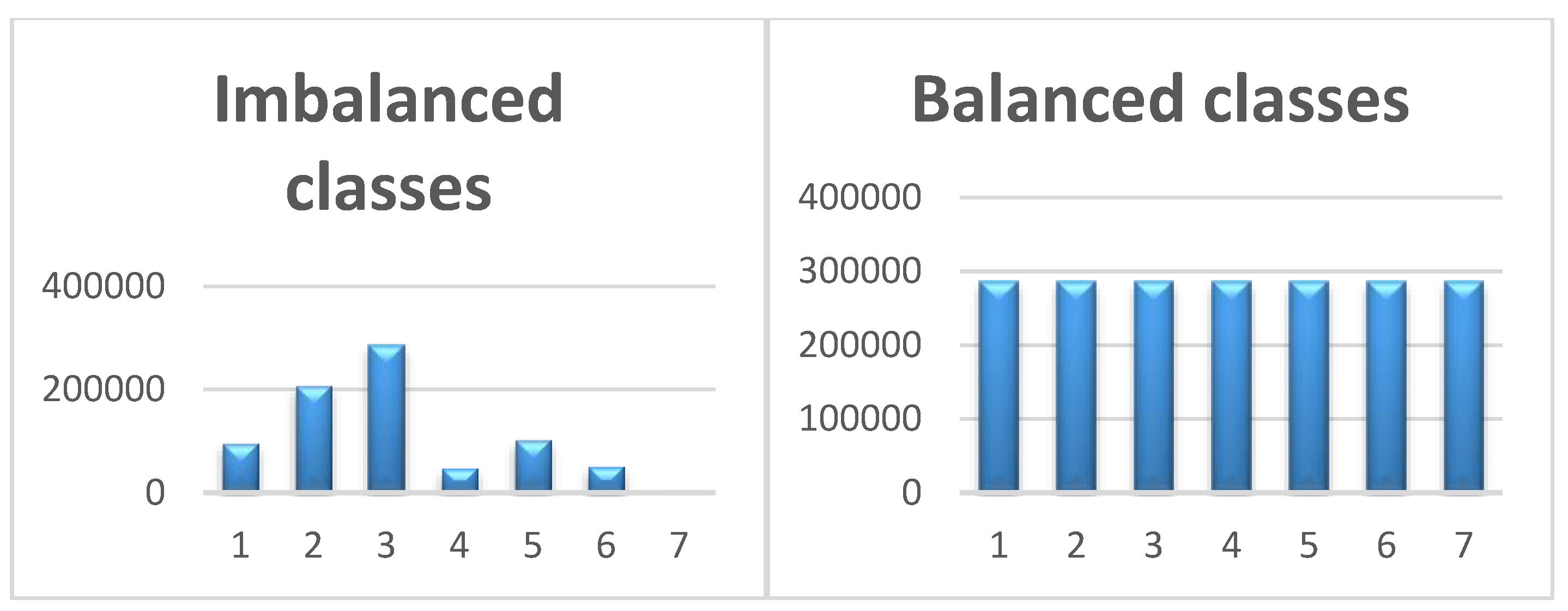

Managing the problem of imbalanced data by oversampling minority classes for LSTM model in particular.

Conducting extensive experiments based on two public datasets to validate the proposed models.

The paper is organized as follows:

Section 2 presents an overview of anomaly detection models and related work on machine learning algorithms.

Section 3 presents the materials and methods used to carry out our work.

Section 4 shows the results obtained for each method and dataset.

Finally, Section 5 discusses and highlights the obtained results.

2. Related Work

Tracking user behavior for abnormality detection has attracted considerable attention and is becoming a primary goal for some researchers [16]. Abnormal behavior detection approaches are based mainly on machine learning algorithms and specifically on supervised learning techniques [17]. Supervised classification techniques need labelled data points (samples) for the models to learn. This kind of classification entails training a classifier on the labelled data points and then evaluating the model on new data points. Thus, in the case of normal and abnormal classes, the model learns the characteristics of the data points and classifies them as normal or abnormal. Any data point that does not fit the normal class will be classified as an anomaly by the model. Various classification techniques have been applied for abnormal behavior detection.

Pirzada et al. [18] explored the k-nearest neighbors (KNN) algorithm, which is well suited to classifying data into categories. Their method performs a binary classification in which activities are classified as good or bad, to distinguish anomalies in user behavior: KNN is applied to predict whether an activity belongs to the regular (good) or irregular (bad) class. Their method allows sensors to be used unobtrusively to monitor the health condition of an elderly person living alone.
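The KNN decision rule behind this kind of good/bad activity classification can be sketched in a few lines. This is a minimal illustration, not Pirzada et al.'s implementation: the two features (activity start hour and duration in minutes), the training points and `k=3` are all hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train, labels, query, k=3):
    """Return the majority label among the k training points closest to query."""
    nearest = sorted(range(len(train)), key=lambda i: euclidean(train[i], query))[:k]
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical activity features: (start hour, duration in minutes)
train = [(7.0, 20), (7.5, 25), (8.0, 15), (3.0, 90), (2.5, 120)]
labels = ["good", "good", "good", "bad", "bad"]

print(knn_predict(train, labels, (7.2, 22)))   # good
print(knn_predict(train, labels, (3.2, 100)))  # bad
```

Because the features are left unscaled here, the duration dominates the distance; in practice the features would normally be standardized before applying KNN.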

Aran et al. [19] proposed an approach to automatically observe and model the daily behavior of elderly people and detect anomalies that could occur in the sensor data. In their method, anomalies are treated as possible signals of health-related problems. They therefore built a probabilistic spatio-temporal model to summarize daily behavior. Anomalies, defined as significant deviations from the learned behavioral model, are detected, and performance is evaluated using the cross-entropy measure. When an anomaly is detected, caregivers are informed accordingly.
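To make the idea of scoring a day against a learned probabilistic model concrete, here is a minimal sketch. It assumes a much simpler model than Aran et al.'s: a per-time-slot distribution over rooms estimated with Laplace smoothing. The room names, the four time slots and `THRESHOLD` are hypothetical. A day's score is its average negative log-likelihood under the model, i.e. the cross-entropy of the observed day with respect to the learned distributions.

```python
import math
from collections import defaultdict


def fit_model(days, rooms, alpha=1.0):
    """Estimate P(room | time slot) from training days with Laplace smoothing.

    Each day is a list of room labels, one per time slot.
    """
    n_slots = len(days[0])
    model = []
    for t in range(n_slots):
        counts = defaultdict(float)
        for day in days:
            counts[day[t]] += 1
        total = len(days) + alpha * len(rooms)
        model.append({r: (counts[r] + alpha) / total for r in rooms})
    return model


def cross_entropy(model, day):
    """Average negative log-likelihood (in nats) of a day under the model."""
    return -sum(math.log(model[t][room]) for t, room in enumerate(day)) / len(day)


rooms = ["bedroom", "kitchen", "living", "bathroom"]
normal_days = [
    ["bedroom", "bathroom", "kitchen", "living"],
    ["bedroom", "bathroom", "kitchen", "living"],
    ["bedroom", "kitchen", "kitchen", "living"],
]
model = fit_model(normal_days, rooms)

usual = cross_entropy(model, ["bedroom", "bathroom", "kitchen", "living"])
odd = cross_entropy(model, ["living", "living", "bedroom", "bathroom"])
THRESHOLD = 1.0  # hypothetical; would be tuned on held-out normal days
print(usual < THRESHOLD, odd > THRESHOLD)  # True True
```

A higher score means the day is less probable under the learned routine; flagging days above a threshold is one simple way to turn this score into an anomaly alert.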

Ordonez et al. [20] presented an anomaly detection method based on Bayesian statistics that identifies anomalous human behavioral patterns. Their method automatically assists elderly people with disabilities who live alone by learning and predicting standard behaviors, in order to improve the efficiency of their healthcare system. Bayesian statistics are used to analyze the collected data, and the estimation of static behavior is based on three probabilistic features: sensor activation likelihood, sensor sequence likelihood and sensor event duration likelihood.
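Of the three features, the sensor sequence likelihood is the easiest to illustrate. The sketch below assumes a first-order model of sensor-to-sensor transitions with Laplace smoothing; the sensor names and the training history are hypothetical, and the activation and duration likelihoods are not shown. It is not Ordonez et al.'s exact formulation.

```python
import math
from collections import Counter


def fit_transitions(sequences, sensors, alpha=1.0):
    """Estimate P(next sensor | current sensor) with Laplace smoothing."""
    pair_counts = Counter()
    from_counts = Counter()
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):
            pair_counts[(a, b)] += 1
            from_counts[a] += 1
    k = len(sensors)
    return {
        (a, b): (pair_counts[(a, b)] + alpha) / (from_counts[a] + alpha * k)
        for a in sensors
        for b in sensors
    }


def sequence_log_likelihood(trans, seq):
    """Log-likelihood of the transitions observed in a sensor event sequence."""
    return sum(math.log(trans[(a, b)]) for a, b in zip(seq, seq[1:]))


sensors = ["bed", "door_bath", "fridge", "sofa"]
history = [["bed", "door_bath", "fridge", "sofa"]] * 5  # hypothetical routine
trans = fit_transitions(history, sensors)

typical = sequence_log_likelihood(trans, ["bed", "door_bath", "fridge", "sofa"])
unusual = sequence_log_likelihood(trans, ["sofa", "bed", "fridge", "door_bath"])
print(typical > unusual)  # True
```

A sequence whose log-likelihood falls well below what is usually observed would be a candidate anomaly; the cut-off is left open here, since it is not specified in the text.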

Yahaya et al. [21] applied a novelty detection algorithm, the one-class Support Vector Machine (SVM), to the detection of anomalies in activities of daily living. Specifically, they studied anomalies in sleeping patterns, which could be a sign of mild cognitive impairment in older adults or of other health-related issues.

Palaniappan et al. [22] focused on detecting abnormal activities of individuals by ruling out all possible normal activities. They define abnormal activities as randomly occurring, unexpected events. A multi-class SVM is used as the classifier to identify the activities, which are organized in the form of a state transition table. The transition table helps the classifier avoid states that are unreachable from the current state.
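The role of the transition table can be sketched as a mask over the classifier's outputs: only activities reachable from the current state are allowed to win. This is a minimal illustration under stated assumptions; the scores stand in for per-class SVM decision values, and the activities, transitions and numbers are hypothetical.

```python
def constrained_prediction(scores, current, transitions):
    """Pick the highest-scoring activity among those reachable from `current`.

    scores: dict mapping activity -> classifier score (e.g. an SVM decision value)
    transitions: dict mapping activity -> set of activities reachable next
    """
    reachable = transitions.get(current, set())
    candidates = {a: s for a, s in scores.items() if a in reachable}
    if not candidates:
        return None  # nothing reachable: this could itself be flagged as abnormal
    return max(candidates, key=candidates.get)


transitions = {
    "sleeping": {"sleeping", "toileting", "waking"},
    "waking": {"toileting", "cooking", "waking"},
    "toileting": {"sleeping", "cooking", "waking"},
    "cooking": {"eating", "cooking"},
    "eating": {"washing_dishes", "eating"},
}

# Hypothetical scores: the raw top class ("eating") is unreachable from "sleeping"
scores = {"eating": 0.9, "toileting": 0.6, "sleeping": 0.3, "cooking": 0.2}
print(constrained_prediction(scores, "sleeping", transitions))  # toileting
```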

Hung et al. [23] proposed a novel approach that combines SVM and a Hidden Markov Model (HMM) in a homecare sensory system. Radio Frequency IDentification (RFID) sensor networks are used to collect the elder's daily activities; an HMM is used to model the data, and SVMs are employed to estimate whether the elder's behavior is abnormal.
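The HMM side of such a system typically relies on the forward algorithm, which computes how likely an observed sequence is under the learned model. The sketch below is a minimal, scaled implementation for discrete observations; the two hidden states, the RFID tag symbols and all probabilities are hypothetical, and using the resulting log-likelihood as an input to an SVM is only one possible way to couple the two models, not necessarily Hung et al.'s.

```python
import math


def forward_log_likelihood(obs, start, trans, emit):
    """Scaled forward algorithm: log P(obs) for a discrete-emission HMM.

    start[i]    -- probability of starting in hidden state i
    trans[i][j] -- probability of moving from state i to state j
    emit[i][o]  -- probability of emitting observation symbol o in state i
    """
    n = len(start)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    scale = sum(alpha)
    log_lik = math.log(scale)
    alpha = [a / scale for a in alpha]  # rescale to avoid numerical underflow
    for o in obs[1:]:
        alpha = [
            sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][o]
            for j in range(n)
        ]
        scale = sum(alpha)
        log_lik += math.log(scale)
        alpha = [a / scale for a in alpha]
    return log_lik


# Hypothetical two-state model (0 = resting, 1 = active) over RFID tag readings
# (0 = bed tag, 1 = kitchen tag, 2 = front-door tag).
start = [0.6, 0.4]
trans = [[0.7, 0.3], [0.4, 0.6]]
emit = [[0.7, 0.2, 0.1], [0.1, 0.5, 0.4]]

day = [0, 0, 1, 1, 2]
print(forward_log_likelihood(day, start, trans, emit))
```

The product of the per-step scaling factors equals the sequence probability, so summing their logarithms yields log P(obs) without underflow on long sequences.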

Bouchachia et al. [24] proposed a Recurrent Neural Network (RNN) model to address the problem of activity recognition and abnormal behavior detection for elderly people with dementia. Their proposed method suffered from the lack of data in the context of dementia.

All of the aforementioned methods suffer from one or more of the following limitations:

The presented methods focus on spatial and temporal anomalies for user assistance; however, abnormal behavior is not addressed in the smart home context;

These methods require feature engineering, which is difficult, particularly as data become larger;

The accuracy of abnormality identification and prediction is not sufficient.

These points motivate us to propose methods that seek to overcome these limitations and to be useful for assistance in the smart home context.

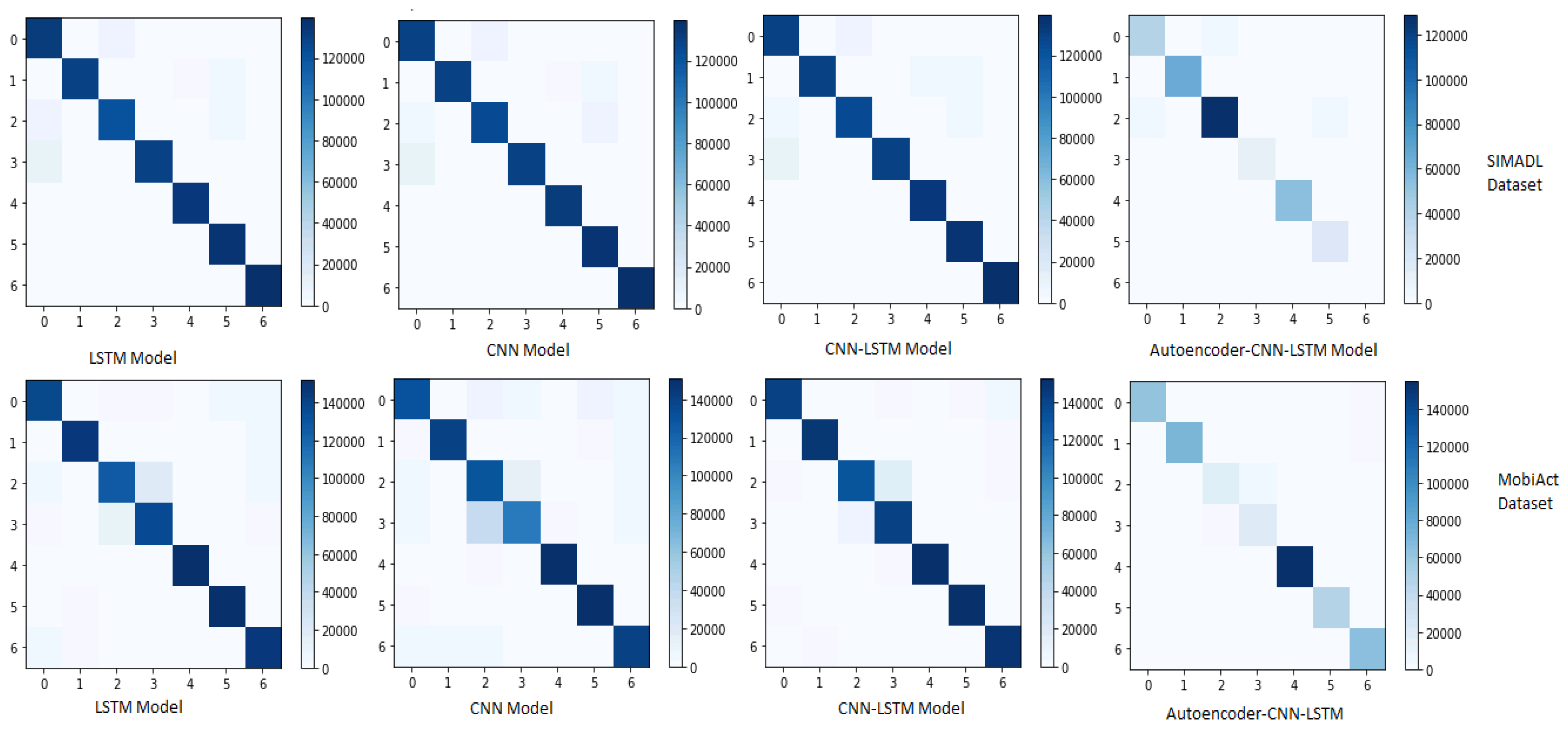

5. Performance Metrics Analysis

As stated in the introduction section, the experimental study was carried out in order to identify and predict the abnormal behavior. To highlight the performance of the proposed methods, we consider the accuracy, the precision and the recall as performance measures for the different LSTM, CNN, LSTM_CNN and Autoencoder_LSTM_CNN models. The results of each method/datasets are summarized in

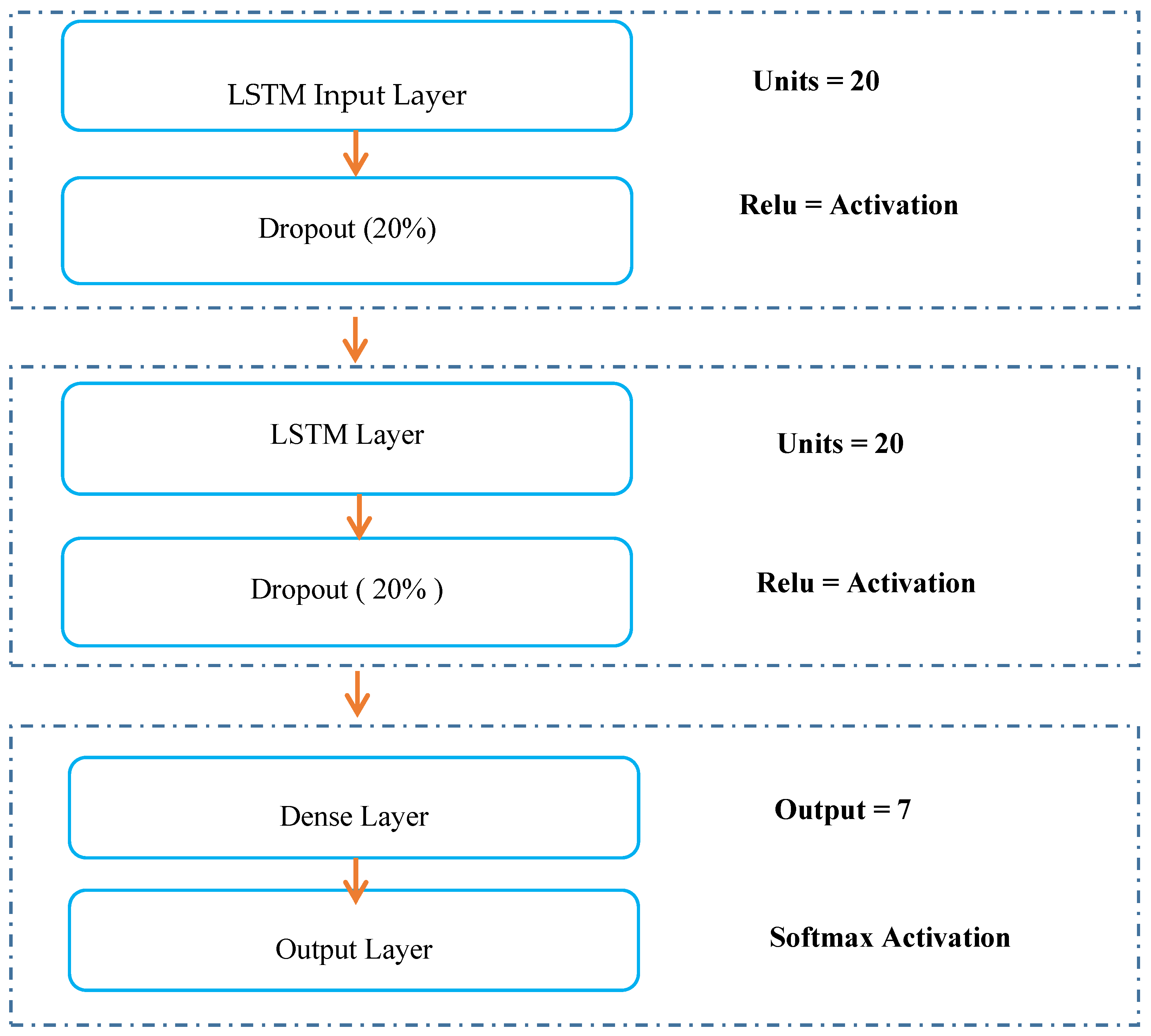

Table 7; the presented results are interesting in several ways. In this section, we analyze our objective in term of abnormality detection where the captured abnormality is different from model to another. LSTM aims to capture temporal abnormal behavior sequences by incorporating memory cell to store temporal dependency and information. As stated in the Introduction section, the most important characteristic of deep learning is that it does not need any manually features extraction to learn and can easily learn a hierarchical feature representation from the raw data directly.
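For reference, the standard LSTM cell with a forget gate updates its memory cell $c_t$ and hidden state $h_t$ as shown below, where $x_t$ is the input at time $t$, $\sigma$ is the logistic sigmoid and $\odot$ is the element-wise product. This is the textbook formulation, given only to show how the memory cell carries temporal dependencies; the exact configuration of the LSTM evaluated here (number of layers, units, input windowing) is not stated in this section.

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
$$

The forget gate $f_t$ decides how much of the previous cell state $c_{t-1}$ is kept, which is what allows the model to retain information over long sequences.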

The LSTM model has a clear advantage in identifying and predicting temporal information. The metrics shown in Table 7 indicate that the LSTM model adequately captures the features needed to improve abnormality detection accuracy. LSTM performs well on both datasets, with accuracies of 94% and 93%, respectively. Precision and recall are also reported in Table 7. A comparison of LSTM with classical machine learning models is presented in Reference [39].
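The three reported measures follow the usual definitions: accuracy $= (TP+TN)/(TP+TN+FP+FN)$, precision $= TP/(TP+FP)$ and recall $= TP/(TP+FN)$. The sketch below computes them for the binary case, treating "abnormal" as the positive class; the labels are hypothetical, and multi-class results such as those in Table 7 would apply the same formulas per class.

```python
def binary_metrics(y_true, y_pred, positive="abnormal"):
    """Accuracy, precision and recall, treating `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # undefined when nothing is flagged
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Imbalanced toy case: a model that never flags an anomaly still reaches 90% accuracy
y_true = ["normal"] * 9 + ["abnormal"]
y_pred = ["normal"] * 10
print(binary_metrics(y_true, y_pred))  # (0.9, 0.0, 0.0)
```

The toy case shows why accuracy alone can be misleading on imbalanced data: recall exposes that the single abnormal event was missed.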

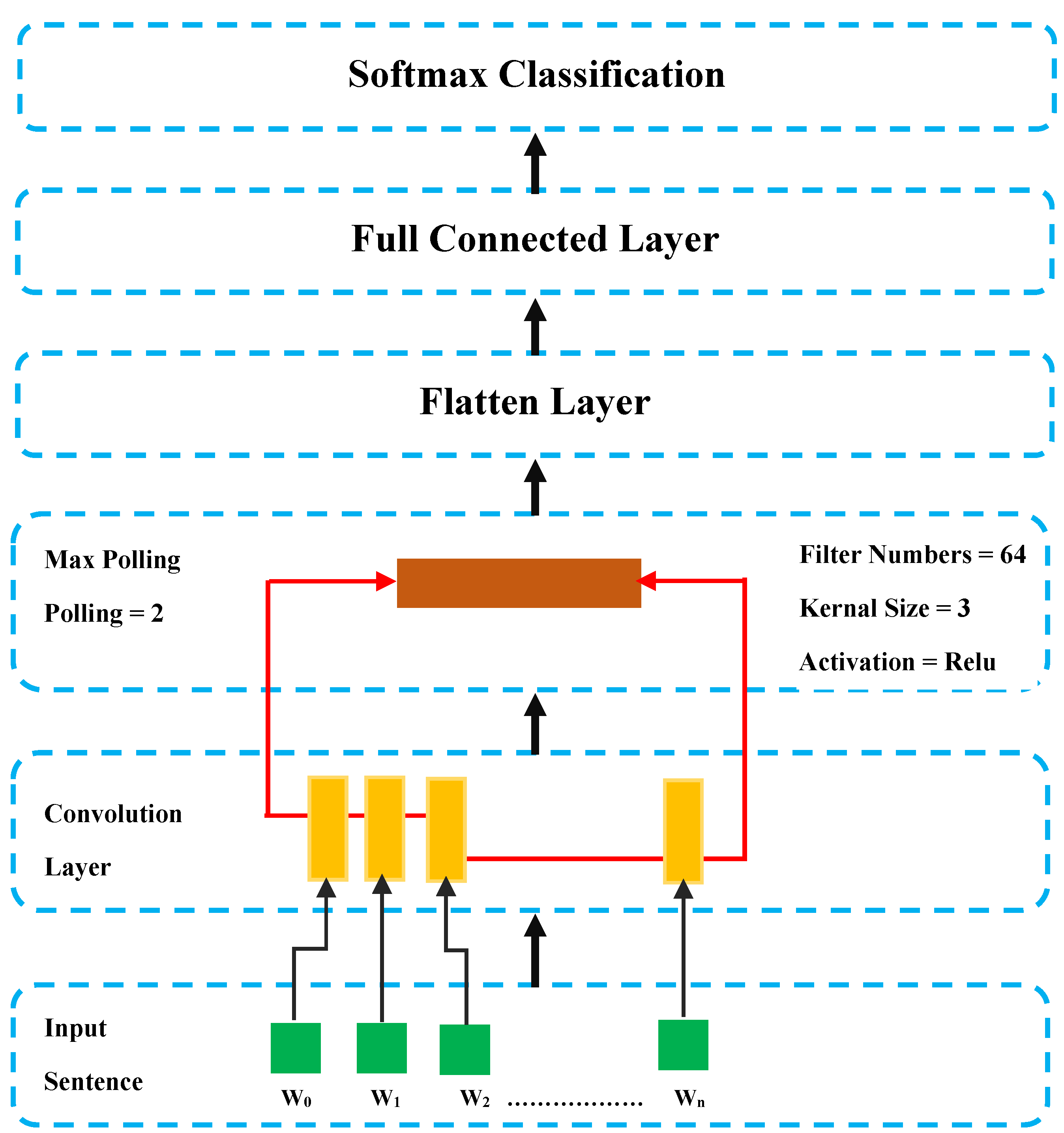

CNN aims to capture spatial abnormal behavior sequences of time series based on an automatic features extraction. We reported in

Table 7 the obtained results for the SIMADL and MobiAct datasets where CNN is experimented by testing an increasing number of layers (3-CNN and 4-CNN). The model performs well even by increasing the number of layers. The accuracy obtained by the CNN models in both datasets was 93% and 91% respectively. As shown in

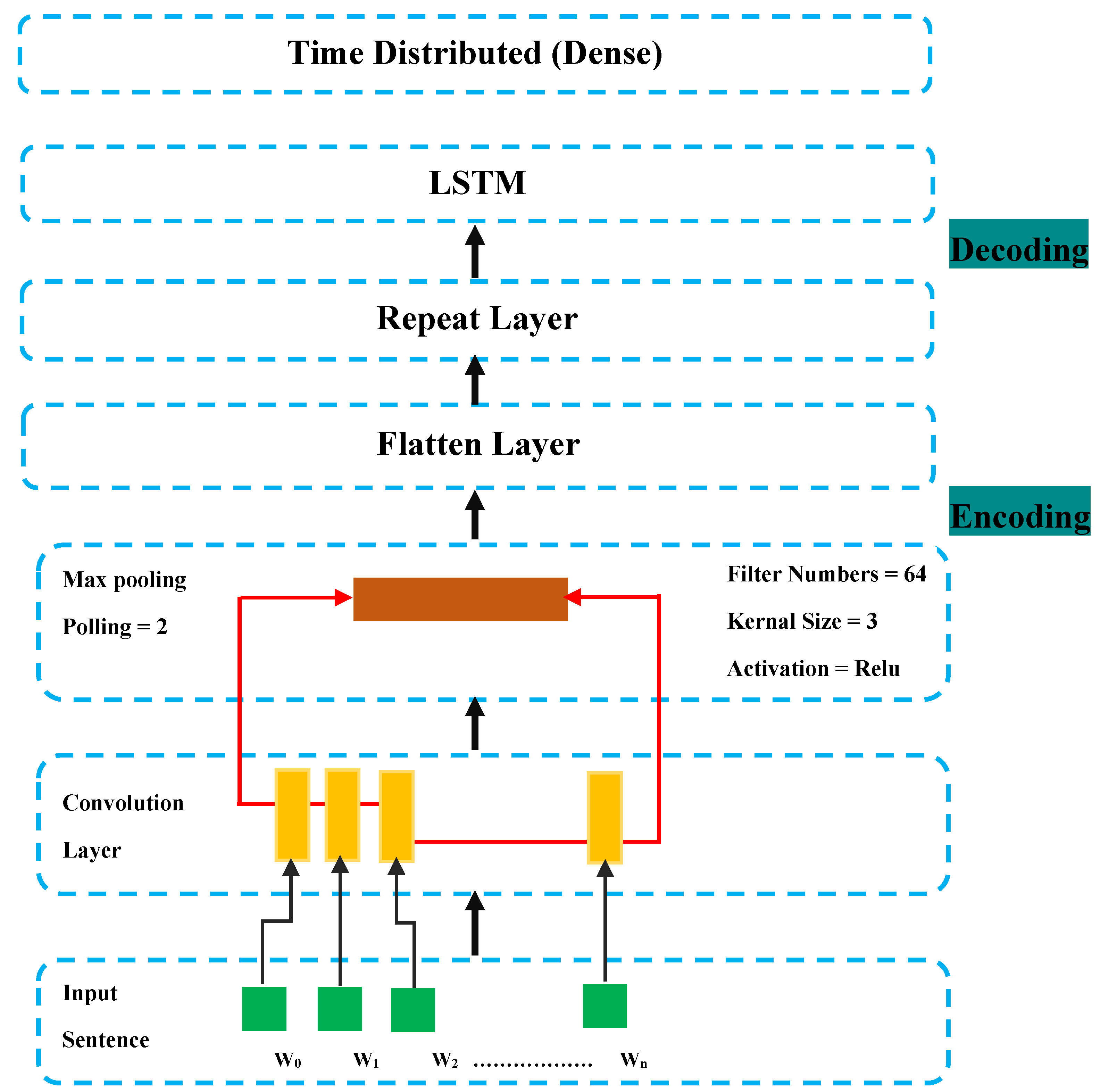

Table 7, the hybridization of CNN with LSTM is interesting, it has strong ability in terms of the extraction of temporal and spatial features automatically at the same time. According to the obtained accuracy, precision and recall, it was decided that the model gives the best performance in terms of abnormality detection.
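To illustrate what the convolutional layers of the CNN and CNN-LSTM models do to a one-dimensional sensor signal, the sketch below applies a single filter, a ReLU and max pooling in plain Python. The signal and the hand-set difference kernel are hypothetical; a trained CNN learns many such kernels, and this is not the 3-CNN or 4-CNN architecture evaluated here.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1-D convolution as used in CNN layers (cross-correlation, no kernel flip)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]


def relu(xs):
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in xs]


def max_pool1d(xs, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(xs[i:i + size]) for i in range(0, len(xs) - size + 1, size)]


# Hypothetical accelerometer magnitude with a sudden spike (e.g. a fall-like event)
signal = [1.0, 1.0, 1.1, 1.0, 3.5, 1.0, 1.0, 1.0]
edge_kernel = [-1.0, 1.0]  # hand-set; responds to sharp increases in the signal

features = max_pool1d(relu(conv1d(signal, edge_kernel)))
print(features)
```

The pooled feature map keeps a strong response where the sharp rise occurs and discards most of the flat regions, which is the kind of local pattern the CNN passes on to the LSTM in the hybrid model.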

The hybridization of CNN with LSTM achieves an accuracy of 98% in the SIMADL dataset and 93% in the MobiAct dataset. This could be explained by the fact that temporal and spatial features are two important types of features in detecting abnormal behaviors.

Autoencoder-CNN-LSTM, which works on a compressed representation of the data, provides a clear improvement on the MobiAct dataset only: as Table 7 shows, it gives the best accuracy, precision and recall on MobiAct. On SIMADL, in contrast, it reaches an accuracy of only 84%. Its usefulness therefore cannot be generalized.

To check that the obtained accuracy and precision were not misleading, we computed a confusion matrix for each model on both datasets. As shown in Figure 5, LSTM, CNN and CNN-LSTM perform well when the datasets are oversampled using the Synthetic Minority Oversampling Technique (SMOTE).
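The core idea of SMOTE is to create synthetic minority samples by interpolating between a minority sample and one of its nearest minority-class neighbours. The sketch below shows that step in plain Python; the 2-D feature vectors, `k=3` and the fixed seed are hypothetical, and the exact SMOTE settings used in our experiments are not reproduced here.

```python
import math
import random


def smote(minority, n_new, k=3, seed=0):
    """Create n_new synthetic minority samples, SMOTE-style.

    Each synthetic point lies on the segment between a randomly chosen minority
    sample and one of its k nearest minority neighbours (Euclidean distance).
    Requires at least two minority samples.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        neighbours = sorted(
            (p for j, p in enumerate(minority) if j != i),
            key=lambda p: math.dist(x, p),
        )[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(x, nb)))
    return synthetic


# Hypothetical 2-D feature vectors of the minority ("abnormal") class
minority = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.3), (1.1, 1.1)]
new_samples = smote(minority, n_new=4)
print(len(minority) + len(new_samples))  # 8
```

In practice, a maintained implementation such as `SMOTE` from the imbalanced-learn package (`imblearn.over_sampling.SMOTE`, applied through `fit_resample(X, y)`) would normally be preferred. Oversampling should be applied to the training split only, so that synthetic points do not leak into the data used for the confusion matrices.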

6. Conclusions

This paper presents a comparative study of neural-network-based methods for predicting abnormal behavior (LSTM, CNN, CNN-LSTM and Autoencoder-CNN-LSTM). The presented methods identify and predict abnormal behaviors with a high degree of accuracy and require little user intervention, which allows the identification and prediction process to be automated. Two public datasets were used to validate the performance of the methods.

The non-uniform distribution of classes causes a class imbalance problem, which is addressed by applying SMOTE to oversample the minority classes; the LSTM, CNN and CNN-LSTM models are particularly affected by this problem. LSTM gives good results for temporal feature extraction, CNN proves its ability to extract spatial information from time series, and the hybridization of CNN and LSTM is validated for extracting temporal and spatial features jointly. The Autoencoder-CNN-LSTM model offers another interesting way to extract features, by compressing them in an unsupervised manner, but it needs further improvement before it can be generalized.

In future work, an analysis of users’ outdoor behavior could provide a fuller understanding of elderly people’s health and thereby improve their well-being.