Real-Time Queue Length Detection with Roadside LiDAR Data

Abstract

1. Introduction

2. Materials and Preprocessing

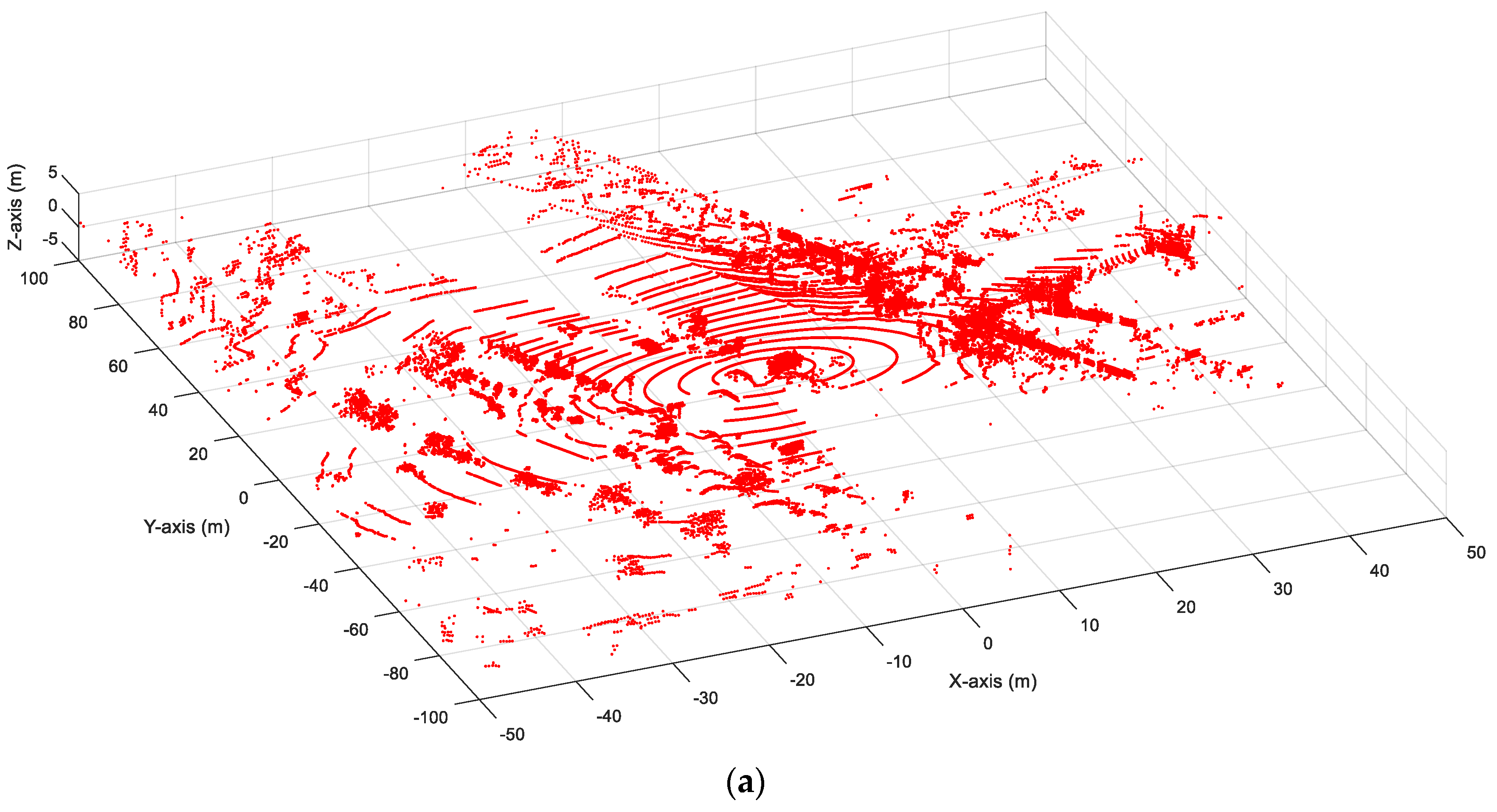

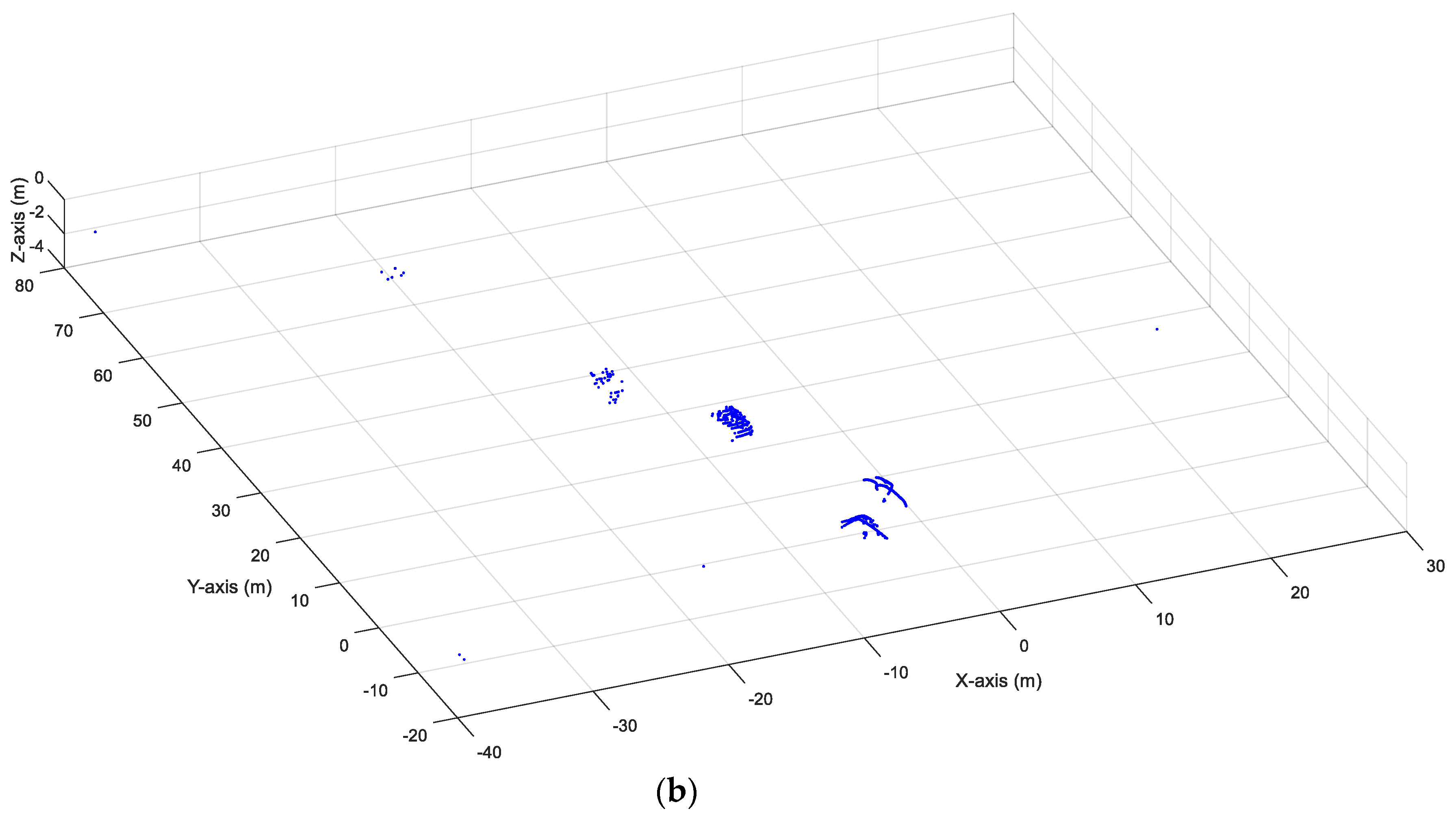

2.1. Background Filtering

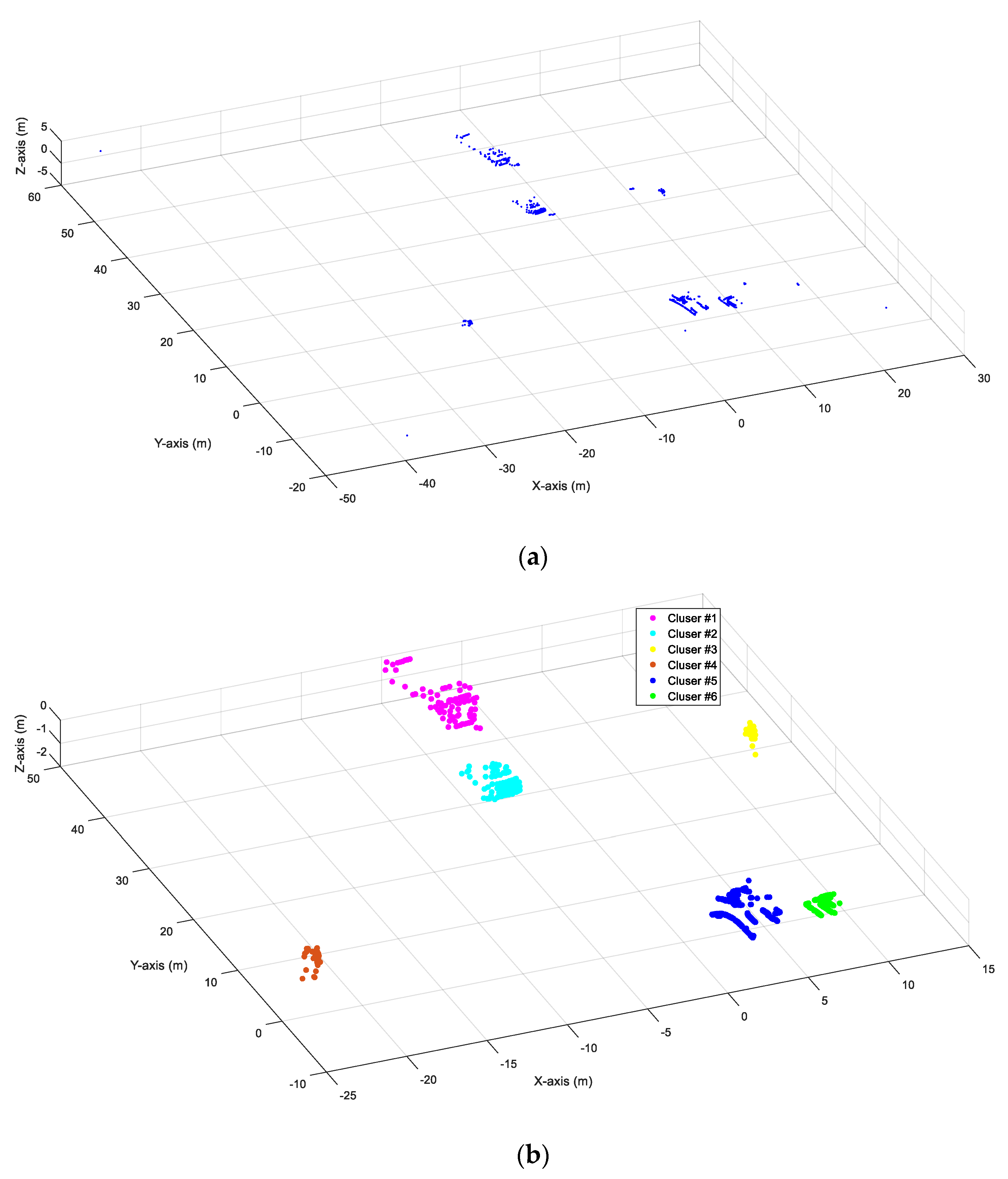

2.2. Point Clustering

- If point A has at least minPts neighbors, point A and its neighbors are marked as a cluster and point A is marked as visited. DBSCAN then applies the same test to the other unvisited points in that cluster to extend the cluster's range.

- If point A has fewer than minPts neighbors, point A is marked as a noise point and as visited.
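The two rules above can be illustrated with a minimal DBSCAN sketch in Python. This is not the authors' implementation. The function names, the search radius `eps`, the 2D Euclidean distance, and the brute-force neighbor search are all assumptions made for illustration. The sketch also assumes that a point counts as its own neighbor, and that a point first marked as noise can later join a cluster as a border point, as in the standard algorithm.

```python
import math

NOISE = -1  # label for noise points (assumed convention)


def region_query(points, idx, eps):
    """Return indices of all points within eps of points[idx], itself included."""
    px, py = points[idx]
    return [j for j, (qx, qy) in enumerate(points)
            if math.hypot(px - qx, py - qy) <= eps]


def dbscan(points, eps, min_pts):
    """Label each 2D point with a cluster id (0, 1, ...) or NOISE."""
    labels = [None] * len(points)  # None means "not visited yet"
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue  # already visited
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # too few neighbors: noise and visited
            continue
        # Enough neighbors: start a new cluster from point i.
        labels[i] = cluster_id
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # former noise becomes a border point
            if labels[j] is not None:
                continue  # already assigned to a cluster
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # extend the cluster's range
        cluster_id += 1
    return labels


# Hypothetical input: two compact groups and one isolated point.
points = [(0, 0), (0.5, 0), (0, 0.5),
          (10, 10), (10.5, 10), (10, 10.5),
          (50, 50)]
labels = dbscan(points, eps=1.0, min_pts=3)
```

With these hypothetical inputs, the first three points share one label, the next three share another, and the isolated point is labeled as noise. A practical version for LiDAR frames would replace the brute-force search with a spatial index and use 3D coordinates.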

2.3. Object Classification

2.4. Lane Identification

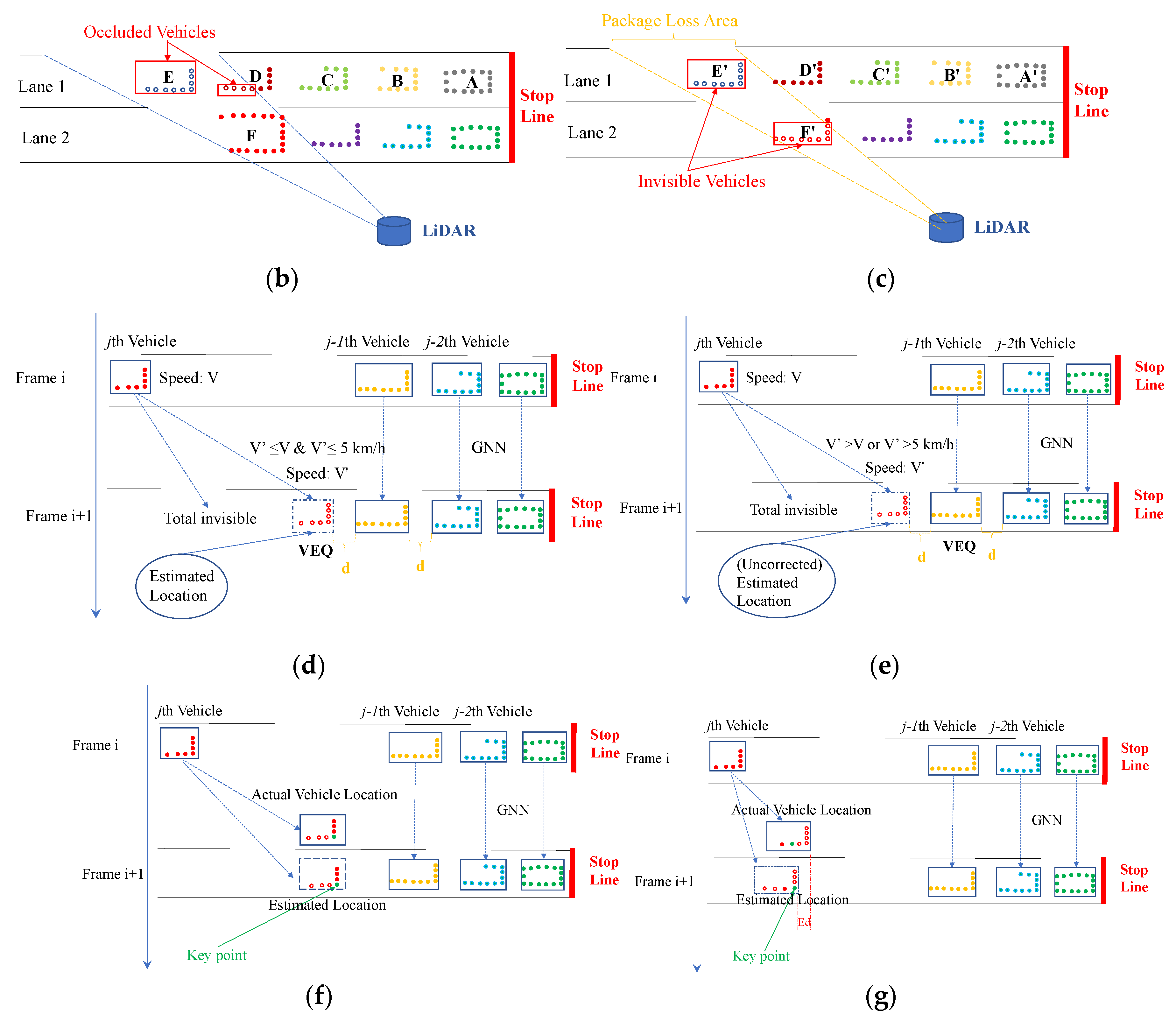

2.5. Object Association

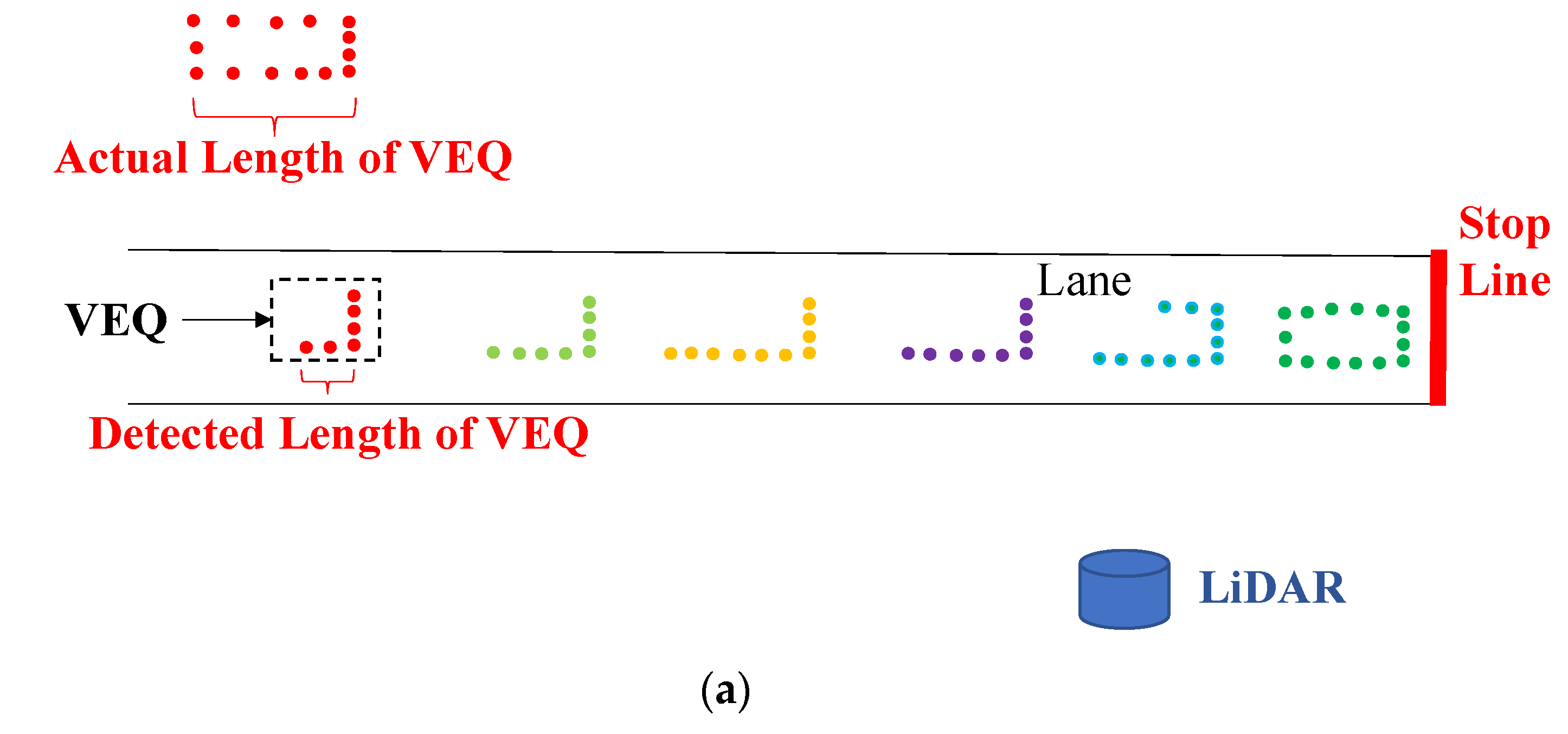

3. Queue Length Detection

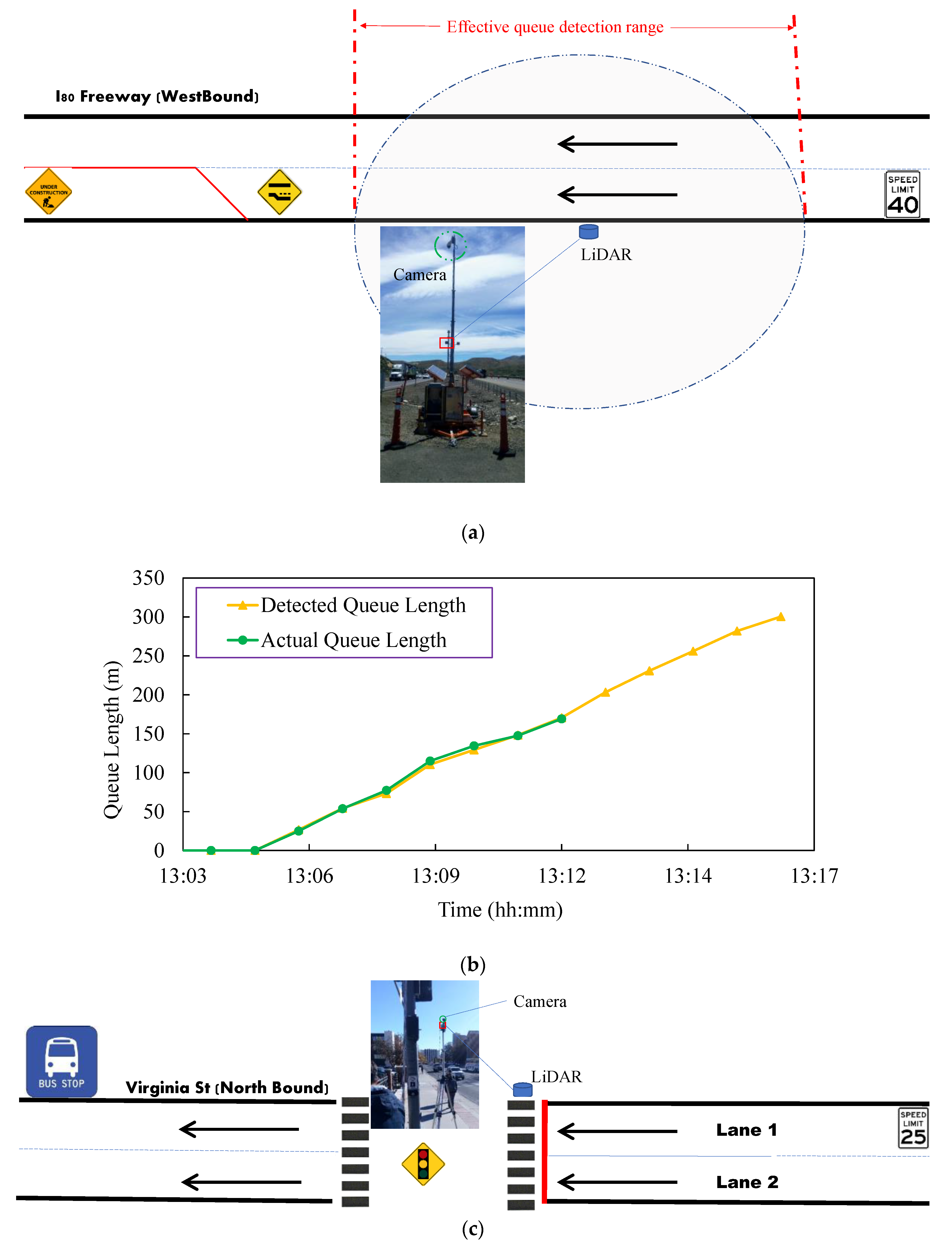

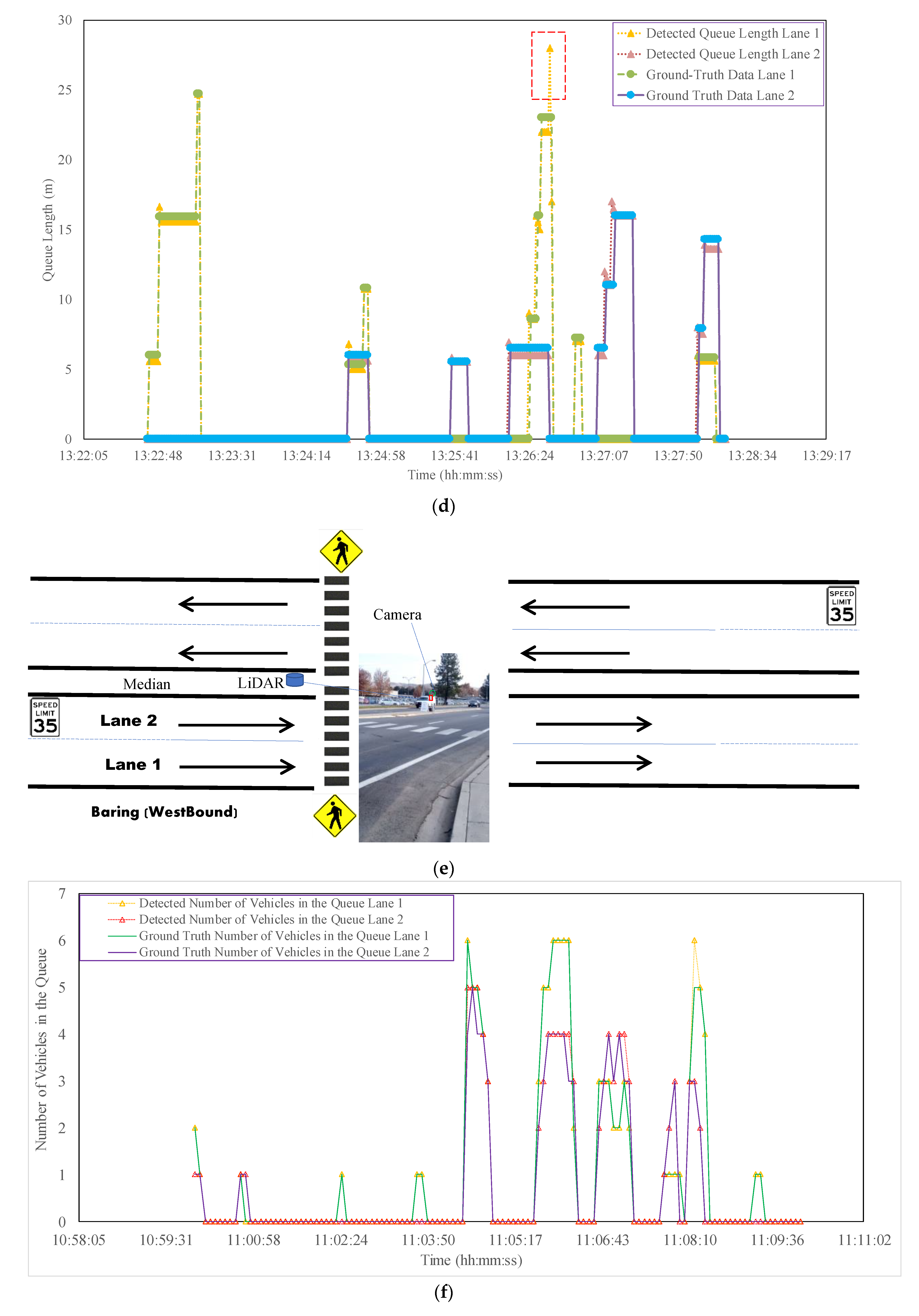

4. Evaluation

5. Conclusions and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Site | Speed Limit | Traffic Control | Areas for Queue Length Evaluation |
|---|---|---|---|
| I80 work zone | 64.4 km/h (40 mph) | Left lane closed control | One westbound unclosed lane |
| Virginia St @ Artemesia Way | 40.2 km/h (25 mph) | Signalized T-intersection | Two northbound through lanes |
| Baring Blvd at the front of the Reed High School | 56.3 km/h (35 mph) | Yield sign for pedestrian crossing | Two westbound through lanes |

| Queue Length Error | Percentage (%) | Error in Number of Vehicles in the Queue | Percentage (%) |
|---|---|---|---|
| 0–0.5 m | 88.3 | 0 vehicle | 96.2 |
| 0.5–1.0 m | 89.8 | 1 vehicle | 98.5 |
| 1.0–1.5 m | 91.3 | 2 vehicles | 99.1 |
| 1.5–2.0 m | 94.5 | 3 vehicles | 99.8 |
| 2.0–3.0 m | 96.2 | 4 vehicles | 100 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, J.; Xu, H.; Zhang, Y.; Tian, Y.; Song, X. Real-Time Queue Length Detection with Roadside LiDAR Data. Sensors 2020, 20, 2342. https://doi.org/10.3390/s20082342
