Latency Compensated Visual-Inertial Odometry for Agile Autonomous Flight

Abstract

1. Introduction

1.1. Related Work

1.1.1. Visual-Inertial Odometry

1.1.2. State Estimation Using Time-Delayed Measurements

1.2. Summary of Contributions

1.3. A Guide to This Document

2. Preliminaries

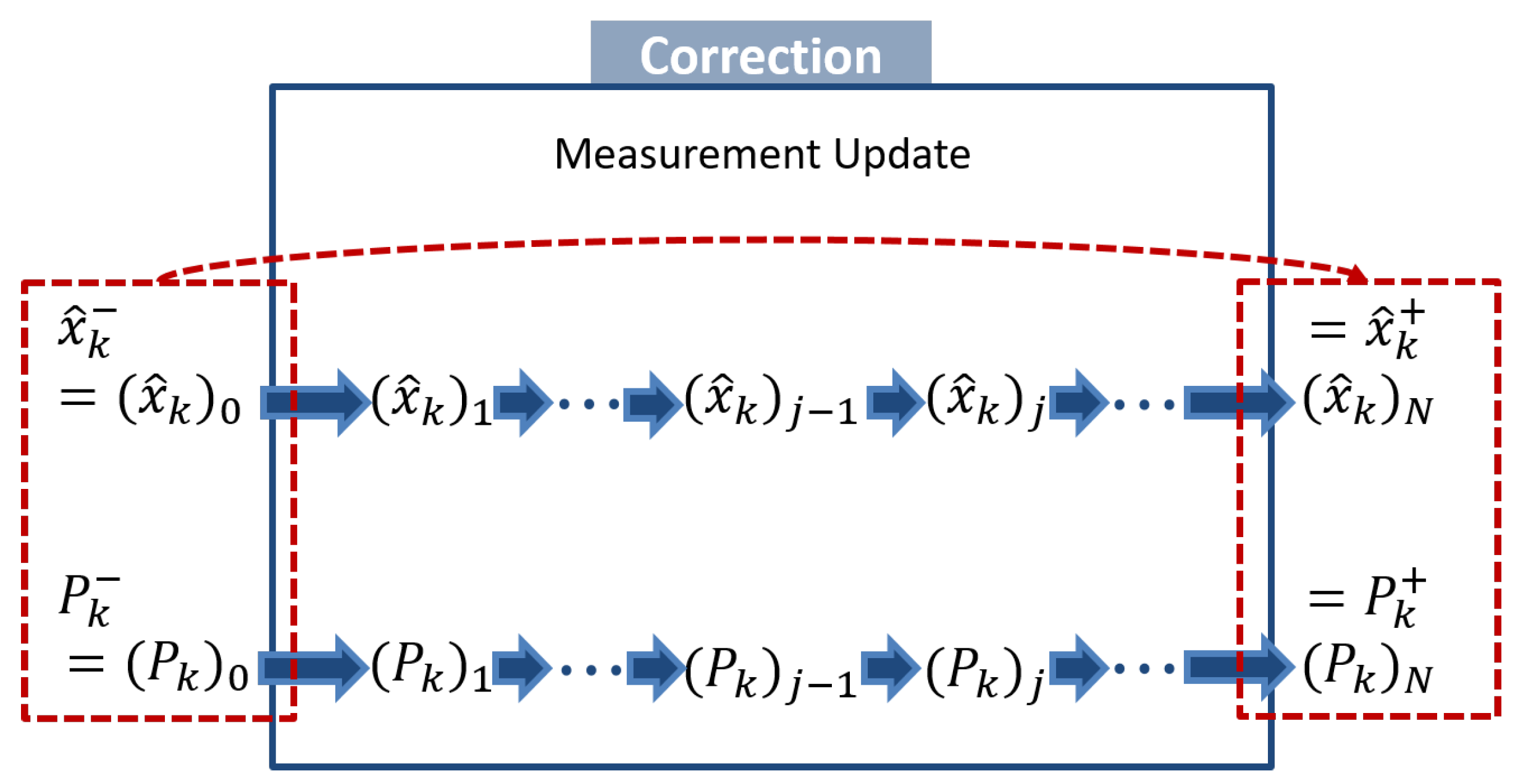

2.1. Sequential Measurement Update
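As a generic illustration of a sequential (one measurement at a time) Kalman update, written with the symbols from the Nomenclature (state x, error state covariance P, measurement noise covariance R, residual r, measurement index j), a minimal sketch might look as follows. It is the textbook scalar-measurement form and assumes a linear measurement row `h_j` and mutually uncorrelated measurement noise; it is not the paper's implementation, and the function name and example numbers are hypothetical.

```python
def sequential_update(x, P, measurements):
    """Fold scalar measurements into (x, P) one at a time.

    x: state estimate, list of n floats
    P: error state covariance, n x n list of lists (symmetric)
    measurements: iterable of (y_j, h_j, R_j) where y_j is a scalar
        measurement, h_j its length-n measurement row (assumed linear),
        and R_j its scalar noise variance
    """
    n = len(x)
    x = list(x)
    P = [row[:] for row in P]
    for y_j, h_j, R_j in measurements:
        # Residual r = y_j - h_j x
        r = y_j - sum(h_j[i] * x[i] for i in range(n))
        # P h^T, reused for both the gain and the covariance update
        Ph = [sum(P[i][k] * h_j[k] for k in range(n)) for i in range(n)]
        # Scalar innovation variance S = h P h^T + R_j
        S = sum(h_j[i] * Ph[i] for i in range(n)) + R_j
        K = [Ph[i] / S for i in range(n)]
        x = [x[i] + K[i] * r for i in range(n)]
        # P <- P - K (h P); h P equals (P h^T)^T because P is symmetric
        P = [[P[i][k] - K[i] * Ph[k] for k in range(n)] for i in range(n)]
    return x, P


# Hypothetical two-state example with two independent scalar measurements.
x_new, P_new = sequential_update(
    [0.0, 0.0],
    [[1.0, 0.0], [0.0, 1.0]],
    [(1.0, [1.0, 0.0], 1.0), (2.0, [0.0, 1.0], 1.0)],
)
```

Because each measurement is scalar, the update needs only a division by `S` rather than a matrix inverse, which is the usual reason for processing measurements sequentially.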

2.2. Vehicle Model

2.3. Camera Model

2.4. Feature Initialization

3. Theory

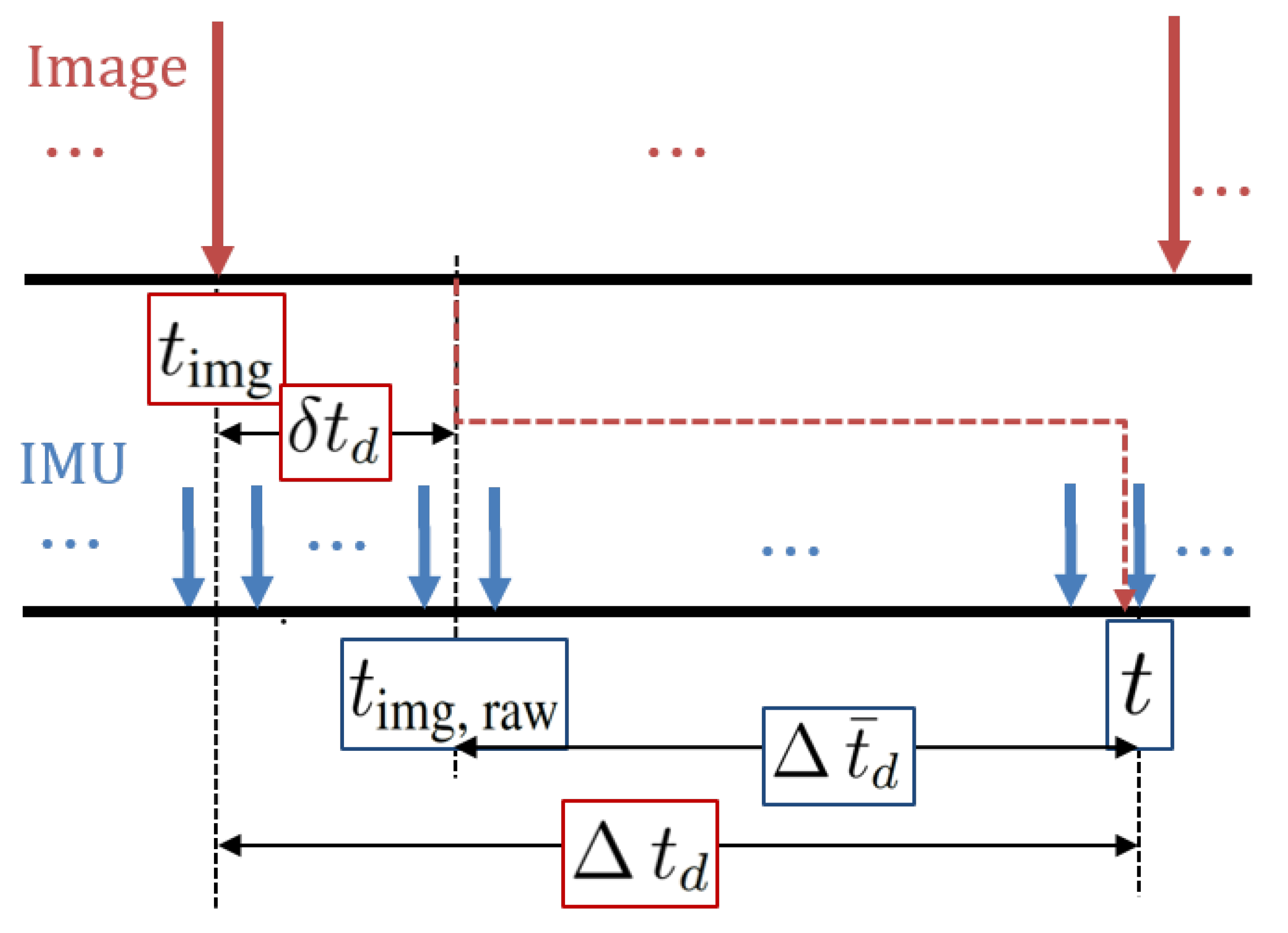

3.1. Definition of Time Delays

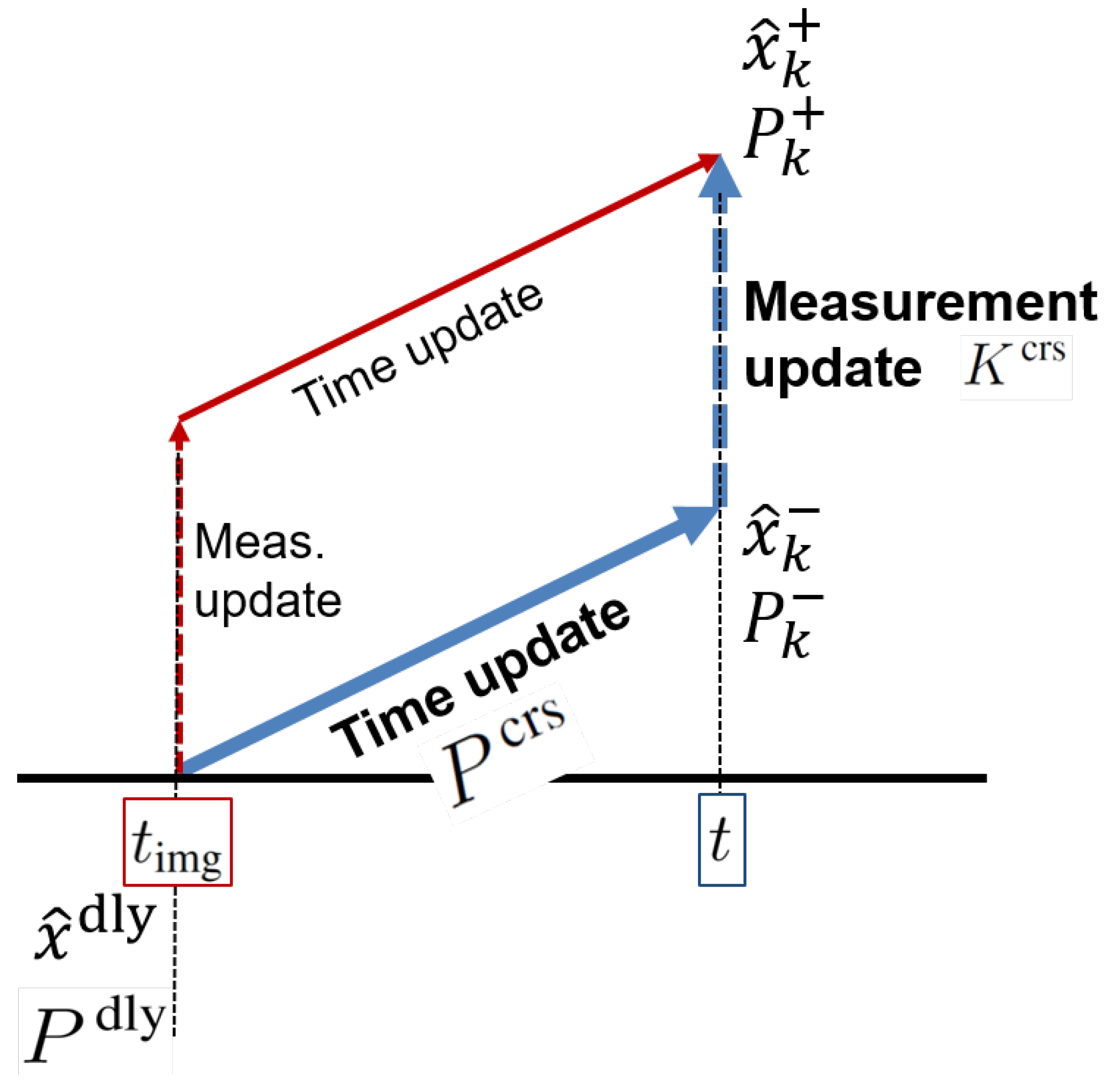

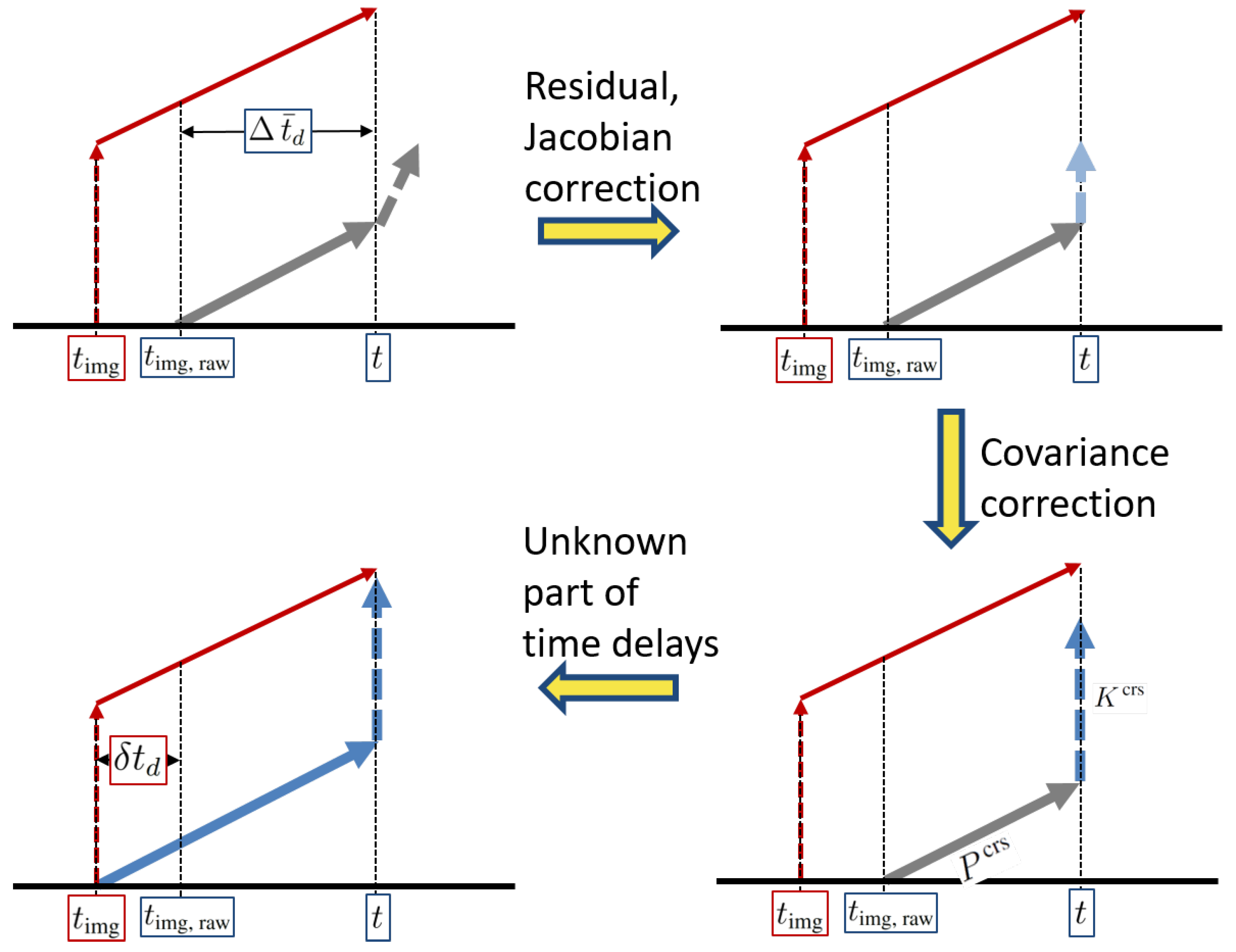

3.2. Approximately Known Part of Time Delays

3.2.1. Jacobian and Residual—“Baseline Correction”

3.2.2. Cross-Covariance—“Covariance Correction”

3.3. Unknown Part of Time Delays—“Online Calibration”

4. Implementation

4.1. Forward Computation of Cross-Covariance

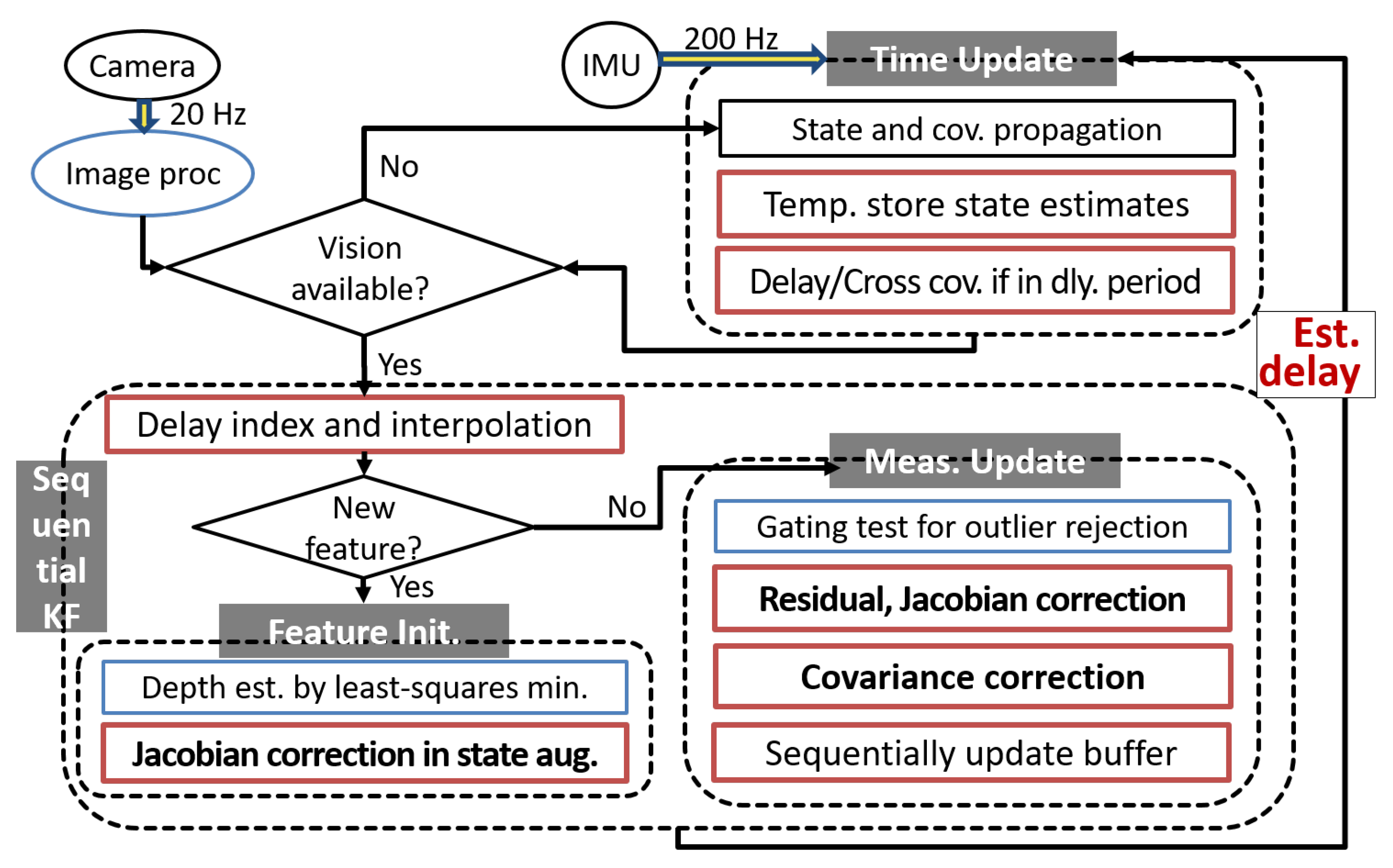

4.2. Summarized Algorithm

Algorithm 1. The Latency Compensated VIO

Require:

5. Results

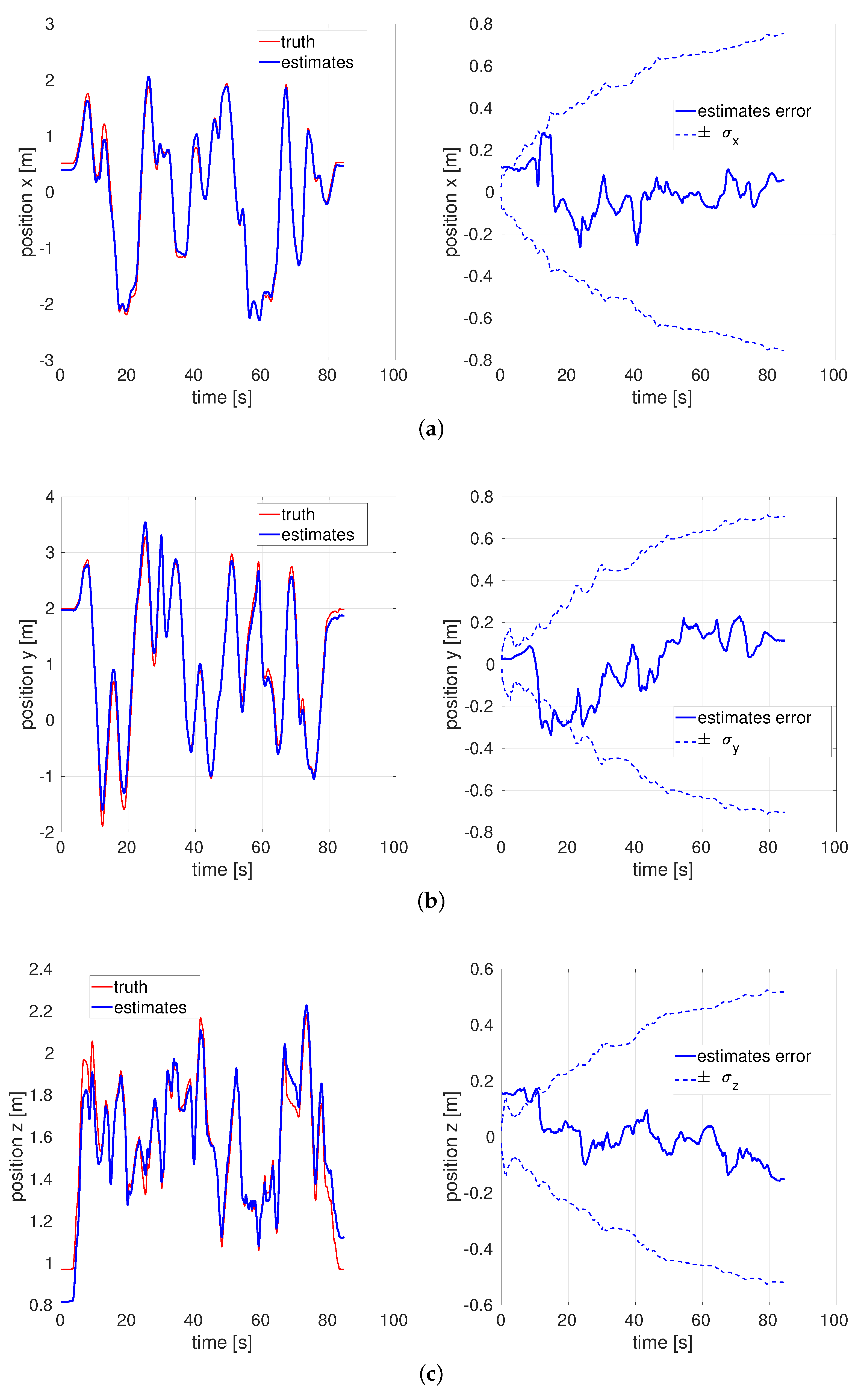

5.1. Monte Carlo Simulations of a Simple Example

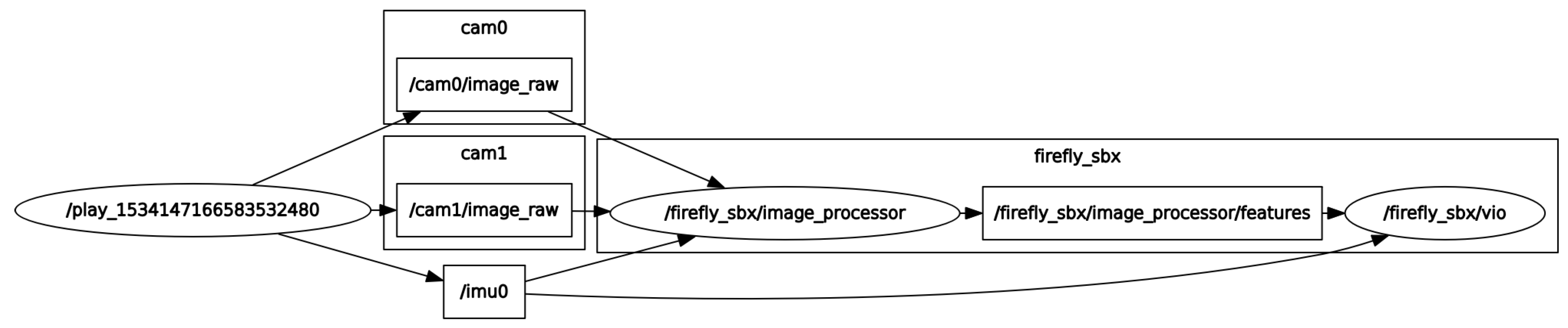

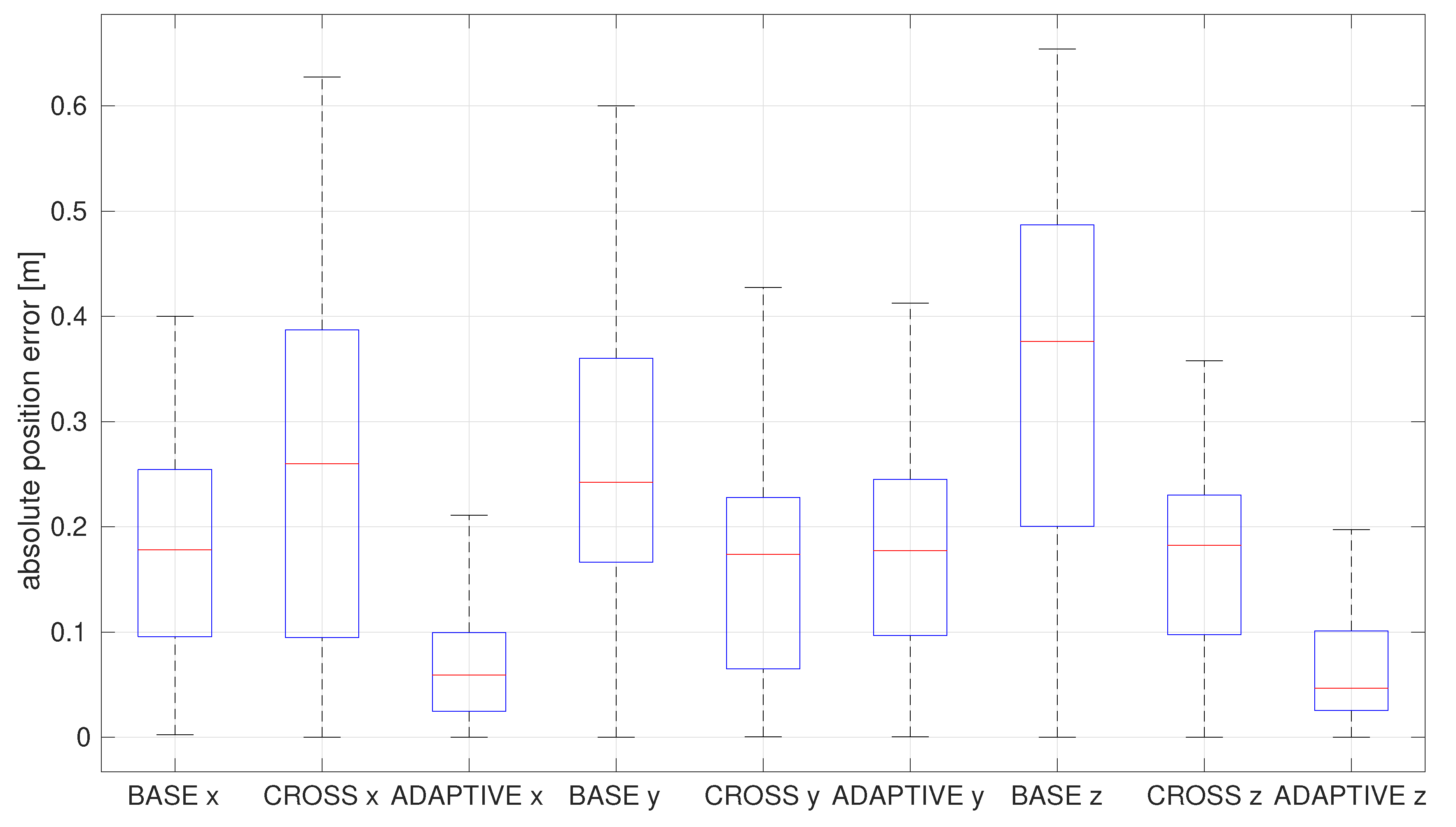

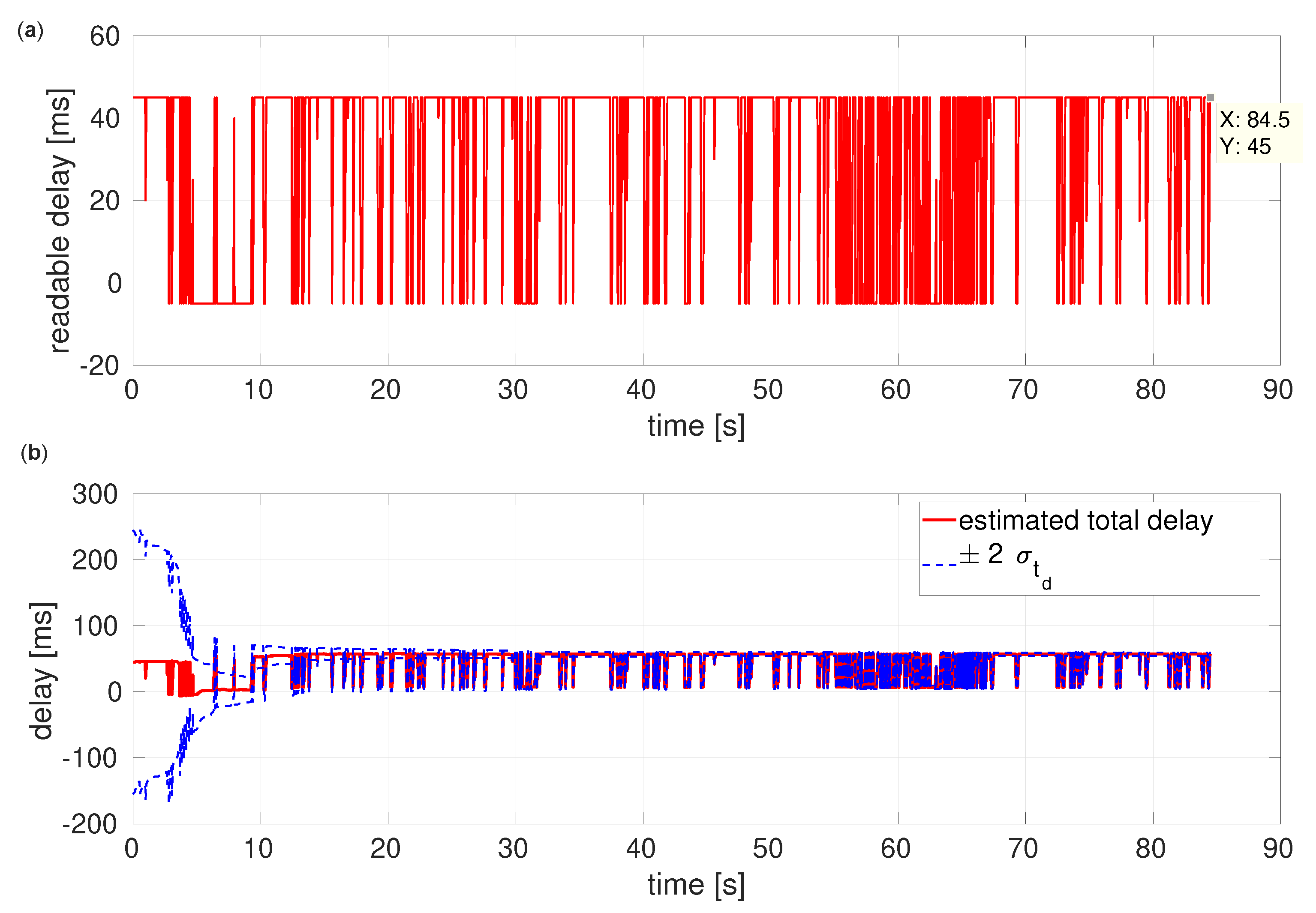

5.2. Flight Datasets’ Test Results

6. Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VIO | Visual-Inertial Odometry |
| IMU | Inertial Measurement Unit |
| EKF | Extended Kalman Filter |
| UAV | Unmanned Aerial Vehicle |
| ROS | Robot Operating System |

Nomenclature

| Symbol | Meaning |
|---|---|
| x | state |
| y | measurement |
| t | continuous time |
| k | discrete time |
| j | index of measurements |
| P | error state covariance |
| Q | process noise covariance |
| R | measurement noise covariance |
| r | residual |

Appendix A. Jacobians of Models

Appendix B. Feature Initialization

Appendix C. Stochastic Cloning (or the Schmidt–Kalman filter)
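For orientation, the cloning step that gives stochastic cloning its name can be sketched generically: a copy of the current state is appended to the state vector, and because the copy is perfectly correlated with the original, every block of the augmented covariance equals P. The snippet below shows only that augmentation step under these standard assumptions; it is not taken from the paper, and the function name is hypothetical.

```python
def clone_state(x, P):
    """Append a copy of the state and build the augmented covariance.

    x: state estimate, list of n floats
    P: error state covariance, n x n list of lists
    Returns (x_aug, P_aug) with len(x_aug) == 2n and
    P_aug == [[P, P], [P, P]] in block form.
    """
    n = len(x)
    x_aug = list(x) + list(x)
    # Index modulo n maps both the original and the cloned block back to P.
    P_aug = [[P[i % n][k % n] for k in range(2 * n)] for i in range(2 * n)]
    return x_aug, P_aug


# Hypothetical two-state example.
x_aug, P_aug = clone_state([1.0, 2.0], [[4.0, 1.0], [1.0, 9.0]])
```

After cloning, the original block keeps propagating with the process model while the cloned block stays fixed, so the off-diagonal blocks carry the correlation between the current state and the state at the cloning time.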

References

1. Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

2. Kalman, R.E.; Bucy, R.S. New Results in Linear Filtering and Prediction Theory. J. Basic Eng. 1961, 83, 95–108.

3. Anderson, B.D.; Moore, J.B. Optimal Filtering; Prentice Hall: Englewood Cliffs, NJ, USA, 1979; pp. 193–222.

4. Bar-Shalom, Y.; Li, X.R.; Kirubarajan, T. Estimation with Applications to Tracking and Navigation: Theory Algorithms and Software; John Wiley & Sons: Hoboken, NJ, USA, 2004.

5. Julier, S.J.; Uhlmann, J.K. A New Extension of the Kalman Filter to Nonlinear Systems. In Proceedings of the International Symposium on Aerospace/Defense, Sensing, Simulation and Controls, Orlando, FL, USA, 20–25 April 1997; Volume 3, pp. 182–193.

6. Wu, A.D.; Johnson, E.N.; Kaess, M.; Dellaert, F.; Chowdhary, G. Autonomous Flight in GPS-Denied Environments Using Monocular Vision and Inertial Sensors. J. Aerosp. Inform. Syst. 2013, 10, 172–186.

7. Chowdhary, G.; Johnson, E.N.; Magree, D.; Wu, A.; Shein, A. GPS-Denied Indoor and Outdoor Monocular Vision Aided Navigation and Control of Unmanned Aircraft. J. Field Robot. 2013, 30, 415–438.

8. Song, Y.; Nuske, S.; Scherer, S. A Multi-Sensor Fusion MAV State Estimation from Long-Range Stereo, IMU, GPS and Barometric Sensors. Sensors 2017, 17, 11.

9. Kong, W.; Hu, T.; Zhang, D.; Shen, L.; Zhang, J. Localization Framework for Real-Time UAV Autonomous Landing: An On-Ground Deployed Visual Approach. Sensors 2017, 17, 1437.

10. Delmerico, J.; Scaramuzza, D. A Benchmark Comparison of Monocular Visual-Inertial Odometry Algorithms for Flying Robots. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018.

11. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle Adjustment—A Modern Synthesis. In Proceedings of the International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999; pp. 298–372.

12. Dellaert, F.; Kaess, M. Square Root SAM: Simultaneous Localization and Mapping via Square Root Information Smoothing. Int. J. Rob. Res. 2006, 25, 1181–1203.

13. Huang, G. Visual-Inertial Navigation: A Concise Review. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

14. Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, Present, and Future of Simultaneous Localization and Mapping: Toward the Robust-Perception Age. IEEE Trans. Robot. 2016, 32, 1309–1332.

15. Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust Visual Inertial Odometry Using a Direct EKF-Based Approach. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304.

16. Qin, T.; Li, P.; Shen, S. VINS-MONO: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

17. Faessler, M.; Fontana, F.; Forster, C.; Mueggler, E.; Pizzoli, M.; Scaramuzza, D. Autonomous, Vision-Based Flight and Live Dense 3D Mapping with a Quadrotor Micro Aerial Vehicle. J. Field Robot. 2016, 33, 431–450.

18. Paul, M.K.; Roumeliotis, S.I. Alternating-Stereo VINS: Observability Analysis and Performance Evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4729–4737.

19. Sun, K.; Mohta, K.; Pfrommer, B.; Watterson, M.; Liu, S.; Mulgaonkar, Y.; Taylor, C.J.; Kumar, V. Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight. IEEE Robot. Autom. Lett. 2018, 3, 965–972.

20. Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-Based Visual–Inertial Odometry Using Nonlinear Optimization. Int. J. Rob. Res. 2015, 34, 314–334.

21. Lin, Y.; Gao, F.; Qin, T.; Gao, W.; Liu, T.; Wu, W.; Yang, Z.; Shen, S. Autonomous Aerial Navigation Using Monocular Visual-Inertial Fusion. J. Field Robot. 2018, 35, 23–51.

22. Mourikis, A.I.; Roumeliotis, S.I. A Multi-State Constraint Kalman Filter for Vision-Aided Inertial Navigation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Roma, Italy, 10–14 April 2007; pp. 3565–3572.

23. Li, M.; Mourikis, A.I. High-Precision, Consistent EKF-Based Visual-Inertial Odometry. Int. J. Rob. Res. 2013, 32, 690–711.

24. Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast Semi-Direct Monocular Visual Odometry. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

25. Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect Visual Odometry for Monocular and Multicamera Systems. IEEE Trans. Robot. 2017, 33, 249–265.

26. Lynen, S.; Achtelik, M.W.; Weiss, S.; Chli, M.; Siegwart, R. A Robust and Modular Multi-Sensor Fusion Approach Applied to MAV Navigation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–7 November 2013; pp. 3923–3929.

27. Paul, M.K.; Wu, K.; Hesch, J.A.; Nerurkar, E.D.; Roumeliotis, S.I. A Comparative Analysis of Tightly-Coupled Monocular, Binocular, and Stereo VINS. In Proceedings of the International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 165–172.

28. Alexander, H.L. State Estimation for Distributed Systems with Sensing Delay. In Proceedings of the Data Structures and Target Classification, Orlando, FL, USA, 1–2 April 1991; Volume 1470, pp. 103–111.

29. Larsen, T.D.; Andersen, N.A.; Ravn, O.; Poulsen, N.K. Incorporation of Time Delayed Measurements in a Discrete-Time Kalman Filter. In Proceedings of the 37th IEEE Conference on Decision and Control (CDC), Tampa, FL, USA, 16–18 December 1998; Volume 4, pp. 3972–3977.

30. Van Der Merwe, R.; Wan, E.A.; Julier, S. Sigma-Point Kalman Filters for Nonlinear Estimation and Sensor-Fusion: Applications to Integrated Navigation. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Providence, RI, USA, 16–19 August 2004; pp. 16–19.

31. Van Der Merwe, R. Sigma-Point Kalman Filters for Probabilistic Inference in Dynamic State-Space Models. Ph.D. Thesis, Oregon Health & Science University, Portland, OR, USA, 2004.

32. Schmidt, S.F. Applications of State Space Methods to Navigation Problems. Adv. Control Syst. 1966, 3, 293–340.

33. Roumeliotis, S.I.; Burdick, J.W. Stochastic Cloning: A Generalized Framework for Processing Relative State Measurements. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Washington, DC, USA, 11–15 May 2002; Volume 2, pp. 1788–1795.

34. Gopalakrishnan, A.; Kaisare, N.S.; Narasimhan, S. Incorporating Delayed and Infrequent Measurements in Extended Kalman Filter Based Nonlinear State Estimation. J. Process Contr. 2011, 21, 119–129.

35. Julier, S.J.; Uhlmann, J.K. Fusion of Time Delayed Measurements with Uncertain Time Delays. In Proceedings of the American Control Conference (ACC), Portland, OR, USA, 8–10 June 2005; pp. 4028–4033.

36. Sinopoli, B.; Schenato, L.; Franceschetti, M.; Poolla, K.; Jordan, M.I.; Sastry, S.S. Kalman Filtering with Intermittent Observations. IEEE Trans. Automat. Contr. 2004, 49, 1453–1464.

37. Choi, M.; Choi, J.; Park, J.; Chung, W.K. State Estimation with Delayed Measurements Considering Uncertainty of Time Delay. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Kobe, Japan, 12–17 May 2009; pp. 3987–3992.

38. Yoon, H.; Sternberg, D.C.; Cahoy, K. Interpolation Method for Update with Out-of-Sequence Measurements: The Augmented Fixed-Lag Smoother. J. Guid. Control Dyn. 2016, 39, 2546–2553.

39. Lee, K.; Johnson, E.N. Multiple-Model Adaptive Estimation for Measurements with Unknown Time Delay. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Grapevine, TX, USA, 9–13 January 2017.

40. Nilsson, J.O.; Skog, I.; Händel, P. Joint State and Measurement Time-Delay Estimation of Nonlinear State Space Systems. In Proceedings of the 10th IEEE International Conference on Information Sciences, Signal Processing and their Applications (ISSPA), Kuala Lumpur, Malaysia, 10–13 May 2010; pp. 324–328.

41. Li, M.; Mourikis, A.I. Online Temporal Calibration for Camera–IMU Systems: Theory and Algorithms. Int. J. Rob. Res. 2014, 33, 947–964.

42. Qin, T.; Shen, S. Online Temporal Calibration for Monocular Visual-Inertial Systems. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 3662–3669.

43. Lee, K.; Johnson, E.N. State and Parameter Estimation Using Measurements with Unknown Time Delay. In Proceedings of the IEEE Conference on Control Technology and Applications (CCTA), Mauna Lani, HI, USA, 27–30 August 2017; pp. 1402–1407.

44. Lee, K. Adaptive Filtering for Vision-Aided Inertial Navigation. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 2018.

45. Simon, D. Optimal State Estimation: Kalman, H Infinity, and Nonlinear Approaches; John Wiley & Sons: Hoboken, NJ, USA, 2006.

46. Stevens, B.L.; Lewis, F.L.; Johnson, E.N. Aircraft Control and Simulation: Dynamics, Controls Design, and Autonomous Systems; John Wiley & Sons: Hoboken, NJ, USA, 2015.

47. Sola, J. Quaternion Kinematics for the Error-State Kalman Filter. arXiv 2017, arXiv:1711.02508.

48. Markley, F.L. Attitude Error Representations for Kalman Filtering. J. Guid. Control Dyn. 2003, 26, 311–317.

49. Forsyth, D.A.; Ponce, J. Computer Vision: A Modern Approach; Prentice Hall: Upper Saddle River, NJ, USA, 2002; pp. 96–147.

50. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003; pp. 22–236.

51. Bjorck, A. Numerical Methods for Least Squares Problems; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1996; Volume 51, Chapters 2–9.

52. Montiel, J.M.; Civera, J.; Davison, A.J. Unified Inverse Depth Parametrization for Monocular SLAM. In Proceedings of the Robotics: Science and Systems (RSS), Philadelphia, PA, USA, 16–19 August 2006.

53. Schutz, B.; Tapley, B.; Born, G.H. Statistical Orbit Determination; Elsevier Academic Press: Burlington, MA, USA, 2004; pp. 387–438.

54. Hinks, J.; Psiaki, M. A Multipurpose Consider Covariance Analysis for Square-Root Information Filters. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Minneapolis, MN, USA, 13–16 August 2012; p. 4599.

55. Meijering, E. A Chronology of Interpolation: From Ancient Astronomy to Modern Signal and Image Processing. Proc. IEEE 2002, 90, 319–342.

56. Shoemake, K. Animating Rotation with Quaternion Curves. In Proceedings of the ACM SIGGRAPH Computer Graphics, San Francisco, CA, USA, 20–28 July 1985; Volume 19, pp. 245–254.

57. Dam, E.B.; Koch, M.; Lillholm, M. Quaternions, Interpolation and Animation; Citeseer: Copenhagen, Denmark, 1998; Volume 2, pp. 34–76.

58. Gelb, A. Applied Optimal Estimation; MIT Press: Cambridge, MA, USA, 1974; pp. 102–155, 180–228.

59. Kopp, R.E.; Orford, R.J. Linear Regression Applied to System Identification for Adaptive Control Systems. AIAA J. 1963, 1, 2300–2306.

60. Viegas, D.; Batista, P.; Oliveira, P.; Silvestre, C. Nonlinear Observability and Observer Design through State Augmentation. In Proceedings of the IEEE 53rd Annual Conference on Decision and Control (CDC), Los Angeles, CA, USA, 15–17 December 2014; pp. 133–138.

61. Del Vecchio, D.; Murray, R. Observability and Local Observer Construction for Unknown Parameters in Linearly and Nonlinearly Parameterized Systems. In Proceedings of the American Control Conference (ACC), Denver, CO, USA, 4–6 June 2003; Volume 6, pp. 4748–4753.

62. Carrassi, A.; Vannitsem, S. State and Parameter Estimation with the Extended Kalman Filter: An Alternative Formulation of the Model Error Dynamics. Q. J. R. Meteorol. Soc. 2011, 137, 435–451.

63. Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC Micro Aerial Vehicle Datasets. Int. J. Rob. Res. 2016, 35, 1157–1163.

64. Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An Open-Source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

65. Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A Benchmark for the Evaluation of RGB-D SLAM Systems. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Portugal, 7–12 October 2012; pp. 573–580.

66. Wu, A.D. Vision-Based Navigation and Mapping for Flight in GPS-Denied Environments. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 2010.

| Name | ROVIO [15] | VINS-MONO [16] | SVO+MSF [17] | Alternating Stereo VINS [18] | S-MSCKF [19] | OKVIS [20] |
|---|---|---|---|---|---|---|
| Monocular | × | × | × | | | |
| Stereo | | | | × | × | × |
| Indirect | | × | | × | × | × |
| Semi-direct | | | × | | | |
| Direct | × | | | | | |
| Loosely-Coupled | | | × | | | |
| Tightly-Coupled | × | × | | × | × | × |
| Optimization-based | | × | | | | × |
| Filtering-based | × | | × | × | × | |
| Open-source | × | × | × | | × | × |

| Multiplier on R | /10 | /3 | 1 | ×3 | ×10 |
|---|---|---|---|---|---|
| RMS error [m] | 1.5096 | 0.1969 | 0.1619 | 0.2636 | 0.2850 |

| Method | EuRoC V1 Easy (slow motion, 0.41 m/s, 16.0 deg/s): Cross-Cov OFF | EuRoC V1 Easy: Cross-Cov ON | EuRoC V1 Medium (fast motion, 0.91 m/s, 32.1 deg/s): Cross-Cov OFF | EuRoC V1 Medium: Cross-Cov ON |
|---|---|---|---|---|
| Fixed | 0.3376 | 0.2677 | 0.4644 | 0.3135 |
| Entirely Estimated | 0.2282 | 0.2406 | 0.4734 | 0.3538 |
| Readouts + N/A | 0.2558 | 0.2032 | 0.4163 | 0.3121 |
| Readouts + Fixed | 0.2869 | 0.2285 | 0.3281 | 0.2218 |
| Readouts + Estimated | 0.2019 | 0.1461 | 0.3353 | 0.1619 |
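To make the effect of the cross-covariance term easier to read off the table above, the relative change in each row can be computed directly. The sketch below only copies the tabulated values as (Cross-Cov OFF, Cross-Cov ON) pairs; the dictionary layout and the helper name `reduction_percent` are hypothetical and not part of the paper.

```python
# Values copied from the table above as (Cross-Cov OFF, Cross-Cov ON).
RESULTS = {
    "Fixed": {"V1 Easy": (0.3376, 0.2677), "V1 Medium": (0.4644, 0.3135)},
    "Entirely Estimated": {"V1 Easy": (0.2282, 0.2406), "V1 Medium": (0.4734, 0.3538)},
    "Readouts + N/A": {"V1 Easy": (0.2558, 0.2032), "V1 Medium": (0.4163, 0.3121)},
    "Readouts + Fixed": {"V1 Easy": (0.2869, 0.2285), "V1 Medium": (0.3281, 0.2218)},
    "Readouts + Estimated": {"V1 Easy": (0.2019, 0.1461), "V1 Medium": (0.3353, 0.1619)},
}


def reduction_percent(off, on):
    """Relative reduction going from OFF to ON; negative means ON is worse."""
    return 100.0 * (off - on) / off


for method, by_dataset in RESULTS.items():
    for dataset, (off, on) in by_dataset.items():
        print(f"{method:22s} {dataset:10s} {reduction_percent(off, on):+6.1f} %")
```

In these figures the only entry where enabling the cross-covariance does not lower the value is the Entirely Estimated row on V1 Easy (0.2282 versus 0.2406).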

| Method | EuRoC V1 Easy (slow motion, 0.41 m/s, 0.28 rad/s) | EuRoC V1 Medium (fast motion, 0.91 m/s, 0.56 rad/s) |
|---|---|---|
| Latency Compensated VIO | 0.1461 | 0.1619 |
| S-MSCKF (stereo-filter) | 0.34 | 0.20 |
| SVO+MSF (loosely-coupled) | 0.40 | 0.63 |
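The two dataset tables quote the angular rates of the same EuRoC sequences in different units (deg/s in one, rad/s in the other). A quick check that the quoted figures agree to the precision given, using only the numbers printed in the tables (the helper name is hypothetical):

```python
import math

# (deg/s as quoted in one table, rad/s as quoted in the other)
QUOTED_RATES = {"V1 Easy": (16.0, 0.28), "V1 Medium": (32.1, 0.56)}


def rates_agree(deg_per_s, rad_per_s, tol=0.005):
    """True if the two figures match to within half of the last quoted digit."""
    return abs(math.radians(deg_per_s) - rad_per_s) < tol


for name, (deg, rad) in QUOTED_RATES.items():
    print(name, f"{math.radians(deg):.3f} rad/s", rates_agree(deg, rad))
```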

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, K.; Johnson, E.N. Latency Compensated Visual-Inertial Odometry for Agile Autonomous Flight. Sensors 2020, 20, 2209. https://doi.org/10.3390/s20082209