1. Introduction

Target classification is an integral component of an effective and efficient defense system that aids the commander's situational awareness of the battlefield. The task of classifying targets into a predefined set of classes depends on a group of features or attributes that characterize the different categories. Sensors such as radar, infrared (IR) cameras, and electronic support measures (ESM) are often deployed to acquire relevant information regarding the different attributes [1, 2]. Attributes may include signature and kinematic features such as speed, acceleration, altitude, radar cross-section (RCS), shape, length, transmission frequency, and pulse repetition interval (PRI) [1, 2].

Classification of a target requires data about the different attributes. The information extracted from the data is usually characterized by uncertainty due to ambiguity, imprecision, vagueness, incompleteness, noise, and conflict [3, 4, 5]. This uncertainty degrades the quality of the information fusion system. Consequently, how to deal with uncertainty effectively and efficiently has become a topic of interest among researchers in the field of information fusion. Multisensor data fusion can effectively address this problem. Dealing with uncertainty through data fusion raises three fundamental problems: (1) the representation of uncertain information, (2) the aggregation of two or more pieces of uncertain information, and (3) making a reasonable decision based on the aggregated pieces of information [6]. Different mathematical theories of uncertainty, such as probability theory, fuzzy set theory, possibility theory, and belief theory, can be used to tackle these problems. The framework of belief theory, also known as the Dempster Shafer (DS) theory, was first introduced by Dempster in [7] and later extended by Shafer in [8]. The theory of belief functions has an established connection with probability theory, possibility theory, and, by extension, fuzzy set theory. The DS theory also provides for the representation of ignorance, and it has been widely accepted as a powerful formalism for modeling and reasoning under uncertainty.

Implementation of the framework of belief theory entails three building blocks: modeling, reasoning, and decision making. The modeling involves the representation of historical data to build the belief function model. The reasoning stage is characterized by the generation, analysis, and combination of belief functions from the various uncertain evidence sources. The decision-making block focuses on transforming the fused mass into a probability distribution and applying an appropriate decision rule. How to generate the mass function, also known as the basic probability assignment (BPA), is an open problem [9], and a rich collection of articles addresses it. In the early deployments of the theory, masses were assigned based on expert opinion [2]. In [9], masses are assigned based on normal distributions. Fuzzy membership functions have been utilized in [10, 11, 12, 13].

The heart of the reasoning stage is the DS rule of combination, which can be used to fuse multiple pieces of evidence. However, the DS combination rule yields counter-intuitive results when it is required to aggregate pieces of evidence that are highly conflicting with one another. This phenomenon was first pointed out by Zadeh in [14], and it has since become another open issue. Attempts to address this problem have given rise to two schools of thought among researchers [15]. One school of thought believes that the high conflict is due to a problem with the original combination rule and consequently proposes modifications of the traditional DS rule of combination. Some of the significant contributions in this category can be found in [16], where the conflicting masses are transferred to the universal set. According to [17], the masses resulting from the combination of conflicting sets are assigned to their union. Smets in [18] proposed an alternative method where the conflicting mass is assigned to the empty set. The other school of thought attributes the high conflict to bad evidence or a faulty sensor and therefore modifies the original BPAs before applying the traditional DS rule of combination. These methods are similar to the discounting measure proposed by Shafer in [8]. A simple average combination rule was proposed by Murphy in [19]. In [20], it was argued that Murphy's approach does not account for the relationship among the participating bodies of evidence; consequently, a weighted combination approach based on the distance of evidence was proposed. Also, in [21], Zhang proposed a weighted evidence combination that is capable of identifying and reducing the impact of the conflict. The method exploits the cosine similarity among the Pignistic transformations of the various belief functions. Leveraging the simplicity of the methods proposed in [19, 20] and taking into consideration the relationship among participating belief functions, a new combination method based on the average/consensus belief function was proposed in [22].
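The counter-intuitive behavior under high conflict can be reproduced with a few lines of code. The following is a minimal sketch of the traditional DS rule of combination, assuming BPAs are stored as dictionaries that map `frozenset` focal elements to masses; the function name `dempster_combine` and the numerical masses are illustrative assumptions, chosen in the spirit of Zadeh's example.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two BPAs (dict: frozenset -> mass) with Dempster's rule.

    Returns the normalized fused BPA and the conflict coefficient.
    """
    fused = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        common = a & b
        if common:
            fused[common] = fused.get(common, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b  # product mass falling on the empty set
    if not fused:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {s: v / (1.0 - conflict) for s, v in fused.items()}, conflict


# Two sources that almost completely disagree (illustrative masses).
A, B, C = frozenset("A"), frozenset("B"), frozenset("C")
m1 = {A: 0.99, B: 0.01}
m2 = {C: 0.99, B: 0.01}
fused, conflict = dempster_combine(m1, m2)
print(fused, conflict)
```

Although both sources give B only 1% support, B is the only non-empty intersection, so after normalization essentially all of the fused mass is assigned to B. This is the kind of result that motivates the modified rules and the BPA-adjustment methods discussed above.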

In [

11], a target classification algorithm was proposed that employed fuzzy membership functions to generate belief functions where belief functions are combined using the traditional DS rule of combination. The method did not account for possible conflict among the participating BPA, despite the availability of information regarding the BPA, which can be harnessed to mitigate the effect of conflict. To deal with conflict, in [

12], the fusion stage takes into consideration the credibility of each piece of evidence before the combination. The credibility is based on information contained in the BPAs. Also, in [

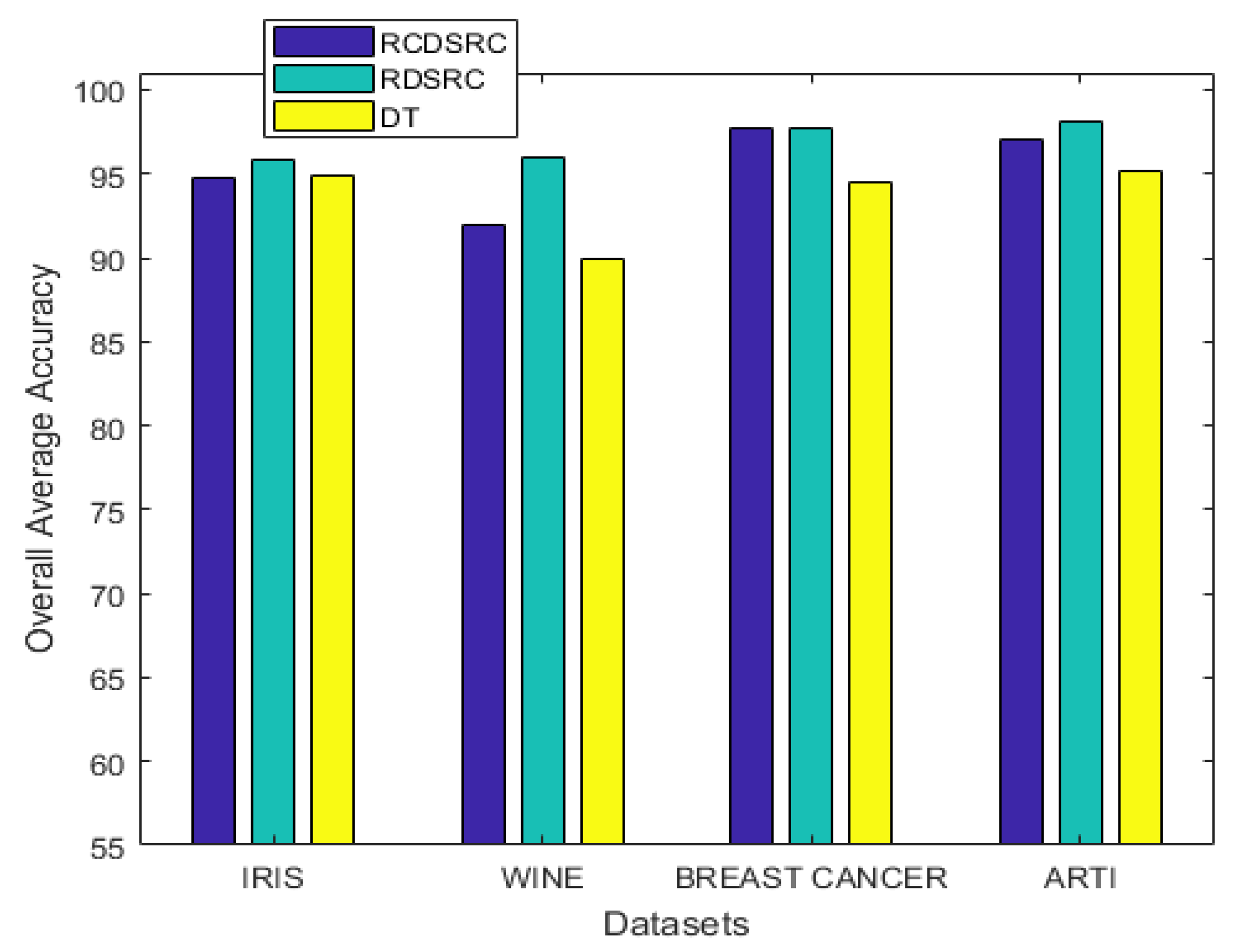

13], a fusion method termed reliability credibility Dempster Shafer rule of combination (RCDSRC) was introduced that utilizes the credibility of each BPA as well as the reliability of the source for proper adjustments of BPAs prior to the DS fusion. It has been observed that in [

12,

13], the spread of the membership function was set to 2 standard deviation from the mean. According to [

23], conflict in the combination of evidence can be attributed to three main factors: (1) abnormal measurement by sensor usually a direct consequence of sensor defect and poor calibration, (2) improper belief function model due to poor estimation of the likelihood function and inappropriate selection of metric for the distance-based method, and (3) Large number of information sources. The membership functions are used to estimate the likelihood of the various classes; this means improper characterization of the fuzzy membership functions may induce conflicts. The idea behind this study is that by adjusting the spread of the membership function, we can improve on the decision accuracy of the method proposed in [

13].

In this study, we propose a reliability-based multisensor data fusion method, termed the reliability-based Dempster Shafer rule of combination (RDSRC), within the framework of belief theory for target classification, where the shape/spread of the membership functions is adjusted during the training/modeling stage. Only the reliability is used to assign weights to the various information sources; the proposed method does not utilize credibility. Since every attribute (information source) of the unknown target produces a local declaration in the form of a belief function, calculating the credibility of each belief function for every query target would add overhead to the reasoning process. Besides the computational requirement, credibility based on a distance or similarity measure rests on the assumption that the majority of the belief functions are reliable.

The proposed method is closely related to the work in [24]. However, the two differ in the following respects. In this approach, we use triangular membership functions to model the historical data regarding the different attributes of the various target classes, as opposed to the Gaussian membership function used in [24]. The reliability in this approach is calculated using an evaluation criterion based on the concordance index, while the Jaccard index was utilized to determine the static reliability in [24]. The spread of the membership function is adjustable in our proposed method, while it is fixed in [24]. Although tuning the spread of the membership function introduces additional overhead, that overhead is only incurred offline. In [13, 24], credibility/dynamic reliability is calculated at the reasoning phase, which creates an extra cost for online identification. The method of generating the BPA is also different from the one used in [24]. This work is essentially an extension/modification of [13]. The major contributions of the newly proposed method are summarized as follows.

We introduced a tuning parameter for the likelihood estimation function and demonstrated its impact on the decision accuracy of the classification system.

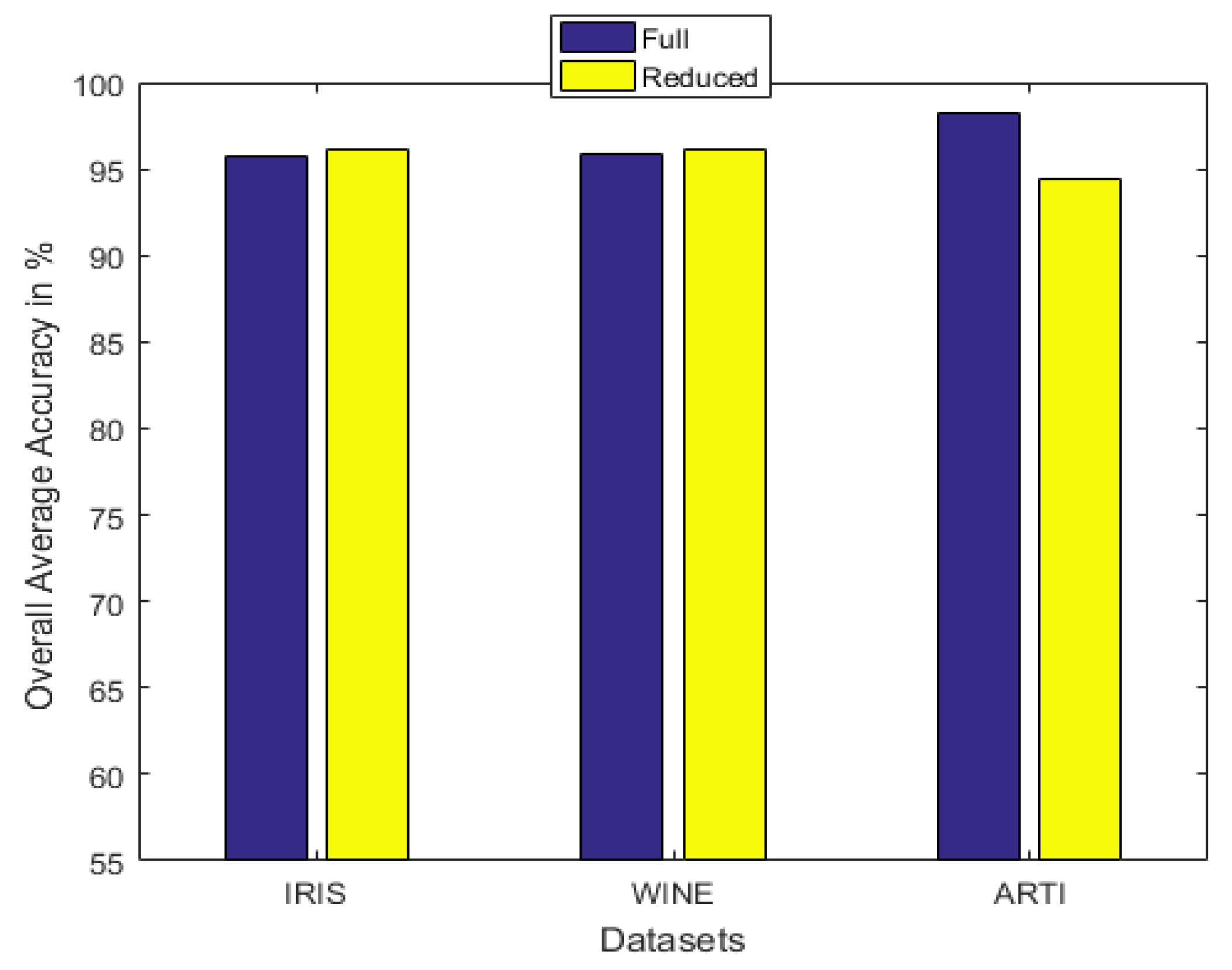

We proposed the average pairwise discordance index (APDI) as a selection criterion to reduce the number of evidence sources before the deployment of the DS framework.

Three real-world and one artificially generated datasets were used to show the performance of the proposed method in terms of accuracy.

The rest of the paper is organized as follows. The basic preliminaries are briefly discussed in Section 2. In Section 3, the proposed reliability-based multisensor data fusion with application in target classification is presented. Section 4 shows the effectiveness of the proposed approach on both the real and artificial datasets. The conclusion is contained in Section 5.

3. Proposed Method

The target classification problem can be formulated in the same way as the general data classification problem. Consider a set of n training samples with corresponding class labels, where each sample is a multi-dimensional attribute vector whose class label belongs to a set of M classes. Suppose that, in a target classification system, a set of sensors measuring different attributes of the target produces a collection of basic probability assignments (BPAs), one per attribute. The primary goal of a target classification system is to assign the unknown target to one of the members of the frame of discernment based on the combination of the different pieces of evidence induced by the different attribute measurements.

In [

23], it was asserted that the conflict within the framework of belief theory could be attributed to improper likelihood estimation function. Since fuzzy membership function is employed as the likelihood estimation model which is parameterized by the spread factor, poor characterization using the spread factor

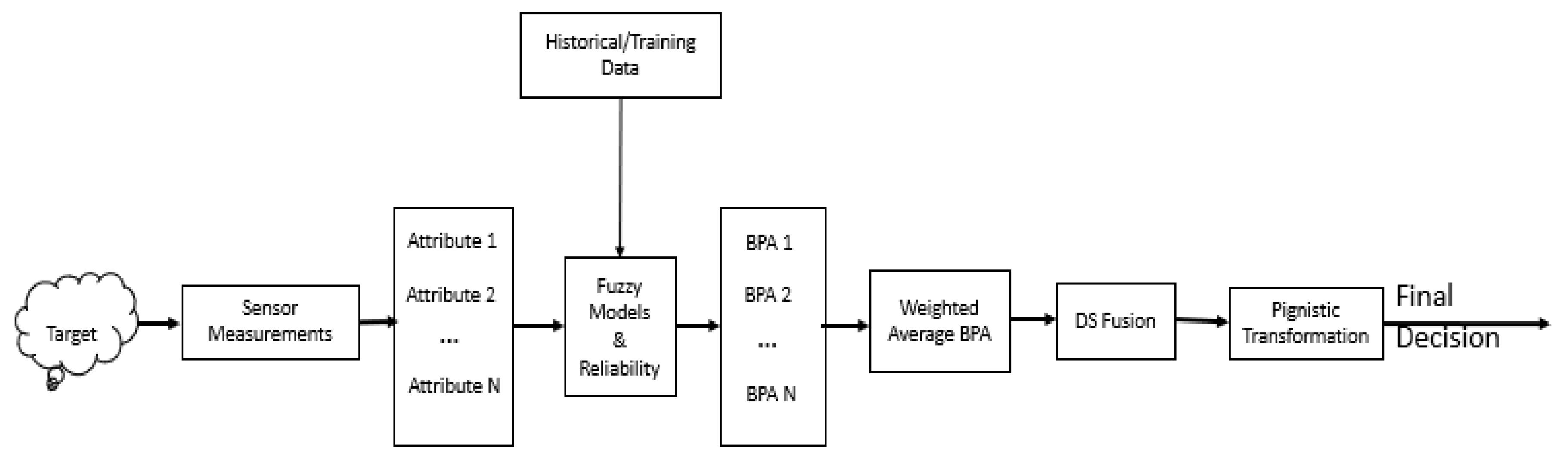

may result in high conflict and consequently, a degradation in the performance of the reasoning process. The motivation for this study was triggered by the application of interval Type−2 fuzzy set for the representation of uncertainty. An interval type 2 fuzzy set is a form of Type2 fuzzy set with uniform secondary membership function. Interval type2 fuzzy set is characterized by upper and lower membership functions. It was discovered that using the lower membership function at the modeling stage did not yield the same value of accuracy as utilizing the upper membership function. Moreover, the only difference between the two is the spread/width. This gives the insight that by varying the spread parameter, we can actually improve on the performance of the proposed method in terms of accuracy. In this work, the theory of belief functions is being proposed as a multisensor data fusion approach for target classification. Individual attributes of the unknown target induce local declarations in the form of belief functions by assigning masses to each of the subsets of the frame of discernment. To address possible conflict, a reliability degree, which is essentially the normalized average pairwise discordance index (APDI), is proposed based on the discriminatory power of each evidence source (attribute or feature). The reliability degree is then used as a weighting factor to obtain a weighted average belief function. The weighted average basic probability assignment (BPA) is fused to produce the final BPA. Decisions are taken based on the probability transformation of the final BPA. The flowchart of the proposed method is shown in

Figure 4.

The entire flowchart can be summarized using the three building blocks of modeling, reasoning, and decision making. The reasoning block corresponds to the credal level with the decision-making block representing the Pignistic level of the transferable belief model (TBM) [

18].

3.1. Modeling

3.1.1. Representation of Historical/Training Data

Fuzzy membership functions are used to model every attribute for the various target classes. In other words, the fuzzy membership functions are deployed to estimate the likelihood of the various target classes. The membership functions are built from the statistical information (the mean and the standard deviation) extracted from the training set.

Individual class

i having an attribute

j is represented by a triangular membership function using the statistical information. The mean

and the standard deviation

for every class

and attribute

are defined as:

is the

sample value given attribute

j and class

i.

T is the sample size for the class. Therefore, for class

i and a given attribute

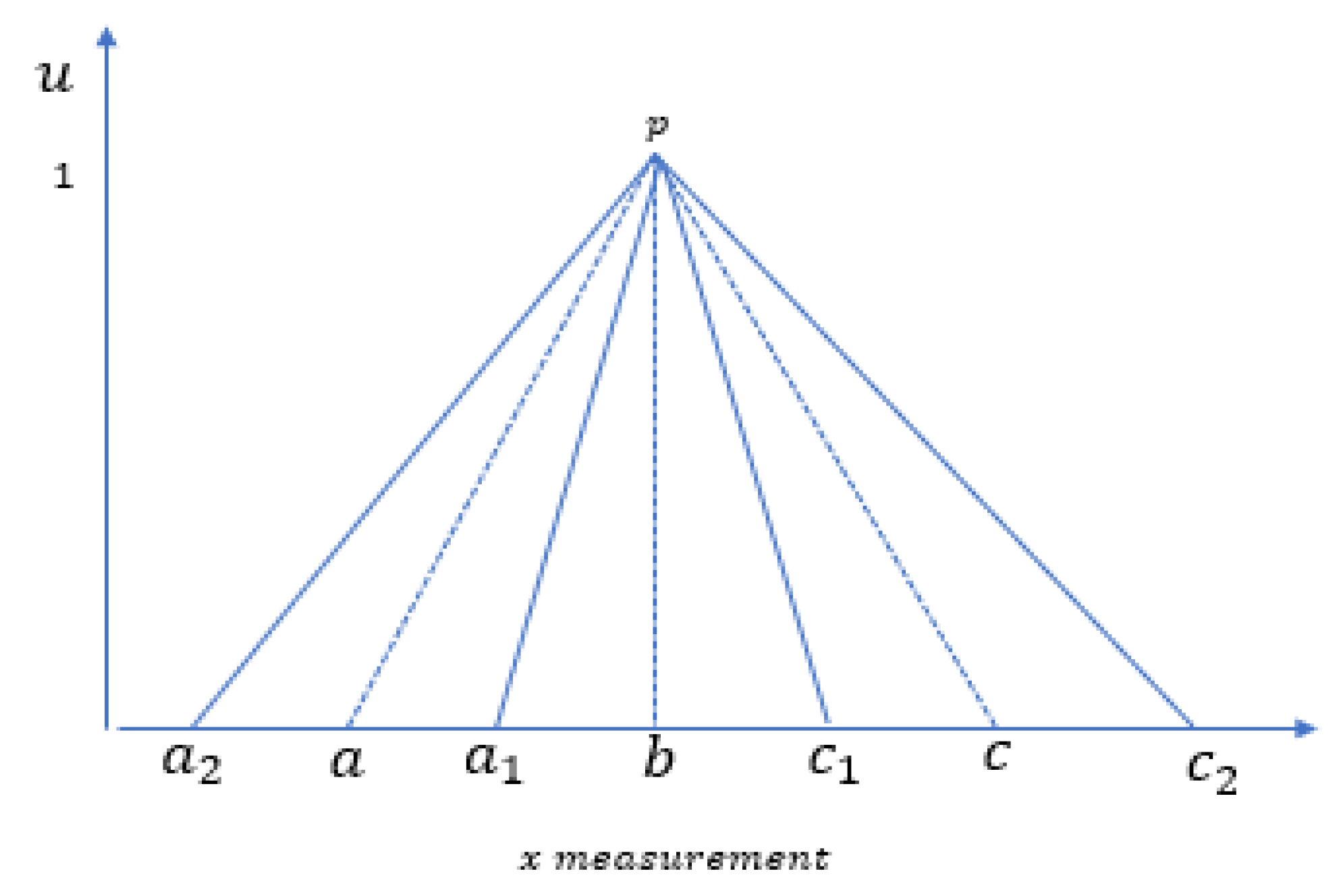

j the triplets for the triangular fuzzy number shown in

Figure 5 is defined as

where

is a tuning parameter (adjustment factor) as opposed to being set to 2 in [

12,

13].
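As a rough illustration of the modeling step described above, the sketch below builds the triplet of a triangular fuzzy number from the training samples of one class and one attribute, and evaluates the membership of a query value. The function names, the argument name `spread`, and the use of the sample standard deviation (`ddof=1`) are assumptions made for the example, not details confirmed by the text.

```python
import numpy as np


def triangular_model(samples, spread):
    """Return the (left, peak, right) triplet of a triangular fuzzy number.

    The peak is the sample mean; the feet lie `spread` standard
    deviations on either side of it.
    """
    samples = np.asarray(samples, dtype=float)
    mean = samples.mean()
    std = samples.std(ddof=1)  # assumption: sample standard deviation
    return mean - spread * std, mean, mean + spread * std


def membership(x, triplet):
    """Membership degree of x in the triangular fuzzy number `triplet`."""
    left, peak, right = triplet
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Illustrative training values for one class and one attribute.
values = [4.0, 5.0, 6.0]
tfn = triangular_model(values, spread=2.0)
print(tfn, membership(4.0, tfn))
```

With `spread=2.0` this corresponds to the fixed setting attributed to [12, 13]; changing `spread` widens or narrows the support of the membership function without moving its peak.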

3.1.2. Determination of the Reliability Degree

A reliability factor was proposed in [13]; it is defined in terms of the concordance index between each pair of classes i and k, where M is the number of classes. We define the reliability degree as the average pairwise discordance index (APDI). The normalized reliability of source j is obtained by normalizing its APDI over all sources. The normalization is required to satisfy the constraint imposed by the DS combination rule. In [13], the reliability factor was not normalized; normalization occurs after its combination with the credibility degree, before the DS fusion.
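The computation of the reliability degree might look as follows. This is only a sketch under stated assumptions: the pairwise concordance indices are taken as given inputs in [0, 1], the discordance of a class pair is assumed to be one minus its concordance, and the normalization is assumed to divide by the sum over all sources so that the weights add up to one. The function names and the concordance values are hypothetical.

```python
from itertools import combinations


def average_pairwise_discordance(concordance):
    """APDI of one source from its M x M matrix of concordance indices.

    Assumption: discordance of a class pair (i, k) is 1 - concordance[i][k].
    """
    n_classes = len(concordance)
    pairs = list(combinations(range(n_classes), 2))
    return sum(1.0 - concordance[i][k] for i, k in pairs) / len(pairs)


def normalize(values):
    """Scale non-negative values so that they sum to one."""
    total = sum(values)
    if total <= 0:
        raise ValueError("at least one value must be positive")
    return [v / total for v in values]


# Illustrative concordance matrices for two sources and three classes.
source_1 = [[1.0, 0.2, 0.0], [0.2, 1.0, 0.4], [0.0, 0.4, 1.0]]
source_2 = [[1.0, 0.6, 0.6], [0.6, 1.0, 0.6], [0.6, 0.6, 1.0]]
apdi = [average_pairwise_discordance(c) for c in (source_1, source_2)]
reliability = normalize(apdi)
print(apdi, reliability)
```

In this toy case the first source separates the classes better (lower concordance between classes), so it receives the larger reliability weight.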

3.2. Reasoning

The reasoning stage entails the generation, analysis, and combination of BPAs. No calculation of credibility is required at the reasoning stage; only the (static) reliability obtained at the modeling stage is used. Credibility derived from the similarity among pieces of evidence rests on the assumption that a greater proportion of the evidence sources are credible, and this assumption may not always hold.

3.2.1. Generation of the Basic Probability Assignment (BPA)

This is where information modeled as belief functions is extracted from the sensor measurements. The attribute values of the unknown target are at a lower abstraction level and are mapped into a higher information abstraction level in the form of BPAs. The BPAs are generated based on the similarity between the different attribute values and the fuzzy models (membership functions) obtained from the historical/training data. Due to its simplicity, a method similar to the one used in [11, 12, 13] is adopted in this work.

3.2.2. Computation of the Weighted Average BPA

Suppose there are N evidence sources provided by N sensors. For any proposition A, a subset of the frame of discernment, the weighted average mass function is a weighted combination of the confidence pooled from the different evidence sources: it is obtained by summing, over the N sources, each source's mass for A multiplied by that source's normalized reliability degree [20].
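A minimal sketch of the weighted averaging step is given below, assuming each BPA is a dictionary from `frozenset` focal elements to masses and that the weights are the normalized reliability degrees. The function name `weighted_average_bpa` and all numerical values are illustrative assumptions.

```python
def weighted_average_bpa(bpas, weights):
    """Weighted average of several BPAs (dict: frozenset -> mass)."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to one")
    average = {}
    for bpa, weight in zip(bpas, weights):
        for focal, mass in bpa.items():
            average[focal] = average.get(focal, 0.0) + weight * mass
    return average


A, B = frozenset({"A"}), frozenset({"B"})
AB = A | B
m1 = {A: 0.8, AB: 0.2}   # BPA induced by the first attribute
m2 = {B: 0.6, AB: 0.4}   # BPA induced by the second attribute
avg_bpa = weighted_average_bpa([m1, m2], weights=[0.75, 0.25])
print(avg_bpa)
```

Because the weights sum to one, the averaged masses also sum to one, so the result is itself a valid BPA that can be passed to the DS rule of combination.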

3.2.3. Dempster Shafer (DS) Fusion

Having obtained the weighted average evidence, the next step is to apply the traditional DS rule of combination to it N − 1 times, where N is the number of evidence sources [19].
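The repeated self-combination can be sketched as follows, assuming the averaged BPA is combined with itself once for every source beyond the first. The helper names and the masses are illustrative assumptions; `dempster_combine` is a plain implementation of the traditional DS rule.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Dempster's rule for two BPAs given as dict: frozenset -> mass."""
    fused, conflict = {}, 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        common = a & b
        if common:
            fused[common] = fused.get(common, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b
    if not fused:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {s: v / (1.0 - conflict) for s, v in fused.items()}


def fuse_weighted_average(avg_bpa, n_sources):
    """Combine the weighted average BPA with itself n_sources - 1 times."""
    fused = dict(avg_bpa)
    for _ in range(n_sources - 1):
        fused = dempster_combine(fused, avg_bpa)
    return fused


A, B = frozenset({"A"}), frozenset({"B"})
AB = A | B
avg_bpa = {A: 0.60, B: 0.15, AB: 0.25}  # illustrative averaged masses
final_bpa = fuse_weighted_average(avg_bpa, n_sources=2)
print(final_bpa)
```

Each self-combination reinforces the hypothesis that already carries the most averaged mass, which is why the support for A grows relative to the averaged BPA in this example.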

3.3. Decision Making

This essentially consists of the transformation of the belief function and the application of an appropriate decision rule.

3.3.1. Pignistic Transformation

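The final BPA retrieved from the DS fusion cannot be employed directly for decision making; hence, a transformation of the final mass function into a probability distribution is required. A well-known probability transformation is the Pignistic probability transformation of the transferable belief model, defined as [18]

$$BetP(A) = \sum_{\emptyset \neq B \subseteq \Theta} \frac{|A \cap B|}{|B|}\,\frac{m(B)}{1 - m(\emptyset)} \qquad (17)$$

where $\Theta$ is the frame of discernment and $m$ is the final BPA. The ultimate goal of target classification is to assign the unknown target to one of the known classes; thus $|A|$ equals 1, and hence (17) reduces to (18).

$$BetP(A) = \sum_{B \subseteq \Theta,\; A \subseteq B} \frac{1}{|B|}\,\frac{m(B)}{1 - m(\emptyset)} \qquad (18)$$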

We adopted the Pignistic probability for decision making following the justification of its suitability provided in [30].

3.3.2. Decision Rule

Assign the unknown target to the class with the highest Pignistic probability.
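The decision-making block can be sketched as below: each focal element shares its mass equally among its members, and the class with the largest resulting probability is selected. The function names `pignistic` and `decide`, the class labels, and the masses are assumptions made for illustration.

```python
def pignistic(bpa):
    """Pignistic probability of each singleton from a BPA (frozenset -> mass)."""
    empty_mass = bpa.get(frozenset(), 0.0)
    betp = {}
    for focal, mass in bpa.items():
        if not focal:
            continue  # the empty set carries no class
        share = mass / (len(focal) * (1.0 - empty_mass))
        for element in focal:
            betp[element] = betp.get(element, 0.0) + share
    return betp


def decide(bpa):
    """Return the class with the highest Pignistic probability."""
    betp = pignistic(bpa)
    return max(betp, key=betp.get)


final_bpa = {
    frozenset({"a"}): 0.5,
    frozenset({"a", "b"}): 0.3,
    frozenset({"a", "b", "c"}): 0.2,
}
betp = pignistic(final_bpa)
print(betp, decide(final_bpa))
```

Here class "a" collects its own mass plus an equal share of every compound set that contains it, so it is the selected class.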

3.3.3. Selection of Spread Factor

The following example illustrates the impact of the spread factor on the accuracy of the proposed model on the Iris dataset, using only reliability as a weighting factor. Three models are built using three different values of the spread factor, as shown in Figure 6 with the triangular fuzzy numbers (TFN1 to TFN3). Model1, Model2, and Model3 can be viewed as the lower, the mid, and the upper membership functions, respectively. The accuracies obtained for the three values of the spread factor by applying 5-fold cross-validation 10 times are shown in Table 1. The focus of this study is not to deploy a Type-2 fuzzy set as the likelihood estimation model but to present the Type-2 fuzzy set as part of the intuition behind this study.

Model1: , ,

Model2: , ,

Model3: , ,

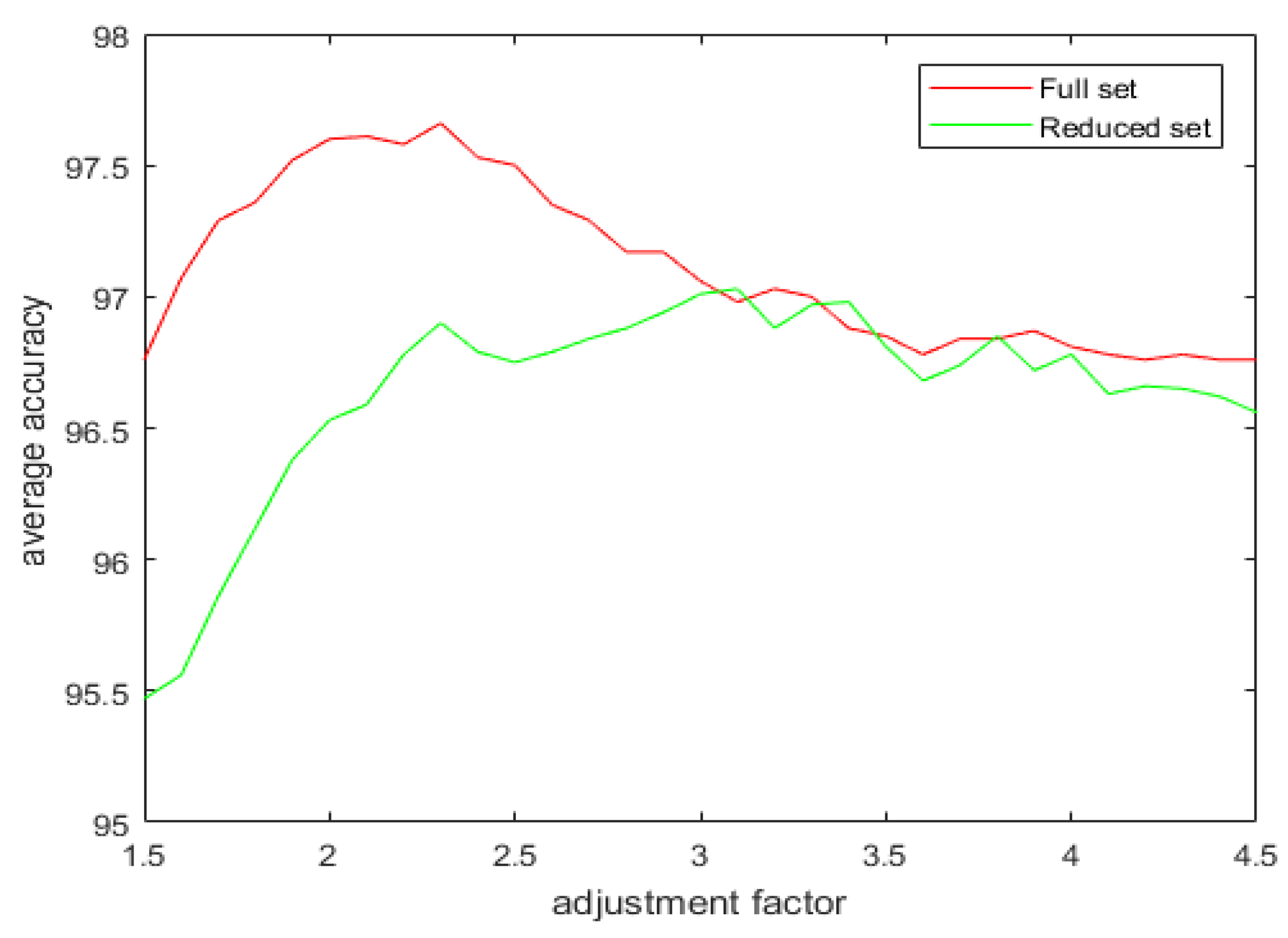

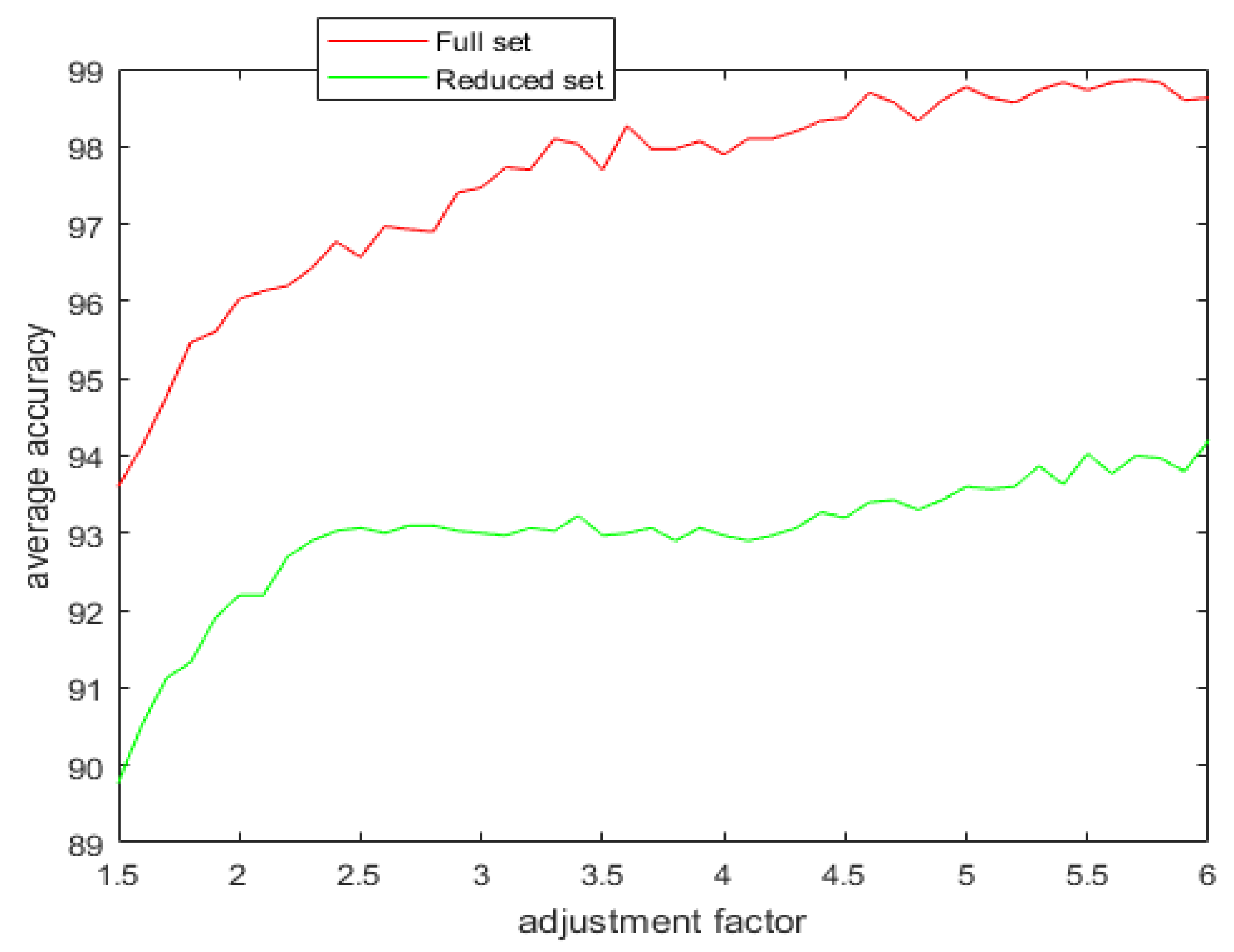

Having established that changing the value of the spread factor alters the decision accuracy of the DS model, the next question is how to select its value. The training set is used to determine a suitable value. With 5-fold cross-validation, we increase the spread factor from a lower bound to an upper bound with a fixed step size, and the corresponding accuracies on the training set are recorded. The value of the spread factor that returns the maximum accuracy is selected to build the fuzzy models.
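The selection procedure amounts to a one-dimensional grid search. The sketch below assumes a hypothetical callback `cv_accuracy(spread)` that returns the mean cross-validated accuracy of the DS classifier on the training set for a given spread factor; the candidate grid and the toy accuracy curve are made up for the example and are not the values used in the study.

```python
def select_spread(candidates, cv_accuracy):
    """Return the candidate spread with the highest cross-validated accuracy.

    Ties are resolved in favor of the first (smallest) candidate encountered.
    """
    best_spread, best_accuracy = None, float("-inf")
    for spread in candidates:
        accuracy = cv_accuracy(spread)
        if accuracy > best_accuracy:
            best_spread, best_accuracy = spread, accuracy
    return best_spread, best_accuracy


def toy_cv_accuracy(spread):
    """Stand-in for the real 5-fold evaluation: a curve that peaks at 2.5."""
    return 1.0 - (spread - 2.5) ** 2 / 10.0


# Hypothetical grid: 1.0, 1.5, ..., 4.0.
candidates = [1.0 + 0.5 * step for step in range(7)]
best_spread, best_accuracy = select_spread(candidates, toy_cv_accuracy)
print(best_spread, best_accuracy)
```

In practice `toy_cv_accuracy` would be replaced by a routine that rebuilds the fuzzy models with the given spread on each training fold and scores the held-out fold. Since this search runs only at the modeling stage, its cost is incurred offline.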

3.4. Selection of Evidence Source

In the proposed framework, every attribute measurement is considered as a source of evidence that induces a corresponding belief function. The implication is that using every attribute obtained from the signature and kinematic sets of the targets for characterization will unavoidably lead to high processing costs [31]. The long processing time comes from the combination of the various belief functions using the DS rule of combination. In addition to high processing costs, conflict in evidential reasoning can also be attributed to a large number of evidence sources [23]. Reducing the number of sources is analogous to the challenge of dimensionality reduction in conventional machine learning algorithms.

Dimensionality reduction is one of the most well-known strategies for removing irrelevant and redundant features. The strategies can be broadly categorized into feature extraction and feature selection [32]. In feature extraction, the original feature space is transformed into a new feature space with a reduced dimension. In feature selection, by contrast, a subset of the original feature space that enhances the performance of the machine learning algorithm is selected. In this study, feature selection is preferred because it preserves interpretability. As a result, we incorporate a preprocessing stage that reduces the cardinality of the measurement set based on the significance of each attribute in relation to its discriminatory capability for the various target classes. Only a set of significant attributes is selected as sources of information to produce the basic probability assignment (BPA). In traditional machine learning, feature selection can be subdivided into two groups [33]:

- Filter: Features are ranked based on evaluation criteria that are independent of the learning algorithm. Filter methods have proven to be computationally efficient for feature subset selection.
- Wrapper: The ranking of individual features utilizes a learning algorithm. Wrapper methods are more computationally expensive than filter methods.

Suppose there are N information sources. The proposed selection method is a filter-based approach that utilizes the average pairwise discordance index (APDI). Its steps are presented as pseudocode in Algorithm 1.

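Algorithm 1: Feature Selection Using APDI

INPUT: A set of N information sources

OUTPUT: Selected set of information sources S

- 1: Initialize S as the empty set
- 2: for each information source j do
- 3: Compute the APDI of source j
- 4: end for
- 5: Compute the mean of the APDI values
- 6: for each information source j do
- 7: if the APDI of source j is greater than or equal to the mean then
- 8: Add source j to S
- 9: else
- 10: Leave S unchanged
- 11: end if
- 12: end for
- 13: return S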
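Algorithm 1 can be sketched in a few lines, assuming the APDI of every source has already been computed at the modeling stage. The function name `select_sources`, the source names, the APDI values, and the choice to keep sources whose APDI equals the mean are assumptions made for illustration.

```python
def select_sources(apdi_by_source):
    """Keep the sources whose APDI is at least the mean APDI.

    apdi_by_source: dict mapping a source name to its APDI value.
    Returns the selected source names in their original order.
    """
    if not apdi_by_source:
        return []
    mean_apdi = sum(apdi_by_source.values()) / len(apdi_by_source)
    return [name for name, value in apdi_by_source.items() if value >= mean_apdi]


# Made-up APDI values for four attributes (information sources).
apdi_by_source = {"s1": 0.55, "s2": 0.40, "s3": 0.90, "s4": 0.92}
selected = select_sources(apdi_by_source)
print(selected)
```

Because the criterion depends only on statistics of the training data and not on the DS classifier itself, it behaves as a filter method: sources with below-average discriminatory power are dropped before any BPA is generated.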