Abstract

Although fingerprint-based systems are among the most commonly used biometric systems, they suffer from a critical vulnerability to presentation attacks (PAs). Therefore, several approaches based on fingerprint biometrics have been developed to increase the robustness against PAs. We propose an alternative approach based on the combination of fingerprint and electrocardiogram (ECG) signals. An ECG signal has advantageous characteristics that make it difficult to replicate. Combining a fingerprint with an ECG signal is therefore a promising way to reduce the impact of PAs on biometric systems. We also propose a novel end-to-end deep learning-based neural architecture that fuses a fingerprint and an ECG signal to improve PA detection in fingerprint biometrics. Our model uses state-of-the-art EfficientNets for generating a fingerprint feature representation. For the ECG, we investigate three different architectures based on fully-connected layers (FC), a 1D-convolutional neural network (1D-CNN), and a 2D-convolutional neural network (2D-CNN). The 2D-CNN converts the ECG signals into an image and uses inverted MobileNet-v2 layers for feature generation. We evaluated the method on a multimodal dataset, that is, a customized fusion of the LivDet 2015 fingerprint dataset and ECG data from real subjects. Experimental results reveal that this architecture yields a better average classification accuracy compared to a single fingerprint modality.

1. Introduction

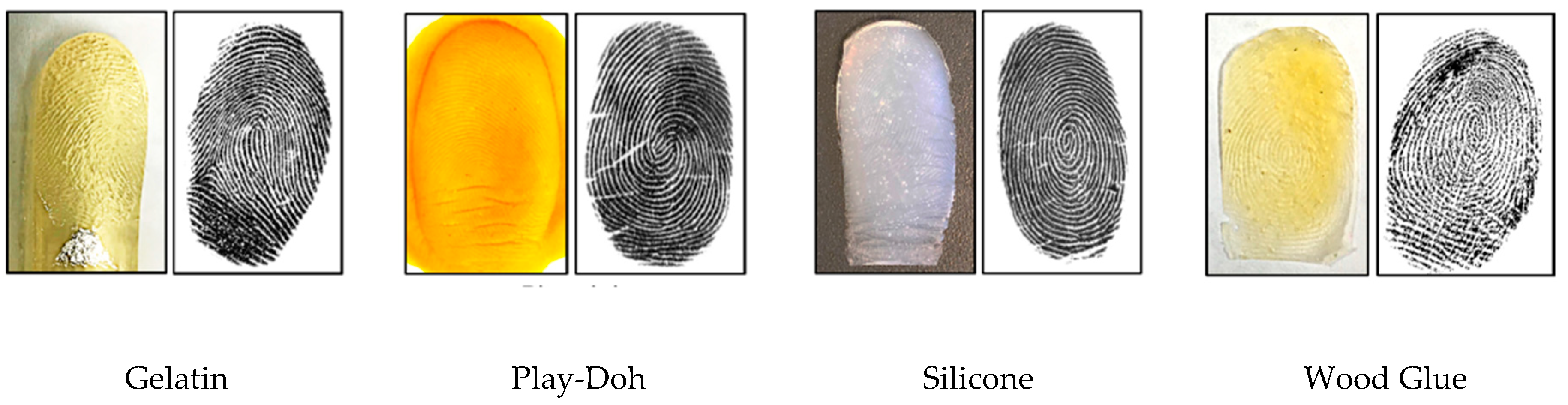

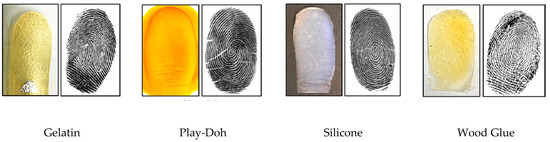

Biometric systems in which the physiological or behavioral characteristics of humans, e.g., fingerprints, electrocardiogram (ECG), gait, iris, and face, are captured and utilized for authentication are increasingly used. Fingerprints are among the most extensively employed biometrics owing to their several advantages, such as acceptability, collectability, and high authentication accuracy [1]. The widespread availability of fingerprint-based systems has made them vulnerable to numerous attacks, mainly presentation attacks (PAs). ISO/IEC 30107 defines a PA as the presentation of a fraudulent sample, such as an artefact or a fake biological sample, to an input biometric sensor with the intention of circumventing the system policy [2]. An artefact can be an artificial or synthetic fingerprint presented as a copy of a real fingerprint, which is also known as a spoof [3]. Figure 1 shows examples of artefact fingerprint samples created using different artificial materials, such as gelatin, Play-Doh, and silicone [4]. For fake biological sample-based attacks, a severed or altered finger, or a finger of a cadaver, is presented to deceive a biometric sensor. The automated process used for detecting a PA in a biometric system is called PA detection (PAD) [2]. The aim of PAD is to discriminate bona fide (i.e., real or live) biometric samples from PA (i.e., artefact) samples.

Figure 1.

Examples of fingerprint artefacts fabricated using different materials. A real image of a fabricated fingerprint is shown on the left and a scanned image using a fingerprint sensor is shown on the right [4].

Fingerprint PAD methods can be divided into hardware- and software-based methods [5]. In a hardware-based method, additional hardware devices are added to the biometric system to capture additional characteristics indicating the liveness of the fingerprint, such as blood pressure in the fingers, skin transformation, and skin odor [6,7,8]. With software-based methods, in contrast, PAs are detected by applying image processing techniques to the fingerprint images. The software-based techniques for fingerprint PAD studied in the literature can be grouped into handcrafted feature- and deep-learning-based techniques. In handcrafted feature-based techniques, expert knowledge is required to formulate the feature descriptors, whereas in deep-learning-based techniques, no such expert knowledge is required.

The local binary pattern (LBP) is one of the earliest and most common handcrafted techniques that has been investigated for fingerprint liveness detection, in which LBP histograms are applied to extract the texture liveness information using binary coding [9]. Measuring the loss of information while fabricating fake fingerprints is utilized in local phase quantization (LPQ) to differentiate between bona fide and artefact fingerprint images [10]. The Weber local descriptor (WLD) is applied for fingerprint liveness detection, in which 2D histograms representing differential excitation and orientation features are applied [11]. Combining these local descriptors such as WLD with LPQ [11], or WLD with LBP [12], improves the accuracy of detecting the liveness of a fingerprint. A new local contrast phase descriptor is proposed for fingerprint liveness detection as 2D histogram features composed of spatial and phase information [13].

Deep learning techniques have recently proven their superiority over traditional approaches in image classification problems [14,15]. Deep learning techniques have also proven their advantages on 1D signals, including ECG [16,17,18,19]. Several studies have investigated the utilization of deep learning techniques in biometrics systems [20,21,22], and for fingerprint PAD [23,24,25,26]. Convolutional neural networks (CNNs) have exhibited continuous improvements for spoof detection compared with handcrafted techniques. An early work that introduced CNNs for fingerprint PAD [23] employed transfer learning using a pre-trained CNN model for detecting fake fingerprints, which achieved the best results in the LivDet 2015 competition [27]. Another use of deep learning for fingerprint PAD is presented in [28], where local patches of minutiae have been extracted and processed using a well-known CNN model called Inception-V3, which achieved state-of-the-art accuracy in fingerprint liveness detection. A CNN model with improved residual blocks was proposed to balance between the accuracy and the convergence time in a fingerprint liveness system [29], in which local patches are extracted using the statistical histogram and center of gravity. This approach won first place in the LivDet 2017 competition. A small CNN network was proposed to overcome the difficulties in the deployment of a fingerprint liveness detection system in mobile systems by utilizing the structure of the SqueezeNet fire module and removing the fully connected layers [24].

Recently, a new group of fingerprint PAD methods has also been considered, which falls outside of software- and hardware-based approaches and is based on the fusion of fingerprint with a more secure biometric modality [30,31]. Several researchers investigated the fusion of fingerprints with a variety of biometric modalities, such as face, ECG, and fingerprint dynamics, to improve the accuracy and security of biometric systems [19,32,33,34,35,36,37,38,39,40,41]. The fusion of an ECG with other biometric modalities [37,38,42,43,44,45,46] has also received attention because the ECG has certain biometric advantages, such as a natural inherence of the liveness characteristic and a continuous authentication over time [47]. The crucial location of the heart in the body enables this biometric to be used as a secure modality. Moreover, a high-quality ECG can be captured from fingers, which makes this modality a convenient candidate for a multimodal fusion with fingerprints [48]. These characteristics render ECG biometrics robust against PAs and provide them with advantages over other traditional biometrics. Several studies have considered the fusion of fingerprints and an ECG for PAD in fingerprint biometrics. A sequential score level fusion between an ECG and a fingerprint was proposed in [37]. Later, this approach was improved to be appropriate for fingerprint PAD in an authentication system [38]. Another study on fusing a fingerprint with an ECG was proposed in [36], in which the fusion is achieved at the score level by applying an automatic updating of the ECG templates. In this study, the authors fused an ECG matching score with the liveness score to evaluate the liveness of the fingerprint sample, demonstrating a good performance.

Several recent studies have proposed utilizing a CNN to deal with two-branch networks for processing video data [49,50,51]. A CNN has been introduced into a multimodal biometric system combining an ECG with a fingerprint [19,52], in which the CNN is used for extracting ECG and fingerprint features. Although a CNN was used in these studies, they did not achieve an end-to-end fusion: the CNN is used only as a feature extractor, and the classification is carried out by an independent classifier. Furthermore, these studies focused on authentication performance rather than fingerprint PAD.

In this study, we propose a novel architecture for fusing a fingerprint and an ECG to detect and prevent fingerprint PAs. The proposed architecture is learnable end-to-end from the signal level to the final decision. The proposed method is intended to achieve a high degree of robustness against PAs targeting the fingerprint modality. We evaluated the proposed system using a customized dataset composed of fingerprints and ECG signals.

The main contributions of this paper are listed as follows:

- Proposal of a novel end-to-end neural fusion architecture for fingerprints and ECG signals.

- A novel application of state-of-the-art EfficientNets for fingerprint PAD.

- Proposal of a 2D-convolutional neural network (2D-CNN) architecture for converting 1D ECG features into 2D images, yielding a better representation for ECG features compared to standard models based on fully-connected layers (FC) and 1D-convolutional neural networks (1D-CNNs).

The remainder of this paper is organized as follows. In Section 2, we introduce our proposed end-to-end deep learning approaches. In Section 3, we present the datasets and experimental setup applied. In Section 4, we present experimental results and discussions. Finally, in Section 5, we provide some concluding remarks and suggest areas of future study.

2. Proposed Methodology

Assume a fingerprint dataset $D = \{(x_i^{f}, y_i)\}_{i=1}^{N}$ with $N = N_a + N_b$ samples (where $N_a$ is the number of artefact samples and $N_b$ is the number of bona fide samples), where $x_i^{f}$ represents the input fingerprint image and $y_i \in \{0, 1\}$ is a binary label indicating whether a fingerprint is an artefact or bona fide (real). The aim of ordinary fingerprint PAD is to detect whether a fingerprint image is a PA (artefact) and differentiate it from a bona fide fingerprint sample. In this study, we consider ECG signals as an additional input modality to strengthen the fingerprint PAD system. To this end, the dataset becomes a set of triplets $D = \{(x_i^{f}, x_i^{e}, y_i)\}_{i=1}^{N}$, where $x_i^{f}$ is the fingerprint image and $x_i^{e}$ is the ECG signal.

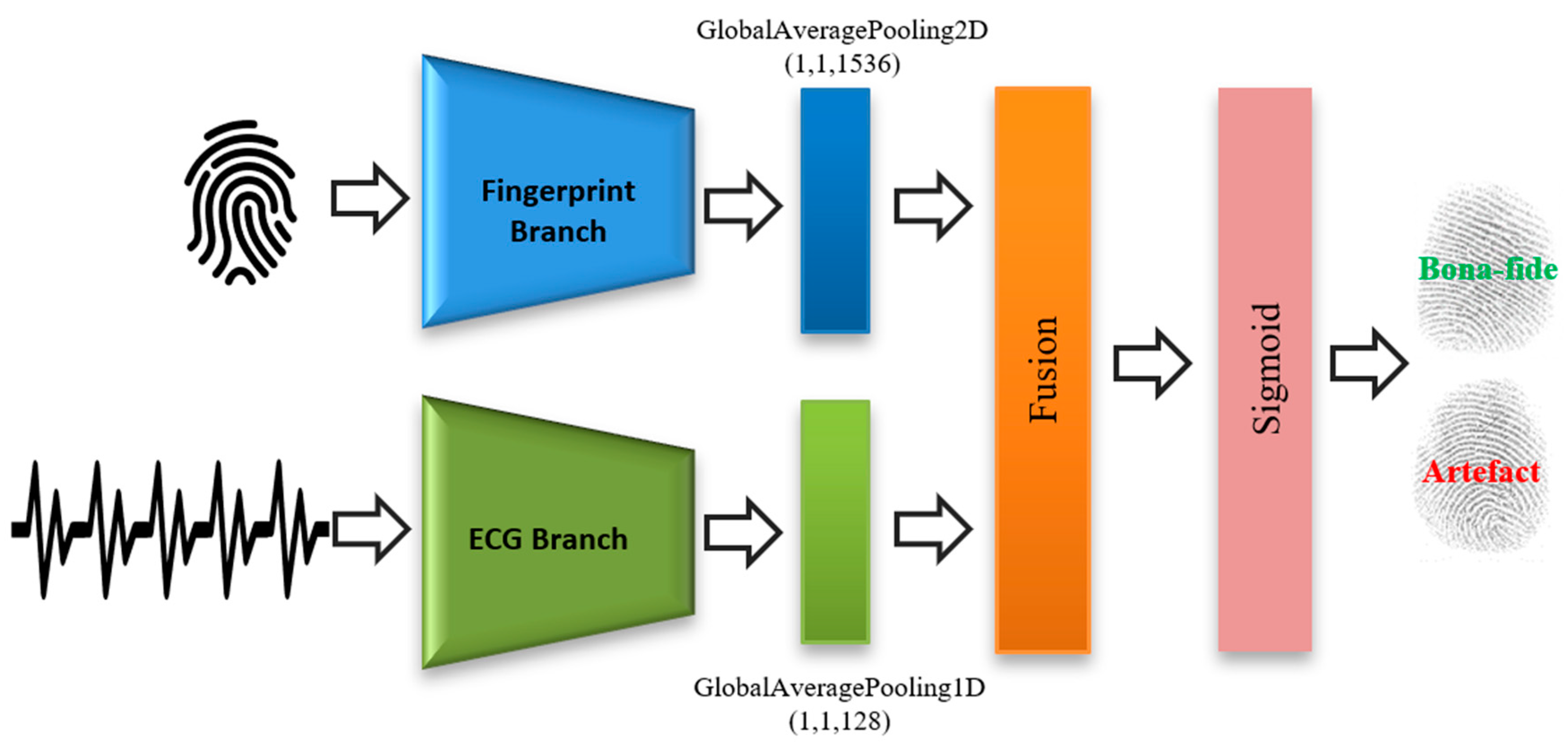

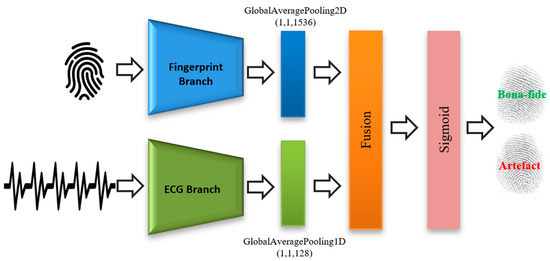

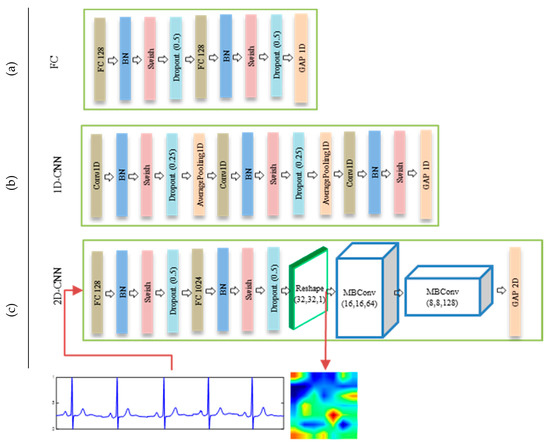

Figure 2 shows the proposed fusion approach, which is composed of three parts, i.e., the fingerprint branch, the ECG branch, and a fusion module. Detailed descriptions for these branches are provided in the next subsections.

Figure 2.

Overall architecture of the proposed end-to-end convolutional neural network-based (CNN) fusion architecture. ECG, electrocardiogram.

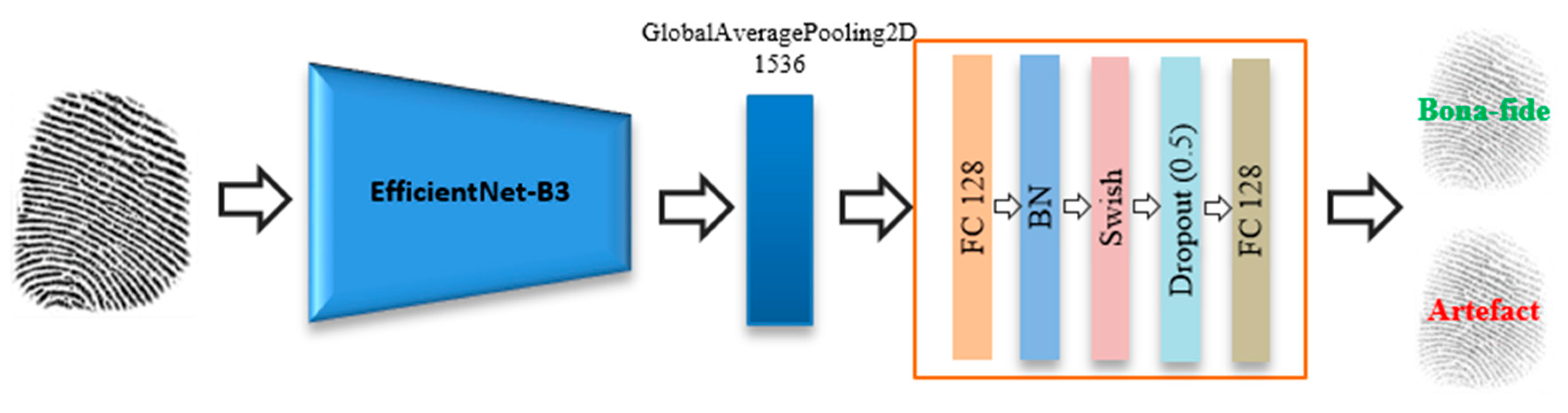

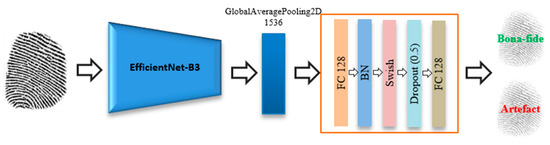

2.1. Fingerprint Branch

A fingerprint branch uses state-of-the-art EfficientNets [53] to obtain the feature representations of a fingerprint, as shown in Figure 3. EfficientNets are a family of models that were recently developed by the Google Brain team by applying a new model scaling method for balancing the depth, width, and resolution of the CNNs [53]. Their scaling method uniformly scales the dimensions of a network using a simple and efficient compound coefficient. The compound scaling method enables a baseline CNN network to be scaled up with respect to the available resources while maintaining a high efficiency and accuracy. EfficientNets include mobile inverted bottleneck convolution (MBConv) as the basic building block [54]. In addition, this network uses an attention mechanism based on squeeze excitation (SE) to improve feature representations. This attention layer starts by applying a global average pooling (GAP) after each block. This operation is then followed by a fully-connected layer (with weight $W_1$) to reduce the number of dimensions by a ratio $r$. The resulting feature vector is then used to calibrate the feature maps of each channel ($V$) using a channel-wise scale operation with an extra fully-connected layer with weight $W_2$. SE operates as shown below:

$$ s = \sigma\left(W_2\,\delta\left(W_1\,\mathrm{GAP}(V)\right)\right) \tag{1} $$

$$ \tilde{V} = s \otimes V \tag{2} $$

where $s$ is the scaling factor, $\sigma$ is the sigmoid function, $\delta$ is the nonlinearity applied after the first fully-connected layer, $\otimes$ refers to the channel-wise multiplication, and $V$ represents the feature maps obtained from a particular layer of the EfficientNet.
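The squeeze-and-excitation steps described above (global average pooling, a reducing fully-connected layer, an expanding fully-connected layer, and channel-wise rescaling) can be illustrated with a minimal pure-Python sketch. The weights, the toy feature maps, the reduction ratio of 2, and the use of ReLU as the intermediate nonlinearity are assumptions made for illustration only; they are not taken from the EfficientNet implementation used in this study.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def squeeze_excite(V, W1, W2):
    """Apply a squeeze-and-excitation gate to C feature maps of size HxW.

    V  : list of C channels, each a list of H rows with W values
    W1 : (C/r) x C weights of the reducing fully-connected layer
    W2 : C x (C/r) weights of the expanding fully-connected layer
    """
    # Squeeze: global average pooling gives one value per channel.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in V]
    # Excitation: reduce, apply a nonlinearity (ReLU assumed), expand, gate.
    h = [max(0.0, a) for a in matvec(W1, z)]
    s = [sigmoid(a) for a in matvec(W2, h)]
    # Scale: multiply every value of channel c by its gate s[c].
    V_scaled = [[[s_c * x for x in row] for row in ch] for s_c, ch in zip(s, V)]
    return V_scaled, s

# Toy example: C = 4 channels of size 2x2, reduction ratio r = 2 (hypothetical).
V = [[[1.0, 2.0], [3.0, 4.0]],
     [[0.0, 0.0], [0.0, 0.0]],
     [[2.0, 2.0], [2.0, 2.0]],
     [[-1.0, 1.0], [-1.0, 1.0]]]
W1 = [[0.5, 0.1, -0.2, 0.3],
      [-0.4, 0.2, 0.6, 0.1]]
W2 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0], [0.0, 0.0]]
V_scaled, s = squeeze_excite(V, W1, W2)
```

Each gate lies strictly between 0 and 1, so the block can only attenuate channels relative to one another; it never changes the spatial layout of a feature map.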

Figure 3.

Flowchart of a fingerprint branch.

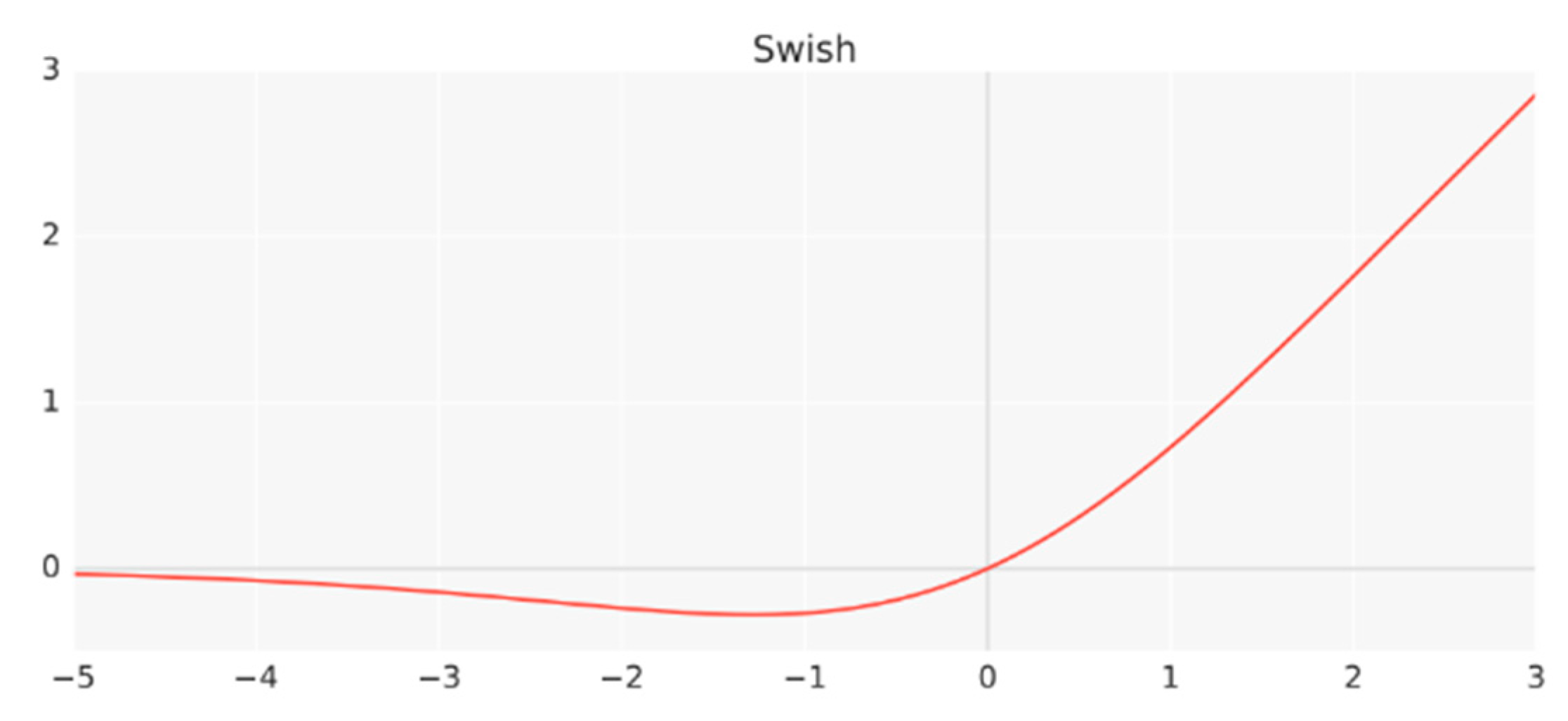

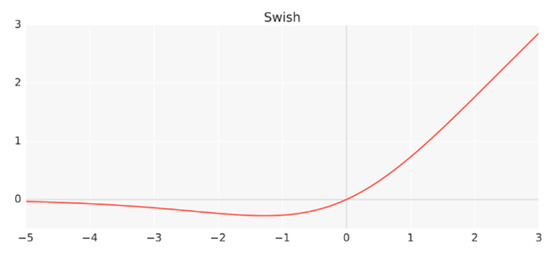

Furthermore, a novel activation function called Swish is used by an EfficientNet, which is essentially the sigmoid function multiplied by $x$ according to Equation (3). Figure 4 shows the behavior of the following Swish activation function:

$$ \mathrm{Swish}(x) = x \cdot \sigma(x) = \frac{x}{1 + e^{-x}} \tag{3} $$

Figure 4.

Swish activation function [55].
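As a small illustration of the Swish function plotted in Figure 4, the following sketch evaluates it directly as the input multiplied by its sigmoid. The numerically stable form of the sigmoid is an implementation choice made here, not something prescribed by the paper.

```python
import math

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)          # avoids overflow for large negative x
    return e / (1.0 + e)

def swish(x):
    """Swish activation: the input multiplied by its sigmoid."""
    return x * sigmoid(x)

# Sample the curve at a few points.
samples = {x: swish(x) for x in (-5.0, -1.0, 0.0, 1.0, 5.0)}
```

Unlike ReLU, Swish is smooth and slightly negative for negative inputs, approaching zero as the input becomes very negative and approaching the identity for large positive inputs.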

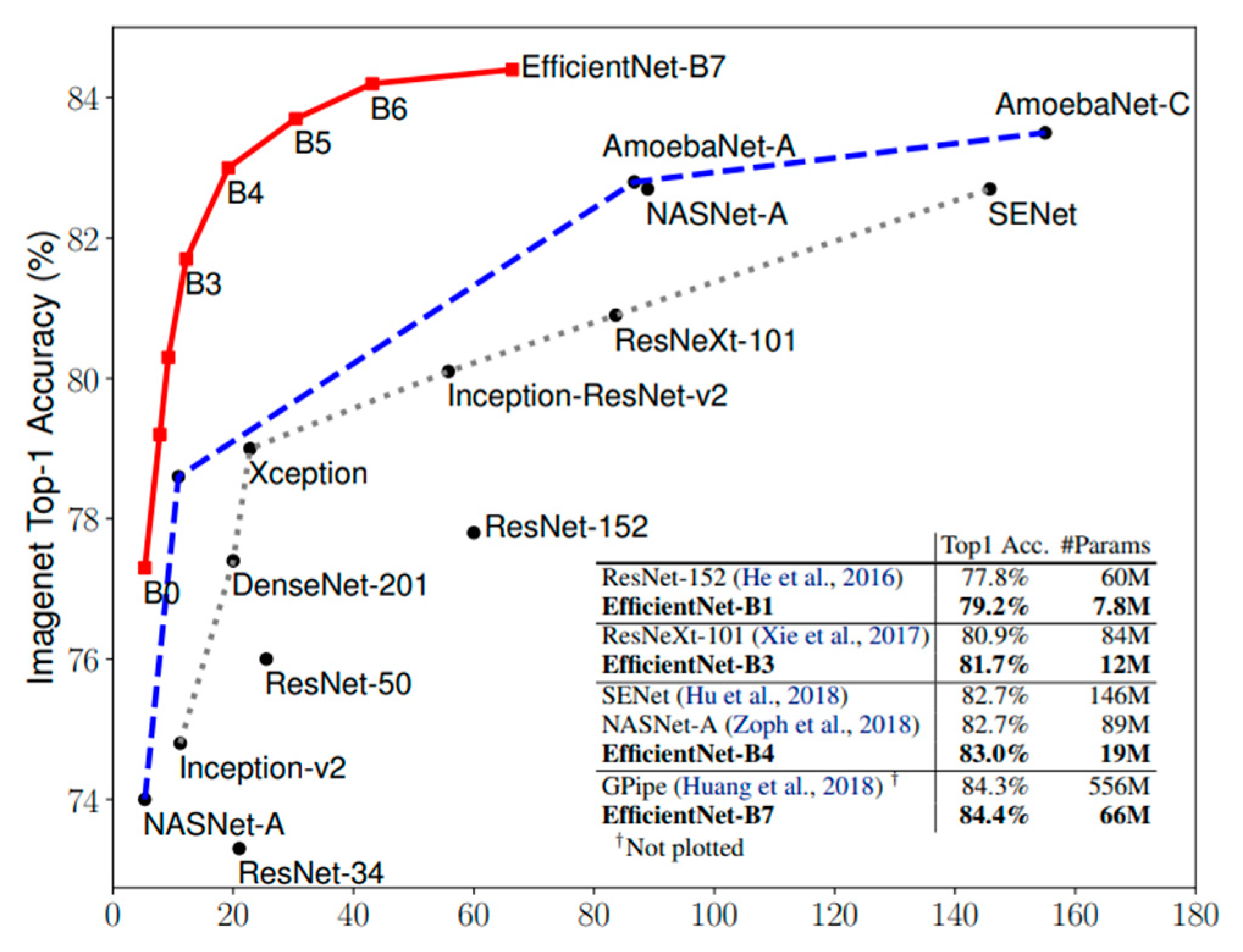

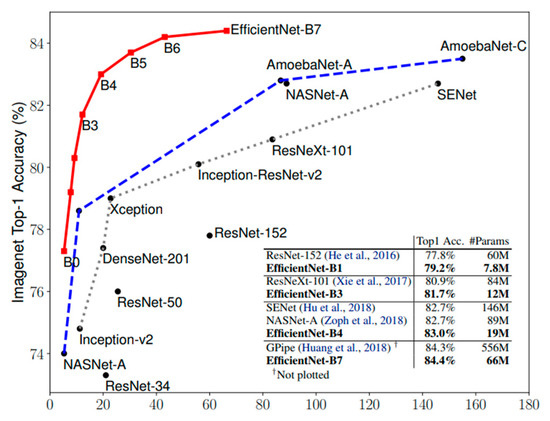

EfficientNet models surpass the accuracy of state-of-the-art CNN approaches on the ImageNet dataset [56] while requiring fewer parameters and FLOPS, as shown in Figure 5. In this study, we investigate EfficientNet-B3 for the feature representation of a fingerprint. To the best of our knowledge, this is the first time EfficientNets have been used for fingerprint PAD.

Figure 5.

Comparison among EfficientNet and other popular CNN models in terms of ImageNet accuracy vs. model size [53].
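The compound scaling rule mentioned earlier in this subsection can be sketched numerically. In the EfficientNet paper [53], depth, width, and input resolution are scaled as powers of a single compound coefficient; the base constants below (1.2, 1.1, 1.15) are the values reported there as we recall them and should be treated as assumptions here. The sketch does not claim which coefficient corresponds to EfficientNet-B3.

```python
# Base multipliers for depth, width, and resolution (assumed from [53]).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15

def compound_scale(phi):
    """Return (depth, width, resolution) multipliers for coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi

def flops_factor(phi):
    """Approximate FLOPS growth: linear in depth, quadratic in width and resolution."""
    depth, width, resolution = compound_scale(phi)
    return depth * width ** 2 * resolution ** 2

# Hypothetical coefficients, for illustration only.
for phi in (0, 1, 2, 3):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPS x{flops_factor(phi):.2f}")
```

Because the product of the depth multiplier, the squared width multiplier, and the squared resolution multiplier is close to 2, each unit increase of the coefficient roughly doubles the computational cost.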

During the experiments, we truncated EfficientNet-B3 by removing its 1000-class softmax classification layer and used the output of the “swish_78” layer as an input to a fusion module, which has the task of fusing the fingerprint and ECG features, as described later.

2.2. ECG Branch

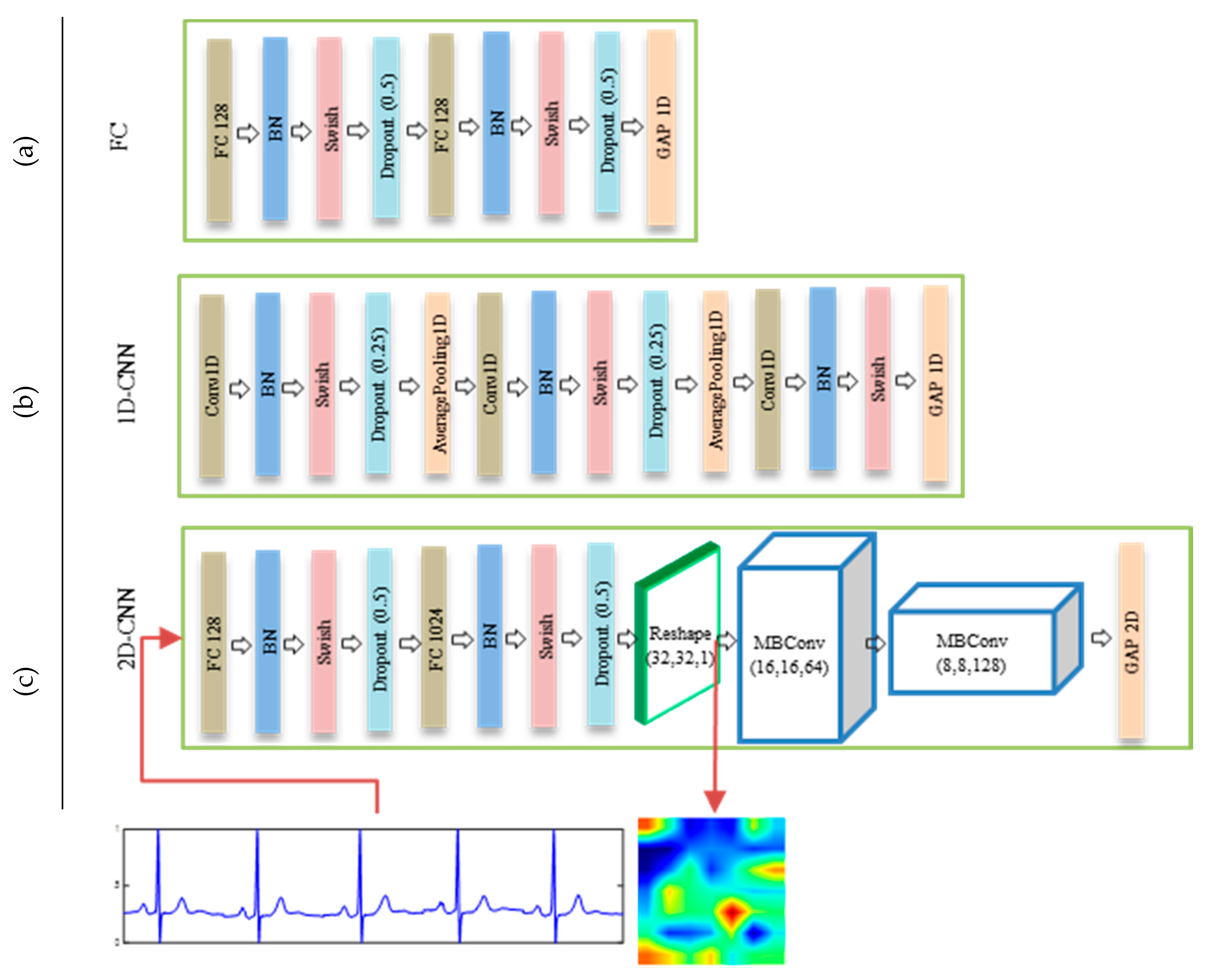

For the ECG branch, we propose three different feature representation architectures, FC, 1D-CNN, and 2D-CNN, as shown in Figure 6. The FC architecture is composed of simple fully-connected layers followed by batch normalization (BN), a Swish activation function, and dropout regularization to reduce overfitting, as presented in Figure 6a. The second architecture, 1D-CNN, is based on the application of 1D convolution operations on the ECG signals. With this architecture, the ECG signals are fed through three consecutive 1D convolutional layers, the first two of which are followed by a BN, Swish, dropout (0.25), and 1D average pooling, and the last of which is followed by a BN, Swish, and 1D average pooling, as shown in Figure 6b. The last architecture, which is one of the main contributions of the present paper, is based on the idea of converting an ECG feature into a 2D feature image using a generator module and then processing the resulting image using a standard 2D CNN network, as shown in Figure 6c. In particular, this architecture learns and reshapes a 1D ECG feature into a 2D image using fully connected layers. The resulting image is then fed to two consecutive MBConv blocks to obtain the final 2D representations. Transforming a 1D ECG feature into an image makes it possible to apply powerful 2D convolution and pooling operations when learning the appropriate ECG features. During the experiments, we show that this architecture allows the generation of a better representation compared to those based on an analysis of 1D features.

Figure 6.

Details of ECG feature extraction architectures in ECG branch: (a) FC, (b) 1D-CNN, and (c) 2D-CNN.
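The generator step of the 2D-CNN branch (Figure 6c) can be sketched as a fully-connected projection followed by a reshape. The sizes used below (a 128-dimensional feature projected to 1024 values and reshaped to 32 × 32) follow the configuration reported later in Section 4.5; the random weights, the row-major layout, and the omission of BN and the activation are simplifying assumptions made for illustration only.

```python
import random

def dense(x, W, b):
    """Fully-connected layer: one output per row of W."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def to_image(vec, side):
    """Reshape a flat vector of length side*side into a side x side image (row-major)."""
    if len(vec) != side * side:
        raise ValueError("vector length must equal side * side")
    return [vec[r * side:(r + 1) * side] for r in range(side)]

rng = random.Random(0)
# Hypothetical 128-dimensional ECG feature (output of the first fc layer).
ecg_feat = [rng.gauss(0.0, 1.0) for _ in range(128)]
# Second fc layer with 1024 outputs; weights are random placeholders.
W = [[rng.gauss(0.0, 0.05) for _ in range(128)] for _ in range(1024)]
b = [0.0] * 1024

vec = dense(ecg_feat, W, b)
image = to_image(vec, 32)   # 32 x 32 single-channel feature image
```

In the trained network the projection weights are learned, so the layout of the image is whatever arrangement best serves the subsequent MBConv blocks rather than a fixed transform of the signal.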

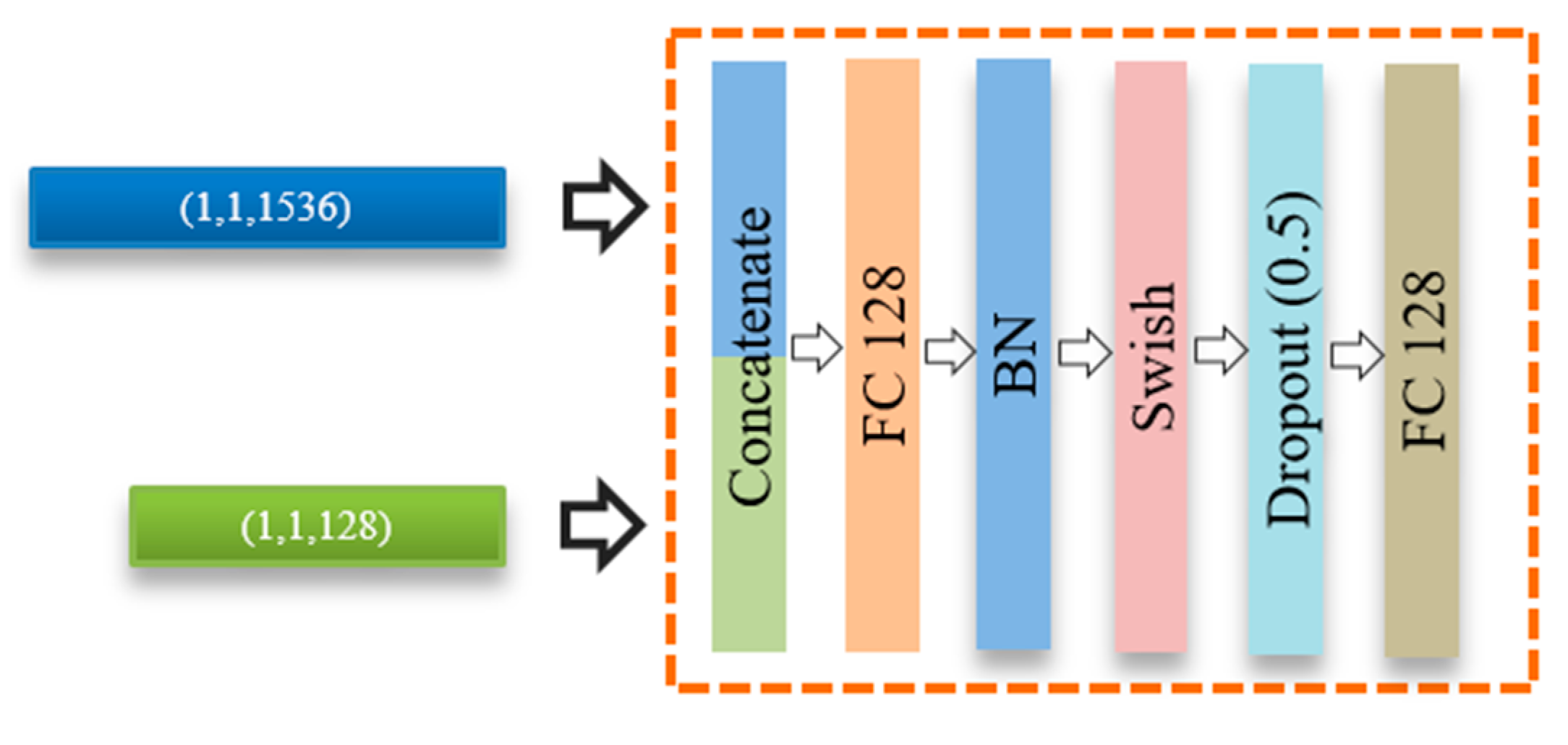

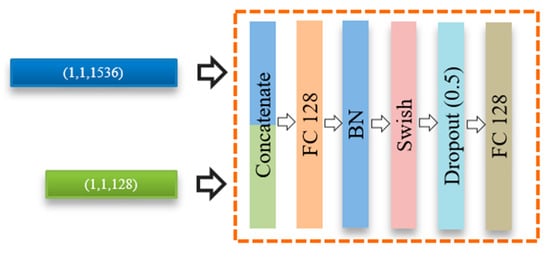

2.3. Fusion Module

The feature representations obtained from both fingerprint and ECG branches are further processed using a fusion module. This fusion module is composed of a sequence of layers, as shown in Figure 7. First, the feature vector of the ECG is concatenated with the fingerprint feature vector to produce a single global feature vector. The concatenated feature is fed to an additional fully connected layer followed by a BN, Swish activation function, and dropout (0.5) regularization. Finally, the output of this module is fed to a binary classification layer using a sigmoid activation function to determine the final fingerprint class, i.e., an artefact or bona fide.

Figure 7.

Structure of the fusion module.
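A minimal inference-time sketch of the fusion module is given below: concatenation, one fully-connected layer with Swish, and a sigmoid output. BN and dropout are omitted for brevity (dropout is inactive at inference and BN reduces to an affine transform), and the feature sizes and random weights are hypothetical placeholders rather than the values used in the experiments.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def swish(z):
    return z * sigmoid(z)

def dense(x, W, b):
    """Fully-connected layer: one output per row of W."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def fusion_forward(fp_feat, ecg_feat, W_h, b_h, w_out, b_out):
    """Fuse both feature vectors and return the sigmoid output of the classifier."""
    z = list(fp_feat) + list(ecg_feat)              # concatenation
    h = [swish(a) for a in dense(z, W_h, b_h)]      # hidden fully-connected layer
    logit = dense(h, [w_out], [b_out])[0]           # binary classification layer
    return sigmoid(logit)

rng = random.Random(0)
fp_feat = [rng.uniform(-1, 1) for _ in range(8)]    # hypothetical fingerprint feature
ecg_feat = [rng.uniform(-1, 1) for _ in range(4)]   # hypothetical ECG feature
W_h = [[rng.uniform(-0.5, 0.5) for _ in range(12)] for _ in range(6)]
b_h = [0.0] * 6
w_out = [rng.uniform(-0.5, 0.5) for _ in range(6)]

p = fusion_forward(fp_feat, ecg_feat, W_h, b_h, w_out, 0.0)
```

Because the concatenation happens before the trainable layers, gradients from the final decision flow back into both branches, which is what makes the architecture trainable end-to-end.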

2.4. Network Optimization

As mentioned previously, the complete architecture proposed in this study is learnable end-to-end using the backpropagation algorithm. If we define the output of the sigmoid function in the final layer of the trained network as $\hat{y}_i$, then the network output follows a Bernoulli distribution. The weights of the network $\theta$, including those of the fingerprint and ECG branches, can be determined by maximizing the following likelihood function:

$$ L(\theta) = \prod_{i=1}^{N} \hat{y}_i^{\,y_i} \left(1 - \hat{y}_i\right)^{1 - y_i} \tag{4} $$

which is equivalent to minimizing the following negative log-likelihood function:

$$ \ell(\theta) = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right] \tag{5} $$

The loss function in (5) is usually called a cross-entropy loss function. To optimize this loss, we use the RMSProp optimization algorithm proposed by Hinton [57], which is one of the most common adaptive gradient algorithms. It divides the gradient by a running average of its recent magnitude as follows:

$$ E[g^2]_t = \beta\, E[g^2]_{t-1} + (1 - \beta)\, g_t^2 \tag{6} $$

$$ \theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{E[g^2]_t}}\, g_t \tag{7} $$

where $E[g^2]_t$ represents a moving average of the squared gradients at iteration $t$, and $g_t$ is the gradient of the loss function with respect to the weights of the network $\theta$. Parameters $\eta$ and $\beta$ are the learning rate and the moving average parameter, respectively. During the experiments, parameter $\beta$ is set to its default value, whereas $\eta$ is initially set to 0.0001 and is multiplied by a factor of 1/10 every 20 epochs.
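The cross-entropy loss and the RMSProp update can be illustrated on a toy one-parameter logistic model. This is only a sketch: the data, the toy learning rate of 0.01, the moving average parameter of 0.9, and the small constant added to the denominator for numerical stability are all assumptions for illustration and are not the settings of the full network.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy (negative Bernoulli log-likelihood)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)   # guard against log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)

def rmsprop_step(theta, grad, avg_sq, lr, beta=0.9, eps=1e-8):
    """One RMSProp update for a scalar parameter (beta and eps are assumed values)."""
    avg_sq = beta * avg_sq + (1.0 - beta) * grad ** 2
    theta = theta - lr * grad / (math.sqrt(avg_sq) + eps)
    return theta, avg_sq

# Toy, linearly separable data for the model p = sigmoid(w * x).
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]

w, avg_sq = 0.0, 0.0
initial_loss = bce(ys, [sigmoid(w * x) for x in xs])
for _ in range(100):
    probs = [sigmoid(w * x) for x in xs]
    # Gradient of the mean cross-entropy with respect to w.
    grad = sum((p - y) * x for p, y, x in zip(probs, ys, xs)) / len(xs)
    w, avg_sq = rmsprop_step(w, grad, avg_sq, lr=0.01)
final_loss = bce(ys, [sigmoid(w * x) for x in xs])
```

Dividing by the running root-mean-square of the gradient makes the effective step size roughly independent of the gradient scale, which is why a single learning rate can serve layers whose gradients differ by orders of magnitude.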

3. Experiments

3.1. Datasets

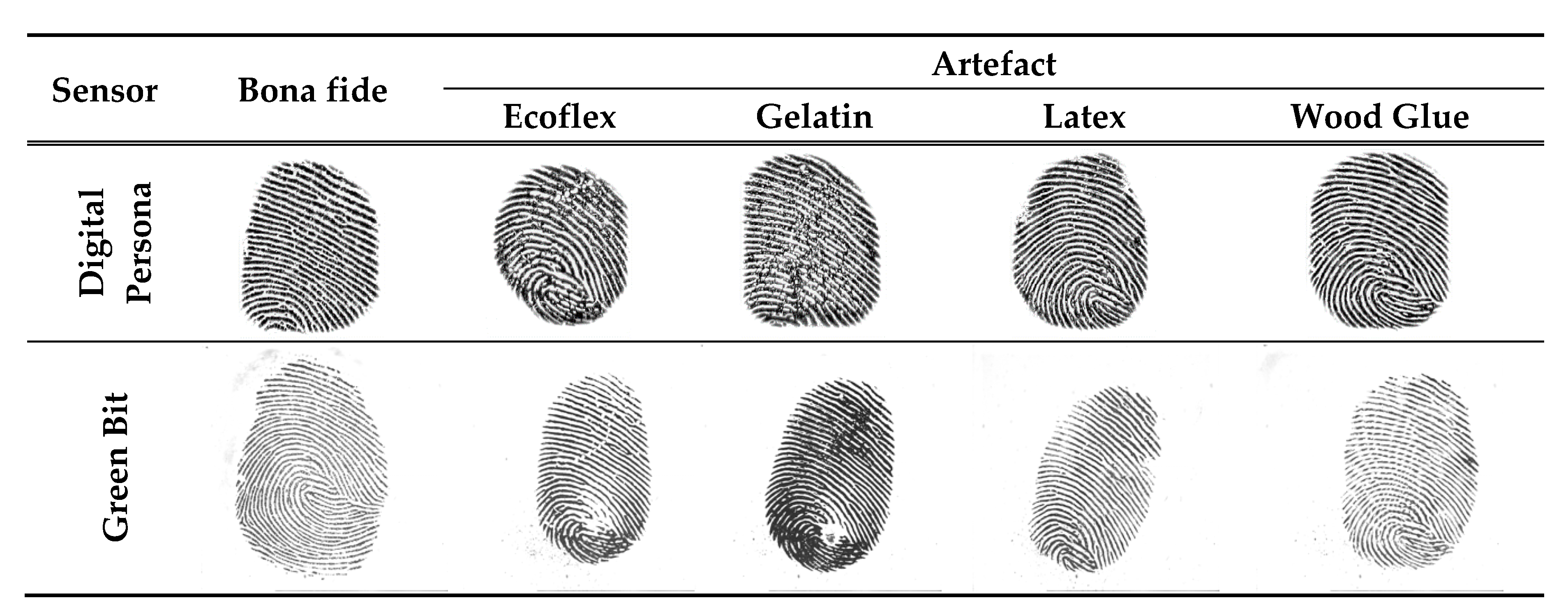

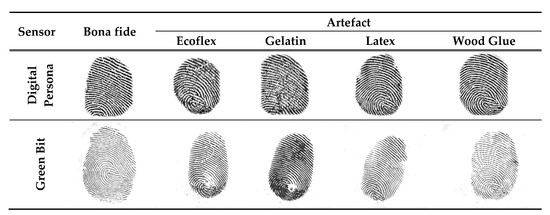

To evaluate the proposed approach, we used the LivDet 2015 dataset for the fingerprint and real ECG datasets collected in our lab. LivDet 2015 has approximately 19,000 images divided into two parts: training and testing. Each part has bona fide (live) and artefact (fake) images captured using different fingerprint sensors, as shown in Table 1. Numerous materials are used for fabricating the artefact fingerprint samples, e.g., Ecoflex, gelatin, latex, and wood glue. The testing dataset contains artefact samples fabricated using various materials, some of which are not used in the training dataset, such as OOMOO and RTV, as shown in Table 2. Figure 8 shows bona fide and artefact samples for the same subject captured from two different sensors, i.e., Green Bit and Digital Persona sensors.

Table 1.

Device and image characteristics of the LivDet 2015 dataset.

Table 2.

Materials used for fabricating fake images in the LivDet 2015 dataset. Some materials in the testing are unknown during training (underlined).

Figure 8.

Bona fide and artefact fingerprint samples from the LivDet 2015 dataset captured using Digital Persona and Green Bit sensors. Artefact samples were fabricated using different materials.

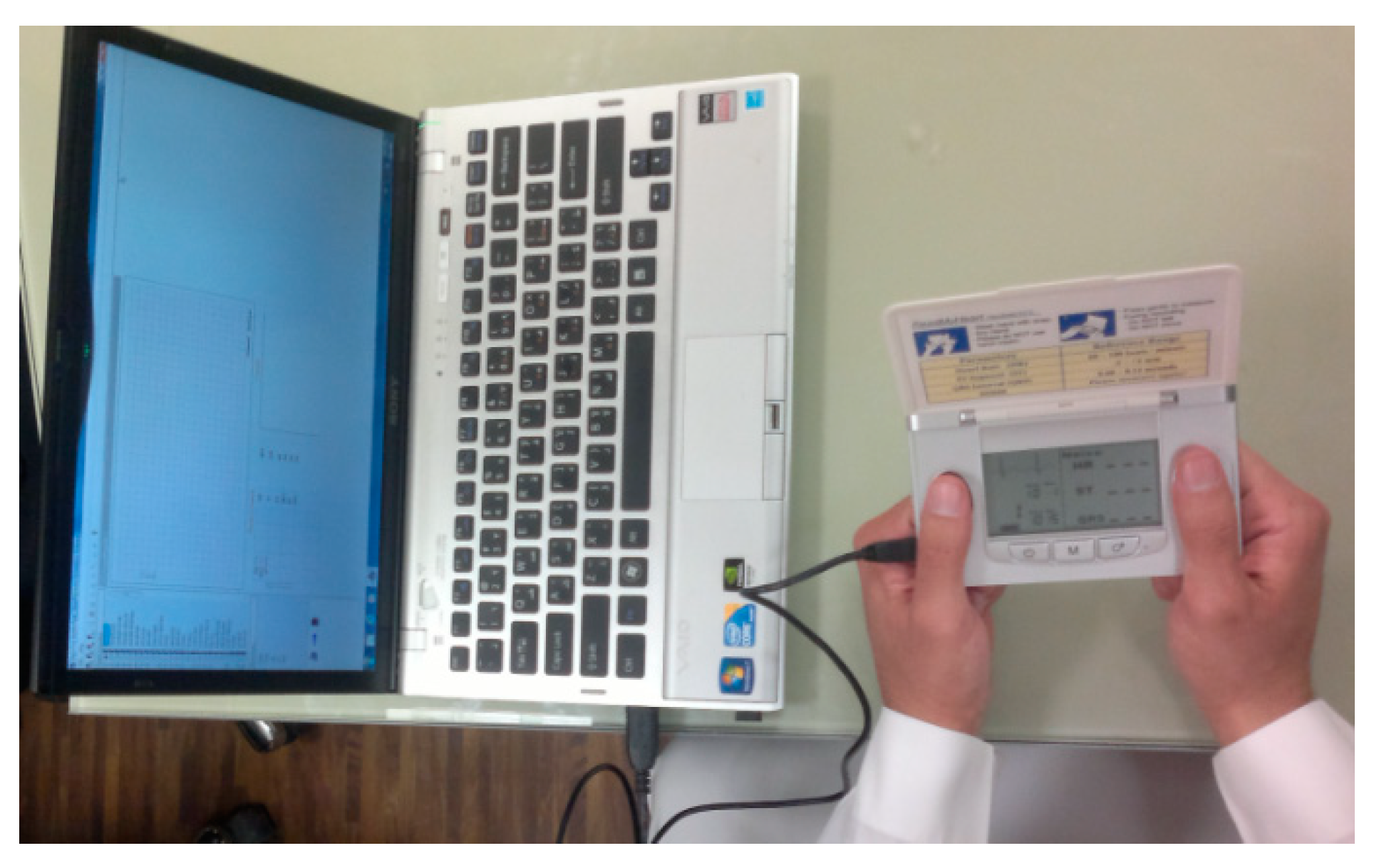

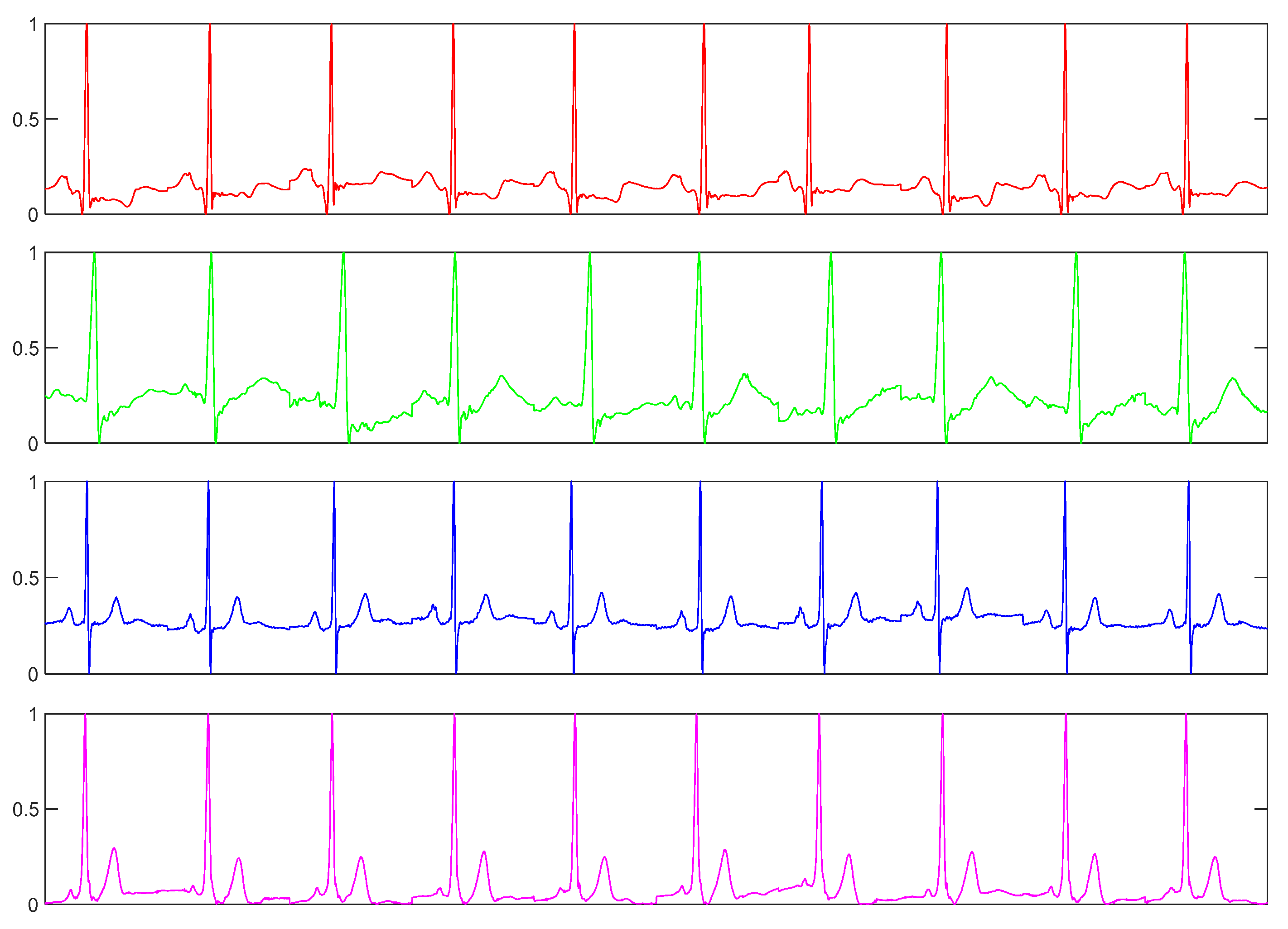

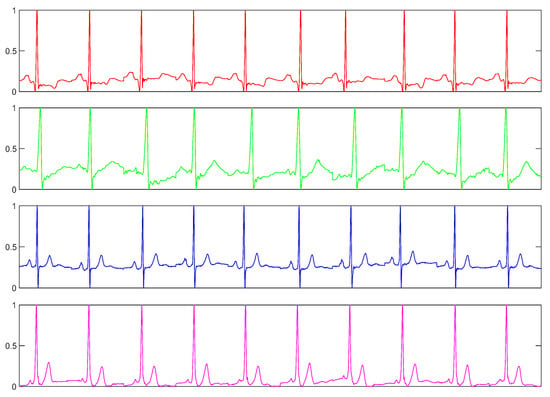

For the ECG dataset, we used a dataset collected in our lab. We collected this dataset using a commercially available handheld ECG device, i.e., ReadMyHeart by DailyCare BioMedical, Inc. (https://www.dcbiomed.com/webls-en-us/index.html), as shown in Figure 9. We previously built a database of 656 ECG records captured from 164 individuals in two sessions [48,58], and have since extended it with a third session so that most users have 10 records. The device captures a signal for 15 seconds, digitizes it, and exports it to the computer as an ECG record. Generally, such a signal may contain different types of noise, such as power-line interference, baseline wander, and patient-electrode motion artifacts. In the preprocessing step, we use a fourth-order band-pass Butterworth filter with cut-off frequencies of 0.25 and 40 Hz to remove the noise. An efficient curvature-based method is then used to detect heartbeats [59,60], and we take the first 10 beats from each record for this experiment. Figure 10 shows such preprocessed ECG samples from four different subjects.

Figure 9.

ECG data collection using the ReadMyHeart device.

Figure 10.

ECG sample of 10 heart beats from four different subjects.

Owing to the lack of public multimodal datasets containing fingerprint and ECG signals, we constructed a multimodal dataset from the LivDet 2015 dataset and an ECG dataset. First, we built a mini fingerprint dataset from the LivDet 2015 dataset, called the mini-livdet2015 dataset, containing images from the Digital Persona sensor. This mini-livdet2015 is composed of 70 subjects, each of which has bona fide and artefact samples (10 and 12, respectively). We randomly selected the artefact samples from all available fabricating materials. To form the multimodal dataset, we assigned a random subject from the ECG dataset to each subject from the mini-livdet2015 dataset. Table 3 describes this new dataset, which comprises 70 subjects, each of which has 10 bona fide and 12 artefact fingerprint samples, and 10 ECG samples.

Table 3.

Description of the customized multimodal dataset, which contains 70 subjects.
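The random linking of fingerprint subjects to ECG subjects can be sketched as follows. The subject identifiers are hypothetical, the count of 164 ECG subjects is taken from the database description above, and sampling without replacement (so that no ECG subject is linked twice) is an assumption; the text only states that the assignment is random.

```python
import random

def link_subjects(fp_subjects, ecg_subjects, seed=0):
    """Assign a distinct, randomly chosen ECG subject to each fingerprint subject."""
    rng = random.Random(seed)
    chosen = rng.sample(list(ecg_subjects), len(fp_subjects))  # without replacement
    return dict(zip(fp_subjects, chosen))

# Hypothetical identifiers: 70 fingerprint subjects, 164 ECG subjects.
fp_subjects = [f"fp_{i:03d}" for i in range(70)]
ecg_subjects = [f"ecg_{i:03d}" for i in range(164)]

mapping = link_subjects(fp_subjects, ecg_subjects)
```

Fixing the seed keeps the virtual identities stable across runs, so that repeated experiments are evaluated on the same multimodal subjects.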

During training, we feed the network with batches of input triplets that cover both possible classes. For the bona fide label, we pair a bona fide fingerprint sample with a bona fide ECG sample from the same subject; i.e., the fingerprint image $x^{f}$ and the ECG signal $x^{e}$ are bona fide samples belonging to the same subject. Because we do not have artefact ECG signals, we pair an artefact fingerprint sample from one subject with a bona fide ECG sample from another subject (zero-effort ECG sample); i.e., $x^{f}$ is an artefact sample and $x^{e}$ is a bona fide sample, and the two come from different subjects.

Feeding the network with these inputs allows it to learn the correlations between bona fide fingerprint samples and ECG samples of the same subject, and thus to correctly predict which samples are bona fide. Furthermore, the network learns to correctly predict an artefact by learning the features representing the incoherence between an artefact fingerprint sample and a bona fide ECG sample of the same subject, or between a bona fide fingerprint sample of one subject and a bona fide ECG sample belonging to a different subject.
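The pairing rule for training triplets can be sketched as follows: bona fide fingerprints are paired with an ECG of the linked subject, and artefact fingerprints with an ECG of a different subject. The identifiers, the label encoding (1 for bona fide, 0 for artefact), and the uniform random choice of the ECG record are assumptions made for illustration.

```python
import random

BONA_FIDE, ARTEFACT = 1, 0   # label encoding assumed for this sketch

def make_triplets(mapping, n_live, n_fake, n_ecg, seed=0):
    """Build (fingerprint_id, ecg_id, label) triplets from a subject mapping.

    mapping : dict from fingerprint subject to its linked ECG subject
    n_live  : bona fide fingerprint samples per subject
    n_fake  : artefact fingerprint samples per subject
    n_ecg   : ECG records available per ECG subject
    """
    rng = random.Random(seed)
    triplets = []
    for fp_subj, ecg_subj in mapping.items():
        # Bona fide: fingerprint and ECG belong to the same (linked) subject.
        for k in range(n_live):
            ecg_id = (ecg_subj, rng.randrange(n_ecg))
            triplets.append(((fp_subj, "live", k), ecg_id, BONA_FIDE))
        # Artefact: fingerprint paired with a zero-effort ECG of another subject.
        others = [e for s, e in mapping.items() if s != fp_subj]
        for k in range(n_fake):
            ecg_id = (rng.choice(others), rng.randrange(n_ecg))
            triplets.append(((fp_subj, "fake", k), ecg_id, ARTEFACT))
    return triplets

# Hypothetical three-subject mapping; the full dataset has 70 subjects.
mapping = {"fp_0": "ecg_5", "fp_1": "ecg_2", "fp_2": "ecg_9"}
triplets = make_triplets(mapping, n_live=10, n_fake=12, n_ecg=10)
```

With 10 bona fide and 12 artefact fingerprints per subject, each subject contributes 22 triplets, so both classes are represented in roughly balanced proportions.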

3.2. Experiment Setup

To evaluate the proposed approach, we conducted several experiments. First, we carried out an initial experiment to evaluate the performance of the fingerprint branch net regarding the detection of PAs, and compared our results with previous state-of-the-art methods. For this purpose, we utilized the LivDet 2015 dataset: the fingerprint branch net was trained on the training portion of LivDet 2015 and tested on the testing portion of the same dataset. In the second experiment, we evaluated the three proposed fusion architectures in detecting and preventing PAs. We then conducted an experiment to analyze the sensitivity of the highest-performing architecture from the second experiment. To this end, we analyzed the effect of increasing the number of training subjects on the classification accuracy. Finally, we reported the number of parameters and the classification time of the proposed architectures compared with state-of-the-art methods.

For training the network, we use the RMSProp optimizer with the following parameters: the moving average parameter is set to its default value, whereas the learning rate is initially set to 0.0001 and is multiplied by a factor of 1/10 every 20 epochs. For compatibility with the LivDet 2015 competition [27], the accuracy was used as the evaluation metric in all of the experiments. The accuracy is defined as the percentage of correctly classified samples.
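The learning rate schedule and the accuracy metric described above can be written compactly. The sketch assumes that epochs are counted from zero and that the decay is applied as a step function; the function names are illustrative.

```python
def learning_rate(epoch, base_lr=1e-4, factor=0.1, every=20):
    """Step decay: multiply the learning rate by `factor` every `every` epochs."""
    return base_lr * factor ** (epoch // every)

def accuracy(y_true, y_pred):
    """Percentage of correctly classified samples."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * correct / len(y_true)

# Learning rate at the start of each decay stage within a 50-epoch run.
schedule = [(epoch, learning_rate(epoch)) for epoch in (0, 20, 40)]
```

Over 50 epochs this schedule uses three learning rate levels, with the last ten epochs running at one hundredth of the initial rate.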

All experiments were repeated five times and the average classification accuracy was reported. The experiments were carried out using a workstation with i9 CPU @ 2.9 GHz, 32 GB of RAM, and NVIDIA GeForce GTX 1080 Ti (11 GB GDDR5X).

4. Results and Discussions

4.1. Experiments Using Fingerprint Modality Only

Initially, we examined the performance of the proposed fingerprint network based on EfficientNet. This evaluation allows us to compare the performance of this network in terms of fingerprint PAD with that of the methods proposed in the LivDet 2015 competition [27]. Table 4 shows the results after training the network for 50 epochs.

Table 4.

Comparison between the results of the proposed fingerprint branch net and the best methods from the LivDet 2015 competition, where we present the average accuracy %.

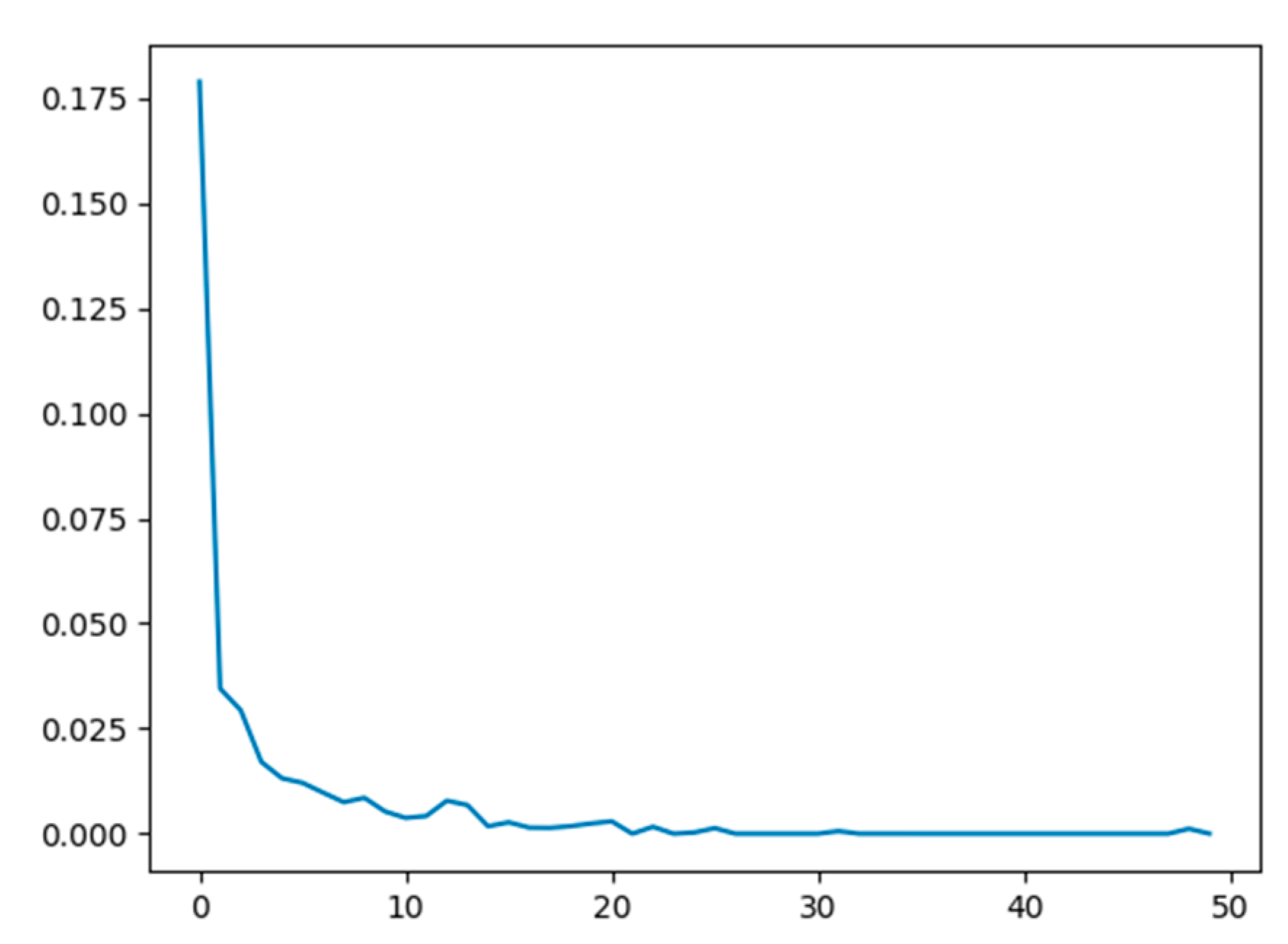

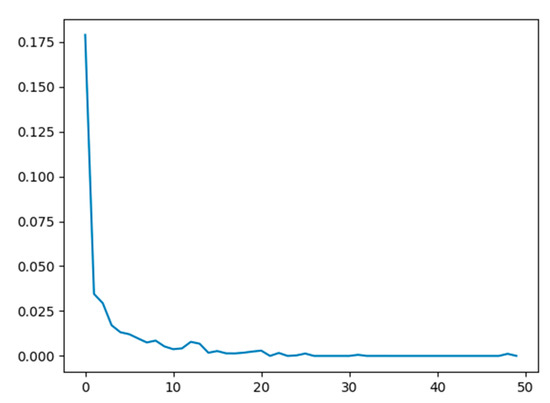

We can see from the results in Table 4 that the proposed fingerprint network achieves an overall classification accuracy of 94.87%. A comparison of the reported accuracy of the proposed network with those reported from the LivDet 2015 competition shows that our method would have been the second-best approach. Moreover, the proposed method follows the same behavior as the other two algorithms in terms of its accuracy for the individual sensors, achieving a high accuracy of 97.29% for the Crossmatch sensor (i.e., an easy-to-learn sensor) and a relatively lower accuracy of 91.96% for the Digital Persona sensor (i.e., a difficult-to-learn sensor). Furthermore, the proposed method achieves moderately high accuracies of 94.68% and 95.12% for the Green Bit and Biometrika sensors, respectively. Figure 11 shows the progress of the loss function during the training on the LivDet 2015 dataset (training part). Note that the loss converges after a low number of epochs (nearly 25). The reported results confirm the promising capability of the network in detecting PAs, motivating us to improve it further by proposing a multimodal solution that fuses fingerprints with ECG signals.

Figure 11.

Model loss versus number of epochs (50) by training on LivDet 2015 dataset.

4.2. Fusion of Fingerprints and ECGs

As mentioned previously, owing to the lack of a multimodal dataset containing fingerprint and ECG modalities, we built a mini-livdet2015 dataset and fused it with the ECG dataset. We used images from the Digital Persona sensor, which is the most difficult sensor in the LivDet 2015 dataset: as demonstrated in the previous experiment, it yielded the lowest accuracy (91.96%) in comparison to the other sensors. This mini-livdet2015 contains 70 subjects, each of which has 10 bona fide and 12 artefact fingerprint samples. We constructed the multimodal dataset by randomly linking each subject from the mini-livdet2015 dataset to a subject from the ECG dataset, as previously discussed. Before running the fusion network on this multimodal dataset, we first trained the fingerprint network on the mini-livdet2015 dataset to obtain an indication of its performance, which serves as a baseline for our fusion mechanism. We obtained an accuracy of 92.98% using 50% of the subjects for training and 50% for testing, i.e., 35 subjects for training and the other 35 subjects for testing.

After this step, we evaluated the complete architecture using the three proposed feature extraction solutions (i.e., FC, 1D-CNN, and 2D-CNN). Table 5 shows the average classification accuracy of the three fusion architectures. Furthermore, the average classification accuracy of the fingerprint network on the mini-livdet2015 dataset is reported.

Table 5.

Average accuracy of three proposed fusion architectures and the fingerprint branch net. The reported results are achieved on the customized dataset.

The reported results show that fusing fingerprints with ECG data clearly improves the accuracy of artefact fingerprint detection. The different architectures, namely, FC, 1D-CNN, and 2D-CNN, achieve accuracies of 94.99%, 94.84%, and 95.32%, respectively, thereby outperforming the accuracy achieved by fingerprint net (i.e., without applying a fusion). As the results indicate, the 2D-CNN model achieves the highest accuracy (95.32%) compared with the other two fusion architectures. The high performance of the 2D-CNN model can be attributed to the conversion of ECG signals into images, thus utilizing the power of 2D convolution and pooling operations, in addition to the introduction of MBConv blocks as the main blocks for learning the representative features.

4.3. Sensitivity Analysis of the Number of Training Subjects

During this experiment, we discuss how the number of subjects used for training can affect the level of accuracy. We repeated the above experiment with different percentages of subjects used for training (between 20% and 80%), the average accuracy of which is reported in Table 6.

Table 6.

Sensitivity analysis of the proposed architectures against the number of training subjects in terms of the reported testing accuracy (ACC %).
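The subject-level splits used in this sensitivity analysis can be sketched as follows. Splitting by subject rather than by sample keeps every subject entirely in either the training or the testing set. The shuffling seed and the rounding rule are assumptions made for illustration.

```python
import random

def split_subjects(subjects, train_fraction, seed=0):
    """Shuffle subjects and split them into disjoint training and testing lists."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]

subjects = list(range(70))   # the customized dataset contains 70 subjects
sizes = {}
for fraction in (0.2, 0.5, 0.8):
    train, test = split_subjects(subjects, fraction)
    sizes[fraction] = (len(train), len(test))
```

For 70 subjects, training fractions of 20%, 50%, and 80% correspond to 14, 35, and 56 training subjects, respectively.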

The reported results show that increasing the number of subjects (70% and 80%) during the training improves the testing accuracy. Although this behavior is the same for the three proposed architectures, we can see that the 2D-CNN model consistently outperforms the other two models with an accuracy of 97.10% when using 80% of the subjects in the dataset for training. In contrast, decreasing the number of subjects during the training degrades the testing accuracy. Despite the decrease in testing accuracy, the level achieved is still acceptable (89.71%, 89.31%, and 90.79%) for the FC, 1D-CNN, and 2D-CNN, respectively when using 20% of the subjects for training.

4.4. Sensitivity with Respect to the Pre-Trained CNN

In order to further assess the sensitivity of the proposed approach with respect to the pre-trained CNN model, we carried out additional experiments using other well-known pre-trained CNN models: Inception-v3 [61], DenseNet [62], and residual network (ResNet) [63]. We used these recent pre-trained models for this experiment as they require a comparatively small number of parameters as shown in Table 7.

Table 7.

Classification Accuracy using different pre-trained CNN models. We used Inception-v3, DenseNet-169, and ResNet-50.

Inception-v3 is a member of the Inception family of models developed by Google [61], which introduced the concept of factorizing convolutions. Inception models are based on increasing the width and depth of the network by utilizing a module called inception [64], which contains several convolutional layers with different filter sizes. The inception module allows the model to better handle scale and spatial variations. DenseNet was proposed by Huang et al. [62] for better utilization of computing resources. DenseNet is based on adding connections between each layer and every other layer in a feed-forward fashion, in which each layer receives the feature maps of all preceding layers as input and feeds its own feature maps as input into all subsequent layers. ResNet was proposed by He et al. [63] to overcome the difficulties in training deeper networks by learning residual functions. These residual networks achieve better optimization and generalization as the depth increases.

We note from the results in Table 7 that EfficientNet-B3 achieves the highest accuracies in the three architectures and outperforms the other pre-trained CNNs. The accuracies of EfficientNet-B3 in the three architectures (94.99%, 94.84%, and 95.32%) consistently exceed the best accuracies of the other models, except for Inception-v3, which achieves a comparable result in the case of 2D-CNN (95.20%). Moreover, EfficientNet-B3 requires the smallest number of parameters (10 M after removing the top layers), whereas Inception-v3 and ResNet-50 require 21 M and 23 M, respectively (after removing the top layers). Finally, from the reported accuracies, we note that 2D-CNN outperforms the FC and 1D-CNN for all the pre-trained models (i.e., 95.32%, 95.20%, 93.29%, and 94.00% for EfficientNet-B3, Inception-v3, DenseNet-169, and ResNet-50, respectively).

4.5. Sensitivity of the ECG Network Architecture

To further assess the sensitivity of the proposed approach, we carried out additional experiments showing the effect of different configurations of the ECG branch network on the overall accuracy. Because the 2D-CNN architecture outperformed the FC and 1D-CNN architectures in the previous sections, we report only the experiments that apply different configurations of the 2D-CNN architecture. We tested eight different configurations, as described in Table 8. Consider configuration #8, which is shown in Figure 6c: (2 fc = (128, 1024), 2 blocks MBConv (64, 128), fc = 128). This notation means that we use two consecutive fully-connected layers of sizes 128 and 1024, respectively, followed by two consecutive MBConv blocks of depths 64 and 128, respectively, and finally one fully-connected layer of size 128. The second fully-connected layer, fc (1024), means that the feature vector is reshaped into (32 × 32 × 1), as shown in Figure 6c.
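The configuration notation above can be captured in a small data structure. The following sketch is illustrative only: the class name `EcgBranchConfig` and its fields are hypothetical, and the helper merely checks that the size of the last leading fully-connected layer is a perfect square so that it can be reshaped into a single-channel image. The second instance assumes that only the second fully-connected layer changes to 4096 while the remaining fields stay as in configuration #8.

```python
from dataclasses import dataclass
from math import isqrt

@dataclass(frozen=True)
class EcgBranchConfig:
    """Hypothetical container for one 2D-CNN ECG branch configuration."""
    fc_sizes: tuple       # sizes of the leading fully-connected layers
    mbconv_depths: tuple  # output depth of each MBConv block
    final_fc: int         # size of the last fully-connected layer

    def image_shape(self):
        """Shape into which the last leading FC output is reshaped (H, W, 1)."""
        n = self.fc_sizes[-1]
        side = isqrt(n)
        if side * side != n:
            raise ValueError(f"fc size {n} is not a perfect square")
        return (side, side, 1)

# Configuration #8 as described in the text.
config_8 = EcgBranchConfig(fc_sizes=(128, 1024), mbconv_depths=(64, 128), final_fc=128)
print(config_8.image_shape())

# Assumed variant with fc (4096); the other fields are copied from configuration #8.
fc4096_variant = EcgBranchConfig(fc_sizes=(128, 4096), mbconv_depths=(64, 128), final_fc=128)
print(fc4096_variant.image_shape())
```

A size of 1024 gives a 32 × 32 × 1 image and a size of 4096 gives a 64 × 64 × 1 image, which matches the reshaping described in the text.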

Table 8.

Classification accuracy of the 2D-CNN network when applying eight different configurations of the ECG architecture.

From the results reported in Table 8, we note the following points. Removing the first fully-connected layer fc (128) in configuration #1 degraded the accuracy (91.90%), whereas enlarging the feature map in configuration #2 by replacing the second fully-connected layer fc (1024) with fc (4096), i.e., reshaping the feature vector into (64 × 64 × 1), did not improve the accuracy (93.56%). Furthermore, changing the number and sizes of the MBConv blocks, up to three (configurations #6 and #7) or down to one (configurations #3, #4, and #5), produces better accuracies, up to 95.56% in configuration #4. In the proposed configuration #8, we used two MBConv blocks, with which the network achieved the second-best accuracy of 95.32%.

4.6. Classification Time

In this study, we used an EfficientNet with 12 million parameters as the main building block for the fingerprint branch. This model provides high accuracy at a low computational cost. In particular, our models converge within only 50 epochs. The complete architecture provides an average classification time for one subject (i.e., fingerprint image + ECG signal) of 30–35 ms (depending on the architecture), which is faster than previous state-of-the-art approaches (i.e., 128 ms [24] and 800 ms [28]). Recall that the approaches described in [21] and [24] applied solutions using only the fingerprint modality and networks with a larger number of weights.
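An average per-subject classification time such as the one quoted above is typically obtained by timing repeated predictions. The sketch below shows one way to do this under stated assumptions: `dummy_predict` is a hypothetical stand-in for the fused fingerprint and ECG model, the sample sizes are arbitrary, and the measured value reflects only this toy function. The final loop restates the speed-up ranges implied by the figures in the text.

```python
import time

def mean_latency_ms(predict, samples, warmup=2):
    """Average wall-clock time per sample, in milliseconds."""
    for sample in samples[:warmup]:      # warm-up calls are not timed
        predict(sample)
    start = time.perf_counter()
    for sample in samples:
        predict(sample)
    return 1000.0 * (time.perf_counter() - start) / len(samples)

def dummy_predict(sample):
    """Hypothetical stand-in for the fused model; takes (fingerprint, ecg)."""
    fingerprint, ecg = sample
    return (sum(fingerprint) + sum(ecg)) > 0.0

# Arbitrary toy inputs: one flattened "fingerprint" and one "ECG" per subject.
samples = [([0.1] * 1000, [0.2] * 100) for _ in range(50)]
latency = mean_latency_ms(dummy_predict, samples)
print(f"dummy model: {latency:.4f} ms per subject")

# Speed-up ranges implied by the times quoted in the text (30-35 ms).
ours_ms = (30.0, 35.0)
speedups = {}
for ref, ref_ms in (("[24]", 128.0), ("[28]", 800.0)):
    speedups[ref] = (ref_ms / ours_ms[1], ref_ms / ours_ms[0])
    print(f"vs {ref}: {speedups[ref][0]:.1f}x to {speedups[ref][1]:.1f}x faster")
```

Using the quoted times, the proposed architecture is roughly 3.7 to 4.3 times faster than the 128 ms approach and roughly 22.9 to 26.7 times faster than the 800 ms approach.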

5. Conclusions

In this paper, we proposed an end-to-end deep learning approach that fuses fingerprint and ECG signals to boost the PAD capabilities of fingerprint biometrics. We employed EfficientNet, a state-of-the-art network, for learning efficient fingerprint feature representations. The experimental results demonstrate the superiority of EfficientNet over other well-known pre-trained CNNs in terms of accuracy and efficiency. For the ECG signals, we proposed three different architectures: FC, a 1D-CNN, and a 2D-CNN. With the 2D-CNN model, we transformed the ECG features into images using a generator network. The experimental results obtained on a multimodal dataset composed of fingerprint and ECG signals reveal the promising capability of the proposed solution in terms of classification accuracy and computation time. Although we used a customized database of fingerprint and ECG signals to validate the proposed method, we intend to use a database of fingerprint and ECG signals captured simultaneously using a multimodal sensor. Since this type of sensor is not commercially available, in future work we would like to develop a prototype of such a sensor, create a database of real multimodal data, and use it to validate the proposed method.

Author Contributions

Data curation, M.S.I.; methodology, R.M.J. and Y.B.; supervision, H.M.; writing—original draft, R.M.J.; writing—review and editing, H.M., Y.B., and M.S.I. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank the Deanship of Scientific Research for funding and supporting this research through the initiative of DSR Graduate Students Research Support (GSR).

Acknowledgments

The authors thank the Deanship of Scientific Research and RSSU at King Saud University for their technical support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jain, A.K.; Kumar, A. Biometric Recognition: An Overview. In Second Generation Biometrics: The Ethical, Legal and Social Context; Mordini, E., Tzovaras, D., Eds.; Springer: Dordrecht, Netherlands, 2012; pp. 49–79. ISBN 978-94-007-3891-1.

- ISO/IEC 30107-1. Information Technology—Biometric Presentation Attack Detection—Part 1: Framework; ISO: Geneva, Switzerland, 2016.

- Schuckers, S. Presentations and attacks, and spoofs, oh my. Image Vis. Comput. 2011, 55 Pt 1, 26–30.

- Chugh, T.; Jain, A.K. Fingerprint Spoof Generalization. arXiv 2019, arXiv:1912.02710.

- Coli, P.; Marcialis, G.L.; Roli, F. Vitality detection from fingerprint images: a critical survey. In Proceedings of the International Conference on Biometrics, Berlin/Heidelberg, Germany, 27 August 2007; pp. 722–731.

- Lapsley, P.D.; Lee, J.A.; Pare, D.F., Jr.; Hoffman, N. Anti-Fraud Biometric Scanner that Accurately Detects Blood Flow. U.S. Patent Application No. US 5,737,439, 7 April 1998.

- Antonelli, A.; Cappelli, R.; Maio, D.; Maltoni, D. Fake finger detection by skin distortion analysis. IEEE Trans. Inf. Forensics Secur. 2006, 1, 360–373.

- Baldisserra, D.; Franco, A.; Maio, D.; Maltoni, D. Fake fingerprint detection by odor analysis. In Proceedings of the International Conference on Biometrics, Berlin/Heidelberg, Germany, 5 January 2006; pp. 265–272.

- Nikam, S.B.; Agarwal, S. Texture and wavelet-based spoof fingerprint detection for fingerprint biometric systems. In Proceedings of the 2008 First International Conference on Emerging Trends in Engineering and Technology, Nagpur, Maharashtra, 16 July 2008; pp. 675–680.

- Ghiani, L.; Marcialis, G.L.; Roli, F. Fingerprint liveness detection by local phase quantization. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11 November 2012; pp. 537–540.

- Gragnaniello, D.; Poggi, G.; Sansone, C.; Verdoliva, L. Fingerprint liveness detection based on weber local image descriptor. In Proceedings of the IEEE workshop on biometric measurements and systems for security and medical applications, Naples, Italy, 9 September 2013; pp. 46–50.

- Xia, Z.; Yuan, C.; Lv, R.; Sun, X.; Xiong, N.N.; Shi, Y.-Q. A novel weber local binary descriptor for fingerprint liveness detection. IEEE Trans. Syst. Man Cybern. Syst. 2018, 50, 1526–1536.

- Gragnaniello, D.; Poggi, G.; Sansone, C.; Verdoliva, L. Local contrast phase descriptor for fingerprint liveness detection. Pattern Recognit. 2015, 48, 1050–1058.

- Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the devil in the details: Delving deep into convolutional nets. arXiv 2014, arXiv:1405.3531.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Al Rahhal, M.M.; Bazi, Y.; Almubarak, H.; Alajlan, N.; Al Zuair, M. Dense Convolutional Networks With Focal Loss and Image Generation for Electrocardiogram Classification. IEEE Access 2019, 7, 182225–182237.

- Al Rahhal, M.M.; Bazi, Y.; Al Zuair, M.; Othman, E.; BenJdira, B. Convolutional neural networks for electrocardiogram classification. J. Med. Biol. Eng. 2018, 38, 1014–1025.

- Al Rahhal, M.M.; Bazi, Y.; AlHichri, H.; Alajlan, N.; Melgani, F.; Yager, R.R. Deep learning approach for active classification of electrocardiogram signals. Inf. Sci. 2016, 345, 340–354.

- Hammad, M.; Wang, K. Parallel score fusion of ECG and fingerprint for human authentication based on convolution neural network. Comput. Secur. 2019, 81, 107–122.

- Minaee, S.; Abdolrashidi, A.; Su, H.; Bennamoun, M.; Zhang, D. Biometric Recognition Using Deep Learning: A Survey. arXiv 2019, arXiv:1912.00271.

- Talreja, V.; Valenti, M.C.; Nasrabadi, N.M. Multibiometric secure system based on deep learning. In Proceedings of the 2017 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Montreal, QC, Canada, 14–16 November 2017; pp. 298–302.

- Al-Waisy, A.S.; Qahwaji, R.; Ipson, S.; Al-Fahdawi, S.; Nagem, T.A. A multi-biometric iris recognition system based on a deep learning approach. Pattern Anal. Appl. 2018, 21, 783–802.

- Nogueira, R.F.; de Alencar Lotufo, R.; Machado, R.C. Fingerprint Liveness Detection Using Convolutional Neural Networks. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1206–1213.

- Park, E.; Cui, X.; Nguyen, T.H.B.; Kim, H. Presentation attack detection using a tiny fully convolutional network. IEEE Trans. Inf. Forensics Secur. 2019, 14, 3016–3025.

- Souza, G.B.; Santos, D.F.; Pires, R.G.; Marana, A.N.; Papa, J.P. Deep Boltzmann Machines for robust fingerprint spoofing attack detection. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 3 July 2017; pp. 1863–1870.

- Tolosana, R.; Gomez-Barrero, M.; Kolberg, J.; Morales, A.; Busch, C.; Ortega-Garcia, J. Towards Fingerprint Presentation Attack Detection Based on Convolutional Neural Networks and Short Wave Infrared Imaging. In Proceedings of the 2018 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 3 December 2018; pp. 1–5.

- Mura, V.; Ghiani, L.; Marcialis, G.L.; Roli, F.; Yambay, D.A.; Schuckers, S.A. LivDet 2015 fingerprint liveness detection competition 2015. In Proceedings of the 2015 IEEE 7th International Conference on Biometrics Theory, Applications and Systems (BTAS), Arlington, VA, USA, 8–11 September 2015; pp. 1–6.

- Chugh, T.; Cao, K.; Jain, A.K. Fingerprint spoof detection using minutiae-based local patches. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1 February 2017; pp. 581–589.

- Zhang, Y.; Shi, D.; Zhan, X.; Cao, D.; Zhu, K.; Li, Z. Slim-ResCNN: A Deep Residual Convolutional Neural Network for Fingerprint Liveness Detection. IEEE Access 2019, 7, 91476–91487.

- Galbally, J.; Fierrez, J.; Cappelli, R. An introduction to fingerprint presentation attack detection. In Handbook of Biometric Anti-Spoofing. Advances in Computer Vision and Pattern Recognition; Springer: Cham, Switzerland, 2019.

- Ross, A.A.; Nandakumar, K.; Jain, A.K. Handbook of multibiometrics; Springer: New York, NY, USA, 2006; Volume 6.

- Huang, Z.; Feng, Z.-H.; Kittler, J.; Liu, Y. Improve the Spoofing Resistance of Multimodal Verification with Representation-Based Measures. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Guangzhou, China, 23–26 November 2018; pp. 388–399.

- Wild, P.; Radu, P.; Chen, L.; Ferryman, J. Robust multimodal face and fingerprint fusion in the presence of spoofing attacks. Pattern Recognit. 2016, 50, 17–25.

- Marasco, E.; Shehab, M.; Cukic, B. A Methodology for Prevention of Biometric Presentation Attacks. In Proceedings of the 2016 Seventh Latin-American Symposium on Dependable Computing (LADC), Cali, Colombia, 19–21 October 2016; pp. 9–14.

- Bhardwaj, I.; Londhe, N.D.; Kopparapu, S.K. A spoof resistant multibiometric system based on the physiological and behavioral characteristics of fingerprint. Pattern Recognit. 2017, 62, 214–224.

- Komeili, M.; Armanfard, N.; Hatzinakos, D. Liveness detection and automatic template updating using fusion of ECG and fingerprint. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1810–1822.

- Pouryayevali, S. ECG Biometrics: New Algorithm and Multimodal Biometric System. Master’s Thesis, Department of Electrical and Computer Engineering, University of Toronto, Toronto, ON, Canada, 2015.

- Jomaa, R.M.; Islam, M.S.; Mathkour, H. Improved sequential fusion of heart-signal and fingerprint for anti-spoofing. In Proceedings of the 2018 IEEE 4th International Conference on Identity, Security, and Behavior Analysis (ISBA), Singapore, 12 March 2018; pp. 1–7.

- Regouid, M.; Touahria, M.; Benouis, M.; Costen, N. Multimodal biometric system for ECG, ear and iris recognition based on local descriptors. Multimed. Tools Appl. 2019, 78, 22509–22535.

- Su, K.; Yang, G.; Wu, B.; Yang, L.; Li, D.; Su, P.; Yin, Y. Human identification using finger vein and ECG signals. Neurocomputing 2019, 332, 111–118.

- Blasco, J.; Peris-Lopez, P. On the feasibility of low-cost wearable sensors for multi-modal biometric verification. Sensors 2018, 18, 2782.

- Jomaa, R.M.; Islam, M.S.; Mathkour, H. Enhancing the information content of fingerprint biometrics with heartbeat signal. In Proceedings of the 2015 World Symposium on Computer Networks and Information Security (WSCNIS), Hammamet, Tunisia, 4 January 2015; pp. 1–5.

- Alajlan, N.; Islam, M.S.; Ammour, N. Fusion of fingerprint and heartbeat biometrics using fuzzy adaptive genetic algorithm. In Proceedings of the 2013 World Congress on Internet Security (WorldCIS), London, UK, 9 December 2013; pp. 76–81.

- Singh, Y.N.; Singh, S.K.; Gupta, P. Fusion of electrocardiogram with unobtrusive biometrics: An efficient individual authentication system. Pattern Recognit. Lett. 2012, 33, 1932–1941.

- Pinto, J.R.; Cardoso, J.S.; Lourenço, A. Evolution, current challenges, and future possibilities in ECG biometrics. IEEE Access 2018, 6, 34746–34776.

- Zhao, C.; Wysocki, T.; Agrafioti, F.; Hatzinakos, D. Securing handheld devices and fingerprint readers with ECG biometrics. In Proceedings of the 2012 IEEE Fifth International Conference on Biometrics: Theory, Applications and Systems (BTAS), Arlington, VA, USA, 23–27 September 2012; pp. 150–155.

- Agrafioti, F.; Hatzinakos, D.; Gao, J. Heart Biometrics: Theory, Methods and Applications; INTECH Open Access Publisher: Shanghai, China, 2011.

- Islam, M.S.; Alajlan, N. Biometric template extraction from a heartbeat signal captured from fingers. Multimed. Tools Appl. 2016.

- Minaee, S.; Bouazizi, I.; Kolan, P.; Najafzadeh, H. Ad-Net: Audio-Visual Convolutional Neural Network for Advertisement Detection In Videos. arXiv 2018, arXiv:1806.08612.

- Torfi, A.; Iranmanesh, S.M.; Nasrabadi, N.; Dawson, J. 3d convolutional neural networks for cross audio-visual matching recognition. IEEE Access 2017, 5, 22081–22091.

- Zhu, Y.; Lan, Z.; Newsam, S.; Hauptmann, A. Hidden two-stream convolutional networks for action recognition. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2 December 2018; pp. 363–378.

- Hammad, M.; Liu, Y.; Wang, K. Multimodal biometric authentication systems using convolution neural network based on different level fusion of ECG and fingerprint. IEEE Access 2018, 7, 26527–26542.

- Tan, M.; Le, Q.V. Efficientnet: Rethinking model scaling for convolutional neural networks. arXiv 2019, arXiv:1905.11946.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Ramachandran, P.; Zoph, B.; Le, Q.V. Searching for activation functions. arXiv 2017, arXiv:1710.05941.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Hinton, G.; Srivastava, N.; Swersky, K. Neural networks for machine learning lecture 6a overview of mini-batch gradient descent. Cited On 2012, 14.

- Islam, S.; Ammour, N.; Alajlan, N.; Abdullah-Al-Wadud, M. Selection of heart-biometric templates for fusion. IEEE Access 2017, 5, 1753–1761.

- Islam, M.S.; Alajlan, N. An efficient QRS detection method for ECG signal captured from fingers. In Proceedings of the 2013 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), San Jose, CA, USA, 15–19 July 2013; pp. 1–5.

- Islam, M.S.; Alajlan, N. Augmented-hilbert transform for detecting peaks of a finger-ECG signal. In Proceedings of the 2014 IEEE Conference on Biomedical Engineering and Sciences (IECBES), Kuala Lumpur, Malaysia, 8–10 December 2014; pp. 864–867.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 2818–2826.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).