Abstract

Based on well-established biopotential theory, we hypothesize that the high-frequency spectral content (e.g., above 100 Hz) of the EEG signal recorded by off-the-shelf EEG sensors contains muscle tone information. We show that an existing automatic sleep stage annotation algorithm can be improved by taking this information into account. This result suggests that, when possible, the EEG signal should be sampled at a high sampling rate so that as much spectral information as possible is preserved.

1. Introduction

Electroencephalography (EEG) is a widely used method for recording brain activity non-invasively. It has been extensively applied in various scientific and clinical settings and remains the gold standard for defining sleep stages. To visually identify these stages, electrooculography (EOG, eye movement) and surface electromyography (EMG, muscle activation/tone) are additionally recommended, specifically to distinguish non-rapid eye movement (NREM) sleep from rapid eye movement (REM) sleep. Typically, sleep laboratories record all of these signals to provide accurate sleep staging. For visualization purposes, the EEG signal is band-pass filtered at 0.3–35 Hz [1,2,3] according to the AASM criteria [4,5]. Furthermore, raw data exported from a commercial polysomnography (PSG) system might already have been filtered to the 0.3–35 Hz range. Owing to technological advances and a focus on large-scale applications, an increasing number of mobile devices can record EEG signals in home settings (e.g., single-channel amplifiers on the forehead, or in-ear/around-the-ear devices) [6,7,8,9]. These mobile solutions might not include EMG and EOG channels, and their raw data might be band-pass filtered for other purposes, such as data storage or transmission.

According to biopotential knowledge [10] of the spectral content of the action potentials conducted by skeletal muscle fibers, which contract (shorten) when stimulated, the spectral content of the EMG above 25 Hz contains muscle tone information. Anatomically, while there is no muscle across the top of the head (the epicranial aponeurosis), the occipitofrontalis muscle moves the scalp and eyebrows, and several EEG leads are placed over this muscle. In addition, while the amplitude of the EEG signal is of the order of 0.025–0.1 mV, the EMG signal is much larger, of the order of 0.1–100 mV. It is thus reasonable to infer that the high-frequency spectral content (e.g., above 80 Hz) of the EEG signal contains information related to surface EMG [11,12,13]. It is therefore natural to ask whether, if the sampling rate is high enough, we can obtain sufficient muscle tone information from the high-frequency range of the EEG signal and use it for research or clinical purposes. Previous researchers have considered spectral power around 80 Hz in their sleep scoring algorithms and called the 40–120 Hz range the EMG band [14,15], but, to the best of our knowledge, information above 80 Hz is rarely considered in sleep staging studies. Often, these frequencies are regarded as artifactual noise in the sleep EEG and discarded. Yet these “noisy” high-frequency bands might hold EMG-related information that helps sleep stage classification. To date, it is unclear whether this high-frequency content adds information that can improve an existing sleep stage classification algorithm. This question is particularly important when only one EEG channel is available, as in a mobile health setup, rather than the standard laboratory montage that includes EMG for sleep stage differentiation. Unlike in the hospital setting, every bit of available information should be preserved and utilized in such a setup.

In this paper, we illustrate that high-frequency spectral information might capture EMG information and is therefore a valuable tool for better differentiating sleep stages. According to sleep physiology [16,17], the EMG contains information necessary to distinguish REM from N1 (the transitional stage of NREM sleep). Specifically, a normal subject is atonic during REM, whereas muscle tone is relatively active during N1. Without the EMG, it might be challenging for a sleep expert, or a well-trained artificial intelligence (AI) system, to distinguish REM from N1. This difficulty is reflected in several state-of-the-art automatic sleep stage classification algorithms based on a single-channel EEG signal [18,19,20,21,22], in which the classification accuracy for N1 and REM is usually limited. Note that the EEG signals in the benchmark database commonly used in those papers, the Sleep-EDF Database (Expanded) [23], are sampled at 100 Hz; that is, spectral information above 50 Hz (the Nyquist frequency) is not available. Motivated by the above biopotential knowledge, in this report we hypothesize that an automatic sleep stage annotation system can classify N1 and REM more accurately if the high-frequency spectral information of the EEG signal is kept.
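As a quick illustration of why a 100 Hz sampling rate discards everything above 50 Hz, the sketch below samples a hypothetical 120 Hz sinusoid (a stand-in for a muscle component, not data from this study) at 100 Hz and at 500 Hz and reports the dominant frequency of each sampled signal. At 100 Hz the tone folds (aliases) to 20 Hz; in real recordings an anti-aliasing low-pass filter typically removes such content before sampling, so it is lost rather than folded. At 500 Hz the tone lies below the 250 Hz Nyquist frequency and is preserved. The use of NumPy and the helper names are assumptions of this sketch.

```python
import numpy as np


def sample_tone(freq_hz: float, fs: float, duration_s: float = 30.0) -> np.ndarray:
    """Sample a unit-amplitude sinusoid of freq_hz at rate fs for duration_s seconds."""
    t = np.arange(int(round(fs * duration_s))) / fs
    return np.sin(2.0 * np.pi * freq_hz * t)


def dominant_frequency(x: np.ndarray, fs: float) -> float:
    """Frequency (Hz) of the largest non-DC peak in the one-sided magnitude spectrum."""
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spectrum[0] = 0.0  # ignore the DC bin
    return float(freqs[np.argmax(spectrum)])


if __name__ == "__main__":
    for fs in (100.0, 500.0):
        x = sample_tone(120.0, fs)  # hypothetical 120 Hz component
        print(f"fs = {fs:.0f} Hz, Nyquist = {fs / 2:.0f} Hz, "
              f"apparent peak = {dominant_frequency(x, fs):.1f} Hz")
```

Only a sampling rate above twice the highest frequency of interest keeps that content distinguishable, which is why the 500 Hz recordings used here allow inspection up to 250 Hz.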

2. Method and Material

2.1. Automatic Sleep Stage Annotation Algorithm

To show the usefulness of high-frequency spectral information in the EEG, we consider an automatic sleep stage annotation algorithm recently developed by our group [22]. The algorithm consists of three steps: preprocessing, unsupervised feature extraction, and learning. In the preprocessing step, we only apply a 60 Hz notch filter; we do not apply detrending or artifact rejection, since the feature extraction algorithm summarized below handles these issues automatically.
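The 60 Hz notch step could be sketched as follows, assuming SciPy's `iirnotch` (a second-order IIR notch) applied forward and backward with `filtfilt` for zero phase. The quality factor `Q = 30` (roughly a 2 Hz stopband) and the zero-phase choice are illustrative assumptions, not the settings of the MATLAB implementation in [22]; `tone_amplitude` is a hypothetical helper used only to check the result.

```python
import numpy as np
from scipy.signal import filtfilt, iirnotch


def notch_60hz(eeg: np.ndarray, fs: float, quality: float = 30.0) -> np.ndarray:
    """Suppress 60 Hz power-line interference with a zero-phase IIR notch filter."""
    b, a = iirnotch(w0=60.0, Q=quality, fs=fs)
    return filtfilt(b, a, eeg)


def tone_amplitude(x: np.ndarray, fs: float, freq_hz: float) -> float:
    """Amplitude of the component at freq_hz (assumes it falls exactly on an FFT bin)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    k = int(np.argmin(np.abs(freqs - freq_hz)))
    return float(2.0 * np.abs(spectrum[k]) / x.size)


if __name__ == "__main__":
    fs = 500.0
    t = np.arange(int(30 * fs)) / fs
    # 10 Hz "EEG" rhythm plus 60 Hz line noise, both of unit amplitude
    x = np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 60 * t)
    y = notch_60hz(x, fs)
    print(tone_amplitude(y, fs, 10.0), tone_amplitude(y, fs, 60.0))
```

A narrow notch removes only the line frequency and leaves the high-frequency band above 60 Hz, which this study relies on, essentially untouched.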

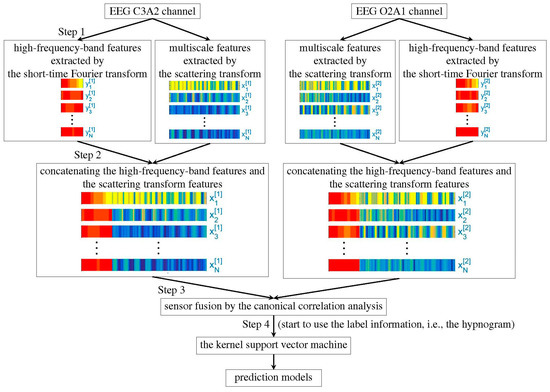

The feature extraction step is summarized in Figure 1. We first apply the recently developed scattering transform [24,25] to extract features from the EEG, filtered or unfiltered. These features are obtained by iteratively applying the continuous wavelet transform and a nonlinear modulus operator to the EEG signal. The extracted features have been proved to be stable to time-warping deformations [24,25]. Moreover, they can effectively characterize the self-similarity and intermittency properties of multiscale time series [26]. Although the scattering transform was motivated by establishing a mathematical foundation for the convolutional neural network, it does not rely on label information, so the feature extraction step of this algorithm is unsupervised. For unfiltered EEG signals, we further apply the short-time Fourier transform (STFT) to extract additional features from the high-frequency bands (35,80), (80,150), and (150,250) Hz. As indicated by Part 2 in Figure 1, the features obtained by the scattering transform and the STFT are merged by concatenation. Because each sleep stage is correlated with the stages preceding it, the temporal relationship is taken into account: for each 30 s epoch, we apply the scattering transform to a 90 s EEG signal consisting of the current 30 s epoch and the preceding 60 s. When two EEG channels are available, we apply canonical correlation analysis (CCA) [27] to merge the heterogeneous information extracted from the different channels and reduce its dimension (Part 3 in Figure 1). While modern dimension reduction methods, such as alternating diffusion maps [28], could also be considered, we focus on the traditional CCA algorithm to simplify the discussion.
Finally, we apply the well-established kernel support vector machine (SVM) [29] to learn the experts’ knowledge by classifying the CCA fused features (indicated by Part 4 in Figure 1).
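The construction of the 90 s context window and of the high-frequency STFT features can be sketched as below. This is a minimal Python illustration under stated assumptions, not the MATLAB code of [22]: the helper names are hypothetical, epochs lacking 60 s of history are zero-padded, the STFT uses a 2 s window (SciPy's default Hann window), and each band is summarized by the log of its mean power over STFT frames. The scattering transform itself is omitted.

```python
import numpy as np
from scipy.signal import stft

EPOCH_S = 30     # scoring epoch length (s)
HISTORY_S = 60   # preceding context prepended to each epoch (s)
HIGH_BANDS_HZ = [(35, 80), (80, 150), (150, 250)]


def context_window(eeg: np.ndarray, fs: float, epoch_idx: int) -> np.ndarray:
    """Return the 90 s segment made of the previous 60 s and the current 30 s epoch.

    Assumption: when fewer than 60 s of history exist, the missing part is zero-padded.
    """
    epoch_len = int(EPOCH_S * fs)
    window_len = int((EPOCH_S + HISTORY_S) * fs)
    end = (epoch_idx + 1) * epoch_len
    start = end - window_len
    segment = eeg[max(start, 0):end]
    if start < 0:
        segment = np.concatenate([np.zeros(-start), segment])
    return segment


def stft_band_features(segment: np.ndarray, fs: float, win_s: float = 2.0) -> np.ndarray:
    """Log mean STFT power in each high-frequency band (one value per band)."""
    freqs, _, Z = stft(segment, fs=fs, nperseg=int(win_s * fs))
    power = np.abs(Z) ** 2  # shape: (n_freqs, n_frames)
    feats = []
    for lo, hi in HIGH_BANDS_HZ:
        band = (freqs > lo) & (freqs <= hi)
        # total band power per frame, averaged over frames, then log-compressed
        feats.append(np.log(power[band].sum(axis=0).mean() + 1e-12))
    return np.array(feats)
```

In the pipeline described above, such per-band values would be concatenated with the scattering features of the same 90 s window before the CCA step.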

Figure 1.

Flowchart of the proposed algorithm.

Note that the high-frequency STFT features were not considered in our previous work [22]; otherwise, the algorithm is the same. We refer interested readers to that paper [22] for technical details. The code is implemented in MATLAB 2016a on a desktop PC with an Intel(R) Core(TM) i7-7820 3.6 GHz CPU and 64 GB of RAM, and is available upon request. The scattering transform used in this study might be of independent interest; interested readers can download the generic code from www.di.ens.fr/data/software/scatnet.

2.2. Statistics

To evaluate the spectral relationship between EMG and EEG, for each considered frequency band and sleep stage, we construct a dataset collecting the spectral energies over that frequency band of all 30 s EEG segments labeled as that sleep stage from all ten subjects. A similar dataset is constructed from the EMG segments. We then compute the Pearson correlation to study the linear relationship between these two datasets. To test the null hypothesis that the EMG and EEG spectral energies are uncorrelated, we apply a permutation test [30,31] with the significance level set to 0.05. To account for multiple testing, the p-value is conservatively adjusted by the Bonferroni correction.
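A minimal NumPy sketch of this correlation analysis follows: the Pearson correlation between paired EEG and EMG band energies, a two-sided permutation p-value obtained by shuffling the EMG values, and a Bonferroni decision rule. The number of permutations (10,000 by default here) and the seeded generator are assumptions, since the value used in the study is not stated in this text; the function names are hypothetical.

```python
import numpy as np


def pearson_permutation_test(x, y, n_perm=10_000, seed=0):
    """Pearson r and two-sided permutation p-value for H0: x and y are uncorrelated."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r_obs = np.corrcoef(x, y)[0, 1]
    exceed = 0
    for _ in range(n_perm):
        # shuffling y breaks the EEG/EMG pairing, simulating the null hypothesis
        r_perm = np.corrcoef(x, rng.permutation(y))[0, 1]
        if abs(r_perm) >= abs(r_obs):
            exceed += 1
    # add-one correction keeps the p-value strictly positive
    p_value = (exceed + 1) / (n_perm + 1)
    return float(r_obs), float(p_value)


def bonferroni_reject(p_values, alpha=0.05):
    """Reject H0 wherever p < alpha / m, with m the number of tests."""
    p = np.asarray(p_values, dtype=float)
    return p < alpha / p.size
```

With 36 tests, the per-test threshold is 0.05/36 ≈ 0.0014, the value used in Section 3.1. Note that with `n_perm` permutations the smallest attainable p-value is 1/(n_perm + 1), so the permutation count must be large enough for that threshold to be reachable.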

To evaluate how high-frequency spectral information affects the performance of automatic sleep stage annotation, we use leave-one-subject-out cross-validation (LOSOCV). In the LOSOCV scheme, the database is divided into a training set and a validation set that contain different subjects; in each fold, one subject is held out for validation. As discussed in our previous work [22], the LOSOCV scheme is challenged by inter-individual variability and is close to the real-world clinical setup.
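The LOSOCV loop could look like the scikit-learn sketch below, where `LeaveOneGroupOut` with subject IDs as groups guarantees that no subject contributes epochs to both the training and validation sets. Pooling the per-fold confusion matrices into a single matrix and using a default RBF SVM are assumptions for illustration; `losocv_confusion` is a hypothetical name.

```python
import numpy as np
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import LeaveOneGroupOut
from sklearn.svm import SVC


def losocv_confusion(features, labels, subject_ids, classes):
    """Pool the confusion matrices of all leave-one-subject-out folds."""
    features = np.asarray(features)
    labels = np.asarray(labels)
    pooled = np.zeros((len(classes), len(classes)), dtype=int)
    splitter = LeaveOneGroupOut()
    for train_idx, test_idx in splitter.split(features, labels, groups=subject_ids):
        clf = SVC(kernel="rbf", gamma="scale").fit(features[train_idx], labels[train_idx])
        pred = clf.predict(features[test_idx])
        # rows: expert labels, columns: predicted labels
        pooled += confusion_matrix(labels[test_idx], pred, labels=classes)
    return pooled
```

With ten subjects this yields ten folds, and every epoch is predicted exactly once by a model that never saw its subject during training.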

All performance measures used in this paper are computed from the unnormalized confusion matrix $C \in \mathbb{R}^{5 \times 5}$, since we have 5 sleep stages to classify. For $1 \le p, q \le 5$, the entry $C_{pq}$ represents the number of epochs assigned to class $p$ by the experts and predicted as class $q$. The precision (PR), recall (RE), and F1-score ($F_1$) of the $p$-th class, where $p = 1, \ldots, 5$, as well as the overall accuracy (ACC) and Cohen’s kappa ($\kappa$) coefficient, are computed from the confusion matrix. Finally, we evaluate the macro F1 score (Macro F1), which is the mean of the 5 F1-scores associated with the 5 sleep stages:

$$\text{Macro F1} = \frac{1}{5} \sum_{p=1}^{5} F_1^{(p)},$$

where $F_1^{(p)}$ denotes the F1-score of the $p$-th class.
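The per-class and overall measures can be computed from the confusion matrix as in the NumPy sketch below. It uses the standard textbook definitions (precision from column sums, recall from row sums, F1 as their harmonic mean, Cohen's kappa from observed versus chance agreement) rather than formulas quoted from this paper, with rows as expert labels and columns as predictions; `staging_metrics` is a hypothetical name. A class that is never predicted yields an undefined (NaN) precision.

```python
import numpy as np


def staging_metrics(C):
    """Per-class PR/RE/F1 and overall ACC, Cohen's kappa, and macro F1.

    C[p, q] counts epochs labeled p by the experts and predicted as q.
    """
    C = np.asarray(C, dtype=float)
    n = C.sum()
    tp = np.diag(C)
    row = C.sum(axis=1)  # expert-labeled totals per class
    col = C.sum(axis=0)  # predicted totals per class
    precision = tp / col
    recall = tp / row
    f1 = 2 * precision * recall / (precision + recall)
    acc = tp.sum() / n
    p_chance = (row * col).sum() / n**2  # agreement expected by chance
    kappa = (acc - p_chance) / (1 - p_chance)
    return {"PR": precision, "RE": recall, "F1": f1,
            "ACC": acc, "kappa": kappa, "macro_F1": f1.mean()}
```

For example, the two-class matrix `[[8, 2], [1, 9]]` gives ACC = 0.85 and kappa = 0.7; the same function applies unchanged to the 5 × 5 matrices in Tables 4 and 5.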

2.3. Database

The Institutional Review Board of Shuang Ho Hospital (SHH), Taipei Medical University, New Taipei City, Taiwan approved the study protocol (No. 101-4968A3). We consider a database from the sleep center at SHH collected from 2018/8/29 to 2019/4/26, in which the subjects were suspected of having sleep apnea syndrome but turned out to have a normal apnea-hypopnea index (AHI), that is, an AHI less than or equal to 5. A standard polysomnogram (PSG) study was performed with at least 6 h of sleep. The whole-night signals were recorded by a biosignal amplifier system from Embla (NeuroLite, Belp, Switzerland). Standard signals, such as EEG (F3-A2, F4-A1, O1-A2, O2-A1, C3-A2, and C4-A1, where A1 and A2 are the reference leads located 1 cm above the mastoid process), oral-nasal airflow, piezo-based abdominal and thoracic movement, electrocardiogram (ECG), electromyography (EMG), and photoplethysmography (PPG), were recorded. Here we focus on the EEG and EMG signals, which are recorded at a sampling rate of 500 Hz. Ten subjects were included, 5 males and 5 females. The overnight sleep stages of all 30 s epochs, including Awake, REM, N1, N2, and N3, were scored by two sleep experts following the American Academy of Sleep Medicine (AASM) standard [5]. For the 5 males, the age is 30.00 ± 7.84 years, the BMI is 24.09 ± 2.99 kg/m², and the AHI is 3.10 ± 1.57. For the 5 females, the age is 48.20 ± 12.15 years, the BMI is 21.95 ± 2.00 kg/m², and the AHI is 1.66 ± 1.57. The sleep recording time is 6.31 ± 0.64 h (males: 6.06 ± 0.14 h, range 5.89–6.28 h; females: 6.56 ± 0.87 h, range 5.91–7.96 h). Each subject’s EEG recording has two versions: in the first version, the high-frequency components of the EEG signals are removed by a low-pass filter at 50 Hz, while in the second version the signal is not filtered.

3. Results

3.1. Relationship with EMG

The EEG signal in our database is sampled at 500 Hz, so in theory we can inspect spectral information up to 250 Hz. According to biopotential theory, the spectral range of the EMG is about 25–5000 Hz, while that of the EEG is about 0.1–100 Hz. Therefore, to show how much muscle tone information is encoded in the EEG signal, we consider four spectral ranges: (0.5, 15), (15, 35), (35, 80), and (80, 250) Hz. The first three ranges are motivated by the EEG spectral ranges of interest, while the fourth is used to evaluate the EMG information encoded in the EEG. Since, to the best of our knowledge, there is no consensus on which high-frequency band is important, we further consider two finer ranges, (80, 150) and (150, 250) Hz. There are 7556 30 s segments in total. The relationships between the EMG and the central, occipital, and frontal EEGs are shown in Table 1, Table 2, and Table 3, respectively. A correlation value is marked by * or ** if the p-value is less than 0.05/36 ≈ 0.0014 (after Bonferroni correction) or , respectively. The higher the correlation coefficient, the stronger the association between the EEG and EMG.
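For reference, the band energies of a single 30 s segment sampled at 500 Hz could be computed as follows. This sketch assumes a plain periodogram (SciPy's `periodogram`, a one-sided power spectral density) integrated over each band and log-transformed; the spectral estimator actually used in the study is not specified here, and `band_log_energies` is a hypothetical name. Bands are treated as half-open intervals (lo, hi], and the upper edge is clipped at the Nyquist frequency.

```python
import numpy as np
from scipy.signal import periodogram

BANDS_HZ = [(0.5, 15), (15, 35), (35, 80), (80, 250), (80, 150), (150, 250)]


def band_log_energies(segment: np.ndarray, fs: float = 500.0) -> np.ndarray:
    """Log spectral energy of one segment in each band of BANDS_HZ."""
    freqs, psd = periodogram(segment, fs=fs)  # one-sided PSD, units^2 / Hz
    df = freqs[1] - freqs[0]                  # frequency resolution (1/30 Hz for 30 s)
    energies = []
    for lo, hi in BANDS_HZ:
        band = (freqs > lo) & (freqs <= min(hi, fs / 2))
        # integrate PSD over the band; the small constant avoids log(0)
        energies.append(np.log(psd[band].sum() * df + 1e-12))
    return np.array(energies)
```

Applying this function to each paired EEG and EMG segment, grouped by sleep stage, produces the datasets whose correlations are summarized in Tables 1 to 3.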

Table 1.

Pearson correlation coefficient between the EMG spectral energy and the C3-A2 EEG spectral energy within various frequency bands during different sleep stages. The spectral range of each band is the same for the EEG and EMG signals. The dataset consists of all 30 s EMG and EEG segments from the ten subjects.

Table 2.

Pearson correlation coefficient between the EMG spectral energy and the O2-A1 EEG spectral energy within various frequency bands during different sleep stages. The spectral range of each band is the same for the EEG and EMG signals. The dataset consists of all 30 s EMG and EEG segments from the ten subjects.

Table 3.

Pearson correlation coefficient between the EMG spectral energy and the F3-A2 EEG spectral energy within various frequency bands during different sleep stages. The spectral range of each band is the same for the EEG and EMG signals. The dataset consists of all 30 s EMG and EEG segments from the ten subjects.

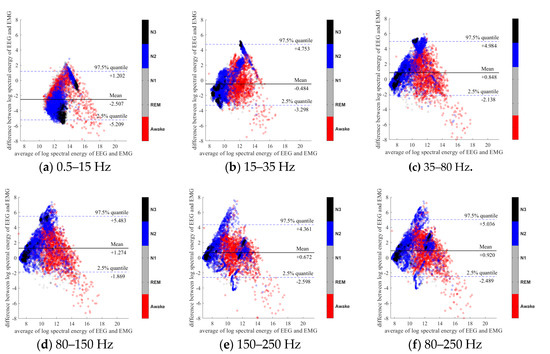

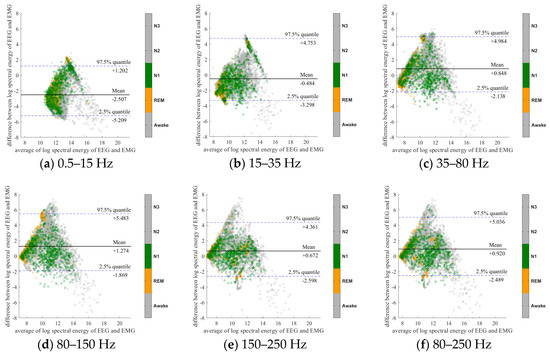

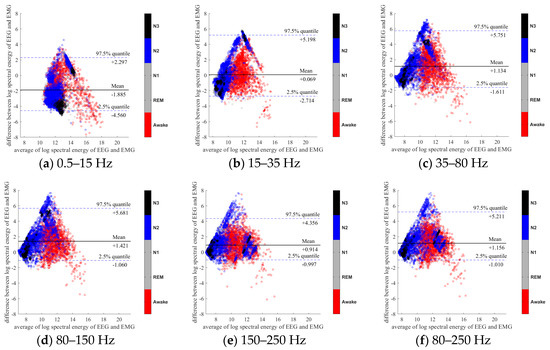

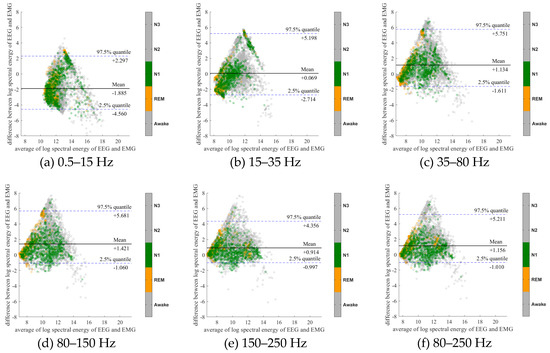

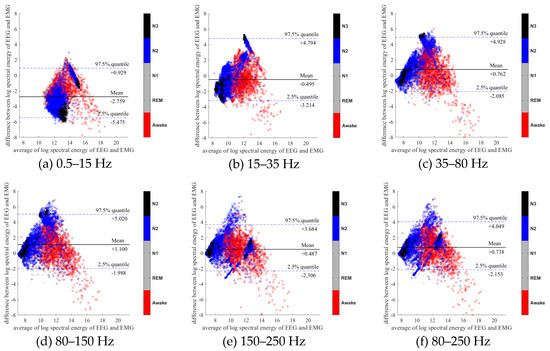

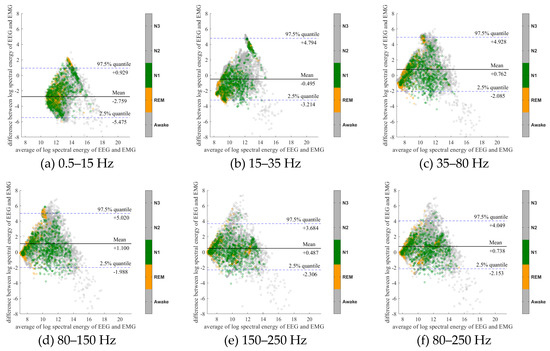

To further evaluate the agreement between the EEG and EMG spectra, we show Bland-Altman plots [32] in Figure 2, Figure 3, Figure 4, Figure 5, Figure 6, and Figure 7. Because Awake and N2 epochs are far more numerous than REM and N1 epochs, the REM and N1 dots would be hidden behind the Awake and N2 point clouds if all samples were plotted in a common window. Thus, to enhance visualization, each figure is divided into two subfigures containing the same dots in different colors. In the first (resp. second) subfigure, we highlight the distribution of the Awake, N2, and N3 (resp. REM and N1) point clouds by plotting the REM and N1 (resp. Awake, N2, and N3) points in gray as the background. We observe that the region covered by the REM and N1 dots is usually smaller than that covered by the Awake, N2, and N3 dots. Moreover, the energy over the frequency range (150,250) Hz has the smallest 95% confidence interval (from the 2.5% to the 97.5% quantile) compared with the energy over the other high-frequency bands, namely (35,80), (80,150), and (80,250) Hz. These results suggest that a substantial amount of muscle tone information can be obtained from the high-frequency spectral content of the EEG.
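The quantities behind each Bland-Altman plot could be computed as in the sketch below: for paired log energies, the mean of the two measurements (horizontal axis), their difference (vertical axis), the mean difference (bias), and an empirical 95% interval given by the 2.5% and 97.5% quantiles of the difference, matching the quantile-based interval described above. Taking the difference as EEG minus EMG is an assumption, `bland_altman` is a hypothetical helper, and plotting is omitted.

```python
import numpy as np


def bland_altman(log_eeg, log_emg):
    """Return (means, differences, bias, (q2.5, q97.5)) for paired log energies."""
    a = np.asarray(log_eeg, dtype=float)
    b = np.asarray(log_emg, dtype=float)
    means = (a + b) / 2.0   # horizontal axis of the plot
    diffs = a - b           # vertical axis of the plot
    lower, upper = np.quantile(diffs, [0.025, 0.975])
    return means, diffs, float(diffs.mean()), (float(lower), float(upper))
```

A narrower interval (upper minus lower) indicates closer agreement between the two log energies, which is the criterion used above to compare the (150,250) Hz band with the other high-frequency bands.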

Figure 2.

Bland-Altman plots of the logarithmic spectral energy of 30 s (C3-A2) EEG and (Chin) EMG signals. To enhance visualization, N1 and REM are plotted in gray in the background.

Figure 3.

Bland-Altman plots of the logarithmic spectral energy of 30 s (C3-A2) EEG and (Chin) EMG signals. To enhance visualization, Awake, N2, and N3 are plotted in gray in the background.

Figure 4.

Bland-Altman plots of the logarithmic spectral energy of 30 s (O2-A1) EEG and (Chin) EMG signals. To enhance visualization, N1 and REM are plotted in gray in the background.

Figure 5.

Bland-Altman plots of the logarithmic spectral energy of 30 s (O2-A1) EEG and (Chin) EMG signals. To enhance visualization, Awake, N2, and N3 are plotted in gray in the background.

Figure 6.

Bland-Altman plots of the logarithmic spectral energy of 30 s (F3-A2) EEG and (Chin) EMG signals. To enhance visualization, N1 and REM are plotted in gray in the background.

Figure 7.

Bland-Altman plots of the logarithmic spectral energy of 30 s (F3-A2) EEG and (Chin) EMG signals. To enhance visualization, Awake, N2, and N3 are plotted in gray in the background.

3.2. Automatic Sleep Stage Annotation Results

Table 4 is the confusion matrix obtained by applying the automatic annotation algorithm to the low-pass-filtered EEG signals, and Table 5 is the confusion matrix obtained by applying the algorithm to the original raw EEG signals. The increases in the main performance indices, namely the overall accuracy (from 78.70% to 79.52%), macro F1 score (from 66.97% to 70.30%), and Cohen’s kappa (from 0.68 to 0.70), show that the high-frequency spectral information in the unfiltered raw data is useful for sleep stage classification. Furthermore, we make the following detailed observations.

Table 4.

Confusion matrix obtained from 10-fold leave-one-subject-out cross-validation on EEG signals band-pass filtered over 0.5–50 Hz, extracted from the C3-A2 and O2-A1 channels. The overall accuracy is 78.70%, the macro F1 score is 66.97%, and Cohen’s kappa is 0.6803. The precision (PR), recall (RE), and F1 score of each sleep stage are given in the last three columns.

Table 5.

Confusion matrix obtained from 10-fold leave-one-subject-out cross-validation on unfiltered EEG signals extracted from the C3-A2 and O2-A1 channels. The overall accuracy is 79.52%, the macro F1 score is 70.30%, and Cohen’s kappa is 0.6972. The precision (PR), recall (RE), and F1 score of each sleep stage are given in the last three columns.

- For the REM stage, Table 4 shows that 8% and 23% of REM epochs were misclassified as N1 and N2, respectively, when the features extracted from the low-pass-filtered EEG signals are used for classification. Table 5 shows that after the high-frequency features extracted from the unfiltered raw data are taken into account, the misclassification rate of REM epochs decreases (7% of REM epochs were misclassified as N1 and 10% as N2).

- When the features extracted from the low-pass-filtered EEG signals are used for classification, the F1 scores of the REM and N1 stages are 66% and 29%, respectively. After the high-frequency features extracted from the unfiltered raw data are taken into account, the F1 scores of the REM and N1 stages increase to 78% and 34%, respectively.

Note that Table 1, Table 2, and Table 3 show that the spectral energies of the EEG and EMG signals within the frequency bands above 100 Hz, particularly the (150,250) Hz band, have a remarkably higher Pearson correlation on REM and N1 epochs than on Awake and N2 epochs. This suggests that part of the EMG information can be obtained from the unfiltered EEG signals during REM and N1 epochs, which may explain why the F1 scores of the REM and N1 stages increase after the high-frequency spectral information in the unfiltered raw data is taken into account.

4. Discussion and Conclusion

In this report, we show that high-frequency EEG spectral information, particularly in the 80–250 Hz band, can be recycled to gain muscle tone information, and that taking this information into account helps improve an existing automatic sleep stage annotation algorithm. This result suggests that, when possible, particularly outside the hospital or when extensive monitoring equipment is unavailable, the EEG signal should be sampled at a higher sampling rate so that as much high-frequency spectral information as possible can be recycled.

Physiologically, for a normal subject, the EEG signals during REM and N1 are indistinguishable. However, the subject is atonic during REM but not during N1, so sleep experts rely on the EMG to distinguish REM from N1. Moreover, the EMG provides information about sleep onset and macro-arousals during REM. As shown in Section 3.1, the high-frequency EEG spectrum encodes EMG information, particularly above 80 Hz, which indicates that high-frequency EEG spectral information can serve as a surrogate for muscle tone information. In Section 3.2, we show that high-frequency EEG spectral information does help improve the automatic annotation system. However, it is not clear from this study which information, such as the size, shape, or recruitment pattern of motor unit action potentials, is encoded in the high-frequency EEG spectrum. Also, the results show that EMG spectral information above 80 Hz is captured better, while EMG spectral information between 25 and 80 Hz is captured less well in the EEG signal, probably because of interference from brain activity. Finally, to the best of our knowledge, the relationship between the high-frequency EEG spectral band and the different sleep stages has been little studied.

There are several possible applications of recycling muscle tone information from the EEG signal. Recently, an increasing number of wearable biosignal amplifiers (e.g., around-the-ear devices and wearable headbands) have been developed to obtain large-scale data and allow in-field, long-term assessment of sleep [7,8,9]. An important aspect of these wearable devices is a non-obtrusive design, so a reduced number of electrodes is desirable. Specifically, electrodes around the chin for submental EMG assessment might be difficult to implement in a wearable design. Our results suggest a solution to this problem by showing that much EMG information is already contained in the high-frequency EEG energy, which can therefore partially replace a dedicated EMG recording.

Another application of our findings could be in the operating room. Specifically, the bispectral index (BIS) derived from the frontal EEG signal [33] is usually used in conjunction with the EMG to estimate the depth of anesthesia in order to minimize the occurrence of intraoperative awareness. Similarly, during procedural sedation [34], the EMG could be taken into account to control the sedation level and reduce side effects. A recent paper [35] shows that muscle tone information is directly related to the BIS, which also supports the potential of recycling muscle tone information from the EEG signal. In summary, when a BIS sensor is not available, we could rely on a biopotential sensor on the forehead to obtain both EEG and EMG information, which could enhance healthcare quality. Outside the hospital, drowsiness detection for the human control of motor vehicles or other facilities is an interesting application [36], since drowsiness is directly reflected in muscle tone. The approach could also be applied to psychiatry-related problems, such as monitoring stress release through yoga practice or meditation [37]. We will explore these applications in future work.

Author Contributions

Conceptualization, G.-R.L., C.L. and Y.-L.L.; Methodology, G.-R.L., Y.-C.S., H.-T.W.; Software, G.-R.L.; Validation, C.L., H.-T.W.; Formal Analysis, G.-R.L.; Investigation, Y.-L.L. and H.-T.W.; Resources, Y.-L.L. and W.-T.L.; Data Curation, Y.-L.L. and W.-T.L.; Writing—Original Draft Preparation, G.-R.L.; Writing—Review & Editing, C.L., Y.-L.L., Y.-C.S., H.-T.W.; Visualization, G.-R.L.; Supervision, Y.-L.L.; Project Administration, H.-T.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work is part of the Taiwan Integrated Database for Intelligent Sleep (TIDIS) project, supported by the Ministry of Science and Technology (109-2119-M-002-014-) and NCTS Taiwan. CL is supported by the Swiss National Science Foundation (PZ00P3_179795).

Conflicts of Interest

There is no conflict of interest.

References

- Frølich, L.; Dowding, I. Removal of muscular artifacts in EEG signals: A comparison of linear decomposition methods. Brain Inform. 2018, 5, 13–22.

- Larsen, L.H.; Moe, K.E.; Vitiello, M.V.; Prinz, P.N. Age trends in the sleep EEG of healthy older men and women. J. Sleep Res. 1995, 4, 160–172.

- Barlow, J.S. Muscle spike artifact minimization in EEGs by time-domain filtering. Electroencephalogr. Clin. Neurophysiol. 1983, 55, 487–491.

- Berry, R.B.; Brooks, R.; Gamaldo, C.E.; Harding, S.M.; Marcus, C.; Vaughn, B.V. The AASM manual for the scoring of sleep and associated events. In Rules, Terminology and Technical Specifications; American Academy of Sleep Medicine: Darien, IL, USA, 2012.

- Berry, R.B.; Budhiraja, R.; Gottlieb, D.J.; Gozal, D.; Iber, C.; Kapur, V.K.; Marcus, C.L.; Mehra, R.; Parthasarathy, S.; Quan, S.F.; et al. Rules for scoring respiratory events in sleep: Update of the 2007 AASM manual for the scoring of sleep and associated events. J. Clin. Sleep Med. 2012, 8, 597–619.

- Mikkelsen, K.B.; Tabar, Y.R.; Kappel, S.L.; Christensen, C.B.; Toft, H.O.; Hemmsen, M.C.; Rank, M.L.; Otto, M.; Kidmose, P. Accurate whole-night sleep monitoring with dry-contact ear-EEG. Sci. Rep. 2019, 9, 16824.

- Ferster, M.L.; Lustenberger, C.; Karlen, W. Configurable mobile system for autonomous high-quality sleep monitoring and closed-loop acoustic stimulation. IEEE Sens. Lett. 2019, 3, 1–4.

- Nakamura, T.; Alqurashi, Y.D.; Morrell, M.J.; Mandic, D.P. Hearables: Automatic Overnight Sleep Monitoring With Standardized In-Ear EEG Sensor. IEEE Trans. Biomed. Eng. 2019, 67, 203–212.

- Debener, S.; Emkes, R.; De Vos, M.; Bleichner, M. Unobtrusive ambulatory EEG using a smartphone and flexible printed electrodes around the ear. Sci. Rep. 2015, 5, 16743.

- Yazicioglu, R.F.; Van Hoof, C.; Puers, R. Biopotential Readout Circuits for Portable Acquisition Systems; Springer Science & Business Media, Springer: Dordrecht, The Netherlands, 2008.

- Tuncel, D.; Dizibuyuk, A.; Kiymik, M.K. Time frequency based coherence analysis between EEG and EMG activities in fatigue duration. J. Med. Syst. 2010, 34, 131–138.

- Pope, K.J.; Fitzgibbon, S.P.; Lewis, T.W.; Whitham, E.M.; Willoughby, J.O. Relation of gamma oscillations in scalp recordings to muscular activity. Brain Topogr. 2009, 22, 13–17.

- Cisotto, G.; Michieli, U.; Badia, L. A coherence study on EEG and EMG signals. arXiv 2017, arXiv:1712.01277.

- Levendowski, D.J.; Ferini-Strambi, L.; Gamaldo, C.; Cetel, M.; Rosenberg, R.; Westbrook, P.R. The accuracy, night-to-night variability, and stability of frontopolar sleep electroencephalography biomarkers. J. Clin. Sleep Med. 2017, 13, 791–803.

- Levendowski, D.J.; Louis, E.K.S.; Strambi, L.F.; Galbiati, A.; Westbrook, P.; Berka, C. Comparison of EMG power during sleep from the submental and frontalis muscles. Nat. Sci. Sleep 2018, 10, 431.

- Frauscher, B.; Iranzo, A.; Gaig, C.; Gschliesser, V.; Guaita, M.; Raffelseder, V.; Ehrmann, L.; Sola, N.; Salamero, M.; Tolosa, E.; et al. Normative EMG values during REM sleep for the diagnosis of REM sleep behavior disorder. Sleep 2012, 35, 835–847.

- Kim, H.; Choi, S. Automatic Sleep Stage Classification Using EEG and EMG Signal. In Proceedings of the 2018 Tenth International Conference on Ubiquitous and Future Networks (ICUFN), Prague, Czech Republic, 3–6 July 2018; pp. 207–212.

- Koley, B.; Dey, D. An ensemble system for automatic sleep stage classification using single channel EEG signal. Comput. Biol. Med. 2012, 42, 1186–1195.

- Tsinalis, O.; Matthews, P.M.; Guo, Y. Automatic sleep stage scoring using time-frequency analysis and stacked sparse autoencoders. Ann. Biomed. Eng. 2016, 44, 1587–1597.

- Supratak, A.; Dong, H.; Wu, C.; Guo, Y. DeepSleepNet: A model for automatic sleep stage scoring based on raw single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1998–2008.

- Vilamala, A.; Madsen, K.H.; Hansen, L.K. Deep convolutional neural networks for interpretable analysis of EEG sleep stage scoring. In Proceedings of the 2017 IEEE 27th International Workshop on Machine Learning for Signal Processing (MLSP), Tokyo, Japan, 25–28 September 2017; pp. 1–6.

- Liu, G.-R.; Lo, Y.-L.; Malik, J.; Sheu, Y.-C.; Wu, H.-T. Diffuse to fuse EEG spectra–Intrinsic geometry of sleep dynamics for classification. Biomed. Signal Process. Control 2020, 55, 101576.

- Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220.

- Mallat, S. Group invariant scattering. Commun. Pure Appl. Math. 2012, 65, 1331–1398.

- Andén, J.; Mallat, S. Deep scattering spectrum. IEEE Trans. Signal Process. 2014, 62, 4114–4128.

- Bruna, J.; Mallat, S.; Bacry, E.; Muzy, J.-F. Intermittent process analysis with scattering moments. Ann. Stat. 2015, 43, 323–351.

- Hardoon, D.R.; Szedmak, S.; Shawe-Taylor, J. Canonical correlation analysis: An overview with application to learning methods. Neural Comput. 2004, 16, 2639–2664.

- Talmon, R.; Wu, H.-T. Latent common manifold learning with alternating diffusion: Analysis and applications. Appl. Comput. Harmon. Anal. 2019, 47, 848–892.

- Scholkopf, B.; Smola, A.J. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; MIT Press: Cambridge, MA, USA, 2001.

- Efron, B.; Tibshirani, R.J. An Introduction to the Bootstrap; CRC Press: Boca Raton, FL, USA, 1994.

- Good, P. Permutation Tests: A Practical Guide to Resampling Methods for Testing Hypotheses; Springer-Verlag Series in Statistics: New York, NY, USA, 1994.

- Giavarina, D. Understanding Bland Altman analysis. Biochem. Med. 2015, 25, 141–151.

- Sigl, J.C.; Chamoun, N.G. An introduction to bispectral analysis for the electroencephalogram. J. Clin. Monit. 1994, 10, 392–404.

- Lo, Y.L.; Lin, T.Y.; Fang, Y.F.; Wang, T.Y.; Chen, H.C.; Chou, C.L.; Chung, F.T.; Kuo, C.H.; Feng, P.H.; Liu, C.Y.; et al. Feasibility of bispectral index-guided propofol infusion for flexible bronchoscopy sedation: A randomized controlled trial. PLoS ONE 2011, 6, e27769.

- Kim, D.; Ahn, J.H.; Jung, H.; Choi, K.Y.; Jeong, J.S. Effects of neuromuscular blockade reversal on bispectral index and frontal electromyogram during steady-state desflurane anesthesia: A randomized trial. Sci. Rep. 2019, 9, 1–7.

- Lawoyin, S. Novel Technologies for the Detection and Mitigation of Drowsy Driving. PhD Thesis, Biomedical Engineering Department, Virginia Commonwealth University, Richmond, VA, USA, 2014.

- Narayan, R.; Kamat, A.; Khanolkar, M.; Kamat, S.; Desai, S.R.; Dhume, R. Quantitative evaluation of muscle relaxation induced by Kundalini yoga with the help of EMG integrator. Indian J. Physiol. Pharm. 1990, 34, 279–281.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).