Head-Mounted Display-Based Microscopic Imaging System with Customizable Field Size and Viewpoint

Abstract

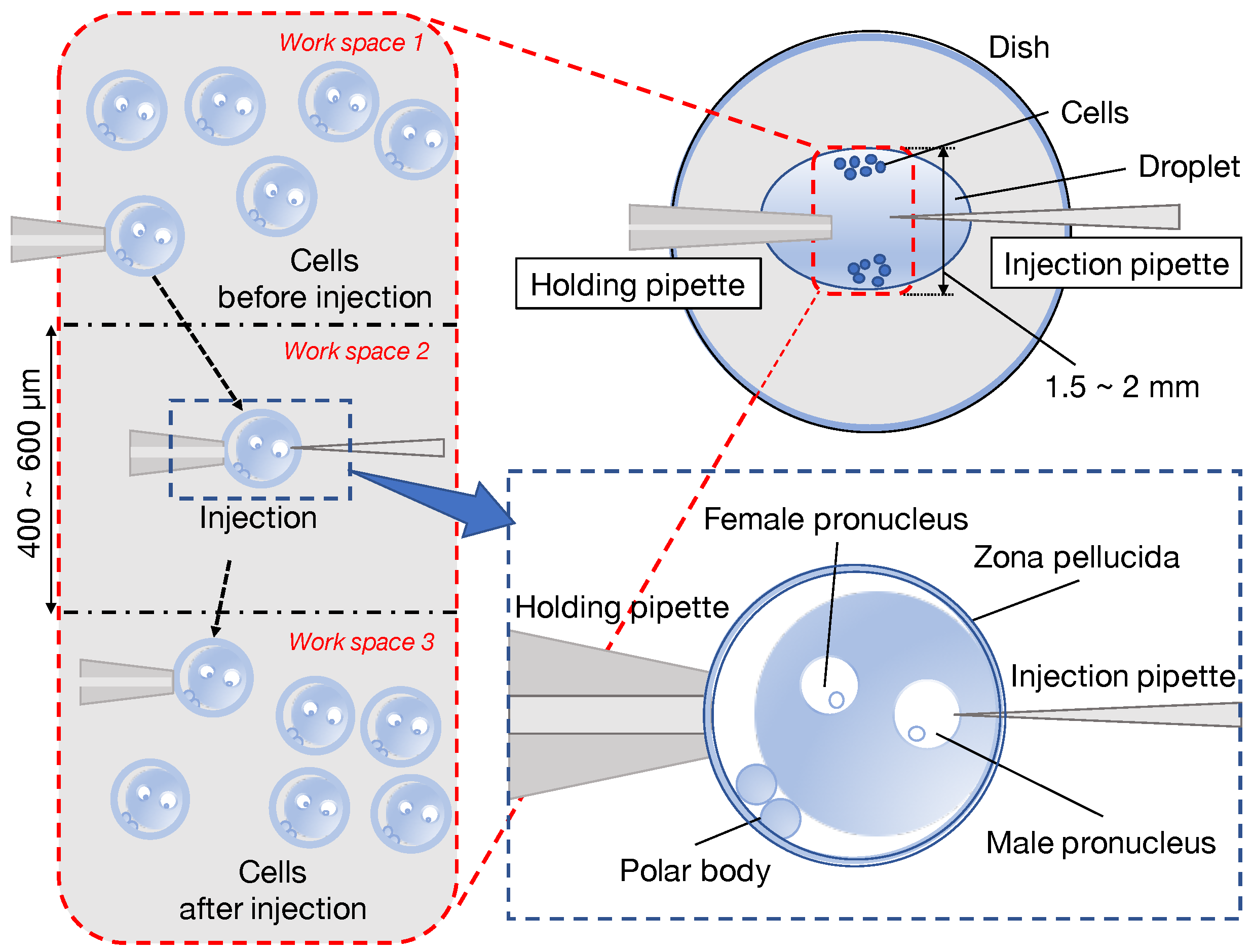

1. Introduction

2. Related Works

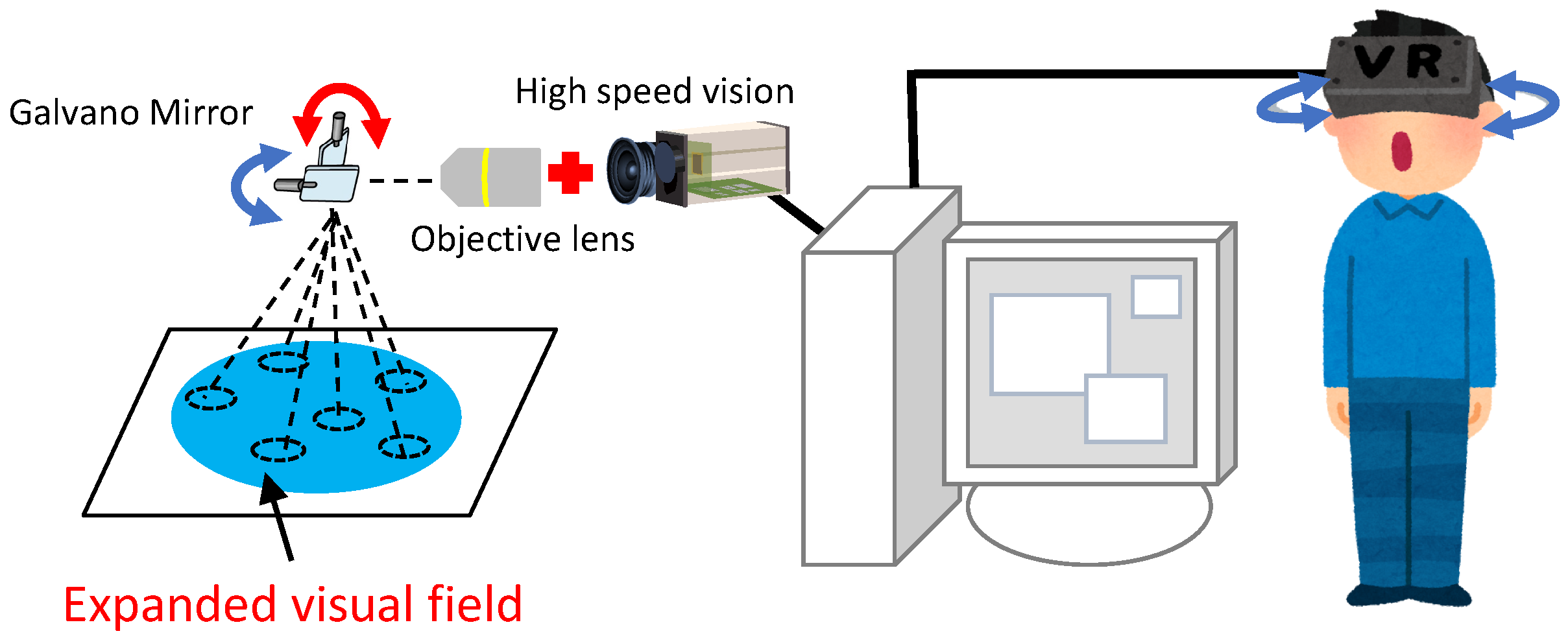

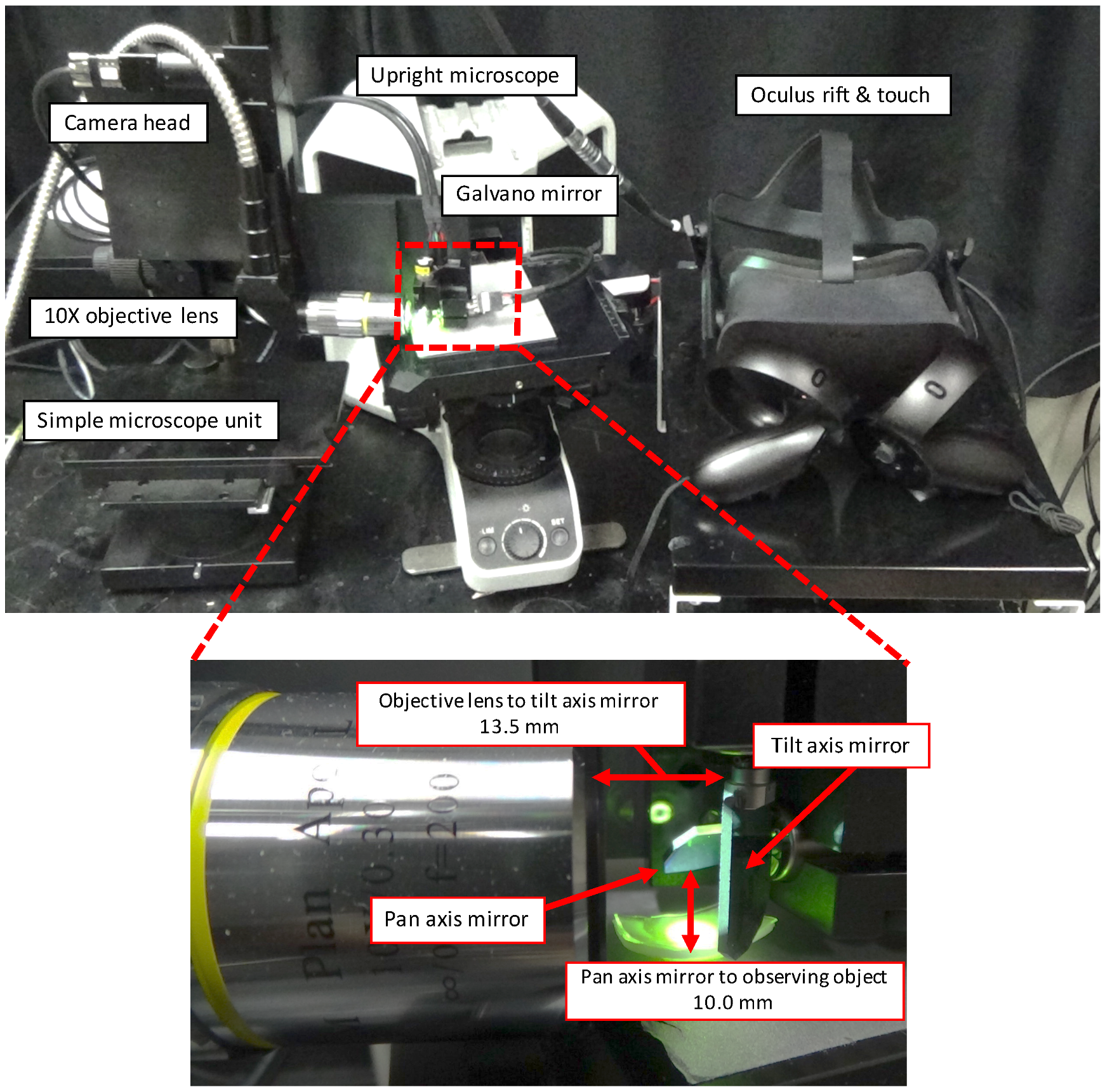

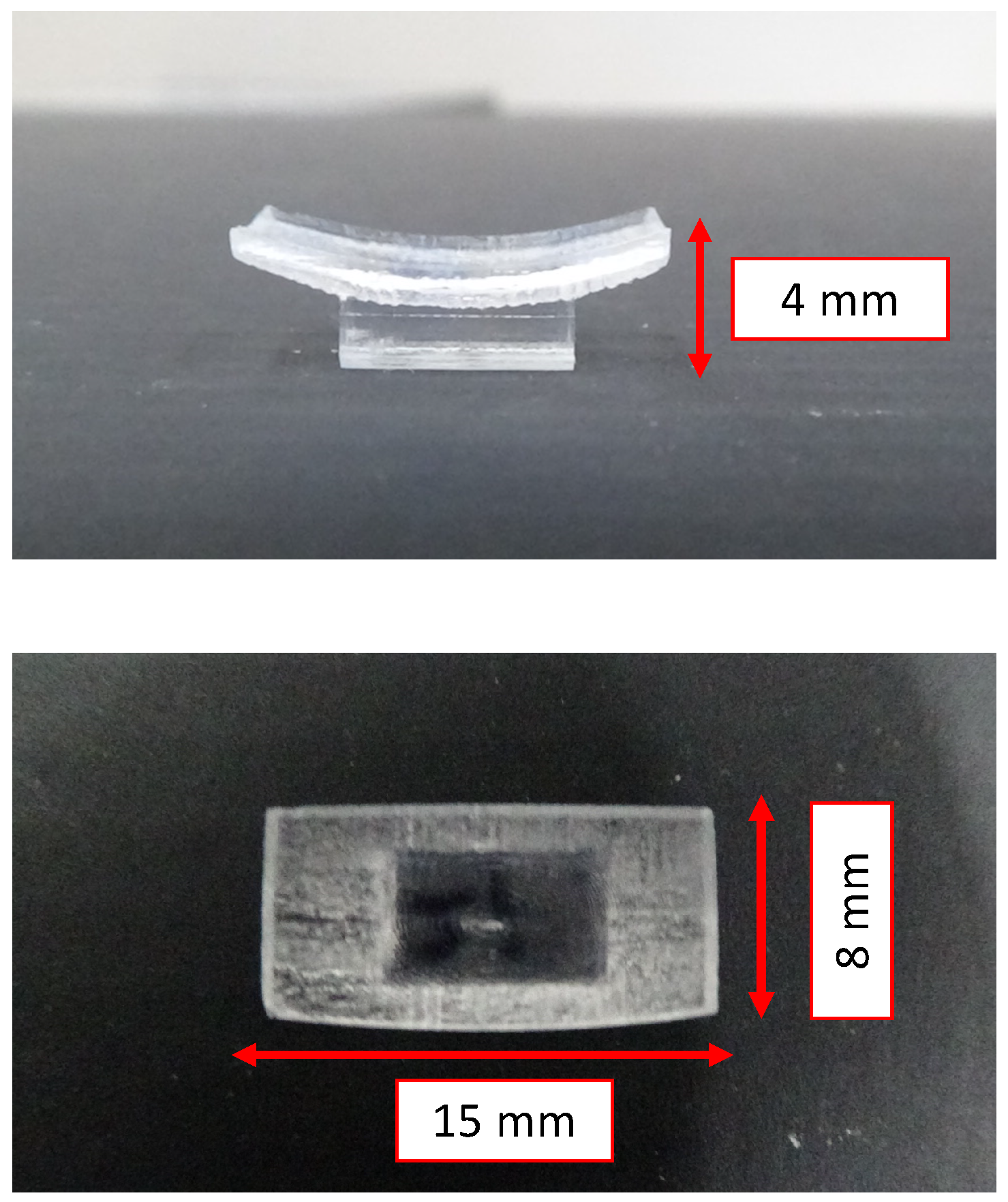

3. Microscopic Image Presentation Apparatus

4. Evaluation of Captured Microscopic Image Using the View-Expansive Microscope System

4.1. Evaluation Index

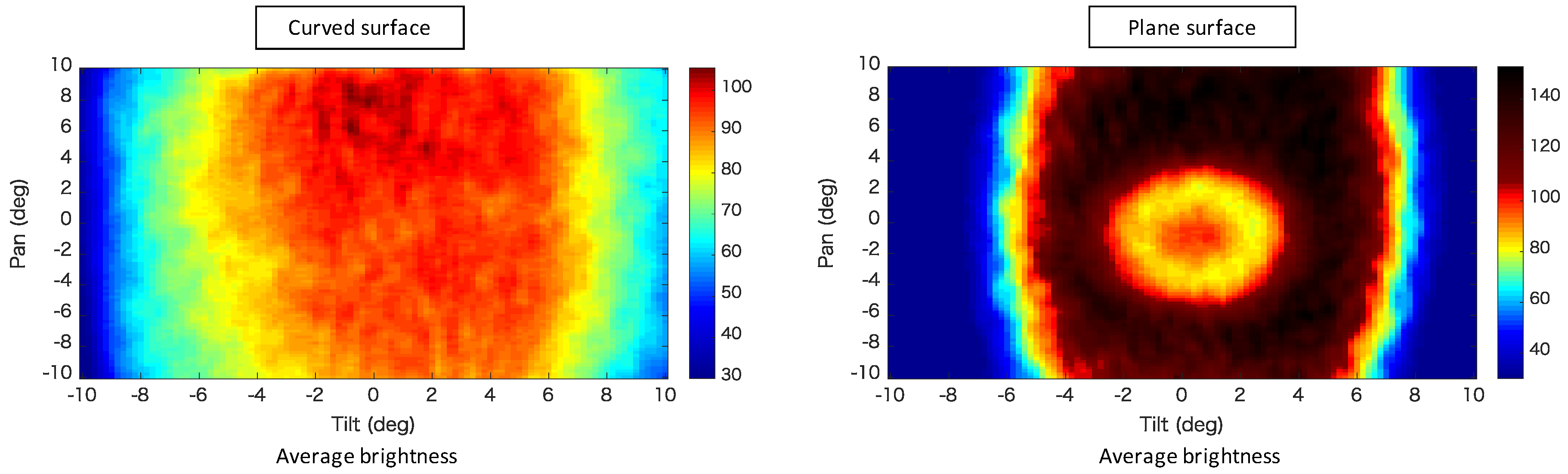

4.1.1. Average Brightness

4.1.2. Average Edge Intensity

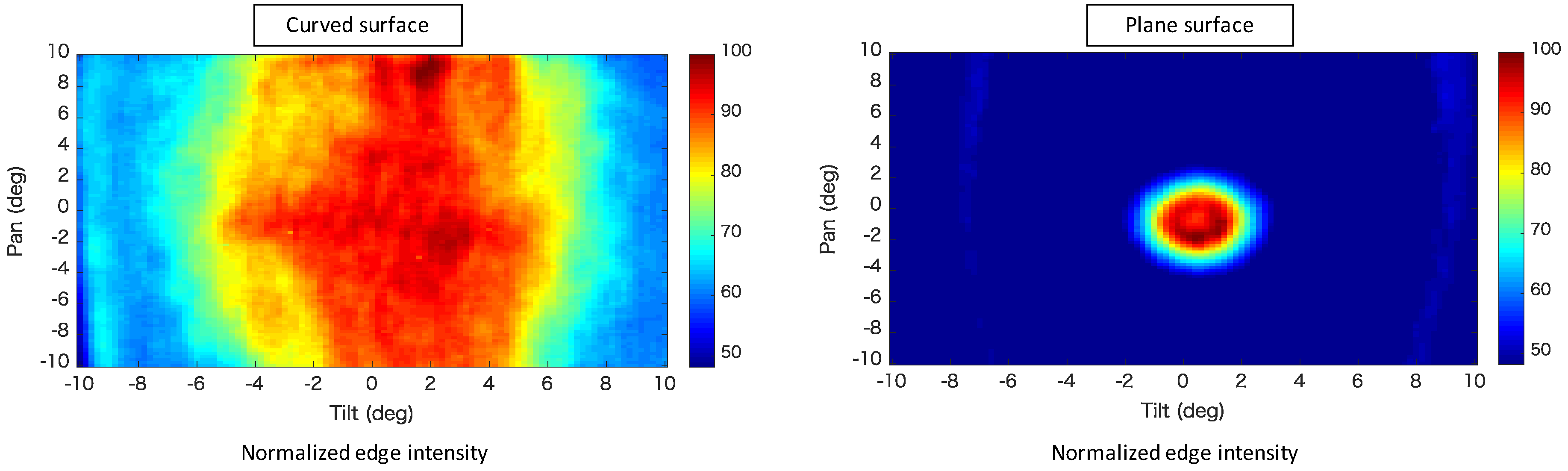

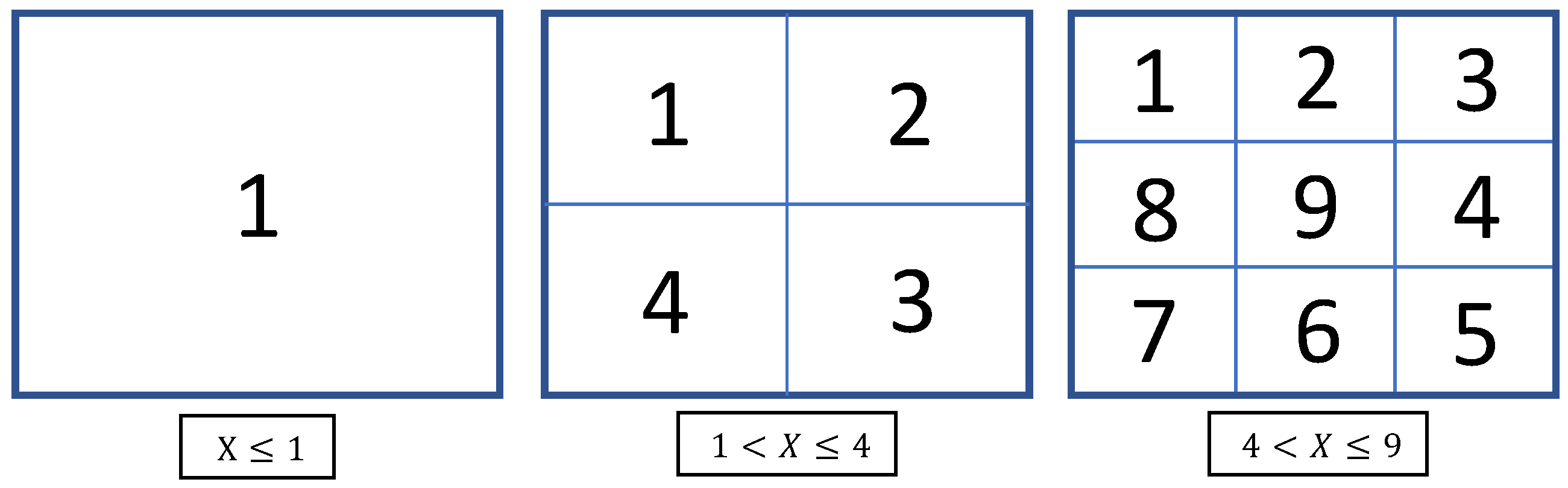

4.2. Evaluation of Captured Images

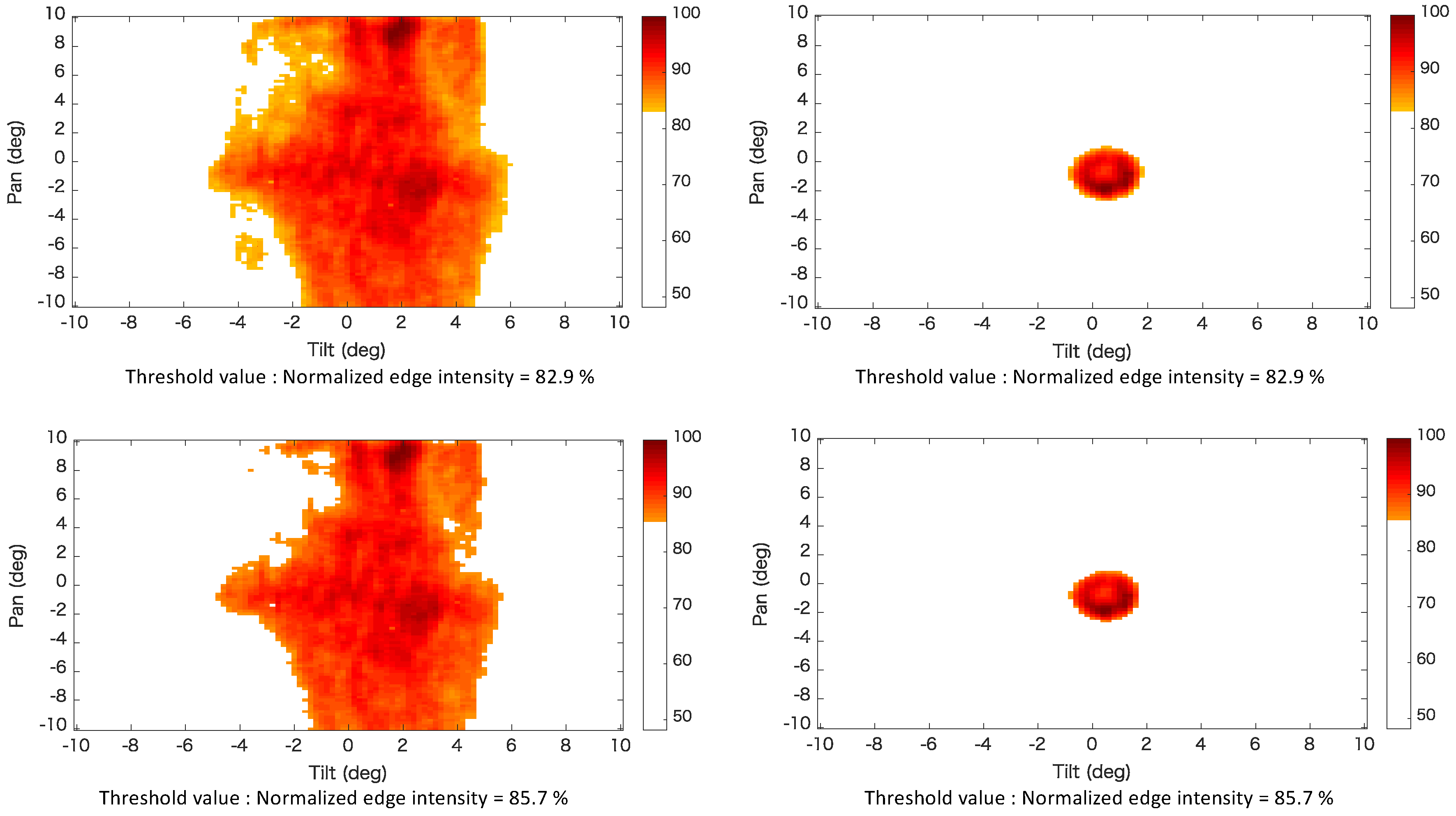

4.3. Comparison of Observable Area Results

5. Implemented Algorithm

5.1. Angle Control of Mirror: Viewpoint Adjustment

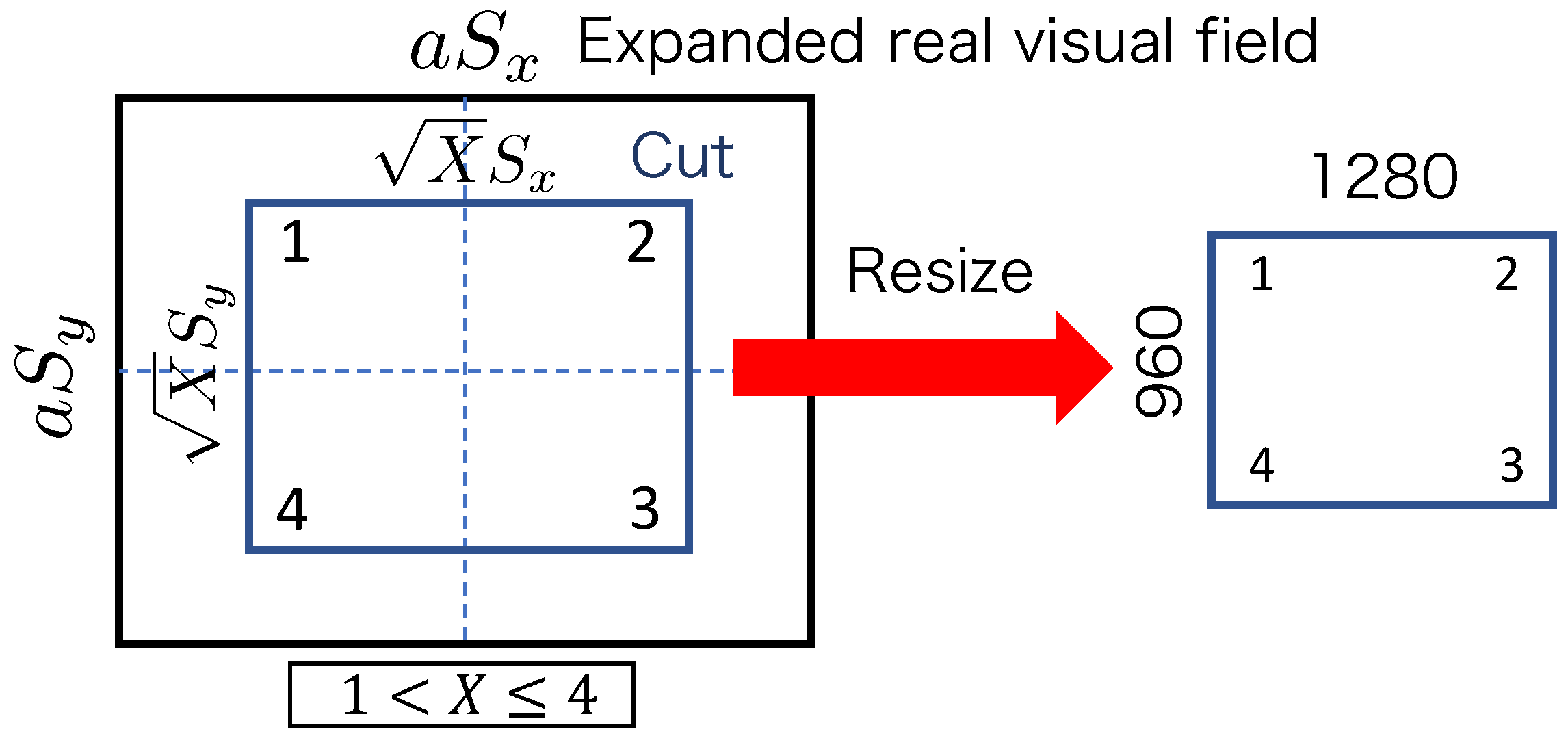

5.2. Creation of the View-Expanded Image of Desired Size

6. Observation Experiment

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Method of Observing | Observable Area (mm²) | Ratio of Observable Area |
|---|---|---|
| Normal | 0.144 | 1 |
| Plane ( = 82.9) | 3.017 | 21.0 |
| Plane ( = 85.7) | 2.714 | 18.8 |
| Variable-focus device ( = 82.9) | 45.161 | 313.6 |
| Variable-focus device ( = 85.7) | 37.645 | 261.4 |
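
The last column of the table above appears to be each observable area divided by the area of the normal view. A minimal sketch of that arithmetic follows, assuming the ratio is rounded to one decimal place; the dictionary keys and the function name `area_ratios` are illustrative shorthand, not names taken from the paper.

```python
# Observable-area values copied from the table above (units as given there).
# The keys are shorthand labels chosen for this sketch only.
observable_area = {
    "Normal": 0.144,
    "Plane (82.9)": 3.017,
    "Plane (85.7)": 2.714,
    "Variable-focus device (82.9)": 45.161,
    "Variable-focus device (85.7)": 37.645,
}


def area_ratios(areas, baseline="Normal"):
    """Divide every area by the baseline area and round to one decimal."""
    base = areas[baseline]
    return {name: round(value / base, 1) for name, value in areas.items()}


ratios = area_ratios(observable_area)
for name, ratio in ratios.items():
    print(f"{name}: {ratio}")
```

Under this assumption the computed values agree with the tabulated ones: about 21 and 19 times the normal view for the plane cases, and about 314 and 261 times for the variable-focus device.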

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aoyama, T.; Takeno, S.; Takeuchi, M.; Hasegawa, Y. Head-Mounted Display-Based Microscopic Imaging System with Customizable Field Size and Viewpoint. Sensors 2020, 20, 1967. https://doi.org/10.3390/s20071967