Edge4TSC: Binary Distribution Tree-Enabled Time Series Classification in Edge Environment

Abstract

1. Introduction

- A new framework, Edge4TSC, that allows time series to be processed on edge devices.

- A new time series representation method based on a binary distribution tree, which transforms the original time series into a hierarchical distribution space.

- Comprehensive experiments on six challenging time series datasets, with an analysis of how key factors (split ratio, number of bins, and BDT level) affect the classification accuracy of four classifiers (1NN, MLP, ResNet, and FCN).

2. Problem Formulation

- a set of class labels C;

- a training set of time series, in which each time series is associated with one class label; and

- a test set of time series, in which each time series is associated with one class label that is unknown during classification but available for evaluation.


- each time series is an ordered sequence of numerical values (i.e., every time point value must be a real number); and

- the class label of each time series must be included in the class label set C.

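- Maximize the classification accuracy, defined as the number of test time series whose predicted class label equals their true class label, divided by the total number of test time series.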
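
As a minimal sketch of the objective, the accuracy is the fraction of test time series whose predicted label matches the true label. The function name `accuracy` and the explicit check against the class label set C are illustrative assumptions, not the authors' code.

```python
from typing import Collection, Sequence

def accuracy(y_true: Sequence[str], y_pred: Sequence[str],
             classes: Collection[str]) -> float:
    """Fraction of test series whose predicted label equals the true label."""
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("need equally long, non-empty label sequences")
    # Labels are expected to come from the class label set C.
    for label in list(y_true) + list(y_pred):
        if label not in classes:
            raise ValueError(f"label {label!r} is not in the class label set")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)
```

For example, `accuracy(["a", "b", "b", "c"], ["a", "b", "c", "c"], {"a", "b", "c"})` returns 0.75, since three of the four predictions are correct.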

3. Methodology

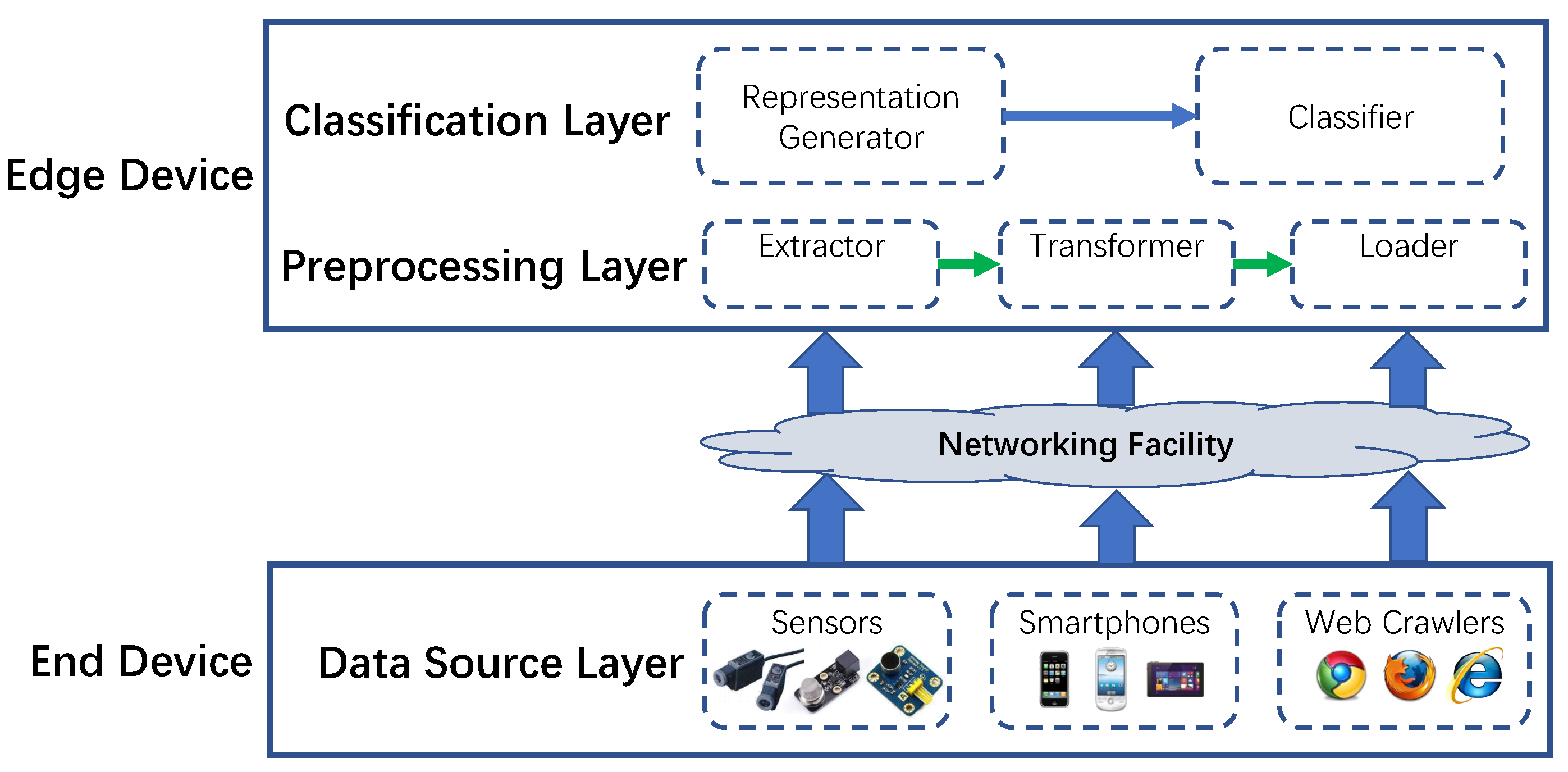

3.1. Overall Architecture

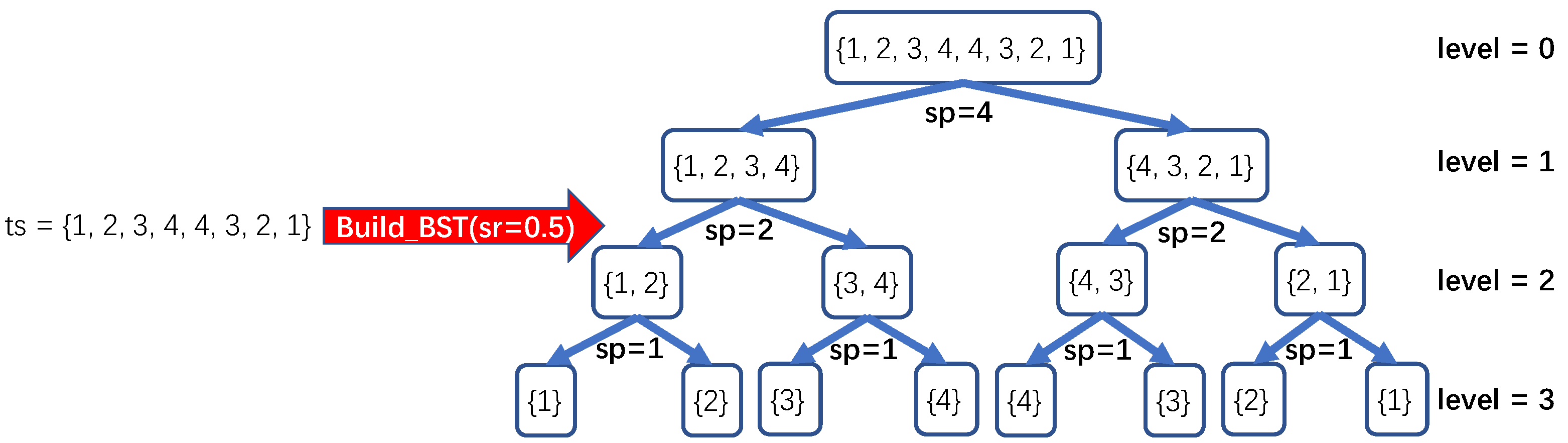

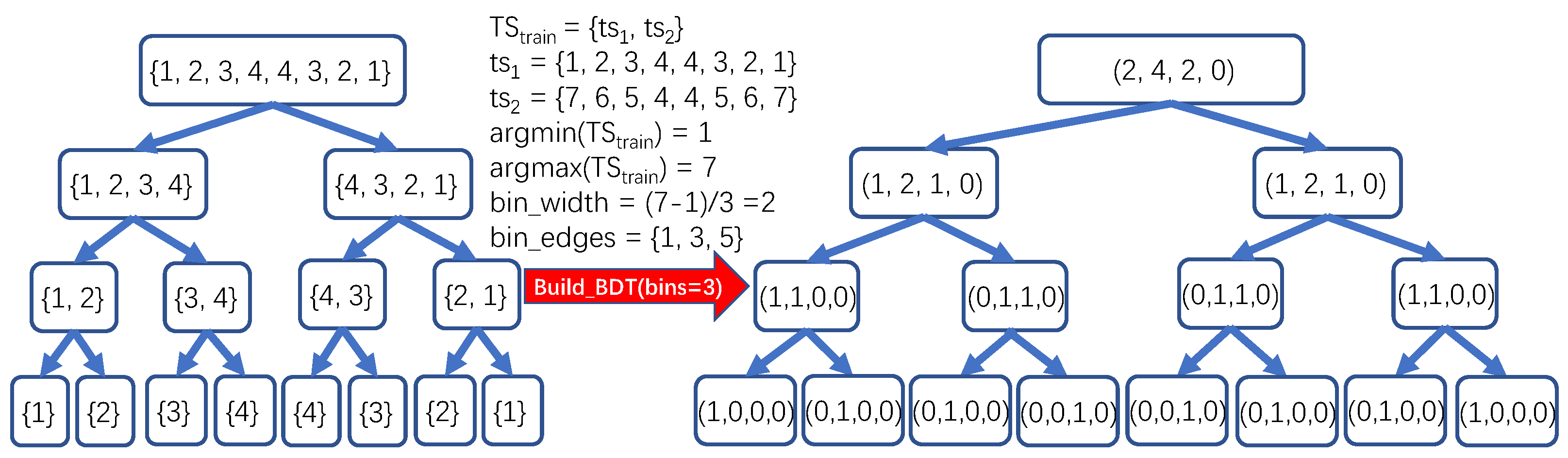

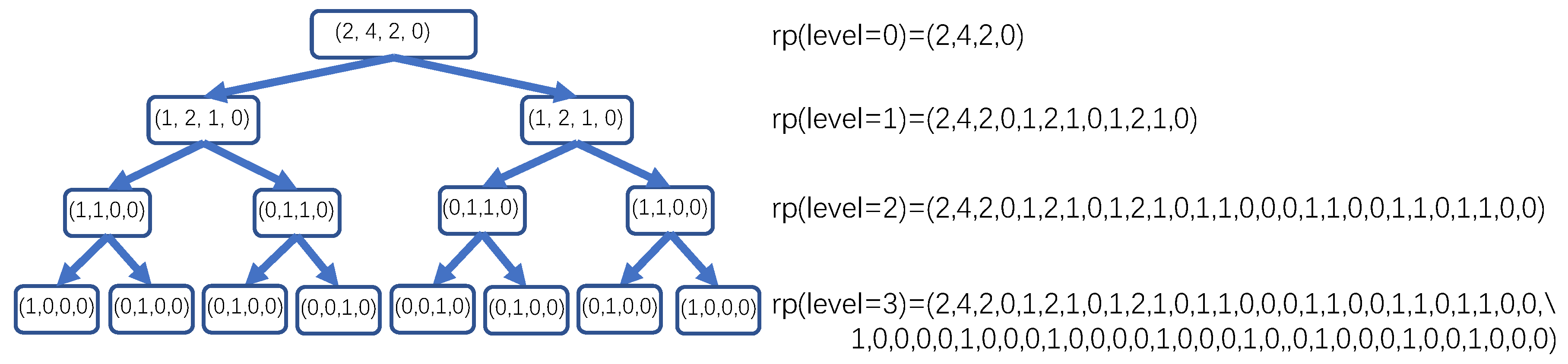

3.2. Time Series Representation Based on Binary Distribution Tree

| Algorithm 1 Build_BST |
|---|
| Input / Output |
| Initialisation |
| Iterative Process |
| Output: Binary Subsequence Tree |
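
A minimal Python sketch of Build_BST follows. It assumes a hypothetical split rule: a node of length n is cut at position p = int(r·n), where r is the split ratio from the symbol table, into a left child holding the first p points and a right child holding the rest, and splitting stops once the requested number of levels is reached. The name `build_bst` and its parameters are hypothetical.

```python
from typing import List, Sequence

def build_bst(series: Sequence[float], split_ratio: float,
              num_levels: int) -> List[List[List[float]]]:
    """Hypothetical Build_BST sketch: levels[k] holds the 2**k subsequences at level k.

    Assumed split rule (illustrative, not the authors' exact algorithm): a node of
    length n is cut at p = int(split_ratio * n), clamped to [1, n - 1], into a
    left child node[:p] and a right child node[p:].
    """
    if not 0.0 < split_ratio < 1.0:
        raise ValueError("split_ratio must lie in (0, 1)")
    if num_levels < 1:
        raise ValueError("num_levels must be at least 1")
    levels = [[list(series)]]  # level 0: the root holds the whole series
    for _ in range(1, num_levels):
        children = []
        for node in levels[-1]:
            n = len(node)
            if n < 2:
                raise ValueError("node too short to split; reduce num_levels")
            # Clamping keeps both children non-empty for any ratio in (0, 1).
            p = min(max(int(split_ratio * n), 1), n - 1)
            children.extend([node[:p], node[p:]])
        levels.append(children)
    return levels
```

For example, `build_bst([1, 2, 3, 4, 5, 6, 7, 8], 0.5, 3)` yields the levels `[[1, 2, 3, 4, 5, 6, 7, 8]]`, `[[1, 2, 3, 4], [5, 6, 7, 8]]`, and `[[1, 2], [3, 4], [5, 6], [7, 8]]`; a ratio other than 0.5 produces unbalanced children.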

| Algorithm 2 Build_BDT |
|---|
| Input / Output |
| Initialisation |
| Determining Bin Edges |
| Transforming into Distribution Space |
| Output: Binary Distribution Tree |
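
The two stages of Build_BDT can be sketched in Python as below. The sketch assumes equal-width bins spanning the global minimum and maximum of the training set, one normalized histogram (relative frequencies) per tree node, and a final representation that concatenates the node histograms from level 0 up to the chosen BDT level. The function names are hypothetical, and the tree is passed in as a list of levels, each a list of subsequences.

```python
from typing import List, Sequence

def bin_edges(train_set: Sequence[Sequence[float]], num_bins: int) -> List[float]:
    """Assumed rule: equal-width edges over the training set's global min/max."""
    if num_bins < 2:
        raise ValueError("num_bins must be at least 2")
    lo = min(min(s) for s in train_set)
    hi = max(max(s) for s in train_set)
    width = (hi - lo) / num_bins
    return [lo + i * width for i in range(num_bins + 1)]

def to_distribution(subseq: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Relative frequency of a (non-empty) subsequence's values in each bin."""
    num_bins = len(edges) - 1
    lo, hi = edges[0], edges[-1]
    width = (hi - lo) / num_bins
    counts = [0] * num_bins
    for v in subseq:
        idx = int((v - lo) / width) if width > 0 else 0
        # Values outside the training range are clamped into the end bins.
        counts[min(max(idx, 0), num_bins - 1)] += 1
    return [c / len(subseq) for c in counts]

def bdt_representation(levels: List[List[List[float]]],
                       edges: Sequence[float], level: int) -> List[float]:
    """Concatenate node distributions from level 0 up to and including `level`."""
    features: List[float] = []
    for nodes in levels[: level + 1]:
        for node in nodes:
            features.extend(to_distribution(node, edges))
    return features
```

With training values spanning [0, 8] and four bins, the edges are [0, 2, 4, 6, 8], and a root node [0, 1, 5, 8] maps to [0.5, 0.0, 0.25, 0.25]. Clamping out-of-range values keeps each distribution summing to 1 even when a test series exceeds the training range.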

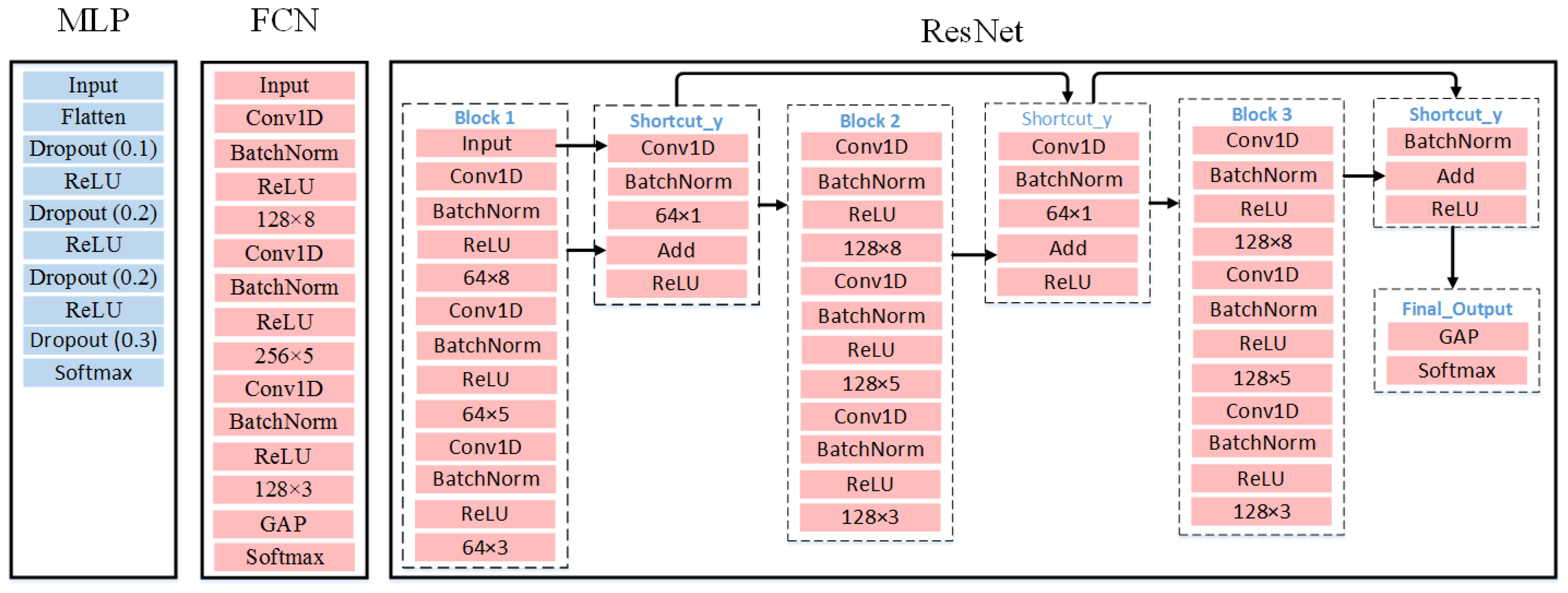

3.3. Classifier Selection and Design

4. Evaluation

4.1. Experimental Settings

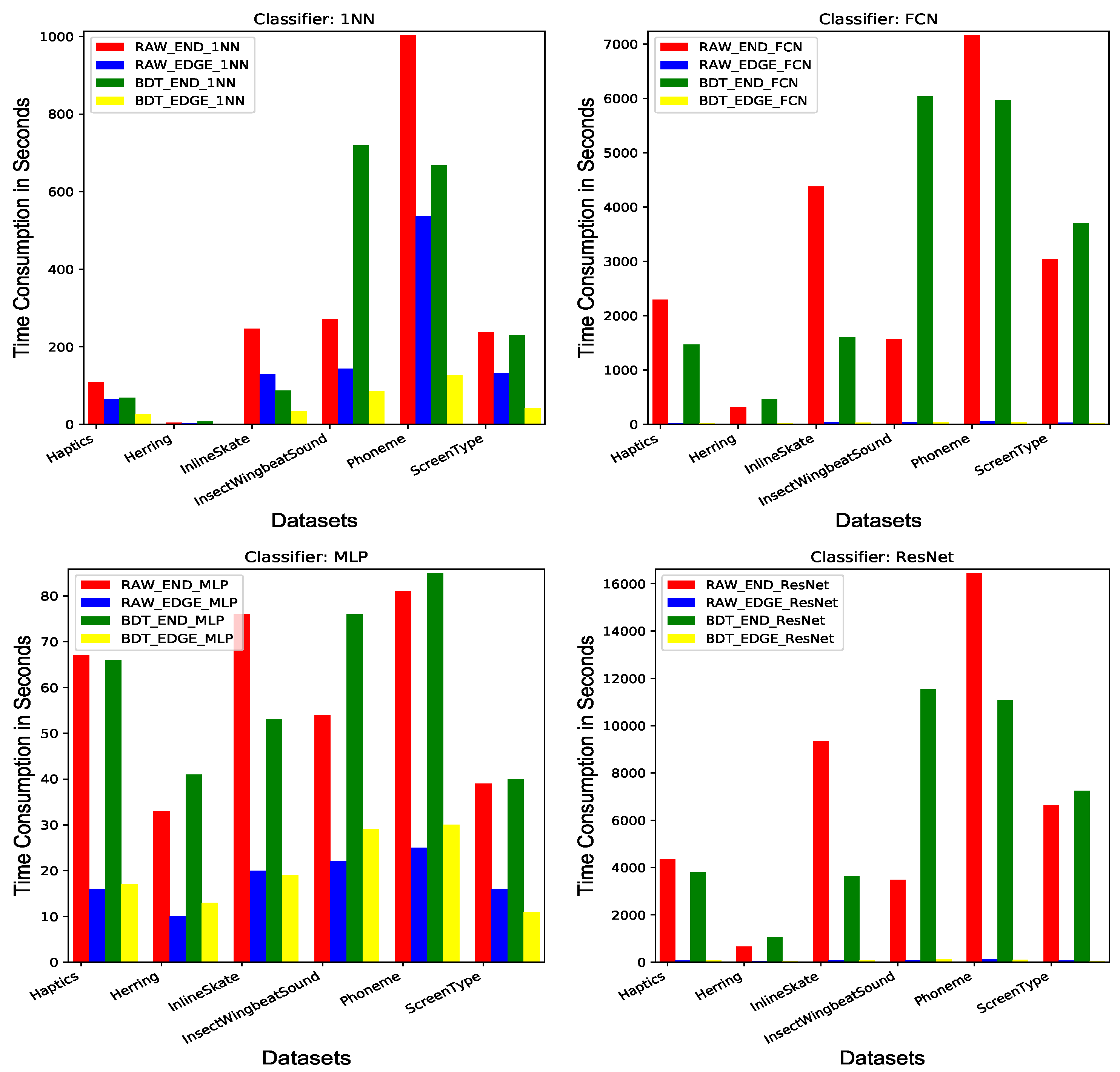

4.2. Overall Results and Analysis

4.3. Impact of Split Ratio on TSC Accuracy

4.4. Impact of Bin Number on TSC Accuracy

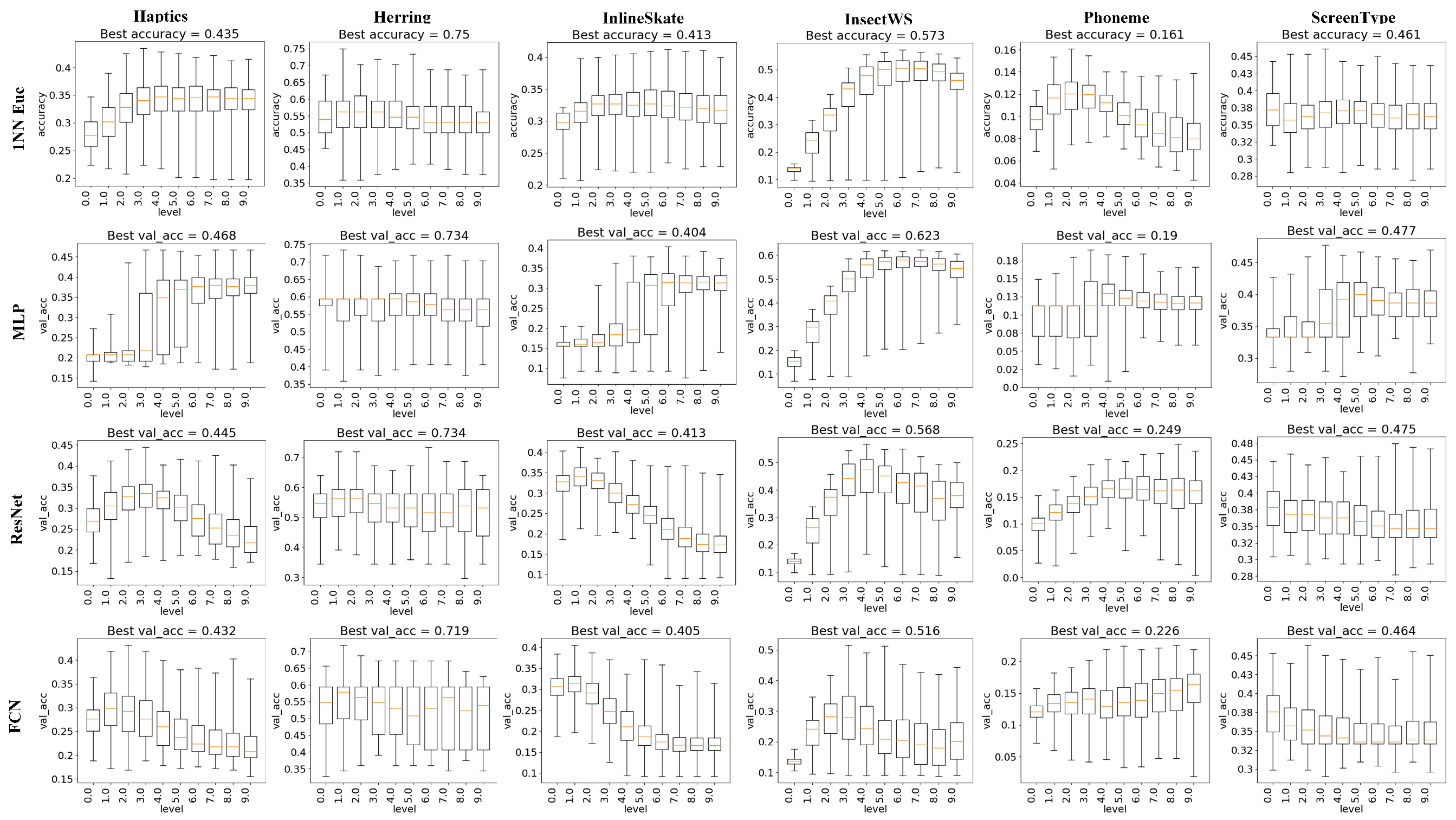

4.5. Impact of BDT Level on TSC Accuracy

5. Related Work

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TSC | Time Series Classification |
| BST | Binary Segment Tree |
| BDT | Binary Distribution Tree |
| 1NN | 1 Nearest Neighbor |
| MLP | Multi-Layer Perceptron |
| FCN | Fully Convolutional Network |
| ResNet | Residual Network |


| Symbol | Description | Range |
|---|---|---|
| | Split ratio for determining the split position for the current node. | (0.00, 1.00) |
| | Number of bins for calculating the width of each bin. | (1, ∞) |
| | BDT level such that all nodes from level 0 up to this level are concatenated to generate the representation; L is the total number of BDT levels. | [0, L) |
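
The BDT level also fixes the length of the representation. Writing b for the number of bins and l for the chosen BDT level (notation assumed here), and assuming every node above level l is split into exactly two children, level k holds 2^k nodes, so the concatenated representation has dimension:

```latex
d \;=\; b \sum_{k=0}^{l} 2^{k} \;=\; b\,\bigl(2^{l+1} - 1\bigr),
\qquad \text{e.g. } b = 10,\; l = 3 \;\Rightarrow\; d = 10 \times 15 = 150.
```

Under this assumption, each additional level roughly doubles the feature length, which bears directly on memory use on edge devices.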

| Dataset | No. of Classes | Train/Test Size | Series Length | Domain |
|---|---|---|---|---|
| Haptics | 5 | 155/308 | 1092 | Passgraph Identification |
| Herring | 2 | 64/64 | 512 | Otolith Analysis |
| InlineSkate | 7 | 100/550 | 1882 | In-Line Speed Skating |
| InsectWingbeatSound | 11 | 220/1980 | 256 | Flying Insect Classification |
| Phoneme | 39 | 214/1896 | 1024 | Phoneme Classification |
| ScreenType | 3 | 375/375 | 720 | Screen Type Identification |

| Dataset | 1NN RAW | 1NN BDT | MLP RAW | MLP BDT | ResNet RAW | ResNet BDT | FCN RAW | FCN BDT |
|---|---|---|---|---|---|---|---|---|
| Haptics | 0.370 | 0.435 | 0.419 | 0.468 | 0.377 | 0.445 | 0.334 | 0.432 |
| Herring | 0.516 | 0.750 | 0.594 | 0.734 | 0.625 | 0.734 | 0.422 | 0.719 |
| InlineSkate | 0.342 | 0.413 | 0.336 | 0.404 | 0.187 | 0.413 | 0.187 | 0.405 |
| InsectWingbeatSound | 0.562 | 0.573 | 0.618 | 0.623 | 0.505 | 0.568 | 0.244 | 0.516 |
| Phoneme | 0.109 | 0.161 | 0.087 | 0.190 | 0.319 | 0.249 | 0.249 | 0.226 |
| ScreenType | 0.360 | 0.461 | 0.397 | 0.477 | 0.605 | 0.475 | 0.619 | 0.464 |
| Average Accuracy | 0.377 | 0.466 | 0.409 | 0.483 | 0.436 | 0.481 | 0.342 | 0.460 |
| Standard Deviation | 0.159 | 0.194 | 0.193 | 0.187 | 0.172 | 0.162 | 0.158 | 0.160 |
| Win | 0 | 6 | 0 | 5 | 2 | 4 | 2 | 4 |
| Tie | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 |
| Lose | 6 | 0 | 5 | 0 | 4 | 2 | 4 | 2 |
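
The Average Accuracy and Standard Deviation rows appear consistent with the arithmetic mean and the sample (n − 1) standard deviation of each column over the six datasets. A short check using Python's standard `statistics` module recomputes them from the per-dataset values in the table:

```python
from statistics import mean, stdev

# Per-dataset accuracies from the results table, in the order Haptics, Herring,
# InlineSkate, InsectWingbeatSound, Phoneme, ScreenType.
results = {
    ("1NN", "RAW"): [0.370, 0.516, 0.342, 0.562, 0.109, 0.360],
    ("1NN", "BDT"): [0.435, 0.750, 0.413, 0.573, 0.161, 0.461],
    ("MLP", "RAW"): [0.419, 0.594, 0.336, 0.618, 0.087, 0.397],
    ("MLP", "BDT"): [0.468, 0.734, 0.404, 0.623, 0.190, 0.477],
    ("ResNet", "RAW"): [0.377, 0.625, 0.187, 0.505, 0.319, 0.605],
    ("ResNet", "BDT"): [0.445, 0.734, 0.413, 0.568, 0.249, 0.475],
    ("FCN", "RAW"): [0.334, 0.422, 0.187, 0.244, 0.249, 0.619],
    ("FCN", "BDT"): [0.432, 0.719, 0.405, 0.516, 0.226, 0.464],
}

for (clf, rep), accs in results.items():
    # stdev() divides by n - 1 (sample standard deviation).
    print(f"{clf:<6} {rep}: mean={mean(accs):.3f}  sd={stdev(accs):.3f}")
```

Switching `stdev` to `statistics.pstdev` (population standard deviation) gives noticeably smaller values than the table reports, e.g. about 0.145 instead of 0.159 for 1NN on RAW.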

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ma, C.; Shi, X.; Li, W.; Zhu, W. Edge4TSC: Binary Distribution Tree-Enabled Time Series Classification in Edge Environment. Sensors 2020, 20, 1908. https://doi.org/10.3390/s20071908
