A Novel Intelligent Fault Diagnosis Method for Rolling Bearing Based on Integrated Weight Strategy Features Learning

Abstract

1. Introduction

2. Stacked Sparse Auto-Encoders and Softmax Classifier

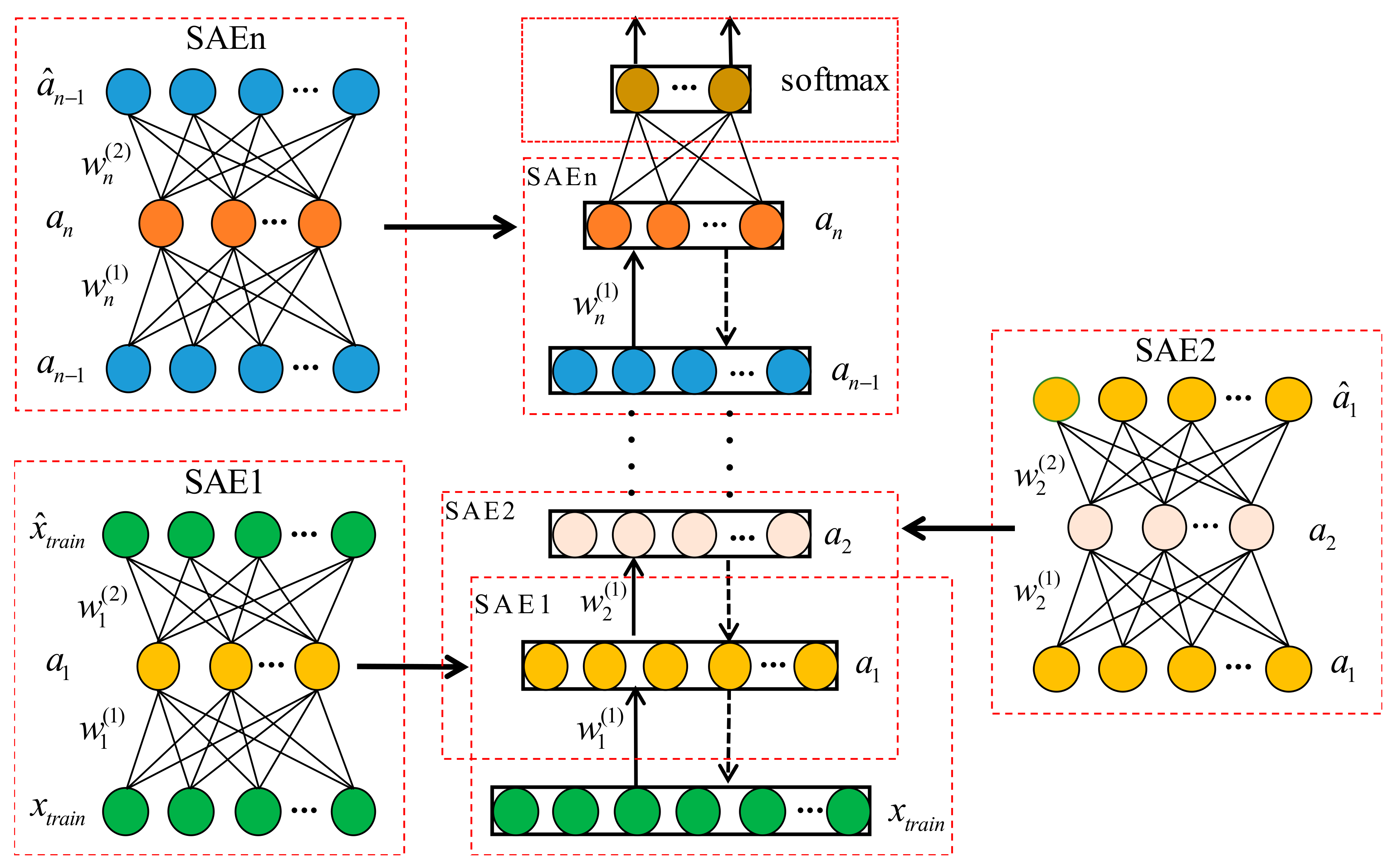

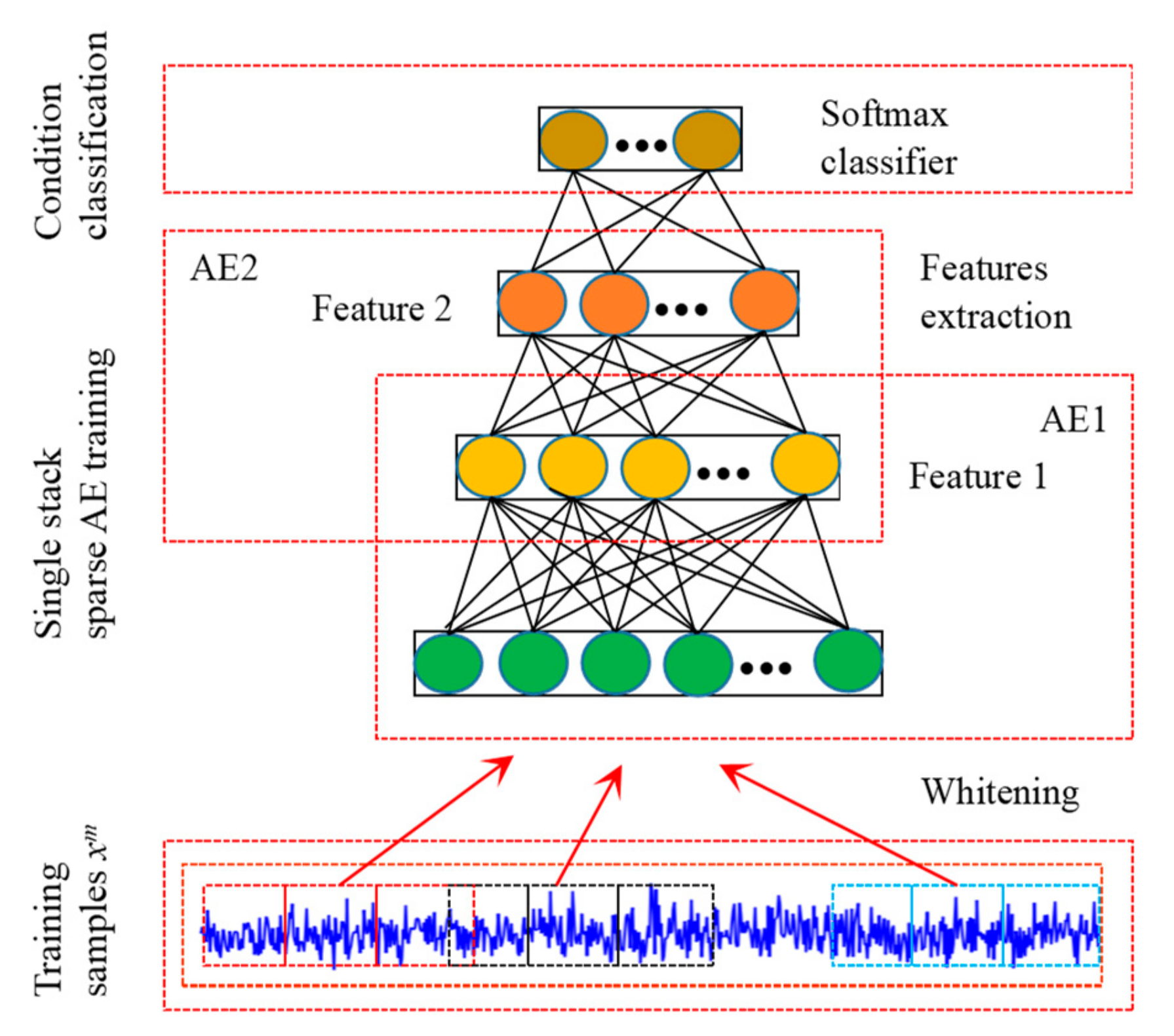

2.1. Stacked Sparse Auto-Encoders

2.2. Sparse Auto-Encoder

2.3. Softmax Classifier

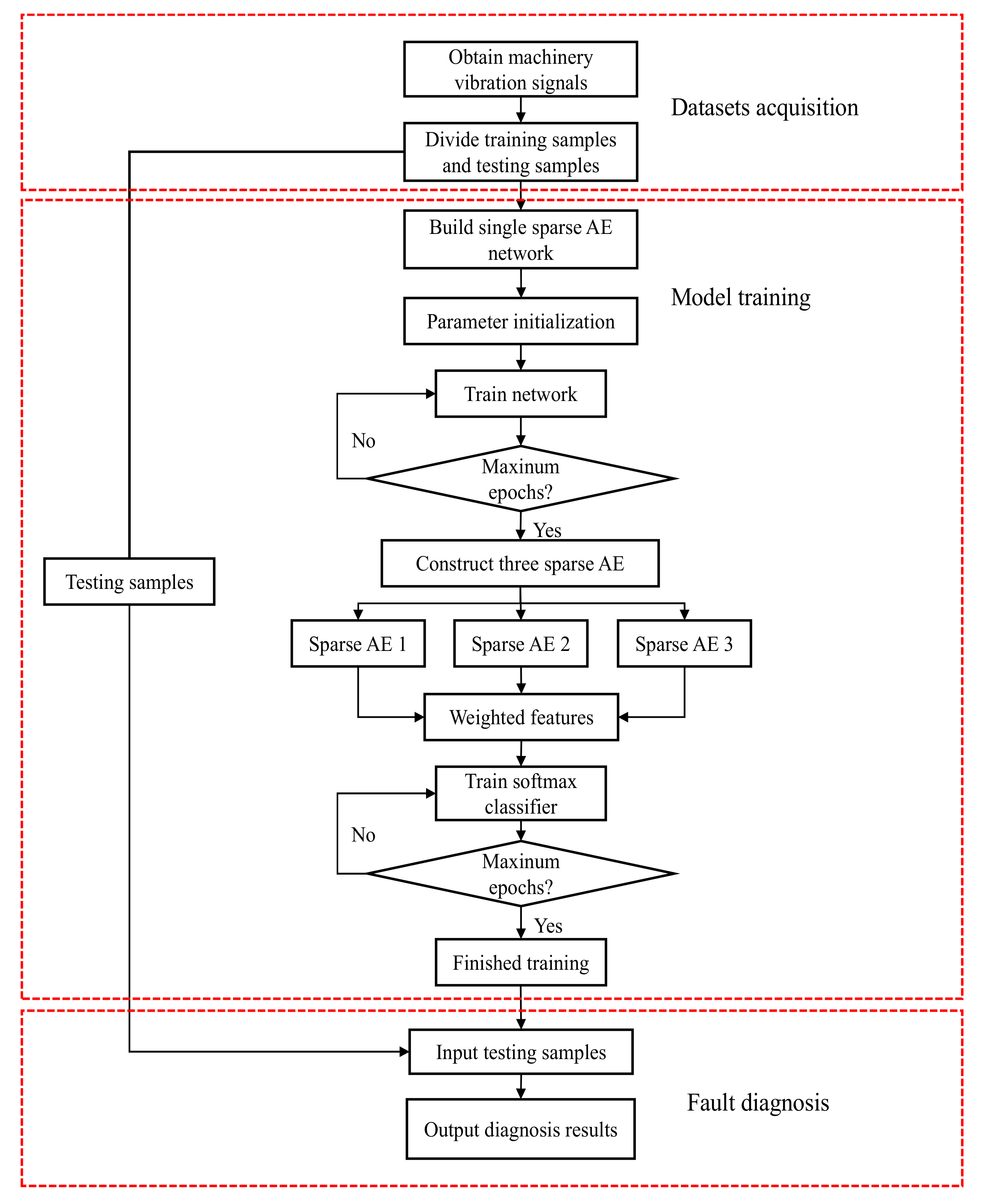

3. Proposed Fault Diagnosis Method

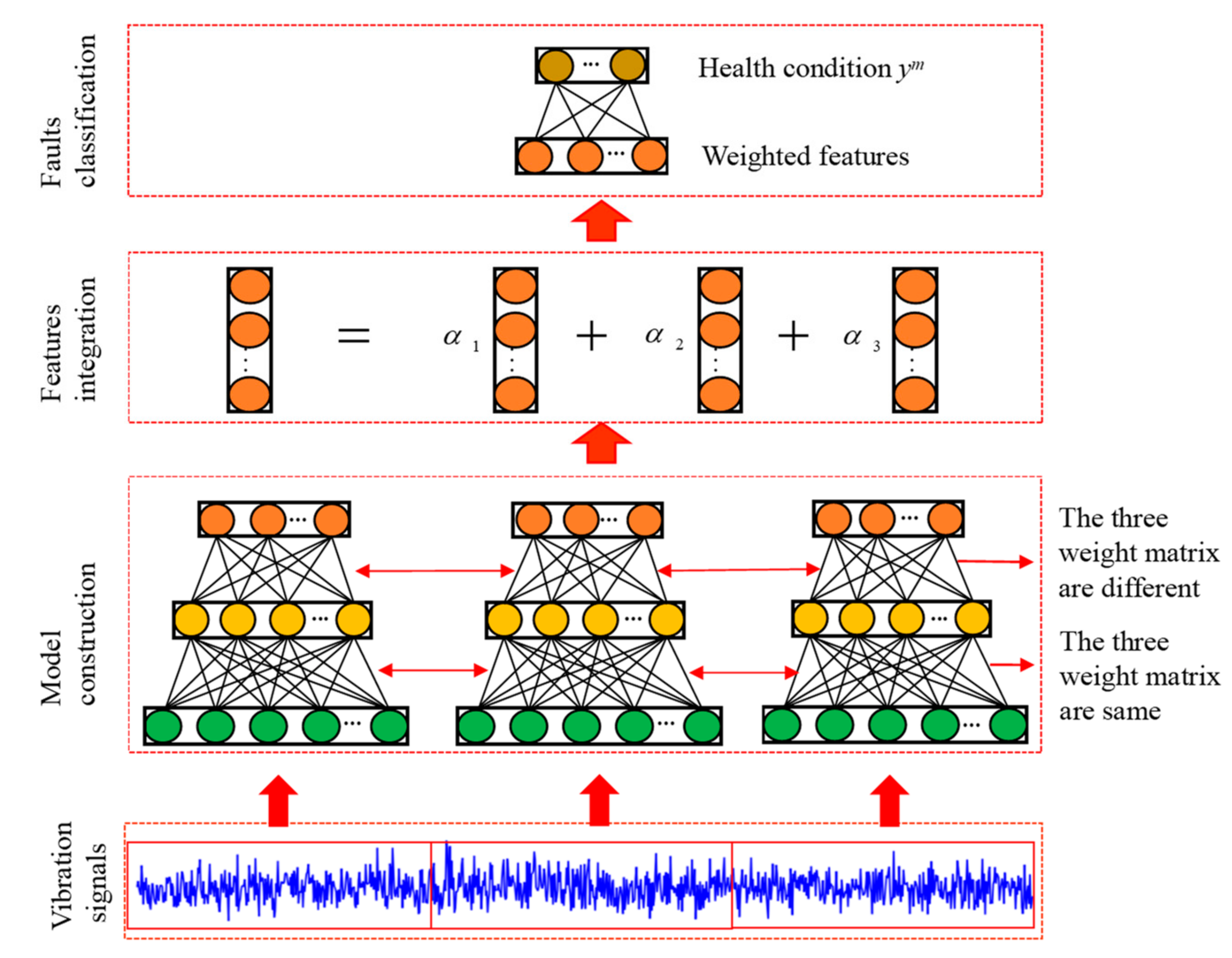

3.1. Ensemble Auto-Encoders Construction

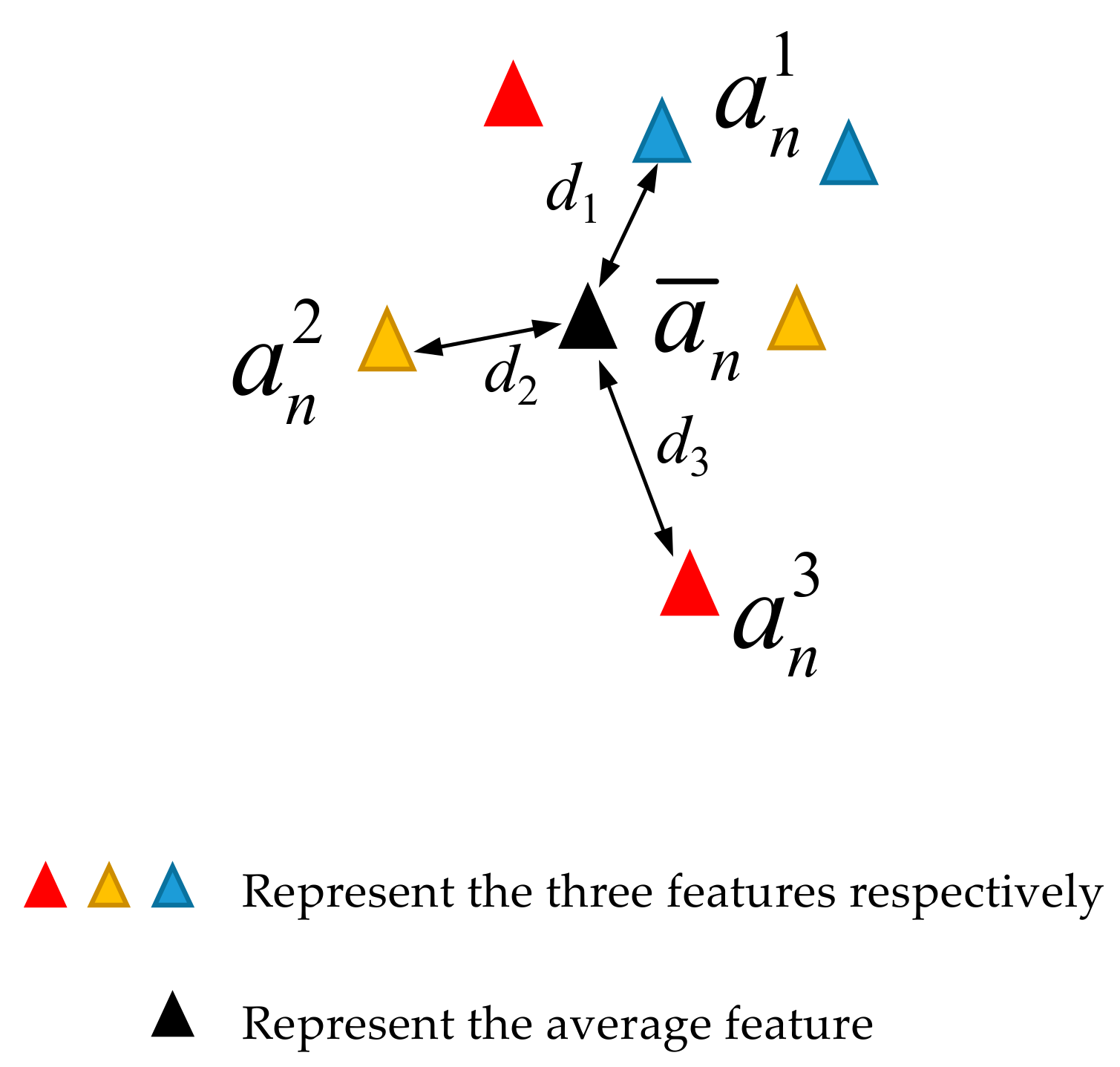

3.2. Weighting Strategy

3.3. Feature Integration

4. Experiment and Analysis

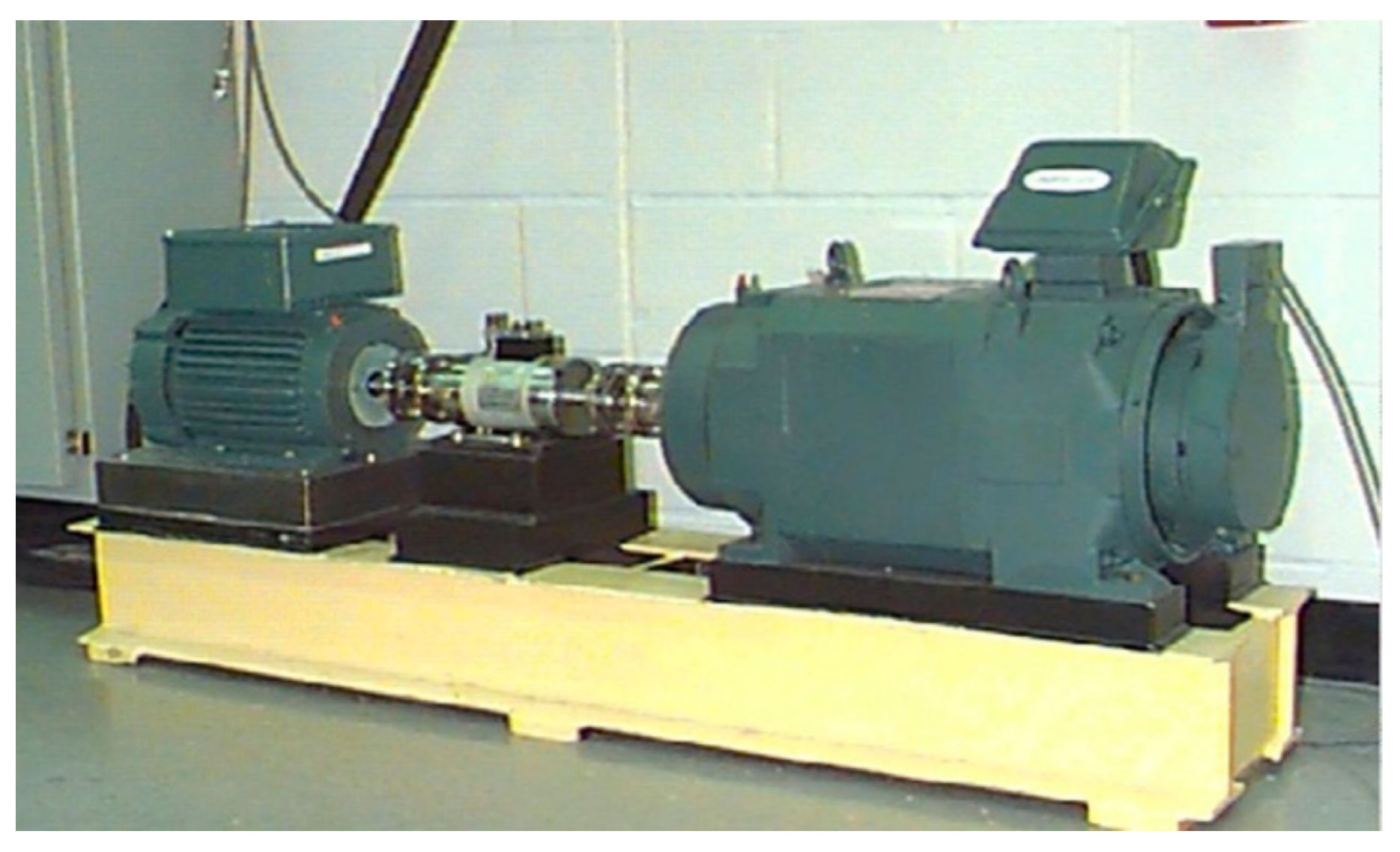

4.1. Dataset Description

4.2. Comparative Studies

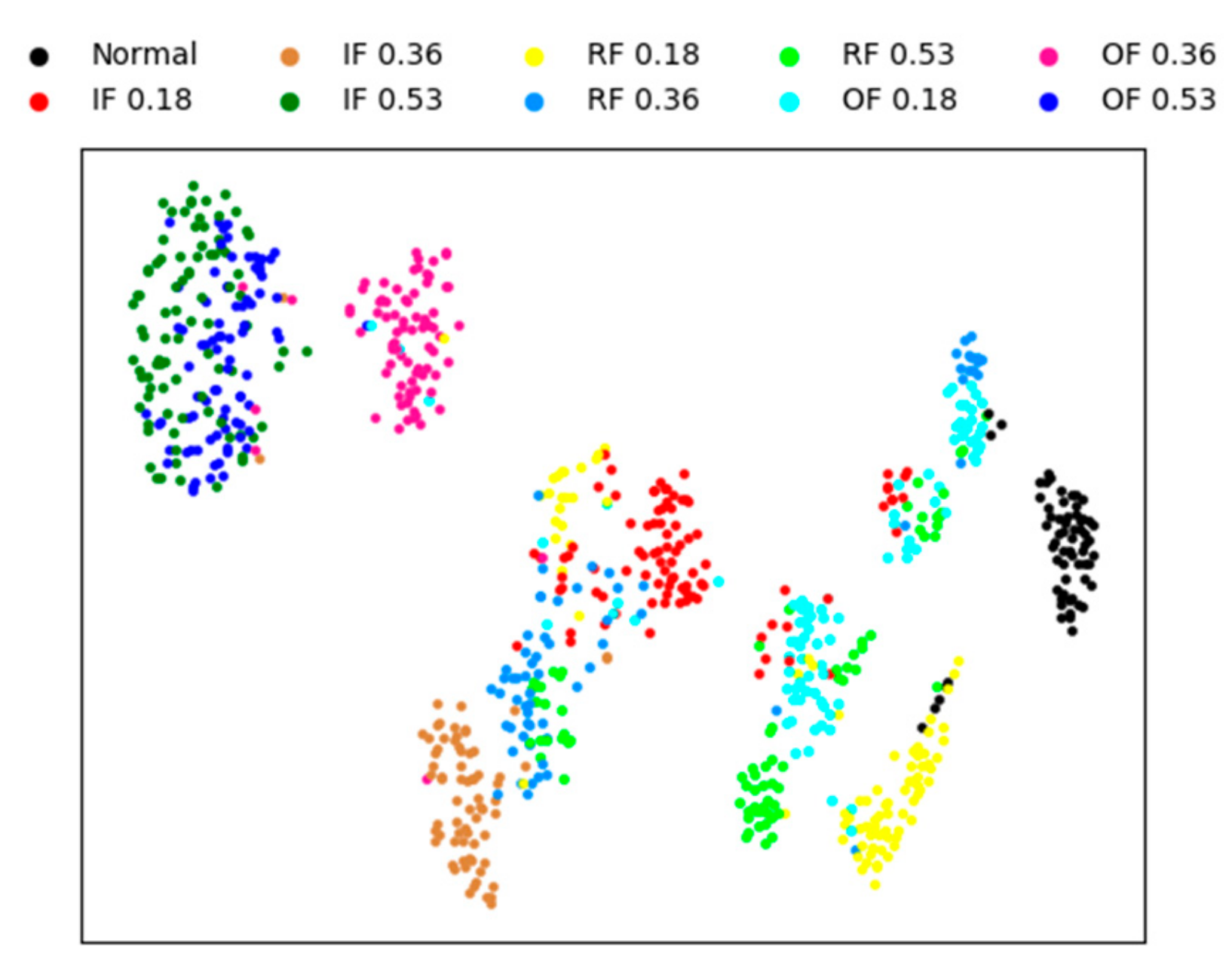

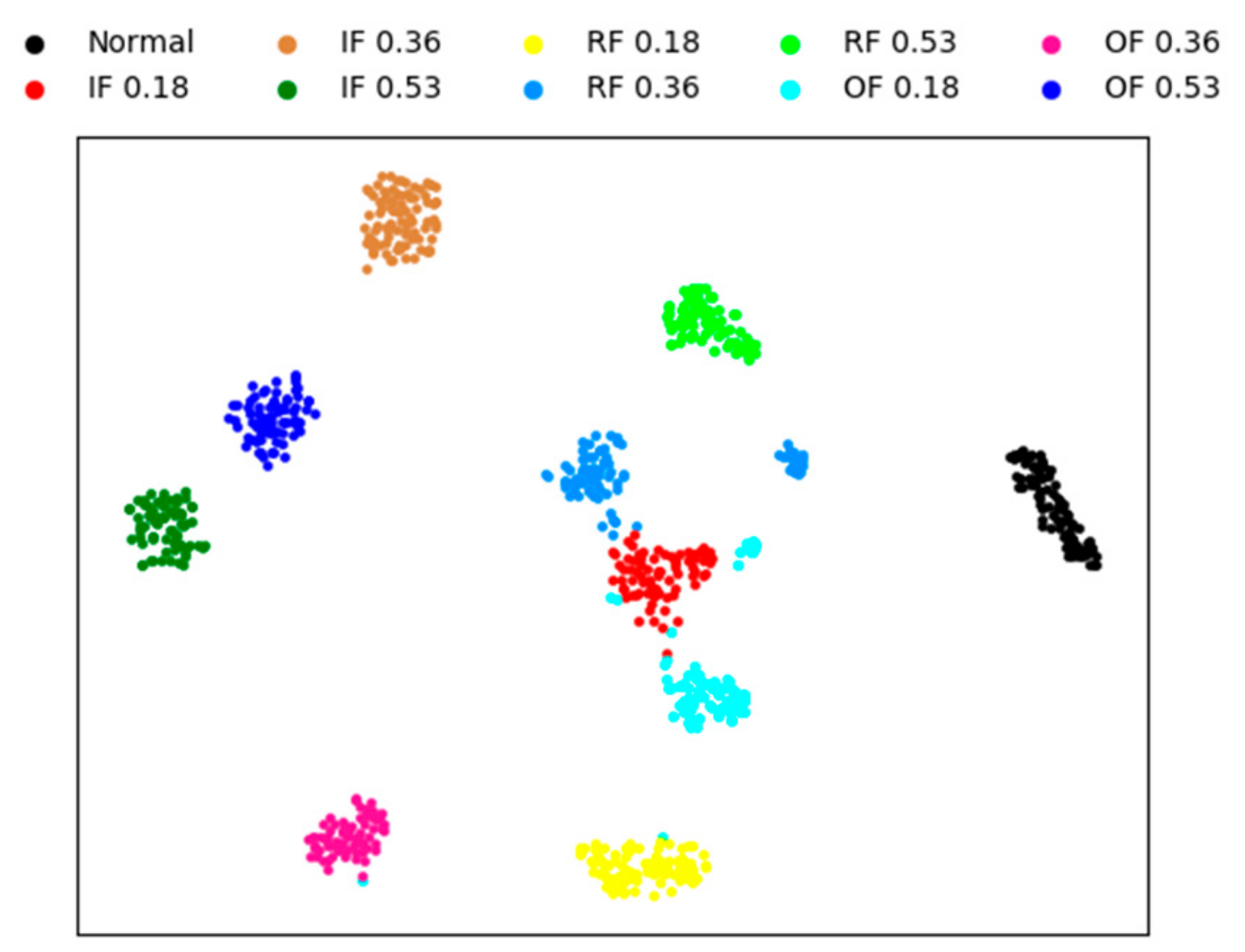

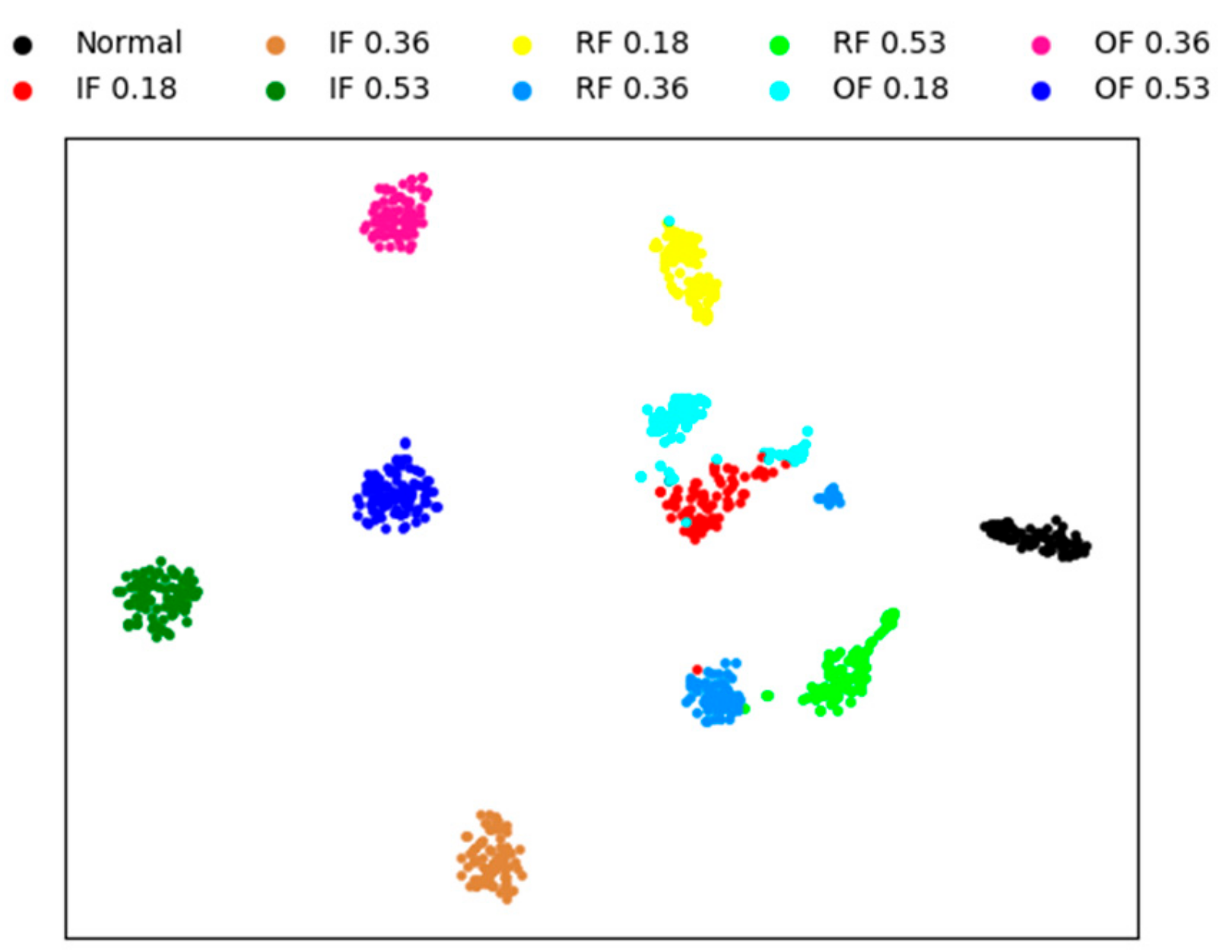

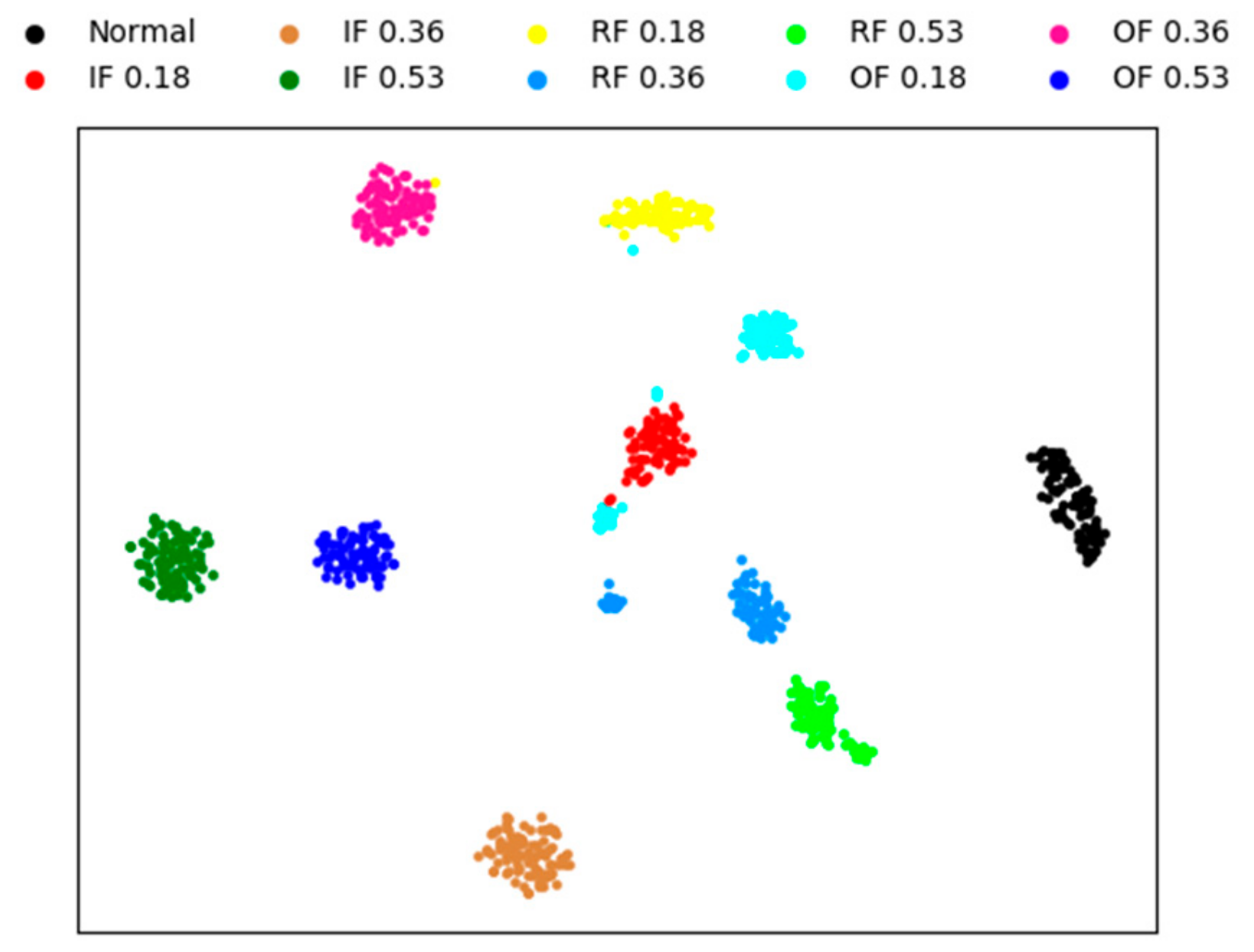

4.3. Visualization of Learned Representation

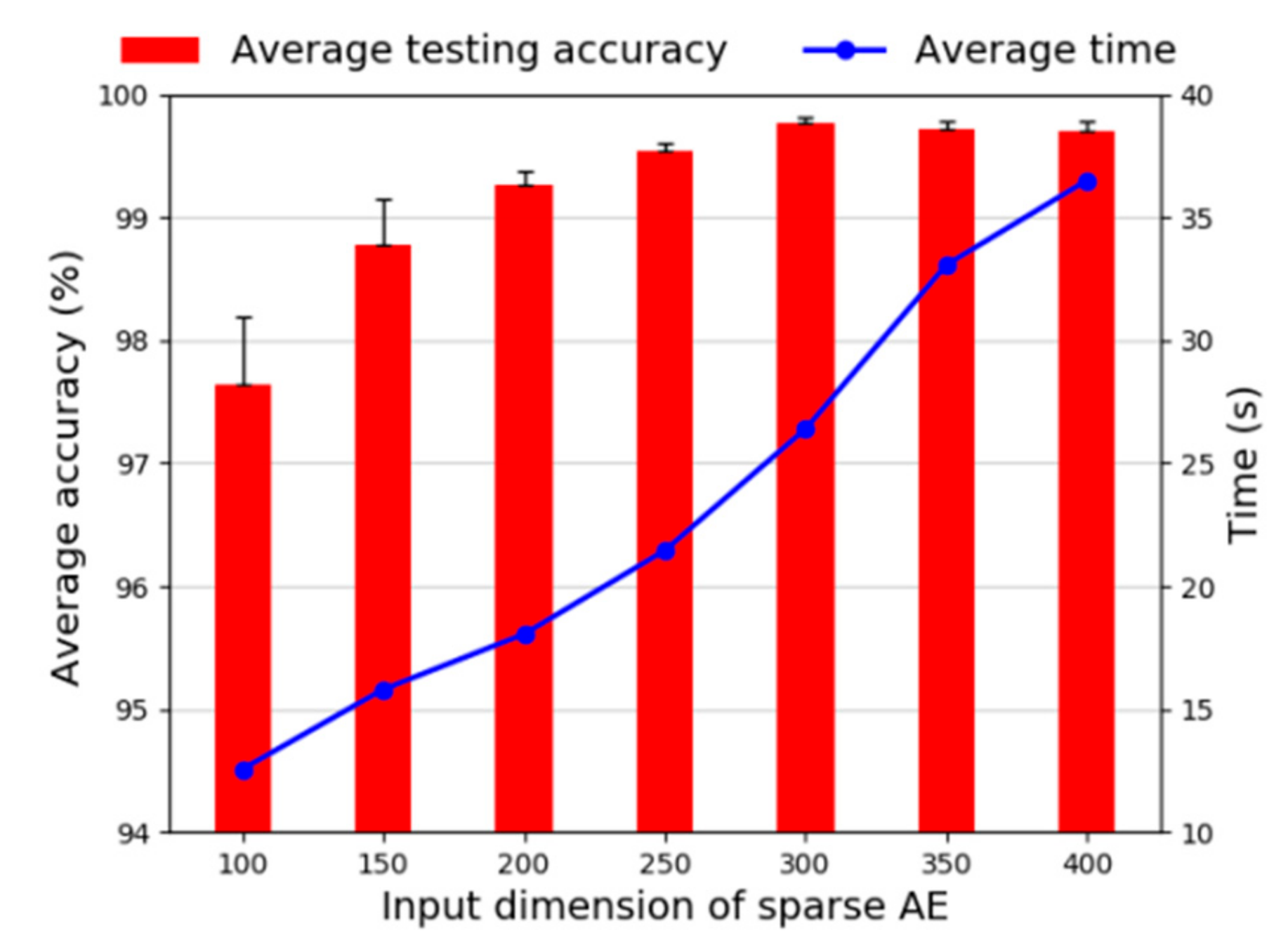

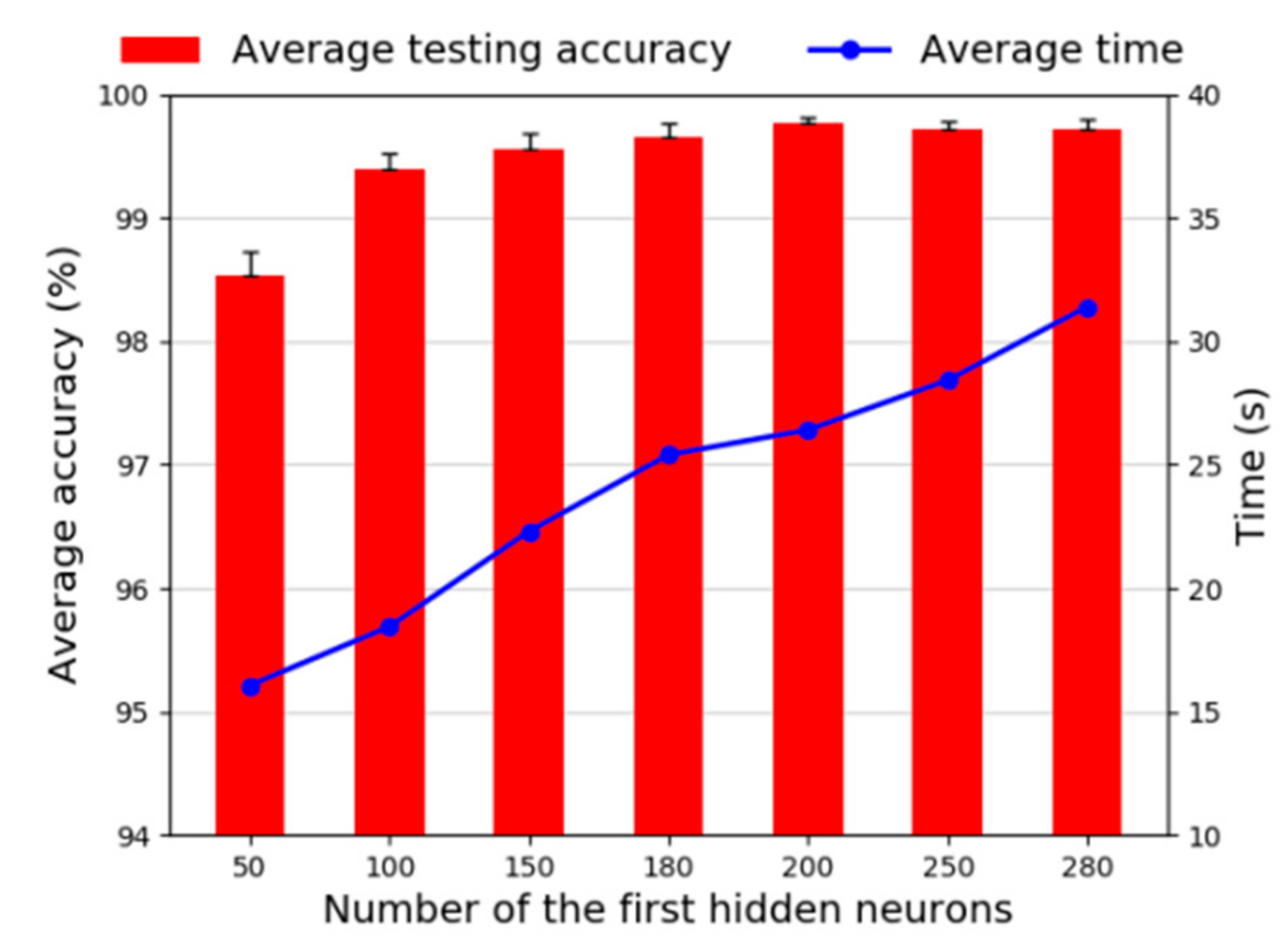

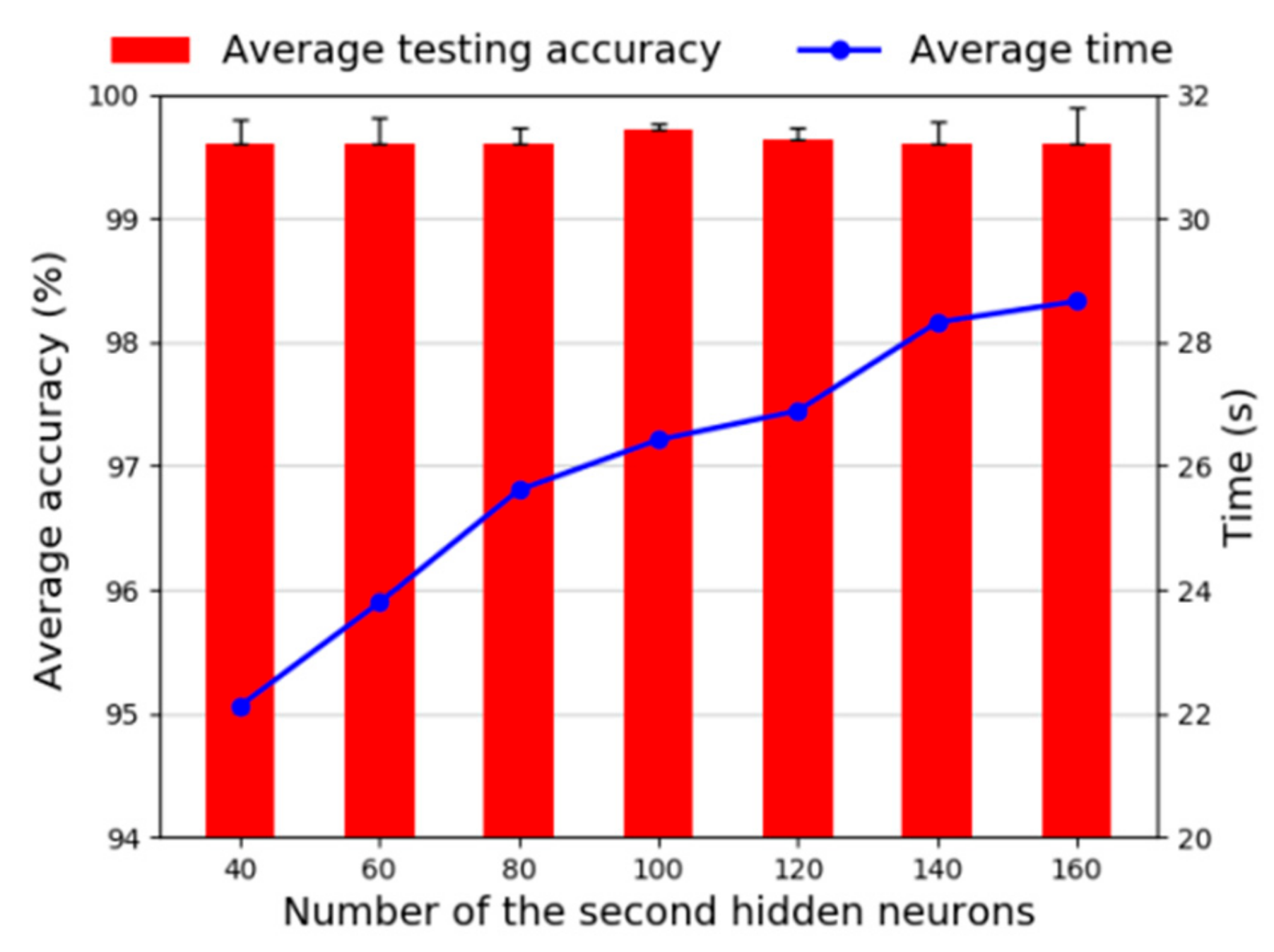

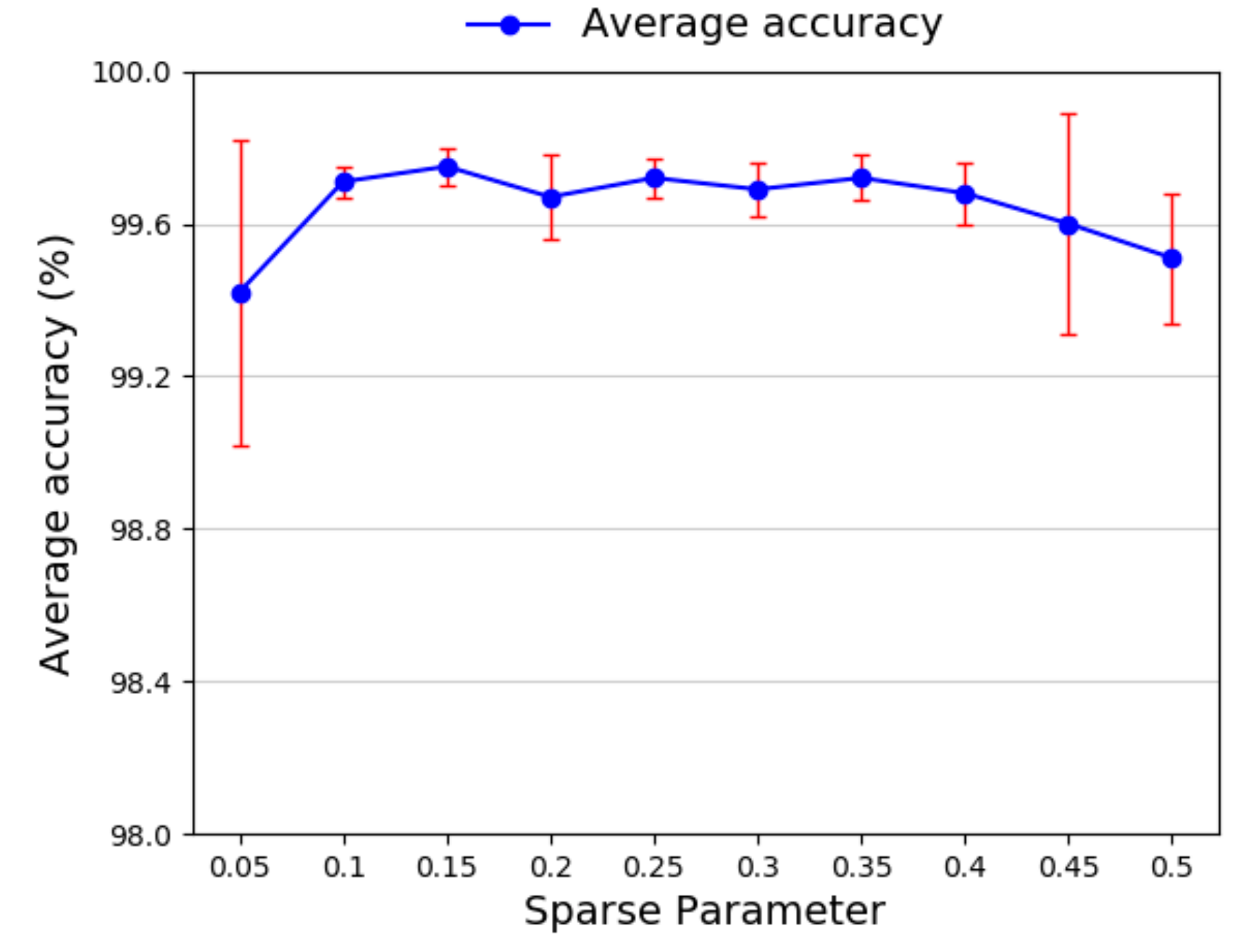

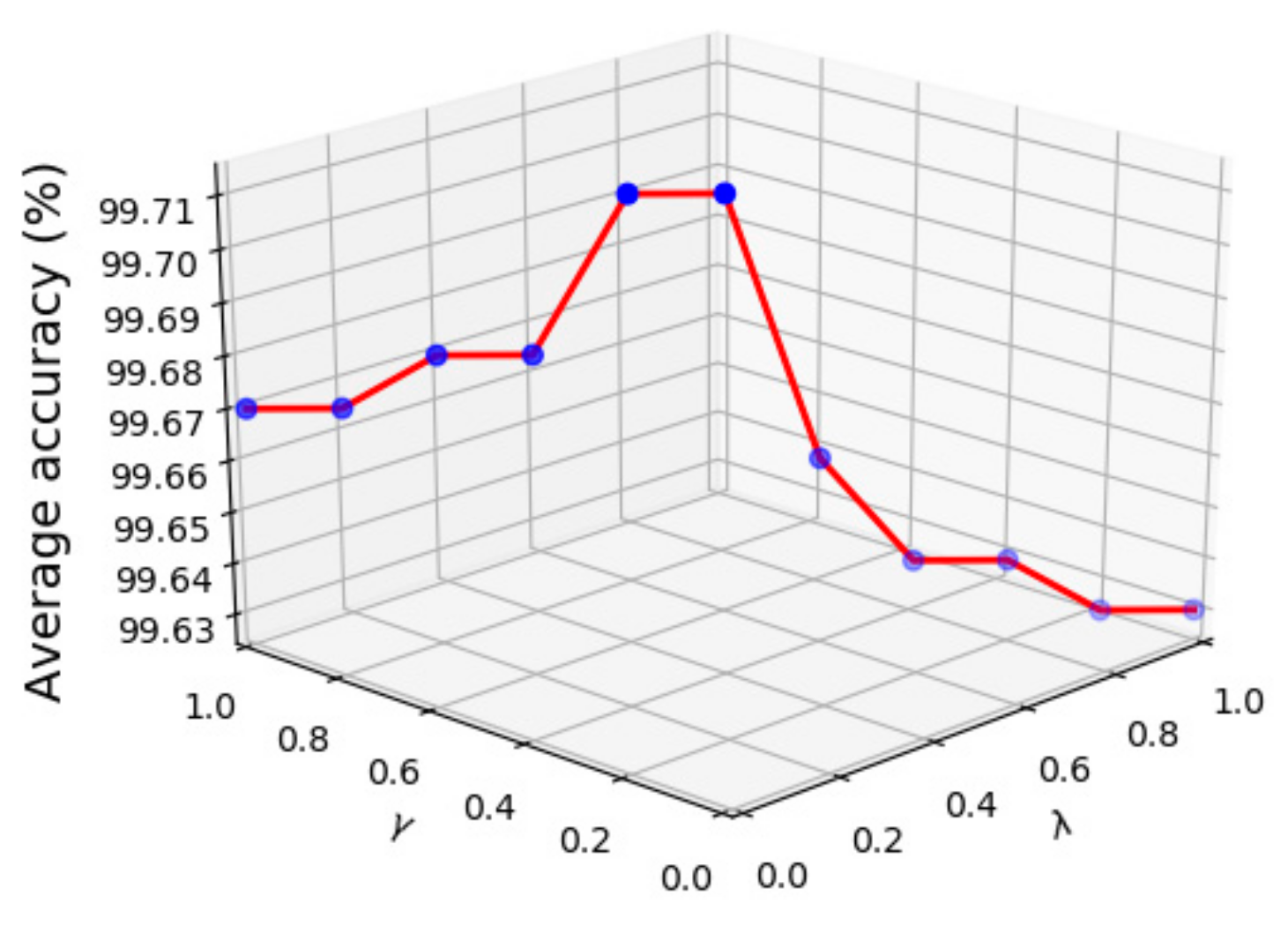

4.4. Parameter Selection of the Proposed Method

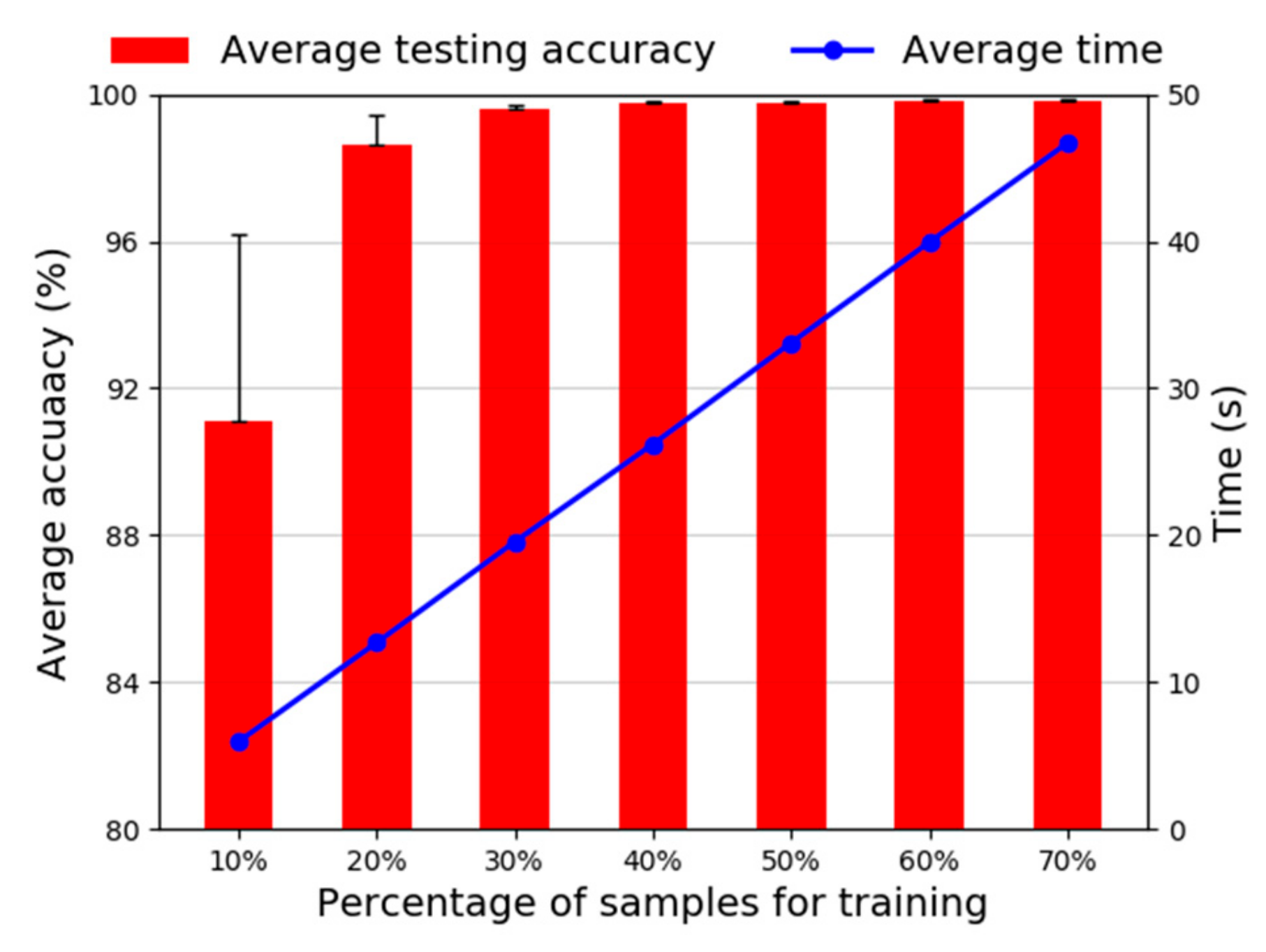

4.5. Effect of Segments and Training Samples

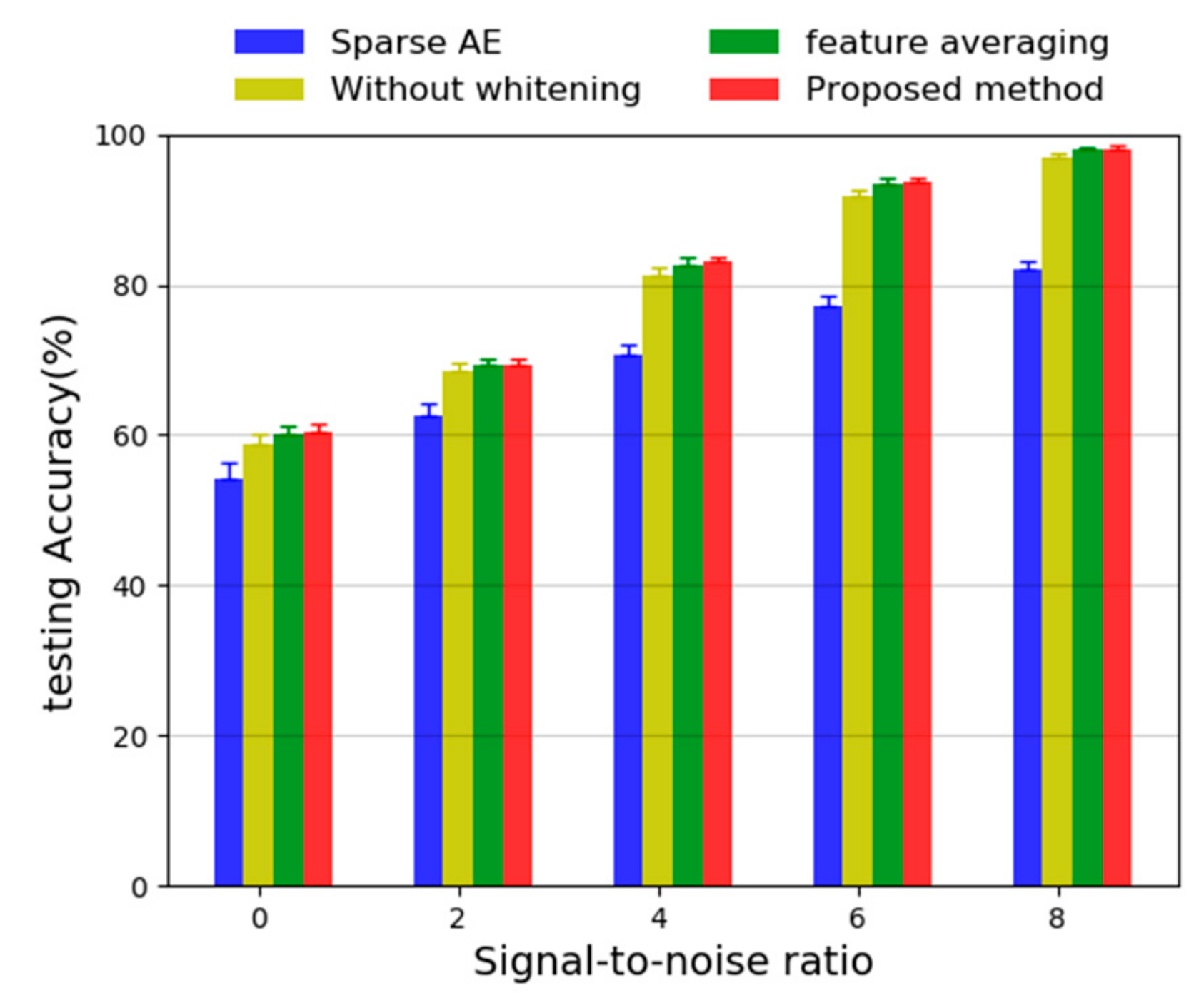

4.6. Robustness Against Environmental Noise

5. Concluding Remarks

Author Contributions

Funding

Conflicts of Interest

References

1. Lei, Y.; Li, N.; Guo, L.; Li, N.; Yan, T.; Lin, J. Machinery health prognostics: A systematic review from data acquisition to RUL prediction. Mech. Syst. Signal Process. 2018, 104, 799–834.

2. Lu, C.; Wang, Z.-Y.; Qin, W.-L.; Ma, J. Fault diagnosis of rotary machinery components using a stacked denoising autoencoder-based health state identification. Signal Process. 2017, 130, 377–388.

3. Li, X.; Zhang, W.; Ding, Q. A robust intelligent fault diagnosis method for rolling element bearings based on deep distance metric learning. Neurocomputing 2018, 310, 77–95.

4. Xu, Y.; Sun, Y.; Wan, J.; Liu, X.; Song, Z. Industrial big data for fault diagnosis: Taxonomy, review, and applications. IEEE Access 2017, 5, 17368–17380.

5. Gao, Z.; Cecati, C.; Ding, S.X. A survey of fault diagnosis and fault-tolerant techniques—Part I: Fault diagnosis with model-based and signal-based approaches. IEEE Trans. Ind. Electron. 2015, 62, 3757–3767.

6. Gao, Z.; Ding, S.X.; Cecati, C. Real-time fault diagnosis and fault-tolerant control. IEEE Trans. Ind. Electron. 2015, 62, 3752–3756.

7. Jia, F.; Lei, Y.; Lin, J.; Zhou, X.; Lu, N. Deep neural networks: A promising tool for fault characteristic mining and intelligent diagnosis of rotating machinery with massive data. Mech. Syst. Signal Process. 2016, 72, 303–315.

8. Liu, R.; Yang, B.; Zio, E.; Chen, X. Artificial intelligence for fault diagnosis of rotating machinery: A review. Mech. Syst. Signal Process. 2018, 108, 33–47.

9. Feng, Z.; Ma, H.; Zuo, M.J. Vibration signal models for fault diagnosis of planet bearings. J. Sound Vib. 2016, 370, 372–393.

10. Rai, A.; Upadhyay, S. A review on signal processing techniques utilized in the fault diagnosis of rolling element bearings. Tribol. Int. 2016, 96, 289–306.

11. Bustos, A.; Rubio, H.; Castejón, C.; García-Prada, J.C. EMD-based methodology for the identification of a high-speed train running in a gear operating state. Sensors 2018, 18, 793.

12. Yan, R.; Gao, R.X.; Chen, X. Wavelets for fault diagnosis of rotary machines: A review with applications. Signal Process. 2014, 96, 1–15.

13. Bustos, A.; Rubio, H.; Castejón, C.; García-Prada, J.C. Condition monitoring of critical mechanical elements through Graphical Representation of State Configurations and Chromogram of Bands of Frequency. Measurement 2019, 135, 71–82.

14. Li, X.; Yang, Y.; Pan, H.; Cheng, J.; Cheng, J. Non-parallel least squares support matrix machine for rolling bearing fault diagnosis. Mech. Mach. Theory 2020, 145, 103676.

15. Ali, J.B.; Fnaiech, N.; Saidi, L.; Chebel-Morello, B.; Fnaiech, F. Application of empirical mode decomposition and artificial neural network for automatic bearing fault diagnosis based on vibration signals. Appl. Acoust. 2015, 89, 16–27.

16. Lee, J.; Wu, F.; Zhao, W.; Ghaffari, M.; Liao, L.; Siegel, D. Prognostics and health management design for rotary machinery systems—Reviews, methodology and applications. Mech. Syst. Signal Process. 2014, 42, 314–334.

17. Fu, W.; Shao, K.; Tan, J.; Wang, K.J. Fault diagnosis for rolling bearings based on composite multiscale fine-sorted dispersion entropy and SVM with hybrid mutation SCA-HHO algorithm optimization. IEEE Access 2020, 8, 13086–13104.

18. He, C.; Liu, C.; Wu, T.; Xu, Y.; Wu, Y.; Chen, T. Medical rolling bearing fault prognostics based on improved extreme learning machine. J. Comb. Optim. 2019, 1–22.

19. Kang, M.; Kim, J.; Kim, J.-M.; Tan, A.C.; Kim, E.Y.; Choi, B.-K. Reliable fault diagnosis for low-speed bearings using individually trained support vector machines with kernel discriminative feature analysis. IEEE Trans. Power Electron. 2014, 30, 2786–2797.

20. Cao, H.; Fan, F.; Zhou, K.; He, Z. Wheel-bearing fault diagnosis of trains using empirical wavelet transform. Measurement 2016, 82, 439–449.

21. Huang, S.; Tan, K.K.; Lee, T.H. Fault diagnosis and fault-tolerant control in linear drives using the Kalman filter. IEEE Trans. Ind. Electron. 2012, 59, 4285–4292.

22. Wen, L.; Li, X.; Gao, L.; Zhang, Y. A new convolutional neural network-based data-driven fault diagnosis method. IEEE Trans. Ind. Electron. 2017, 65, 5990–5998.

23. Khan, S.; Yairi, T. A review on the application of deep learning in system health management. Mech. Syst. Signal Process. 2018, 107, 241–265.

24. Liu, R.; Meng, G.; Yang, B.; Sun, C.; Chen, X. Dislocated time series convolutional neural architecture: An intelligent fault diagnosis approach for electric machine. IEEE Trans. Ind. Inform. 2016, 13, 1310–1320.

25. Jia, F.; Lei, Y.; Guo, L.; Lin, J.; Xing, S. A neural network constructed by deep learning technique and its application to intelligent fault diagnosis of machines. Neurocomputing 2018, 272, 619–628.

26. Shao, H.; Jiang, H.; Zhang, H.; Duan, W.; Liang, T.; Wu, S. Rolling bearing fault feature learning using improved convolutional deep belief network with compressed sensing. Mech. Syst. Signal Process. 2018, 100, 743–765.

27. Li, X.; Zhang, W.; Ding, Q. Cross-domain fault diagnosis of rolling element bearings using deep generative neural networks. IEEE Trans. Ind. Electron. 2018, 66, 5525–5534.

28. Long, J.; Zhang, S.; Li, C. Evolving Deep Echo State Networks for Intelligent Fault Diagnosis. IEEE Trans. Ind. Inform. 2019.

29. Mao, W.; He, L.; Yan, Y.; Wang, J. Online sequential prediction of bearings imbalanced fault diagnosis by extreme learning machine. Mech. Syst. Signal Process. 2017, 83, 450–473.

30. Ma, S.; Chu, F. Ensemble deep learning-based fault diagnosis of rotor bearing systems. Comput. Ind. 2019, 105, 143–152.

31. Rokach, L. Ensemble-based classifiers. Artif. Intell. Rev. 2010, 33, 1–39.

32. Polikar, R. Ensemble based systems in decision making. IEEE Circuits Syst. Mag. 2006, 6, 21–45.

33. Qi, Y.; Shen, C.; Wang, D.; Shi, J.; Jiang, X.; Zhu, Z. Stacked sparse autoencoder-based deep network for fault diagnosis of rotating machinery. IEEE Access 2017, 5, 15066–15079.

34. Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

35. Hinton, G.E.; Osindero, S.; Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

36. Makhzani, A.; Frey, B. K-sparse autoencoders. arXiv 2013, arXiv:1312.5663.

37. Ng, A. Sparse autoencoder. CS294A Lect. Notes 2011, 72, 1–19.

38. Erhan, D.; Bengio, Y.; Courville, A.; Manzagol, P.-A.; Vincent, P.; Bengio, S. Why does unsupervised pre-training help deep learning? J. Mach. Learn. Res. 2010, 11, 625–660.

39. Tao, S.; Zhang, T.; Yang, J.; Wang, X.; Lu, W. Bearing fault diagnosis method based on stacked autoencoder and softmax regression. In Proceedings of the 2015 34th Chinese Control Conference (CCC), Hangzhou, China, 28–30 July 2015; pp. 6331–6335.

40. Zheng, J.; Pan, H.; Cheng, J. Rolling bearing fault detection and diagnosis based on composite multiscale fuzzy entropy and ensemble support vector machines. Mech. Syst. Signal Process. 2017, 85, 746–759.

41. Li, S.; Liu, G.; Tang, X.; Lu, J.; Hu, J. An ensemble deep convolutional neural network model with improved DS evidence fusion for bearing fault diagnosis. Sensors 2017, 17, 1729.

42. Zhou, F.; Hu, P.; Yang, S.; Wen, C. A Multimodal Feature Fusion-Based Deep Learning Method for Online Fault Diagnosis of Rotating Machinery. Sensors 2018, 18, 3521.

43. Jiang, G.; He, H.; Yan, J.; Xie, P. Multiscale convolutional neural networks for fault diagnosis of wind turbine gearbox. IEEE Trans. Ind. Electron. 2018, 66, 3196–3207.

44. Smith, W.A.; Randall, R.B. Rolling element bearing diagnostics using the Case Western Reserve University data: A benchmark study. Mech. Syst. Signal Process. 2015, 64, 100–131.

45. Shao, H.; Jiang, H.; Lin, Y.; Li, X. A novel method for intelligent fault diagnosis of rolling bearings using ensemble deep auto-encoders. Mech. Syst. Signal Process. 2018, 102, 278–297.

46. Sun, J.; Yan, C.; Wen, J. Intelligent bearing fault diagnosis method combining compressed data acquisition and deep learning. IEEE Trans. Instrum. Meas. 2017, 67, 185–195.

47. Lei, Y.; Jia, F.; Lin, J.; Xing, S.; Ding, S.X. An intelligent fault diagnosis method using unsupervised feature learning towards mechanical big data. IEEE Trans. Ind. Electron. 2016, 63, 3137–3147.

48. Zhang, Y.; Li, X.; Gao, L.; Chen, W.; Li, P. Intelligent fault diagnosis of rotating machinery using a new ensemble deep auto-encoder method. Measurement 2020, 151, 107232.

49. Maaten, L.V.d.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Fault Type | Fault Size (mm) | Load (hp) | Label |
|---|---|---|---|
| Normal | 0.0 | 0, 1, 2, 3 | 1 |
| IF | 0.18 | 0, 1, 2, 3 | 2 |
| IF | 0.36 | 0, 1, 2, 3 | 3 |
| IF | 0.53 | 0, 1, 2, 3 | 4 |
| RF | 0.18 | 0, 1, 2, 3 | 5 |
| RF | 0.36 | 0, 1, 2, 3 | 6 |
| RF | 0.53 | 0, 1, 2, 3 | 7 |
| OF | 0.18 | 0, 1, 2, 3 | 8 |
| OF | 0.36 | 0, 1, 2, 3 | 9 |
| OF | 0.53 | 0, 1, 2, 3 | 10 |

| Method | Average Accuracy | Standard Deviation |
|---|---|---|
| SVM | 43.99% | 3.09% |
| BPNN | 78.07% | 5.91% |
| SAE | 87.40% | 2.44% |
| Our method | 99.71% | 0.05% |

| Method | Load (hp) | No. of Health Conditions | Testing Accuracy | Standard Deviation |
|---|---|---|---|---|
| [45] | 0 | 12 | 97.18% | 0.11% |
| [46] | 2 | 7 | 97.41% | 0.43% |
| [47] | 0, 1, 2, 3 | 10 | 99.66% | 0.19% |
| [48] | 0, 1, 2, 3 | 10 | 99.69% | 0.24% |
| Proposed | 0, 1, 2, 3 | 10 | 99.71% | 0.05% |

| Parameter Description | Value |
|---|---|
| The dimension of Sparse AE | 300 |
| The number of the hidden layers | 2 |
| The number of the first hidden neurons | 200 |
| The number of the second hidden neurons | 100 |
| Learning rate | 0.007 |
| Sparse parameter | 0.15 |
| Sparse penalty factor | 2 |
| Batch size | 100 |
| Hyper-parameters (λ, γ) | 0.5 |
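
The parameter table above can be read as a configuration for one sparse auto-encoder layer. The following is a minimal NumPy sketch of how those values might enter a sparse auto-encoder loss, not the authors' implementation. The sigmoid activations, the squared-error reconstruction term, the KL-divergence form of the sparsity penalty, the absence of weight decay, and all function and variable names are assumptions made for illustration. Only the numeric constants come from the table.

```python
import numpy as np

# Constants taken from the parameter table; everything else is assumed.
INPUT_DIM = 300           # "The dimension of Sparse AE" (read here as input size)
HIDDEN_DIMS = (200, 100)  # first and second hidden layer sizes
LEARNING_RATE = 0.007     # listed for completeness; no training loop is shown
SPARSITY_TARGET = 0.15    # "Sparse parameter"
SPARSITY_WEIGHT = 2.0     # "Sparse penalty factor"
BATCH_SIZE = 100


def sigmoid(z):
    """Logistic activation (assumed; the table does not name one)."""
    return 1.0 / (1.0 + np.exp(-z))


def kl_sparsity(rho, rho_hat, eps=1e-8):
    """Sum over hidden units of KL(rho || rho_hat) for Bernoulli variables."""
    rho_hat = np.clip(rho_hat, eps, 1.0 - eps)
    return np.sum(
        rho * np.log(rho / rho_hat)
        + (1.0 - rho) * np.log((1.0 - rho) / (1.0 - rho_hat))
    )


def sparse_ae_loss(x, w_enc, b_enc, w_dec, b_dec):
    """Reconstruction error plus weighted sparsity penalty for one AE layer."""
    h = sigmoid(x @ w_enc + b_enc)        # hidden activations
    x_hat = sigmoid(h @ w_dec + b_dec)    # reconstruction
    recon = 0.5 * np.mean(np.sum((x_hat - x) ** 2, axis=1))
    rho_hat = h.mean(axis=0)              # mean activation per hidden unit
    return recon + SPARSITY_WEIGHT * kl_sparsity(SPARSITY_TARGET, rho_hat)


# Hypothetical data: one random batch standing in for vibration segments.
rng = np.random.default_rng(0)
x = rng.random((BATCH_SIZE, INPUT_DIM))
w_enc = rng.normal(0.0, 0.01, (INPUT_DIM, HIDDEN_DIMS[0]))
b_enc = np.zeros(HIDDEN_DIMS[0])
w_dec = rng.normal(0.0, 0.01, (HIDDEN_DIMS[0], INPUT_DIM))
b_dec = np.zeros(INPUT_DIM)

loss = sparse_ae_loss(x, w_enc, b_enc, w_dec, b_dec)
print(f"first-layer sparse AE loss on a random batch: {loss:.3f}")
```

In a stacked arrangement the second layer (200 to 100 units) would be trained the same way on the first layer's hidden activations. The hyper-parameters λ and γ from the table are deliberately left out, since the table alone does not say where they enter the method.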

| Segments | Average Accuracy | Standard Deviation | Training Time (s) | Testing Time (s) |
|---|---|---|---|---|
| 1 | 87.40% | 2.44% | 19.11 | 0.28 |
| 2 | 98.62% | 0.23% | 23.27 | 0.36 |
| 3 | 99.71% | 0.05% | 27.62 | 0.44 |
| 4 | 99.88% | 0.04% | 49.44 | 0.50 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, J.; Ouyang, M.; Yong, C.; Chen, D.; Guo, J.; Zhou, Y. A Novel Intelligent Fault Diagnosis Method for Rolling Bearing Based on Integrated Weight Strategy Features Learning. Sensors 2020, 20, 1774. https://doi.org/10.3390/s20061774