Recycling and Updating an Educational Robot Manipulator with Open-Hardware-Architecture

Abstract

1. Introduction

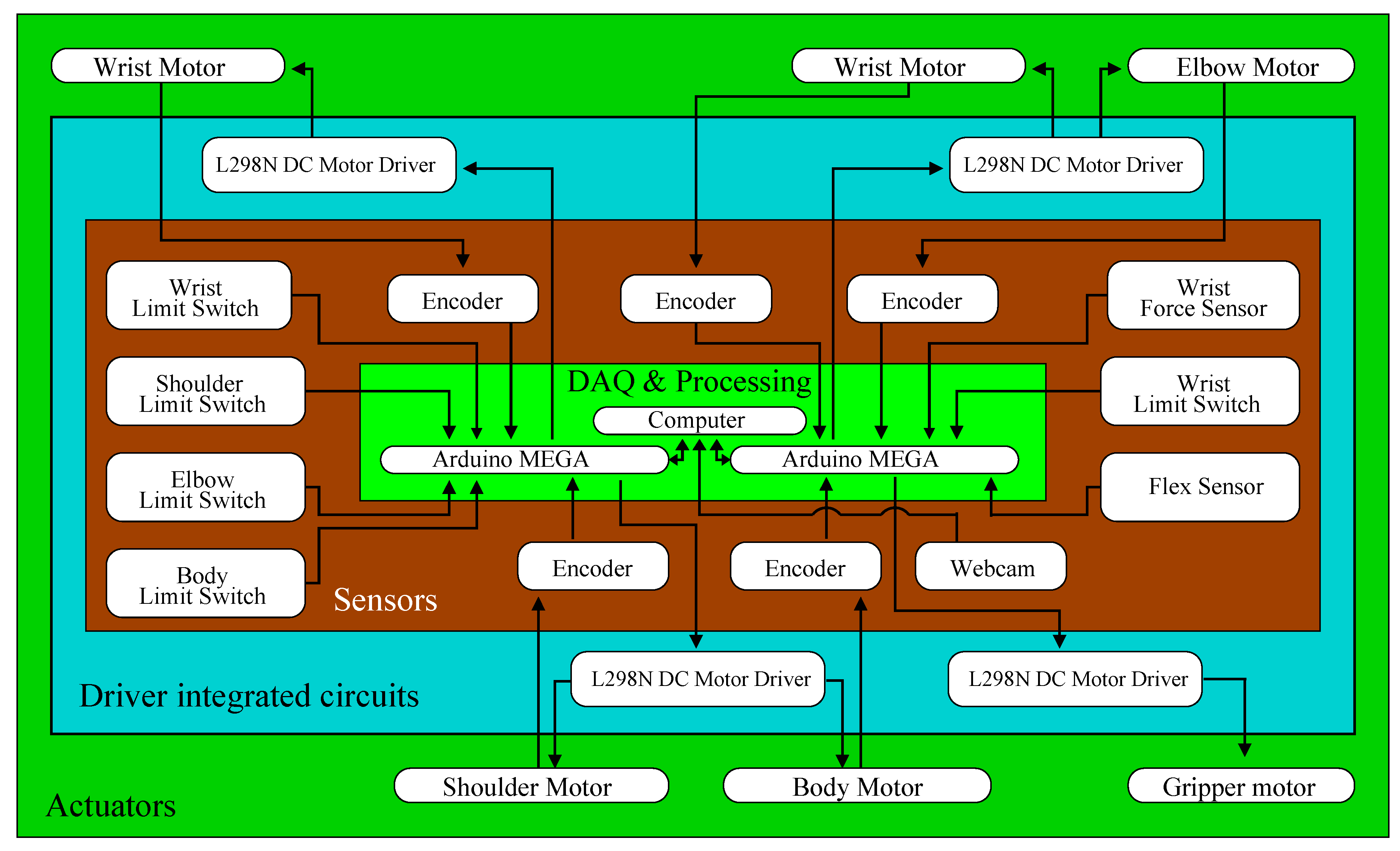

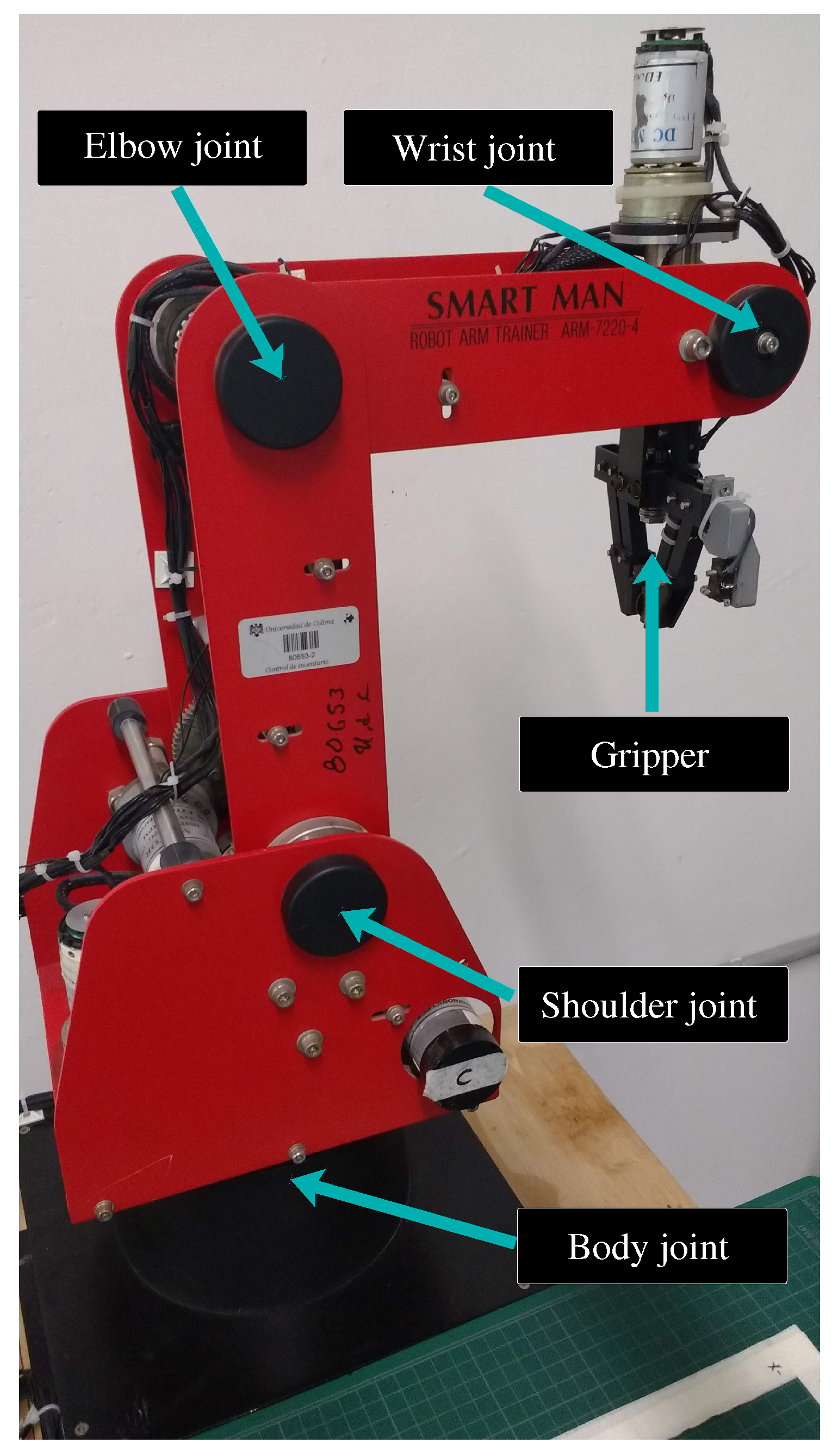

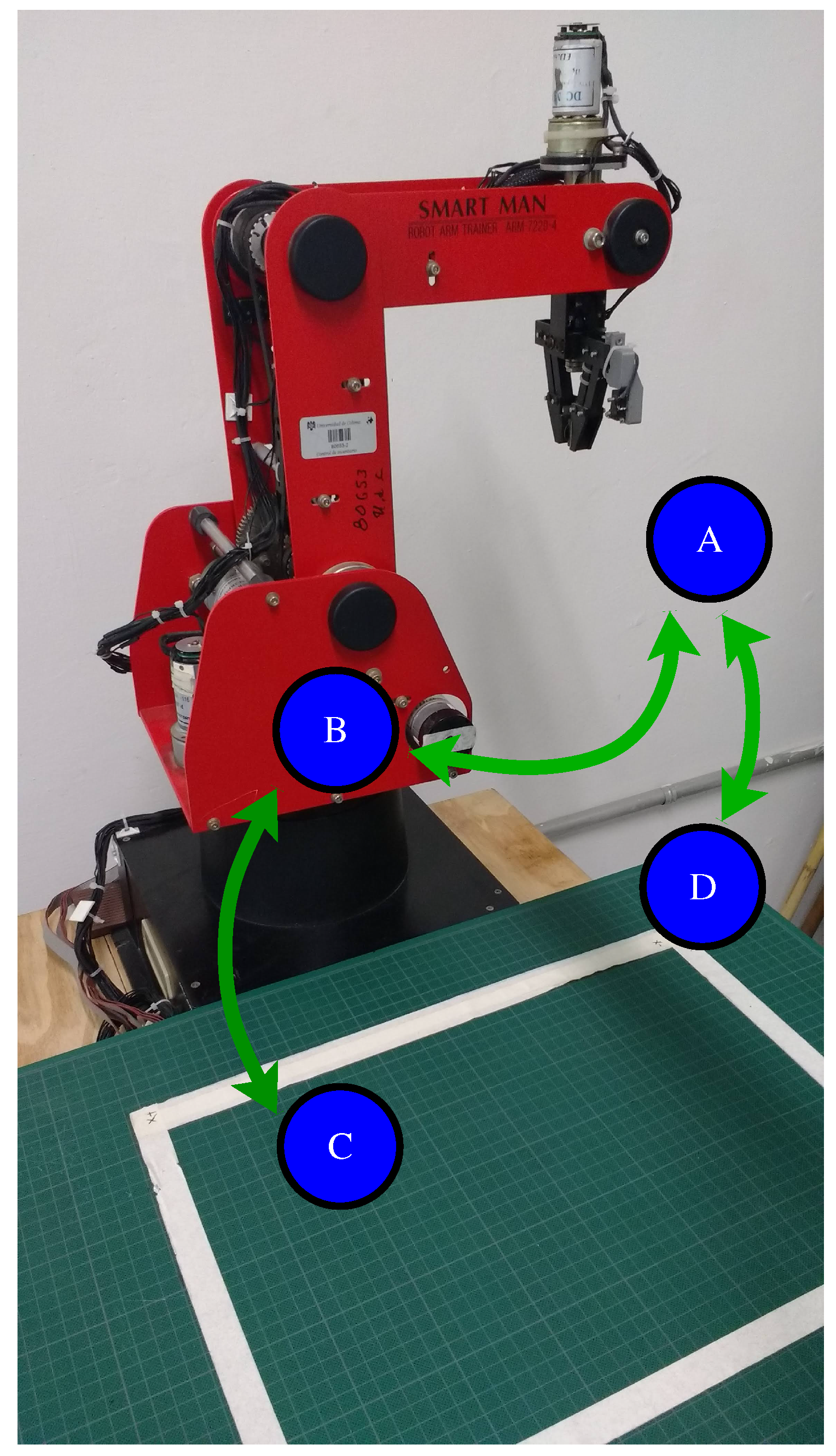

2. Robot Architecture

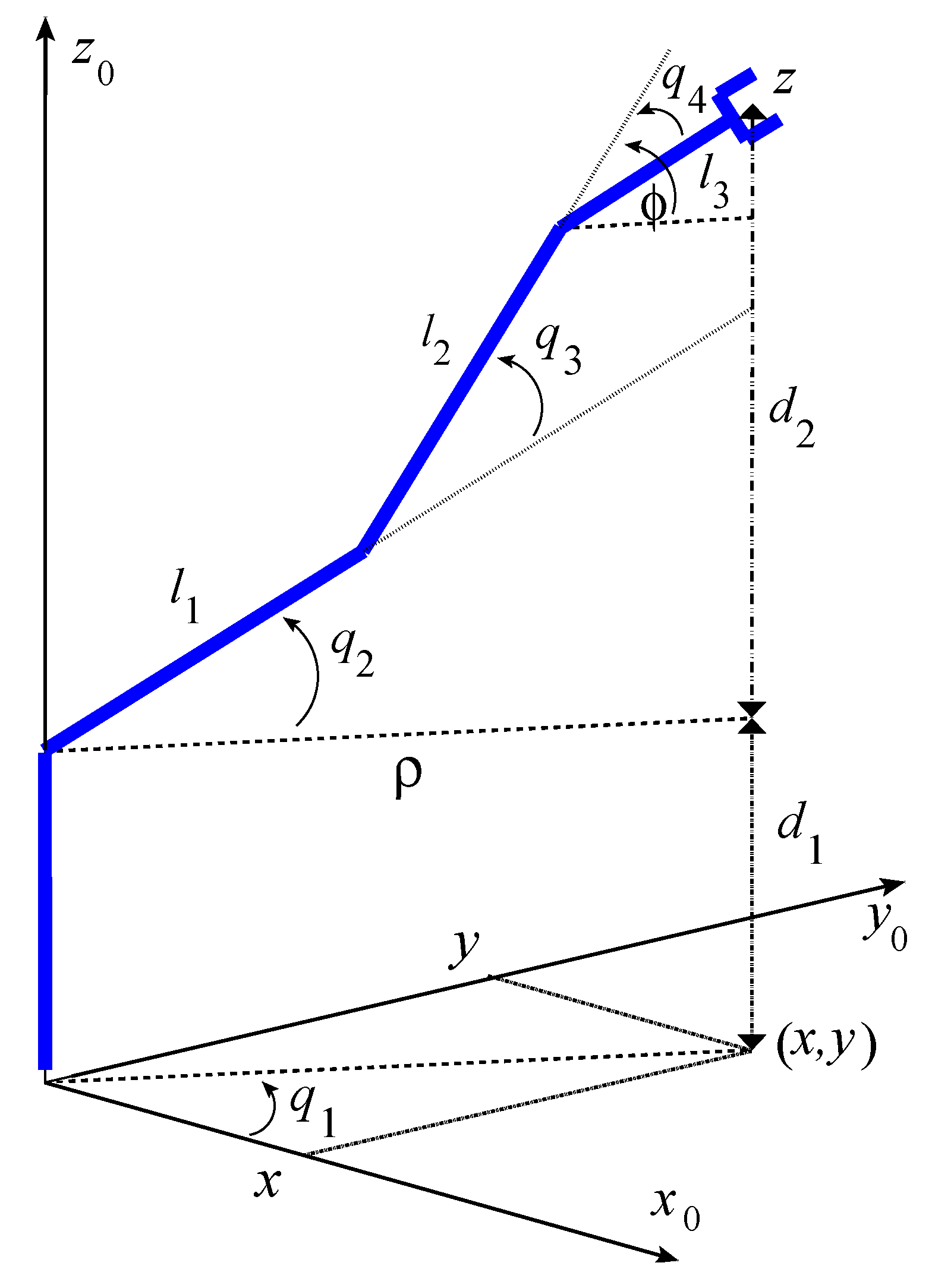

3. Robot Kinematics

3.1. Forward Kinematics

3.2. Robot Workspace

3.3. Inverse Kinematics

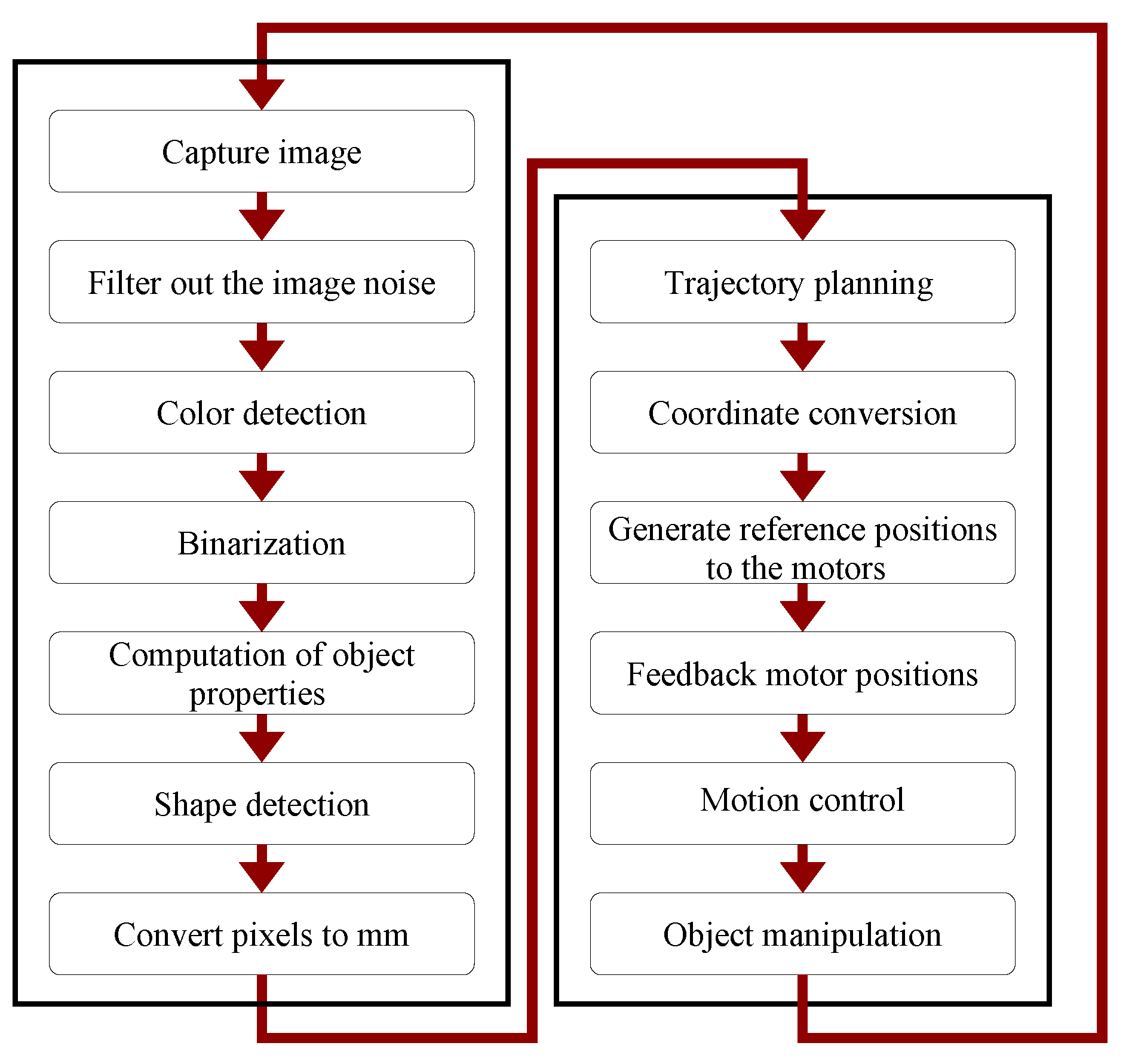

4. Robot Dynamics

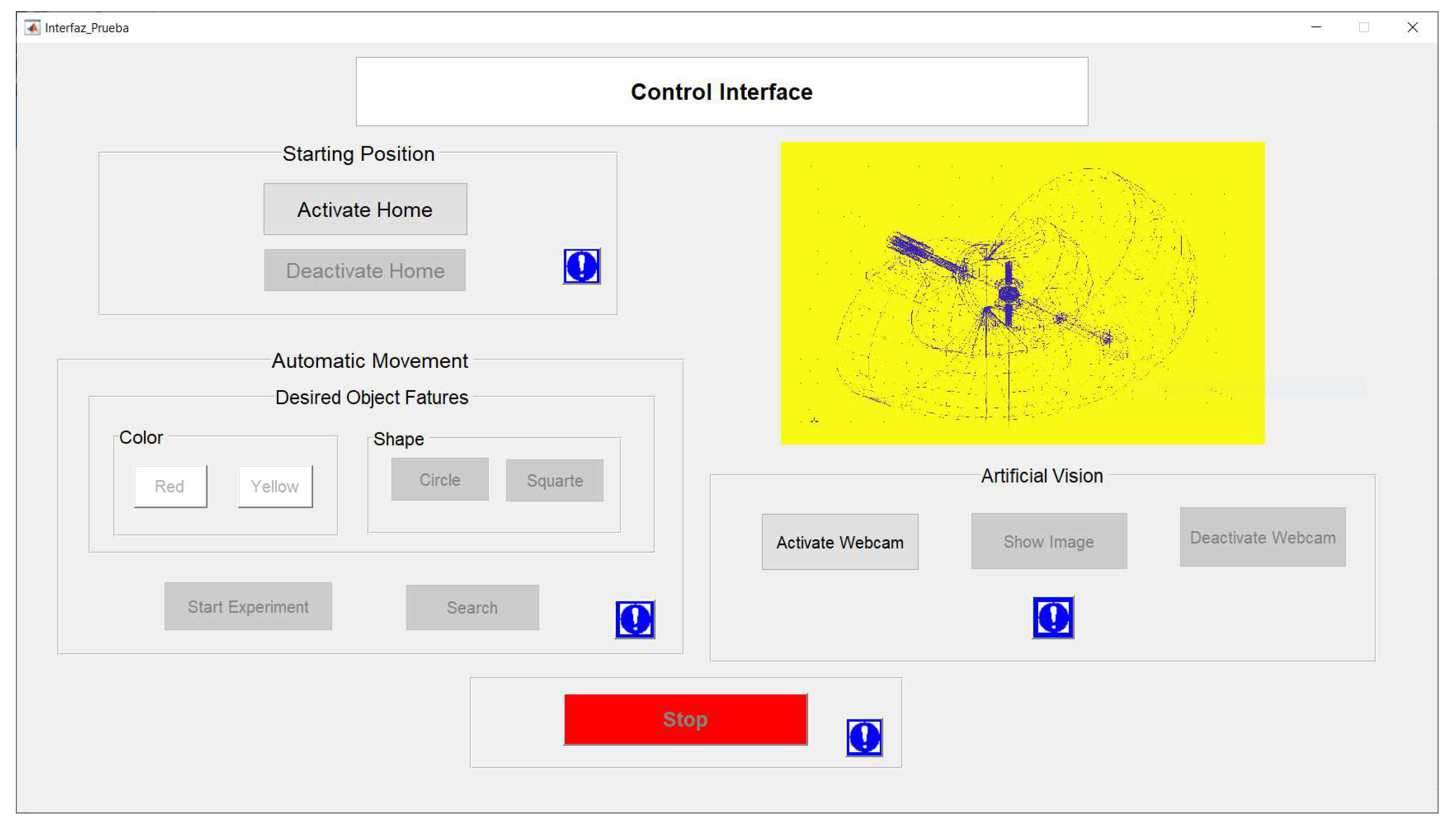

4.1. Dynamic Model of the Actuators

4.2. Mathematical Model of the Robot Manipulator with Actuators

5. Parameter Identification of the Actuators

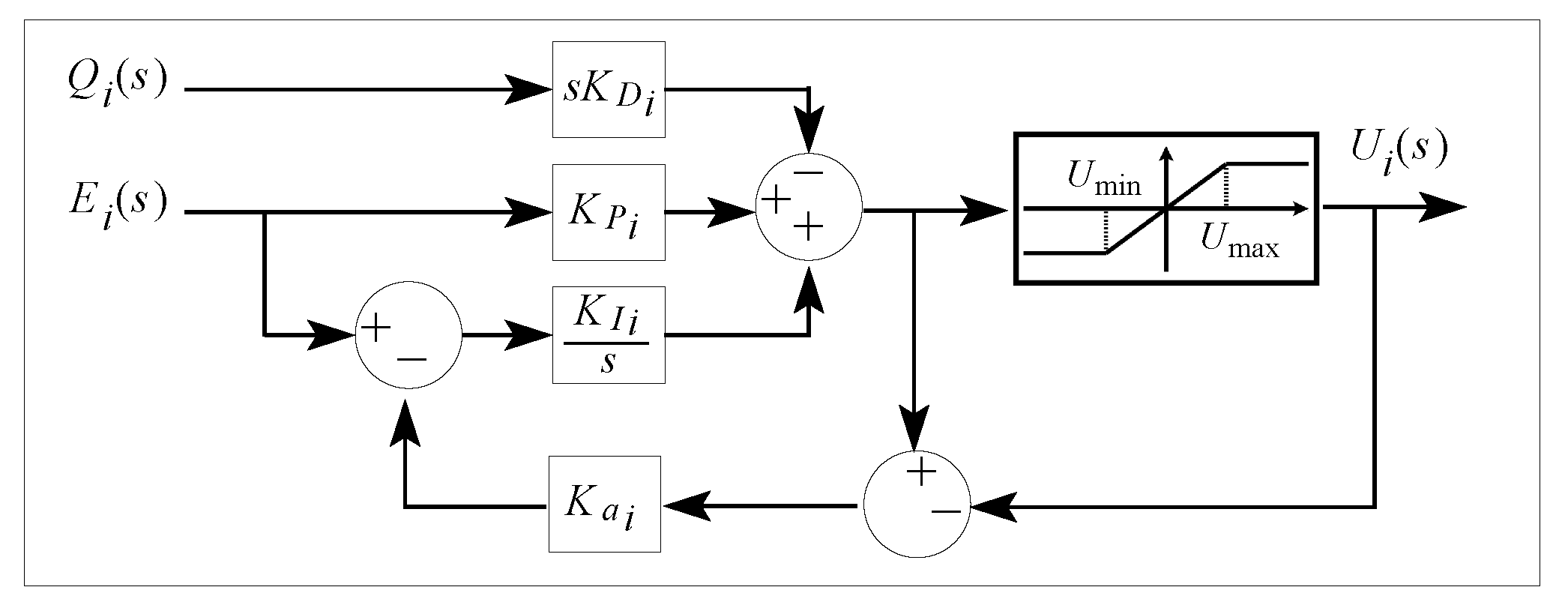

6. Robot Control

State Observer

7. Trajectory Planning

8. Artificial Vision

8.1. Color Detection

8.2. Shape Detection

8.3. Correction of the Object Position

9. Graphical User Interface

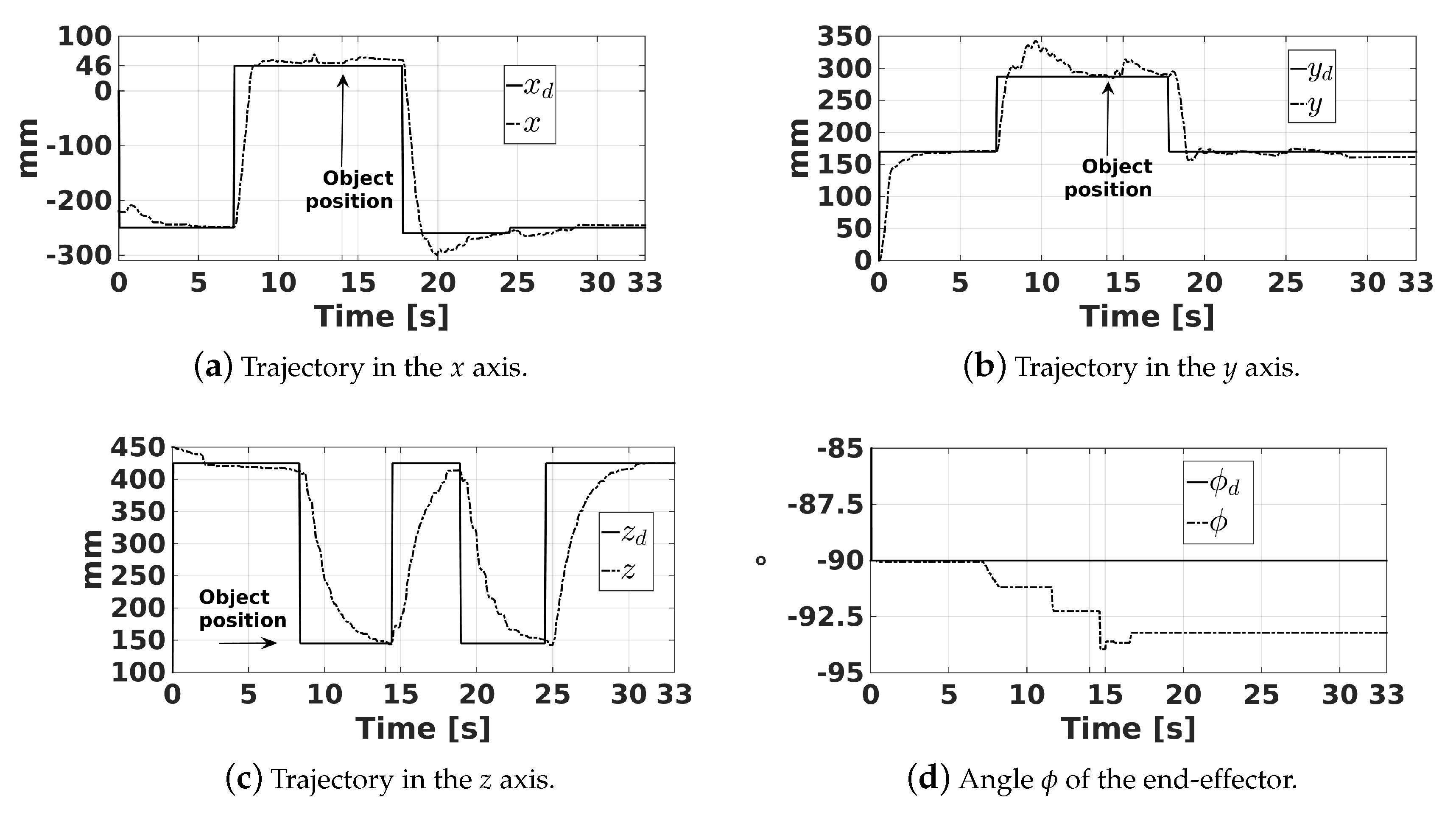

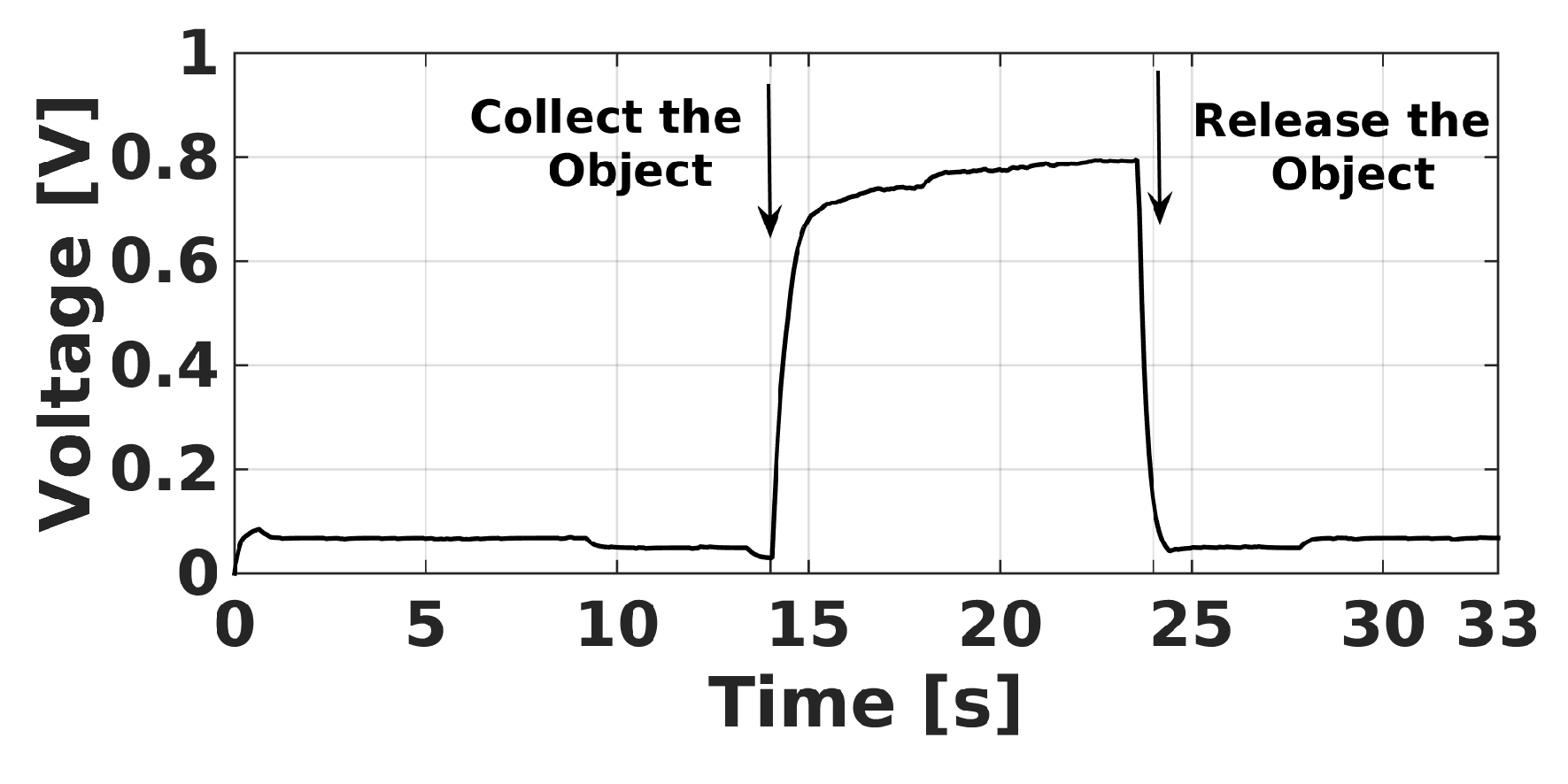

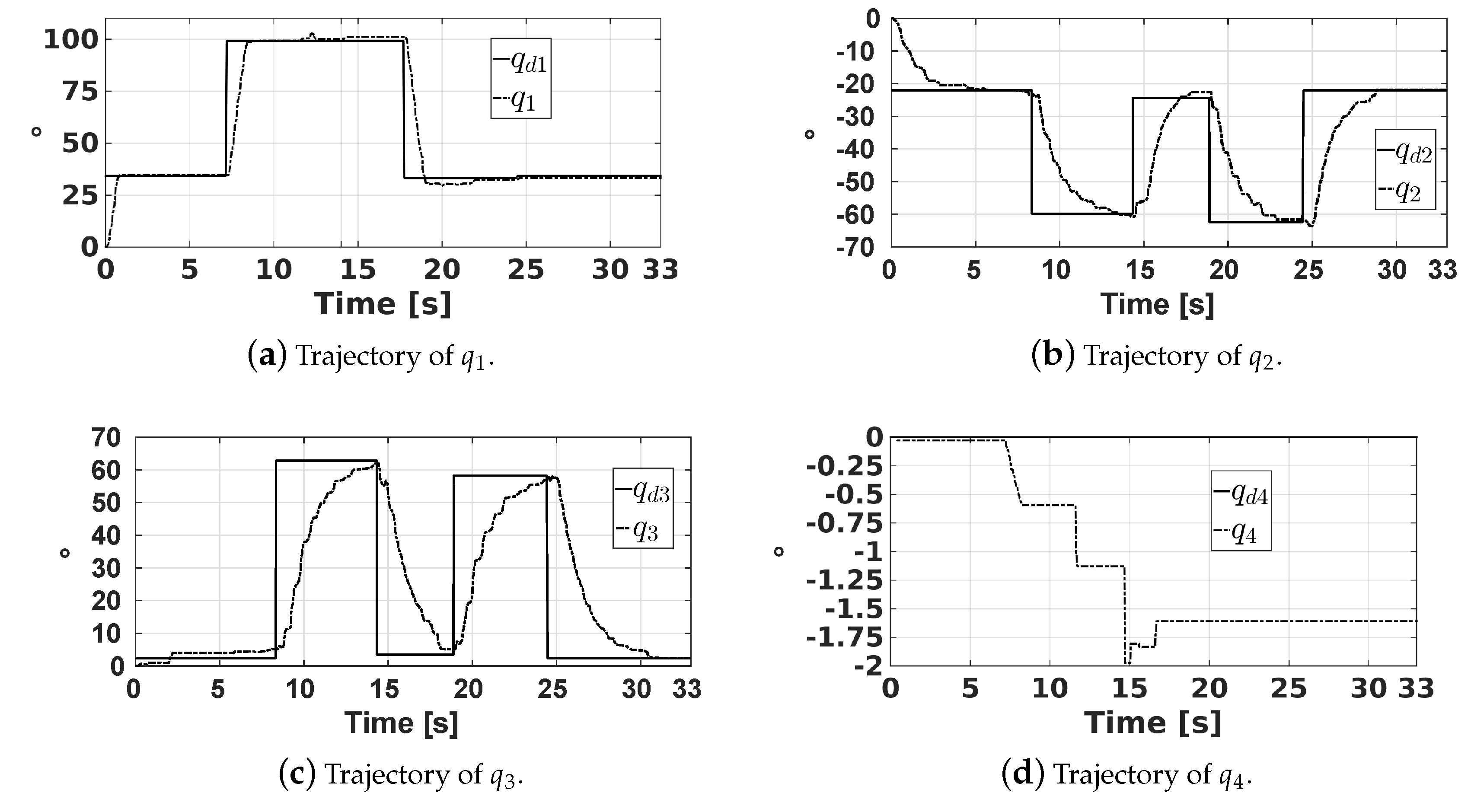

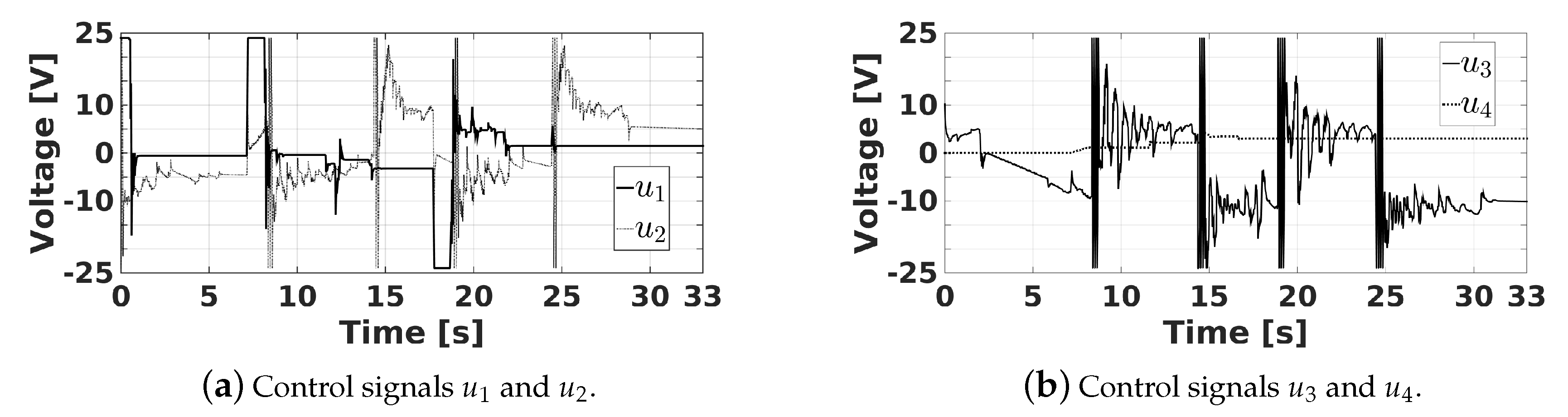

10. Experimental Results

11. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Joint | Lower Limit (°) | Upper Limit (°) |
|---|---|---|
| | 25 | 335 |
| | 0 | 120 |
| | −66 | 116 |
| | −90 | −45 |

| Actuated Joint | Estimate [s⁻¹] | Estimate [V⁻¹ s⁻²] |
|---|---|---|
| Waist | | |
| Shoulder | | |
| Elbow | | |
| Wrist | | |

| Actuated Joint | | | | | | |
|---|---|---|---|---|---|---|
| Body | 64 | 0 | 16 | 0 | 13.8 | 140.65 |
| Shoulder | 56 | 40 | 20 | 700 | 10.9 | 142.09 |
| Elbow | 61 | 40 | 18.5 | 700 | 18.5 | 141.41 |
| Wrist | 64 | 0 | 24 | 0 | 7 | 143.02 |

| Detected Color | Limits for the Grayscale Image of the Red Plane | Limits for the Grayscale Image of the Green Plane | Limits for the Grayscale Image of the Blue Plane |
|---|---|---|---|
| Red | | | |
| Yellow | | | |

| Object | Cartesian Space Position Errors | Joint Space Position Errors |
|---|---|---|
| Yellow circle | −5.15 mm | −1.01° |
| | 0.22 mm | 0.40° |
| | −1.72 mm | 1.62° |
| | 2.26° | 1.15° |
| Yellow square | 0.09 mm | −0.43° |
| | −5.63 mm | 0.32° |
| | −1.56 mm | 1.30° |
| | −0.17° | −0.11° |
| Red circle | 0.37 mm | 0.04° |
| | 0.45 mm | −0.04° |
| | −2.17 mm | 1.30° |
| | 2.05° | 1.04° |
| Red square | −1.87 mm | −0.68° |
| | −5.30 mm | −0.14° |
| | −2.81 mm | 2.12° |
| | 1.11° | 0.58° |
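
As a rough cross-check of the table above, the following sketch computes the largest absolute Cartesian error component for each object. It assumes that the three millimetre entries listed per object are position errors along three Cartesian axes (the axis labels do not appear in the table) and it ignores the angular entry. The names `cartesian_errors_mm` and `max_abs_error` are illustrative and are not taken from the authors' software.

```python
# Cartesian position errors in mm, copied from the table above.
# Assumption: the three values per object are errors along three Cartesian axes.
cartesian_errors_mm = {
    "Yellow circle": (-5.15, 0.22, -1.72),
    "Yellow square": (0.09, -5.63, -1.56),
    "Red circle": (0.37, 0.45, -2.17),
    "Red square": (-1.87, -5.30, -2.81),
}


def max_abs_error(errors):
    """Return the largest absolute component of a tuple of errors."""
    return max(abs(e) for e in errors)


# Largest absolute component per object, then the worst case over all objects.
per_object = {name: max_abs_error(err) for name, err in cartesian_errors_mm.items()}
worst_case = max(per_object.values())

for name, value in per_object.items():
    print(f"{name}: {value:.2f} mm")
print(f"Worst case: {worst_case:.2f} mm")
```

Under these assumptions the worst-case component is 5.63 mm (yellow square), which is of the same order as the 6 mm accuracy listed for the proposed recycled robot in the platform comparison table.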

| Platform | Accuracy |
|---|---|
| Proposed recycled robot | 6 mm |
| Manufacturer of the ED-7220C robot | 0.5 mm |
| AUTAREP | 2 m |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Concha Sánchez, A.; Figueroa-Rodríguez, J.F.; Fuentes-Covarrubias, A.G.; Fuentes-Covarrubias, R.; Gadi, S.K. Recycling and Updating an Educational Robot Manipulator with Open-Hardware-Architecture. Sensors 2020, 20, 1694. https://doi.org/10.3390/s20061694
