Ambiguity-Free Optical–Inertial Tracking for Augmented Reality Headsets

Abstract

1. Introduction

- the presence of poorly calibrated tracking cameras;

- the presence of inaccuracies in the feature detection that may lead to numerical instability and tracking ambiguities, particularly for those tracking strategies that rely on a reduced number of feature points;

- the limited frame rate of the tracking cameras typically mounted on AR headsets (60 Hz at most);

- the presence of noise due to head movements affecting the quality of the tracking;

- the presence of occlusions on the line of sight between the user wearing the AR headset and the target scene;

- the latency typical of purely optical tracking methods, which results in misregistration between virtual content and the real world in optical see-through (OST) headsets, or in delayed perception of reality in video see-through (VST) HMDs [16].

2. Related Works

3. Materials and Methods

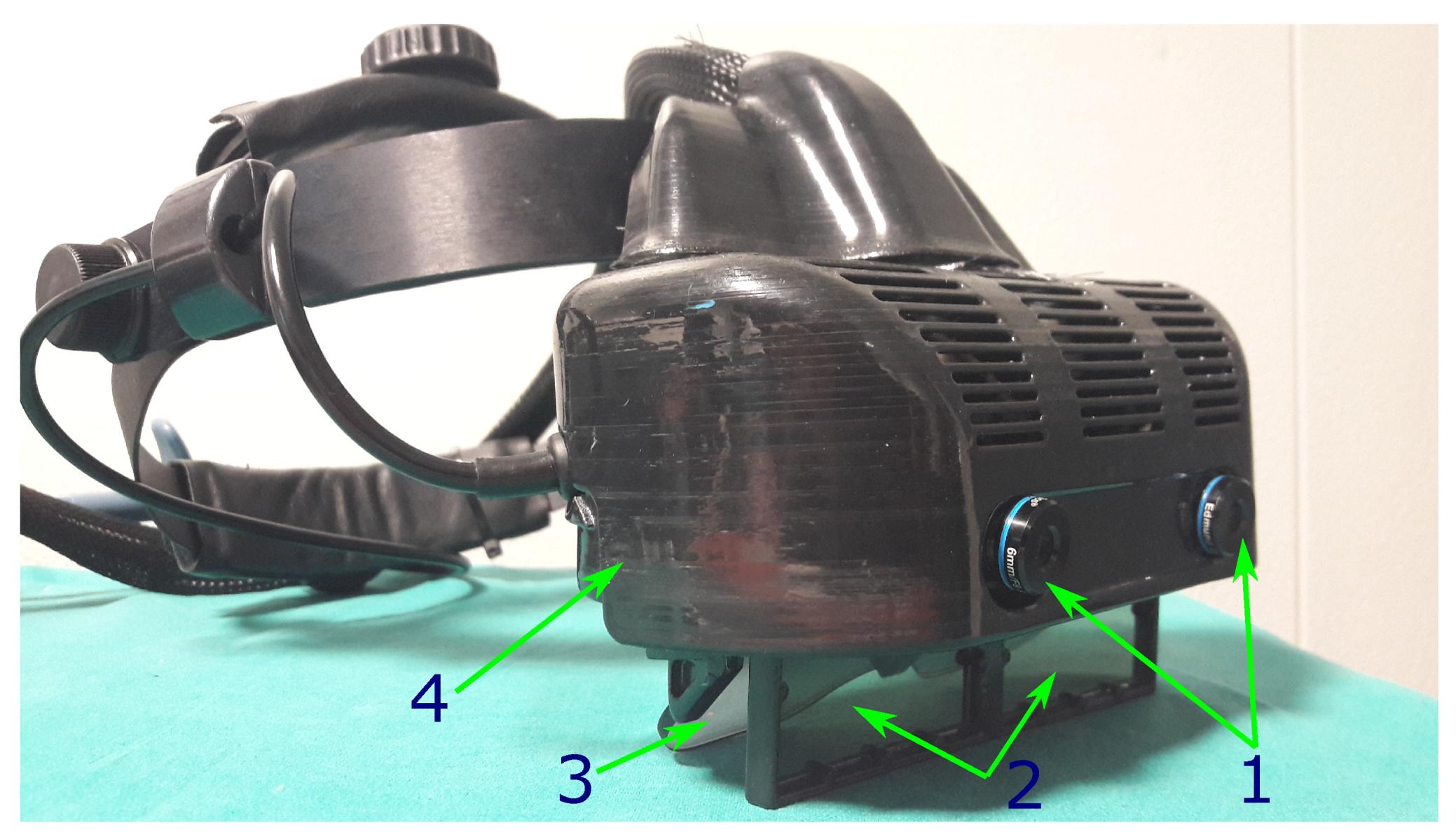

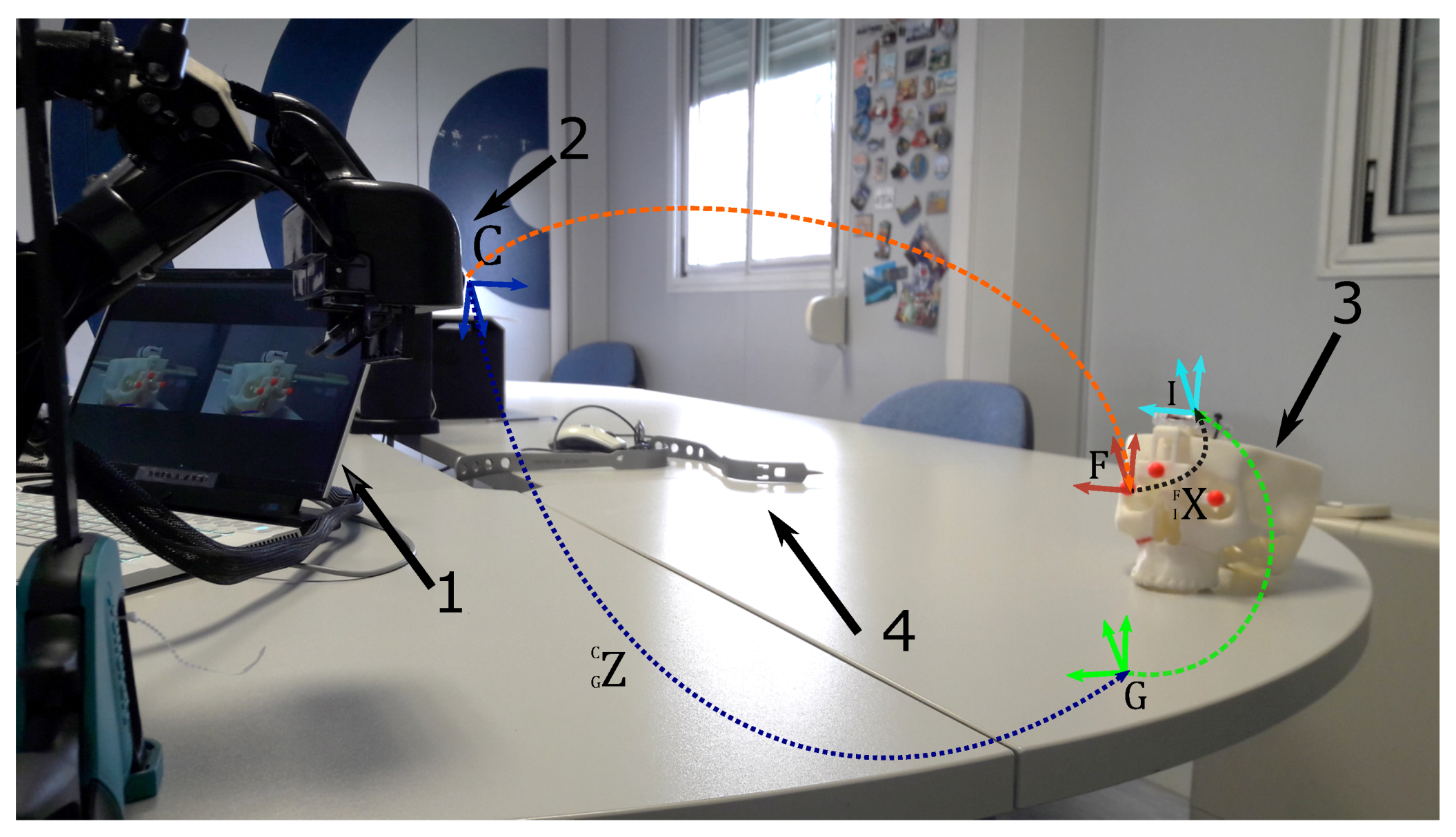

3.1. Hardware

3.2. AR Software Framework

- The software is capable of supporting the deployment of AR applications on different headsets (both VST and OST HMDs) and it features a non-distributed architecture, which makes it compatible with embedded computing units.

- The software framework is based on Compute Unified Device Architecture (CUDA) in order to harness the power of parallel computing over the GPU cores of the graphic card. This architecture makes the software framework computationally efficient in terms of frame rate and latency: the average frame rate of the AR application is fps.

- The software is suited to deliver in situ visualization of medical imaging data, thanks to the employment of the open-source computer library VTK for 3D computer graphics, modelling, and volume rendering of medical images [37].

- The software framework is highly configurable in terms of rendering and tracking capabilities.

- The software can deliver both optical and video see-through-based augmentations.

- The software features a robust optical self-tracking mechanism (i.e., inside-out tracking) based on the OpenCV API 3.3.1 [38], which relies on the stereo localization of a set of spherical markers (i.e., the optical frame), as described in more detail in the next subsection.

Optical Inside-Out Tracking Algorithm

- Markers detection.

- Stereo matching.

- First stage of the camera pose estimation through the unambiguous closed-form solution of the absolute orientation problem with three points (i.e., estimation of the rigid transformation that aligns the two sets of corresponding triplets of 3D points). Hereafter, we label this pose as .

- Second stage of the camera pose estimation through an iterative optimization method. Hereafter, we label this pose as .
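The closed-form first stage listed above can be illustrated with a standard SVD-based least-squares alignment of two 3D point sets, in the spirit of [40]. The Python sketch below is only a minimal illustration: it assumes that the three marker centers have already been triangulated in the camera frame and matched to their model counterparts, and the names `absolute_orientation`, `model_pts`, and `camera_pts` are hypothetical, not part of the framework described here.

```python
import numpy as np

def absolute_orientation(model_pts, camera_pts):
    """Closed-form rigid transform (R, t) with camera_pts ~= R @ model_pts + t.

    Both inputs are (N, 3) arrays of corresponding, non-collinear 3D points
    (N >= 3). Names and conventions are assumptions made for this sketch.
    """
    model_pts = np.asarray(model_pts, dtype=float)
    camera_pts = np.asarray(camera_pts, dtype=float)
    model_centroid = model_pts.mean(axis=0)
    camera_centroid = camera_pts.mean(axis=0)
    # 3x3 cross-covariance of the centered point sets
    H = (model_pts - model_centroid).T @ (camera_pts - camera_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = camera_centroid - R @ model_centroid
    return R, t
```

With exactly three points the cross-covariance matrix has rank two, so the determinant check is what selects the rotation rather than its mirror image. A pose obtained this way could then serve as the starting point of an iterative refinement such as the second stage listed above.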

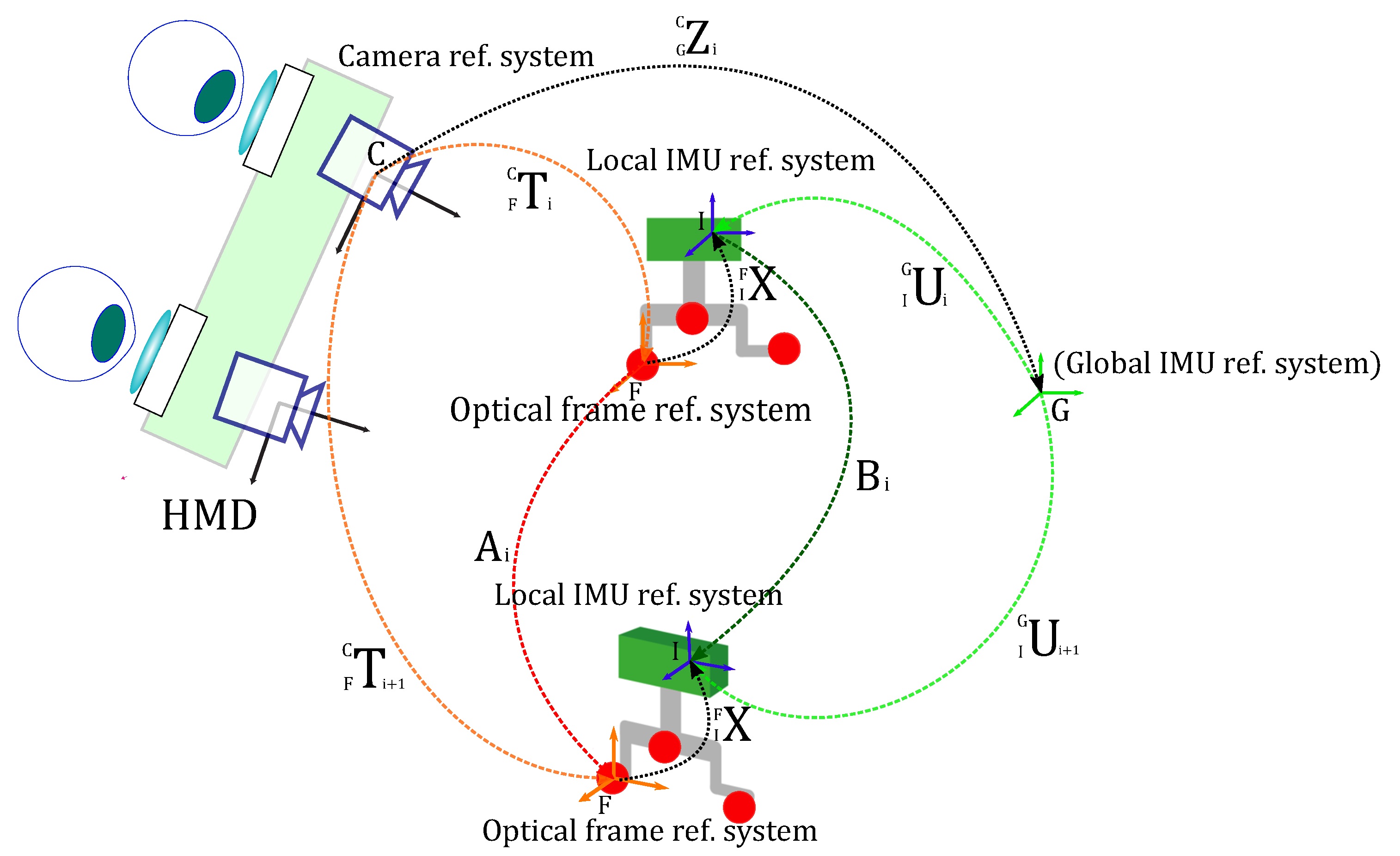

3.3. Calibration Procedure for Orientation Alignment of Inertial and Optical Coordinate Systems

- Given n-1 pairs of consecutive arbitrary poses between the optical frame reference system and the tracking camera reference system , is the rotation matrix that describes the relative orientation between each pair.

- Given n-1 pairs of consecutive arbitrary poses between the local IMU reference system and the global IMU reference system , is the rotation matrix that describes the relative orientation between each pair.

- is the unknown rotation matrix between the and .

- and are the orientation of the optical frame with respect to the tracking camera in terms of rotation matrices, with the scene object at the i and pose respectively. These tracking data are recorded by querying the tracking camera.

- and are the orientation of the IMU with respect to the global inertial reference system in terms of rotation matrices, with the scene object at the i and pose respectively. These tracking data are recorded by querying the IMU sensor.
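A calibration of this kind is commonly cast as a rotation-only AX = XB problem [52]. The Python sketch below is a hedged illustration, not the authors' procedure: it assumes that the relative rotations of the optical data (`A_list`) and of the inertial data (`B_list`) have already been formed from consecutive poses and that they satisfy A_i X = X B_i for the unknown rotation X. All names are hypothetical. Because A_i = X B_i Xᵀ implies that the rotation vectors satisfy α_i = X β_i, the unknown can be recovered by aligning the two sets of rotation vectors.

```python
import numpy as np

def rotation_vector(R):
    """Axis-angle vector (log map) of a rotation matrix; assumes 0 < angle < pi."""
    angle = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    axis = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return angle * axis / (2.0 * np.sin(angle))

def solve_ax_xb_rotation(A_list, B_list):
    """Least-squares rotation X such that A_i @ X ~= X @ B_i for every pair.

    Requires at least two pairs whose rotation axes are not parallel.
    """
    alphas = np.array([rotation_vector(A) for A in A_list])
    betas = np.array([rotation_vector(B) for B in B_list])
    # Orthogonal Procrustes problem: find X minimizing sum ||alpha_i - X beta_i||^2
    H = betas.T @ alphas
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    return Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
```

Using many pairs of poses with well-spread rotation axes makes the estimate less sensitive to noise in any single optical or inertial measurement.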

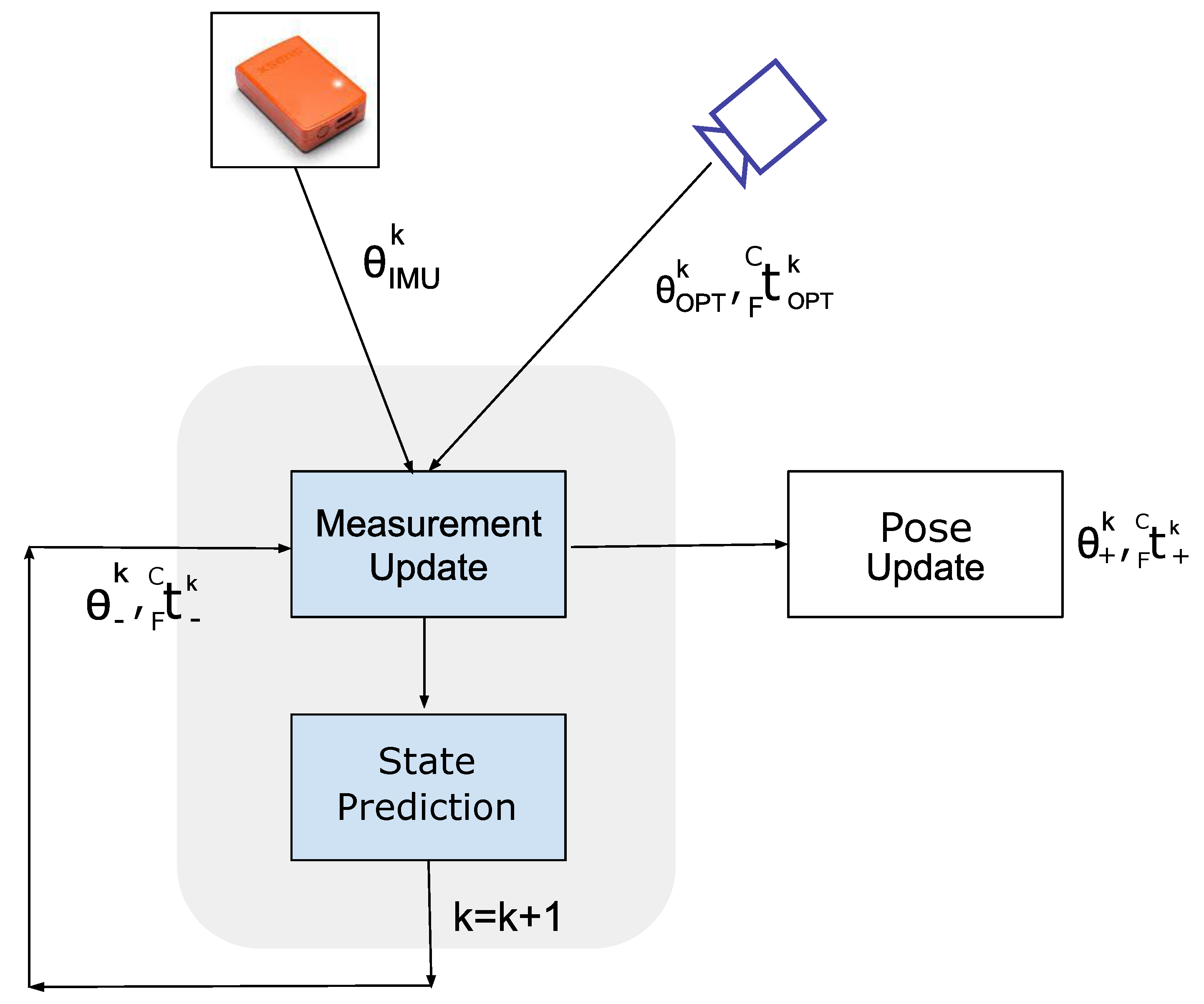

3.4. Sensor Fusion Based on Kalman Filter

4. Experiments and Results

- The IMU data were down-sampled by a factor of two to match the sampling rate of the optical data.

- Using the IMU data and the calibration data (see Section 3.3), the orientation of the target scene with respect to the tracking camera in terms of rotation matrices was determined.

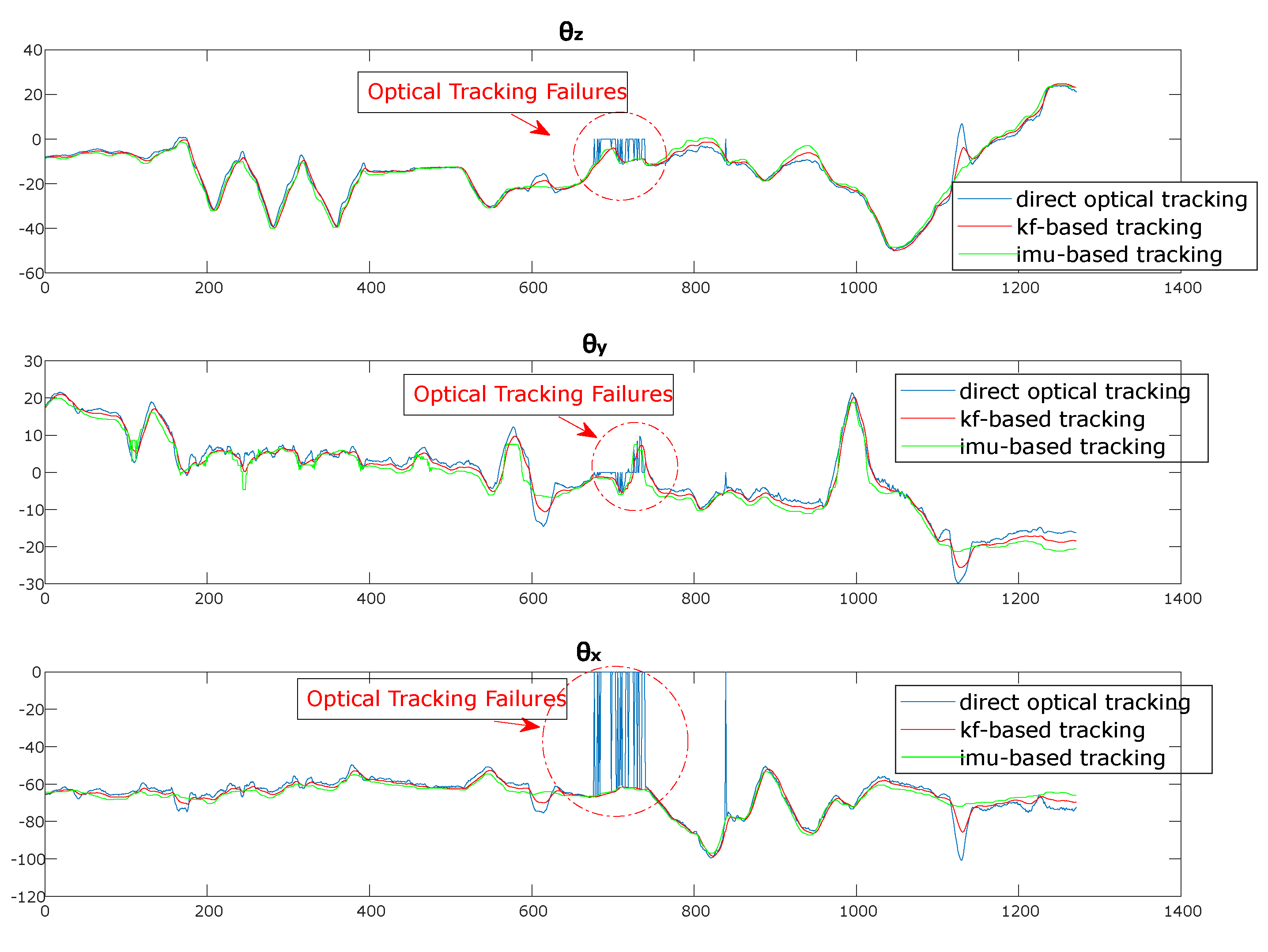

- The two time series of the Euler angles associated to and were synchronized through cross-correlation.
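The synchronization step can be sketched as follows. This is a minimal illustration for a single Euler-angle channel, assuming both series share the same sampling rate after down-sampling; the function name `estimate_lag` and the plain decimation `imu_angle[::2]` (without anti-aliasing filtering) are assumptions of this sketch rather than details taken from the experiments.

```python
import numpy as np

def estimate_lag(reference, delayed):
    """Samples by which `delayed` lags `reference` (negative if it leads)."""
    a = np.asarray(reference, dtype=float)
    b = np.asarray(delayed, dtype=float)
    a = a - a.mean()  # remove offsets so the peak reflects signal shape only
    b = b - b.mean()
    xcorr = np.correlate(b, a, mode="full")
    # Index 0 of the "full" output corresponds to a lag of -(len(a) - 1)
    return int(np.argmax(xcorr)) - (len(a) - 1)

def align(reference, delayed):
    """Trim both series so that corresponding samples share the same index."""
    lag = estimate_lag(reference, delayed)
    if lag >= 0:
        reference, delayed = reference[: len(reference) - lag], delayed[lag:]
    else:
        reference, delayed = reference[-lag:], delayed[: len(delayed) + lag]
    n = min(len(reference), len(delayed))
    return reference[:n], delayed[:n]
```

In a setting like the one described above, the IMU series would first be decimated, for example `imu_angle = imu_angle[::2]`, and then aligned against the optical series with `align(optical_angle, imu_angle)`.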

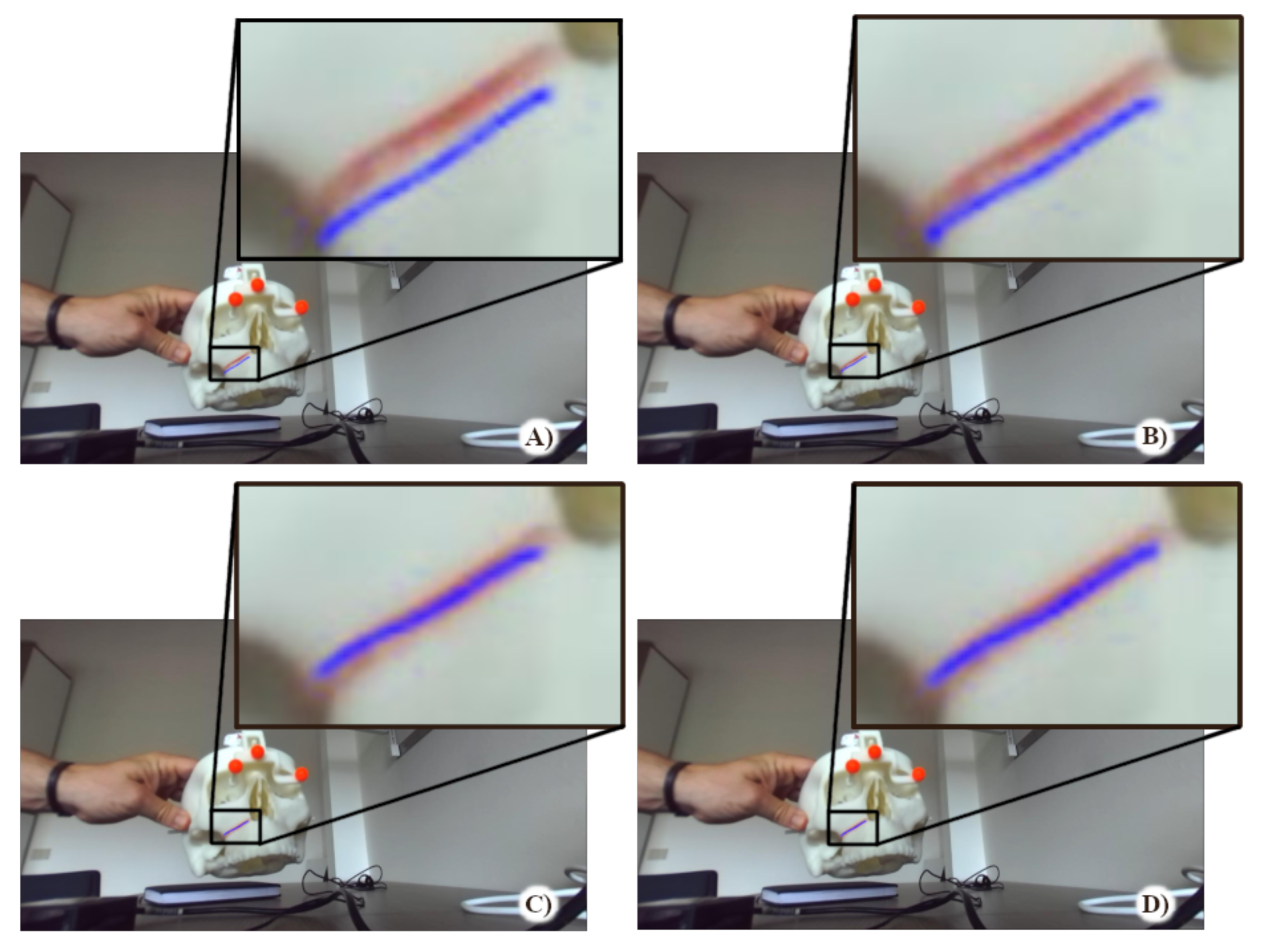

4.1. Quantitative Evaluation of Virtual-to-Real Overlay Accuracy

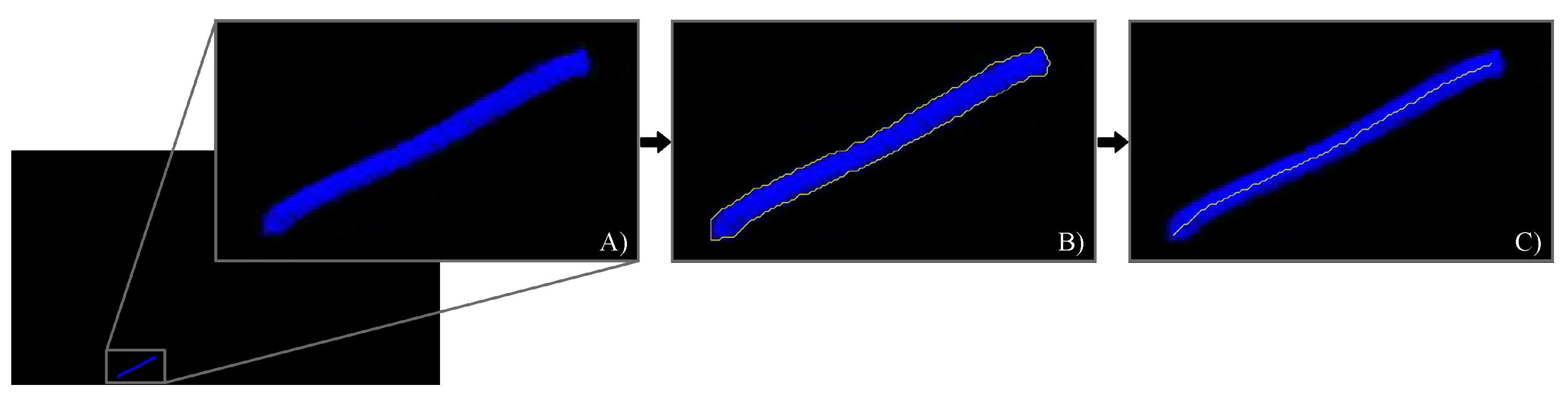

- The images containing the left camera views of the real scene with the real osteotomy line were exported as a series of .png files at the camera resolution (1280 × 720) (Figure 7A).

- The associated virtual images, with the virtual osteotomy line rendered as dictated by the tracking data for each modality, were exported as a series of .png files with the same image resolution as the real images (Figure 8A).

- The real images were converted to the HSV (hue, saturation, and value) color model to improve the robustness of the feature detection algorithm. The red fluorescent pigmentation used to highlight the real osteotomy peaks in the S channel of the HSV color space, which allowed the proper detection of structures that undergo non-uniform levels of illumination intensity, shadows, and shading [50,55]. An active contour image segmentation technique was iteratively applied to the HSV-converted real images. Specifically, a coarse boundary was manually selected for the first image of the real group, and the detection algorithm autonomously adapted the contour to best fit the profile of the real osteotomy on the maxillary bone of the skull. The subsequent images were processed without user input, each using as its coarse outline the contour resulting from the previous iteration. The active contour algorithm follows the procedure described in [56]. This technique uses Mumford–Shah segmentation to stop the evolving curve on the desired boundary, and it performs well even in the presence of smooth boundaries. The output of this first part of the processing is shown in Figure 7B. The resulting contour was then thinned into a line by removing pixels according to the algorithm described in [57]. This last step provides the center line illustrated in Figure 7C.

- The osteotomy line detection in the virtual images required a simpler approach than the previous one. As shown in Figure 8, the set of virtual images is characterized by a homogeneous black background. This prevented both the conversion to the HSV color model and the contour-based segmentation procedure. The latter is based on the assumption that the osteotomy line in the image does not undergo rapid or sudden displacements. This condition is satisfied for the set of real images sampled at 60 Hz, but it is not satisfied for those virtual images that may undergo “jerky” movements due to tracking inaccuracies or ambiguities, which are in turn caused by variable lighting conditions and degraded tracking camera calibrations. Each virtual image was therefore segmented using a standard threshold technique to obtain the boundary (Figure 8B). As in the case of the real images, the boundary was then thinned to obtain the center line highlighted in Figure 8C.

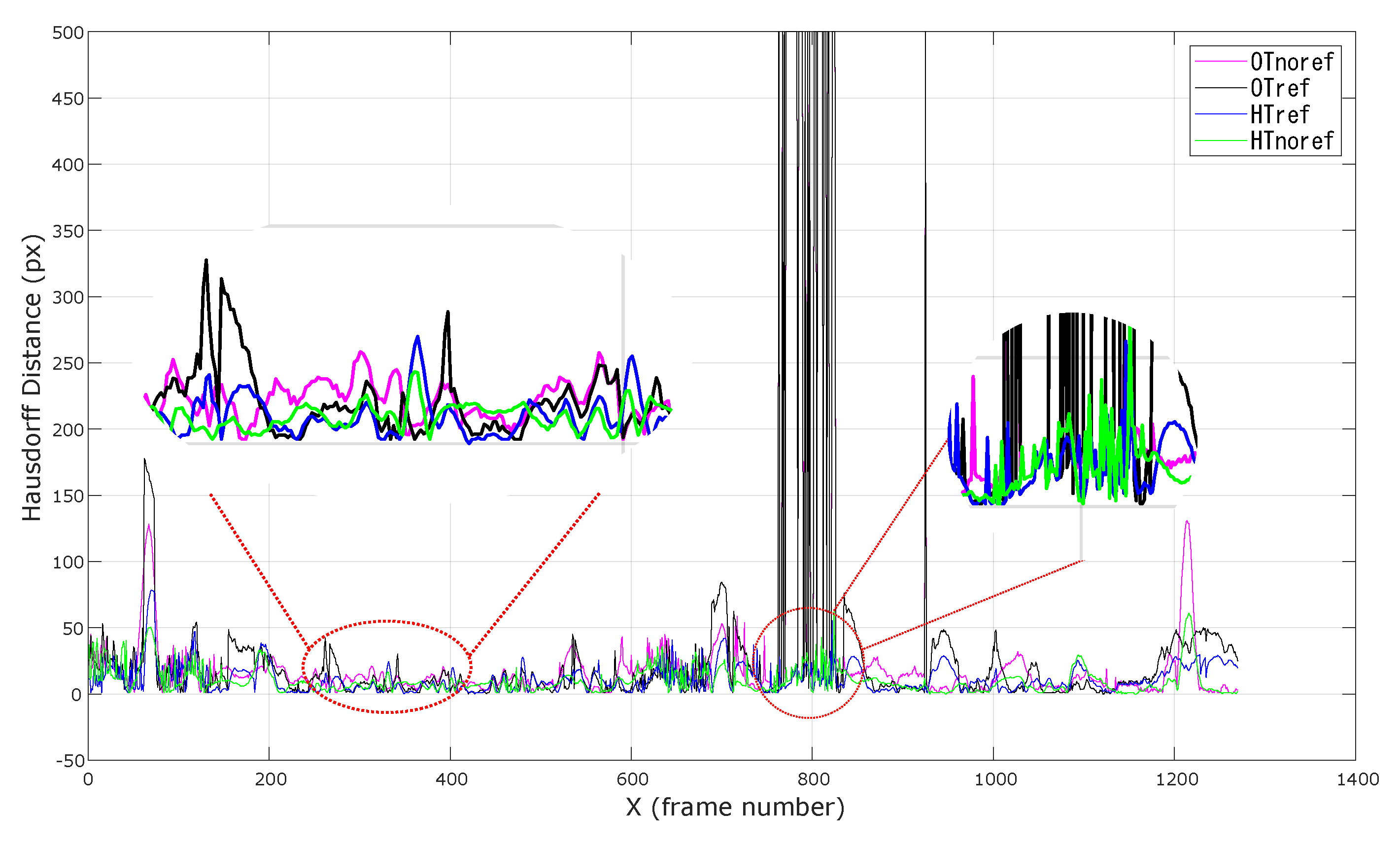

- The overlay error between real and virtual content was computed, for each pair of real and virtual frames, as the Hausdorff distance between the two center lines.
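The final step of the list above can be sketched in a few lines of Python. The example is a minimal illustration under stated assumptions: the two center lines are available as binary masks of equal size, the symmetric Hausdorff distance is used, and the helper names `line_pixels` and `hausdorff_distance` are hypothetical. The thinning step is not reproduced here.

```python
import numpy as np

def line_pixels(mask):
    """(row, col) coordinates of the non-zero pixels of a binary center-line mask."""
    return np.argwhere(np.asarray(mask) > 0)

def hausdorff_distance(points_a, points_b):
    """Symmetric Hausdorff distance, in pixels, between two 2D point sets."""
    a = np.asarray(points_a, dtype=float)
    b = np.asarray(points_b, dtype=float)
    # (N, M) matrix of Euclidean distances between every pair of points
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    # Largest nearest-neighbor distance, taken in both directions
    return max(d.min(axis=1).max(), d.min(axis=0).max())

# Synthetic example: two parallel horizontal lines, three pixel rows apart
real_mask = np.zeros((20, 20), dtype=np.uint8)
virtual_mask = np.zeros((20, 20), dtype=np.uint8)
real_mask[5, 2:18] = 1
virtual_mask[8, 2:18] = 1
error_px = hausdorff_distance(line_pixels(real_mask), line_pixels(virtual_mask))
```

Because the Hausdorff distance is driven by the worst-matching point, a single badly placed segment of the virtual line dominates the error for that frame, which makes the metric sensitive to the sudden displacements caused by tracking ambiguities.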

4.2. Results and Discussion

5. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AR | Augmented reality |
| VST | Video see-through |
| OST | Optical see-through |
| IMU | Inertial measurement unit |
| GPS | Global Positioning System |
| KF | Kalman filter |
| EKF | Extended Kalman filter |

Appendix A

References

1. Grubert, J.; Itoh, Y.; Moser, K.; Swan, J.E. A Survey of Calibration Methods for Optical See-Through Head-Mounted Displays. IEEE Trans. Vis. Comput. Graph. 2018, 24, 2649–2662.

2. Cutolo, F.; Fida, B.; Cattari, N.; Ferrari, V. Software Framework for Customized Augmented Reality Headsets in Medicine. IEEE Access 2020, 8, 706–720.

3. Cutolo, F.; Parchi, P.D.; Ferrari, V. Video see through AR head-mounted display for medical procedures. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 393–396.

4. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

5. Park, J.; You, S.; Neumann, U. Natural Feature Tracking for Extendible Robust Augmented Realities. In International Workshop on Augmented Reality: Placing Artificial Objects in Real Scenes (IWAR1998); A. K. Peters, Ltd.: Natick, MA, USA, 1999; pp. 209–217.

6. Davison, A.J.; Mayol, W.W.; Murray, D.W. Real-Time Localisation and Mapping with Wearable Active Vision. In Proceedings of the Second IEEE and ACM International Symposium on Mixed and Augmented Reality, Tokyo, Japan, 10 October 2003; pp. 18–27.

7. Bleser, G.; Wuest, H.; Stricker, D. Online camera pose estimation in partially known and dynamic scenes. In Proceedings of the 2006 IEEE/ACM International Symposium on Mixed and Augmented Reality (ISMAR2006), Santa Barbara, CA, USA, 22–25 October 2006; pp. 56–65.

8. Klein, G.; Murray, D. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

9. Lepetit, V.; Fua, P. Monocular Model-Based 3D Tracking of Rigid Objects: A Survey. Found. Trends Comput. Graphics Vis. 2005, 1, 1–89.

10. Kato, H.; Billinghurst, M. Marker Tracking and HMD Calibration for a Video-Based Augmented Reality Conferencing System. In Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality, San Francisco, CA, USA, 20–21 October 1999; pp. 85–94.

11. Vávra, P.; Roman, J.; Zonča, P.; Ihnát, P.; Němec, M.; Kumar, J.; Habib, N.; El-Gendi, A. Recent Development of Augmented Reality in Surgery: A Review. J. Healthc. Eng. 2017, 2017, 4574172.

12. Cutolo, F. Letter to the Editor on “Augmented Reality Based Navigation for Computer Assisted Hip Resurfacing: A Proof of Concept Study”. Ann. Biomed. Eng. 2019, 47, 2151–2153.

13. Ferrari, V.; Cutolo, F.; Calabrò, E.M.; Ferrari, M. [Poster] HMD Video see though AR with unfixed cameras vergence. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 265–266.

14. Kytö, M.; Nuutinen, M.; Oittinen, P. Method for measuring stereo camera depth accuracy based on stereoscopic vision. In Proceedings of the Three-Dimensional Imaging, Interaction, and Measurement, San Francisco, CA, USA, 24–27 January 2011; pp. 168–176.

15. Cutolo, F.; Ferrari, V. The Role of Camera Convergence in Stereoscopic Video See-through Augmented Reality Displays. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 12–17.

16. Sielhorst, T.; Sa, W.; Khamene, A.; Sauer, F.; Navab, N. Measurement of absolute latency for video see through augmented reality. In Proceedings of the 6th IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR2007), Nara, Japan, 13–16 November 2007; pp. 215–220.

17. Badiali, G.; Ferrari, V.; Cutolo, F.; Freschi, C.; Caramella, D.; Bianchi, A.; Marchetti, C. Augmented reality as an aid in maxillofacial surgery: Validation of a wearable system allowing maxillary repositioning. J. Craniomaxillofac Surg. 2014, 42, 1970–1976.

18. Cutolo, F.; Carbone, M.; Parchi, P.D.; Ferrari, V.; Lisanti, M.; Ferrari, M. Application of a New Wearable Augmented Reality Video See-Through Display to Aid Percutaneous Procedures in Spine Surgery. In Proceedings of the Augmented Reality, Virtual Reality, and Computer Graphics (AVR2016), Lecce, Italy, 15–18 June 2016; De Paolis, L.T., Mongelli, A., Eds.; Springer: Berlin, Germany, 2016; pp. 43–54.

19. Cutolo, F.; Meola, A.; Carbone, M.; Sinceri, S.; Cagnazzo, F.; Denaro, E.; Esposito, N.; Ferrari, M.; Ferrari, V. A new head-mounted display-based augmented reality system in neurosurgical oncology: A study on phantom. Comput. Assist. Surg. 2017, 22, 39–53.

20. Gao, X.S.; Hou, X.R.; Tang, J.; Cheng, H.F. Complete solution classification for the perspective-three-point problem. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 930–943.

21. Aron, M.; Simon, G.; Berger, M.O. Use of inertial sensors to support video tracking. Comput. Animat. Virt. Worlds 2007, 18, 57–68.

22. State, A.; Hirota, G.; Chen, D.T.; Garrett, W.F.; Livingston, M.A. Superior Augmented Reality Registration by Integrating Landmark Tracking and Magnetic Tracking. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, LA, USA, 4–9 August 1996; Association for Computing Machinery: New York, NY, USA, 1996; pp. 429–438.

23. Yokokohji, Y.; Sugawara, Y.; Yoshikawa, T. Accurate image overlay on video see-through HMDs using vision and accelerometers. In Proceedings of the IEEE Virtual Reality 2000 (Cat. No.00CB37048), New Brunswick, NJ, USA, 18–22 March 2000; pp. 247–254.

24. Satoh, K.; Anabuki, M.; Yamamoto, H.; Tamura, H. A hybrid registration method for outdoor augmented reality. In Proceedings of the IEEE and ACM International Symposium on Augmented Reality, New York, NY, USA, 29–30 October 2001; pp. 67–76.

25. Jiang, B.; Neumann, U.; Suya, Y. A robust hybrid tracking system for outdoor augmented reality. In Proceedings of the IEEE Virtual Reality 2004, Chicago, IL, USA, 27–31 March 2004; pp. 3–275.

26. Klein, G.; Drummond, T. Tightly Integrated Sensor Fusion for Robust Visual Tracking. In Proceedings of the British Machine Vision Conference (BMVC’02), Cardiff, UK, 2–5 September 2002; pp. 787–796.

27. Bleser, G.; Stricker, D. Advanced tracking through efficient image processing and visual-inertial sensor fusion. In Proceedings of the 2008 IEEE Virtual Reality Conference, Reno, NV, USA, 8–12 March 2008; pp. 137–144.

28. Ercan, A.O.; Erdem, A.T. On sensor fusion for head tracking in augmented reality applications. In Proceedings of the 2011 American Control Conference, San Francisco, CA, USA, 29 June–1 July 2011; pp. 1286–1291.

29. Oskiper, T.; Samarasekera, S.; Kumar, R. Multi-sensor navigation algorithm using monocular camera, IMU and GPS for large scale augmented reality. In Proceedings of the 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, 5–8 November 2012; pp. 71–80.

30. Menozzi, A.; Clipp, B.; Wenger, E.; Heinly, J.; Dunn, E.; Towles, H.; Frahm, J.; Welch, G. Development of vision-aided navigation for a wearable outdoor augmented reality system. In Proceedings of the 2014 IEEE/ION Position, Location and Navigation Symposium - PLANS 2014, Monterey, CA, USA, 5–8 May 2014; pp. 460–472.

31. He, H.; Li, Y.; Guan, Y.; Tan, J. Wearable Ego-Motion Tracking for Blind Navigation in Indoor Environments. IEEE Trans. Autom. Sci. Eng. 2015, 12, 1181–1190.

32. Qian, K.; Zhao, W.; Ma, Z.; Ma, J.; Ma, X.; Yu, H. Wearable-Assisted Localization and Inspection Guidance System Using Egocentric Stereo Cameras. IEEE Sens. J. 2018, 18, 809–821.

33. Trivisio, Lux Prototyping. Available online: https://www.trivisio.com/ (accessed on 1 March 2020).

34. Fontana, U.; Cutolo, F.; Cattari, N.; Ferrari, V. Closed-Loop Calibration for Optical See-Through Near Eye Display with Infinity Focus. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct 2018), Munich, Germany, 16–20 October 2018; pp. 51–56.

35. Cutolo, F.; Fontana, U.; Ferrari, V. Perspective Preserving Solution for Quasi-Orthoscopic Video See-Through HMDs. Technologies 2018, 6, 9.

36. Cattari, N.; Cutolo, F.; D’amato, R.; Fontana, U.; Ferrari, V. Toed-in vs Parallel Displays in Video See-Through Head-Mounted Displays for Close-Up View. IEEE Access 2019, 7, 159698–159711.

37. VTK, The Visualization Toolkit. Available online: https://vtk.org/ (accessed on 1 March 2020).

38. OpenCV, Open Source Computer Vision Library. Available online: https://opencv.org/ (accessed on 1 March 2020).

39. Lee, J.; Park, J. Reducing Gross Errors of Perspective 3-point Pose Computation. In Proceedings of the Convergence and Hybrid Information Technology, Daejeon, Korea, 23–25 August 2012; Lee, G., Howard, D., Kang, J.J., Ślęzak, D., Eds.; Springer Berlin Heidelberg: Berlin, Germany, 2012; pp. 303–311.

40. Arun, K.S.; Huang, T.S.; Blostein, S.D. Least-Squares Fitting of Two 3-D Point Sets. IEEE Trans. Pattern Anal. Mach. Intell. 1987, PAMI-9, 698–700.

41. Cutolo, F.; Freschi, C.; Mascioli, S.; Parchi, P.D.; Ferrari, M.; Ferrari, V. Robust and Accurate Algorithm for Wearable Stereoscopic Augmented Reality with Three Indistinguishable Markers. Electronics 2016, 5, 59.

42. Chang, C.; Chatterjee, S. Quantization error analysis in stereo vision. In Proceedings of the Conference Record of the Twenty-Sixth Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 26–28 October 1992; pp. 1037–1041.

43. Luhmann, T.; Fraser, C.; Maas, H.G. Sensor modelling and camera calibration for close-range photogrammetry. ISPRS J. Photogramm. Remote Sens. 2016, 115, 37–46.

44. Wu, Y.; Hu, Z. PnP Problem Revisited. J. Math. Imaging Vis. 2006, 24, 131–141.

45. Ting, W.; Yuecao, W.; Chen, Y. Some Discussion on the Conditions of the Unique Solution of P3P Problem. In Proceedings of the 2006 International Conference on Mechatronics and Automation, Luoyang, China, 25–28 June 2006; pp. 205–210.

46. Faugère, J.C.; Moroz, G.; Rouillier, F.; El Din, M.S. Classification of the Perspective-Three-Point Problem, Discriminant Variety and Real Solving Polynomial Systems of Inequalities. In Proceedings of the Twenty-First International Symposium on Symbolic and Algebraic Computation, Hagenberg, Austria, 20–23 July 2008; pp. 79–86.

47. Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 2008, 81, 155.

48. Garro, V.; Crosilla, F.; Fusiello, A. Solving the PnP Problem with Anisotropic Orthogonal Procrustes Analysis. In Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission, Zurich, Switzerland, 13–15 October 2012; pp. 262–269.

49. Lu, C.; Hager, G.D.; Mjolsness, E. Fast and globally convergent pose estimation from video images. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 610–622.

50. Diotte, B.; Fallavollita, P.; Wang, L.; Weidert, S.; Euler, E.; Thaller, P.; Navab, N. Multi-Modal Intra-Operative Navigation During Distal Locking of Intramedullary Nails. IEEE Trans. Med. Imaging 2015, 34, 487–495.

51. He, C.; Kazanzides, P.; Sen, H.T.; Kim, S.; Liu, Y. An Inertial and Optical Sensor Fusion Approach for Six Degree-of-Freedom Pose Estimation. Sensors 2015, 15, 16448–16465.

52. Park, F.C.; Martin, B.J. Robot sensor calibration: solving AX=XB on the Euclidean group. IEEE Trans. Robot. 1994, 10, 717–721.

53. Markley, F.L.; Cheng, Y.; Crassidis, J.L.; Oshman, Y. Averaging Quaternions. J. Guid. Control Dyn. 2007, 30, 1193–1197.

54. Wertz, J.R. Three-Axis Attitude Determination Methods. In Spacecraft Attitude Determination and Control; Springer: Dordrecht, The Netherlands, 1978; pp. 410–435.

55. Mamone, V.; Viglialoro, R.M.; Cutolo, F.; Cavallo, F.; Guadagni, S.; Ferrari, V. Robust Laparoscopic Instruments Tracking Using Colored Strips. In Augmented Reality, Virtual Reality, and Computer Graphics (AVR2017); De Paolis, L.T., Bourdot, P., Mongelli, A., Eds.; Springer: Cham, Switzerland, 2017; pp. 129–143.

56. Chan, T.F.; Sandberg, B.; Vese, L.A. Active Contours without Edges for Vector-Valued Images. J. Vis. Commun. Image Represent. 2000, 11, 130–141.

57. Lam, L.; Lee, S.; Suen, C.Y. Thinning methodologies-a comprehensive survey. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 869–885.

58. Lu, X.X. A Review of Solutions for Perspective-n-Point Problem in Camera Pose Estimation. J. Phys. Conf. Ser. 2018, 1087, 052009.

59. Okuma, K.; Taleghani, A.; de Freitas, N.; Little, J.J.; Lowe, D.G. A Boosted Particle Filter: Multitarget Detection and Tracking. In Computer Vision - ECCV 2004; Pajdla, T., Matas, J., Eds.; Springer: Berlin, Germany, 2004; pp. 28–39.

60. Chang, C.; Ansari, R. Kernel particle filter for visual tracking. IEEE Signal Process. Lett. 2005, 12, 242–245.

61. Kosmopoulos, D.I.; Doulamis, N.D.; Voulodimos, A.S. Bayesian filter based behavior recognition in workflows allowing for user feedback. Comput. Vis. Image Underst. 2012, 116, 422–434.

| Tracking Modality | Overlay Error: Mean (px) | Std Dev (px) | Median (px) | MAD (px) |
|---|---|---|---|---|
| | 17.83 | 21.1 | 11 | 14.1 |
| | 14.33 | 18.22 | 10 | 10.14 |
| | 11.96 | 11.28 | 8.6 | 8.29 |
| | 9.67 | 9.92 | 7 | 6.66 |

| Tracking Modality | Overlay Error: Mean (px) | Std Dev (px) | Median (px) | MAD (px) |
|---|---|---|---|---|
| | 69.46 | 150.45 | 13 | 93.9 |
| | 66.34 | 151.2 | 11.2 | 94.75 |
| | 19.58 | 30.57 | 9.96 | 17.44 |
| | 16.7 | 27.9 | 7.62 | 15.87 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cutolo, F.; Mamone, V.; Carbonaro, N.; Ferrari, V.; Tognetti, A. Ambiguity-Free Optical–Inertial Tracking for Augmented Reality Headsets. Sensors 2020, 20, 1444. https://doi.org/10.3390/s20051444
