1. Introduction

A brain–computer interface (BCI) is a system that lets a user control a machine without relying on the peripheral neuromuscular system [1,2]. It can effectively extend the physical abilities of the user [3,4,5]. An event-related potential (ERP) is an evoked potential recorded from the surface of the scalp when a person performs cognitive processing (e.g., attention, memory, thinking) in response to a particular stimulus; it reflects the neurophysiological changes of the brain during cognitive processes [6,7]. Because they require little training and achieve high performance, ERP-based BCI systems are widely used, e.g., for an underwater manipulator [8], consciousness detection [9], and paradigm research [10,11].

ERP-based BCIs share a common problem: their performance decreases when they are applied in a real environment [12,13]. Sound is ubiquitous in daily life. When the subject uses the BCI to output commands, he/she is unavoidably affected by sound [14], such as voices, the footsteps of a walking robot, machine noise, and so on. Whether sound affects the performance of the visual ERP-based BCI is unclear, since there are two different views on its influence. On the one hand, some researchers have shown that performing visual and auditory tasks simultaneously can improve the performance [15], since the redundancy effect shortens the subject’s reaction time [16]. On the other hand, the auditory pathway has an inhibitory effect on the visual pathway [17], so the attention paid to the visual stimulus is affected by the sound [18]. Therefore, this study analyzes the effect of sound in order to find the main cause of the performance decline.

How to evaluate the effect of sound is also a challenge. Table 1 lists some studies on the influence of sound in terms of emotion [19,20], stimulus-onset asynchrony [21], natural sound, and music [22,23,24]. Akinari, O. et al. found that sounds with different emotions influence the auditory BCI; under a very negative sound, the auditory BCI can achieve 84.1% classification accuracy [19]. Affective stimuli, which achieve 90% classification accuracy, may be useful for a practical auditory BCI system for patients with disabilities [20]. Sijie, Z. et al. reported that background music does not impair the auditory BCI and is preferred by users [22]. Baykara E. et al. found that training and enhanced motivation improve performance in an auditory BCI paradigm [23]; we therefore predict that lower motivation will reduce the performance of a visual BCI. Eva M.H. et al. identified many psychological factors that improve the performance of the auditory BCI and the visual BCI [24]. Jeong H. et al. found that an auditory steady-state response (ASSR) BCI achieves 89.67% and 87.67% classification accuracy under music and natural sounds, respectively, because user acceptance increases [25]. Hirotaka N. et al. used a support vector machine to improve the performance of an ASSR-BCI under an additive noise condition; the performance was 10.5% higher than that of a conventional ASSR-BCI under a noiseless condition [26].

This study differs from the work above in two ways. First, most studies explore the influence of sound on the auditory BCI, that is, within a single pathway, whereas we explore the influence of sound on the visual BCI in a dual pathway. Second, this study mainly focuses on whether the speed of the sound affects the BCI, since a change of speed directly changes the amount of information processed by the brain, which in turn changes the workload available for the BCI task. We use a higher-speed auditory task to reduce the brain resources allocated to the visual BCI. In this way, the speeded auditory task reduces the subject’s motivation in using the visual BCI.

Mental workload is the ratio between the brain resources required by the task and the available brain resources of the operator [27,28]. Since the visual BCI requires the subject to maintain a suitable mental workload [29] to reach high performance, this study assumes that sound might influence the BCI performance through its effect on the mental workload. We propose to use the speed of the sound to adjust the mental workload. Here, the speed of the sound is the number of words played per minute, which determines the amount of information received by the brain. More incoming information increases the load of processing it, which might reduce the efficiency of handling the BCI task, since the limited capacity of the brain cannot handle high-speed sound and the BCI task simultaneously. Compared with studies of the auditory BCI, the novelty of this study is a dual task that includes both the visual BCI task and an auditory task. The dual task activates both the visual and auditory pathways in the brain and helps in analyzing the interaction of the two pathways while the subject uses a BCI system.

Two questions are open: whether the speeded auditory task decreases the performance of the visual BCI when it occupies more brain resources, and whether the auditory task has an inhibitory effect on the visual pathway. To test the effect of sound on the visual ERP-based BCI, this study designs a dual-task experiment that requires the subject to perform the visual BCI task and an auditory task simultaneously. The auditory task is designed at three levels to simulate daily situations in which the subject is affected by sound to different degrees. The National Aeronautics and Space Administration Task Load Index (NASA-TLX) is used as the subjective index of the workload. We evaluate the effect by the ERP amplitude, the accuracy rate, and the information transfer rate (ITR). In addition, we train and test the classifier with data collected under the same or different workloads.

The results show that, in the occipital area, the amplitudes of the N200 and P300 potentials decreased from 2.59 μV to 1.90 μV and from 3.20 μV to 2.43 μV, respectively, when the workload increased. This indicates that auditory noise interferes with the ERP induced in the occipital area. High workload also leads to low accuracy and ITR: the accuracy and ITR under the low workload are 90.83% and 27.68 bits/min, which are higher than those under the high workload. As fewer brain resources are available, the visually induced ERP is reduced. The decrease of the ERP amplitude lowers the classification accuracy and ITR, which causes the visual BCI performance to decline. Besides, a large difference between the training data and the testing data also decreases the performance. These findings imply that the auditory task, by increasing the subject’s workload, could make the N200 and P300 potentials differ between the training phase and the testing phase, which influences the visual ERP-based BCI performance. This finding is helpful for designing a more robust visual ERP-based BCI.

Section 1 is the introduction, which describes why the ERP-BCI is widely used and the existing problems of the ERP-BCI. Section 2 introduces the design of the experiment and the evaluation indices. Section 3 compares the subjective fatigue values and the ERP amplitudes, as well as the accuracy and information transfer rates. Section 4 discusses why these values differ across the auditory tasks. Finally, a brief conclusion is given in Section 5.

2. Materials and Methods

2.1. Participants and Data Collection

Ten subjects (nine males) from the Hebei University of Technology with a mean age of 22 years (range 19 to 24) participated in the electroencephalography (EEG) session of this study. All participants had normal or corrected-to-normal vision and were informed about the risk of seizures that flicker stimulation poses to people with epilepsy. They reported never having suffered from epilepsy and gave their written informed consent for inclusion before they participated in the study. One participant was familiar with this experiment, and the rest had no experience. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the ethics committee of the Hebei University of Technology (HEBOThMEC2019001).

All electrode impedances were reduced to 10 kΩ before data recording. EEG signals were sampled at 1000 Hz (SynAmps2, Neuroscan, USA). The ground electrode was placed between the Fpz and Fz channels, and all 36 channels were referenced to the bilateral mastoids.

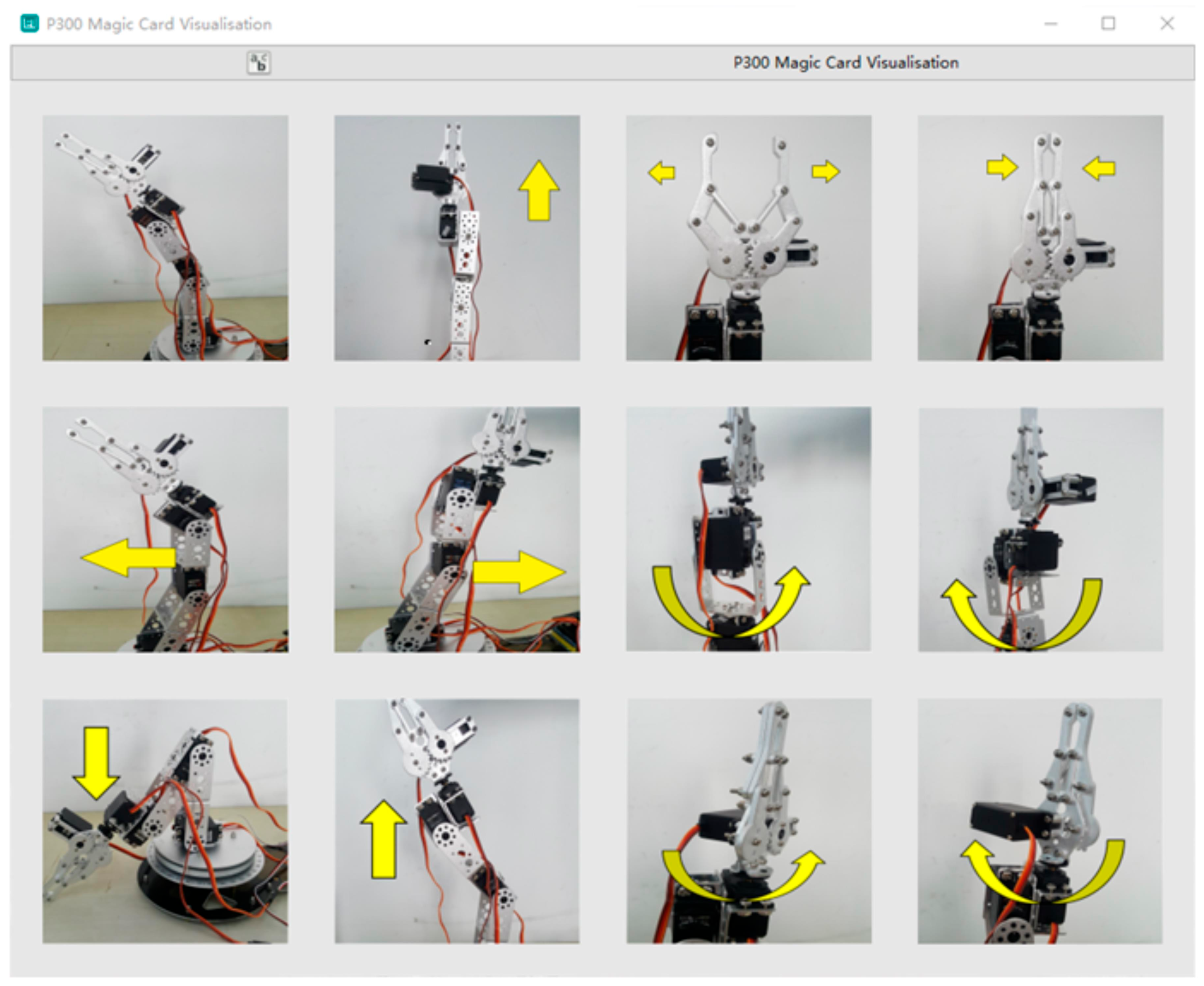

2.2. Visual Interface

Figure 1 shows the interface and protocol developed in the OpenViBE environment. The stimulus interface contains 12 static images of a robotic arm, arranged in a 3 × 4 matrix. Each image represents a robotic arm behavior. To encode the behaviors, we define row i and column j of the matrix as location (i, j), as shown in Table 2. We use this interface to present the visual stimuli. In this oddball paradigm-based interface [30], the images flash randomly one by one. When an image is presented, a black square with a white solid circle masks the others. The image that the subject focuses on is the “target”, and the images that the subject ignores are “non-targets”. At the beginning of the experiment, the subject watches the interface. The twelve images flash in random order: each image flashes for 150 ms, followed by a 75 ms pause, and then the next of the remaining images flashes. The stimulus onset asynchrony is therefore 225 ms. A process in which the interface flashes each stimulus once is defined as a “repetition”. In our study, 10 repetitions constitute a “trial”; the target stays the same within a trial while the other stimuli are non-targets, and the interval between trials is 500 ms. One experiment session consists of 12 trials, and each subject conducts three sessions in this study.
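The timing of the paradigm follows directly from the numbers above. The sketch below is only an illustration: the constant and function names are hypothetical, locations are indexed from 1 by assumption, and the session length assumes one 500 ms interval is counted per trial.

```python
import random

# Timing constants taken from the text (all in milliseconds).
FLASH_MS = 150            # duration of one image flash
PAUSE_MS = 75             # pause after each flash
N_ROWS, N_COLS = 3, 4     # 3 x 4 stimulus matrix
REPS_PER_TRIAL = 10
TRIAL_INTERVAL_MS = 500
TRIALS_PER_SESSION = 12


def flash_order(rng):
    """Return one repetition: every (row, column) location once, in random order."""
    locations = [(i, j) for i in range(1, N_ROWS + 1) for j in range(1, N_COLS + 1)]
    rng.shuffle(locations)
    return locations


soa_ms = FLASH_MS + PAUSE_MS                       # stimulus onset asynchrony
repetition_ms = N_ROWS * N_COLS * soa_ms           # all 12 images flashed once
trial_ms = REPS_PER_TRIAL * repetition_ms + TRIAL_INTERVAL_MS
# Assumption: one interval per trial is counted toward the session length.
session_ms = TRIALS_PER_SESSION * trial_ms

print(soa_ms, repetition_ms, trial_ms, session_ms)
print(flash_order(random.Random(0)))
```

Under these assumptions, one repetition lasts 2700 ms, which agrees with the repetition time used later for the ITR.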

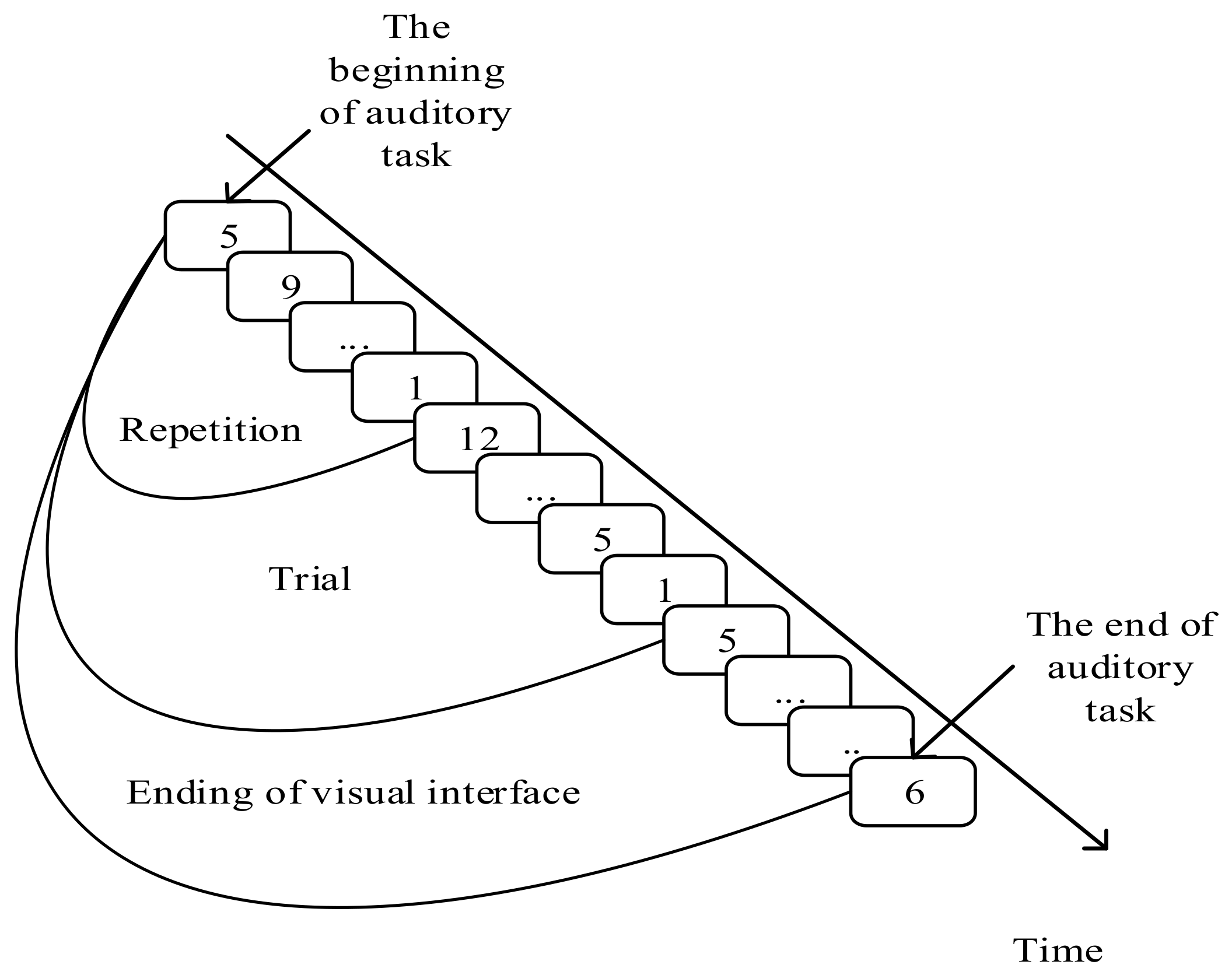

2.3. Visual-Auditory BCI Tasks

The auditory material is an excerpt of the audio story “Like a Flowing River”. The material is 8 min long.

The visual-auditory BCI tasks are categorized into three types. For each type, the subject conducts 36 trials, and every trial is composed of 10 repetitions. Every 12 trials form a sub-experiment; the auditory material starts playing when the sub-experiment starts and stops when it ends. In each sub-experiment, the subject is required not only to focus on the target stimulus in each trial, but also to silently count the occurrences of “De” in the auditory material. The three tasks differ in the playback speed of the material: the 0–auditory task (0-T) is the task in which no auditory material is played; the 0.5–auditory task (0.5-T) is the task in which the material is played at half the ordinary speed; and the 1–auditory task (1-T) is the task in which the material is played at the ordinary speed. The numbers of “De” in the three tasks are 0, 26 and 53, respectively. The procedure of the visual-auditory BCI tasks is shown in Figure 2.

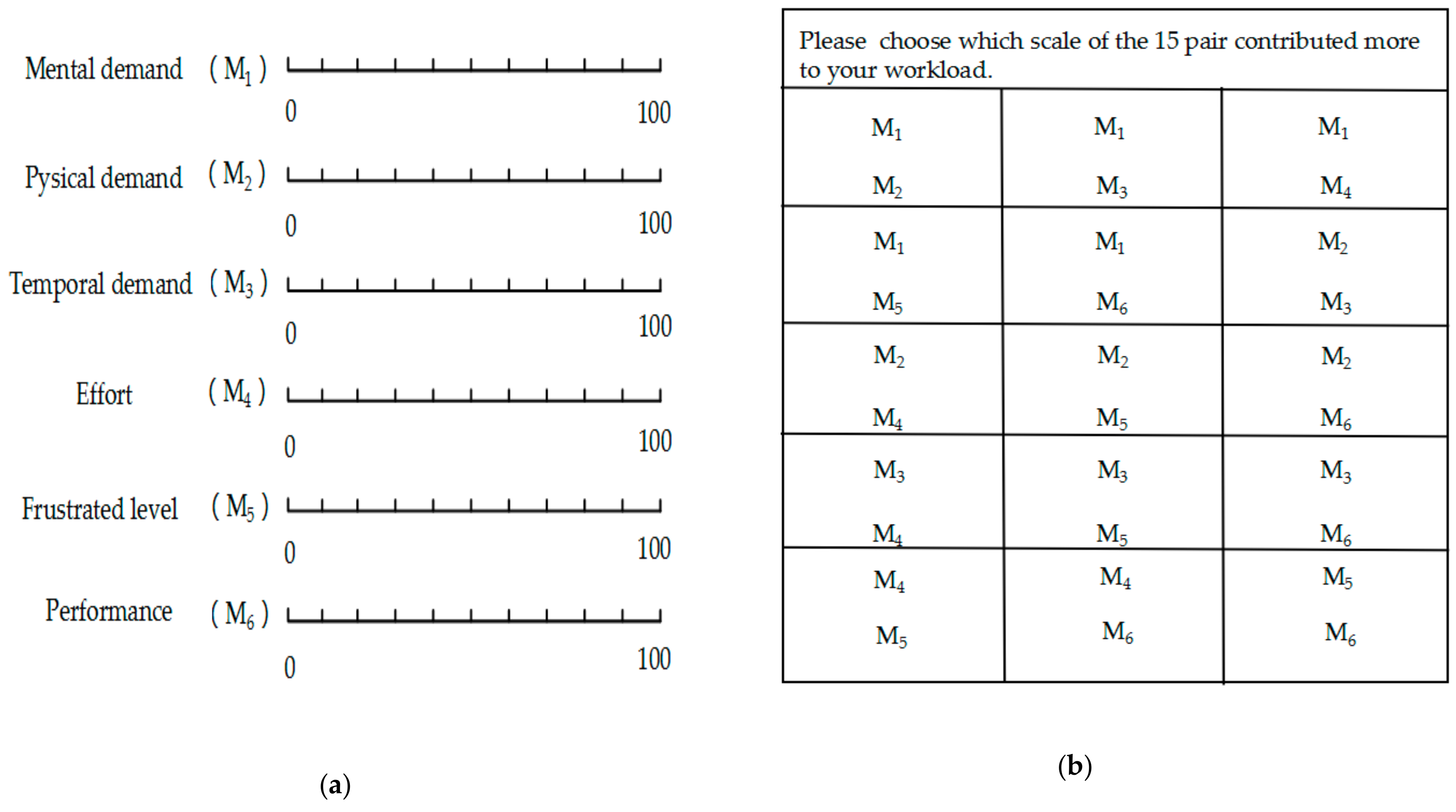

2.4. The NASA-TLX Scale

Subjects complete the fatigue scale after the experiment, and the average score determines the difficulty of the auditory task, which has three levels: low, medium and high. The most commonly used subjective fatigue scale is the NASA-TLX scale developed by NASA, which has good internal consistency and structural validity as a subjective fatigue assessment for BCI system operations [27]. From Figure 3a, we obtain the scores $M_1$ to $M_6$. From Figure 3b, we calculate $N_1$ to $N_6$ from the 15 pair-wise comparisons. The larger the total score, the greater the mental workload.

Its score expression is:

$$F = \sum_{i=1}^{6} \frac{N_i}{15} M_i$$

where $F$ is the mental workload value of the subject, $N_i$ is the number of times the participant considered the $i$-th dimension to contribute more to the overall mental workload than another dimension, 15 is the number of pair-wise comparisons of all factors, and $M_i$ is the score of the $i$-th dimension.
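As a worked illustration of the weighted score, the following sketch computes the workload value from six dimension scores and six pair-wise weights. The function name and the example numbers are hypothetical and are not data from this study; a 0 to 100 rating scale is assumed.

```python
N_DIMENSIONS = 6
N_PAIRS = 15  # number of pair-wise comparisons among six dimensions


def nasa_tlx_score(scores, weights):
    """Weighted NASA-TLX workload: sum of (weight / 15) * score over six dimensions.

    scores  -- dimension ratings (assumed here to be on a 0-100 scale)
    weights -- how often each dimension was chosen in the 15 comparisons
    """
    if len(scores) != N_DIMENSIONS or len(weights) != N_DIMENSIONS:
        raise ValueError("expected six scores and six weights")
    if sum(weights) != N_PAIRS:
        raise ValueError("weights must sum to the 15 pair-wise comparisons")
    return sum(w * s for w, s in zip(weights, scores)) / N_PAIRS


# Hypothetical ratings and weights for one subject (illustration only).
example_scores = [70, 20, 60, 40, 80, 30]
example_weights = [5, 0, 4, 2, 3, 1]
print(nasa_tlx_score(example_scores, example_weights))
```

A dimension that is never preferred in the comparisons gets weight 0 and does not contribute to the total.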

2.5. Feature Extraction and Classification

For each stimulus, the EEG data from 0 ms to 600 ms post-stimulus are extracted as an epoch. First, a 3rd-order Butterworth band-pass filter (0.5–10 Hz) is applied to each epoch. Second, the 50 ms of pre-stimulus data are used as the baseline for baseline correction. Third, all epochs are down-sampled to 40 Hz to reduce the data size. Finally, all 36 channels are used as feature channels, which yields an 864-dimensional feature vector (36 channels × 24 samples per channel).
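The feature dimension can be checked with a small sketch of the baseline-correction, down-sampling and concatenation steps. This is not the authors' implementation: the band-pass filtering step is omitted (the input is assumed to be filtered already), down-sampling is done by keeping every 25th sample, and the function name and the layout of the epoch (50 ms of pre-stimulus samples followed by 600 ms of post-stimulus samples per channel) are assumptions.

```python
FS_HZ = 1000          # recording sampling rate
TARGET_FS_HZ = 40     # rate after down-sampling
BASELINE_MS = 50      # pre-stimulus baseline window
EPOCH_MS = 600        # post-stimulus window
N_CHANNELS = 36


def extract_features(epoch):
    """Turn one filtered epoch into a flat feature vector.

    epoch -- list of channels; each channel holds the baseline samples
             followed by the post-stimulus samples (assumed layout).
    """
    step = FS_HZ // TARGET_FS_HZ              # keep every 25th sample
    n_base = BASELINE_MS * FS_HZ // 1000      # 50 baseline samples
    n_post = EPOCH_MS * FS_HZ // 1000         # 600 post-stimulus samples
    features = []
    for channel in epoch:
        if len(channel) != n_base + n_post:
            raise ValueError("unexpected channel length")
        baseline = sum(channel[:n_base]) / n_base
        post = channel[n_base:]
        # Baseline-correct and down-sample, then append to the feature vector.
        features.extend(x - baseline for x in post[::step])
    return features


# Synthetic epoch: baseline level 1.0, post-stimulus level 3.0 on every channel.
synthetic_epoch = [[1.0] * 50 + [3.0] * 600 for _ in range(N_CHANNELS)]
print(len(extract_features(synthetic_epoch)))  # 36 channels x 24 samples = 864
```

Plain decimation is acceptable here only because the signal is assumed to be band-limited to 10 Hz beforehand, well below the 20 Hz Nyquist frequency of the 40 Hz target rate.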

Fisher linear discriminant analysis (FLDA) is used for the binary classification problem because it has excellent classification performance for identification problems in ERP-based BCI systems [31,32].

The discriminant function of the Fisher classifier is:

$$y(\mathbf{x}) = \mathbf{w}^{T}(\mathbf{x} - \mathbf{x}_0)$$

where $\mathbf{w}$ is the best projection direction, $\mathbf{x}$ is the data sample, and $\mathbf{x}_0$ is the central value of the two types of data. The samples are classified by the value of $y$: a sample is discriminated as a target when $y > 0$ and as a non-target when $y < 0$.
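To make the decision rule concrete, here is a minimal two-feature sketch of a Fisher discriminant. It assumes the common formulation in which the projection direction is the inverse within-class scatter matrix applied to the difference of the class means, and the central value is the midpoint of the two class means; the paper does not spell out these details, and the toy data and function names are hypothetical.

```python
def mean_vec(rows):
    """Component-wise mean of a list of 2-D samples."""
    n = len(rows)
    return [sum(r[k] for r in rows) / n for k in range(2)]


def scatter(rows, m):
    """2 x 2 scatter matrix of the samples around the mean m."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in rows:
        d = [r[0] - m[0], r[1] - m[1]]
        for a in range(2):
            for b in range(2):
                s[a][b] += d[a] * d[b]
    return s


def fit_flda(targets, non_targets):
    """Return (w, center): projection direction and midpoint of the class means."""
    m1, m0 = mean_vec(targets), mean_vec(non_targets)
    s1, s0 = scatter(targets, m1), scatter(non_targets, m0)
    sw = [[s1[a][b] + s0[a][b] for b in range(2)] for a in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    if det == 0:
        raise ValueError("within-class scatter matrix is singular")
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    center = [(m1[0] + m0[0]) / 2, (m1[1] + m0[1]) / 2]
    return w, center


def discriminant(x, w, center):
    """Positive value: classified as target; negative value: non-target."""
    return w[0] * (x[0] - center[0]) + w[1] * (x[1] - center[1])


# Hypothetical toy samples (two features each), for illustration only.
toy_targets = [(3.0, 2.0), (4.0, 3.0), (3.5, 2.0), (4.5, 3.5)]
toy_non_targets = [(1.0, 1.0), (0.5, 0.0), (1.5, 1.5), (1.0, 0.5)]
w, center = fit_flda(toy_targets, toy_non_targets)
print([discriminant(x, w, center) > 0 for x in toy_targets + toy_non_targets])
```

In the real system the feature vector has 864 dimensions rather than two, so a general linear-algebra routine would replace the hand-written 2 × 2 inverse.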

The mental workload of different people is affected by many factors, so the fatigue of each subject after an auditory task is assigned to one of three states: low workload state (LD), medium workload state (MD) and high workload state (HD). The rule of division is as follows:

where $V_j$ is the fatigue value of the $j$-th subject after completing the visual-auditory BCI task, and $V_{i-0}$, $V_{i-0.5}$, and $V_{i-1}$ are the fatigue values of the 0-T, 0.5-T and 1-T visual-auditory BCI tasks.

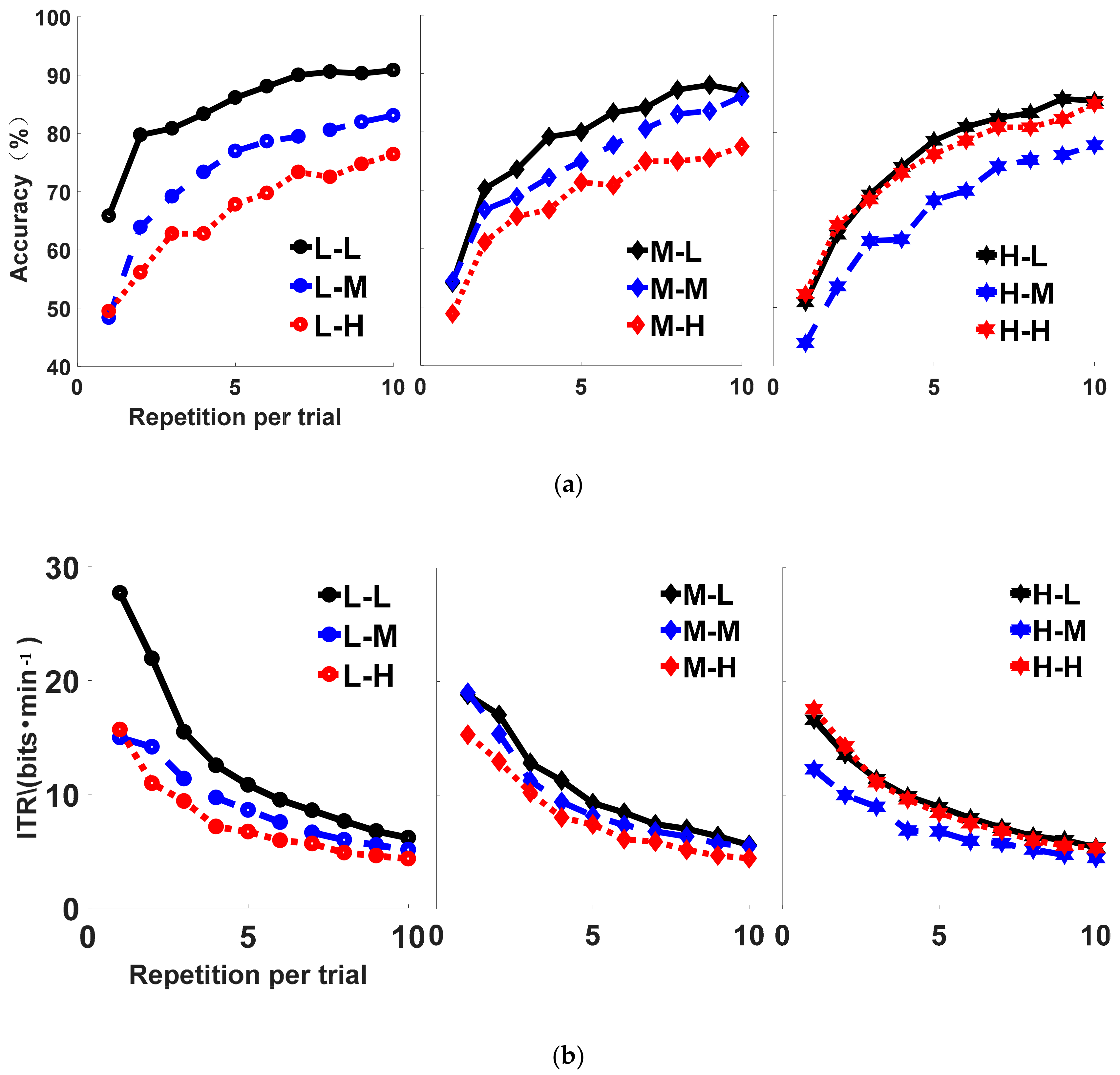

To determine the effects of the auditory task on the performance of the BCI, nine train-test combinations are created, namely L-L, L-M, L-H, M-L, M-M, M-H, H-L, H-M, and H-H. The first three combinations, L-L, L-M, and L-H, represent the conditions in which the BCI is built under the LD condition and tested separately under LD, MD and HD. M-L, M-M, and M-H represent the conditions in which the BCI is built under the MD condition and tested separately under LD, MD, and HD. The last three combinations, H-L, H-M, and H-H, represent the conditions in which the BCI is trained under HD and tested separately under LD, MD, and HD.
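The nine combinations are simply the Cartesian product of the three training states and the three testing states. A short sketch (the variable names are illustrative) that also separates the matched conditions from the mismatched ones:

```python
from itertools import product

STATES = ("L", "M", "H")  # low, medium and high workload states

# First letter: state used for training; second letter: state used for testing.
combinations = [f"{train}-{test}" for train, test in product(STATES, repeat=2)]

# Matched: trained and tested under the same state; mismatched: different states.
matched = [c for c in combinations if c[0] == c[-1]]
mismatched = [c for c in combinations if c[0] != c[-1]]

print(combinations)
print(matched)
print(mismatched)
```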

2.6. Evaluation Criteria

The classification accuracy in this article is the ratio of the number of correctly classified “trials” to the total number of “trials”:

$$P = \frac{N_{\mathrm{correct}}}{N_{\mathrm{total}}} \times 100\%$$

where $P$ is the accuracy, $N_{\mathrm{correct}}$ is the number of correctly classified “trials”, and $N_{\mathrm{total}}$ is the total number of “trials”, which is 36 in this research.

The information transfer rate (ITR) was originally used to measure the communication and computational rates of systems in the communications field and was introduced into the BCI field by Wolpaw et al. [32,33]. Because it combines three basic performance indicators (the number of selectable targets, the target recognition accuracy, and the time of a single target selection), the ITR has become one of the most commonly used comprehensive indicators for evaluating the communication rate of a system in BCI research. Before calculating the ITR, we first need to calculate the amount of information transmitted by a single target selection, that is, the bitrate ($B$). The formula of $B$ is:

$$B = \log_2 N + P \log_2 P + (1 - P) \log_2 \frac{1 - P}{N - 1}$$

The unit of $B$ is bits/selection, $N$ is the number of possible targets (12 with the 3 × 4 matrix), and $P$ is the classification accuracy (average accuracy). The ITR in bits/min is then:

$$ITR = \frac{60\,B}{n\,T_r + T_i}$$

where $n$ is the number of repetitions in a trial, $T_r$ is the time of a repetition, which is 2.7 s (2700 ms), and $T_i$ is the interval time between trials, which is 0.5 s (500 ms).
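The two quantities can be computed as in the sketch below. It assumes the standard Wolpaw bitrate and takes the time of one selection to be the number of repetitions times the repetition time plus one inter-trial interval; the function and parameter names are illustrative.

```python
import math


def bits_per_selection(n_targets, accuracy):
    """Wolpaw bitrate B in bits/selection for N targets and accuracy P (0..1)."""
    if n_targets < 2:
        raise ValueError("need at least two targets")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    bits = math.log2(n_targets)
    # The P*log2(P) and (1-P)*log2(...) terms vanish in the limits P=0 and P=1.
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits


def itr_bits_per_min(n_targets, accuracy, n_repetitions,
                     repetition_s=2.7, interval_s=0.5):
    """ITR in bits/min, assuming one selection takes n * T_r + T_i seconds."""
    selection_s = n_repetitions * repetition_s + interval_s
    return bits_per_selection(n_targets, accuracy) * 60.0 / selection_s


# Example call with 12 targets; the accuracy value here is only an illustration.
print(itr_bits_per_min(12, 0.9, n_repetitions=10))
```

At chance level (P = 1/N) the bitrate is zero, and fewer repetitions per selection raise the ITR only as long as the accuracy does not drop too far.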

4. Discussions

Many BCI systems perform well in the lab environment, but their performance becomes poor when they are applied in a real environment. To retain high performance, it is necessary to find the cause of this decline. Since many potential factors influence the BCI performance in a real environment and sound is a common source of noise, this study mainly analyzes the influence of sound.

4.1. The Auditory Task Increases the Mental Workload

Most of the subjects regard the task with the higher speed as harder. A higher speed makes the subjects receive and process more information per unit time, which increases the mental workload. When the amount of information is too large, the subjects might miss some of it, which leads to mistakes such as miscounting the number of “De” or forgetting to attend to the target stimulus when it appears. This can be seen from the behavioral results: as the speed increases, the rate of correct counting falls.

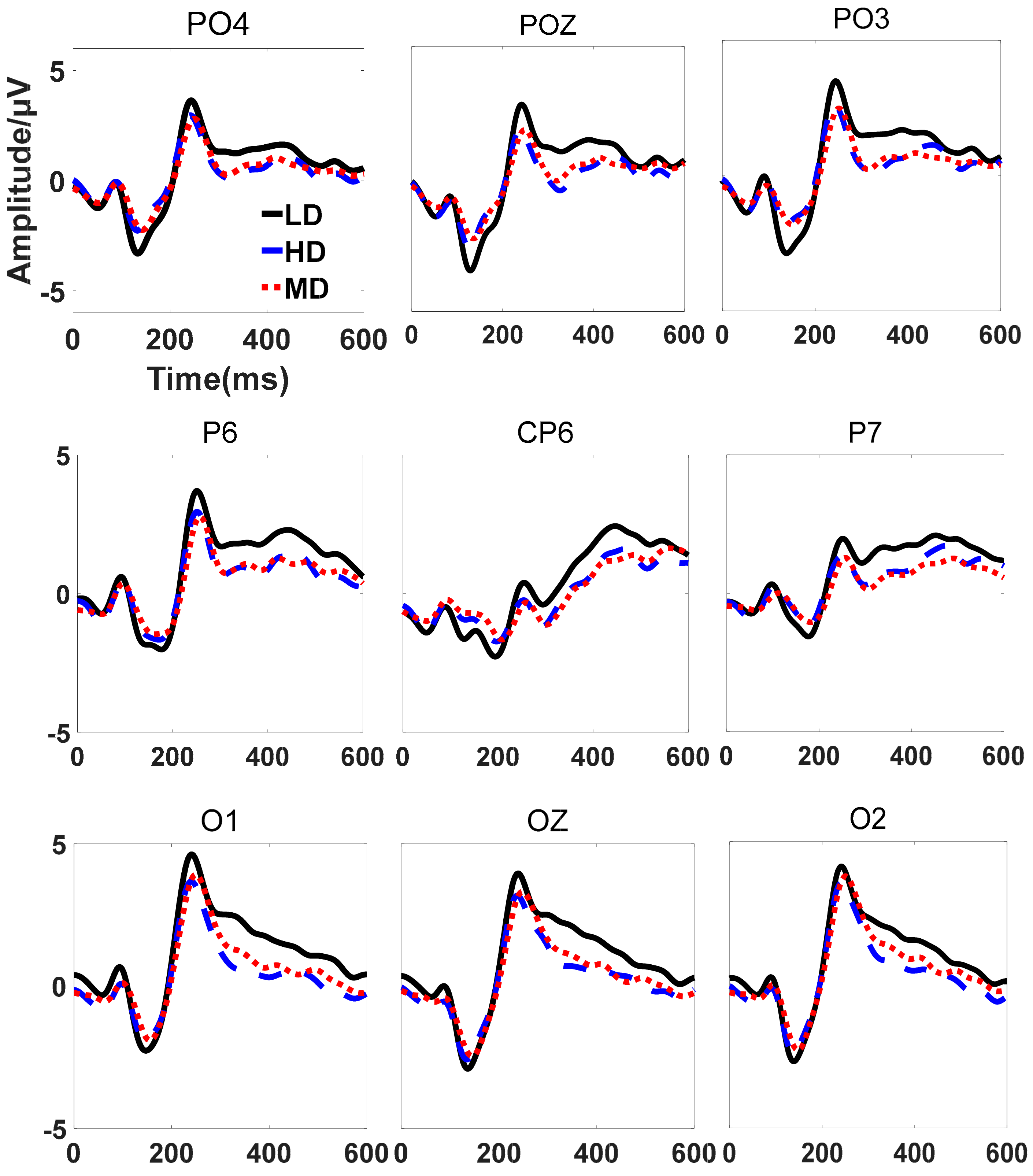

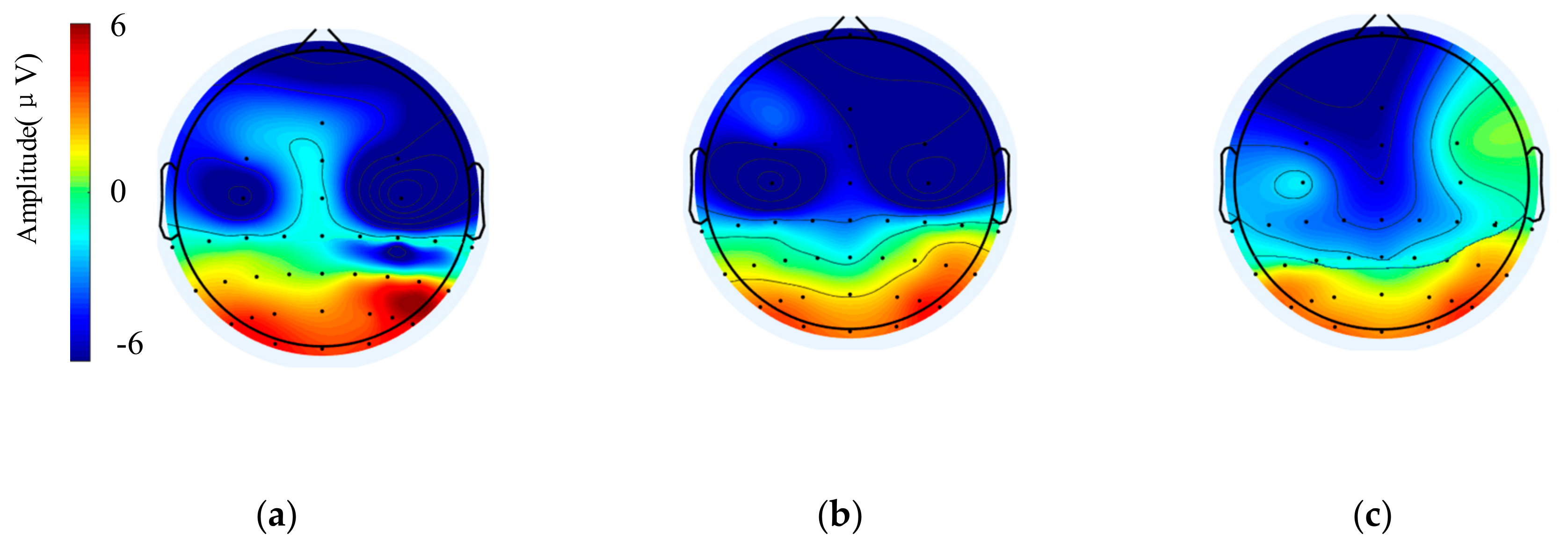

On the other hand, as the difficulty increases, the ERP amplitude decreases. In the occipital area, the average amplitude of P300 decreases by 0.86 μV under HD compared with LD (p = 0.0239), and the average amplitude of N200 decreases by 0.69 μV under HD compared with LD (p = 0.0158). The subject allocates fewer resources to the visual stimulus because he/she pays part of his/her attention to the auditory task, which leads to lower ERPs in response to the stimulus.

4.2. The Factors of Performance Decline

When the BCI is applied in an environment where the speed of the sound is higher, its performance declines. The accuracy and the ITR under the LD condition are higher than those under the MD and HD conditions (MD & LD: p = 0.0282; HD & LD: p = 0.0073). The accuracy decreases by 5.95% in HD compared with LD (p = 0.0101). Finding the reason is important for using the BCI in daily life. This study trains and tests the classifier with data sets from the same or different workload states to explore the factors influencing the detection performance. The nine conditions fall into two types: the type that uses the same data set to train and test the classifier (L-L, M-M, and H-H), and the type that uses different data sets to train and test the classifier (L-M, L-H, M-L, M-H, H-L, and H-M). We assume that the classification performance of the BCI is influenced by two factors.

Firstly, the amplitude of the ERP influences the performance. In Figure 6, as the speed of the auditory task increases, the performance of the classifiers of the first type decreases. In descending order of performance, the three classifiers are L-L, M-M, and H-H. This order agrees with the order of the ERP amplitudes under the different tasks. Since the main difference between these classifiers is the amplitude, this suggests that the amplitude affects classification. A higher amplitude could produce more distinct features, which leads to a higher classification accuracy.

Secondly, the difference between the training and testing data sets plays an important role in the performance. When the same data are used to test classifiers trained with different data sets, L-L outperforms M-L and H-L, M-M outperforms L-M and H-M, and H-H outperforms M-H and L-H. The accuracy and ITR under H-H are higher than those under H-M, and the accuracy and ITR are the highest under L-L. The ITR decreases from 16.43 bits/min under H-H to 12.36 bits/min under H-M. This indicates that the classifier performs best if the training and testing data are from the same task, even when the amplitude is not the highest. It follows that if the testing environment is noisy, the training process should take the noise into consideration to obtain better performance. When the training data are inconsistent with the test data, the classification accuracy and ITR decrease. When the subject is under a low mental workload, or the classifier is trained and tested with data from the same state, the classifier obtains a better accuracy and ITR.

5. Conclusions

This study uses a dual task to explore the influence of the speed of the sound on the visual BCI. The P300 and N200 amplitudes decline by 0.86 μV (p = 0.0239) and 0.69 μV (p = 0.0158), respectively, when the speed of the sound increases, which leads to a decrease of the accuracy (5.95%, p = 0.0101) and ITR (9.53 bits/min, p = 0.0416) of the visual BCI. The result demonstrates that increasing the speed of the sound affects the allocation of the workload for the visual BCI task, which indicates that the auditory and visual pathways interact during BCI use. Besides, we find that the performance of the BCI also depends on the difference between the training and testing data. Large differences lead to poor performance even when the classifier is trained well in the training environment; therefore, the noise of the testing environment should be taken into consideration when training a classifier.

The subject could hear the sound of the servos of the robotic arm when conducting the on-line experiments. Whether this sound affects the BCI performance needs to be studied in our future work. In addition, the visual BCI should use more kinds of visual images, such as the subject’s arm, to explore ways of improving the BCI performance. Whether the user’s familiarity with the sound or the human memory and cognitive mechanisms influence the visual BCI performance also remains to be studied.