AMiCUS 2.0—System Presentation and Demonstration of Adaptability to Personal Needs by the Example of an Individual with Progressed Multiple Sclerosis

Abstract

1. Introduction

1.1. Multiple Sclerosis

1.2. Assistive Systems for Tetraplegics

1.3. Direct Control Interfaces for Tetraplegic MS Patients

2. Assistive System AMiCUS

2.1. AMiCUS 1.0

2.2. AMiCUS 2.0

- Usability of the system calibration.

- Ergonomics, ease, and efficiency of group switching.

- Ergonomics and usability of the GUI.

- Intuitiveness of robot control.

- Adaptability to the skill level of the user.

2.2.1. Usability of the System Calibration

- Startup: After startup, all calibration procedures have to be fully completed.

- Drift: In case of sensor drift, only the zero position needs to be re-calibrated.
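The two calibration cases can be illustrated with a minimal Python sketch. It makes three assumptions: the head-mounted sensor delivers orientation as pitch, roll, and yaw angles in degrees; a full calibration stores a neutral (zero) pose plus the usable range of motion per axis; and a drift correction overwrites only the stored zero pose. The class, method, and axis names, the symmetric-ROM rule, and the 10 % deadzone are hypothetical choices made for illustration. This is not the AMiCUS implementation.

```python
from dataclasses import dataclass, field

# Hypothetical names for the three rotational head DOFs.
AXES = ("pitch", "roll", "yaw")


@dataclass
class HeadCalibration:
    """Hypothetical calibration state: neutral pose and usable ROM per axis (degrees)."""
    zero: dict = field(default_factory=lambda: {a: 0.0 for a in AXES})
    rom: dict = field(default_factory=lambda: {a: 1.0 for a in AXES})
    deadzone: float = 0.1  # assumed: 10 % of the ROM around the neutral pose is ignored

    def full_calibration(self, neutral: dict, extremes: dict) -> None:
        """Startup case: record the neutral pose and the ROM of every axis.

        extremes[axis] is an assumed (min_angle, max_angle) pair reached by the user.
        """
        rom = {}
        for a in AXES:
            lo, hi = extremes[a]
            # Use the smaller deflection so both directions map to the same scale.
            rom[a] = min(abs(hi - neutral[a]), abs(neutral[a] - lo))
            if rom[a] <= 0:
                raise ValueError(f"no usable range of motion on axis {a!r}")
        self.zero = dict(neutral)
        self.rom = rom

    def offset_calibration(self, neutral: dict) -> None:
        """Drift case: re-record only the neutral pose; the ROM is kept."""
        self.zero = dict(neutral)

    def normalize(self, angles: dict) -> dict:
        """Map raw head angles to control values in [-1, 1] with a central deadzone."""
        out = {}
        for a in AXES:
            x = (angles[a] - self.zero[a]) / self.rom[a]
            x = max(-1.0, min(1.0, x))  # clip deflections beyond the calibrated ROM
            if abs(x) <= self.deadzone:
                out[a] = 0.0
            else:
                # Rescale so the output grows continuously from 0 at the deadzone edge.
                sign = 1.0 if x > 0 else -1.0
                out[a] = sign * (abs(x) - self.deadzone) / (1.0 - self.deadzone)
        return out


if __name__ == "__main__":
    cal = HeadCalibration()
    cal.full_calibration(
        {"pitch": 0.0, "roll": 0.0, "yaw": 0.0},
        {"pitch": (-30.0, 40.0), "roll": (-20.0, 20.0), "yaw": (-50.0, 50.0)},
    )
    print(cal.normalize({"pitch": 18.0, "roll": 1.0, "yaw": -60.0}))
    cal.offset_calibration({"pitch": 5.0, "roll": 0.0, "yaw": 0.0})  # drift correction
    print(cal.rom)  # unchanged by the offset calibration
```

In this sketch, `offset_calibration` leaves `rom` untouched. A drift correction therefore needs only one neutral-pose recording instead of the complete startup procedure.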

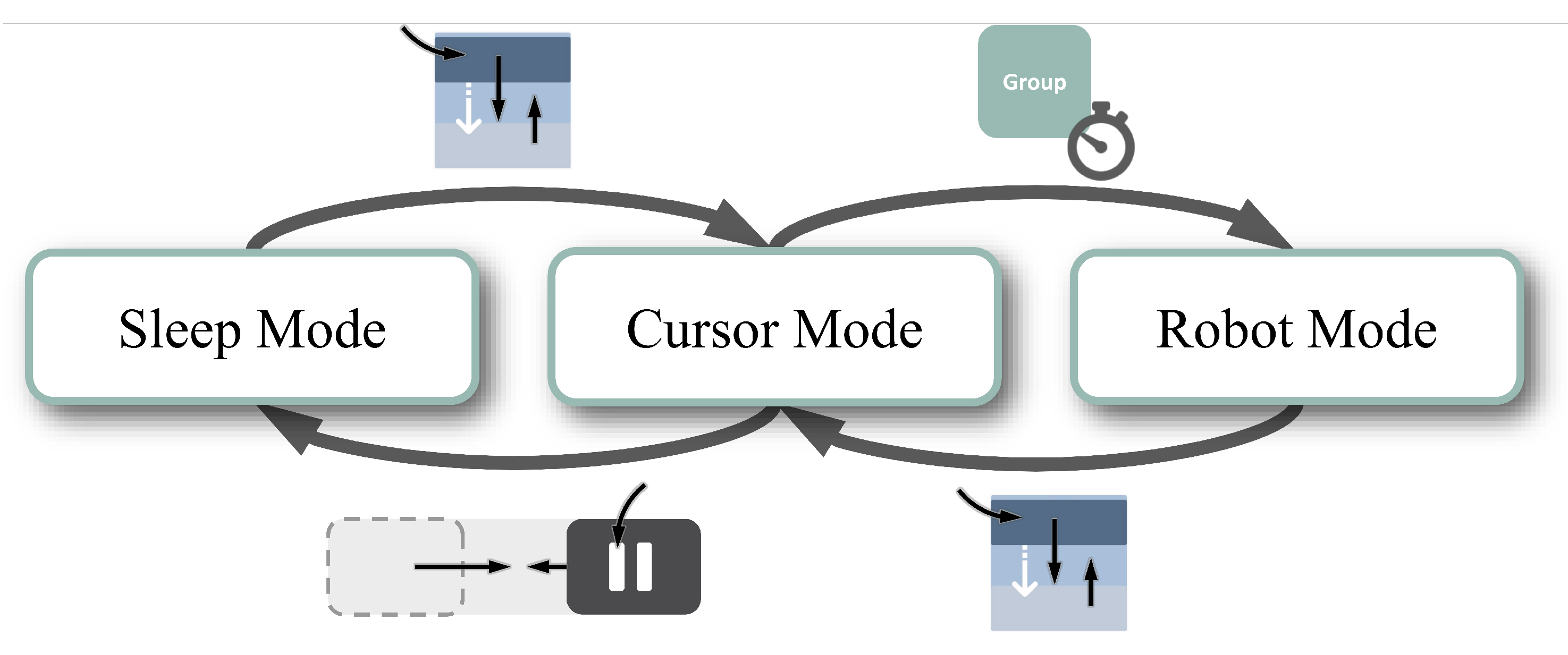

2.2.2. Ergonomics, Ease, and Efficiency of Group Switching

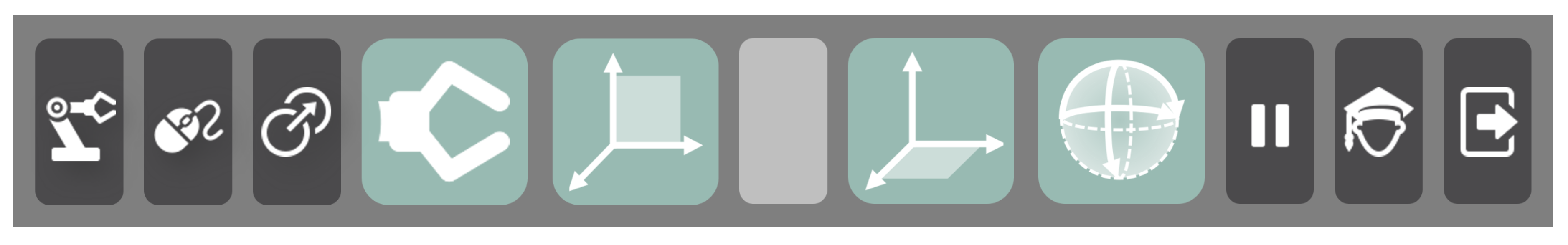

2.2.3. Ergonomics and Usability of the GUI

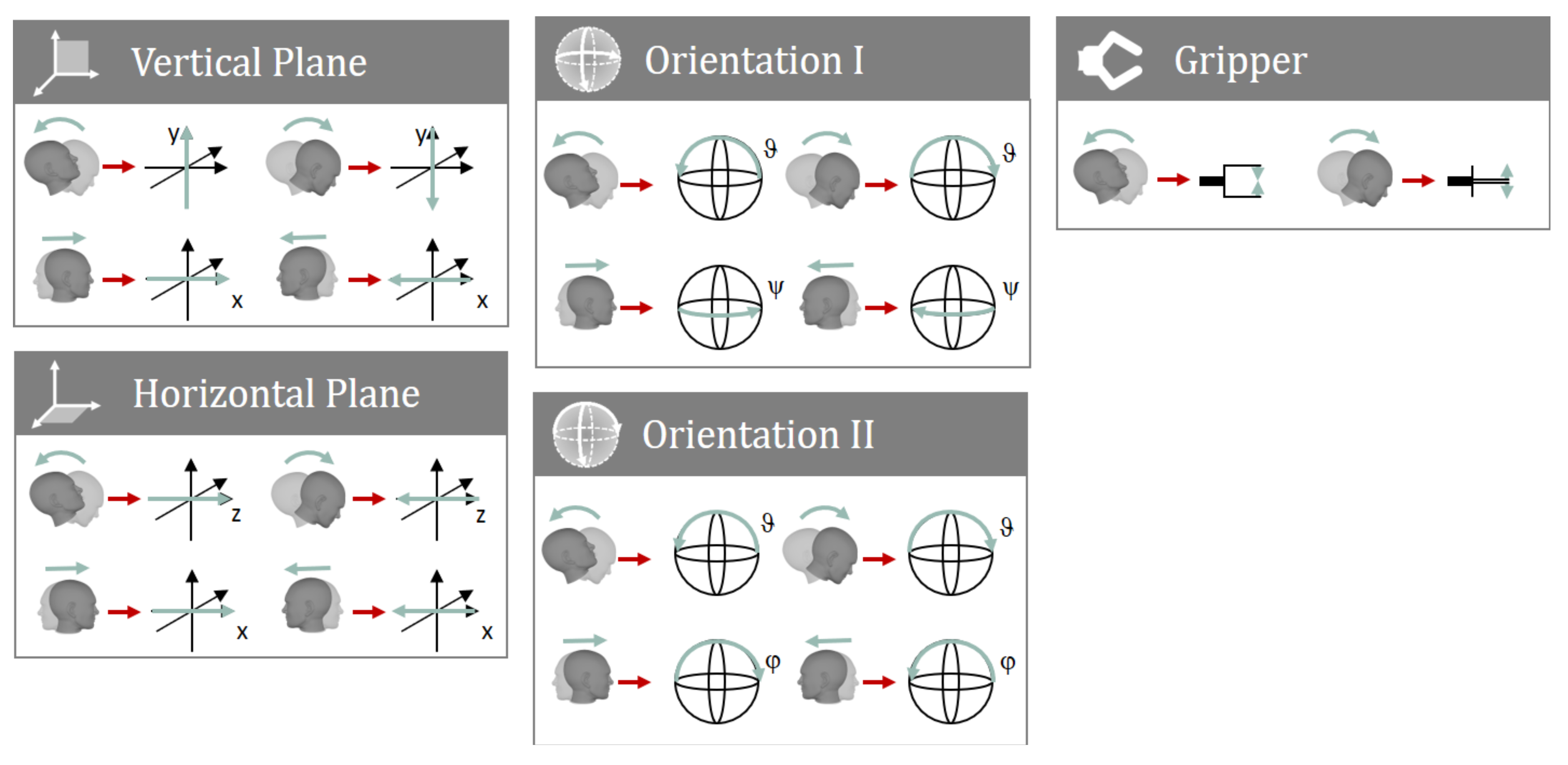

2.2.4. Usability of Robot Control

2.2.5. Adaptability to the Skill Level of the User

- Beginner’s Mode: Designed to minimize both mental and physical demands for untrained users and for users with a restricted ability to control the robot arm.

- Advanced Mode: Optimized for high control efficiency and intended for users with good spatial imagination and no or only mild limitations of head movement.
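The two modes can be pictured as parameter profiles applied to normalized head signals. The Python sketch below is hypothetical. It draws on requirements 2.3 and 2.5 (adapting gripper-rotation difficulty and robot speed to the user) and on questionnaire statement 21 about control without simultaneous rotations. From these, it assumes that Beginner’s Mode halves the maximum speed and passes on only the dominant gripper rotation, while Advanced Mode uses full speed and allows simultaneous rotations. The profile names, speed factors, and dominant-axis rule are illustrative assumptions, not values taken from AMiCUS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModeProfile:
    """Hypothetical control profile; all numbers are illustrative assumptions."""
    name: str
    speed_scale: float            # fraction of the maximum robot speed
    simultaneous_rotations: bool  # may several gripper rotations run at once?


BEGINNER = ModeProfile("beginner", speed_scale=0.5, simultaneous_rotations=False)
ADVANCED = ModeProfile("advanced", speed_scale=1.0, simultaneous_rotations=True)


def rotation_command(signals: dict, mode: ModeProfile) -> dict:
    """Turn normalized head signals in [-1, 1] into gripper rotation rates.

    In a profile without simultaneous rotations, only the axis with the
    largest deflection is passed on; all other axes are set to zero.
    """
    cmd = {axis: value * mode.speed_scale for axis, value in signals.items()}
    if not mode.simultaneous_rotations and cmd:
        dominant = max(cmd, key=lambda axis: abs(cmd[axis]))
        cmd = {axis: (v if axis == dominant else 0.0) for axis, v in cmd.items()}
    return cmd


if __name__ == "__main__":
    signals = {"roll": 0.4, "pitch": -0.8, "yaw": 0.1}
    print(rotation_command(signals, BEGINNER))  # only the pitch rotation remains
    print(rotation_command(signals, ADVANCED))  # all three rotations at full scale
```

Passing on only the dominant axis gives up some control efficiency in exchange for a lower mental load. This mirrors the trade-off that separates the two modes.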

2.3. Mappings for Users with Fewer than Three DOFs of the Head

3. Materials and Methods

3.1. Research Goals

3.2. Test Subject

3.3. Experiments

3.3.1. Comparison of AMiCUS 1.0 and 2.0

3.3.2. Evaluation under Semi-Realistic Conditions

4. Results and Discussion

4.1. Comparison of AMiCUS 1.0 and 2.0

4.1.1. Usability of the System Calibration

4.1.2. Ergonomics, Ease, and Efficiency of Group Switching

4.1.3. Ergonomics and Usability of the GUI

4.1.4. Usability of Robot Control

4.2. Evaluation under Semi-Realistic Conditions

5. Conclusions and Outlook

5.1. Summary of Results

5.2. Accessibility for Tetraplegic MS Patients

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADL | Activity of daily living |
| CNS | Central nervous system |
| DOF | Degree of freedom |
| EMG | Electromyography |
| GUI | Graphical user interface |
| MS | Multiple sclerosis |
| ROM | Range of motion |
| SCI | Spinal cord injury |

Appendix A. How AMiCUS 1.0 Addresses the Needs of MS Patients

- ✓ Intuitive mapping of head DOFs onto robot DOFs

- ✓ Only one head gesture has to be memorized for group switching

- ✓ Self-explanatory GUI with optical and acoustic feedback

- ✓ Static positioning of robot group buttons

- ✓ Implementation of Sleep Mode to take breaks

- × Simultaneous rotations are mentally demanding

- × Nested menu items are cumbersome to access

- ✓ Clean GUI with high contrast

- ✓ Large interaction elements

- × Small cursor is sometimes difficult to find

- × Small gripper camera image

- ✓ Calibration for available head ROM

- × The Head Gesture limits system accessibility

- × Mappings for users with fewer than three available head DOFs are not implemented

- ✓ Solely optical and acoustic feedback

- ✓ Effect of tremor is not yet investigated

- ✓ Implementation of Sleep Mode to pause control

- ✓ Usage of slow and smooth control movements

- × Head Gesture requires quick head movements

- × Simultaneous rotations are physically demanding

- × Asymmetrical GUI design creates one-sided strain of the neck

- ✓ Control solely based on head motion input

Appendix B. Motivation for Dummy Eyes on Gripper Camera

Appendix C. Cursor Control with a Single Head DOF


| No. | Symptom | Requirement |
|---|---|---|
| 1.1 | Fatigue, memory/attention deficit | The use of the system must be easy, intuitive, and provide the possibility to take breaks, reducing the mental effort |
| 1.2 | Visual impairment | Visual feedback should be large and with high contrast |
| 1.3 | (Progressing) paralysis | The system needs to be adaptable to the available head range of motion (ROM) and resulting control capabilities of the user |
| 1.4 | Disturbances in feeling | The system should not make use of tactile feedback |
| 1.5 | Tremor | The system must guarantee that unintended motion resulting from tremor is not used for robot control |
| 1.6 | Weakness | The system should provide the possibility to take breaks and use only slow and smooth movements for control, minimizing the physical effort |
| 1.7 | Speech disorders | The system should not use speech or tongue movement as additional input modality |

| No. | Requirement |
|---|---|
| 2.1 | Ergonomics of the switching process must be improved |
| 2.2 | The switching process should be simplified and, if possible, made faster |
| 2.3 | The level of difficulty of the gripper rotations should be adapted to the capabilities of the user |
| 2.4 | The intuitiveness of the depth control should be improved |
| 2.5 | The speed of the robot control should be adapted to the skills of the user |

| No. | Question |
|---|---|
| 1 | What do you think is good about the new switching procedure? |
| 2 | What do you think is not good about the new switching procedure and how can it be improved? |
| 3 | What do you think is good about the new menu? |
| 4 | What do you think is not good about the new menu and how can it be improved? |

| Category | No. | Statement | Rating |
|---|---|---|---|
| Usability of the System Calibration | 1 | It is much better that I can start the calibration myself in the new version. | 5 |
| | 2 | It is much better that Robot Calibration starts automatically after Cursor Calibration in the new version. | 5 |
| | 3 | The default size of the control area for robot control in the new version is very good. | 5 |
| | 4 | It is very good that there is a separate Offset Calibration in the new version. | 5 |
| Ergonomics, Ease, and Efficiency of Group Switching | 5 | The Vertical Slider is much better than the Head Gesture. | 5 |
| | 6 | The Dwell Button is much better than the Slide Button. | 5 |
| | 7 | Switching is much easier with the new version. | 5 |
| | 8 | Switching is much faster with the new version. | 3 |
| | 9 | I think it is very unlikely that a robot group will be entered unintentionally with the new version. | 5 |
| | 10 | I think that possible remaining difficulties when using the new version will be overcome due to learning effects. | 5 |
| Ergonomics and Usability of the GUI | 11 | I like the new Cursor GUI much better than the old one. | 3 |
| | 12 | I can keep an overview of the menu much better with the new version. | 5 |
| | 13 | I can access the menu points much easier with the new version. | 3 |
| | 14 | I can see the new cursor much better than the old cursor. | 3 |
| | 15 | Cursor control is much easier with the new cursor. | 3 |
| | 16 | I like the new Robot GUI much better than the old one. | 3 |
| | 17 | I like the big camera image of the new version much better than the small one of the old version. | 3 |
| Intuitiveness of Robot Control | 18 | The expansion of the deadzone in the new version has no negative effect at all on the precision of the robot control. | 5 |
| | 19 | Depth control is much more intuitive than before. | 5 |
| | 20 | I can imagine gripper rotations much better if the gripper looks like a face. | 4 |
| | 21 | Control is much easier without simultaneous rotations. | 3 |

| No. | Symptom | Requirement | AMiCUS 1.0 | AMiCUS 2.0 |
|---|---|---|---|---|
| 1.1 | Fatigue, memory/attention deficit | The use of the system must be easy, intuitive, and provide the possibility to take breaks, reducing the mental effort | + | ++ |
| 1.2 | Visual impairment | Visual feedback should be large and with high contrast | + | + |
| 1.3 | (Progressing) paralysis | The system needs to be adaptable to the available head ROM and the resulting control capabilities of the user | + | ++ |
| 1.4 | Disturbances in feeling | The system should not make use of tactile feedback | ++ | ++ |
| 1.5 | Tremor | The system must guarantee that unintended motion resulting from tremors is not used for robot control | n.a. | n.a. |
| 1.6 | Weakness | The system should provide the possibility to take breaks and use only slow and smooth movements for control, minimizing the physical effort required | o | ++ |
| 1.7 | Speech disorders | The system should not use speech or tongue movement as additional input modality | ++ | ++ |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rudigkeit, N.; Gebhard, M. AMiCUS 2.0—System Presentation and Demonstration of Adaptability to Personal Needs by the Example of an Individual with Progressed Multiple Sclerosis. Sensors 2020, 20, 1194. https://doi.org/10.3390/s20041194
