Employing Shadows for Multi-Person Tracking Based on a Single RGB-D Camera

Abstract

1. Introduction

2. Related Work

2.1. Multi-Person Tracking From RGB-D Data

2.2. Shadow Detection

3. Multi-Person Tracking Method

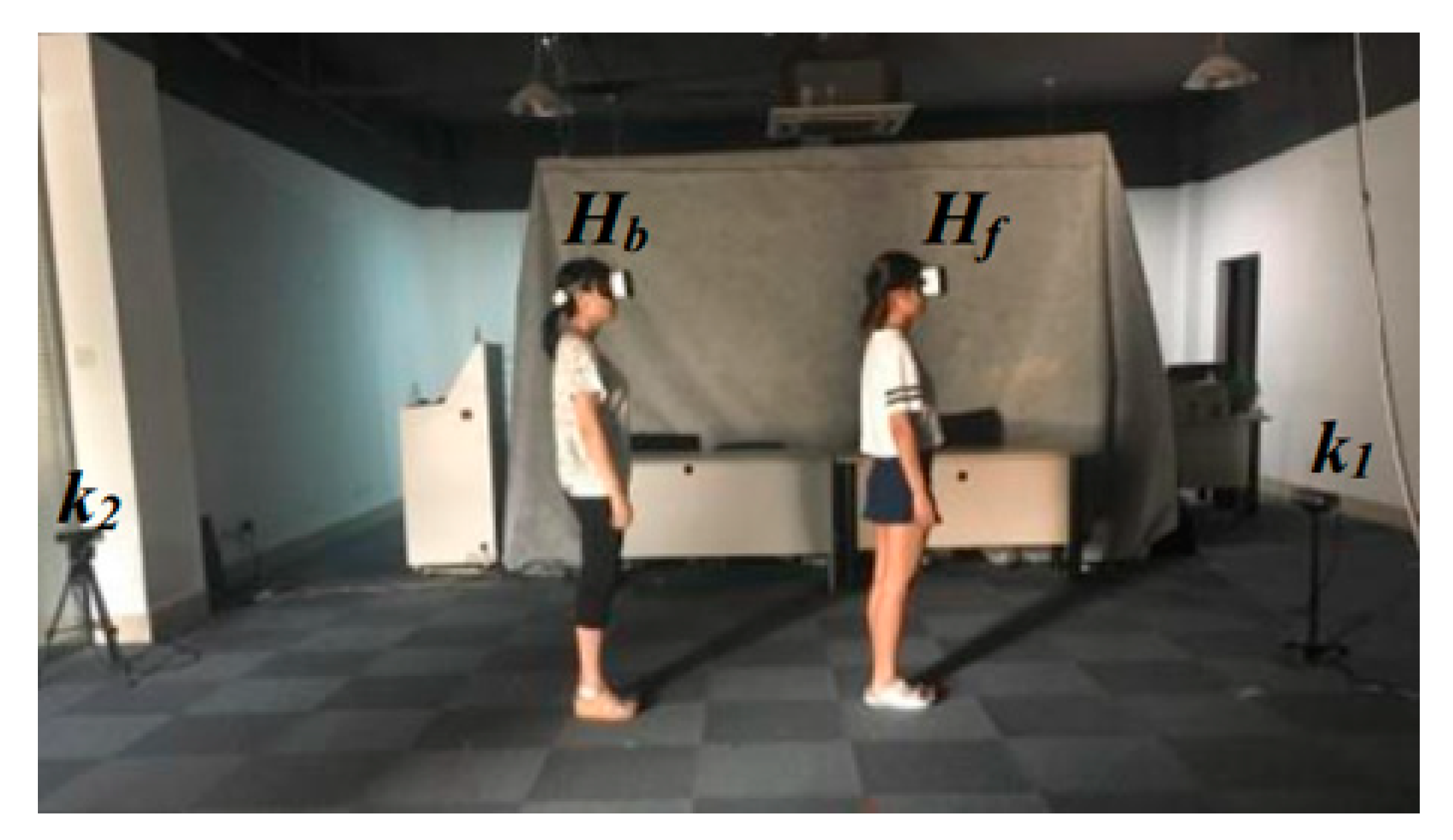

3.1. Basic Principle

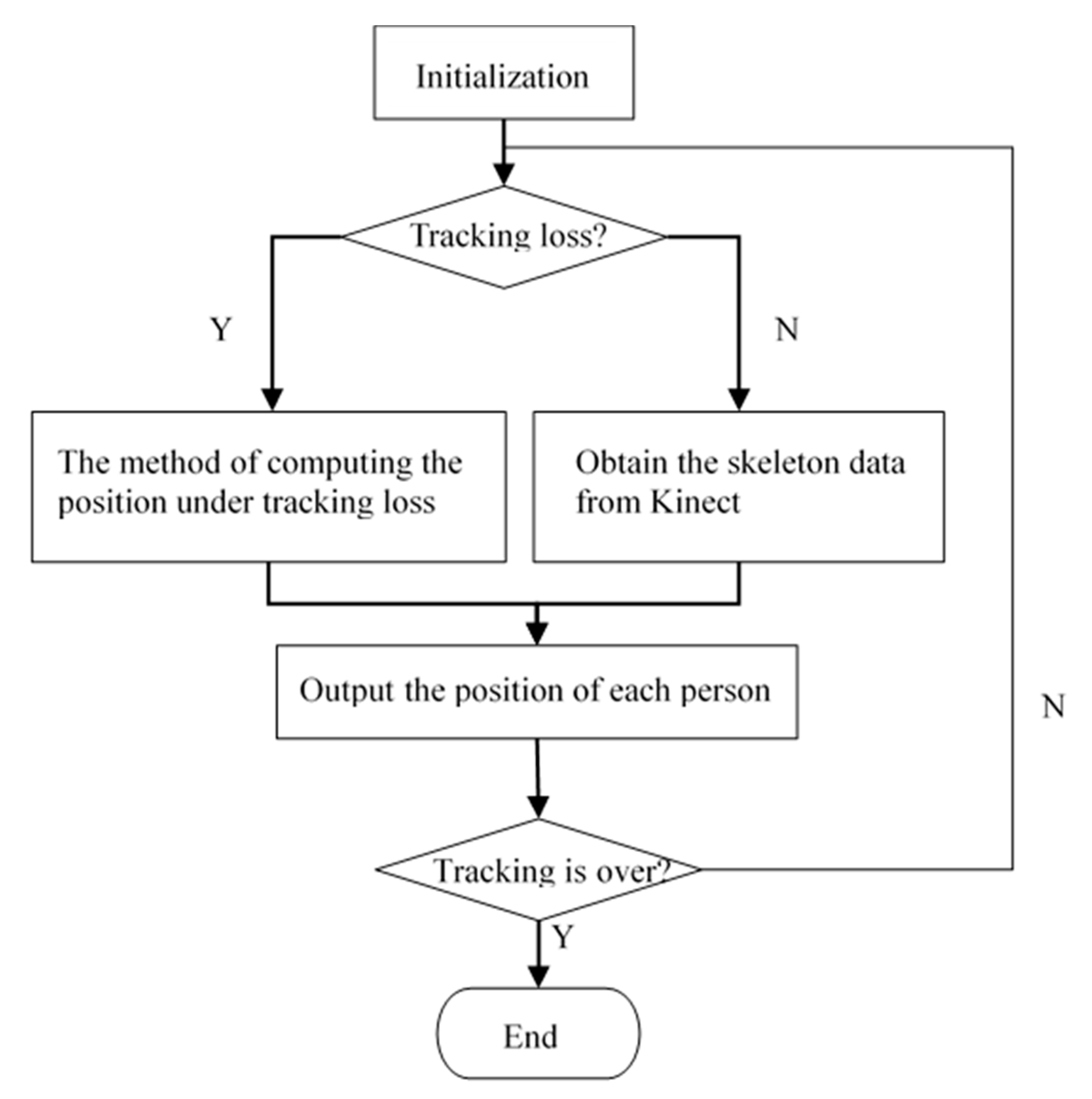

3.2. Algorithm Overview

4. STA Algorithm Design and Implementation

Algorithm 1. Shadow-based Tracking Algorithm

Require: A background image, current color image, and the skeleton data of Hf.

Ensure: The position of the occluded person Hb.

- 1: Search the region R including the shadow in the current color image according to the value of a that was computed in the initialization stage, and the skeleton data of Hf;
- 2: Extract Hb's shadow in R;
- 3: Compute the position of Hb based on the shadow;
- 4: Return position of Hb

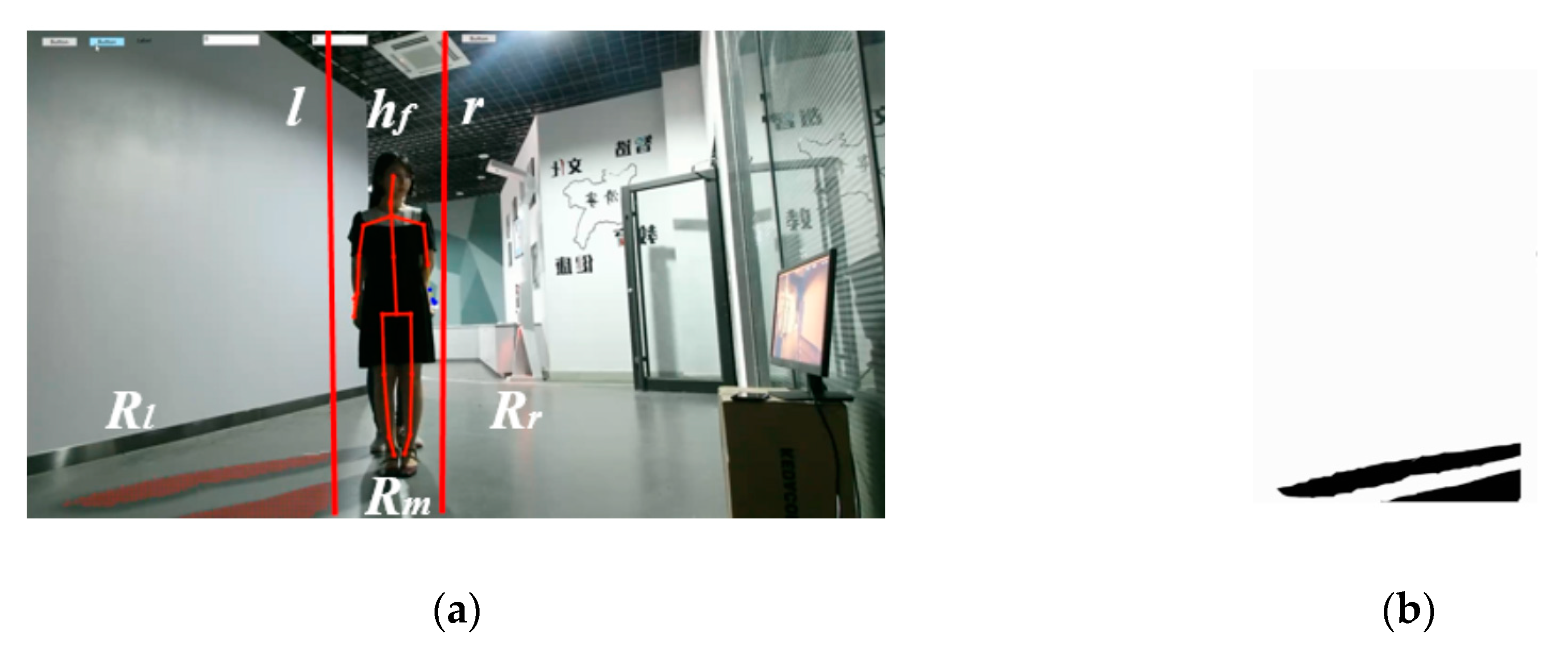

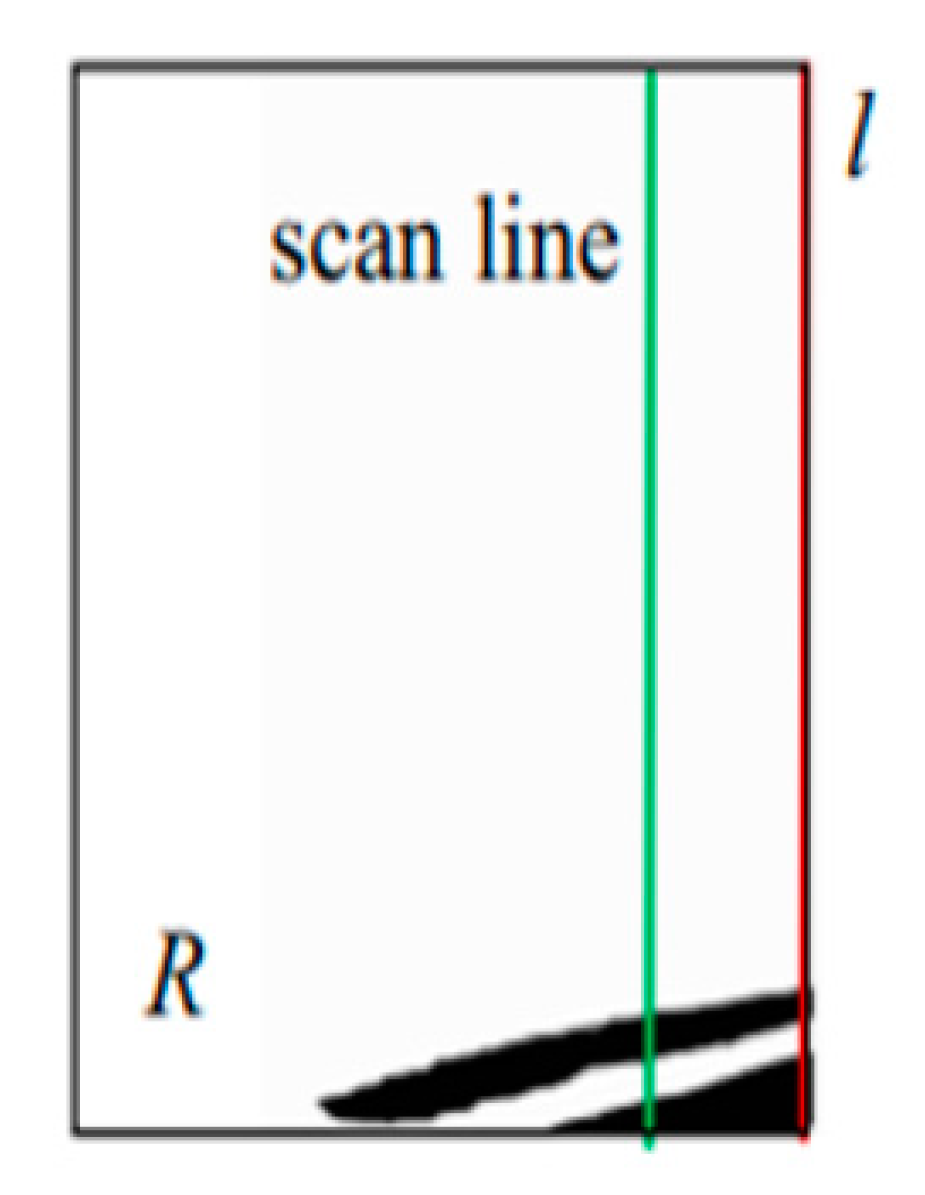

4.1. Search Shadow Region

4.2. Extract Shadow
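Step 2 of Algorithm 1 extracts the shadow inside the search region R, and Algorithm 2 refers to C as the binary image obtained by background subtraction. The following is a minimal sketch of such a subtraction, not the authors' implementation. The function name, the `(x0, y0, x1, y1)` layout of the region, and the threshold value are assumptions made for illustration. Plain differencing flags every changed pixel, so a real shadow extractor would likely need an additional shadow-specific test.

```python
import numpy as np

def extract_binary_mask(background, current, region, threshold=30):
    """Return a binary image C for `region` by thresholded background subtraction.

    background, current: uint8 arrays of shape (rows, cols, 3).
    region: (x0, y0, x1, y1) pixel bounds of the search region R (assumed layout).
    threshold: assumed per-pixel difference threshold, not taken from the paper.
    """
    x0, y0, x1, y1 = region
    # Cast to a signed type so the subtraction cannot wrap around.
    bg = background[y0:y1, x0:x1].astype(np.int16)
    cur = current[y0:y1, x0:x1].astype(np.int16)
    # Largest absolute channel difference at each pixel.
    diff = np.abs(cur - bg).max(axis=2)
    return (diff > threshold).astype(np.uint8)

# Synthetic example: a uniform background with a darker 2x2 patch.
background = np.full((4, 6, 3), 200, dtype=np.uint8)
current = background.copy()
current[2:4, 1:3] = 120  # darker patch standing in for a shadow
C = extract_binary_mask(background, current, region=(0, 0, 6, 4))
```

In this synthetic example only the four darkened pixels are set to 1 in `C`; everything else stays 0.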

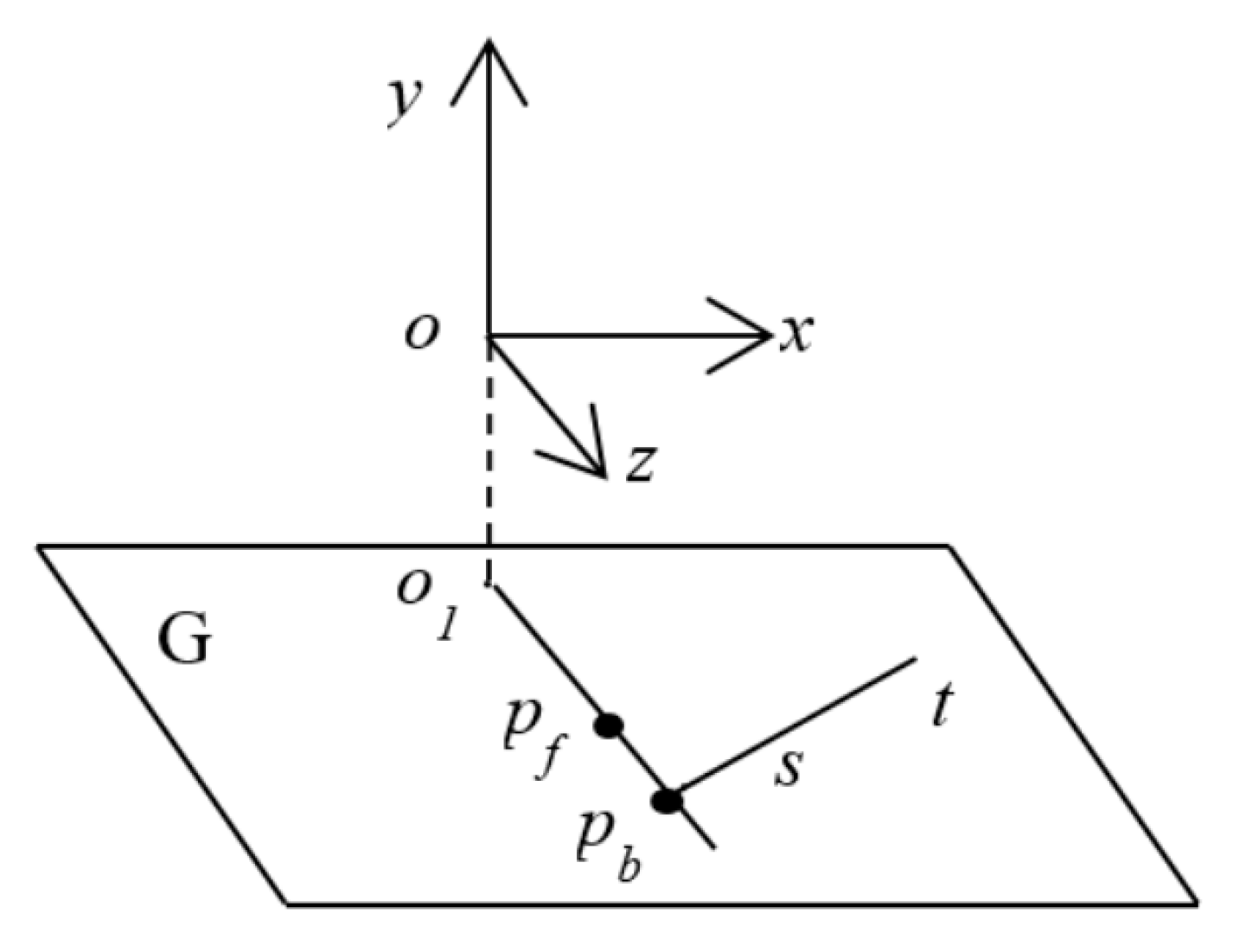

4.3. Compute the Position of Occluded People via Shadows

Algorithm 2. Computing the Position of Occluded People via Shadows

Input: a indicates on which side of the user the shadow is located, hf is the position of the head joint of Hf in the RGB image, R is the search region, C is the binary image obtained by background subtraction.

Output: The position pb of Hb.

- 1: S ← ∅ (S stores points on the upper contour of Hb)
- 2: if a = 0 then
  - 3: i1 = 0, i2 = hf.x − dx/2
- 4: else
  - 5: i1 = hf.x + dx/2, i2 = W
- 6: for each i = i1 to i2 do
  - 7: for each j = H to 0 do
    - 8: if C(i, j) = 1 then
      - 9: Add the point R(i, j) to S;
      - 10: Break;
- 11: Use the least squares method to fit the points in S to a line, and map it onto the plane G, designated as st;
- 12: Compute the intersection point of st and o1pf, marked as pb;
- 13: Return position pb of Hb
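The contour scan, line fit, and intersection in Algorithm 2 can be sketched as follows. This is an illustrative reading of the listing, not the authors' code. Several points are assumptions: C is stored as a NumPy array indexed `C[j, i]`, the column range stops before `i2` so that `i2 = W` stays in bounds, and the mapping onto the plane G is skipped entirely, so the fitted line, `o1`, and `pf` are treated simply as 2-D quantities in one shared coordinate frame. All function names are hypothetical.

```python
import numpy as np

def upper_contour_points(C, a, hf_x, dx):
    """Steps 1-10: collect at most one contour point per column of C."""
    H, W = C.shape
    if a == 0:
        i1, i2 = 0, int(hf_x - dx / 2)
    else:
        i1, i2 = int(hf_x + dx / 2), W
    i1, i2 = max(i1, 0), min(i2, W)  # clamp to the image (assumption)
    S = []
    for i in range(i1, i2):
        # Scan j from H down to 0 as in the listing; which image edge this
        # corresponds to depends on the row convention in use.
        for j in range(H - 1, -1, -1):
            if C[j, i] == 1:
                S.append((i, j))
                break
    return S

def fit_line(S):
    """Step 11 (fit only): least-squares line y = m*x + c through S."""
    xs, ys = zip(*S)
    m, c = np.polyfit(xs, ys, 1)
    return float(m), float(c)

def intersect(m, c, o1, pf):
    """Step 12: intersect y = m*x + c with the line through o1 and pf."""
    dxv, dyv = pf[0] - o1[0], pf[1] - o1[1]
    denom = m * dxv - dyv
    if abs(denom) < 1e-12:
        return None  # lines are parallel, no unique intersection
    t = (o1[1] - m * o1[0] - c) / denom
    return (o1[0] + t * dxv, o1[1] + t * dyv)

# Synthetic example: column i is filled from row 0 up to row i.
C = np.zeros((5, 8), dtype=np.uint8)
for i in range(5):
    C[: i + 1, i] = 1

S = upper_contour_points(C, a=0, hf_x=6, dx=2)
m, c = fit_line(S)
pb = intersect(m, c, o1=(0.0, 4.0), pf=(4.0, 0.0))
```

Here the collected points lie on the diagonal, so the fitted line is approximately y = x and it meets the line through `o1` and `pf` near (2, 2). In a real setup the fitted line would first have to be mapped onto G using the camera calibration.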

5. Experimental Results

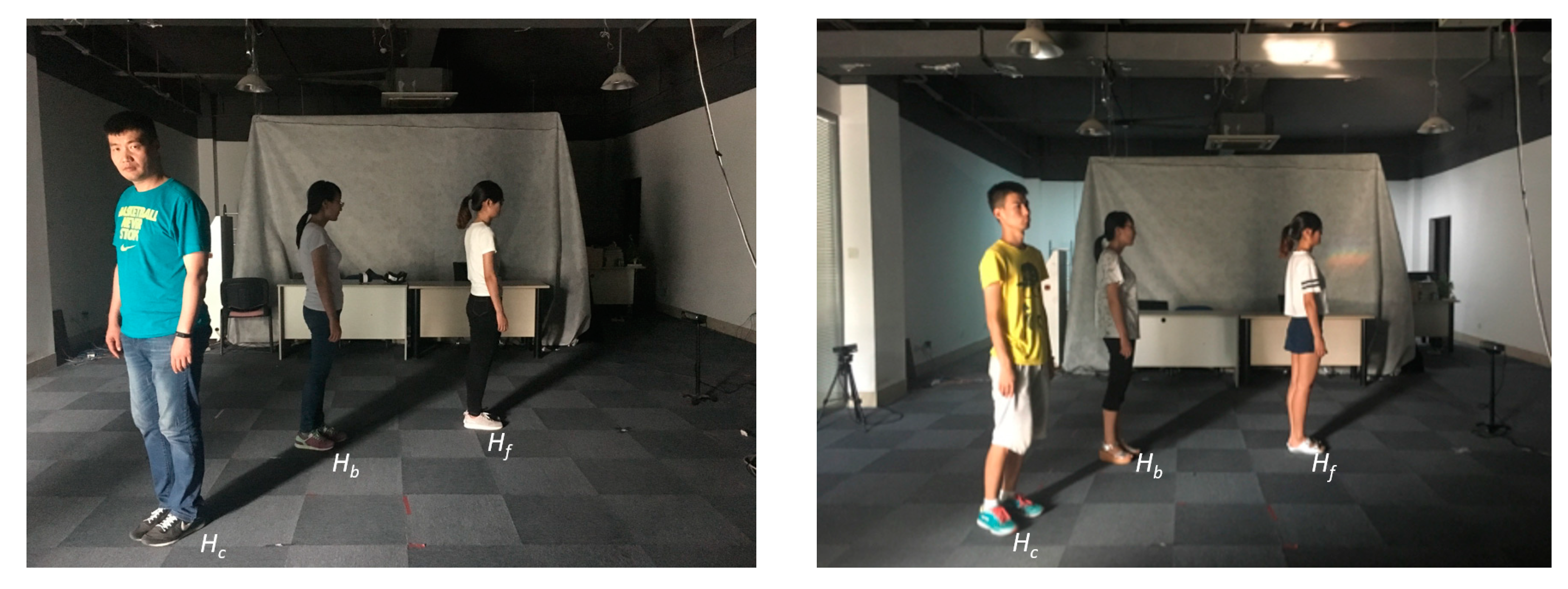

5.1. Experiment Design

5.2. Results

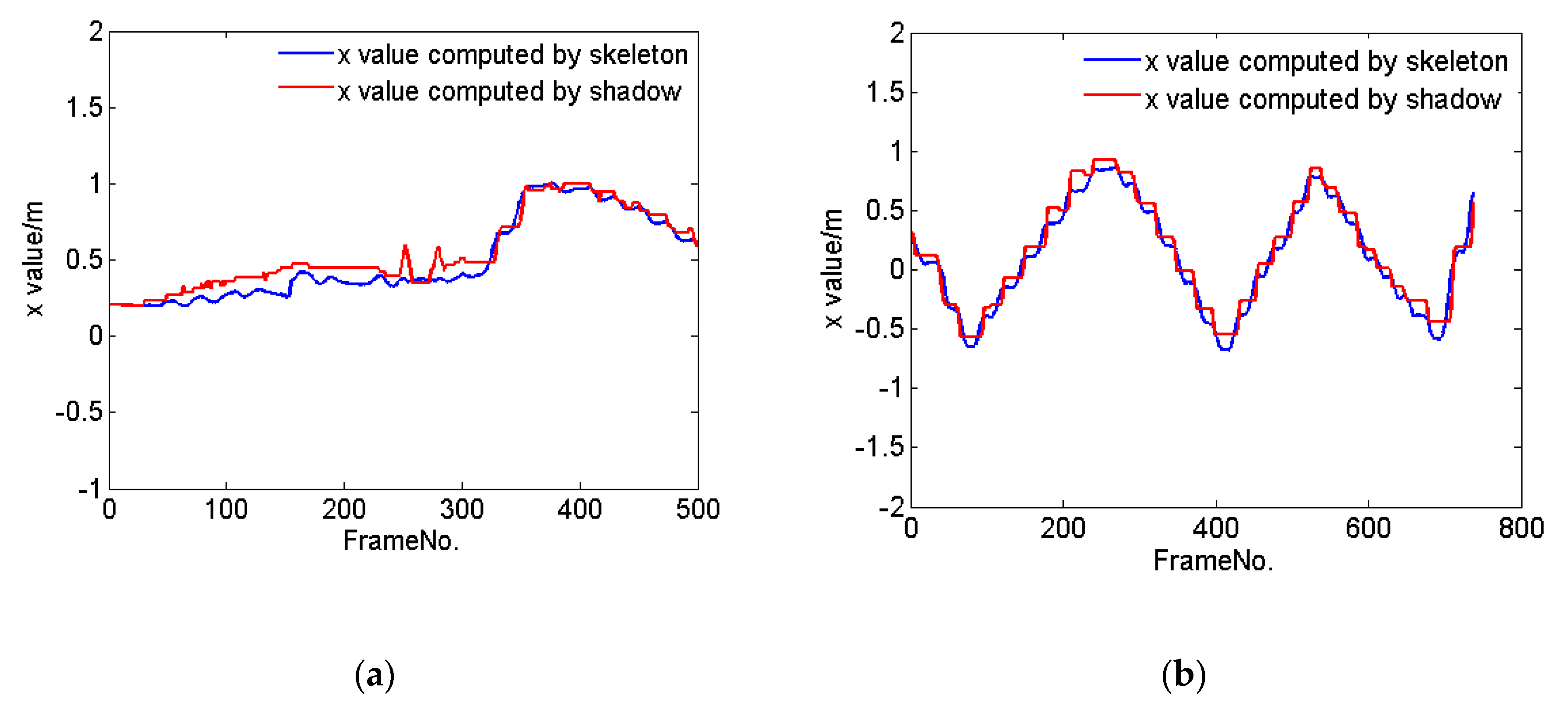

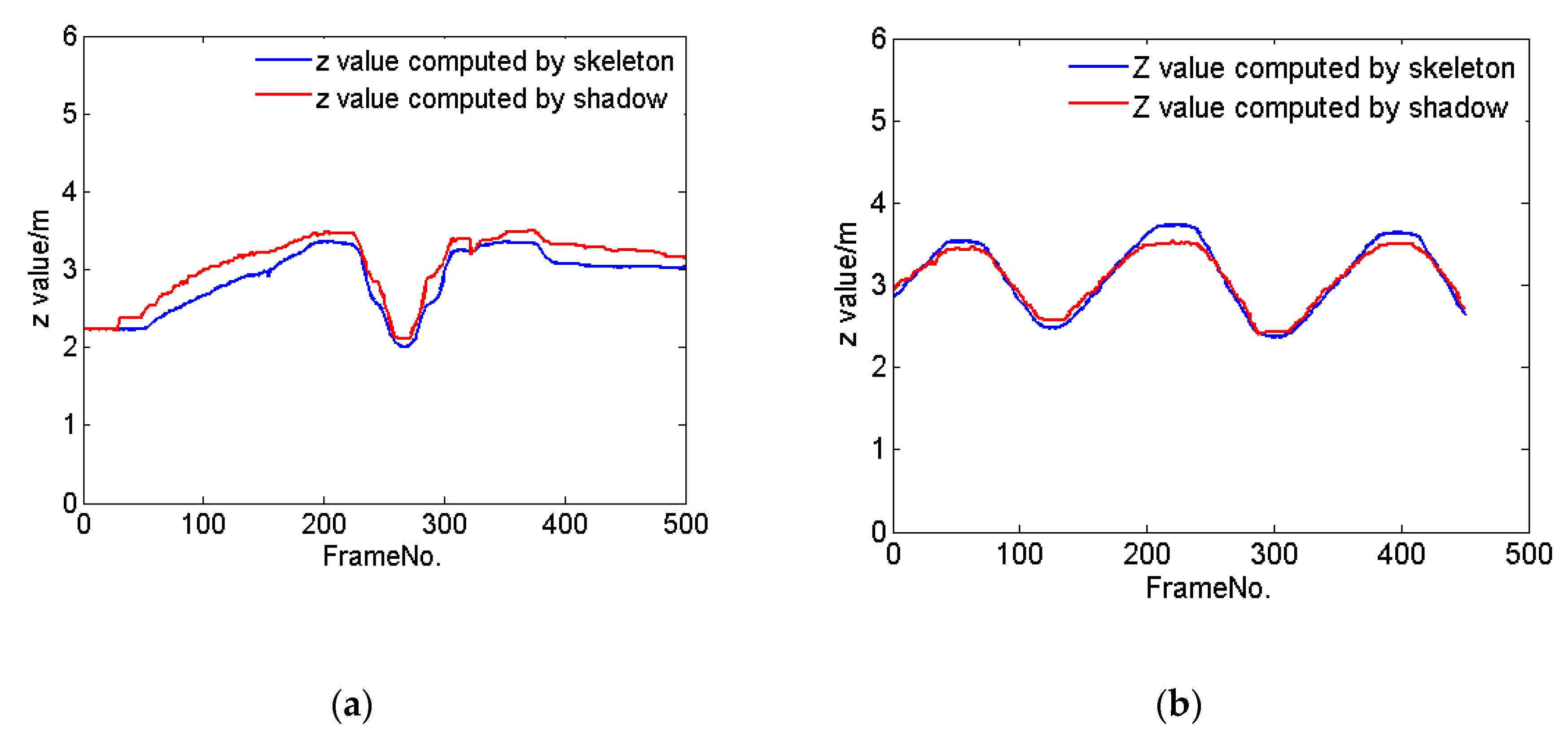

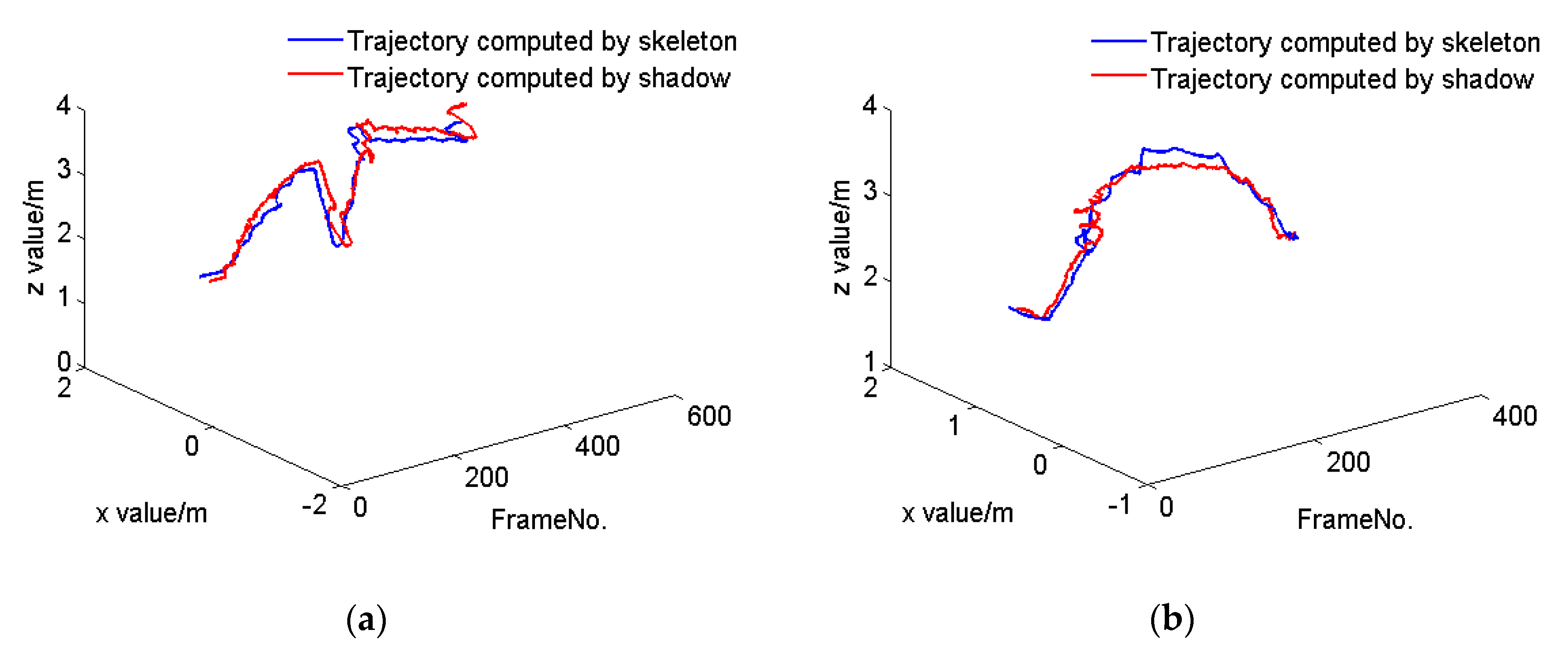

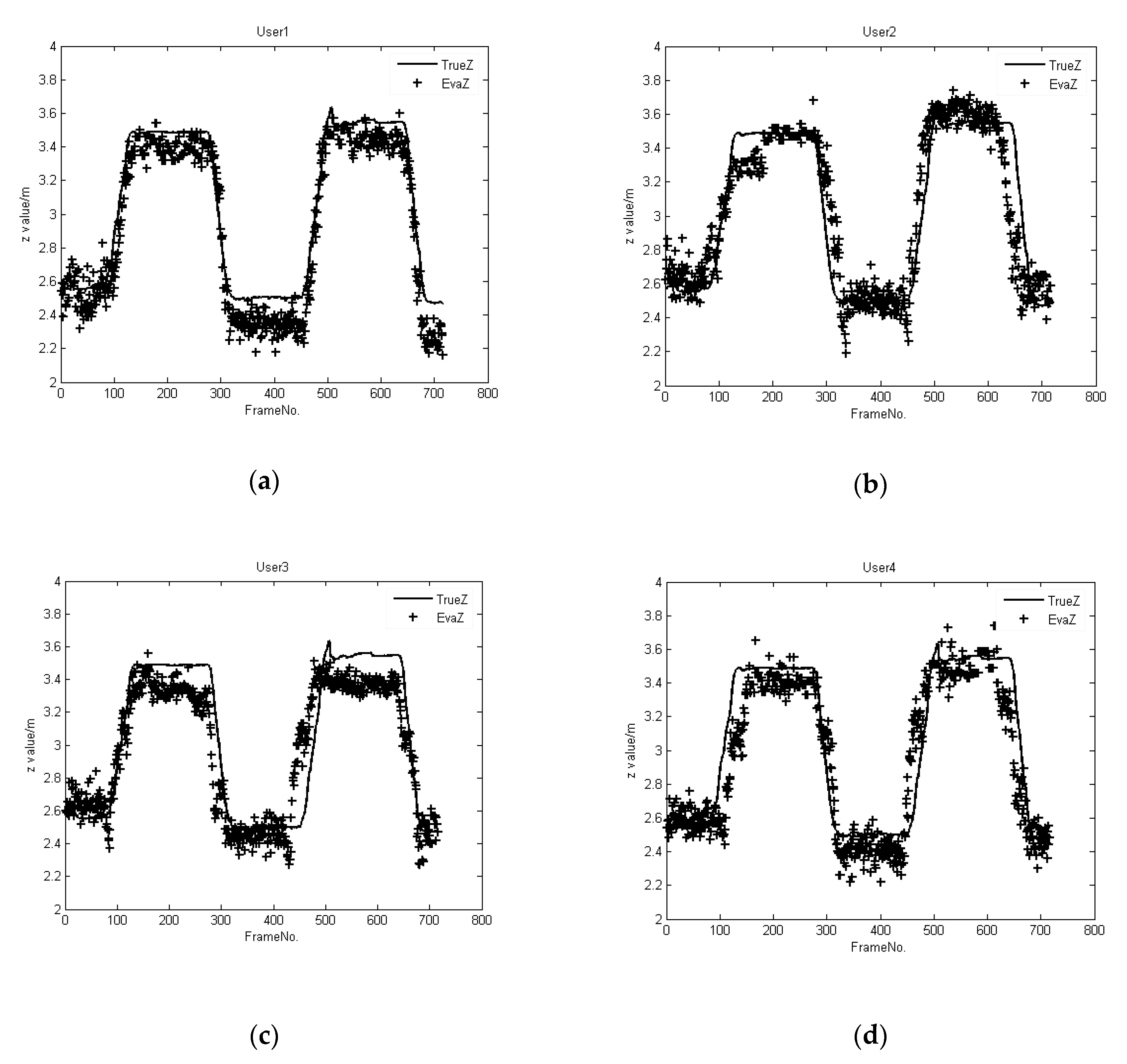

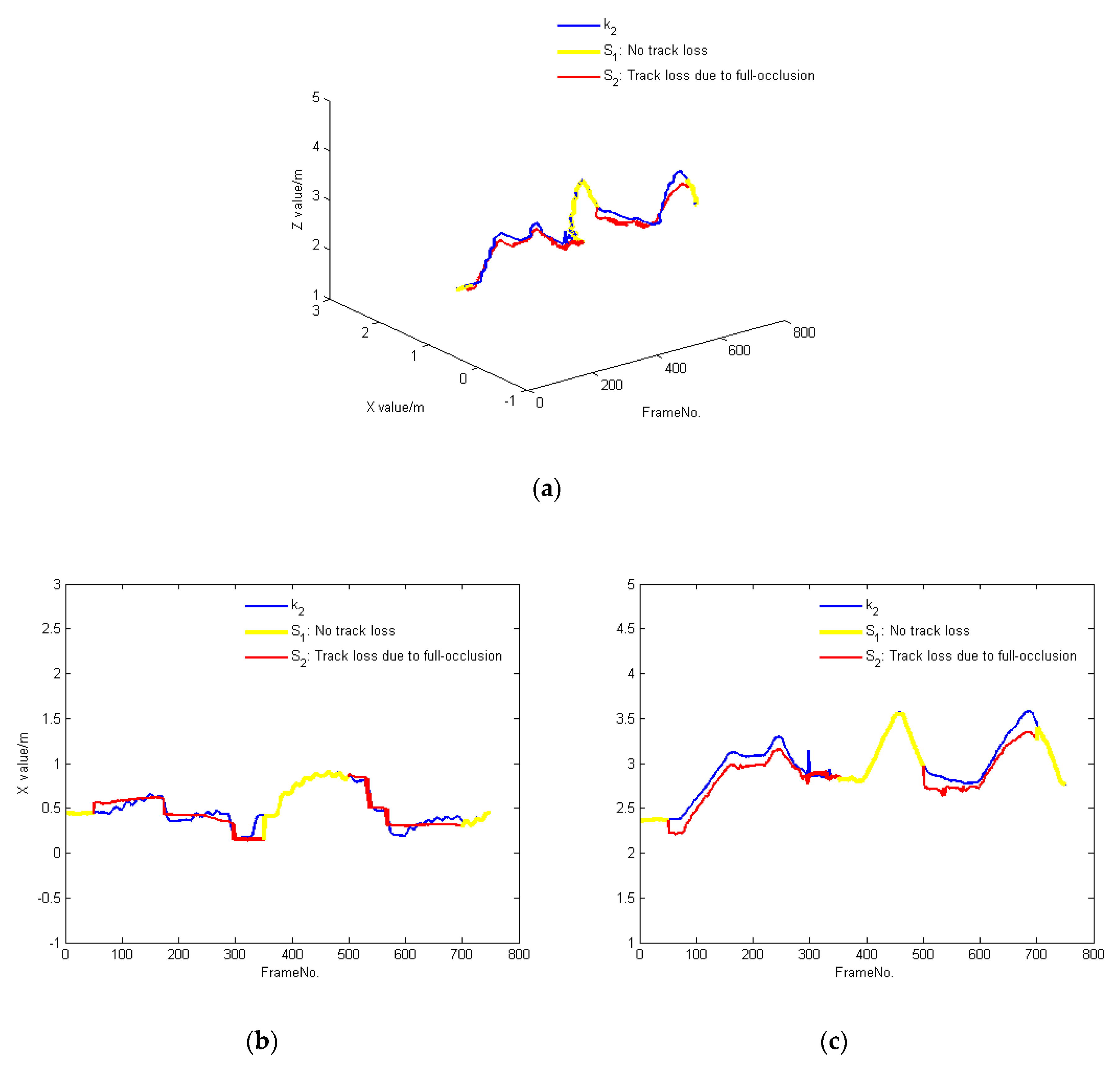

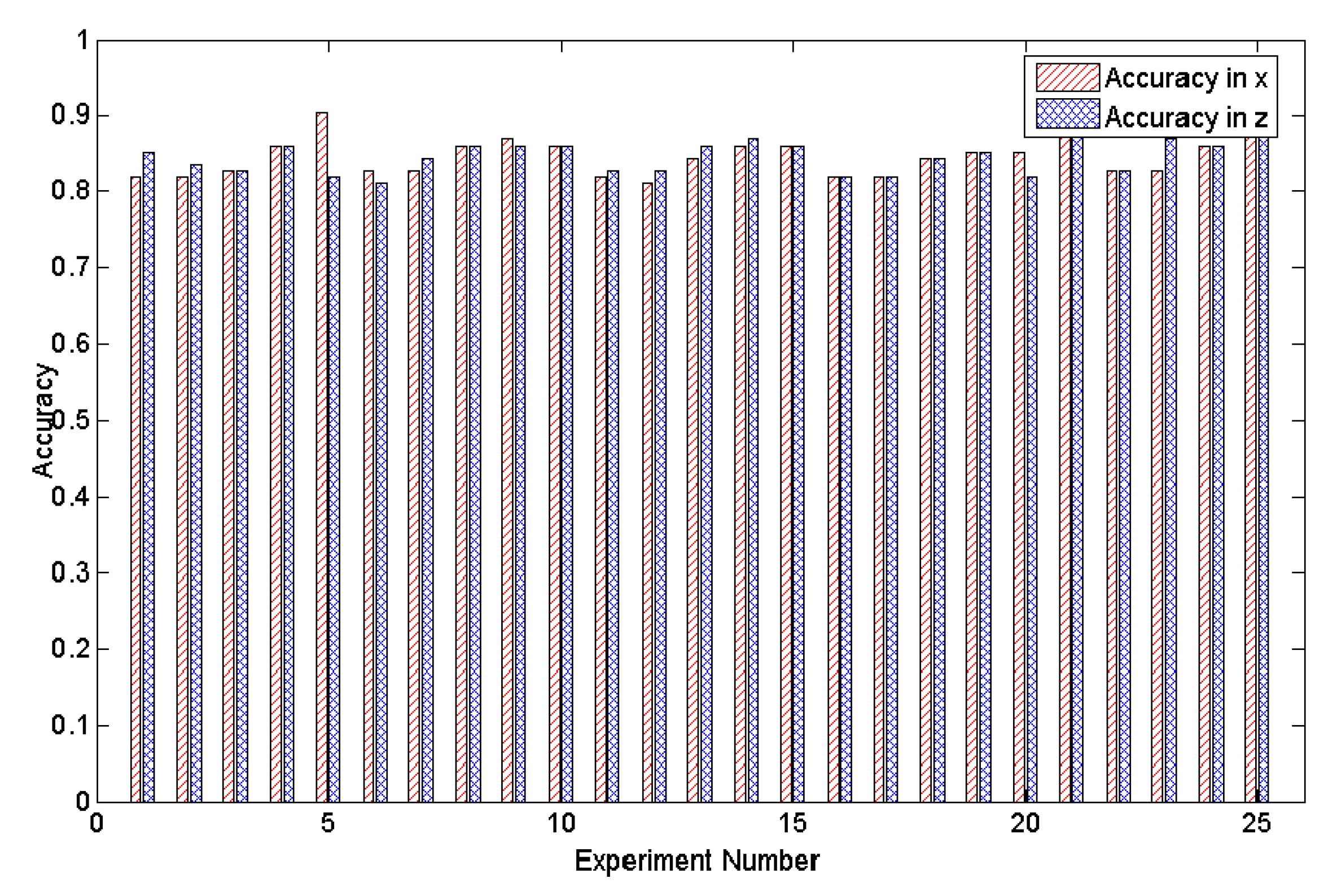

- Experiment 1

- Experiment 2

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gai, W.; Qi, M.; Ma, M.; Wang, L.; Yang, C.; Liu, J.; Bian, Y.; de Melo, G.; Liu, S.; Meng, X. Employing Shadows for Multi-Person Tracking Based on a Single RGB-D Camera. Sensors 2020, 20, 1056. https://doi.org/10.3390/s20041056
