Active 3D Imaging of Vegetation Based on Multi-Wavelength Fluorescence LiDAR

Abstract

1. Introduction

2. Materials and Methods

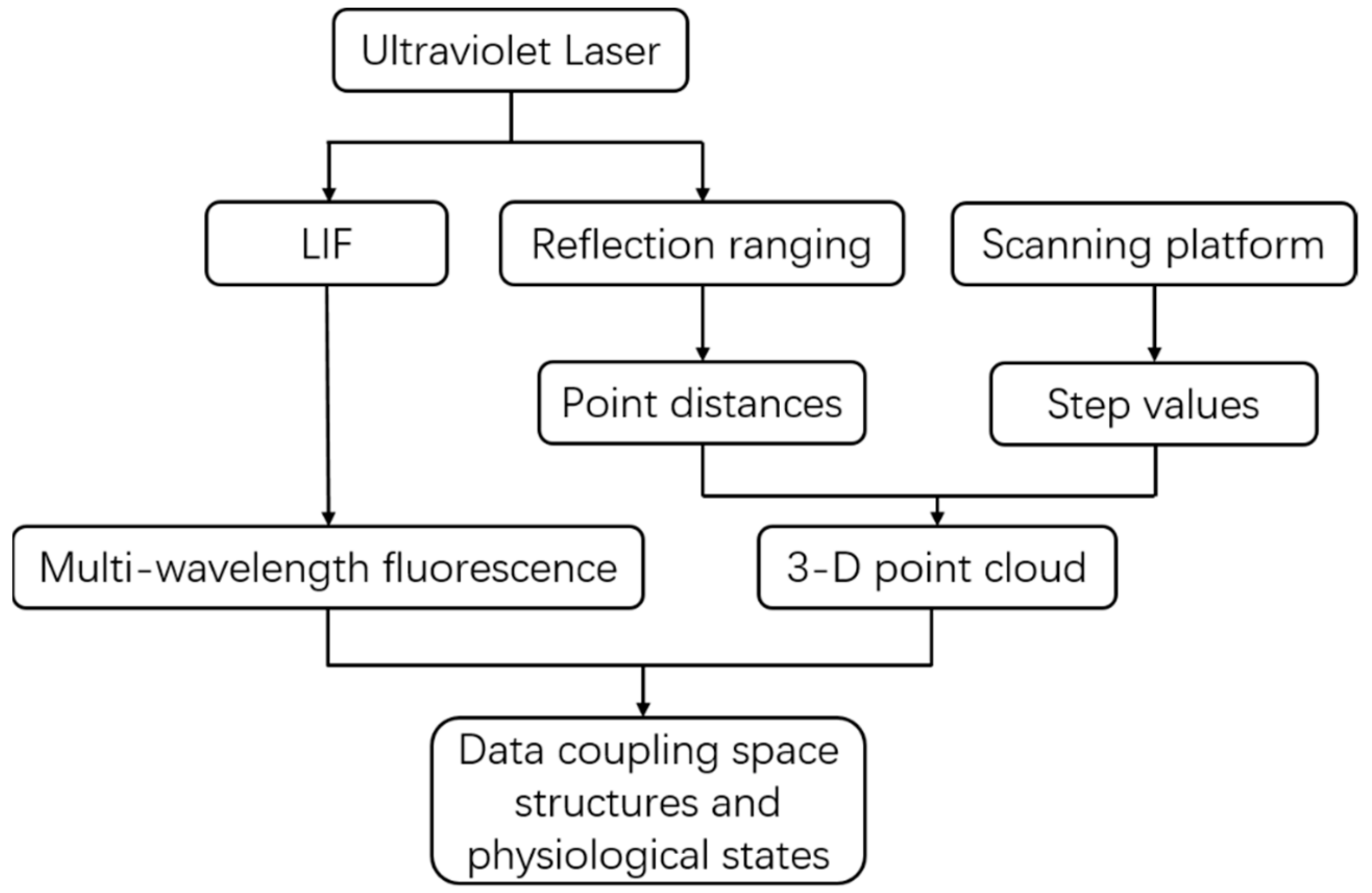

2.1. System Description

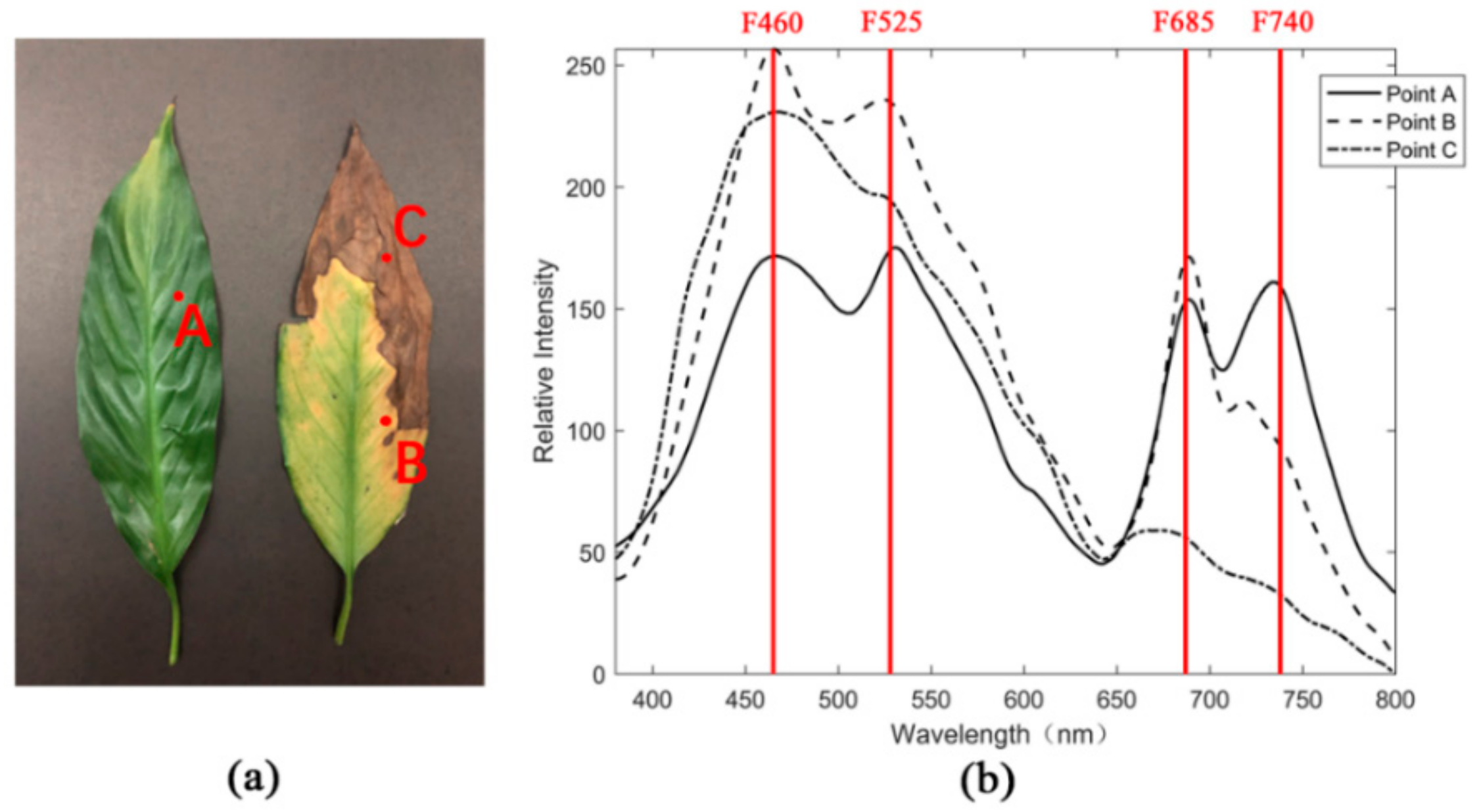

2.1.1. Selection of Fluorescence Wavelengths

2.1.2. System Components

2.1.3. Data Description

2.2. Sample Materials

2.3. Methods

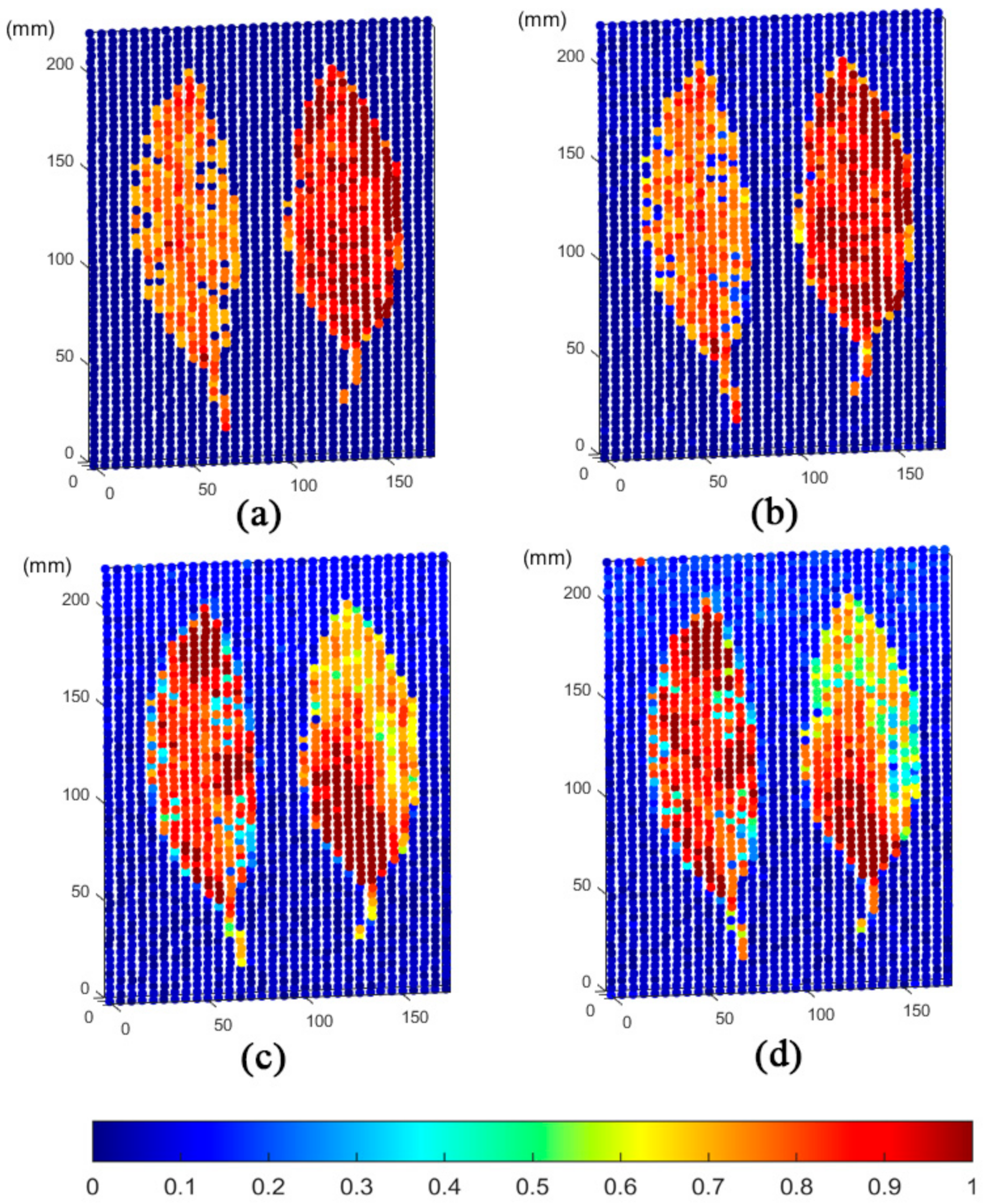

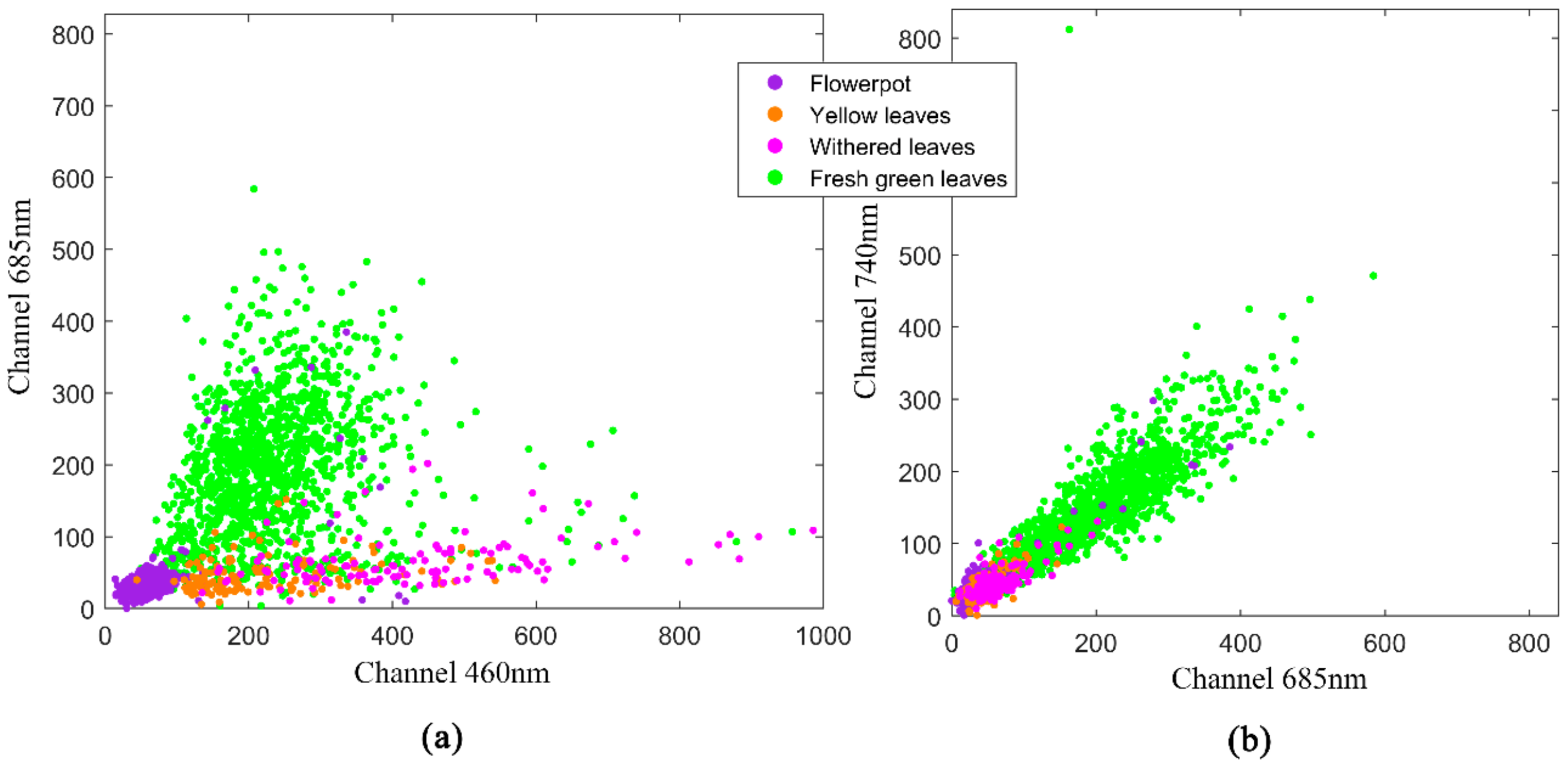

2.3.1. 3D Fluorescence Imaging Based on Spectral Enhancement

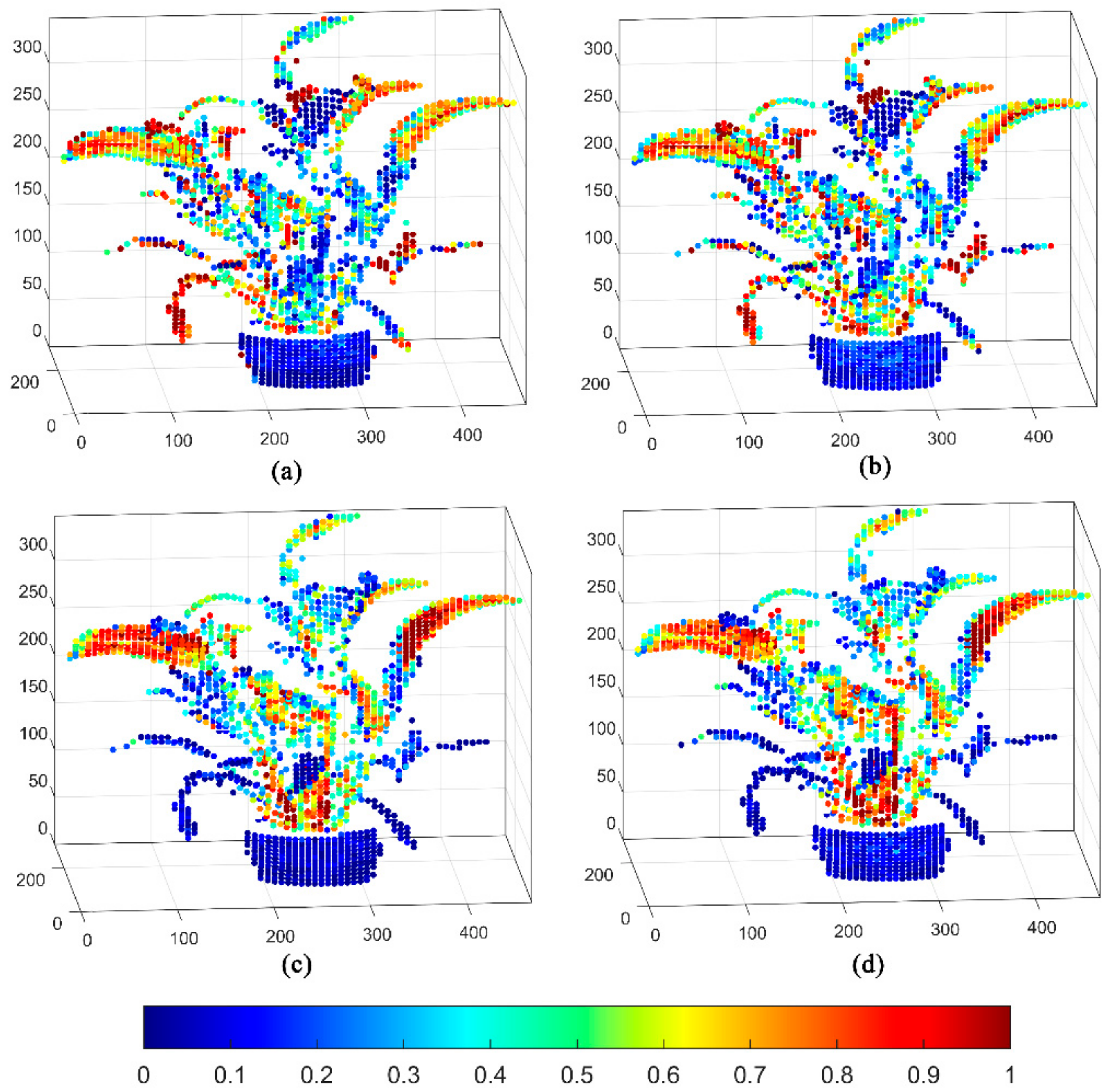

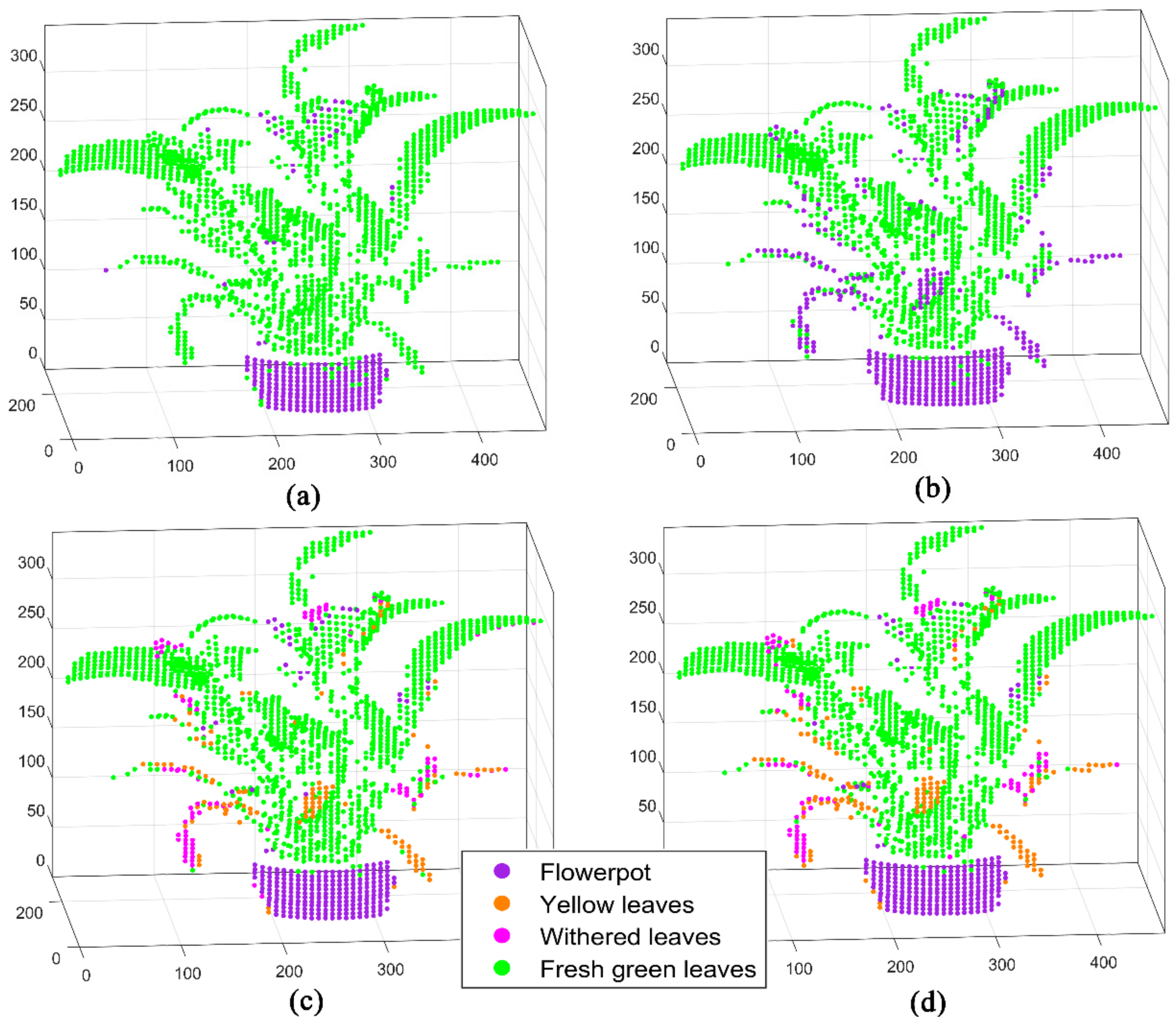

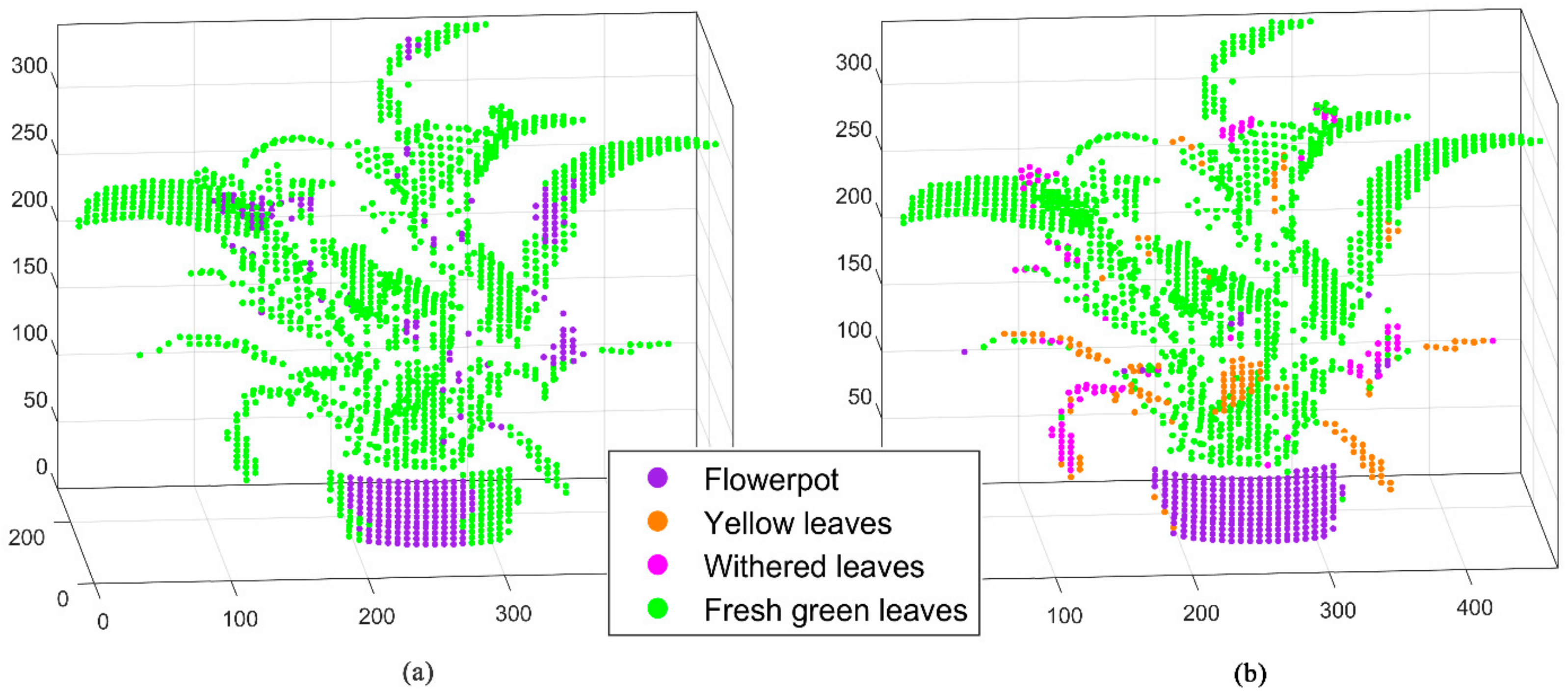

2.3.2. System Evaluation Based on Point Cloud Classification

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Multi-Wavelength Fluorescence LiDAR parameter | Value |
|---|---|
| Laser wavelength | 355 nm |
| Repetition rate | 7 kHz |
| Pulse width | 3–5 ns |
| Pulse energy | 18 μJ |
| Beam divergence | <1 mrad |
| Telescope aperture | 200 mm |
| Spatial resolution | Distance: 10 mm; scanning: 2 mm @ 20 m |
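The tabulated parameters can be cross-checked with a few standard relations: mean power equals pulse energy times repetition rate, and a range resolution ΔR corresponds to a round-trip timing resolution Δt = 2ΔR/c. The sketch below is a back-of-envelope calculation under stated assumptions, not a description of the instrument's design. It assumes that "Distance: 10 mm" is the range resolution, that the <1 mrad divergence is a full angle (used as an upper bound), and that pulses are rectangular when estimating peak power. The helper name `derived_figures` is hypothetical.

```python
# Back-of-envelope figures derived from the system parameter table.
# Assumptions: "Distance: 10 mm" is the range resolution, the <1 mrad
# divergence is a full angle (used as an upper bound), and pulses are
# treated as rectangular when estimating peak power.

C_M_PER_S = 299_792_458.0  # speed of light in vacuum

PULSE_ENERGY_J = 18e-6         # 18 uJ
REP_RATE_HZ = 7_000.0          # 7 kHz
PULSE_WIDTHS_S = (3e-9, 5e-9)  # 3-5 ns
DIVERGENCE_RAD = 1e-3          # <1 mrad
RANGE_RES_M = 0.010            # 10 mm
SCAN_SPACING_M = 0.002         # 2 mm point spacing ...
SCAN_RANGE_M = 20.0            # ... at 20 m


def derived_figures():
    """Return derived quantities in SI units (hypothetical helper)."""
    return {
        # Mean optical power = pulse energy x repetition rate.
        "avg_power_w": PULSE_ENERGY_J * REP_RATE_HZ,
        # Peak power E / tau for the shortest and longest quoted pulse widths.
        "peak_power_w": tuple(PULSE_ENERGY_J / t for t in PULSE_WIDTHS_S),
        # Round-trip timing resolution for the range resolution: dt = 2 dR / c.
        "timing_res_s": 2.0 * RANGE_RES_M / C_M_PER_S,
        # Angular scan step from point spacing at range (small-angle approximation).
        "scan_step_rad": SCAN_SPACING_M / SCAN_RANGE_M,
        # Upper bound on the beam footprint at 20 m, ignoring the exit beam diameter.
        "footprint_m": DIVERGENCE_RAD * SCAN_RANGE_M,
    }


if __name__ == "__main__":
    for name, value in derived_figures().items():
        print(name, value)
```

With these inputs the mean power comes to 126 mW, the rectangular-pulse peak power to roughly 3.6–6 kW, the timing resolution to about 67 ps, the angular scan step to 0.1 mrad, and the footprint at 20 m to at most 20 mm.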

| Features | Ground truth / predicted class | Flowerpot | Withered Leaves | Yellow Leaves | Fresh Green Leaves | Producer accuracy |
|---|---|---|---|---|---|---|
| (a) 460 nm | Flowerpot | 108 | 0 | 0 | 140 | 0.44 |
| | Withered leaves | 16 | 0 | 0 | 100 | 0 |
| | Yellow leaves | 4 | 0 | 0 | 129 | 0 |
| | Fresh green leaves | 132 | 0 | 0 | 1147 | 0.90 |
| | User accuracy | 0.42 | 0 | 0 | 0.76 | |
| | Overall accuracy: 70.7% | | | | | |
| | Kappa coefficient: 0.17 | | | | | |
| (b) 685 nm | Flowerpot | 235 | 0 | 0 | 13 | 0.95 |
| | Withered leaves | 30 | 5 | 0 | 81 | 0.04 |
| | Yellow leaves | 1 | 1 | 0 | 131 | 0 |
| | Fresh green leaves | 159 | 6 | 0 | 1114 | 0.87 |
| | User accuracy | 0.55 | 0.42 | 0 | 0.83 | |
| | Overall accuracy: 76.2% | | | | | |
| | Kappa coefficient: 0.43 | | | | | |
| (c) 460 nm + 685 nm | Flowerpot | 236 | 2 | 3 | 7 | 0.95 |
| | Withered leaves | 51 | 13 | 6 | 46 | 0.11 |
| | Yellow leaves | 5 | 12 | 18 | 98 | 0.14 |
| | Fresh green leaves | 55 | 22 | 25 | 1177 | 0.92 |
| | User accuracy | 0.68 | 0.27 | 0.35 | 0.89 | |
| | Overall accuracy: 81.3% | | | | | |
| | Kappa coefficient: 0.56 | | | | | |
| (d) Four wavelengths | Flowerpot | 240 | 0 | 4 | 4 | 0.97 |
| | Withered leaves | 7 | 57 | 19 | 33 | 0.49 |
| | Yellow leaves | 0 | 13 | 69 | 51 | 0.52 |
| | Fresh green leaves | 24 | 20 | 23 | 1212 | 0.95 |
| | User accuracy | 0.89 | 0.63 | 0.60 | 0.93 | |
| | Overall accuracy: 88.9% | | | | | |
| | Kappa coefficient: 0.75 | | | | | |
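The summary rows under each confusion matrix follow standard definitions: producer accuracy divides each diagonal count by its row (ground-truth) total, user accuracy divides it by its column (predicted) total, and kappa compares the observed agreement with the agreement expected by chance from the row and column totals. The sketch below assumes the usual Cohen's kappa; the function name `accuracy_report` is hypothetical. Fed the panel (d) counts, it gives the printed producer and user accuracies, an overall accuracy of 88.9% and a kappa of 0.75.

```python
# Accuracy measures for a confusion matrix with ground-truth classes as rows
# and predicted classes as columns, as in the tables above.

# Counts from panel (d), "Four wavelengths" (order: flowerpot, withered,
# yellow, fresh green leaves).
FOUR_WAVELENGTHS = [
    [240, 0, 4, 4],
    [7, 57, 19, 33],
    [0, 13, 69, 51],
    [24, 20, 23, 1212],
]


def accuracy_report(cm):
    """Producer/user/overall accuracy and Cohen's kappa (hypothetical helper)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(row) for row in cm]                             # ground-truth totals
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # predicted totals
    diag = [cm[i][i] for i in range(n)]

    # Producer accuracy: fraction of each true class that was labelled correctly.
    producer = [d / r if r else 0.0 for d, r in zip(diag, row_sums)]
    # User accuracy: fraction of each predicted class that is correct
    # (reported as 0 when the class is never predicted).
    user = [d / c if c else 0.0 for d, c in zip(diag, col_sums)]
    # Observed agreement, and chance agreement from the marginal totals.
    p_o = sum(diag) / total
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (p_o - p_e) / (1.0 - p_e)
    return {"producer": producer, "user": user, "overall": p_o, "kappa": kappa}


report = accuracy_report(FOUR_WAVELENGTHS)
print(f"OA = {report['overall']:.1%}, kappa = {report['kappa']:.2f}")  # OA = 88.9%, kappa = 0.75
```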

| Features | Ground truth / predicted class | Flowerpot | Withered Leaves | Yellow Leaves | Fresh Green Leaves | Producer accuracy |
|---|---|---|---|---|---|---|
| (a) Normal vectors | Flowerpot | 107 | 0 | 0 | 141 | 0.43 |
| | Withered leaves | 8 | 7 | 6 | 95 | 0.06 |
| | Yellow leaves | 3 | 0 | 9 | 121 | 0.07 |
| | Fresh green leaves | 48 | 0 | 0 | 1231 | 0.96 |
| | User accuracy | 0.64 | 1.0 | 0.60 | 0.78 | |
| | Overall accuracy: 76.2% | | | | | |
| | Kappa coefficient: 0.29 | | | | | |
| (b) Normal vectors + four wavelengths | Flowerpot | 244 | 0 | 0 | 4 | 0.98 |
| | Withered leaves | 4 | 73 | 13 | 26 | 0.63 |
| | Yellow leaves | 3 | 14 | 91 | 25 | 0.68 |
| | Fresh green leaves | 6 | 14 | 24 | 1235 | 0.97 |
| | User accuracy | 0.95 | 0.72 | 0.71 | 0.96 | |
| | Overall accuracy: 92.5% | | | | | |
| | Kappa coefficient: 0.84 | | | | | |
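The second confusion matrix uses surface normal vectors as geometric features, alone and together with the four fluorescence wavelengths. This section does not state how the normals were computed. A common approach, assumed here purely for illustration, is principal component analysis over each point's k nearest neighbours: the normal is the eigenvector of the local covariance matrix with the smallest eigenvalue. The function names, the default `k=8`, and the `viewpoint` argument (an assumed scanner position used to resolve the sign ambiguity) are hypothetical. The sketch is written in plain Python with a closed-form 3×3 eigenvalue solution so that it needs no external libraries.

```python
import math

# PCA normal estimation sketch (assumed approach, not the authors' documented method).
# Points are (x, y, z) tuples; names and the default k are illustrative.


def _covariance(pts):
    """3x3 covariance matrix of a list of (x, y, z) points."""
    n = len(pts)
    mean = [sum(p[k] for p in pts) / n for k in range(3)]
    c = [[0.0] * 3 for _ in range(3)]
    for p in pts:
        d = [p[k] - mean[k] for k in range(3)]
        for i in range(3):
            for j in range(3):
                c[i][j] += d[i] * d[j] / n
    return c


def _smallest_eigenvalue(a):
    """Smallest eigenvalue of a symmetric 3x3 matrix (closed-form trigonometric solution)."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if p1 == 0.0:  # matrix is already diagonal
        return min(a[0][0], a[1][1], a[2][2])
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    p = math.sqrt((sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1) / 6.0)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    phi = math.acos(max(-1.0, min(1.0, det_b / 2.0))) / 3.0
    return q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)


def _cross(u, v):
    return [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]


def _unit_null_vector(a, lam):
    """Unit eigenvector of a for eigenvalue lam, from the largest cross product of rows of (a - lam*I)."""
    m = [[a[i][j] - (lam if i == j else 0.0) for j in range(3)] for i in range(3)]
    best = max((_cross(m[0], m[1]), _cross(m[0], m[2]), _cross(m[1], m[2])),
               key=lambda v: sum(x * x for x in v))
    norm = math.sqrt(sum(x * x for x in best))
    if norm < 1e-12:
        raise ValueError("degenerate neighbourhood (e.g. collinear points)")
    return [x / norm for x in best]


def estimate_normals(points, k=8, viewpoint=None):
    """Per-point unit normals from the k nearest neighbours (brute force, O(n^2))."""
    normals = []
    for p in points:
        nbrs = sorted(points, key=lambda q: sum((q[i] - p[i]) ** 2 for i in range(3)))[:k]
        c = _covariance(nbrs)
        n = _unit_null_vector(c, _smallest_eigenvalue(c))
        if viewpoint is not None:
            # PCA leaves the sign ambiguous; flip the normal to face the assumed scanner position.
            to_view = [viewpoint[i] - p[i] for i in range(3)]
            if sum(n[i] * to_view[i] for i in range(3)) < 0.0:
                n = [-x for x in n]
        normals.append(n)
    return normals
```

For clouds with thousands of points, a k-d tree would normally replace the brute-force neighbour search. The resulting (nx, ny, nz) components could then be concatenated with the per-point fluorescence intensities to form a combined feature vector of the "normal vectors + four wavelengths" kind.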

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, X.; Shi, S.; Yang, J.; Gong, W.; Sun, J.; Chen, B.; Guo, K.; Chen, B. Active 3D Imaging of Vegetation Based on Multi-Wavelength Fluorescence LiDAR. Sensors 2020, 20, 935. https://doi.org/10.3390/s20030935
