Noise Removal in the Developing Process of Digital Negatives

Abstract

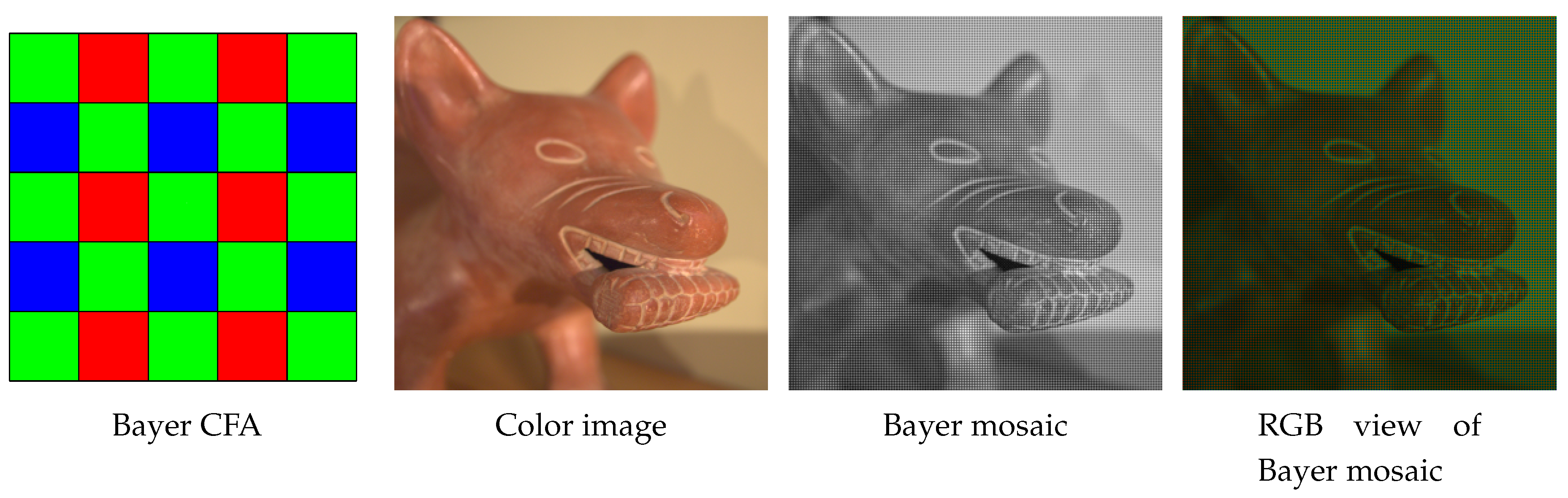

1. Introduction

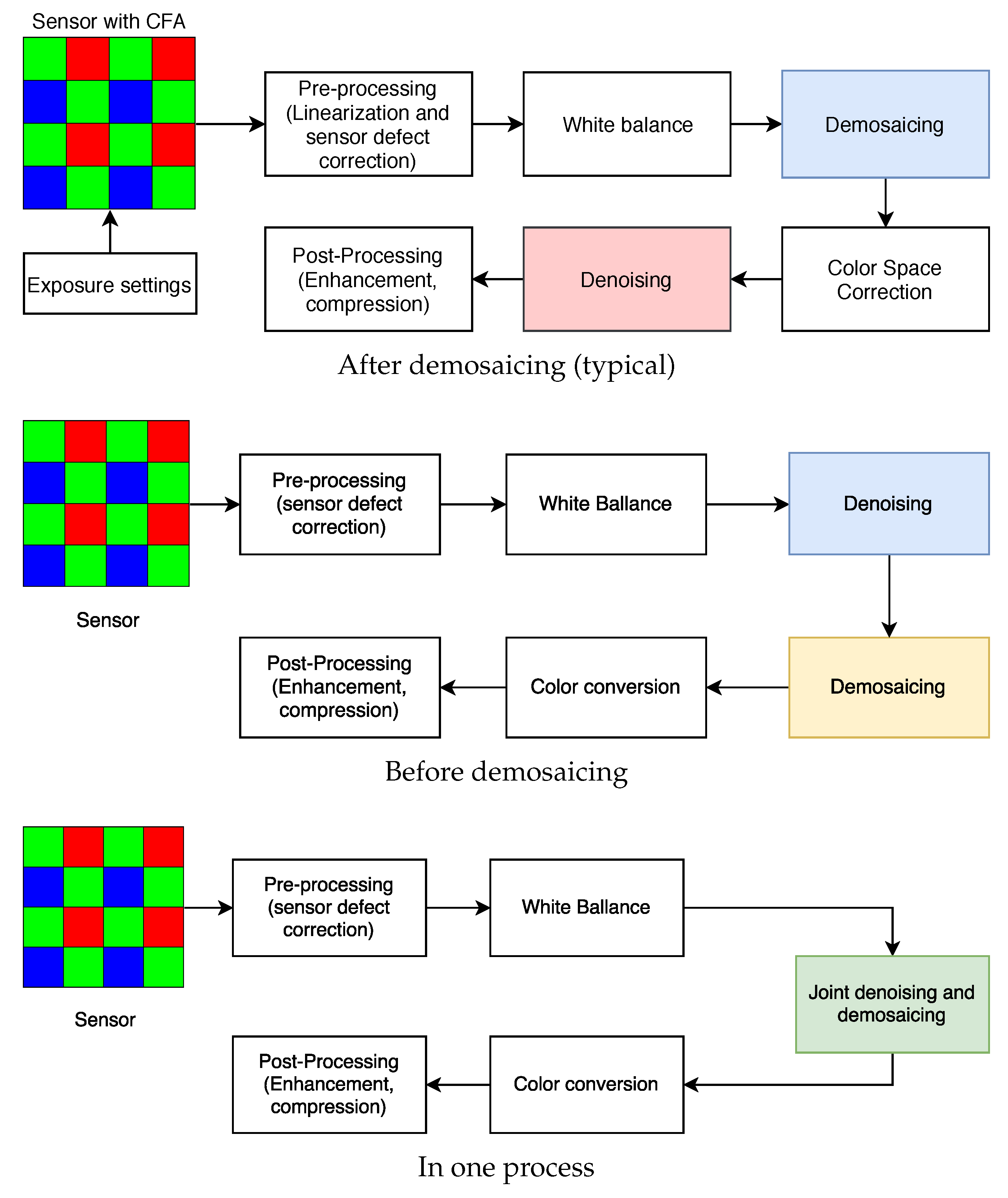

2. Raw Image Processing Pipelines

- demosaicing algorithms change the noise distribution and introduce additional artifacts, especially in the presence of impulsive noise,

- there is usually more data to process: three highly correlated channels (compared to a single grayscale mosaic image), and

- raw image processing may cause some data loss before the denoising process.
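The first point can be illustrated with a deliberately simplified 1-D model. This is an assumption for illustration, not one of the demosaicing algorithms evaluated in this paper: one color channel is sampled at every other pixel and carries independent Gaussian noise, and each missing pixel is filled with the average of its two neighbors, as bilinear interpolation would do. After interpolation the noise is no longer independent and identically distributed: interpolated pixels have half the noise variance, and neighboring pixels become correlated with a coefficient of about 1/√2 ≈ 0.71.

```python
import math
import random


def interpolate_missing(samples):
    """Fill the gap between consecutive known samples with their average.

    `samples` are the values at even positions; the returned line has
    2 * len(samples) - 1 pixels, with interpolated values at odd positions.
    """
    line = []
    for i in range(len(samples) - 1):
        line.append(samples[i])
        line.append(0.5 * (samples[i] + samples[i + 1]))
    line.append(samples[-1])
    return line


def variance(xs):
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)


def correlation(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    return cov / math.sqrt(variance(xs) * variance(ys))


random.seed(0)
# Pure noise (sigma = 1) on a flat zero signal, sampled at every other pixel.
raw = [random.gauss(0.0, 1.0) for _ in range(100_001)]
line = interpolate_missing(raw)

known = line[0::2]   # original noisy samples
interp = line[1::2]  # interpolated samples
# Pair each interpolated pixel with its left (known) neighbor.
rho = correlation(known[:-1], interp)

print(f"variance of known pixels:        {variance(known):.3f}")   # close to 1.0
print(f"variance of interpolated pixels: {variance(interp):.3f}")  # close to 0.5
print(f"neighbor correlation:            {rho:.3f}")               # close to 0.707
```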

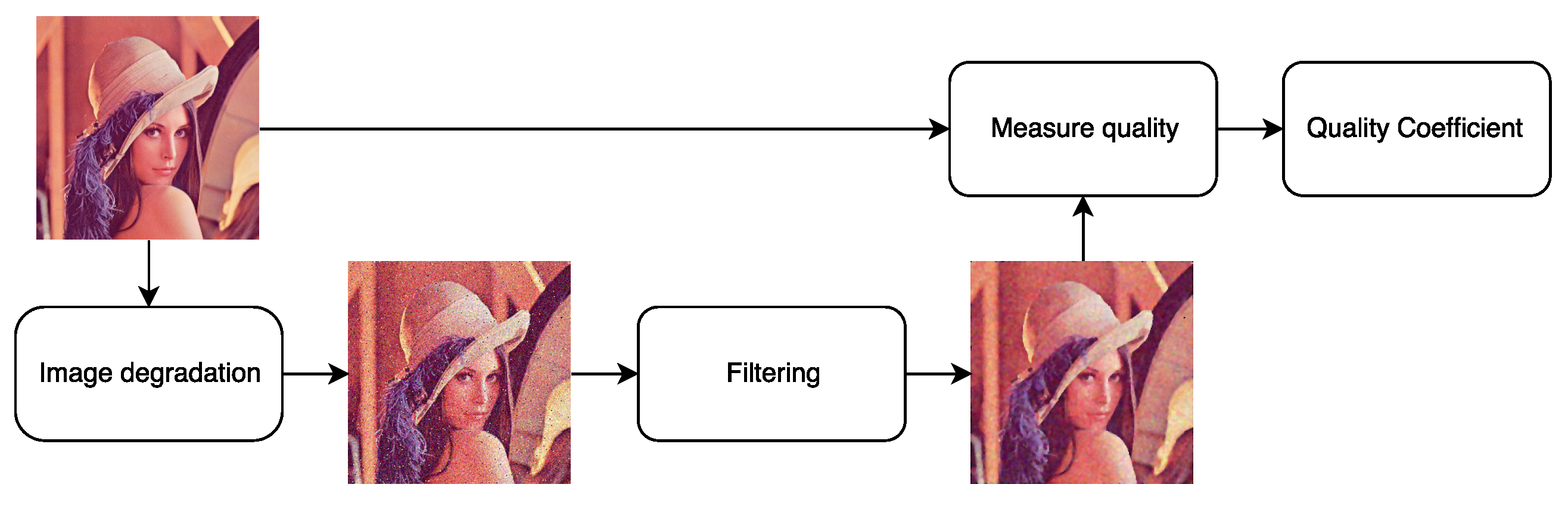

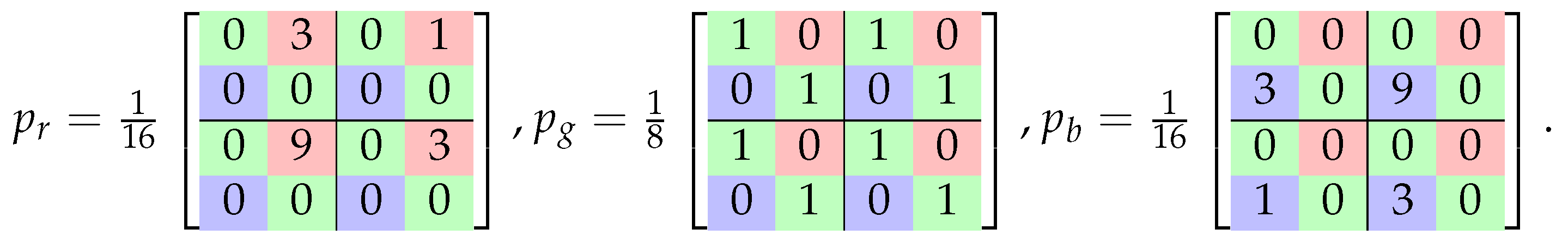

3. Generating a Set of Test Images

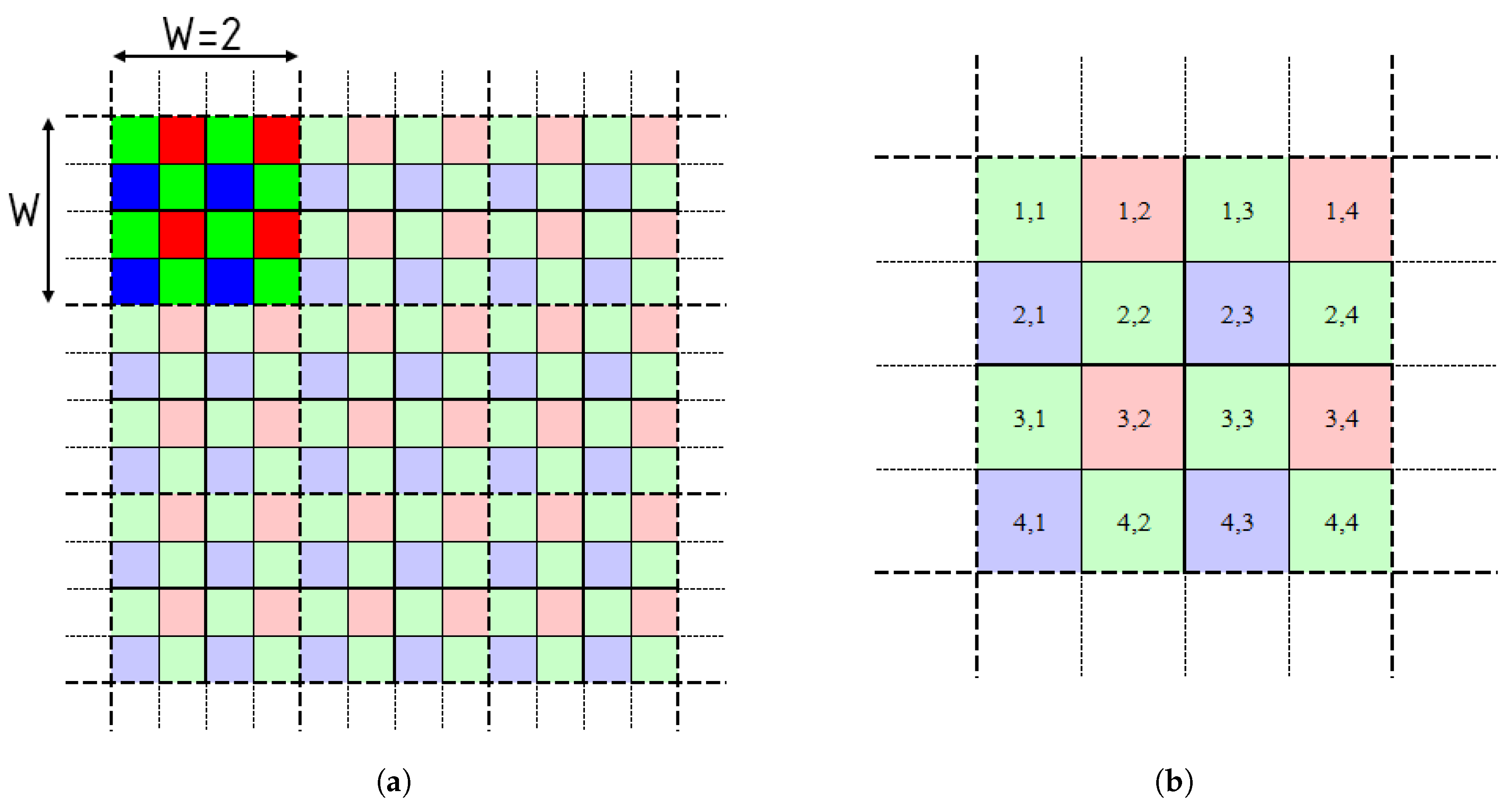

3.1. Downsampling Real Raw Images

3.2. Impulsive Noise Problem—Raw Spatial Median Filter
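Impulsive noise in a raw image has to be removed before demosaicing, and a median filter working on the mosaic may only compare pixels of the same CFA color. A minimal sketch of that idea, assuming an RGGB Bayer layout and a 3×3 window of same-color neighbors (stride 2); it is not necessarily identical to the RAWSM filter of this paper, and the `threshold` switching rule is a hypothetical addition:

```python
from statistics import median


def raw_same_color_median(mosaic, threshold=0.0):
    """Median filter a Bayer mosaic using only same-color neighbors.

    In a Bayer CFA, samples of one color repeat with a period of 2 in both
    directions, so the same-color 3x3 window of pixel (r, c) consists of the
    positions (r + dr, c + dc) with dr, dc in {-2, 0, 2}. A pixel is replaced
    by the window median only if it deviates from it by more than `threshold`
    (hypothetical parameter; 0 gives a plain median filter).
    """
    rows, cols = len(mosaic), len(mosaic[0])
    out = [row[:] for row in mosaic]  # leave the input untouched
    for r in range(rows):
        for c in range(cols):
            window = [
                mosaic[r + dr][c + dc]
                for dr in (-2, 0, 2)
                for dc in (-2, 0, 2)
                if 0 <= r + dr < rows and 0 <= c + dc < cols
            ]
            m = median(window)
            if abs(mosaic[r][c] - m) > threshold:
                out[r][c] = m
    return out


def make_rggb(rows, cols, r_val, g_val, b_val):
    """Build a flat test mosaic with an RGGB Bayer layout."""
    return [
        [
            r_val if i % 2 == 0 and j % 2 == 0
            else b_val if i % 2 == 1 and j % 2 == 1
            else g_val
            for j in range(cols)
        ]
        for i in range(rows)
    ]


mosaic = make_rggb(6, 6, r_val=100, g_val=50, b_val=20)
mosaic[2][2] = 1000  # a "hot" red pixel
filtered = raw_same_color_median(mosaic, threshold=30)
print(filtered[2][2])  # 100
```

Because green and blue pixels never enter the window of a red pixel, the hot pixel does not leak into the other channels, which is what would happen if the impulse were left in place until after demosaicing.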

3.3. The Process of Preparing Ground Truth Images

- reading raw files,

- impulsive noise removal (using dark frame or with RAWSM filter),

- linearization,

- white balancing,

- maximum entropy downsampling,

- color space correction,

- brightness and contrast adjustment.
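The linearization and white balancing steps in the list above can be sketched as follows, in the spirit of the general recipe in Sumner's guide from the reference list. The black and white levels and the channel gains are camera specific; the values used here are hypothetical, and an RGGB layout is assumed:

```python
def linearize(mosaic, black_level, white_level):
    """Map raw sensor values to [0, 1]: subtract the black level, divide by
    the usable range and clamp values that fall outside [0, 1]."""
    scale = white_level - black_level
    return [[min(max((v - black_level) / scale, 0.0), 1.0) for v in row]
            for row in mosaic]


def white_balance_rggb(mosaic, r_gain, g_gain, b_gain):
    """Multiply each CFA sample by the gain of its color (RGGB layout assumed)."""
    out = []
    for i, row in enumerate(mosaic):
        new_row = []
        for j, v in enumerate(row):
            if i % 2 == 0 and j % 2 == 0:
                gain = r_gain      # red site
            elif i % 2 == 1 and j % 2 == 1:
                gain = b_gain      # blue site
            else:
                gain = g_gain      # green sites
            new_row.append(v * gain)
        out.append(new_row)
    return out


raw = [[576, 320],
       [320, 192]]
lin = linearize(raw, black_level=64, white_level=1088)  # hypothetical levels
balanced = white_balance_rggb(lin, r_gain=2.0, g_gain=1.0, b_gain=4.0)
print(lin)       # [[0.5, 0.25], [0.25, 0.125]]
print(balanced)  # [[1.0, 0.25], [0.25, 0.5]]
```

Both operations act on the mosaic sample by sample, so they can be applied before any demosaicing or denoising step.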

- CFA linear: mosaiced Bayer image in linear sensor space,

- CFA sRGB: mosaiced Bayer image in sRGB color space,

- linear: RGB images in linear sensor space,

- sRGB: final sRGB images.
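Part of the difference between the linear and the sRGB variants listed above is the nonlinear sRGB transfer curve (IEC 61966-2-1); the color space correction matrix applied earlier in the pipeline is not shown. A minimal sketch of the standard curve and its inverse, applied per sample; applying it directly to CFA samples for the CFA sRGB variant is an assumption:

```python
def srgb_encode(c):
    """sRGB transfer function: linear value in [0, 1] -> encoded value."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055


def srgb_decode(v):
    """Inverse sRGB transfer function: encoded value in [0, 1] -> linear value."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


mosaic_linear = [[0.18, 0.05], [0.05, 0.001]]  # hypothetical linear CFA values
mosaic_srgb = [[srgb_encode(v) for v in row] for row in mosaic_linear]
print(round(srgb_encode(0.18), 3))  # 0.461
```

Since the curve expands dark values, the same noise has a different amplitude in the linear and in the sRGB variants, which is one reason to evaluate both.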

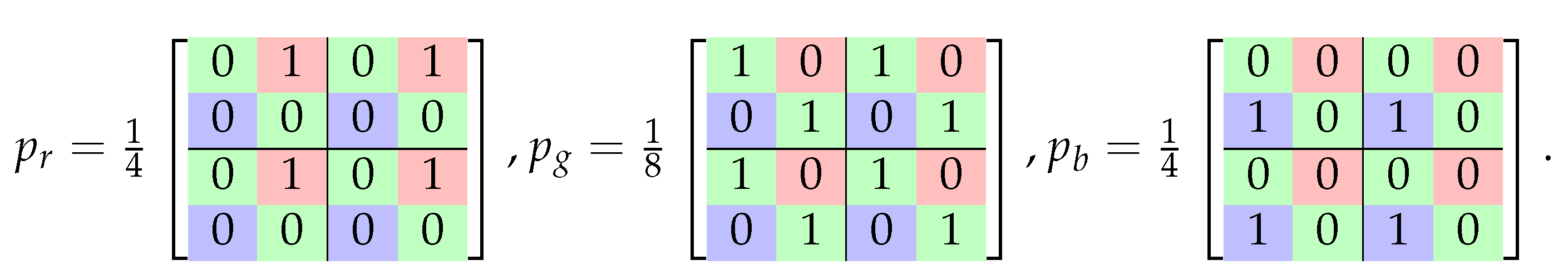

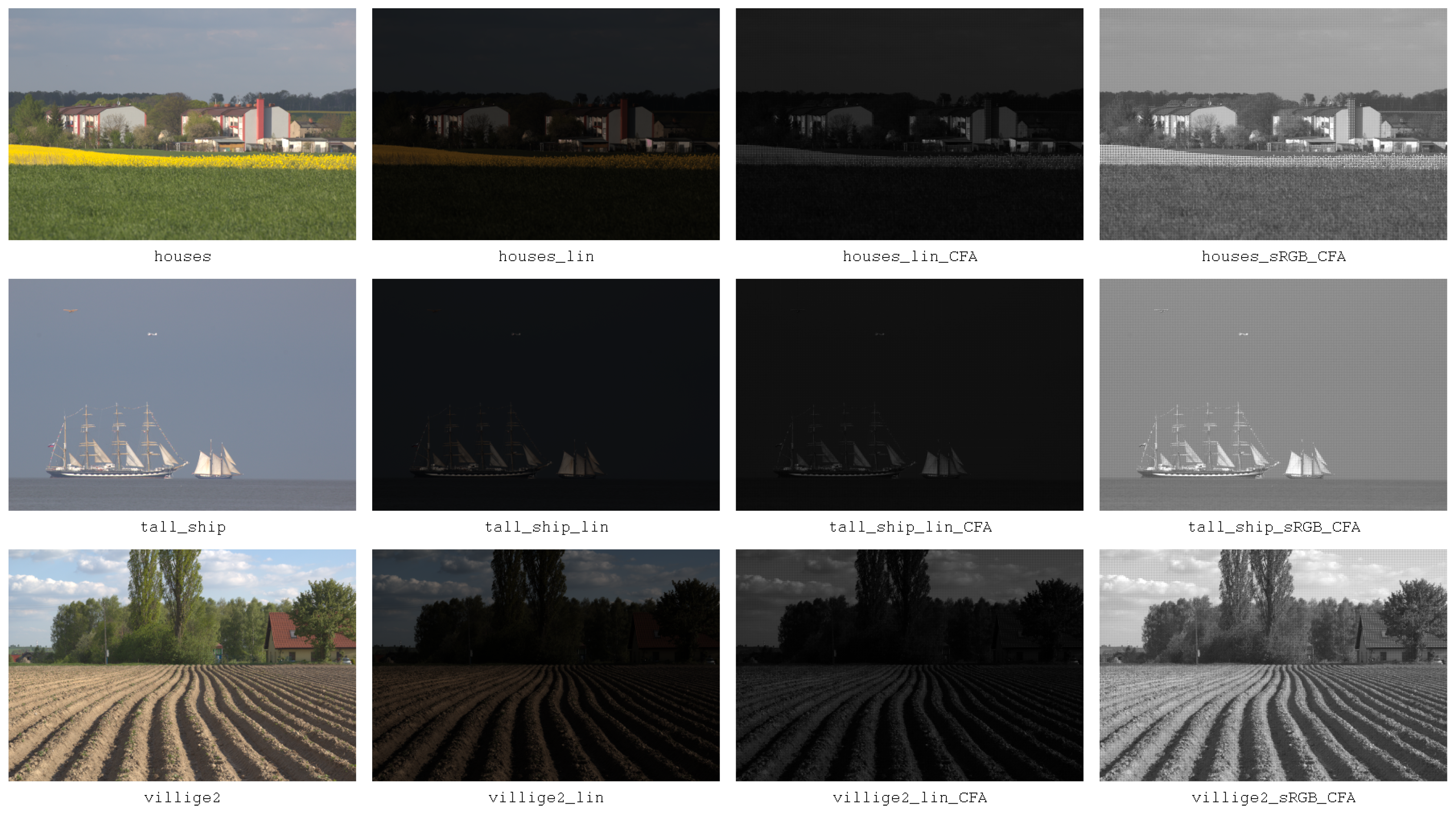

3.4. Synthetic Noise Model
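A common model for raw sensor noise is the Poissonian-Gaussian model of Foi et al. (listed in the references), in which the variance of a sample grows linearly with its expected value y: σ²(y) = a·y + b. A minimal sketch, assuming that model in its heteroscedastic Gaussian approximation, ignoring clipping, and using hypothetical parameters a and b; the exact model and parameters behind the four noise levels of this experiment may differ:

```python
import math
import random


def add_poisson_gaussian_noise(mosaic, a, b, rng=random):
    """Add signal-dependent noise with variance a * y + b to each sample y.

    Heteroscedastic Gaussian approximation of the Poissonian-Gaussian model:
    z = y + sqrt(a * y + b) * xi, with xi ~ N(0, 1) independent per pixel.
    Clipping to the sensor range is not modeled.
    """
    return [[y + math.sqrt(max(a * y + b, 0.0)) * rng.gauss(0.0, 1.0) for y in row]
            for row in mosaic]


rng = random.Random(1)
clean = [[0.1, 0.5], [0.5, 0.9]]  # hypothetical linear CFA values
noisy = add_poisson_gaussian_noise(clean, a=0.01, b=0.0004, rng=rng)
```

Because the noise is added to the mosaic, each CFA sample receives its own independent realization, which matches the situation of a real sensor before demosaicing.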

4. Experiment

4.1. The Assumptions of the Experiment and the Input Data

- 48 ground truth images,

- 4 noise levels,

- 10 noise process realizations,

- 14 filtering scenarios,

- 1920 test input images, and

- 26,880 filtering results.
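The last two counts follow from the first four: every ground truth image is corrupted at every noise level with every noise realization, and every noisy input is processed in every filtering scenario.

```python
ground_truth_images = 48
noise_levels = 4
realizations = 10
scenarios = 14

test_inputs = ground_truth_images * noise_levels * realizations
filtering_results = test_inputs * scenarios

print(test_inputs)        # 1920
print(filtering_results)  # 26880
```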

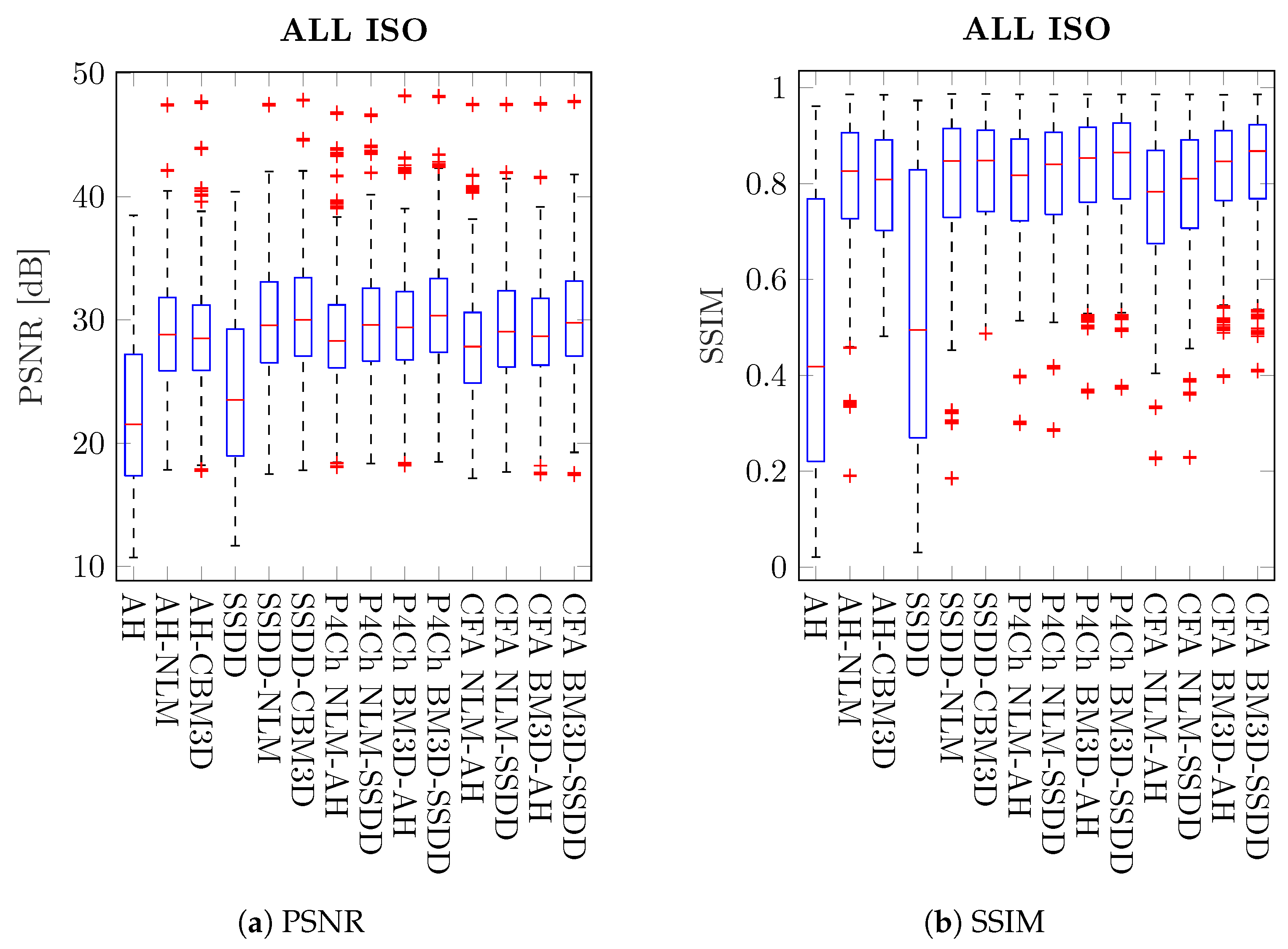

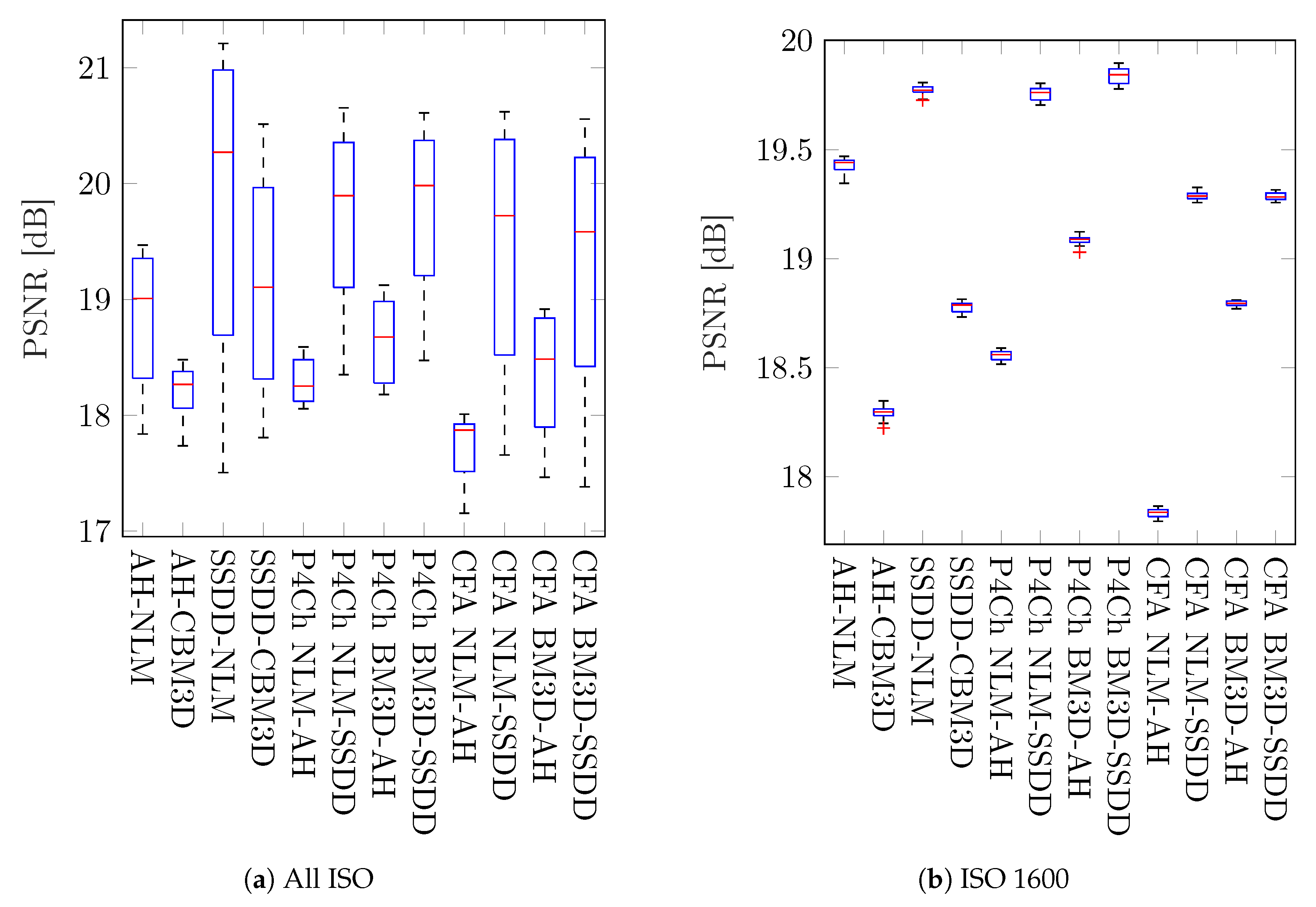

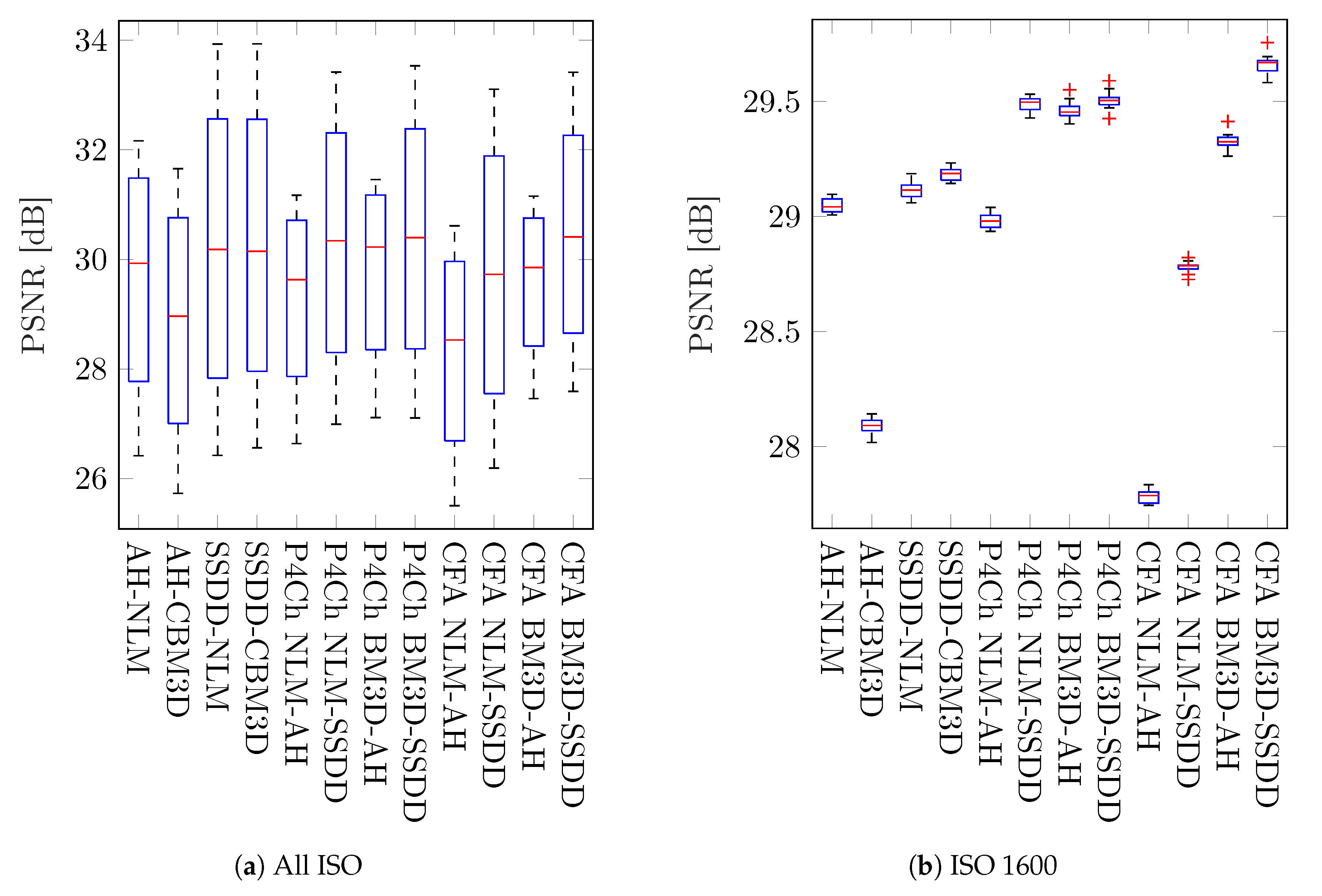

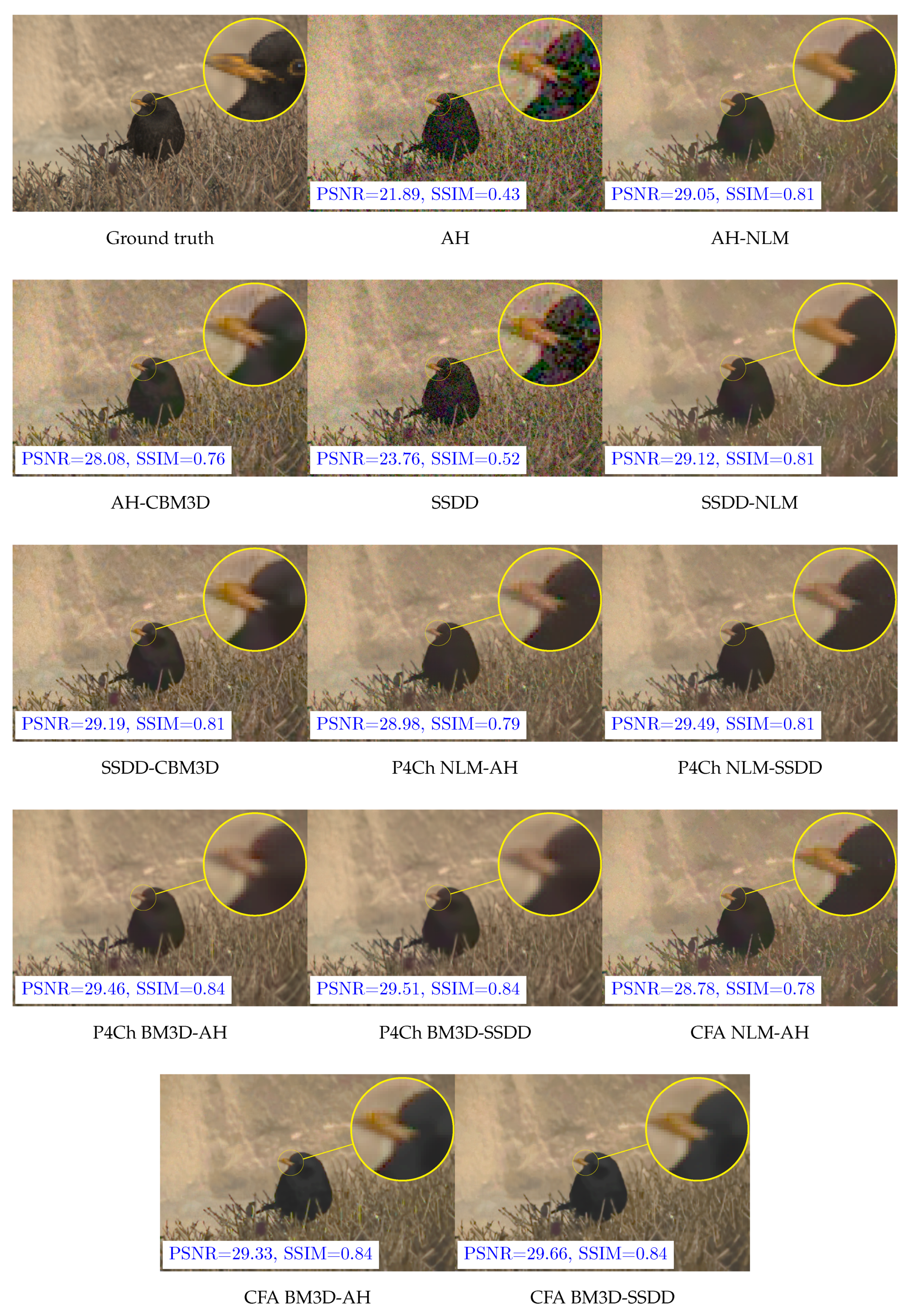

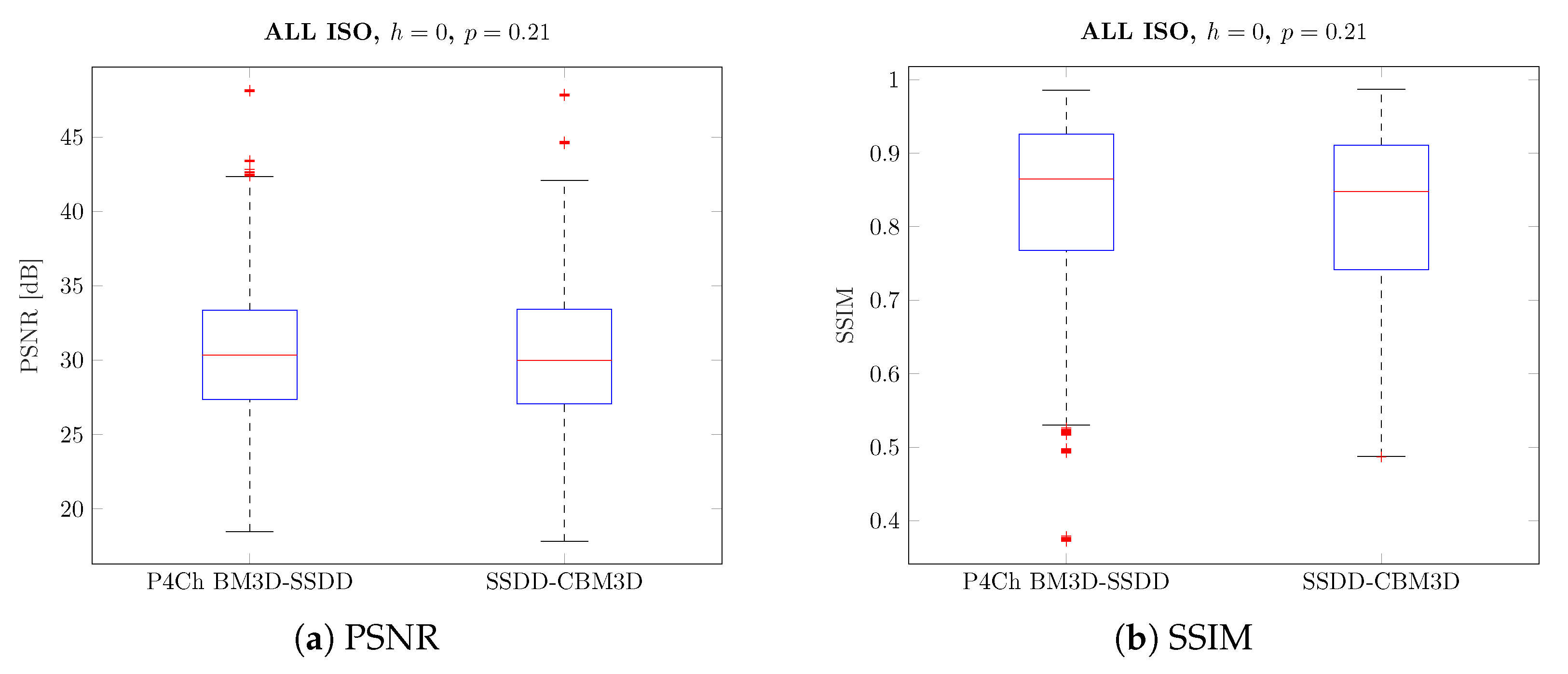

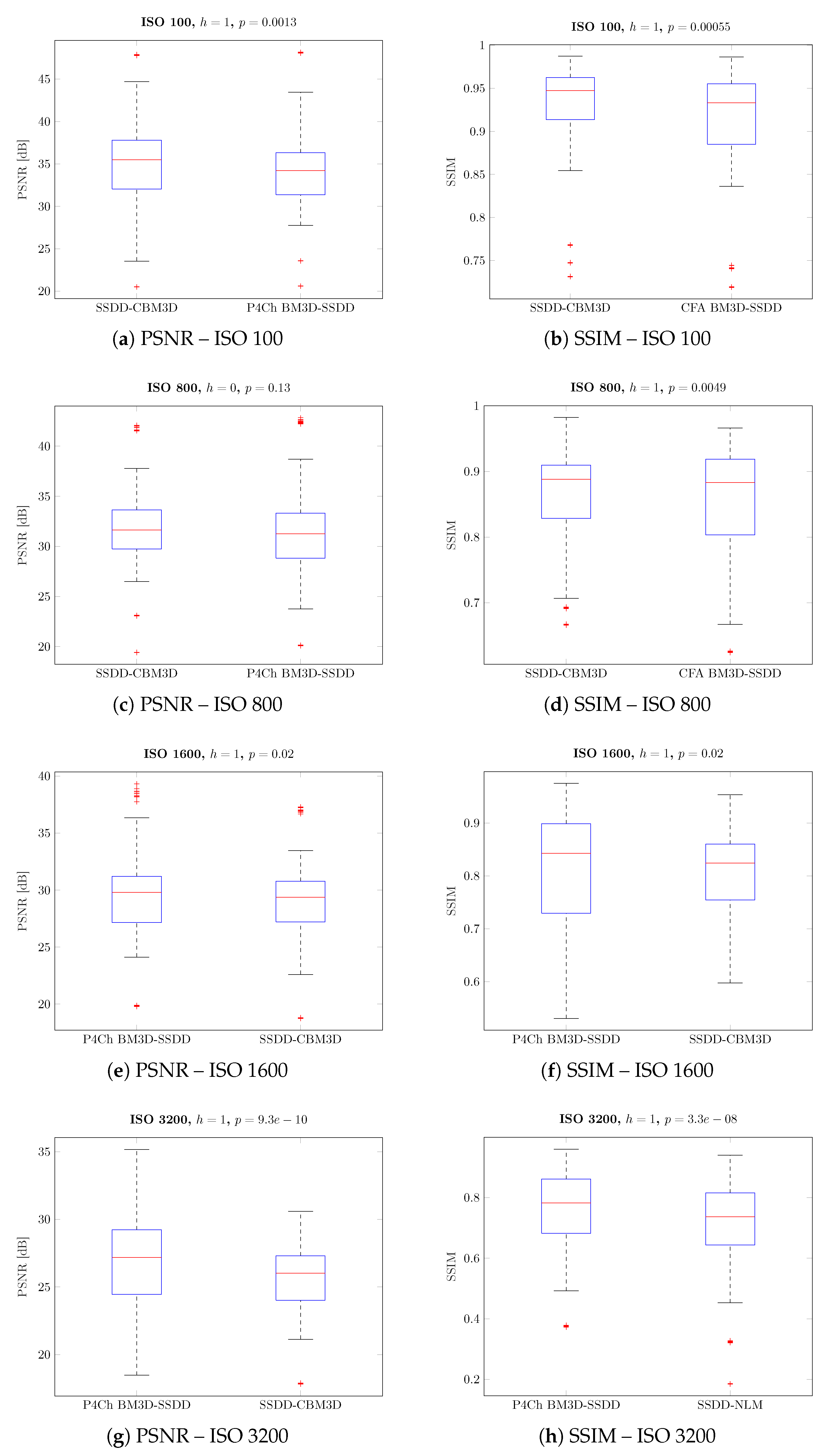

4.2. Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Bayer, B.E. Color imaging array. U.S. Patent 3,971,065, 20 July 1976.

- Getreuer, P. Image Demosaicking with Contour Stencils. Image Process. Online 2012, 2, 22–34.

- Khashabi, D.; Nowozin, S.; Jancsary, J.; Fitzgibbon, A.W. Joint demosaicing and denoising via learned nonparametric random fields. IEEE Trans. Image Process. 2014, 23, 4968–4981.

- Astola, J.; Haavisto, P.; Neuvo, Y. Vector median filters. Proc. IEEE 1990, 78, 678–689.

- Smolka, B.; Chydzinski, A.; Wojciechowski, K.; Plataniotis, K.; Venetsanopoulos, A. On the reduction of impulsive noise in multichannel image processing. Opt. Eng. 2001, 40, 902–908.

- Smolka, B.; Szczepanski, M.; Plataniotis, K.; Venetsanopoulos, A.N. Fast modified vector median filter. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Warsaw, Poland, 5–7 September 2001; pp. 570–580.

- Buades, A.; Coll, B.; Morel, J.M. Non-Local Means Denoising. Image Process. Online 2011, 1, 208–212.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image Denoising by Sparse 3-D Transform-Domain Collaborative Filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Maggioni, M.; Katkovnik, V.; Egiazarian, K.; Foi, A. Nonlocal Transform-Domain Filter for Volumetric Data Denoising and Reconstruction. IEEE Trans. Image Process. 2013, 22, 119–133.

- Danielyan, A.; Vehvilainen, M.; Foi, A.; Katkovnik, V.; Egiazarian, K. Cross-color BM3D filtering of noisy raw data. In Proceedings of the 2009 International Workshop on Local and Non-Local Approximation in Image Processing, Tuusula, Finland, 19–21 August 2009; pp. 125–129.

- Park, S.H.; Kim, H.S.; Lansel, S.; Parmar, M.; Wandell, B.A. A Case for Denoising Before Demosaicking Color Filter Array Data. In Proceedings of the 2009 Conference Record of the Forty-Third Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 1–4 November 2009; pp. 860–864.

- Zhang, L.; Lukac, R.; Wu, X.; Zhang, D. PCA-Based Spatially Adaptive Denoising of CFA Images for Single-Sensor Digital Cameras. IEEE Trans. Image Process. 2009, 18, 797–812.

- Chatterjee, P.; Joshi, N.; Kang, S.B.; Matsushita, Y. Noise Suppression in Low-light Images Through Joint Denoising and Demosaicing. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 20–25 June 2011; pp. 321–328.

- Hirakawa, K.; Parks, T.W. Joint demosaicing and denoising. IEEE Trans. Image Process. 2006, 15, 2146–2157.

- Condat, L.; Mosaddegh, S. Joint demosaicking and denoising by total variation minimization. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 2781–2784.

- Plötz, T.; Roth, S. Benchmarking Denoising Algorithms with Real Photographs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1586–1595.

- Brys, B.J. Image Restoration in the Presence of Bad Pixels. Master’s Thesis, University of Dayton, Dayton, OH, USA, 2010.

- Sumner, R. Processing RAW Images in MATLAB. 2014. Available online: http://www.rcsumner.net/raw_guide/RAWguide.pdf (accessed on 22 December 2019).

- Foi, A.; Trimeche, M.; Katkovnik, V.; Egiazarian, K. Practical Poissonian-Gaussian Noise Modeling and Fitting for Single-Image Raw-Data. IEEE Trans. Image Process. 2008, 17, 1737–1754.

- Popowicz, A.; Kurek, A.R.; Błachowicz, T.; Orlov, V.; Smołka, B. On the efficiency of techniques for the reduction of impulsive noise in astronomical images. Mon. Not. R. Astron. Soc. 2016, 463, 2172–2189.

- Buades, A.; Coll, B.; Morel, J.M. Nonlocal Image and Movie Denoising. Int. J. Comput. Vision 2008, 76, 123–139.

- Akiyama, H.; Tanaka, M.; Okutomi, M. Pseudo four-channel image denoising for noisy CFA raw data. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4778–4782.

- Hamilton, J.; Adams, J. Adaptive color plan interpolation in single sensor color electronic camera. U.S. Patent 5,629,734, 13 May 1997.

- Buades, A.; Coll, B.; Morel, J.M.; Sbert, C. Self-similarity driven demosaicking. Image Process. Online 2011, 1, 51–56.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image restoration by sparse 3D transform-domain collaborative filtering. In Proceedings of the Image Processing: Algorithms and Systems VI, San Jose, CA, USA, 27–31 January 2008; Volume 6812, p. 681207.

| No. | Standard Filters | No. | P4Ch CFA Approach | No. | Direct CFA Filters |
|---|---|---|---|---|---|
| 1 | AH | 7 | P4Ch NLM → AH | 11 | CFA NLM → AH |
| 2 | AH → NLM | 8 | P4Ch NLM → SSDD | 12 | CFA NLM → SSDD |
| 3 | AH → CBM3D | 9 | P4Ch BM3D → AH | 13 | CFA BM3D → AH |
| 4 | SSDD | 10 | P4Ch BM3D → SSDD | 14 | CFA BM3D → SSDD |
| 5 | SSDD → NLM | | | | |
| 6 | SSDD → CBM3D | | | | |
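The 14 scenarios in this table form a small grid: two demosaicing algorithms (AH and SSDD), either used alone or followed by an RGB denoiser (NLM or CBM3D), or preceded by an NLM or BM3D denoiser applied to a pseudo four-channel (P4Ch) image or directly to the CFA image. A sketch that regenerates the numbering from that structure; the step names are plain labels here, not calls into any library:

```python
DEMOSAICKERS = ("AH", "SSDD")


def filtering_scenarios():
    """Return the 14 scenarios as ordered lists of processing steps."""
    scenarios = []
    # 1-6: demosaic first, then optionally denoise the RGB image.
    for dm in DEMOSAICKERS:
        scenarios.append([dm])
        scenarios.append([dm, "NLM"])
        scenarios.append([dm, "CBM3D"])
    # 7-10: denoise a pseudo four-channel image, then demosaic.
    # 11-14: denoise the CFA mosaic directly, then demosaic.
    for prefix in ("P4Ch", "CFA"):
        for dn in ("NLM", "BM3D"):
            for dm in DEMOSAICKERS:
                scenarios.append([f"{prefix} {dn}", dm])
    return scenarios


for no, steps in enumerate(filtering_scenarios(), start=1):
    print(no, " → ".join(steps))
```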

| Method | All ISO PSNR (dB) | All ISO σ | ISO100 PSNR (dB) | ISO100 σ | ISO800 PSNR (dB) | ISO800 σ | ISO1600 PSNR (dB) | ISO1600 σ | ISO3200 PSNR (dB) | ISO3200 σ |
|---|---|---|---|---|---|---|---|---|---|---|
| AH | 22.26 | 6.67 | 30.75 | 4.19 | 23.30 | 3.31 | 19.30 | 3.61 | 15.68 | 3.40 |
| AH → NLM | 28.91 | 4.52 | 32.15 | 4.77 | 29.88 | 3.65 | 28.10 | 3.37 | 25.50 | 3.29 |
| AH → CBM3D | 28.84 | 4.54 | 32.42 | 5.19 | 30.12 | 3.52 | 27.88 | 2.72 | 24.95 | 2.27 |
| SSDD | 24.22 | 7.01 | 33.30 | 3.92 | 25.55 | 3.18 | 21.10 | 3.55 | 16.95 | 3.40 |
| SSDD → NLM | 29.69 | 4.84 | 33.82 | 4.37 | 30.60 | 3.83 | 28.53 | 3.66 | 25.79 | 3.51 |
| SSDD → CBM3D | 30.28 | 4.86 | 34.70 | 4.73 | 31.50 | 3.64 | 29.11 | 3.09 | 25.81 | 2.60 |
| P4Ch NLM → AH | 28.75 | 4.46 | 31.59 | 4.81 | 29.69 | 3.89 | 28.12 | 3.49 | 25.59 | 3.15 |
| P4Ch NLM → SSDD | 29.74 | 4.68 | 33.43 | 4.38 | 30.64 | 3.86 | 28.82 | 3.62 | 26.09 | 3.43 |
| P4Ch BM3D → AH | 29.58 | 4.45 | 31.98 | 5.02 | 30.45 | 3.88 | 29.07 | 3.52 | 26.81 | 3.51 |
| P4Ch BM3D → SSDD | 30.41 | 4.65 | 33.79 | 4.60 | 31.23 | 3.86 | 29.55 | 3.60 | 27.05 | 3.65 |
| CFA NLM → AH | 28.03 | 4.50 | 31.36 | 4.92 | 28.98 | 3.72 | 27.18 | 3.22 | 24.61 | 2.84 |
| CFA NLM → SSDD | 29.28 | 4.70 | 33.40 | 4.41 | 30.21 | 3.66 | 28.15 | 3.35 | 25.36 | 3.12 |
| CFA BM3D → AH | 29.01 | 4.46 | 31.80 | 4.97 | 29.90 | 3.65 | 28.37 | 3.28 | 25.99 | 3.57 |
| CFA BM3D → SSDD | 29.99 | 4.74 | 33.72 | 4.51 | 30.89 | 3.72 | 29.01 | 3.48 | 26.32 | 3.83 |
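The PSNR values in this table compare each filtered image with its ground truth. A minimal sketch of the usual definition, PSNR = 10·log10(peak² / MSE), assuming 8-bit data with a peak value of 255 (an assumption; `peak` is a parameter). Because every ISO level contributes the same number of results (48 ground truth images × 10 noise realizations), the All ISO mean is simply the average of the four per-ISO means, which the last lines check for the AH row:

```python
import math


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images
    given as flat sequences of samples (all channels concatenated)."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Per-ISO means of the AH row (ISO100, ISO800, ISO1600, ISO3200).
ah_per_iso = [30.75, 23.30, 19.30, 15.68]
print(sum(ah_per_iso) / len(ah_per_iso))  # ≈ 22.26, the All ISO value for AH
```

The same averaging does not hold for the σ columns: the All ISO spread also includes the differences between noise levels, which is why it is larger than any per-ISO value.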

| Method | All ISO SSIM | All ISO σ | ISO100 SSIM | ISO100 σ | ISO800 SSIM | ISO800 σ | ISO1600 SSIM | ISO1600 σ | ISO3200 SSIM | ISO3200 σ |
|---|---|---|---|---|---|---|---|---|---|---|
| AH | 0.477 | 0.288 | 0.873 | 0.065 | 0.500 | 0.166 | 0.332 | 0.171 | 0.201 | 0.132 |
| AH → NLM | 0.799 | 0.133 | 0.902 | 0.067 | 0.827 | 0.095 | 0.773 | 0.121 | 0.694 | 0.141 |
| AH → CBM3D | 0.793 | 0.120 | 0.912 | 0.066 | 0.844 | 0.067 | 0.766 | 0.067 | 0.648 | 0.077 |
| SSDD | 0.530 | 0.288 | 0.907 | 0.051 | 0.580 | 0.162 | 0.394 | 0.181 | 0.237 | 0.147 |
| SSDD → NLM | 0.809 | 0.139 | 0.912 | 0.063 | 0.835 | 0.103 | 0.782 | 0.133 | 0.709 | 0.150 |
| SSDD → CBM3D | 0.825 | 0.112 | 0.930 | 0.056 | 0.868 | 0.069 | 0.808 | 0.075 | 0.694 | 0.079 |
| P4Ch NLM → AH | 0.795 | 0.121 | 0.897 | 0.069 | 0.829 | 0.082 | 0.773 | 0.095 | 0.679 | 0.113 |
| P4Ch NLM → SSDD | 0.812 | 0.121 | 0.912 | 0.061 | 0.842 | 0.084 | 0.790 | 0.100 | 0.705 | 0.122 |
| P4Ch BM3D → AH | 0.826 | 0.114 | 0.899 | 0.071 | 0.845 | 0.089 | 0.807 | 0.106 | 0.753 | 0.130 |
| P4Ch BM3D → SSDD | 0.835 | 0.117 | 0.913 | 0.063 | 0.853 | 0.091 | 0.814 | 0.109 | 0.759 | 0.134 |
| CFA NLM → AH | 0.763 | 0.137 | 0.890 | 0.073 | 0.804 | 0.089 | 0.734 | 0.105 | 0.625 | 0.114 |
| CFA NLM → SSDD | 0.789 | 0.134 | 0.909 | 0.062 | 0.825 | 0.090 | 0.761 | 0.111 | 0.663 | 0.123 |
| CFA BM3D → AH | 0.823 | 0.111 | 0.901 | 0.071 | 0.849 | 0.083 | 0.804 | 0.096 | 0.739 | 0.118 |
| CFA BM3D → SSDD | 0.835 | 0.113 | 0.917 | 0.061 | 0.858 | 0.085 | 0.814 | 0.101 | 0.750 | 0.124 |
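SSIM equals 1 for identical images and decreases as luminance, contrast or structure diverge. The standard SSIM of Wang et al. (2004) evaluates the expression below in local (typically 11×11 Gaussian-weighted) windows and averages the results; the sketch uses a single global window as a simplification, with the usual constants K1 = 0.01 and K2 = 0.03 and an assumed data range of 255:

```python
def ssim_global(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """SSIM index computed over one window covering the whole signal.

    Simplification: the standard SSIM averages this expression over many
    local windows instead of computing it once globally.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    )


x = [float(v) for v in range(0, 256, 5)]
# Add an alternating +/-20 disturbance to the same signal.
y = [v + (20.0 if i % 2 else -20.0) for i, v in enumerate(x)]
print(ssim_global(x, x))  # ≈ 1.0
print(ssim_global(x, y))  # below 1.0
```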

| No. | Filter | All ISO | ISO100 | ISO800 | ISO1600 | ISO3200 |
|---|---|---|---|---|---|---|
| 1 | AH | 0 | 0 | 0 | 0 | 0 |
| 2 | AH → NLM | 0 | 1 | 1 | 0 | 0 |
| 3 | AH → CBM3D | 0 | 2 | 0 | 0 | 1 |
| 4 | SSDD | 0 | 0 | 0 | 0 | 0 |
| 5 | SSDD → NLM | 4 | 5 | 8 | 2 | 0 |
| 6 | SSDD → CBM3D | 21 | 37 | 26 | 14 | 6 |
| 7 | P4Ch NLM → AH | 0 | 0 | 0 | 0 | 0 |
| 8 | P4Ch NLM → SSDD | 2 | 0 | 1 | 2 | 2 |
| 9 | P4Ch BM3D → AH | 2 | 1 | 0 | 2 | 2 |
| 10 | P4Ch BM3D → SSDD | 15 | 2 | 9 | 20 | 26 |
| 11 | CFA NLM → AH | 0 | 0 | 0 | 0 | 0 |
| 12 | CFA NLM → SSDD | 0 | 0 | 0 | 0 | 0 |
| 13 | CFA BM3D → AH | 0 | 0 | 0 | 0 | 1 |
| 14 | CFA BM3D → SSDD | 6 | 0 | 3 | 8 | 10 |
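Each per-ISO column of this table sums to 48, the number of ground truth images, so the table can be read as counting, per image, which scenario scored best (this reading is an assumption; the ranking criterion is not given in the table itself). A short sketch that extracts the leading scenario per column makes the trend explicit: SSDD → CBM3D leads at ISO100 and ISO800, while P4Ch BM3D → SSDD leads at ISO1600 and ISO3200.

```python
COLUMNS = ("All ISO", "ISO100", "ISO800", "ISO1600", "ISO3200")

# Values copied from the table above.
COUNTS = {
    "AH": (0, 0, 0, 0, 0),
    "AH → NLM": (0, 1, 1, 0, 0),
    "AH → CBM3D": (0, 2, 0, 0, 1),
    "SSDD": (0, 0, 0, 0, 0),
    "SSDD → NLM": (4, 5, 8, 2, 0),
    "SSDD → CBM3D": (21, 37, 26, 14, 6),
    "P4Ch NLM → AH": (0, 0, 0, 0, 0),
    "P4Ch NLM → SSDD": (2, 0, 1, 2, 2),
    "P4Ch BM3D → AH": (2, 1, 0, 2, 2),
    "P4Ch BM3D → SSDD": (15, 2, 9, 20, 26),
    "CFA NLM → AH": (0, 0, 0, 0, 0),
    "CFA NLM → SSDD": (0, 0, 0, 0, 0),
    "CFA BM3D → AH": (0, 0, 0, 0, 1),
    "CFA BM3D → SSDD": (6, 0, 3, 8, 10),
}


def top_scenario(column):
    """Name of the scenario with the largest count in the given column."""
    idx = COLUMNS.index(column)
    return max(COUNTS, key=lambda name: COUNTS[name][idx])


def column_total(column):
    """Sum of all counts in the given column."""
    idx = COLUMNS.index(column)
    return sum(row[idx] for row in COUNTS.values())


for col in COLUMNS:
    print(f"{col}: leader = {top_scenario(col)}, total = {column_total(col)}")
```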

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Szczepański, M.; Giemza, F. Noise Removal in the Developing Process of Digital Negatives. Sensors 2020, 20, 902. https://doi.org/10.3390/s20030902
