A Hybrid Speller Design Using Eye Tracking and SSVEP Brain–Computer Interface

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

2.1.1. Proposed Hybrid SSVEP- and Eye-Tracking-Based Speller

2.1.2. Participants

2.1.3. Experimental Procedure

2.1.4. Offline Experiment

2.1.5. Online Experiment

2.1.6. Control Conditions

Basic Speller

Hybrid EEG-Eye Tracking

2.1.7. Questionnaire

2.1.8. EEG Recordings

2.1.9. Eye-Tracker Recordings

2.2. Methods

2.2.1. Sub-Matrix Detection

2.2.2. SSVEP Detection

2.2.3. Performance Evaluation

3. Results

3.1. Offline Data Analysis

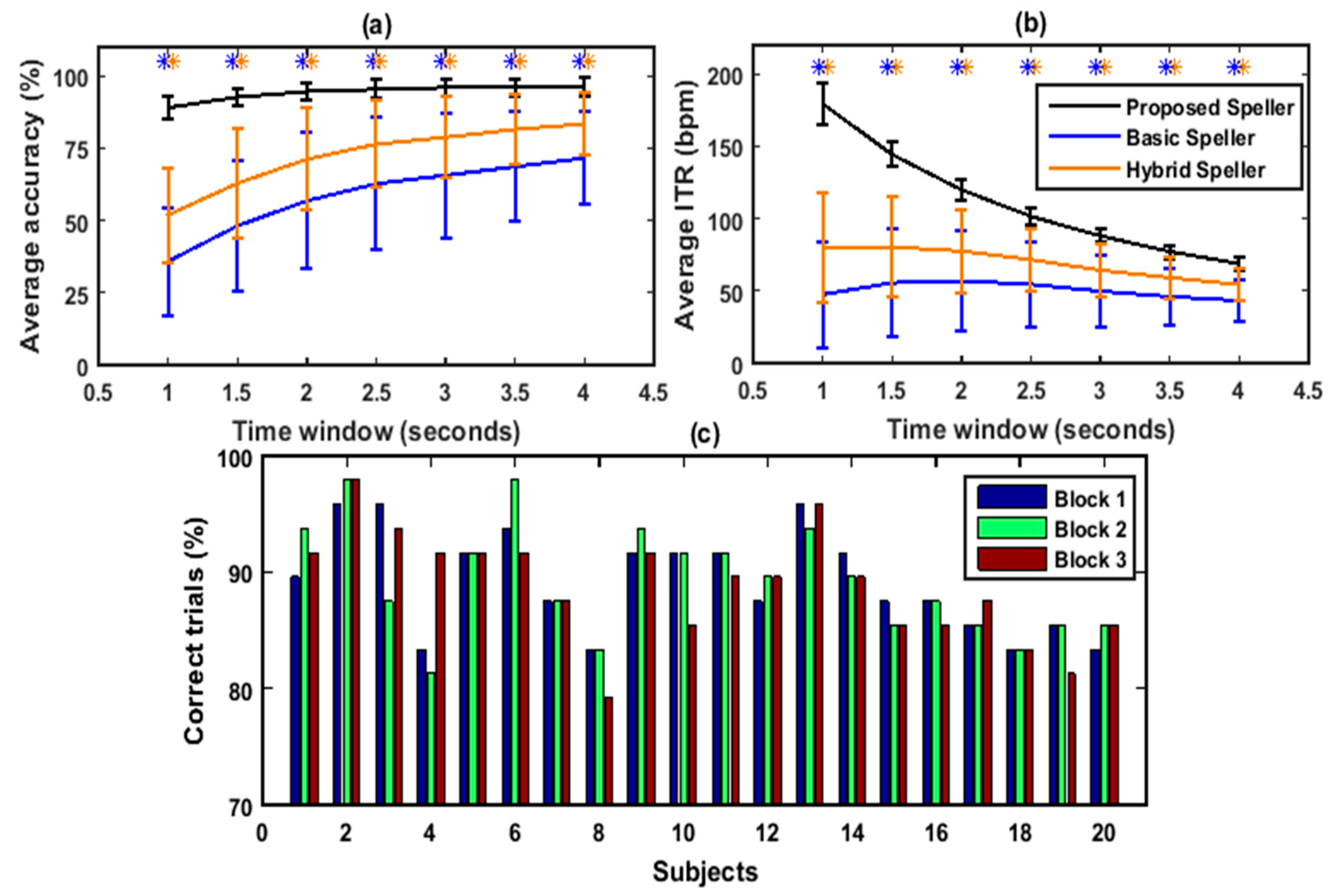

3.2. Online Data Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Availability of Data and Materials

Ethics Approval and Consent to Participate

References

1. Vidal, J.J. Toward direct brain-computer communication. Annu. Rev. Biophys. Bioeng. 1973, 2, 157–180.

2. Vidal, J.J. Real-time detection of brain events in EEG. Proc. IEEE 1977, 65, 633–641.

3. Bozinovski, S.; Sestakov, M.; Bozinovska, L. Using EEG alpha rhythm to control a mobile robot. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, New Orleans, LA, USA, 4–7 November 1988; pp. 1515–1516.

4. Farwell, L.A.; Donchin, E. Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523.

5. Bozinovski, S.; Bozinovski, A. Mental states, EEG manifestations, and mentally emulated digital circuits for brain-robot interaction. IEEE Trans. Auton. Ment. Dev. 2015, 7, 39–51.

6. Li, Y.; Pan, J.; Long, J.; Yu, T.; Wang, F.; Yu, Z.; Wu, W. Multimodal BCIs: Target detection, multidimensional control, and awareness evaluation in patients with disorder of consciousness. Proc. IEEE 2016, 104, 332–352.

7. Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain–computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

8. Xie, J.; Xu, G.; Luo, A.; Li, M.; Zhang, S.; Han, C.; Yan, W. The Role of Visual Noise in Influencing Mental Load and Fatigue in a Steady-State Motion Visual Evoked Potential-Based Brain-Computer Interface. Sensors 2017, 17, 1873.

9. Ahn, M.; Cho, H.; Ahn, S.; Jun, S.C. User’s Self-Prediction of Performance in Motor Imagery Brain–Computer Interface. Front. Hum. Neurosci. 2018, 12, 59.

10. Liu, Y.-H.; Huang, S.; Huang, Y.-D. Motor Imagery EEG Classification for Patients with Amyotrophic Lateral Sclerosis Using Fractal Dimension and Fisher’s Criterion-Based Channel Selection. Sensors 2017, 17, 1557.

11. Lazarou, I.; Nikolopoulos, S.; Petrantonakis, P.C.; Kompatsiaris, I.; Tsolaki, M. EEG-Based Brain–Computer Interfaces for Communication and Rehabilitation of People with Motor Impairment: A Novel Approach of the 21st Century. Front. Hum. Neurosci. 2018, 12, 14.

12. Shin, J.; Kwon, J.; Im, C.-H. A Ternary Hybrid EEG-NIRS Brain-Computer Interface for the Classification of Brain Activation Patterns during Mental Arithmetic, Motor Imagery, and Idle State. Front. Neuroinform. 2018, 12, 5.

13. Kamran, M.A.; Mannan, M.M.N.; Jeong, M.Y. Drowsiness, Fatigue and Poor Sleep’s Causes and Detection: A Comprehensive Study. IEEE Access 2019, 7, 167172–167186.

14. Amiri, S.; Fazel-Rezai, R.; Asadpour, V. A review of hybrid brain-computer interface systems. Adv. Hum.-Comput. Interact. 2013, 2013, 1.

15. Floriano, A.; F Diez, P.; Freire Bastos-Filho, T. Evaluating the Influence of Chromatic and Luminance Stimuli on SSVEPs from Behind-the-Ears and Occipital Areas. Sensors 2018, 18, 615.

16. Blankertz, B.; Lemm, S.; Treder, M.; Haufe, S.; Müller, K.-R. Single-trial analysis and classification of ERP components—A tutorial. NeuroImage 2011, 56, 814–825.

17. Bozinovska, L.; Bozinovski, S.; Stojanov, G. Electroexpectogram: Experimental Design and Algorithms. In Proceedings of the 1992 International Biomedical Engineering Days, Istanbul, Turkey, 18–20 August 1992; pp. 55–60.

18. Birbaumer, N.; Ghanayim, N.; Hinterberger, T.; Iversen, I.; Kotchoubey, B.; Kübler, A.; Perelmouter, J.; Taub, E.; Flor, H. A spelling device for the paralysed. Nature 1999, 398, 297.

19. Xu, M.; Chen, L.; Zhang, L.; Qi, H.; Ma, L.; Tang, J.; Wan, B.; Ming, D. A visual parallel-BCI speller based on the time–frequency coding strategy. J. Neural Eng. 2014, 11, 026014.

20. Nakanishi, M.; Wang, Y.; Wang, Y.-T.; Jung, T.-P. A comparison study of canonical correlation analysis based methods for detecting steady-state visual evoked potentials. PLoS ONE 2015, 10, e0140703.

21. Won, D.-O.; Hwang, H.-J.; Dähne, S.; Müller, K.-R.; Lee, S.-W. Effect of higher frequency on the classification of steady-state visual evoked potentials. J. Neural Eng. 2015, 13, 016014.

22. Yin, E.; Zeyl, T.; Saab, R.; Chau, T.; Hu, D.; Zhou, Z. A hybrid brain–computer interface based on the fusion of P300 and SSVEP scores. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 23, 693–701.

23. Wang, Y.; Chen, X.; Gao, X.; Gao, S. A Benchmark Dataset for SSVEP-Based Brain–Computer Interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1746–1752.

24. Won, D.-O.; Hwang, H.-J.; Kim, D.-M.; Müller, K.-R.; Lee, S.-W. Motion-Based Rapid Serial Visual Presentation for Gaze-Independent Brain-Computer Interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 334–343.

25. Cecotti, H. A self-paced and calibration-less SSVEP-based brain–computer interface speller. IEEE Trans. Neural Syst. Rehabil. Eng. 2010, 18, 127–133.

26. Kimura, Y.; Tanaka, T.; Higashi, H.; Morikawa, N. SSVEP-based brain–computer interfaces using FSK-modulated visual stimuli. IEEE Trans. Biomed. Eng. 2013, 60, 2831–2838.

27. Chen, X.; Chen, Z.; Gao, S.; Gao, X. A high-ITR SSVEP-based BCI speller. Brain-Comput. Interfaces 2014, 1, 181–191.

28. Chen, X.; Wang, Y.; Nakanishi, M.; Gao, X.; Jung, T.-P.; Gao, S. High-speed spelling with a noninvasive brain–computer interface. Proc. Natl. Acad. Sci. USA 2015, 112, E6058–E6067.

29. Nakanishi, M.; Wang, Y.; Chen, X.; Wang, Y.-T.; Gao, X.; Jung, T.-P. Enhancing Detection of SSVEPs for a high-speed brain speller using task-related component analysis. IEEE Trans. Biomed. Eng. 2018, 65, 104–112.

30. Volosyak, I. SSVEP-based Bremen–BCI interface—boosting information transfer rates. J. Neural Eng. 2011, 8, 036020.

31. Spüler, M.; Rosenstiel, W.; Bogdan, M. Online adaptation of a c-VEP brain-computer interface (BCI) based on error-related potentials and unsupervised learning. PLoS ONE 2012, 7, e51077.

32. Gembler, F.; Stawicki, P.; Volosyak, I. Autonomous parameter adjustment for SSVEP-based BCIs with a novel BCI wizard. Front. Neurosci. 2015, 9, 474.

33. Bin, G.; Gao, X.; Yan, Z.; Hong, B.; Gao, S. An online multi-channel SSVEP-based brain–computer interface using a canonical correlation analysis method. J. Neural Eng. 2009, 6, 046002.

34. Nakanishi, M.; Wang, Y.; Wang, Y.-T.; Mitsukura, Y.; Jung, T.-P. Generating visual flickers for eliciting robust steady-state visual evoked potentials at flexible frequencies using monitor refresh rate. PLoS ONE 2014, 9, e99235.

35. Nakanishi, M.; Wang, Y.; Wang, Y.-T.; Mitsukura, Y.; Jung, T.-P. A high-speed brain speller using steady-state visual evoked potentials. Int. J. Neural Syst. 2014, 24, 1450019.

36. Yin, E.; Zhou, Z.; Jiang, J.; Yu, Y.; Hu, D. A dynamically optimized SSVEP brain–computer interface (BCI) speller. IEEE Trans. Biomed. Eng. 2015, 62, 1447–1456.

37. Maye, A.; Zhang, D.; Engel, A.K. Utilizing retinotopic mapping for a multi-target SSVEP BCI with a single flicker frequency. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1026–1036.

38. Chen, X.; Wang, Y.; Zhang, S.; Gao, S.; Hu, Y.; Gao, X. A novel stimulation method for multi-class SSVEP-BCI using intermodulation frequencies. J. Neural Eng. 2017, 14, 026013.

39. Andersen, S.K.; Müller, M.M. Driving steady-state visual evoked potentials at arbitrary frequencies using temporal interpolation of stimulus presentation. BMC Neurosci. 2015, 16, 95.

40. Müller-Putz, G.R.; Scherer, R.; Brauneis, C.; Pfurtscheller, G. Steady-state visual evoked potential (SSVEP)-based communication: Impact of harmonic frequency components. J. Neural Eng. 2005, 2, 123.

41. Lee, P.-L.; Yeh, C.-L.; Cheng, J.Y.-S.; Yang, C.-Y.; Lan, G.-Y. An SSVEP-based BCI using high duty-cycle visual flicker. IEEE Trans. Biomed. Eng. 2011, 58, 3350–3359.

42. Bakardjian, H.; Tanaka, T.; Cichocki, A. Optimization of SSVEP brain responses with application to eight-command Brain–Computer Interface. Neurosci. Lett. 2010, 469, 34–38.

43. Sakurada, T.; Kawase, T.; Komatsu, T.; Kansaku, K. Use of high-frequency visual stimuli above the critical flicker frequency in a SSVEP-based BMI. Clin. Neurophysiol. 2015, 126, 1972–1978.

44. Lee, P.-L.; Sie, J.-J.; Liu, Y.-J.; Wu, C.-H.; Lee, M.-H.; Shu, C.-H.; Li, P.-H.; Sun, C.-W.; Shyu, K.-K. An SSVEP-actuated brain computer interface using phase-tagged flickering sequences: A cursor system. Ann. Biomed. Eng. 2010, 38, 2383–2397.

45. Jia, C.; Gao, X.; Hong, B.; Gao, S. Frequency and phase mixed coding in SSVEP-based brain–computer interface. IEEE Trans. Biomed. Eng. 2011, 58, 200–206.

46. Chien, Y.-Y.; Lin, F.-C.; Zao, J.K.; Chou, C.-C.; Huang, Y.-P.; Kuo, H.-Y.; Wang, Y.; Jung, T.-P.; Shieh, H.-P.D. Polychromatic SSVEP stimuli with subtle flickering adapted to brain-display interactions. J. Neural Eng. 2017, 14, 016018.

47. Shyu, K.-K.; Lee, P.-L.; Liu, Y.-J.; Sie, J.-J. Dual-frequency steady-state visual evoked potential for brain computer interface. Neurosci. Lett. 2010, 483, 28–31.

48. Hwang, H.-J.; Kim, D.H.; Han, C.-H.; Im, C.-H. A new dual-frequency stimulation method to increase the number of visual stimuli for multi-class SSVEP-based brain–computer interface (BCI). Brain Res. 2013, 1515, 66–77.

49. Wang, Y.; Jung, T.-P. Visual stimulus design for high-rate SSVEP BCI. Electron. Lett. 2010, 46, 1057–1058.

50. Zhang, Y.; Xu, P.; Liu, T.; Hu, J.; Zhang, R.; Yao, D. Multiple frequencies sequential coding for SSVEP-based brain-computer interface. PLoS ONE 2012, 7, e29519.

51. Lopez-Gordo, M.; Prieto, A.; Pelayo, F.; Morillas, C. Use of phase in brain–computer interfaces based on steady-state visual evoked potentials. Neural Process. Lett. 2010, 32, 1–9.

52. Chang, M.H.; Baek, H.J.; Lee, S.M.; Park, K.S. An amplitude-modulated visual stimulation for reducing eye fatigue in SSVEP-based brain–computer interfaces. Clin. Neurophysiol. 2014, 125, 1380–1391.

53. Chen, X.; Chen, Z.; Gao, S.; Gao, X. Brain–computer interface based on intermodulation frequency. J. Neural Eng. 2013, 10, 066009.

54. Zhang, X.; Xu, G.; Xie, J.; Zhang, X. Brain response to luminance-based and motion-based stimulation using inter-modulation frequencies. PLoS ONE 2017, 12, e0188073.

55. Shyu, K.-K.; Chiu, Y.-J.; Lee, P.-L.; Liang, J.-M.; Peng, S.-H. Adaptive SSVEP-based BCI system with frequency and pulse duty-cycle stimuli tuning design. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 697–703.

56. Diez, P.F.; Müller, S.M.T.; Mut, V.A.; Laciar, E.; Avila, E.; Bastos-Filho, T.F.; Sarcinelli-Filho, M. Commanding a robotic wheelchair with a high-frequency steady-state visual evoked potential based brain–computer interface. Med. Eng. Phys. 2013, 35, 1155–1164.

57. Oralhan, Z.; Tokmakci, M. The Effect of Duty Cycle and Brightness Variation of Visual Stimuli on SSVEP in Brain Computer Interface Systems. IETE J. Res. 2016, 62, 795–803.

58. Mouli, S.; Palaniappan, R. Toward a reliable PWM-based light-emitting diode visual stimulus for improved SSVEP response with minimal visual fatigue. J. Eng. 2017, 1.

59. Xu, M.; Qi, H.; Wan, B.; Yin, T.; Liu, Z.; Ming, D. A hybrid BCI speller paradigm combining P300 potential and the SSVEP blocking feature. J. Neural Eng. 2013, 10, 026001.

60. Yin, E.; Zhou, Z.; Jiang, J.; Chen, F.; Liu, Y.; Hu, D. A novel hybrid BCI speller based on the incorporation of SSVEP into the P300 paradigm. J. Neural Eng. 2013, 10, 026012.

61. Wang, M.; Daly, I.; Allison, B.Z.; Jin, J.; Zhang, Y.; Chen, L.; Wang, X. A new hybrid BCI paradigm based on P300 and SSVEP. J. Neurosci. Methods 2015, 244, 16–25.

62. Chang, M.H.; Lee, J.S.; Heo, J.; Park, K.S. Eliciting dual-frequency SSVEP using a hybrid SSVEP-P300 BCI. J. Neurosci. Methods 2016, 258, 104–113.

63. Yin, E.; Zhou, Z.; Jiang, J.; Chen, F.; Liu, Y.; Hu, D. A speedy hybrid BCI spelling approach combining P300 and SSVEP. IEEE Trans. Biomed. Eng. 2014, 61, 473–483.

64. Lin, K.; Cinetto, A.; Wang, Y.; Chen, X.; Gao, S.; Gao, X. An online hybrid BCI system based on SSVEP and EMG. J. Neural Eng. 2016, 13, 026020.

65. Suefusa, K.; Tanaka, T. A comparison study of visually stimulated brain–computer and eye-tracking interfaces. J. Neural Eng. 2017, 14, 036009.

66. Bozinovski, S. Mobile robot trajectory control: From fixed rails to direct bioelectric control. In Proceedings of the IEEE International Workshop on Intelligent Motion Control, Istanbul, Turkey, 20–22 August 1990; pp. 463–467.

67. Al-Rahayfeh, A.; Faezipour, M. Eye tracking and head movement detection: A state-of-art survey. IEEE J. Transl. Eng. Health Med. 2013, 1, 2100212.

68. Caligari, M.; Godi, M.; Guglielmetti, S.; Franchignoni, F.; Nardone, A. Eye tracking communication devices in amyotrophic lateral sclerosis: Impact on disability and quality of life. Amyotroph. Lateral Scler. Front. Degener. 2013, 14, 546–552.

69. Hwang, C.-S.; Weng, H.-H.; Wang, L.-F.; Tsai, C.-H.; Chang, H.-T. An eye-tracking assistive device improves the quality of life for ALS patients and reduces the caregivers’ burden. J. Mot. Behav. 2014, 46, 233–238.

70. Majaranta, P.; Bulling, A. Eye tracking and eye-based human–computer interaction. In Advances in Physiological Computing; Springer: London, UK, 2014; pp. 39–65.

71. Käthner, I.; Kübler, A.; Halder, S. Comparison of eye tracking, electrooculography and an auditory brain-computer interface for binary communication: A case study with a participant in the locked-in state. J. Neuroeng. Rehabil. 2015, 12, 76.

72. Pasqualotto, E.; Matuz, T.; Federici, S.; Ruf, C.A.; Bartl, M.; Olivetti Belardinelli, M.; Birbaumer, N.; Halder, S. Usability and workload of access technology for people with severe motor impairment: A comparison of brain-computer interfacing and eye tracking. Neurorehabilit. Neural Repair 2015, 29, 950–957.

73. Popa, L.; Selejan, O.; Scott, A.; Mureşanu, D.F.; Balea, M.; Rafila, A. Reading beyond the glance: Eye tracking in neurosciences. Neurol. Sci. 2015, 36, 683–688.

74. Mannan, M.M.N.; Kim, S.; Jeong, M.Y.; Kamran, M.A. Hybrid EEG—Eye tracker: Automatic identification and removal of eye movement and blink artifacts from electroencephalographic signal. Sensors 2016, 16, 241.

75. Mannan, M.M.N.; Jeong, M.Y.; Kamran, M.A. Hybrid ICA—Regression: Automatic identification and removal of ocular artifacts from electroencephalographic signals. Front. Hum. Neurosci. 2016, 10, 193.

76. Yong, X.; Fatourechi, M.; Ward, R.K.; Birch, G.E. The design of a point-and-click system by integrating a self-paced brain–computer interface with an Eye-tracker. IEEE J. Emerg. Sel. Top. Circuits Syst. 2011, 1, 590–602.

77. Yong, X.; Fatourechi, M.; Ward, R.K.; Birch, G.E. Automatic artefact removal in a self-paced hybrid brain-computer interface system. J. Neuroeng. Rehabil. 2012, 9, 50.

78. McMullen, D.P.; Hotson, G.; Katyal, K.D.; Wester, B.A.; Fifer, M.S.; McGee, T.G.; Harris, A.; Johannes, M.S.; Vogelstein, R.J.; Ravitz, A.D. Demonstration of a semi-autonomous hybrid brain–machine interface using human intracranial EEG, eye tracking, and computer vision to control a robotic upper limb prosthetic. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 784–796.

79. Stawicki, P.; Gembler, F.; Rezeika, A.; Volosyak, I. A novel hybrid mental spelling application based on eye tracking and SSVEP-based BCI. Brain Sci. 2017, 7, 35.

80. McCullagh, P.; Brennan, C.; Lightbody, G.; Galway, L.; Thompson, E.; Martin, S. An SSVEP and eye tracking hybrid BNCI: Potential beyond communication and control. In Proceedings of the International Conference on Augmented Cognition, Toronto, ON, Canada, 17–22 July 2016; pp. 69–78.

81. Lim, J.-H.; Lee, J.-H.; Hwang, H.-J.; Kim, D.H.; Im, C.-H. Development of a hybrid mental spelling system combining SSVEP-based brain–computer interface and webcam-based eye tracking. Biomed. Signal Process. Control 2015, 21, 99–104.

82. Cao, T.; Wan, F.; Wong, C.M.; da Cruz, J.N.; Hu, Y. Objective evaluation of fatigue by EEG spectral analysis in steady-state visual evoked potential-based brain-computer interfaces. Biomed. Eng. Online 2014, 13, 28.

83. Yuan, P.; Chen, X.; Wang, Y.; Gao, X.; Gao, S. Enhancing performances of SSVEP-based brain–computer interfaces via exploiting inter-subject information. J. Neural Eng. 2015, 12, 046006.

84. Chen, X.; Wang, Y.; Gao, S.; Jung, T.-P.; Gao, X. Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain–computer interface. J. Neural Eng. 2015, 12, 046008.

85. Tian, Y.; Li, F.; Xu, P.; Yuan, Z.; Zhao, D.; Zhang, H. Combining canonical correlation analysis and infinite reference for frequency recognition of steady-state visual evoked potential recordings: A comparison with periodogram method. Bio-Med Mater. Eng. 2014, 24, 2901–2908.

86. Manyakov, N.V.; Chumerin, N.; Van Hulle, M.M. Multichannel decoding for phase-coded SSVEP brain–computer interface. Int. J. Neural Syst. 2012, 22, 1250022.

87. Carvalho, S.N.; Costa, T.B.; Uribe, L.F.; Soriano, D.C.; Yared, G.F.; Coradine, L.C.; Attux, R. Comparative analysis of strategies for feature extraction and classification in SSVEP BCIs. Biomed. Signal Process. Control 2015, 21, 34–42.

88. Lin, Z.; Zhang, C.; Wu, W.; Gao, X. Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Trans. Biomed. Eng. 2006, 53, 2610–2614.

89. Lim, J.H.; Kim, Y.W.; Lee, J.H.; An, K.O.; Hwang, H.J.; Cha, H.S.; Han, C.H.; Im, C.H. An emergency call system for patients in locked-in state using an SSVEP-based brain switch. Psychophysiology 2017, 54, 1632–1643.

90. Kramberger, I.; Donaj, G. Binocular Phase-Coded Visual Stimuli for SSVEP-Based BCI. IEEE Access 2019, 7, 48912–48922.

91. Kwak, N.-S.; Müller, K.-R.; Lee, S.-W. A lower limb exoskeleton control system based on steady state visual evoked potentials. J. Neural Eng. 2015, 12, 056009.

92. Muller-Putz, G.R.; Pfurtscheller, G. Control of an electrical prosthesis with an SSVEP-based BCI. IEEE Trans. Biomed. Eng. 2008, 55, 361–364.

93. Martinez, P.; Bakardjian, H.; Cichocki, A. Fully online multicommand brain-computer interface with visual neurofeedback using SSVEP paradigm. Comput. Intell. Neurosci. 2007, 2007.

94. Min, B.-K.; Dähne, S.; Ahn, M.-H.; Noh, Y.-K.; Müller, K.-R. Decoding of top-down cognitive processing for SSVEP-controlled BMI. Sci. Rep. 2016, 6, 36267.

95. Liu, Q.; Chen, K.; Ai, Q.; Xie, S.Q. Recent development of signal processing algorithms for SSVEP-based brain computer interfaces. J. Med. Biol. Eng. 2014, 34, 299–309.

96. Zhang, Y.; Zhou, G.; Jin, J.; Wang, X.; Cichocki, A. Frequency recognition in SSVEP-based BCI using multiset canonical correlation analysis. Int. J. Neural Syst. 2014, 24, 1450013.

97. Carvalho, S.N.; Costa, T.B.; Uribe, L.F.; Soriano, D.C.; Almeida, S.R.; Min, L.L.; Castellano, G.; Attux, R. Effect of the combination of different numbers of flickering frequencies in an SSVEP-BCI for healthy volunteers and stroke patients. In Proceedings of the 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, France, 22–24 April 2015; pp. 78–81.

98. Majaranta, P.; Räihä, K.-J. Twenty years of eye typing: Systems and design issues. In Proceedings of the 2002 Symposium on Eye Tracking Research & Applications, New Orleans, LA, USA, 25–27 March 2002; pp. 15–22.

99. Dybdal, M.L.; Agustin, J.S.; Hansen, J.P. Gaze input for mobile devices by dwell and gestures. In Proceedings of the Symposium on Eye Tracking Research and Applications 2012, Santa Barbara, CA, USA, 28–30 March 2012; pp. 225–228.

100. Lee, H.C.; Lee, W.O.; Cho, C.W.; Gwon, S.Y.; Park, K.R.; Lee, H.; Cha, J. Remote gaze tracking system on a large display. Sensors 2013, 13, 13439–13463.

| Speller | Experience with SSVEP BCI: Yes | Experience with SSVEP BCI: No | Flickering Annoying: Low | Flickering Annoying: Medium | Flickering Annoying: High | Eye Fatigue: Low | Eye Fatigue: Medium | Eye Fatigue: High | Level of Tiredness: 1 | Level of Tiredness: 2 | Level of Tiredness: 3 | Level of Tiredness: 4 | Level of Tiredness: 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Proposed | 4 | 16 | 12 | 8 | 0 | 12 | 8 | 0 | 7 | 10 | 3 | 0 | 0 |
| Basic | | | 0 | 9 | 11 | 0 | 7 | 13 | 0 | 0 | 5 | 10 | 5 |
| Hybrid EEG and eye tracking | | | 0 | 8 | 12 | 0 | 8 | 12 | 0 | 0 | 4 | 11 | 5 |

| Sub | Classification Accuracy (%) | Information Transfer Rate (bpm) |
|---|---|---|
| 1 | 91.67 | 188.34 |
| 2 | 97.22 | 209.89 |
| 3 | 92.36 | 190.84 |
| 4 | 85.42 | 167.03 |
| 5 | 91.67 | 188.34 |
| 6 | 95.14 | 201.38 |
| 7 | 87.50 | 173.88 |
| 8 | 81.94 | 156.02 |
| 9 | 89.58 | 180.96 |
| 10 | 92.36 | 190.85 |
| 11 | 90.97 | 185.84 |
| 12 | 88.89 | 178.59 |
| 13 | 95.14 | 201.38 |
| 14 | 90.28 | 183.40 |
| 15 | 86.11 | 169.28 |
| 16 | 86.81 | 171.58 |
| 17 | 86.11 | 169.28 |
| 18 | 83.33 | 160.35 |
| 19 | 84.03 | 162.57 |
| 20 | 84.72 | 164.78 |
| Mean | 89.03 | 179.60 |
| SD | 4.224 | 14.728 |

| Sub | Training Session: Classification Accuracy (%) | Training Session: Information Transfer Rate (bpm) | Testing Session: Classification Accuracy (%) | Testing Session: Information Transfer Rate (bpm) |
|---|---|---|---|---|
| 1 | 89.58 | 180.97 | 88.19 | 176.21 |
| 2 | 95.13 | 201.38 | 95.13 | 201.38 |
| 3 | 90.97 | 185.84 | 92.36 | 190.85 |
| 4 | 87.50 | 173.88 | 86.81 | 171.57 |
| 5 | 92.36 | 190.85 | 93.06 | 193.42 |
| 6 | 95.83 | 204.15 | 95.83 | 204.15 |
| 7 | 88.19 | 176.22 | 89.58 | 180.97 |
| 8 | 86.80 | 171.57 | 86.11 | 169.28 |
| 9 | 92.36 | 190.85 | 93.06 | 193.42 |
| 10 | 93.75 | 196.02 | 93.06 | 193.42 |
| 11 | 88.89 | 178.58 | 91.67 | 188.33 |
| 12 | 91.67 | 188.33 | 92.36 | 190.85 |
| 13 | 95.83 | 204.15 | 96.53 | 206.98 |
| 14 | 88.89 | 178.58 | 90.97 | 185.84 |
| 15 | 85.42 | 167.02 | 87.50 | 173.88 |
| 16 | 90.97 | 185.84 | 90.28 | 183.39 |
| 17 | 85.42 | 167.02 | 86.81 | 171.57 |
| 18 | 86.81 | 171.57 | 86.11 | 169.29 |
| 19 | 82.64 | 158.19 | 84.03 | 162.57 |
| 20 | 85.42 | 167.02 | 87.50 | 173.88 |
| Mean | 89.72 | 181.90 | 90.35 | 184.06 |
| SD | 3.788 | 13.298 | 3.597 | 12.761 |

| Sub | Trial Length (s) (Gaze Shift + Stimulus) | Total No. of Trials (Correct/Incorrect) | Spelling Rate (cpm) | Information Transfer Rate (bpm) |
|---|---|---|---|---|
| 1 | 1.5 (0.5 + 1) | 45 (41/4) | 36.44 | 186.34 |
| 2 | 1.5 (0.5 + 1) | 45 (43/2) | 38.22 | 203.03 |
| 3 | 1.5 (0.5 + 1) | 45 (43/2) | 38.22 | 203.03 |
| 4 | 2.0 (1 + 1) | 45 (44/1) | 29.36 | 159.23 |
| 5 | 1.5 (0.5 + 1) | 45 (43/2) | 38.22 | 203.03 |
| 6 | 1.5 (0.5 + 1) | 45 (44/1) | 39.11 | 212.31 |
| 7 | 1.75 (0.5 + 1.25) | 45 (43/2) | 32.76 | 174.02 |
| 8 | 2.0 (1 + 1) | 45 (44/1) | 29.36 | 159.23 |
| 9 | 1.5 (0.5 + 1) | 45 (43/2) | 38.22 | 203.03 |
| 10 | 1.5 (0.5 + 1) | 45 (44/1) | 39.11 | 212.31 |
| 11 | 1.75 (0.5 + 1.25) | 45 (44/1) | 33.52 | 181.98 |
| 12 | 1.5 (0.5 + 1) | 45 (42/3) | 37.33 | 194.45 |
| 13 | 1.5 (0.5 + 1) | 45 (44/1) | 39.11 | 212.31 |
| 14 | 1.5 (0.5 + 1) | 45 (43/2) | 38.22 | 203.03 |
| 15 | 1.75 (0.5 + 1.25) | 45 (44/1) | 33.52 | 181.98 |
| 16 | 1.5 (0.5 + 1) | 45 (43/2) | 38.22 | 203.03 |
| 17 | 1.5 (0.5 + 1) | 45 (42/3) | 37.33 | 194.45 |
| 18 | 1.75 (0.5 + 1.25) | 45 (43/2) | 32.76 | 174.03 |
| 19 | 2.0 (1 + 1) | 45 (44/1) | 29.36 | 159.23 |
| 20 | 1.5 (0.5 + 1) | 45 (42/3) | 37.33 | 194.45 |
| Mean | - | - | 35.79 | 190.73 |
| SD | - | - | 3.47 | 17.849 |
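The accuracy, ITR and spelling-rate columns in the tables above are consistent with the widely used Wolpaw definition of ITR: B = log2 N + P log2 P + (1 − P) log2[(1 − P)/(N − 1)] bits per selection, scaled by 60/T for a trial length of T seconds. The Python sketch below is a minimal illustration under stated assumptions, not the authors' software: it assumes N = 48 targets (the NC value listed for the present study in the comparison table) and takes T as the gaze-shift plus stimulus time; the function names are hypothetical. Under these assumptions it reproduces, for example, subject 1's online values (41 of 45 correct at 1.5 s: 36.44 cpm and 186.34 bpm) and, with T = 1.5 s, subject 1's offline ITR (91.67% gives 188.34 bpm).

```python
import math


def wolpaw_bits_per_trial(p: float, n: int) -> float:
    """Bits per selection under the Wolpaw ITR definition.

    p: classification accuracy as a fraction in [0, 1]; n: number of targets.
    """
    if not 0.0 <= p <= 1.0 or n < 2:
        raise ValueError("expected 0 <= p <= 1 and n >= 2")
    bits = math.log2(n)
    if p > 0.0:  # p*log2(p) tends to 0 as p -> 0, so skip the term at p == 0
        bits += p * math.log2(p)
    if p < 1.0:  # errors assumed spread evenly over the other n - 1 targets
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def itr_bpm(p: float, n: int, trial_s: float) -> float:
    """ITR in bits per minute for a trial length of trial_s seconds."""
    return wolpaw_bits_per_trial(p, n) * 60.0 / trial_s


def spelling_rate_cpm(correct: int, total: int, trial_s: float) -> float:
    """Correctly selected characters per minute of total spelling time."""
    return correct / (total * trial_s / 60.0)


if __name__ == "__main__":
    N_TARGETS = 48  # assumption: the NC value listed for the present study
    print(f"{itr_bpm(0.9167, N_TARGETS, 1.5):.2f}")   # 188.34 (offline, Sub 1)
    print(f"{itr_bpm(41 / 45, N_TARGETS, 1.5):.2f}")  # 186.34 (online, Sub 1)
    print(f"{spelling_rate_cpm(41, 45, 1.5):.2f}")    # 36.44 (online, Sub 1)
    print(f"{itr_bpm(44 / 45, N_TARGETS, 2.0):.2f}")  # 159.23 (online, Sub 4)
```

The Wolpaw formula assumes every target is equally likely and that errors fall uniformly on the remaining N − 1 targets; it also ignores the time needed to correct errors, so it should be read as a nominal rate rather than effective typing throughput.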

| Study | Stimuli | Multimodal | Frequency Range | NE | NC | NF | Average Accuracy (%) | Information Transfer Rate (bpm) |
|---|---|---|---|---|---|---|---|---|
| Present | Rectangles | Yes | Mid | 8 | 48 | 6 | 90.35 (84.03–96.53) | 190.73 (159.23–212.31) |
| Nakanishi et al. [29] | Rectangles | No | Low | 9 | 40 | 40 | 89.83 (79.50–97.50) | 325.33 (263.00–376.58) |
| Chen et al. [84] | Rectangles | No | Low | 9 | 40 | 40 | 91.95 (78.50–99.50) | 151.18 (114.48–175) |
| Chen et al. [28] | Characters | No | Low | 9 | 40 | 40 | 91.00 (77.00–99.50) | 267.0 (199.8–315.0) |
| Bin et al. [33] | Rectangles | No | Low | 9 | 6 | 6 | 95.30 (83.30–100.0) | 58.00 (40.00–67.00) |
| Kwak et al. [91] | LED | No | Low | 8 | 5 | 5 | 91.30 (81.40–98.60) | 32.90 (19.60–51.00) |
| Müller-Putz et al. [92] | LED | No | Low | 4 | 4 | 4 | 72.50 (44.00–88.00) | 19.70 (4.10–34.20) |
| Chen et al. [27] | Characters | No | Low | 9 | 45 | 45 | 88.70 (73.30–98.90) | 61.0 (45.00–75.00) |
| Martinez et al. [93] | Checkerboard | No | Low | 6 | 4 | 4 | 96.50 (82.30–100.0) | 29.60 (17.00–38.70) |
| Min et al. [94] | Line-grid | No | Low | 3 | 6 | 6 | 42.50 (20.00–63.30) | 3.20 (0.10–9.40) |

NE: number of electrodes; NC: number of classes; NF: number of stimulation frequencies.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mannan, M.M.N.; Kamran, M.A.; Kang, S.; Choi, H.S.; Jeong, M.Y. A Hybrid Speller Design Using Eye Tracking and SSVEP Brain–Computer Interface. Sensors 2020, 20, 891. https://doi.org/10.3390/s20030891


Chicago/Turabian Style: Mannan, Malik M. Naeem, M. Ahmad Kamran, Shinil Kang, Hak Soo Choi, and Myung Yung Jeong. 2020. "A Hybrid Speller Design Using Eye Tracking and SSVEP Brain–Computer Interface" Sensors 20, no. 3: 891. https://doi.org/10.3390/s20030891
