Energy per Operation Optimization for Energy-Harvesting Wearable IoT Devices

Abstract

1. Introduction

- A detailed energy consumption analysis for wearable gesture recognition devices and novel analytical models considering different operating voltage levels;

- An algorithm to maximize the number of recognized gestures under the given energy budget and accuracy constraints;

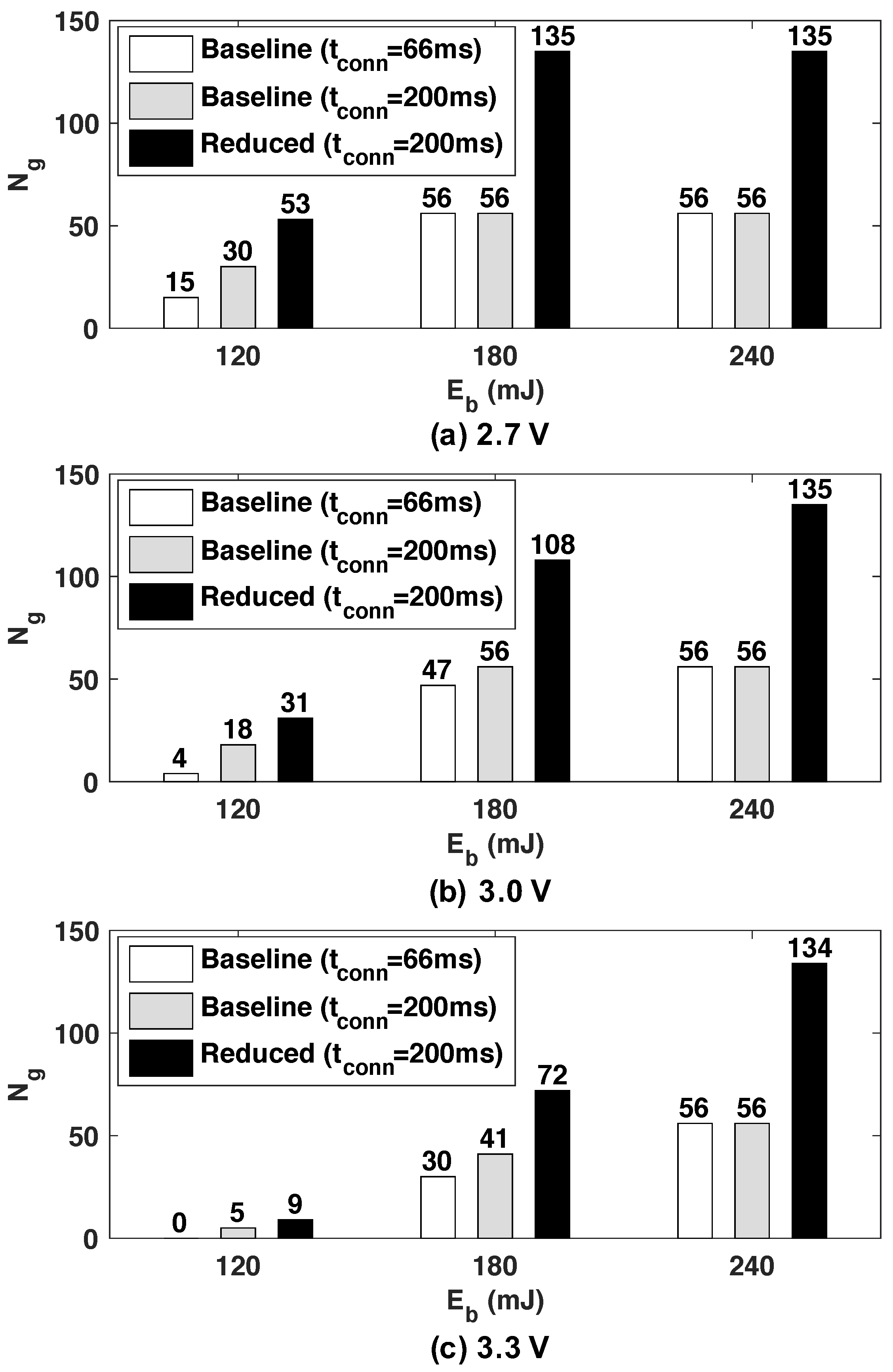

- Empirical evaluations using a wearable device prototype, which demonstrate up to a 2.4× increase in the number of recognized gestures compared to manual optimization.

2. Related Work

3. Target System Overview

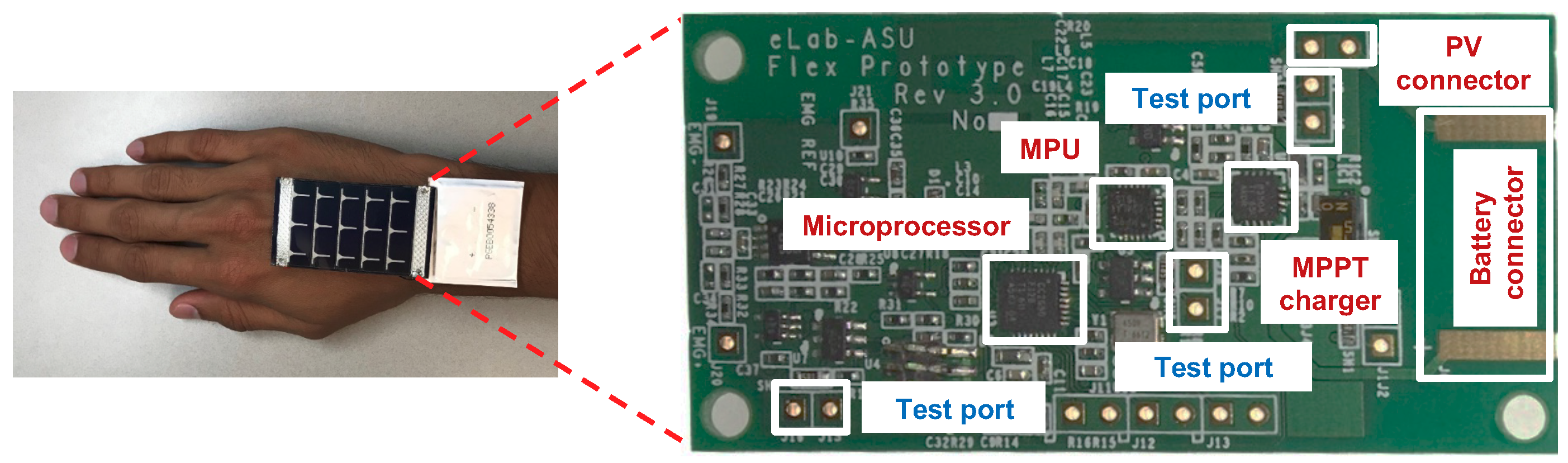

3.1. Energy-Harvesting Wearable Device Prototype

3.2. Problem Formulation

3.3. Overview of the Proposed Approach

- Develop the gesture recognition algorithm on the target hardware and characterize the power consumption of individual components (Section 4.1 and Section 4.2);

- Construct mathematical energy consumption models using this characterization (Section 4.3);

- Derive an expression for the number of recognized gestures and its maximum point using the mathematical models (Section 4.4);

- Combine the output of the previous step with the lower bound on the time spent per gesture imposed by the gesture recognition accuracy to find the optimal solution. Note that we characterize this accuracy through user studies presented in Section 5.4.
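The steps above can be sketched as a small search over candidate operating points. This is only an illustration under stated assumptions, not the model derived in Section 4: it assumes a simple budget of the form "active energy per gesture plus a constant idle power for the rest of the horizon", and every name (`max_gestures`, `pick_operating_point`, the candidate tuples) and every number in the usage lines is hypothetical.

```python
import math


def max_gestures(t_gesture, active_energy, horizon, budget, idle_power):
    """Largest gesture count n that fits both the horizon and the energy budget.

    Assumed (hypothetical) budget model:
        n * active_energy + idle_power * (horizon - n * t_gesture) <= budget
    """
    spare = budget - idle_power * horizon          # energy left after idling all horizon
    if spare < 0:
        return 0                                   # cannot even afford to stay idle
    n_time = math.floor(horizon / t_gesture)       # gestures that fit in time
    extra = active_energy - idle_power * t_gesture # cost of a gesture beyond idling
    if extra <= 0:
        return n_time                              # gestures are never the bottleneck
    return min(n_time, math.floor(spare / extra))


def pick_operating_point(candidates, horizon, budget, idle_power, min_accuracy):
    """Pick the candidate (t_gesture, active_energy, accuracy) with the most gestures.

    Candidates below the accuracy constraint are skipped. Returns
    (t_gesture, gesture_count), or None if no candidate is accurate enough.
    """
    best = None
    for t_gesture, active_energy, accuracy in candidates:
        if accuracy < min_accuracy:
            continue
        n = max_gestures(t_gesture, active_energy, horizon, budget, idle_power)
        if best is None or n > best[1]:
            best = (t_gesture, n)
    return best


# Hypothetical candidates: (time per gesture, active energy, accuracy).
candidates = [(0.5, 0.5, 0.70), (1.0, 1.0, 0.85), (2.0, 1.5, 0.95)]
print(pick_operating_point(candidates, horizon=100.0, budget=90.0,
                           idle_power=0.5, min_accuracy=0.8))
```

The accuracy constraint acts as a filter on the candidate set, so tightening `min_accuracy` can only keep or reduce the achievable gesture count.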

4. Energy-Optimal Gesture Recognition

4.1. Gesture Recognition Algorithm

- Baseline NN uses all 120 accelerometer samples collected by the three-axis accelerometer during the sensing window as input features.

- Reduced NN employs transformed features derived from the raw accelerometer data. We use the minimum, maximum, and mean values of each axis over the sensing window, which amounts to a total of nine input features. Since the number of transformed features does not depend on the window length, we can change the window length at runtime.
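The transformed features used by the reduced NN can be illustrated with a short sketch. It assumes the window is given as a sequence of `(ax, ay, az)` tuples and that the features are ordered as minima, then maxima, then means; the function name and this ordering are assumptions made for illustration, not details taken from the implementation on the device.

```python
from statistics import fmean


def reduced_features(window):
    """Return nine features (min, max, mean per axis) for a window of samples.

    `window` is a non-empty sequence of (ax, ay, az) accelerometer readings.
    The output length is always nine, regardless of the window length.
    """
    if not window:
        raise ValueError("window must contain at least one sample")
    axes = list(zip(*window))  # regroup samples into one tuple per axis
    if len(axes) != 3:
        raise ValueError("each sample must have exactly three axes")
    mins = [min(axis) for axis in axes]
    maxs = [max(axis) for axis in axes]
    means = [fmean(axis) for axis in axes]
    return mins + maxs + means


# Windows of different lengths yield the same number of features.
short_window = [(0.0, 0.0, 1.0)] * 10
long_window = [(0.0, 0.0, 1.0)] * 40
print(len(reduced_features(short_window)), len(reduced_features(long_window)))
```

Because the feature vector has a fixed size, the same classifier input layer can be reused when the window length changes.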

4.2. Operation and Energy Measurements

4.3. Energy Consumption Modeling

4.4. The Proposed Optimization Methodology

5. Experimental Evaluation

5.1. Experimental Setup

5.2. Neural Network Classifier Design

5.3. Energy Model Validation

5.4. Gesture Recognition Accuracy Analysis

5.5. Optimization Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Symbol | Description |
|---|---|
| | Time spent by the device to infer a single gesture |
| | Number of gestures recognized in a finite horizon |
| | Active energy consumption of a single gesture |
| | Idle energy consumption of the device |
| | Energy budget over a finite horizon |
| | Communication energy consumption of the device |
| | Active energy consumption of the microcontroller |
| | Active energy consumption of the sensor |
| | Idle energy consumption of the microcontroller |
| | Idle energy consumption of the sensor |
| | Accuracy of gesture recognition |

| Symbol (unit) | 2.7 V, Baseline | 2.7 V, Reduced | 3.0 V, Baseline | 3.0 V, Reduced | 3.3 V, Baseline | 3.3 V, Reduced |
|---|---|---|---|---|---|---|
| (J) | 30.8 | 30.8 | 31.8 | 31.8 | 30.9 | 30.9 |
| (W) | 1134.4 | 1134.4 | 1337.8 | 1337.8 | 1584.7 | 1584.7 |
| (W) | 71.8 | 71.8 | 87.3 | 87.3 | 105.5 | 105.5 |
| (J) | 86.8 | 86.8 | 89.2 | 89.2 | 92.1 | 92.1 |
| (J) | 102.8 | 102.8 | 114.8 | 114.8 | 153.9 | 153.9 |
| (ms) | 66 | 200 | 66 | 200 | 66 | 200 |
| (J) | 207.4 | 158.1 42.6 | 203.8 | 157.0 48.2 | 212.5 | 171.1 53.6 |
| (J) | 30.7 | 12.7 15.7 | 37.6 | 24.7 14.3 | 177.3 | 152.2 33.8 |
| (J) | 835.6 | 1047.0 3.9 | 931.6 | 1157.0 8.0 | 1040.0 | 1273.0 18.3 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Park, J.; Bhat, G.; NK, A.; Geyik, C.S.; Ogras, U.Y.; Lee, H.G. Energy per Operation Optimization for Energy-Harvesting Wearable IoT Devices. Sensors 2020, 20, 764. https://doi.org/10.3390/s20030764
