Fisheye-Based Smart Control System for Autonomous UAV Operation

Abstract

1. Introduction

- We develop an effective and efficient deep learning-based path planning algorithm. Compared with previous ML-based path planning algorithms, the proposed technique can be applied to a wide target area without sacrificing accuracy or speed. Specifically, we propose a Fisheye hierarchical VIN (Value Iteration Networks) algorithm that applies different map compression levels depending on the location of the drone.

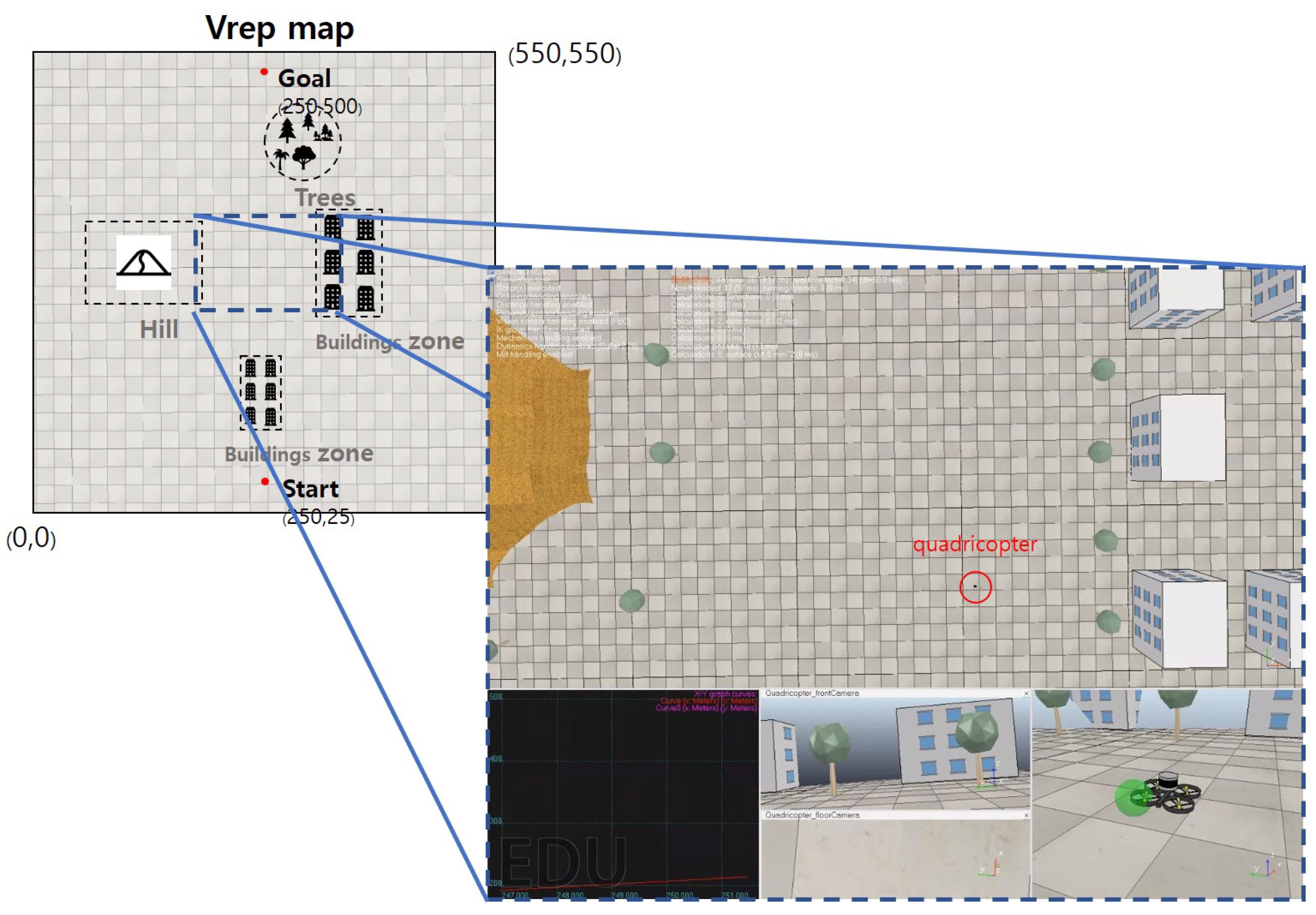

- We build an autonomous flight training and verification platform. The proposed simulation platform makes it possible to train ML-based path planning algorithms in a realistic environment that takes into account the physical characteristics of UAV movements. The same platform also allows the proposed autonomous flight algorithm to be verified in a realistic and practical way.

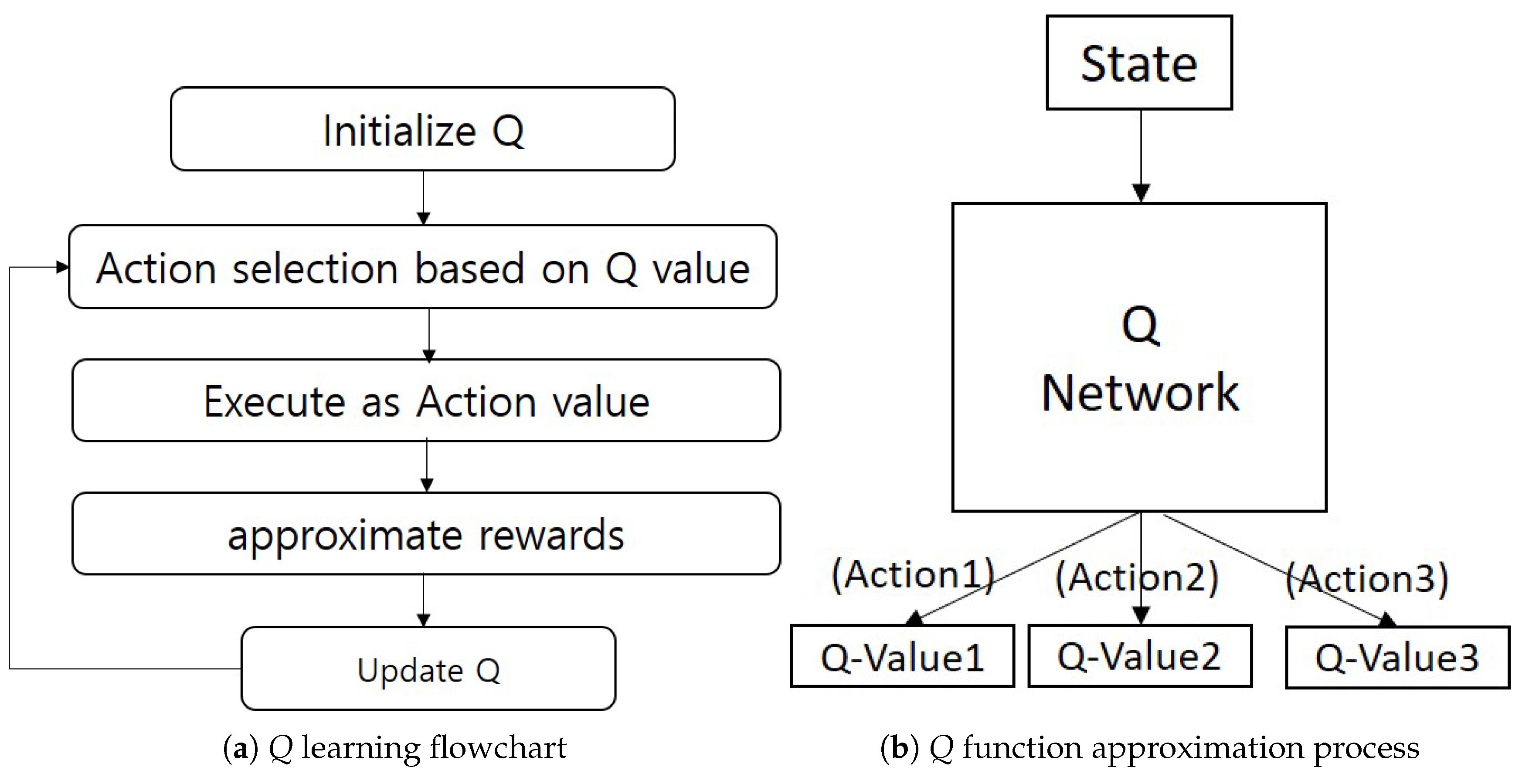

2. Background

2.1. Path Planning

2.2. Simulation Platform

3. Proposed Approach

3.1. Problem Statement

- Multi-Layer HVIN

- Fisheye HVIN

3.2. Multi-Layer Hierarchical VIN

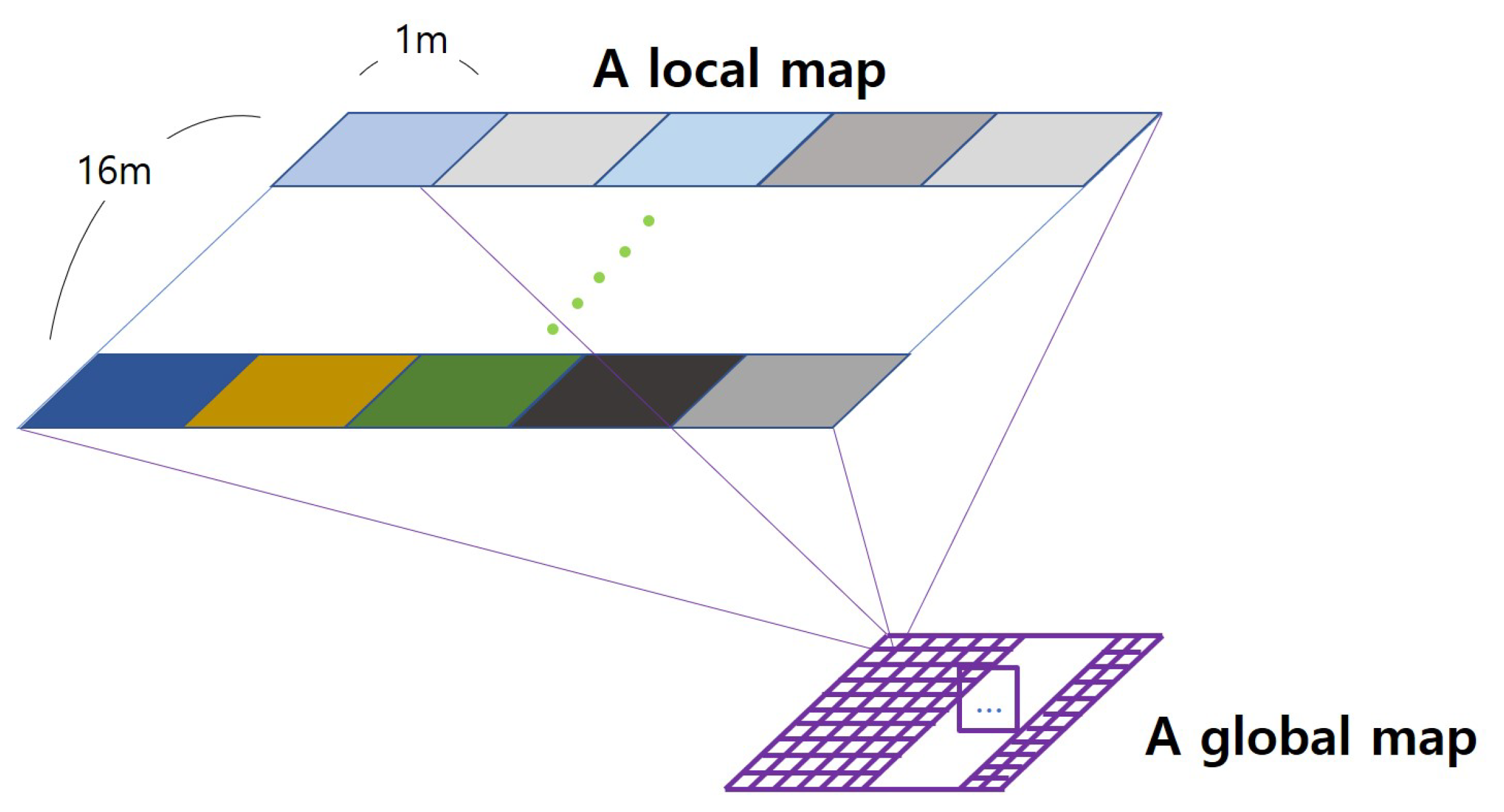

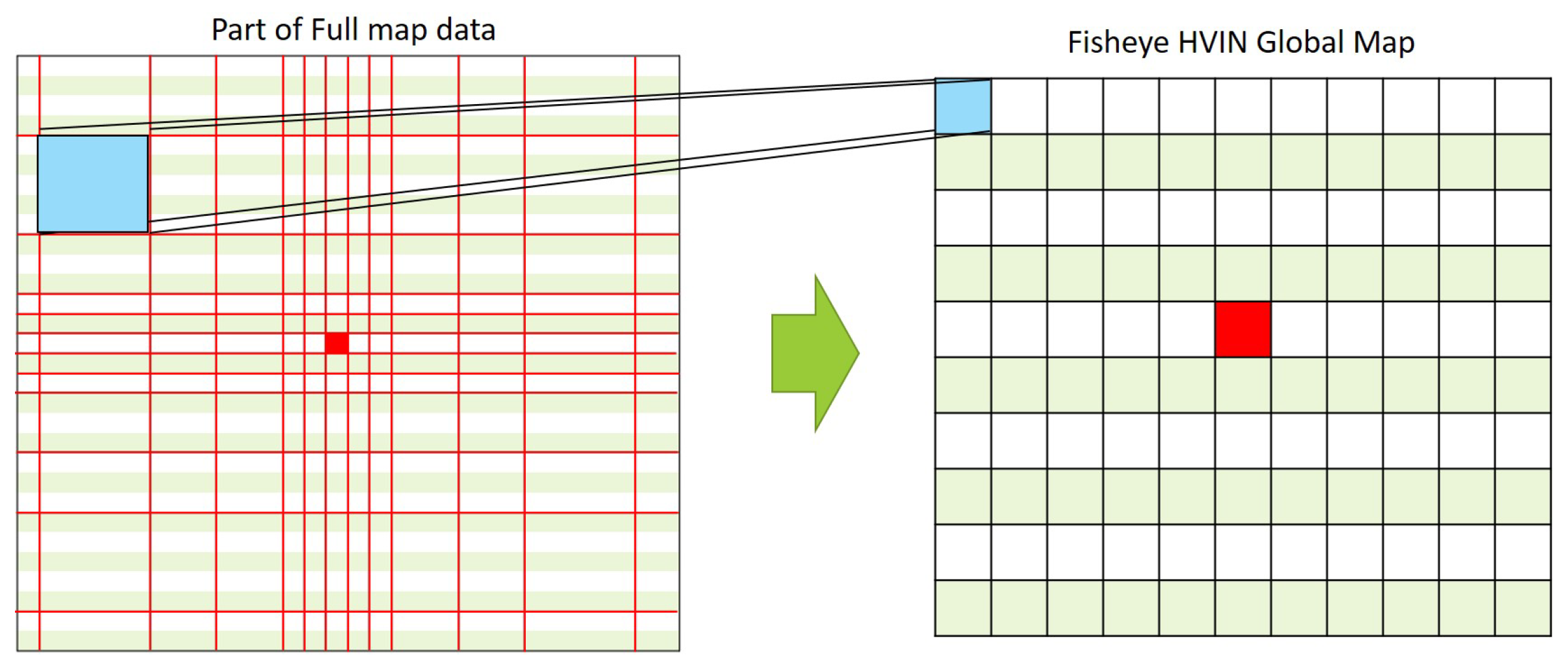

3.3. Fisheye Hierarchical VIN

- Adaptive compression rate: It applies a different compression level to the image map of the global layer according to the location of the drone.

- No more than two layers: It limits the hierarchy to two layers, which avoids recursive operations that incur a large overhead.

- Unlimited target area: It can be applied to a large area without any size limitation.
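As a rough illustration of the adaptive compression rate, the sketch below picks a magnification N so that a square map fits into a fixed-size global image, and then compresses an occupancy grid by N. The function names, the max-pooling rule (a coarse cell is blocked if any fine cell inside it is blocked), and the default image size of 16 cells are assumptions made for this example; the text above does not specify the compression operator.

```python
import math


def choose_magnification(map_size: int, image_size: int = 16) -> int:
    """Smallest integer N such that a map_size-wide map fits in image_size cells.

    Hypothetical helper: the rule is an assumption, not taken from the article.
    """
    return max(1, math.ceil(map_size / image_size))


def compress_map(grid, n):
    """Compress a square 0/1 occupancy grid by n x n blocks (max-pooling).

    A coarse cell is marked as an obstacle (1) if any fine cell in it is 1.
    """
    size = len(grid)
    out_size = math.ceil(size / n)
    out = [[0] * out_size for _ in range(out_size)]
    for y in range(size):
        for x in range(size):
            if grid[y][x]:
                out[y // n][x // n] = 1
    return out


if __name__ == "__main__":
    fine = [
        [0, 0, 0, 1],
        [0, 0, 0, 0],
        [0, 0, 0, 0],
        [0, 0, 0, 0],
    ]
    print(choose_magnification(64))  # magnification for a 64-cell-wide map
    print(compress_map(fine, 2))     # 4x4 grid compressed to 2x2
```

Max-pooling is a conservative choice here because it never hides an obstacle, at the cost of marking partially free coarse cells as blocked.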

- The simulator calculates the drone’s position in the global map, taking into account the magnification N of the global layer. Specifically, it obtains the global position by adjusting the x and y coordinates of the drone’s absolute position, by subtraction, so that they match the magnification N.

- It calculates the global position of the goal based on the calculated position of the drone.

- Based on the drone’s absolute position and the magnification N, it calculates the boundary along which the map is divided for the global map, and then maps the calculated boundary onto the global map.
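One possible reading of the steps above is sketched below. It assumes that "subtracting to match the magnification N" means removing the remainder of each coordinate modulo N before scaling, and that the boundary is the block of absolute cells covered by the drone's global cell. Both interpretations, and the function names `to_global` and `local_boundary`, are assumptions made for illustration.

```python
def to_global(pos, n):
    """Map an absolute (x, y) position to a global-map cell for magnification n.

    Assumed rule: subtract the remainder modulo n, then divide by n.
    """
    x, y = pos
    return ((x - x % n) // n, (y - y % n) // n)


def local_boundary(pos, n):
    """Absolute-coordinate bounds (x_min, y_min, x_max, y_max) of the block
    of cells that shares the drone's global cell (assumed interpretation)."""
    gx, gy = to_global(pos, n)
    return (gx * n, gy * n, gx * n + n - 1, gy * n + n - 1)


if __name__ == "__main__":
    drone = (37, 82)   # hypothetical absolute position
    goal = (120, 15)   # hypothetical absolute goal
    n = 8              # hypothetical magnification
    print(to_global(drone, n))
    print(to_global(goal, n))
    print(local_boundary(drone, n))
```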

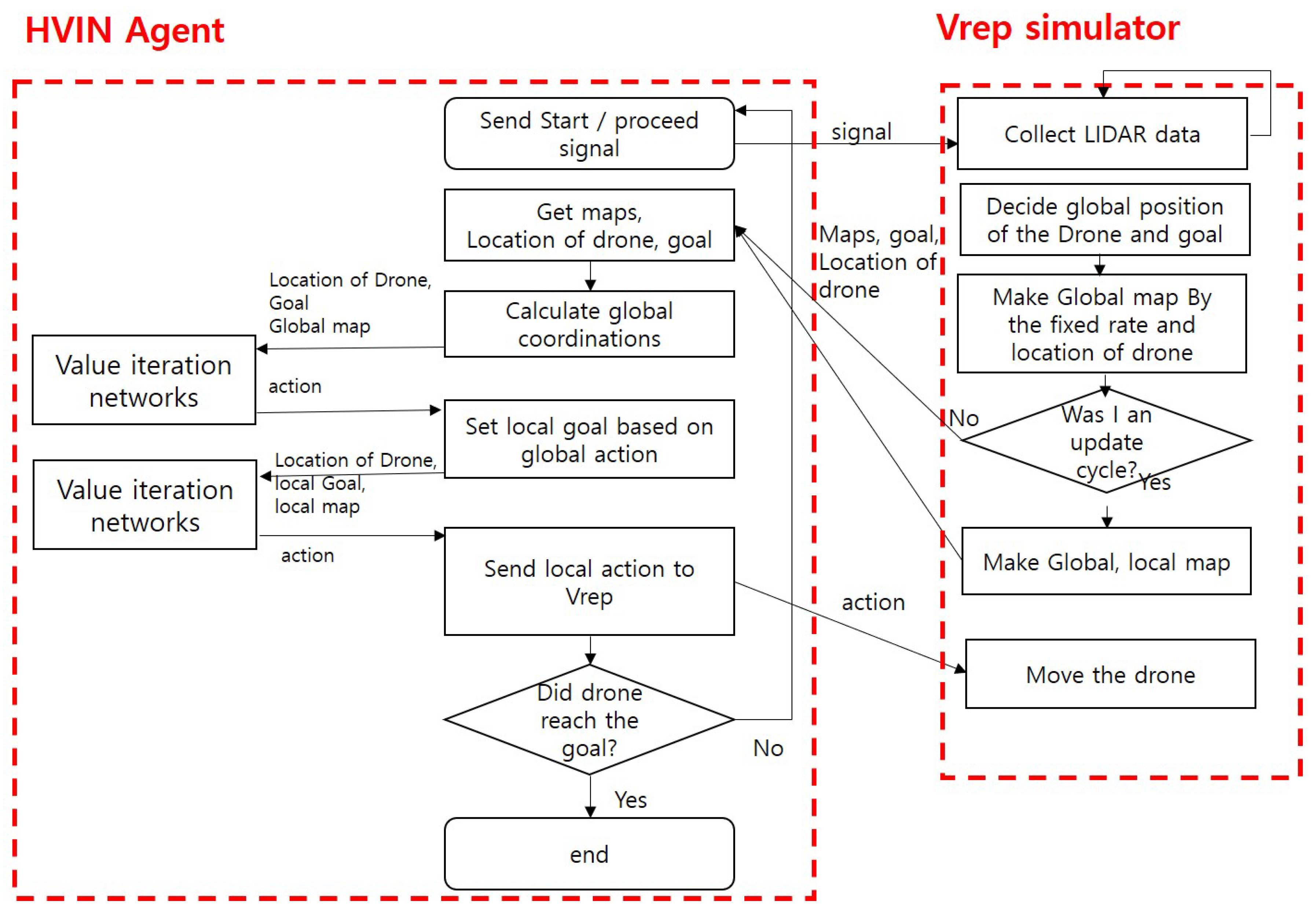

- The Fisheye HVIN (FHVIN) agent receives data from the simulator and performs the HVIN operation by running one VIN on the global map and another VIN on the local map.

- The FHVIN agent sends the resulting action value back to the simulator, and the drone moves according to that action.
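The two-level exchange described above can be sketched as a simple loop. This is only an illustration of the control flow: a greedy one-cell step toward the goal stands in for each trained VIN, obstacles are ignored, and the sub-goal rule (the centre of the next global cell) is an assumption. All names are hypothetical.

```python
def greedy_action(pos, goal):
    """Stand-in for a VIN policy: one 8-connected step toward the goal."""
    dx = (goal[0] > pos[0]) - (goal[0] < pos[0])
    dy = (goal[1] > pos[1]) - (goal[1] < pos[1])
    return (dx, dy)


def fhvin_step(drone, goal, n):
    """One agent step: plan on the global map, then on the local map."""
    g_drone = (drone[0] // n, drone[1] // n)
    g_goal = (goal[0] // n, goal[1] // n)
    if g_drone == g_goal:
        # Drone and goal share a global cell: plan locally to the goal itself.
        local_goal = goal
    else:
        # Global planner picks the next global cell; its centre becomes
        # the local sub-goal (assumed rule).
        gdx, gdy = greedy_action(g_drone, g_goal)
        nxt = (g_drone[0] + gdx, g_drone[1] + gdy)
        local_goal = (nxt[0] * n + n // 2, nxt[1] * n + n // 2)
    return greedy_action(drone, local_goal)


def fly(drone, goal, n, max_steps=1000):
    """Simulator loop: apply the agent's action until the goal is reached.

    Returns the number of steps taken, or None if max_steps is exceeded.
    """
    for step in range(max_steps):
        if drone == goal:
            return step
        dx, dy = fhvin_step(drone, goal, n)
        drone = (drone[0] + dx, drone[1] + dy)
    return None


if __name__ == "__main__":
    print(fly((0, 0), (37, 82), n=8))
```

In a real system the two `greedy_action` calls would be replaced by the global and local VIN policies, and the simulator would apply the action using the drone's physical dynamics.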

4. Evaluation

4.1. Simulation-Based Training and Verification Platform

4.2. Experiment Setup

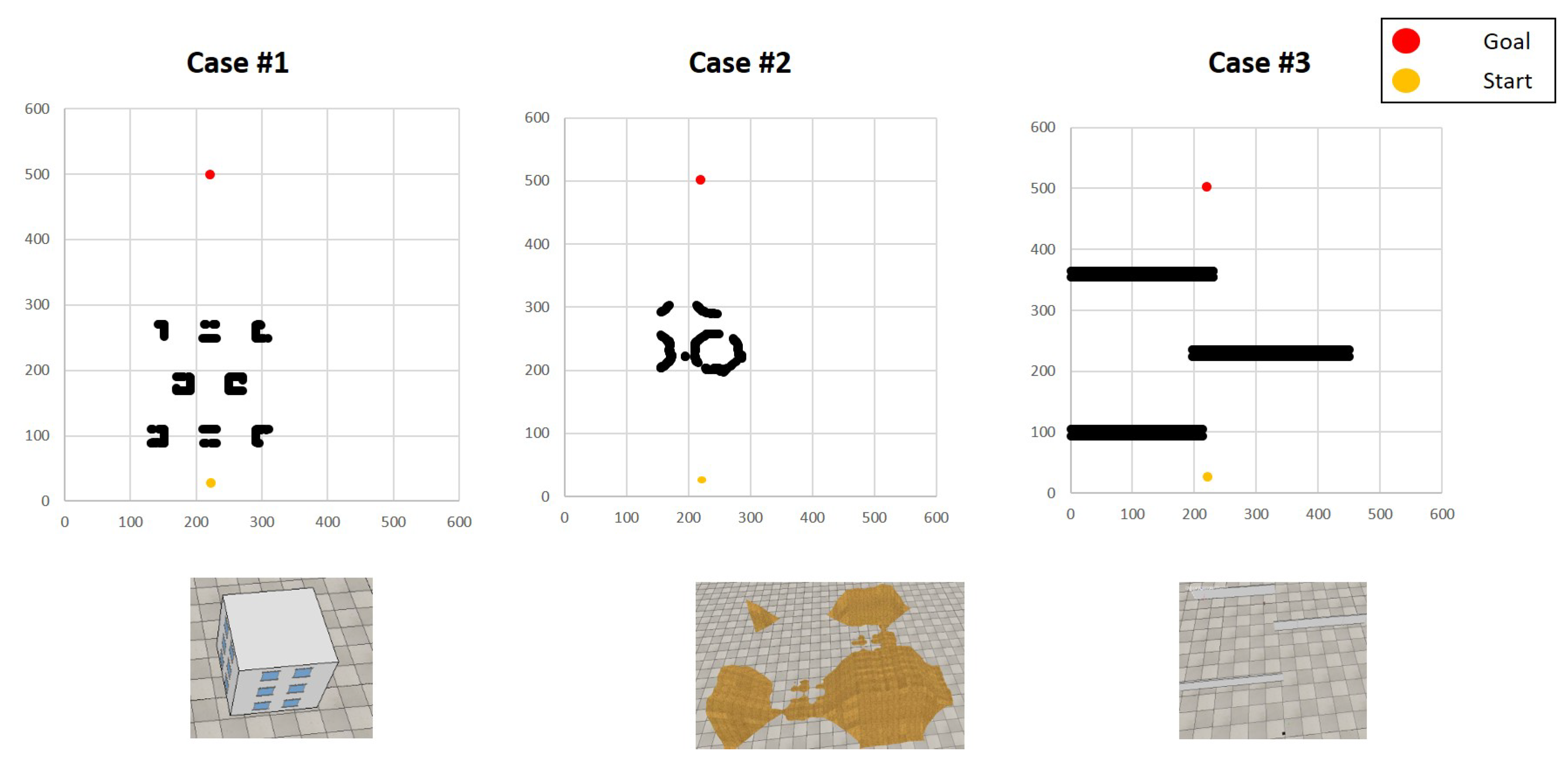

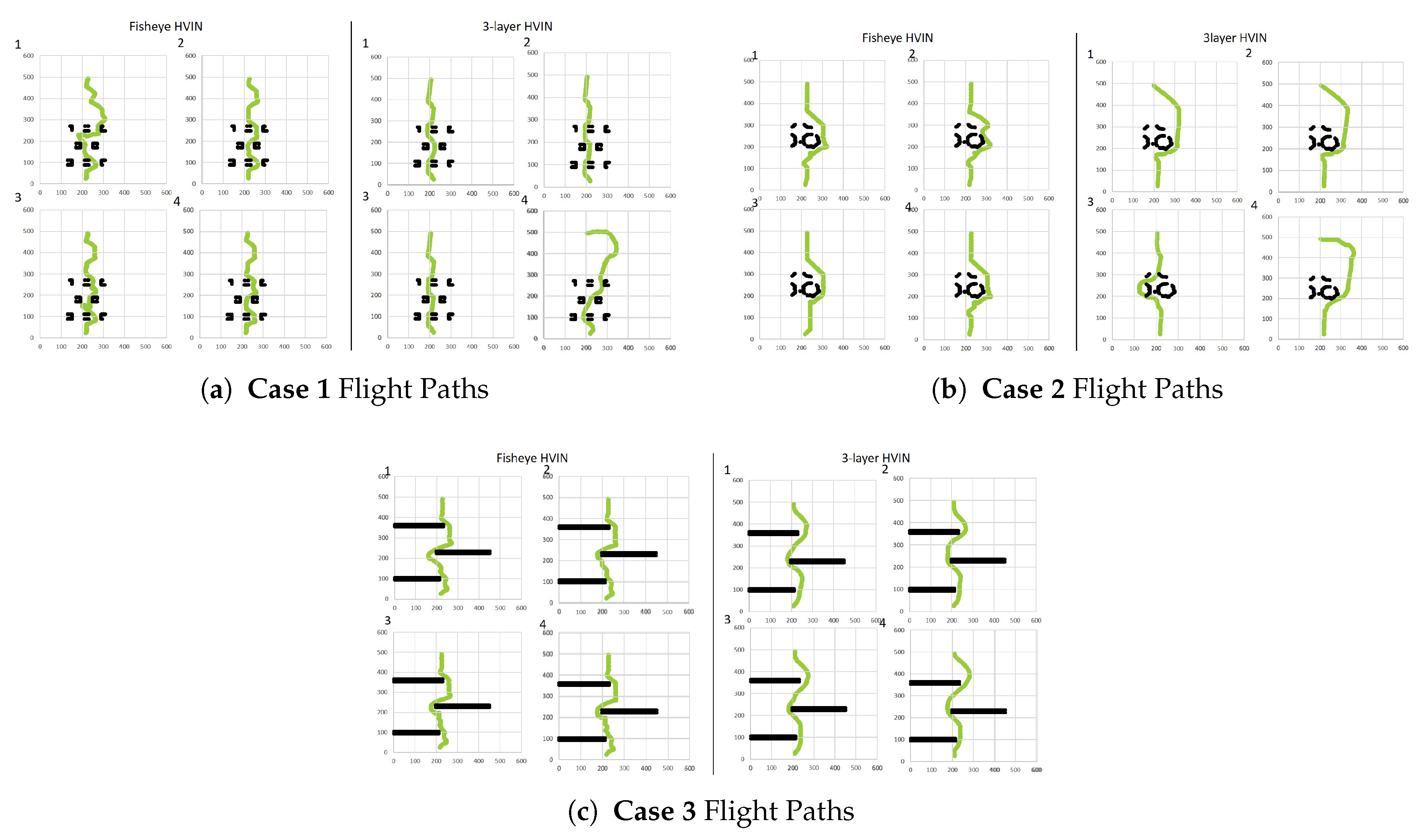

- Case 1: Arranging multiple buildings at intervals of 60 m

- Case 2: Placing large hills in the middle of the map

- Case 3: Placing long obstacles such as walls at intervals of 130 m

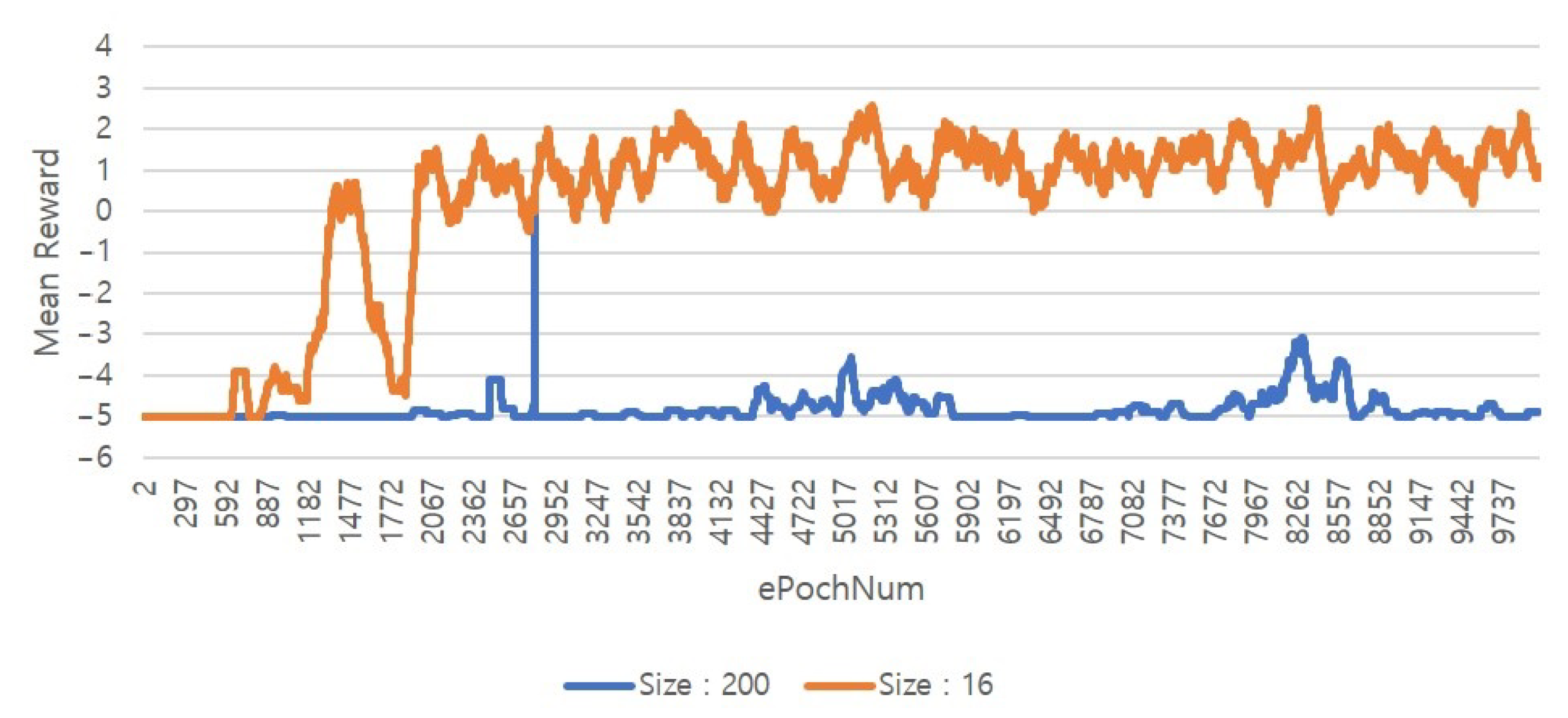

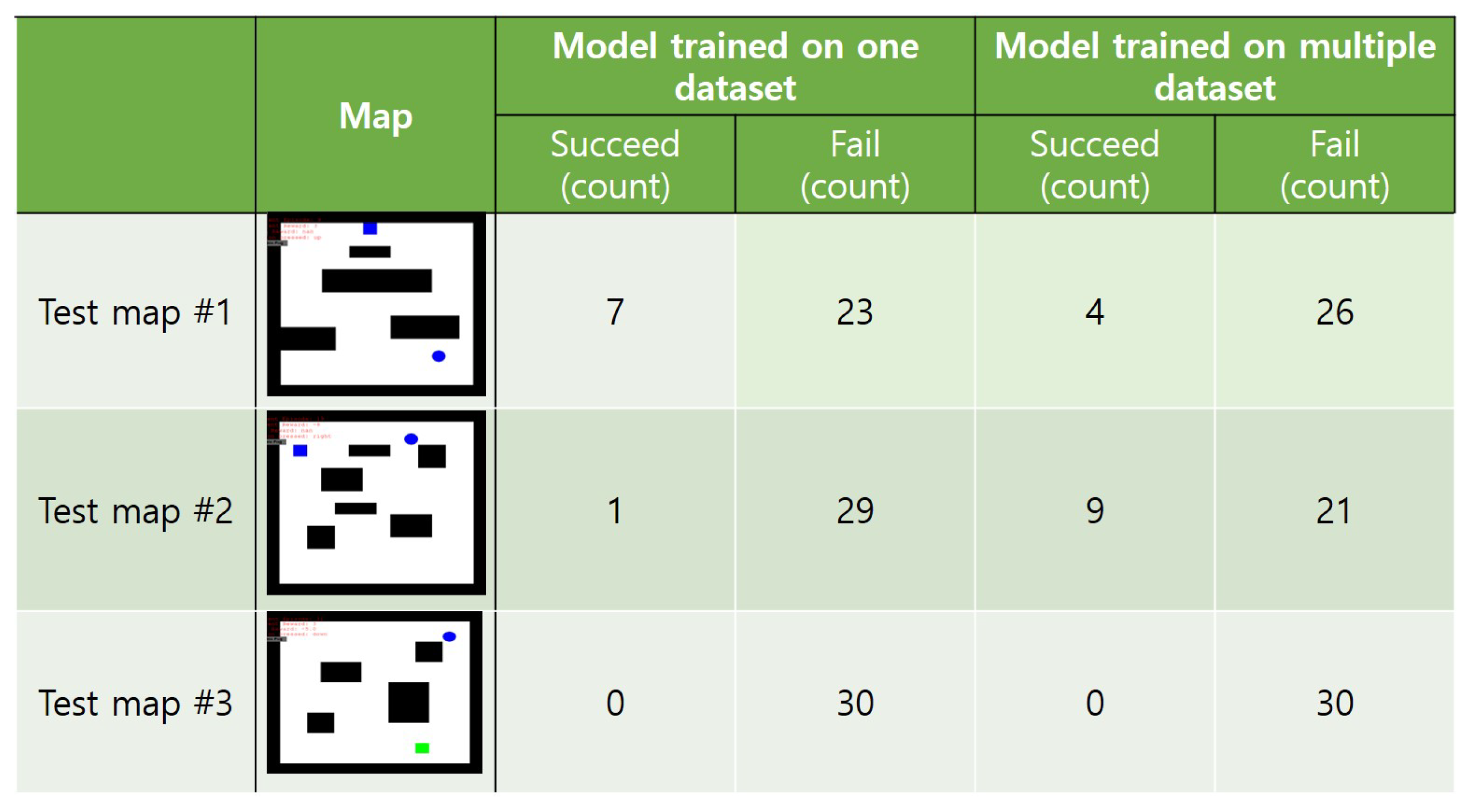

4.3. Evaluation Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| DQN | Deep Q Networks |
| VIN | Value Iteration Networks |
| HVIN | Hierarchical Value Iteration Networks |
| ML | Machine Learning |
| ROS | Robot Operating System |
| DDPG | Deep Deterministic Policy Gradient |
| AMP | Autonomous Motion Planning |


| Parameter | Value |
|---|---|
| Image size | 16 |
| Number of value iterations | 20 |
| Number of channels in each input layer | 2 |
| Number of channels in the first convolution layer | 150 |
| Number of channels in the Q-function convolution layer | 10 |
| Learning rate | 0.002 |
| Number of epochs to train | 30 |
| Batch size | 128 |
| Dataset size | 456,309 |

| Test Case | Algorithm | No. of Successful Trials | No. of Failed Trials |
|---|---|---|---|
| Case 1 | Fisheye HVIN | 19 | 1 |
| Case 1 | Multi-Layer HVIN | 17 | 3 |
| Case 2 | Fisheye HVIN | 20 | 0 |
| Case 2 | Multi-Layer HVIN | 7 | 13 |
| Case 3 | Fisheye HVIN | 14 | 6 |
| Case 3 | Multi-Layer HVIN | 9 | 11 |
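The success counts in the table above can be converted into success rates with a few lines of arithmetic. The snippet below is only a convenience sketch; the counts are copied from the table, and the helper names are illustrative.

```python
# Success and failure counts copied from the results table (20 trials each).
results = {
    ("Case 1", "Fisheye HVIN"): (19, 1),
    ("Case 1", "Multi-Layer HVIN"): (17, 3),
    ("Case 2", "Fisheye HVIN"): (20, 0),
    ("Case 2", "Multi-Layer HVIN"): (7, 13),
    ("Case 3", "Fisheye HVIN"): (14, 6),
    ("Case 3", "Multi-Layer HVIN"): (9, 11),
}


def success_rate(success, failed):
    """Fraction of successful trials."""
    return success / (success + failed)


def overall_rate(algorithm):
    """Success rate of one algorithm pooled over all test cases."""
    s = sum(v[0] for (_, alg), v in results.items() if alg == algorithm)
    f = sum(v[1] for (_, alg), v in results.items() if alg == algorithm)
    return success_rate(s, f)


if __name__ == "__main__":
    for (case, alg), (s, f) in results.items():
        print(f"{case}, {alg}: {success_rate(s, f):.0%}")
    for alg in ("Fisheye HVIN", "Multi-Layer HVIN"):
        print(f"Overall, {alg}: {overall_rate(alg):.1%}")
```

Pooled over the three cases, this gives 53 successful trials out of 60 for Fisheye HVIN and 33 out of 60 for Multi-Layer HVIN.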

| Trial | Success/Fail | Flight Time (min) |
|---|---|---|
| 1 | Success | 20.1 |
| 2 | Success | 20.7 |
| 3 | Success | 19.6 |
| 4 | Success | 20.2 |
| 5 | Success | 21.6 |
| 6 | Success | 21.3 |
| 7 | Success | 20.6 |
| 8 | Success | 20.6 |
| 9 | Success | 19.9 |
| 10 | Success | 20.4 |
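The flight times in the table above can be summarized with the standard library. This is a small sketch using only the ten values listed; the variable names are illustrative.

```python
import statistics

# Flight times in minutes, copied from the ten trials in the table.
flight_times = [20.1, 20.7, 19.6, 20.2, 21.6, 21.3, 20.6, 20.6, 19.9, 20.4]

mean_time = statistics.mean(flight_times)
shortest = min(flight_times)
longest = max(flight_times)

if __name__ == "__main__":
    print(f"mean: {mean_time:.1f} min")
    print(f"range: {shortest} to {longest} min")
```

The ten trials average 20.5 min, with a spread of 2.0 min between the shortest (19.6 min) and the longest (21.6 min) flight.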

| | Fisheye HVIN | Multi-Layer HVIN |
|---|---|---|
| CPU Usage | Planning is performed in only two layers, local and global. | As the number of layers increases, the number of HVIN agents running operations increases. |
| Data Communication | Drone action, position, goal, and local and global map data | Drone action, position, goal, and map data for every layer |
| Size of Target Area | No size limit; larger areas are supported by adjusting the compression rate | Maximum size is limited by the number of layers |
| Simulator Overhead | Compression overhead in the simulator | Overhead for hierarchical map configuration |

| Parameter | Value |
|---|---|
| Image size | 16 or 200 |
| Epsilon | 0.1 |
| Number of epochs to train | 10,000 |
| Learning rate | 0.0001 |
| Batch size | 10 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Oh, D.; Han, J. Fisheye-Based Smart Control System for Autonomous UAV Operation. Sensors 2020, 20, 7321. https://doi.org/10.3390/s20247321
