Abstract

Human Action Recognition (HAR) is the classification of an action performed by a human. The goal of this study was to recognize human actions in action video sequences. We present a novel feature descriptor for HAR that involves multiple features and combines them using a fusion technique. The major focus of the feature descriptor is to exploit the action dissimilarities. The key contribution of the proposed approach is to build a robust feature descriptor that can work for the underlying video sequences and various classification models. To achieve the objective of the proposed work, HAR has been performed in the following manner. First, the moving object is detected and segmented from the background. The features are calculated using the histogram of oriented gradients (HOG) from the segmented moving object. To reduce the feature descriptor size, we average the HOG features across non-overlapping video frames. For the frequency domain information, we have calculated regional features from the Fourier HOG. Moreover, we have also included the velocity and displacement of the moving object. Finally, we use a fusion technique to combine these features. After the feature descriptor is prepared, it is provided to the classifier. Here, we have used well-known classifiers such as artificial neural networks (ANNs), support vector machine (SVM), multiple kernel learning (MKL), Meta-cognitive Neural Network (McNN), and the late fusion methods. The main objective of the proposed approach is to prepare a robust feature descriptor and to show the diversity of our feature descriptor. Although we use five different classifiers, our feature descriptor performs relatively well across all of them.
The proposed approach is evaluated and compared with the state-of-the-art methods for action recognition on two publicly available benchmark datasets (KTH and Weizmann) and for cross-validation on the UCF11 dataset, HMDB51 dataset, and UCF101 dataset. Results of the control experiments, such as a change in the SVM classifier and the effects of the second hidden layer in ANN, are also reported. The results demonstrate that the proposed method performs reasonably compared with the majority of existing state-of-the-art methods, including the convolutional neural network-based feature extractors.

1. Introduction

In machine vision, automatic understanding of video data (e.g., action recognition) remains a difficult but important challenge. Human action recognition (HAR) is defined as the task of recognizing human actions that occur in a video sequence. In video understanding, it is difficult to differentiate routine life actions, such as running, jogging, and walking, using an executable script. There has been an increasing interest in HAR over the past decade, and it is still an open field for many researchers. The domain of HAR has developed considerably, with significant applications in human motion analysis [1,2], identification of familiar people and gender [3], motion capture and animation [4], video editing [5], unusual activity detection [6], video search and indexing (useful for TV production, entertainment, social studies, security) [7], video2text (auto-scripting) [8], video annotation, and video mining [9].

Human action recognition is a challenging multi-class classification problem due to high intra-class variability within a given class. To overcome this variability issue, we propose a scheme to design a feature descriptor that is highly invariant to the fluctuations present in the classes. In other words, the proposed feature descriptor fuses various diverse features. In addition, this paper addresses various challenges in HAR, such as variation in the background (outdoor or indoor), recognizing the gender of the action performer, variation in the clothes worn, and scale variation. We deal with constrained video sequences that involve a moving background and multiple actions in a single video sequence.

Our contributions in this paper can be summarized as follows. First, for moving object detection, we use a novel technique that incorporates the human visual attention model [10], making it background-independent. Therefore, its computational complexity is much lower than that of algorithms that update the background at regular intervals for moving object detection in video. Second, we propose the feature description preparation layer, which uses HOG features with the non-overlapping windowing concept. Moreover, averaging the features reduces the size of the feature descriptor. In addition to HOG, we also use the object displacement, which is crucial to differentiate actions performed at the same location, i.e., zero displacement (like boxing, hand waving, clapping, etc.), from actions performed at various locations, i.e., non-zero displacement (like walking, running, etc.). Furthermore, a velocity feature is used at this stage to further distinguish the overlapping actions having non-zero displacement (like walking, running, etc.). It is based on the observation that speed varies among such actions, so incorporating the velocity feature can aid classification. To consider the spatial context in terms of boundaries and smooth shapes of the human body, regional features from Fourier HOG are employed. Finally, we propose six different models for classification to demonstrate the effectiveness of the proposed feature descriptor across different types of classifier families.

The rest of the paper is organized as follows. Section 2 discusses the existing literature on HAR. Section 3 describes the proposed approach for HAR: it outlines the motivation for feature fusion, presents the proposed techniques for fusing features, and briefly describes the HOG, support vector machines (SVMs), artificial neural networks (ANNs), multiple kernel learning (MKL), and Meta-cognitive Neural Network (McNN). Section 4 presents and discusses the experimental results. Finally, we conclude the paper in Section 5.

2. Existing Methods

In the last two decades, most research on human action recognition has concentrated on two levels: (1) feature extraction and (2) feature classification. One feature extraction method is the Dense trajectories approach [11], which extracts features at multiple scales. These features are sampled for each frame, and actions are classified based on the displacement information from a dense optical flow field. In [12], an extension to Dense trajectories was proposed by replacing the Scale-Invariant Feature Transform (SIFT) feature with the Speeded Up Robust Features (SURF) feature to estimate camera motion.

The advantage of these trajectory representations is that they are robust to fast irregular motions and boundaries of human action. However, this method cannot handle the local motion in actions that involve important movement of the hand, arm, and leg. Therefore, it does not provide enough information for action discrimination. This particular problem is overcome by selecting important motion parts using the Motion Part Regularization Framework (MPRF) [13]. This framework uses spatio-temporal grouping of densely extracted trajectories, which are generated for each motion part. The objective function for sparse selection of these trajectory groups is optimized, and the learned motion parts are represented by a Fisher vector. Lan et al. [14] again point out that the local motion of body parts results in small changes of intensity and hence in low-frequency action information. This low-frequency action information is not included in the feature preparation layer; therefore, the resultant feature descriptors cannot capture enough detail for action classification. To address this problem, the Multi-skIp Feature Stacking (MIFS) approach was proposed. This approach stacks features extracted using differential operators at various scales, which makes action recognition invariant to the speed and range of motion of the human subject. Because various scales are considered in the feature building stage, the computational complexity of this approach is increased.

Traditionally, distinct features are derived for representing a human action. However, Liu et al. [15] proposed a human action recognition system that extracts spatio-temporal and motion features automatically by means of an evolutionary algorithm, namely genetic programming. These features are scale and shift invariant and also extract color information from optical flow sequences. Finally, classification is performed using an SVM, but the automatic learning requires a time-consuming training process. The approach in [16] defined the Fisher vector model based on spatio-temporal local features. Conventional dictionary learning approaches are not appropriate for Fisher vectors extracted from features; therefore, the authors of [16] proposed the Multiple Instance Discriminative Dictionary Learning (MIDDL) method for human action recognition. Recently, a frequency domain representation of multi-scale trajectories has been proposed [17]. The critical points are extracted from the optical flow field of each frame; multi-scale trajectories are then generated from these points and transformed into the frequency domain. Finally, this frequency information is combined with other information such as motion orientation and shape. The computational complexity of this method is high due to the use of optical flow. The authors of [18] represented the skeleton information as a directed acyclic graph that captures the kinematic dependency between the joints and bones in the natural human body.

The recently proposed Deep Convolutional Generative Adversarial Network (DCGAN) [19] bridges the gap between supervised and unsupervised learning. The authors proposed a semi-supervised framework for action recognition that uses the trained discriminator from the GAN model. However, the method evaluates features based on the appearance of the human and does not account for motion in the feature building stage. In [20], the representation of an action is evaluated in terms of distinct action sketches. Sketch formation is done using fast edge detection. The person in each frame is then detected by R-CNN. Furthermore, ranking and pooling are deployed to design the distinct action sketch. Improved dense trajectories and pooled feature fusion are provided to an SVM classifier for action recognition. VideoLSTM, a new recurrent neural network architecture, has been proposed in [21]. This architecture can adaptively fit the requirements of a given video. This approach exploits a new spatial layout of the architecture, motion-based attention for relevant spatio-temporal locations, and action localization from VideoLSTM. In addition, several other methods have been proposed over the decades [22].

3. Proposed Framework

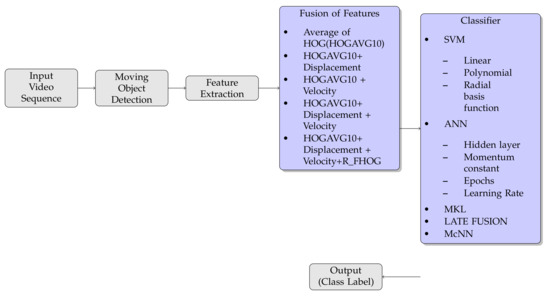

The proposed HAR framework is shown in Figure 1 and involves three parts: moving object detection, feature extraction, and action classification.

Figure 1.

Proposed framework.

3.1. Moving Object Detection

Moving object detection plays a crucial role in many computer vision applications. The process involves classifying the pixels of each frame of a video stream as background or foreground pixels and generating a model that represents the background. The background is then removed from each frame to enable moving object detection; this process is referred to as background subtraction. Popular background subtraction techniques include frame differencing [23,24], shadow removal [25], the Gaussian mixture model (GMM) [26], and CNN-based background removal [27]. However, an algorithm for moving object detection without any background modeling was presented in [28,29,30], and the detailed procedure is given below.

First, an average filter is applied to the video sequence $I$ of size X × Y at a particular time t:

$$I_A(x, y, t) = A \otimes I(x, y, t),$$

where A represents the average filter of mask size X × Y, and ⊗ represents the convolution between two images. Next, a Gaussian filter is employed on the image,

$$I_G(x, y, t) = G \otimes I(x, y, t),$$

where the Gaussian low-pass filter is represented as G. The saliency value calculated at each pixel is given as

$$S(x, y, t) = d\big(I_A(x, y, t),\, I_G(x, y, t)\big),$$

where $d(\cdot, \cdot)$ represents the distance between the respective images. The saliency map $S$ contains the moving object obtained from the specific video, and the moving object is segmented from the video sequence I using this map. Therefore, the moving object detection is faster and computationally efficient, as the method is background-independent. In other words, the time-consuming process of updating the background at regular intervals is not needed.

The moving object detection performance of the method is depicted in Figure 2 and Figure 3 for two different video sequences. The first column shows the snapshot from two different videos. A saliency map is shown in the second column, and a silhouette created using morphological operations is shown in the third column. The detected moving objects are shown in the fourth column.

Figure 2.

Moving Object Detection: (a) Video Sequence. (b) Saliency Map. (c) Silhouette Creation. (d) Segmented Object Image.

Figure 3.

Moving Object Detection: (a) Video Sequence. (b) Saliency Map. (c) Silhouette Creation. (d) Segmented Object Image.
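The background-independent detection step can be sketched as follows. This is only a minimal illustration, assuming grayscale frames, an absolute difference as the distance between the two filtered images, and a mean-value threshold to binarize the saliency map. The kernel sizes, `sigma`, the threshold, and all function names are assumptions made for the example rather than the settings used in the experiments, and the morphological silhouette refinement is omitted.

```python
import numpy as np


def filter2d(image, kernel):
    """Same-size 2-D filtering with edge padding (odd kernel sizes assumed).

    Both kernels used below are symmetric, so correlation equals convolution.
    """
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(image.shape, dtype=float)
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out


def box_kernel(size):
    """Average (box) filter mask."""
    return np.full((size, size), 1.0 / (size * size))


def gaussian_kernel(size, sigma):
    """Normalized Gaussian low-pass mask."""
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()


def saliency_mask(frame, avg_size=9, gauss_size=3, sigma=1.0):
    """Saliency as the distance between average- and Gaussian-filtered frames."""
    frame = frame.astype(float)
    averaged = filter2d(frame, box_kernel(avg_size))
    blurred = filter2d(frame, gaussian_kernel(gauss_size, sigma))
    saliency = np.abs(averaged - blurred)   # assumed distance: absolute difference
    mask = saliency > saliency.mean()       # assumed threshold: mean saliency
    return saliency, mask


# Synthetic frame: a bright vertical bar on a dark background.
frame = np.zeros((40, 40))
frame[15:25, 18:22] = 255.0
saliency, mask = saliency_mask(frame)
```

No background model is built or updated here: each frame is processed on its own, which is the property the text relies on for the lower computational cost.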

3.2. Feature Extraction

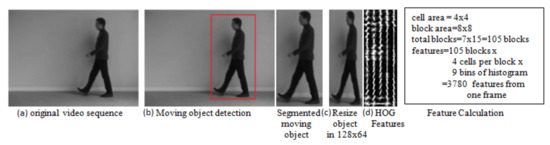

The procedure for extracting feature descriptors from a segmented object is shown in Figure 4, which represents action in a compact three-dimensional space associated with an object, background scene, and variation that appears in the object over time. After detecting and segmenting moving objects from each video sequence, compact features are extracted. In the proposed approach, we calculate the following features.

Figure 4.

Proposed feature extraction technique: (a) Original video sequence. (b) Detected moving object. (c) Detected moving object resized to 128 × 64. (d) Histogram of oriented gradients (HOG) feature extraction.

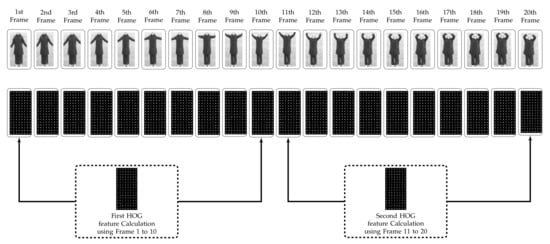

- HOG over 10 non-overlapping frames (HOGAVG10): Here, we have used HOG, which was proposed by Dalal and Triggs [31] in 2005 and is still a highly effective human detection feature. The segmented object is resized to a fixed size (e.g., 128 × 64). HOG features extracted from the resized segmented object (per frame) have a dimensionality of 3780, as explained in Figure 4. Each video has 120 frames; therefore, the final descriptor for each video having one action is 3780 × 120. Such feature descriptors contain redundant data; thus, the computational cost of learning and testing is excessive. In the proposed approach, we have calculated HOG features over a window of 10 non-overlapping frames (HOGAVG10), because the object does not change considerably over these frames, as shown in Figure 5. Averaging over each window reduces the descriptor from 3780 × 120 to 3780 × 12 and thus considerably reduces the redundant data.

Figure 5. Proposed feature calculation scheme.

- Displacement in Object Position (OBJ_DISP): To evaluate the displacement of an object, the centroid (or center of mass) of the silhouette corresponding to the object is calculated by taking the (arithmetic) mean of the pixel coordinates and is denoted by $(x_c, y_c) = \left(\frac{1}{N}\sum_{i=1}^{N} x_i,\ \frac{1}{N}\sum_{i=1}^{N} y_i\right)$, where N is the number of silhouette pixels. Suppose that the centroid of the present frame is $(x_t, y_t)$ and that of the past frame is $(x_{t-1}, y_{t-1})$. Then, the displacement (OBJ_DISP) can be approximated using $\text{OBJ\_DISP} = \sqrt{(x_t - x_{t-1})^2 + (y_t - y_{t-1})^2}$.

- Velocity of Object (OBJ_VELO): Similar to the displacement features, the extraction of velocity features also requires the centroid of the detected moving object. The displacement and velocity features are used to estimate the motion of the moving object, as they increase the inter-class distance, which subsequently increases the accuracy of the overall proposed framework. The velocity OBJ_VELO of the object is estimated using $\text{OBJ\_VELO} = \text{OBJ\_DISP} / t$, where t is the time interval over which the displacement is measured and OBJ_DISP refers to the displacement.

- Regional Features from Fourier HOG [32] (R_FHOG): In this work, we extended the Regional Features from Fourier HOG proposed in [32] for action recognition. In the Cartesian coordinate system, a two-dimensional function is represented by $f(x, y)$. The polar coordinate representation of the same function is $f(r, \varphi)$, where $r$ is the radius and $\varphi$ is the angle. The relation between polar and Cartesian coordinates is defined as $x = r\cos\varphi$ and $y = r\sin\varphi$. In the polar coordinate system, the Fourier transform is a combination of radial and angular parts. The basis function for the Fourier transform in the polar coordinate system is defined as $\Psi_{k,m}(r, \varphi) = \sqrt{k}\, J_m(kr)\, \Phi_m(\varphi)$, where k is a non-negative value that also defines the scale of the pattern; $J_m$ is the mth-order Bessel function; and $\Phi_m(\varphi) = \frac{1}{\sqrt{2\pi}} e^{im\varphi}$. k can take continuous or discrete values, depending on whether the region is infinite or finite. For the transform over a finite region $r \le a$, the basis function is reduced to $\Psi_{nm}(r, \varphi) = R_{nm}(r)\, \Phi_m(\varphi)$, where $R_{nm}(r)$ is the normalized radial part, built from $J_m$ at the nth admissible discrete value of k.

The basis function (13) is orthogonal and orthonormal in nature. For $\Psi_{nm}$, m is the number of cycles in the angular direction and $n - 1$ is the number of zero crossings in the radial direction.

As the values of m and n increase, finer details can be extracted from the image. Generally, the evaluation of HOG features involves three steps namely gradient orientation binning, spatial aggregation, and magnitude normalization, which are followed in the Fourier domain as well.

- Step 1: Gradient Orientation Binning

The gradient of image is defined as , and its polar representation is defined as

where and . Gradient orientation are stored in bins of histogram using distribution function at each pixel. Suppose that the gradient of any image is resented as . The angular part of G is , and the distribution function for each pixel should be Dirac function gain with

In this work, Fourier basis has been replaced with Fourier coefficient

In HOG, for each gradient vector, its magnitude contribution is split into three closest bins. Therefore, it can be considered a triangular interpolation. In Fourier space, to build a HOG feature, a 1D triangular kernel can be employed to implement the gradient orientation binning. However, the execution of this particular step does not affect the results. Therefore, this step has not been considered in the proposed work.

- Step 2: Spatial Aggregation

To achieve spatial aggregation, the obtained Fourier coefficients are convolved with a Gaussian kernel or an isotropic kernel.

- Step 3: Local Normalization

An isotropic kernel is convolved with Fourier coefficient to achieve normalization of gradient magnitude. Steps 2 and 3 are performed using two kernels. The first kernel for spatial aggregation is and the second kernel is used for local normalization. Finally, Fourier HOG is accomplished using

- Regional descriptor using Fourier HOG:

To obtain the regional descriptor, a convolution operation is performed using the Fourier basis function (in polar representation).

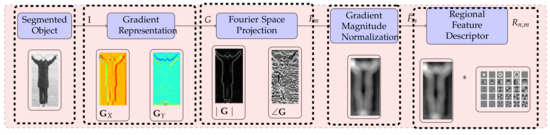

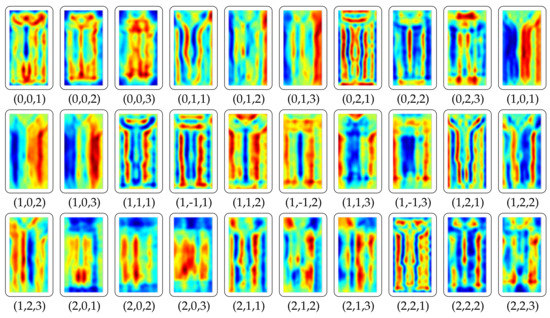

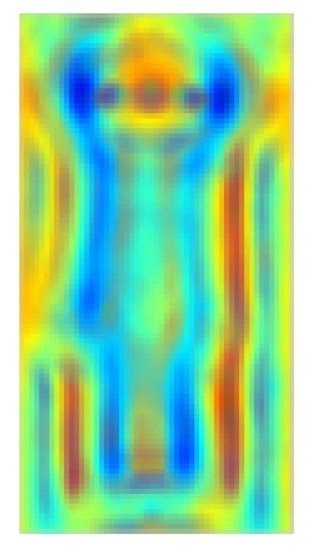

The calculation of the R_FHOG features is illustrated graphically in Figure 6. Figure 7 depicts the result by showing R_FHOG for the segmented object. To speed up the process, we have not considered redundant data; we have selected only the region features that give a maximum response on the human region. The final template is formed from the region features for particular values of scale (k), order (m), and degree (n). The template is shown in Figure 8.

Figure 6.

The generation process of the Region Feature Description.

Figure 7.

The generation process of the Region Feature Descriptor for segmented moving object: The value below each descriptor image is defined as the scale (k), order (m), and degree (n) of the Basis function .

Figure 8.

R_FHOG template.
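The HOGAVG10, OBJ_DISP, and OBJ_VELO features described in this section can be sketched as follows. This is a hedged illustration rather than the implementation used in the experiments: the per-frame HOG vectors are replaced by random numbers, the Euclidean distance between centroids and the frame interval `dt = 1/25` s are assumptions, and all function names are hypothetical.

```python
import numpy as np


def average_hog_windows(hog_per_frame, window=10):
    """Average per-frame HOG vectors over non-overlapping windows of frames."""
    n_frames, dim = hog_per_frame.shape
    n_windows = n_frames // window              # leftover frames are dropped (assumption)
    trimmed = hog_per_frame[:n_windows * window]
    return trimmed.reshape(n_windows, window, dim).mean(axis=1)


def centroid(mask):
    """Centroid (row, col) of a binary silhouette: mean of foreground coordinates."""
    rows, cols = np.nonzero(mask)
    return np.array([rows.mean(), cols.mean()])


def displacement(mask_prev, mask_curr):
    """Distance between the centroids of two silhouettes (Euclidean, assumed)."""
    return float(np.linalg.norm(centroid(mask_curr) - centroid(mask_prev)))


def velocity(disp, dt):
    """Displacement divided by the elapsed time."""
    return disp / dt


# 120 frames of 3780-dimensional HOG vectors (random stand-ins for real features).
hog = np.random.default_rng(0).random((120, 3780))
hogavg10 = average_hog_windows(hog)
print(hogavg10.shape)  # (12, 3780)

# Two silhouettes, the second shifted by three pixels horizontally.
prev_mask = np.zeros((120, 160), dtype=bool)
prev_mask[40:80, 50:60] = True
curr_mask = np.zeros((120, 160), dtype=bool)
curr_mask[40:80, 53:63] = True
disp = displacement(prev_mask, curr_mask)
vel = velocity(disp, dt=1.0 / 25.0)  # assumed interval: one frame at 25 frames per second
```

Averaging leaves the 3780 feature dimensions intact and shortens only the temporal axis, so the same classifier input layout can be kept for any window length.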

3.3. Fusion of Features

The motivation behind fusing features is to increase diversity within classes and thus improve classification.

- HOGAVG10 + OBJ_DISP: Here, we fuse HOGAVG10 with OBJ_DISP. This feature is important for differentiating between actions performed at a static location (e.g., boxing, hand waving, and hand clapping) and actions performed at a dynamic location (e.g., walking, jogging, and running). Therefore, we gain inter-class discriminative power by combining these two features. The position of an object does not change drastically; thus, we propose to employ the window concept to investigate the object motion over that period. In addition, we take the average of the positions to reduce the feature set. This feature is important as it provides the inter-frame offset corresponding to the object position. The displacement values for all classes are shown in Table 1.

Table 1. Displacement for all classes.

- HOGAVG10 + OBJ_VELO: Actions with smaller inter-class distances, such as walking, jogging, and running, can be distinguished using velocity features. Therefore, we propose to fuse HOGAVG10 with OBJ_VELO.

- HOGAVG10 + OBJ_DISP + OBJ_VELO: The HOGAVG10 + OBJ_DISP feature combination can differentiate actions performed at static/dynamic locations, whereas the HOGAVG10 + OBJ_VELO feature combination can effectively differentiate classes with similar actions. Therefore, we propose to combine HOGAVG10 + OBJ_DISP + OBJ_VELO to effectively classify similar actions performed at static/dynamic locations present in the KTH and Weizmann datasets. The velocity values of persons performing actions are reported in Table 2.

Table 2. Velocity for all classes.

- R_FHOG + HOGAVG10 + OBJ_DISP + OBJ_VELO: The R_FHOG feature is effective at splitting the frequency gradient into bands, subsequently emphasizing the human action region. In other words, R_FHOG represents crucial information regarding boundaries and smoothed shapes. R_FHOG also provides information regarding the spatial context of a human subject.

3.4. Formal Description

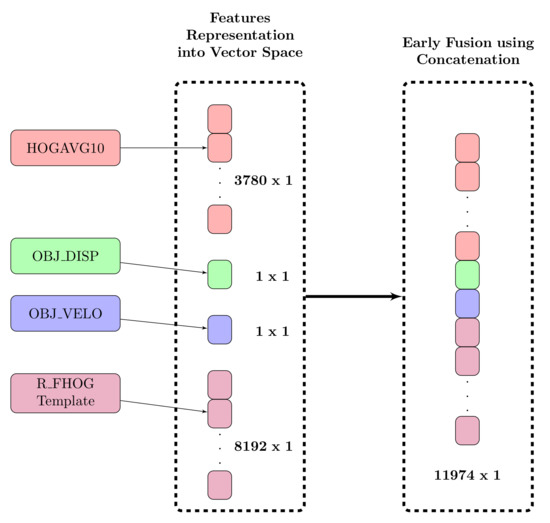

This section presents the proposed fusion techniques in detail. Fusion techniques are performed at both feature and classifier level, referred to as early and late fusion techniques, respectively.

3.4.1. Early Fusion

Feature fusion is performed using a basic technique, namely concatenating the features one after another, as shown in Figure 9.

Figure 9.

Proposed early fusion technique using concatenation method.
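Early fusion by concatenation can be sketched in a few lines. The block lengths below are illustrative assumptions (one displacement and one velocity value per 10-frame window, and a hypothetical 64-value R_FHOG block); only the 12 × 3780 HOGAVG10 size follows from the figures given in the text.

```python
import numpy as np


def early_fusion(*feature_blocks):
    """Flatten each feature block and concatenate them into one descriptor."""
    return np.concatenate([np.asarray(block, dtype=float).ravel() for block in feature_blocks])


hogavg10 = np.zeros((12, 3780))  # 12 window averages of 3780-dimensional HOG
obj_disp = np.zeros(12)          # hypothetical: one displacement per window
obj_velo = np.zeros(12)          # hypothetical: one velocity per window
r_fhog = np.zeros(64)            # hypothetical R_FHOG length

descriptor = early_fusion(hogavg10, obj_disp, obj_velo, r_fhog)
print(descriptor.shape)  # (45448,)
```

Because the blocks are simply appended, any subset of them (for example, HOGAVG10 + OBJ_DISP alone) can be fused with the same function.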

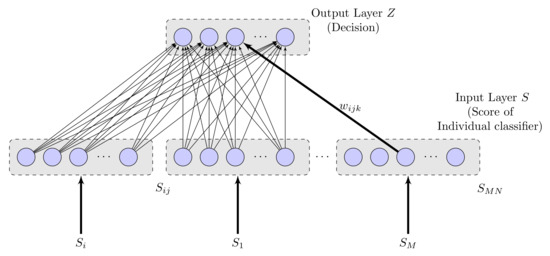

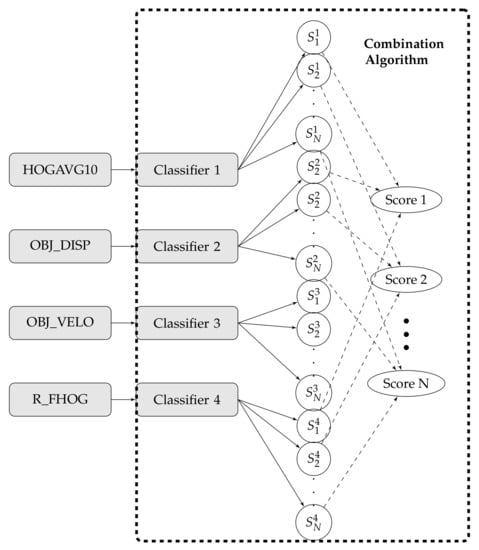

3.4.2. Late Fusion

Late fusion is used in this work to achieve fusion at the classifier level. The two distinct late fusion approaches used in the current study are the Decision Combination Neural Network (DCNN) and the Sugeno fuzzy integral.

- Decision Combination Neural Network (DCNN)

The Decision Combination Neural Network (DCNN) [33] is a neural network architecture with no hidden layers. Accordingly, the DCNN defines a direct connection between the input and output nodes. The output node with the highest response determines the decision, i.e., the class label, for action recognition. Details of the DCNN follow.

As shown in Figure 10, this neural network contains two layers: the input layer (S) and the output layer (Z). The outputs of the M classifiers are fed in parallel to the input layer, in which N input nodes are associated with the classes. The units (nodes) of the input layer and the output layer are connected by weights w. Each input node receives a score $s_i^k$, where i denotes the ith classifier and k denotes the kth class. If input $s_i^k$ is connected to output node j, the weight of this connection is denoted $w_{ij}^k$. The maximum response at the output layer determines the action recognition decision.

Figure 10.

Proposed Late Fusion Technique using Decision Combination Neural Network (DCNN).

The sigmoid activation function is used in each node, and the response of the proposed late fusion approach is defined as

$$z_j = \frac{1}{1 + \exp\left(-\sum_{i=1}^{M} \sum_{k=1}^{N} w_{ij}^{k}\, s_i^{k}\right)},$$

where $s_i^k$ is the score of the ith classifier for the kth class and $w_{ij}^k$ is the weight connecting that score to output node j.
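The forward pass of such a decision combination network can be sketched as below, assuming the scores of M classifiers over N classes are arranged in an M × N array and that every score is connected to every output node. The weight layout, the hand-set weights, and the function names are assumptions for illustration; training of the weights is not shown.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def dcnn_forward(scores, weights):
    """Single-layer decision combination.

    scores:  (M, N) array, scores[i, k] = score of classifier i for class k.
    weights: (N, M, N) array, weights[j, i, k] links score (i, k) to output node j.
    Returns the output responses and the index of the strongest one.
    """
    responses = sigmoid(np.tensordot(weights, scores, axes=([1, 2], [0, 1])))
    return responses, int(np.argmax(responses))


# Two classifiers, three classes.
scores = np.array([[0.2, 0.7, 0.1],
                   [0.3, 0.4, 0.3]])

# Hand-set weights: output node j only listens to the scores for class j.
weights = np.zeros((3, 2, 3))
for j in range(3):
    weights[j, :, j] = 1.0

responses, label = dcnn_forward(scores, weights)
```

With these weights each output node sums the two classifiers' scores for its own class, so the decision reduces to a sum rule; learned weights would let the network also exploit cross-class evidence.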

- Sugeno’s Fuzzy Integral

A simple weighted average scheme assumes that all classifiers are mutually independent. In practice, however, classifiers are correlated. To eliminate the need for such an assumption, the fuzzy integral was introduced by the authors of [34,35]; it is a nonlinear mapping function defined with respect to a fuzzy measure. A fuzzy integral is the fuzzy average of the classifier scores. The definitions of the fuzzy measure and the fuzzy integral are given below.

Definition 1.

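Let X be a finite set defined as $X = \{x_1, \dots, x_n\}$. A fuzzy measure μ defined on X is a set function $\mu: 2^X \rightarrow [0, 1]$ satisfying

- 1. $\mu(\emptyset) = 0$, $\mu(X) = 1$,

- 2. $A \subseteq B \Rightarrow \mu(A) \le \mu(B)$.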

The fuzzy integral we adopt in this work is the Sugeno integral.

Definition 2.

Let μ be a fuzzy measure on X. The discrete Sugeno integral of a function $h: X \rightarrow [0, 1]$ with respect to μ is defined as

$$S_\mu(h) = \max_{i = 1, \dots, n} \min\big(h(x_{(i)}),\ \mu(A_{(i)})\big),$$

where $(i)$ shows that the indices have been permuted so that $0 \le h(x_{(1)}) \le \dots \le h(x_{(n)}) \le 1$. Moreover, $A_{(i)} = \{x_{(i)}, \dots, x_{(n)}\}$ and $h(x_{(0)}) = 0$.

The fuzzy measure μ is a λ-fuzzy measure and is calculated by using Sugeno's measure. The value of $\mu(A_{(i)})$ is calculated recursively as

$$\mu(A_{(n)}) = \mu^{(n)}, \qquad \mu(A_{(i)}) = \mu^{(i)} + \mu(A_{(i+1)}) + \lambda\, \mu^{(i)}\, \mu(A_{(i+1)}), \quad 1 \le i < n,$$

where $\mu^{(i)} = \mu(\{x_{(i)}\})$ is the fuzzy density of $x_{(i)}$. The value of λ is calculated by solving the equation

$$\lambda + 1 = \prod_{i=1}^{n} \left(1 + \lambda\, \mu^{i}\right),$$

where $\lambda \in (-1, +\infty)$ and $\lambda \neq 0$. This can be easily computed by calculating an $(n-1)$st degree polynomial and determining the distinct root greater than −1. The fuzzy integral is used in the proposed work as a late fusion method for combining classifier scores. Assume that $C = \{c_1, \dots, c_N\}$ is a set of action classes of interest. Let $X = \{x_1, \dots, x_n\}$ be a set of classifiers and A be an input pattern considered for action recognition. Let $h_k: X \rightarrow [0, 1]$ be the evaluation of the object A for class $c_k$; that is, $h_k(x_i)$ indicates the certainty in the classification of the input pattern A for class $c_k$ using the classifier $x_i$. A value of 1 for $h_k(x_i)$ indicates absolute certainty that the input pattern A is in class $c_k$, and 0 indicates absolute uncertainty that the object is in $c_k$.

Knowledge of the density function is needed to compute the fuzzy integral, and the ith density $\mu^{i}$ is interpreted as the degree of importance of the source $x_i$ towards the final decision. The fuzzy integral represents the maximal degree of agreement between the evidence and the expectation. In the proposed approach, the density function is approximated from the training data provided to the classifier. Algorithm 1 defines the late fusion approach for decision fusion.

| Algorithm 1 Late fusion (decision fusion) using fuzzy integral. |
| --- |
| procedure Fuzzy-Integral |
| Calculate λ; |
| for each action class do |
| for each classifier do |
| Compute the classifier score for the action class |
| Determine the fuzzy density of the classifier |
| end for |
| Calculate fuzzy integral for the action class |
| end for |
| Find out the action class label |
| end procedure |
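The decision fusion procedure above can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: the fuzzy densities are given constants strictly between 0 and 1 (at least two of them positive), λ is found by bisection on Sugeno's λ-measure equation, and the function names and example values are hypothetical.

```python
def solve_lambda(densities, iterations=200):
    """Root lam > -1, lam != 0 of prod(1 + lam * g_i) = 1 + lam.

    Assumes densities lie strictly between 0 and 1, with at least two positive.
    """
    total = sum(densities)
    if abs(total - 1.0) < 1e-12:
        return 0.0  # densities already sum to one: the measure is additive

    def f(lam):
        product = 1.0
        for g in densities:
            product *= 1.0 + lam * g
        return product - (1.0 + lam)

    if total > 1.0:
        lo, hi = -1.0, 0.0      # f > 0 left of the root, f < 0 right of it
        for _ in range(iterations):
            mid = 0.5 * (lo + hi)
            if f(mid) > 0.0:
                lo = mid
            else:
                hi = mid
    else:
        lo, hi = 0.0, 1.0       # f < 0 left of the root, f > 0 right of it
        while f(hi) < 0.0:
            hi *= 2.0
        for _ in range(iterations):
            mid = 0.5 * (lo + hi)
            if f(mid) < 0.0:
                lo = mid
            else:
                hi = mid
    return 0.5 * (lo + hi)


def sugeno_integral(scores, densities, lam):
    """Discrete Sugeno integral of classifier scores w.r.t. the lambda-measure."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    measure = 0.0
    best = 0.0
    for i in order:  # grow the set of classifiers from the highest score downwards
        measure = densities[i] + measure + lam * densities[i] * measure
        best = max(best, min(scores[i], measure))
    return best


def fuse_decisions(scores_per_class, densities):
    """Return (winning class index, fuzzy integral value per class)."""
    lam = solve_lambda(densities)
    values = [sugeno_integral(scores, densities, lam) for scores in scores_per_class]
    return max(range(len(values)), key=values.__getitem__), values


densities = [0.3, 0.4, 0.1]              # hypothetical importance of three classifiers
scores_per_class = [[0.9, 0.6, 0.3],     # scores of the three classifiers for class 0
                    [0.2, 0.5, 0.8]]     # scores of the three classifiers for class 1
label, values = fuse_decisions(scores_per_class, densities)
```

Here the third classifier strongly favours class 1, but its low density limits its influence, so the fused decision follows the two more trusted classifiers.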

3.5. Classifier

Various classifiers have been used to evaluate the performance of the proposed approach. The SVM parameters and their respective values are summarized in Table 3. We have considered the kernel function with degree (d), the gamma of the kernel function (γ), and the regularization parameter (c). Polynomial and radial basis kernel functions have been used.

Table 3.

Parameters setting for SVM and their respective levels evaluated in experimentation [36].

The parameters of the ANN are the number of hidden layer neurons (n), the learning rate (lr), the momentum constant (mc), and the number of epochs (ep). To find the values of these parameters efficiently, ten levels of n, nine levels of mc, and ten levels of ep are evaluated in the parameter setting experiments. The value of lr is initially fixed at 0.1. These parameters and their respective levels are listed in Table 4.

Table 4.

Parameters setting for neural network and their respective levels evaluated in experimentation [36].

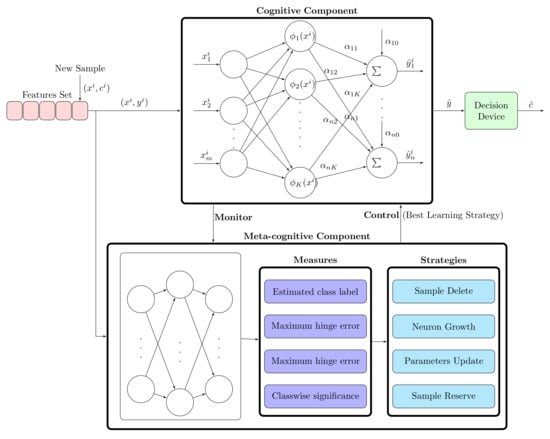

3.5.1. Meta-Cognitive Neural Network (McNN) Classifier

A neural network provides a self-learning mechanism, whereas the meta-cognitive phenomenon comprises self-regulated learning. Self-regulation makes the learning process more effective. Therefore, there is a need to move from single or simple learning to collaborative learning. Collaborative learning can be achieved using the cognitive component, which interprets knowledge, and the meta-cognitive component, which represents the dynamic model of the cognitive component.

Self-regulated learning is a key factor of meta-cognition. It is a threefold mechanism: it plans, monitors, and manages the feedback. According to Flavell [37], meta-cognition is the awareness and knowledge of the mental processes used to monitor, regulate, and direct toward a desired goal. We present here Nelson and Narens' meta-cognitive model [38]. The cognitive component and the meta-cognitive component are the prime entities of McNN. A detailed architecture of the Meta-cognitive Neural Network is shown in Figure 11.

Figure 11.

McNN architecture.

3.5.2. Cognitive Component

The cognitive component is a three-layered feedforward radial basis function network. It comprises an input layer, an output layer, and an intermediate hidden layer. The activation function for the hidden neurons is Gaussian, whereas for the output neurons it is linear. Hidden layer neurons are built by the meta-cognitive algorithm. The predicted output of the McNN classifier with K Gaussian neurons from training samples is

$$\hat{y}_j = \alpha_{j0} + \sum_{k=1}^{K} \alpha_{jk}\, \phi_k(x),$$

where $\alpha_{j0}$ is the bias to the jth output neuron, $\alpha_{jk}$ is the weight connecting the kth neuron to the jth output neuron, and $\phi_k(x)$, the output of the kth Gaussian neuron to the excitation x, is represented as

$$\phi_k(x) = \exp\left(-\frac{\lVert x - \mu_k^{l} \rVert^{2}}{(\sigma_k^{l})^{2}}\right),$$

where $\mu_k^{l}$ is the mean, $\sigma_k^{l}$ is the variation in the mean value of the kth hidden neuron, and l represents the hidden layer class.
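The forward pass of the cognitive component (Gaussian hidden neurons followed by a linear output layer with a bias) can be sketched as follows. The exact scaling inside the exponential, the array layout, and the parameter names are assumptions for illustration; in McNN the hidden neurons would be created by the meta-cognitive component rather than fixed by hand as they are here.

```python
import numpy as np


def rbf_forward(x, centers, widths, out_weights, bias):
    """Radial basis function network forward pass.

    x:           (d,)   input feature vector
    centers:     (K, d) means of the K Gaussian hidden neurons
    widths:      (K,)   spread of each hidden neuron
    out_weights: (n_classes, K) weights from hidden to output neurons
    bias:        (n_classes,)   bias of each output neuron
    """
    sq_dist = np.sum((centers - x) ** 2, axis=1)
    hidden = np.exp(-sq_dist / widths ** 2)   # assumed form: exp(-||x - mu||^2 / sigma^2)
    return bias + out_weights @ hidden, hidden


x = np.array([0.0, 0.0])
centers = np.array([[0.0, 0.0],
                    [3.0, 4.0]])
widths = np.array([1.0, 5.0])
out_weights = np.eye(2)
bias = np.zeros(2)

y_pred, hidden = rbf_forward(x, centers, widths, out_weights, bias)
```

The first neuron sits exactly on the input and responds with 1, while the second is five units away with a width of five and responds with exp(−1).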

3.5.3. Meta-Cognitive Component

- Measures:

The meta-cognitive component of McNN uses four measures to regulate learning:

- Estimated class label: The estimated class label $\hat{c}$ is calculated from the predicted output $\hat{y}_j$ as $\hat{c} = \arg\max_{j} \hat{y}_j$.

- Maximum hinge error: The hinge error estimates the posterior probability more precisely than the mean square error function. The error between the predicted output $\hat{y}_j$ and the actual (coded) output $y_j$ under the hinge loss is defined as $e_j = 0$ if $y_j \hat{y}_j > 1$ and $e_j = y_j - \hat{y}_j$ otherwise. The maximum absolute hinge error E is $E = \max_j |e_j|$.

- Confidence of classifier: The classifier confidence is given as $\hat{p}(c \mid x) = \frac{\min(1, \max(-1, \hat{y}_c)) + 1}{2}$.

- Class-wise significance: The input feature is mapped to a higher-dimensional space by the Gaussian activation function applied at the hidden layer neurons. Therefore, it is considered to lie on a hyper-dimensional sphere. The feature space is described by the mean and the variation in the mean value of the Gaussian neurons. The steps for calculating the spherical potential $\psi$ are shown in [39]. In the classification problem, the distribution of each class is crucial and significantly affects the accuracy of the classifier. Therefore, the spherical potential of new training data x belonging to class c is measured with respect to the neurons belonging to the same class. The class-wise significance is calculated as $\psi_c = \frac{1}{K^c} \sum_{k=1}^{K^c} \phi(x, \mu_k^c)$, where $K^c$ is the number of neurons associated with class c and $\phi(x, \mu_k^c)$ is the response of the kth Gaussian neuron of class c to x. Whether the sample contains relevant information depends on $\psi_c$; a low value indicates that the sample is novel.

- Learning Strategy:

Based on various measures, the meta-cognitive component has different learning strategies, which deal with the basic rules of self-regulated learning. These strategies manage the sequential learning process by applying one of them to each new training sample.

- Sample delete strategy: This strategy reduces the computational time consumed by the learning process. It reduces the redundancy in the training samples, i.e., it prevents similar samples from being learnt by the cognitive component. The measures used for this strategy are the predicted class label and the confidence level. When the actual class label and the predicted class label of the new training data are equal and the confidence score is greater than the expected value, the new training data is redundant.

- Neuron growth strategy: This strategy decides whether a new hidden neuron should be added to the cognitive component. When a new training sample includes substantial information and the estimated class label differs from the actual class label, a new hidden neuron should be added to capture the knowledge.

- Parameter update strategy: In this strategy, the parameters of the cognitive component are updated from the new training sample. The parameter values change when the actual class label is the same as the predicted class label of the sample and the maximum hinge error is greater than a threshold set for adaptive parameter updating.

- Sample reserve strategy: New training samples that contain some information, but not much relevant information, are reserved and used to fine-tune the parameters of the cognitive component.

The parameters are updated in McNN when the predicted class is equal to the actual class. The threshold on the maximum hinge error E lies between 1.2 and 1.5 for the neuron growth strategy and between 0.3 and 0.8 for the parameter update strategy. For the parameter update strategy, a value close to 1 prevents the system from using any sample, whereas a value close to 0 causes all samples to be used for updating. For the neuron growth strategy, a value of 1 for E leads to misclassification of all samples, and a value of 2 causes only a few neurons to be added. The other parameters are selected accordingly, and the ranges of the parameter values are shown in Table 5.
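The way the four strategies partition incoming samples can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function name `choose_strategy`, the `McNNThresholds` container, the confidence cut-off of 0.9, and the class-wise significance cut-off of 0.5 are all assumptions introduced here. Only the two hinge-error ranges (1.2 to 1.5 and 0.3 to 0.8) come from the text, and the defaults are simply picked inside those ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class McNNThresholds:
    # All field names are hypothetical; only the two error ranges come from the text.
    delete_confidence: float = 0.9     # assumed "expected value" for the confidence score
    growth_error: float = 1.3          # text: between 1.2 and 1.5
    update_error: float = 0.5          # text: between 0.3 and 0.8
    novelty_significance: float = 0.5  # assumed cut-off on class-wise significance


def choose_strategy(true_label, predicted_label, confidence,
                    max_hinge_error, class_significance, thresholds=None):
    """Return which of the four strategies handles one new training sample."""
    th = thresholds or McNNThresholds()
    correct = predicted_label == true_label

    # Sample delete: correctly classified with high confidence, so redundant.
    if correct and confidence > th.delete_confidence:
        return "delete"

    # Neuron growth: misclassified and novel (large error, low class-wise significance).
    if (not correct
            and max_hinge_error > th.growth_error
            and class_significance < th.novelty_significance):
        return "grow"

    # Parameter update: correctly classified but the error is still above threshold.
    if correct and max_hinge_error > th.update_error:
        return "update"

    # Sample reserve: keep the sample for later fine-tuning.
    return "reserve"


print(choose_strategy(1, 1, 0.95, 0.1, 0.9))
print(choose_strategy(1, 2, 0.40, 1.4, 0.1))
```

The checks are ordered so that each sample triggers exactly one strategy, which mirrors the statement that one strategy is used per new training sample.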

Table 5.

Parameter settings for McNN classifier.

4. Performance Evaluation

The proposed work has been evaluated through extensive experiments on standard datasets using a set of performance parameters, as described below.

4.1. Database Used

The proposed approach was applied to two datasets: the KTH [40] and Weizmann [41] datasets. These datasets are popular benchmarks for action recognition in constrained video sequences. Each frame in these datasets contains only one action against a static background.

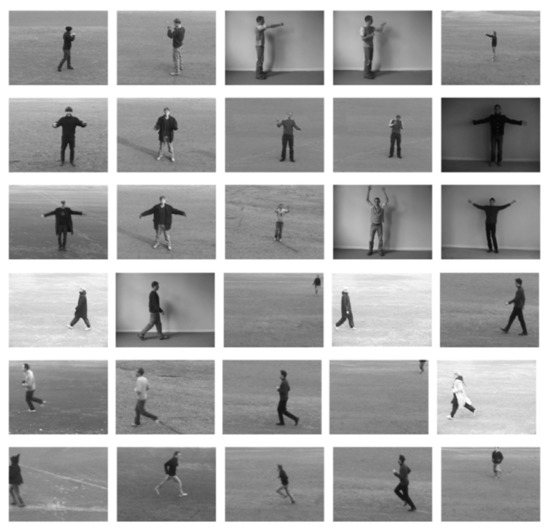

4.1.1. KTH Dataset

The KTH dataset contains action clips with variations in the background, object, and scale, and was thus useful for determining the accuracy of our proposed method. The video sequences contain six different types of human actions (i.e., walking, jogging, running, boxing, hand waving, and hand clapping) performed several times by 25 subjects in four different scenarios: outdoors, outdoors with scale variation (zooming), outdoors with different clothes (appearance), and indoors, as illustrated below. Static and homogeneous backgrounds are considered in all sequences, where the frame rate is 25 frames per second. The resolution of these videos is 160 × 120 pixels, and the duration of the videos is four seconds on average. There are 25 videos for each action in the four different categories. Certain snapshots of video sequences from the KTH dataset are shown in Figure 12.

Figure 12.

Video sequences from the KTH dataset.

4.1.2. Weizmann Dataset

The Weizmann database [41] is a collection of 90 low-resolution (180 × 144, de-interlaced, 50 frames per second) video sequences. The dataset contains nine different subjects, each performing ten natural actions: run, walk, skip, jumping-jack (or jack), jump forward on two legs (or jump), jump in place on two legs (or pjump), gallop sideways (or side), wave two hands (or wave2), wave one hand (or wave1), and bend. Snapshots of the Weizmann dataset are shown in Figure 13.

Figure 13.

Video sequences from the Weizmann dataset.

4.1.3. UCF11 Dataset

The UCF11 dataset [42] contains 1600 videos covering 11 human actions. The videos are taken from YouTube and show realistic human actions. The actions are performed by 25 different subjects under challenging conditions such as large changes in viewpoint, object scale, object appearance and pose, camera motion, cluttered background, and illumination variation. There are 11 action categories in UCF11: basketball shooting (Shoot), biking/cycling (Bike), diving (Dive), golf swinging (Golf), horseback riding (Ride), soccer juggling (Juggle), swinging (Swing), tennis swinging (Tennis), trampoline jumping (Jump), volleyball spiking (Spike), and walking with a dog (Dog).

4.1.4. HMDB51 Dataset

The HMDB51 dataset [43] is built from videos taken from YouTube, movies, and various other sources in order to cover unconstrained environments. The dataset contains 6849 video clips in 51 action categories. Each class has at least 101 clips.

4.1.5. UCF101 Dataset

UCF101 [44] is a dataset of 13,320 videos covering 101 different action classes. It reflects a large diversity in the appearance of the humans performing the actions, the scale and viewpoint of the object, background clutter, and illumination variation, which makes it a highly challenging dataset. This dataset serves as a bridge toward real-time action recognition.

4.2. The Testing Strategy

The KTH dataset contains 600 video samples of six types of human actions. The dataset is divided into two parts: 80% and 20%. We used 10-fold cross-validation on the 80% part, leaving one fold out in each round, and held out the remaining 20% for testing. In each round, nine folds are used for training and the remaining fold is used as the validation set for optimizing the parameters of each classifier. The same testing strategy was applied to the Weizmann dataset. Leave-one-group-out cross-validation was used for the UCF11 dataset. For the HMDB51 dataset, the cross-validation strategy is the same as in [43]: the whole dataset is divided into three splits, each including 70 training and 30 testing video clips. For UCF101, the three-split technique is used for training and testing.
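The 80%/20% hold-out followed by 10-fold cross-validation on the 80% part can be sketched as follows. This is a minimal illustration using only the Python standard library; the function name `holdout_and_folds`, the random shuffling, and the seed are assumptions made here, and the sketch neither stratifies by action class nor groups clips by subject, which the actual protocol may do.

```python
import random


def holdout_and_folds(n_samples, test_fraction=0.2, n_folds=10, seed=0):
    """Split sample indices into a held-out test set and k (train, validation) pairs."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle (assumed, not from the paper)

    n_test = int(round(n_samples * test_fraction))
    test_idx = indices[:n_test]   # 20% part, never used during cross-validation
    dev_idx = indices[n_test:]    # 80% part used for training and validation

    # Deal the 80% part into n_folds folds of (almost) equal size.
    folds = [dev_idx[i::n_folds] for i in range(n_folds)]

    splits = []
    for k in range(n_folds):
        validation = folds[k]
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        splits.append((train, validation))
    return test_idx, splits


# 600 clips as in KTH: 120 held out, then ten rounds of 432 training / 48 validation clips.
test_idx, splits = holdout_and_folds(600)
print(len(test_idx), len(splits), len(splits[0][0]), len(splits[0][1]))
```

Classifier parameters would be tuned on the validation fold of each round, and the held-out 20% would be touched only once for the final accuracy.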

4.3. Experimental Setup

Experiments were performed on an Intel(R) Core(TM) i5 (2nd Gen) 2430M CPU @ 2.5 GHz with 6 GB of RAM and a 64-bit operating system. The names of the parameters and the values used in this proposed work are listed in Table 3 and Table 4, respectively. In this section, we examine the performance of our proposed approach and compare it with the state-of-the-art methods. We also compare different classifier performances with our feature extraction technique for the proposed framework. All confusion matrices address the average accuracy of all features for the SVM classifier with different kernel functions, as well as for the ANN with different numbers of hidden layers.

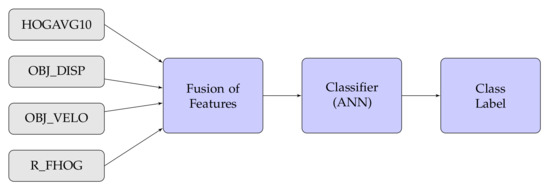

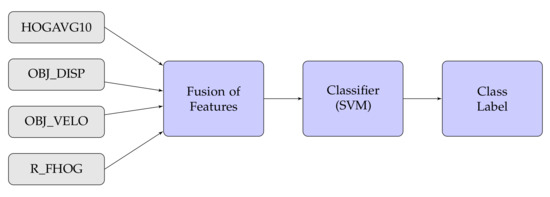

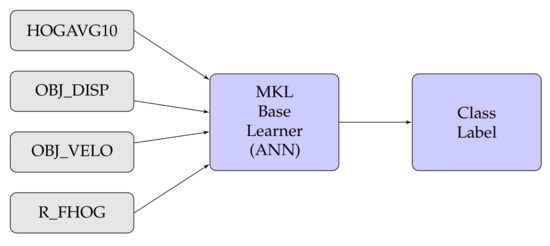

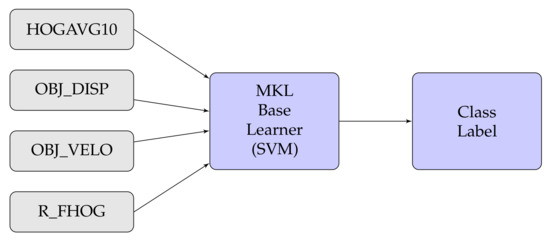

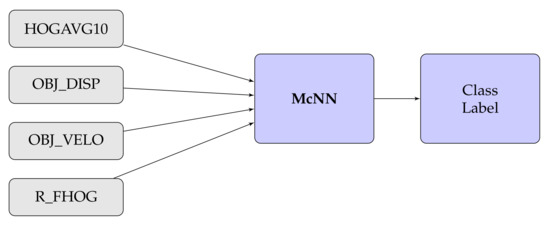

In this experiment, we have also considered different types of fusion techniques, i.e., early and late fusion. We have employed five fusion strategies in the proposed work. Figure 14, Figure 15, Figure 16, Figure 17, Figure 18 and Figure 19 present the various models of the early fusion and late fusion techniques used in our experiments. In Figure 14, early fusion is applied to the features, which are then fed to the ANN classifier; in Figure 15, the early-fused features are fed to the SVM classifier. The features are also provided to MKL with an ANN as the base learner and to MKL with an SVM as the base learner; these strategies are shown in Figure 16 and Figure 17, respectively. Figure 18 shows the combination of classifier scores using late fusion techniques, where the SVM classifier is used. The meta-cognitive neural network has been used with all proposed features, as shown in Figure 19.

Figure 14.

Early fusion with ANN.

Figure 15.

Early fusion with SVM.

Figure 16.

MKL with ANN.

Figure 17.

MKL with SVM.

Figure 18.

Late fusion.

Figure 19.

Meta-cognitive neural network.
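The difference between the early and late fusion models in the figures above can be made concrete with a short Python sketch. It is illustrative only: the function names and the toy numbers are invented here, and the late fusion step uses a plain average of classifier scores as a simple stand-in, not the fuzzy integral or DCNN fusion used in the experiments.

```python
def early_fusion(feature_blocks):
    """Early fusion: concatenate the per-feature vectors into one descriptor."""
    fused = []
    for block in feature_blocks:
        fused.extend(block)
    return fused


def late_fusion_mean(score_lists):
    """Late fusion stand-in: average the class scores of several classifiers.

    Returns the index of the winning class and the fused score vector.
    """
    n_classes = len(score_lists[0])
    fused = [sum(scores[c] for scores in score_lists) / len(score_lists)
             for c in range(n_classes)]
    best = max(range(n_classes), key=lambda c: fused[c])
    return best, fused


# Toy feature blocks standing in for HOG, Fourier HOG, and displacement/velocity.
hog, fourier_hog, motion = [0.2, 0.7], [0.1], [1.5, 0.3]
descriptor = early_fusion([hog, fourier_hog, motion])
print(descriptor)  # one vector that a single classifier would consume

# Toy class scores from three classifiers, each trained on one feature block.
scores = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.5, 0.4, 0.1]]
label, fused_scores = late_fusion_mean(scores)
print(label)
```

In early fusion a single classifier sees the concatenated descriptor, whereas in late fusion each feature block gets its own classifier and only the resulting scores are combined.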

4.4. Empirical Analysis

The confusion matrices for different combinations of feature extraction and classification techniques on the KTH dataset are shown in Table 6 and Table 7. Table 8, Table 9 and Table 10 show the results for the Weizmann dataset. We have considered linear, polynomial, and radial basis kernel functions for the SVM classification. The results demonstrate that we obtain a good result (97%) with the radial basis function SVM and the best result (99.98%) with late fusion using the fuzzy integral approach, compared with the other proposed approaches. Ambiguity arises among the boxing, hand waving, and hand clapping classes. Furthermore, running, walking, and jogging are misclassified by all classifiers.

Table 6.

Confusion matrix for SVM classifier with different kernel functions for KTH dataset.

Table 7.

Confusion matrix for the neural network with different numbers of hidden layers for KTH dataset.

Table 8.

Confusion matrix for SVM classifier with different kernel functions for Weizmann dataset.

Table 9.

Confusion matrix for SVM classifier with different kernel functions for Weizmann dataset.

Table 10.

Confusion matrix for the neural network with different numbers of hidden layers for Weizmann dataset.
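For readers who want to reproduce this kind of table, the sketch below shows how a confusion matrix and the overall accuracy are computed from true and predicted labels. The helper names and the six toy labels are made up for illustration and are not results from the experiments; rows are true classes and columns are predicted classes.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Count how often each true class (row) is predicted as each class (column)."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for true, predicted in zip(true_labels, predicted_labels):
        matrix[index[true]][index[predicted]] += 1
    return matrix


def accuracy(matrix):
    """Overall accuracy: correctly classified samples (diagonal) over all samples."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


# Toy labels only, chosen to mimic the walking/jogging/running confusion.
classes = ["walking", "jogging", "running"]
true = ["walking", "walking", "jogging", "jogging", "running", "running"]
pred = ["walking", "jogging", "jogging", "jogging", "running", "jogging"]

cm = confusion_matrix(true, pred, classes)
for name, row in zip(classes, cm):
    print(name, row)
print(round(accuracy(cm), 3))
```

Off-diagonal entries in a row show which other actions a class is confused with, which is how the ambiguity between similar actions is read from the tables.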

The confusion matrix of the radial basis function SVM (RBF SVM) for the UCF11 dataset is shown in Table 11. The proposed approach achieved 77.05% accuracy on the UCF11 dataset with the SVM classifier parameters listed in Table 4. Table 12, Table 13 and Table 14 show the confusion matrices for the KTH, Weizmann, and UCF11 datasets using McNN. The UCF11 dataset has unconstrained environments and contains various challenges in its video sequences, so the proposed feature extraction technique is not adequate for describing the action performed by the human subject. Consequently, many actions are misclassified as other actions; for example, Shoot is misclassified as Swing.

Table 11.

Confusion matrix for RBF SVM for UCF11 dataset.

Table 12.

Confusion matrix of McNN for KTH dataset.

Table 13.

Confusion matrix of McNN for Weizmann dataset.

Table 14.

Confusion matrix of McNN for UCF11 dataset.

The accuracy obtained for the KTH dataset is 99.19% with late fusion using DCNN and 99.98% with late fusion using the fuzzy integral, i.e., the fuzzy integral is more effective than DCNN as a late fusion technique, as shown in Table 15.

Table 15.

State-of-the-Art Comparison of Accuracy of Proposed Approaches for KTH dataset.

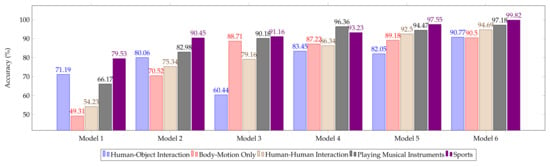

Moreover, the performance on five broad groups of action categories is evaluated for each model, as shown in Figure 20. The recognition rate has been calculated for every group. A large portion of the performance is gained from the sports category, although all other categories also perform impressively.

Figure 20.

Accuracy comparison of different models on UCF101 dataset action categories.

Table 15 and Table 16 compare our results with the state-of-the-art methods. Table 15 compares our proposed approach with 21 other approaches that used the KTH dataset. Our approach obtained an accuracy of 100%, which outperforms the state-of-the-art methods. The comparison with the state-of-the-art methods for the Weizmann dataset is shown in Table 16. The result shows that our method outperforms the other methods. These comparisons demonstrate that the proposed approach is effective and superior in classifying actions.

Table 16.

State-of-the-Art Comparison of Accuracy of Proposed Approaches for Weizmann dataset.

Table 17, Table 18 and Table 19 show the state-of-the-art comparison for the UCF11, HMDB-51, and UCF101 datasets, respectively. Our results show a very good classification rate compared with other approaches, but they are lower than the state-of-the-art results. Compared with the early fusion and intermediate fusion techniques, the late fusion techniques are superior. Among the late fusion techniques, the fuzzy integral performs better than the DCNN late fusion technique for the UCF11 dataset.

Table 17.

State-of-the-Art Comparison of Accuracy of Proposed Approaches for UCF11 dataset.

Table 18.

State-of-the-Art Comparison of Accuracy of Proposed Approaches for HMDB-51 dataset.

Table 19.

State-of-the-Art Comparison of Accuracy of Proposed Approaches for UCF101 dataset.

In Table 20, we compare our approach with various convolutional neural network architectures. For this comparison, the average accuracy has been calculated over three splits, as in the original setting. For the UCF101 dataset, we find that our McNN with the proposed features performs well compared with the state-of-the-art methods, with a 1% improvement in classification accuracy. Our result for the HMDB51 dataset is not the best, but the improvement in accuracy is considerable.

Table 20.

Classification accuracy against the state-of-the-art on HMDB51 and UCF101 datasets averaged over three splits with CNN architectures.

5. Conclusions

In this paper, we have presented a novel feature fusion approach for HAR. HOG, R_FHOG, displacement, and velocity features are combined to prepare the feature descriptor in this approach. The classifiers used to classify human actions are an ANN, an SVM, MKL, a late fusion approach, and McNN. The experimental results demonstrate that the proposed approach can easily recognize actions such as running, walking, and jumping. The McNN outperforms the other classifiers. The proposed approach performs reasonably well compared with the majority of existing state-of-the-art methods. For the KTH dataset, our proposed approach outperforms existing methods, and for the Weizmann dataset, our approach performs similarly to standard available methods. We have also checked the system performance on the unconstrained UCF11, HMDB51, and UCF101 datasets, where its performance is close to that of the state-of-the-art methods.

In the future, an overlapping window can be utilized in the feature extraction technique to increase the accuracy of the proposed method. The proposed work focuses mainly on constrained video; however, the proposed feature set can also be used for unconstrained video, where more than one object in the video performs the same action or multiple actions are performed. The traditional neural network can be replaced by a convolutional neural network for further enhancement. We conclude that the fusion of features is a vital idea for enhancing the performance of the classifier when a large, complex set of features is available. Late fusion was found to be better than early fusion, as the features are used by multiple competing classifiers whose outputs are then combined.

Author Contributions

Conception and design, C.I.P., D.L., S.P.; collection and assembly of data, C.I.P., D.L., S.P., K.M., H.G., M.A.; data analysis and interpretation, C.I.P., D.L., S.P., K.M., H.G., and M.A.; manuscript writing, C.I.P., D.L., S.P., K.M., H.G., and M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors would like to thank the reviewers for their valuable suggestions which helped in improving the quality of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hu, F.; Hao, Q.; Sun, Q.; Cao, X.; Ma, R.; Zhang, T.; Patil, Y.; Lu, J. Cyber-physical System With Virtual Reality for Intelligent Motion Recognition and Training. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 347–363. [Google Scholar]

- Wang, L.; Hu, W.; Tan, T. Recent developments in human motion analysis. Pattern Recognit. 2003, 36, 585–601. [Google Scholar] [CrossRef]

- Vallacher, R.R.; Wegner, D.M. What do people think they’re doing? Action identification and human behavior. Psychol. Rev. 1987, 94, 3–15. [Google Scholar] [CrossRef]

- Pullen, K.; Bregler, C. Motion capture assisted animation: Texturing and synthesis. ACM Trans. Graph. 2002, 21, 501–508. [Google Scholar] [CrossRef]

- Mackay, W.E.; Davenport, G. Virtual video editing in interactive multimedia applications. Commun. ACM 1989, 32, 802–810. [Google Scholar] [CrossRef]

- Zhong, H.; Shi, J.; Visontai, M. Detecting unusual activity in video. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; Volume 2. [Google Scholar]

- Fan, C.T.; Wang, Y.K.; Huang, C.R. Heterogeneous information fusion and visualization for a large-scale intelligent video surveillance system. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 593–604. [Google Scholar] [CrossRef]

- Filippova, K.; Hall, K.B. Improved video categorization from text meta-data and user comments. In Proceedings of the 34th International ACM SIGIR Conference on Research and Development in Information Retrieval, Beijing, China, 25–29 July 2011; pp. 835–842. [Google Scholar]

- Moxley, E.; Mei, T.; Manjunath, B.S. Video annotation through search and graph reinforcement mining. IEEE Trans. Multimed. 2010, 12, 184–193. [Google Scholar] [CrossRef]

- Peng, Q.; Cheung, Y.M.; You, X.; Tang, Y.Y. A Hybrid of Local and Global Saliencies for Detecting Image Salient Region and Appearance. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 86–97. [Google Scholar] [CrossRef]

- Wang, H.; Kläser, A.; Schmid, C.; Liu, C.L. Action recognition by dense trajectories. In Proceedings of the CVPR 2011, Providence, RI, USA, 20–25 June 2011; pp. 3169–3176. [Google Scholar]

- Wang, H.; Schmid, C. Action recognition with improved trajectories. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3551–3558. [Google Scholar]

- Ni, B.; Moulin, P.; Yang, X.; Yan, S. Motion part regularization: Improving action recognition via trajectory selection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3698–3706. [Google Scholar]

- Lan, Z.; Lin, M.; Li, X.; Hauptmann, A.G.; Raj, B. Beyond Gaussian pyramid: Multi-skip feature stacking for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 204–212. [Google Scholar]

- Liu, L.; Shao, L.; Li, X.; Lu, K. Learning spatio-temporal representations for action recognition: A genetic programming approach. IEEE Trans. Cybern. 2016, 46, 158–170. [Google Scholar] [CrossRef]

- Li, H.; Chen, J.; Xu, Z.; Chen, H.; Hu, R. Multiple instance discriminative dictionary learning for action recognition. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2014–2018. [Google Scholar]

- Beaudry, C.; Péteri, R.; Mascarilla, L. An efficient and sparse approach for large scale human action recognition in videos. Mach. Vis. Appl. 2016, 27, 529–543. [Google Scholar] [CrossRef]

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Skeleton-based action recognition with directed graph neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7912–7921. [Google Scholar]

- Ahsan, U.; Sun, C.; Essa, I. DiscrimNet: Semi-Supervised Action Recognition from Videos using Generative Adversarial Networks. arXiv 2018, arXiv:1801.07230. [Google Scholar]

- Zheng, Y.; Yao, H.; Sun, X.; Zhao, S.; Porikli, F. Distinctive action sketch for human action recognition. Signal Process. 2018, 144, 323–332. [Google Scholar] [CrossRef]

- Li, Z.; Gavrilyuk, K.; Gavves, E.; Jain, M.; Snoek, C.G.M. VideoLSTM convolves, attends and flows for action recognition. Comput. Vis. Image Underst. 2018, 166, 41–50. [Google Scholar] [CrossRef]

- Zhang, H.-B.; Zhang, Y.-X.; Zhong, B.; Lei, Q.; Yang, L.; Du, J.-X.; Chen, D.-S. A comprehensive survey of vision-based human action recognition methods. Sensors 2019, 19, 1005. [Google Scholar] [CrossRef] [PubMed]

- Patel, C.I.; Garg, S. Comparative analysis of traditional methods for moving object detection in video sequence. Int. J. Comput. Sci. Commun. 2015, 6, 309–315. [Google Scholar]

- Patel, C.I.; Patel, R. Illumination invariant moving object detection. Int. J. Comput. Electr. Eng. 2013, 5, 73. [Google Scholar] [CrossRef]

- Spagnolo, P.; D’Orazio, T.; Leo, M.; Distante, A. Advances in background updating and shadow removing for motion detection algorithms. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Versailles, France, 5–8 September 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 398–406. [Google Scholar]

- Patel, C.I.; Patel, R. Gaussian mixture model based moving object detection from video sequence. In Proceedings of the International Conference & Workshop on Emerging Trends in Technology, Maharashtra, India, 25–26 February 2011; pp. 698–702. [Google Scholar]

- Mondéjar-Guerra, M.V.; Rouco, J.; Novo, J.; Ortega, M. An end-to-end deep learning approach for simultaneous background modeling and subtraction. In Proceedings of the BMVC, Cardiff, UK, 9–12 September 2019; p. 266. [Google Scholar]

- Patel, C.I.; Garg, S.; Zaveri, T.; Banerjee, A. Top-Down and bottom-up cues based moving object detection for varied background video sequences. Adv. Multimed. 2014, 2014, 879070. [Google Scholar] [CrossRef]

- Patel, C.I.; Garg, S. Robust face detection using fusion of haar and daubechies orthogonal wavelet template. Int. J. Comput. Appl. 2012, 46, 38–44. [Google Scholar]

- Ukani, V.; Garg, S.; Patel, C.; Tank, H. Efficient vehicle detection and classification for traffic surveillance system. In Proceedings of the International Conference on Advances in Computing and Data Sciences, Ghaziabad, India, 11–12 November 2016; Springer: Singapore, 2016; pp. 495–503. [Google Scholar]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893. [Google Scholar]

- Liu, K.; Skibbe, H.; Schmidt, T.; Blein, T.; Palme, K.; Brox, T.; Ronneberger, O. Rotation-invariant hog descriptors using Fourier analysis in polar and spherical coordinates. Int. J. Comput. 2014, 106, 342–364. [Google Scholar] [CrossRef]

- Lee, D.S.; Srihari, S.N. A theory of classifier combination: The neural network approach. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; Volume 1, pp. 42–45. [Google Scholar]

- Sugeno, M. Theory of Fuzzy Integrals and Its Applications. Ph.D. Thesis, Tokyo Institute of Technology, Tokyo, Japan, 1975. [Google Scholar]

- Cho, S.B.; Kim, J.H. Combining multiple neural networks by fuzzy integral for robust classification. IEEE Trans. Syst. Man Cybern. 1995, 25, 380–384. [Google Scholar]

- Patel, J.; Shah, S.; Thakkar, P.; Kotecha, K. Predicting stock market index using fusion of machine learning techniques. Expert Syst. Appl. 2015, 42, 2162–2172. [Google Scholar] [CrossRef]

- Flavell, J.H. Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry. Am. Psychol. 1979, 34, 906. [Google Scholar] [CrossRef]

- Nelson, T.O. Metamemory: A theoretical framework and new findings. Psychol. Learn. Mot. 1990, 26, 125–173. [Google Scholar]

- Babu, G.S.; Suresh, S. Meta-cognitive neural network for classification problems in a sequential learning framework. Neurocomputing 2012, 81, 86–96. [Google Scholar] [CrossRef]

- Schuldt, C.; Laptev, I.; Caputo, B. Recognizing Human Actions: A Local SVM Approach. In Proceedings of the 17th International Conference on Pattern Recognition, (ICPR’04), Cambridge, UK, 26 August 2004; Volume 3. [Google Scholar]

- Gorelick, L.; Blank, M.; Shechtman, E.; Irani, M.; Basri, R. Actions as Space-Time Shapes. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 2247–2253. [Google Scholar] [CrossRef]

- Liu, J.; Luo, J.; Shah, M. Recognizing realistic actions from videos “in the wild”. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1996–2003. [Google Scholar]

- Kuehne, H.; Jhuang, H.; Stiefelhagen, R.; Serre, T. HMDB51: A large video database for human motion recognition. In Proceedings of the High Performance Computing in Science and Engineering ’12, Barcelona, Spain, 6–13 November 2011; pp. 571–582. [Google Scholar]

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Action Classes from Videos in the Wild. arXiv 2012, arXiv:1212.0402. [Google Scholar]

- Dollár, P.; Rabaud, V.; Cottrell, G.; Belongie, S. Behavior recognition via sparse spatio-temporal features. In Proceedings of the 2005 IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, Beijing, China, 15–16 October 2005; pp. 65–72. [Google Scholar]

- Jiang, H.; Drew, M.S.; Li, Z.N. Successive convex matching for action detection. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; pp. 1646–1653. [Google Scholar]

- Niebles, J.C.; Wang, H.; Fei-Fei, L. Unsupervised Learning of Human Action Categories Using Spatial-Temporal Words. Int. J. Comput. Vision 2008, 79, 299–318. [Google Scholar] [CrossRef]

- Yeo, C.; Ahammad, P.; Ramchandran, K.; Sastry, S.S. Compressed Domain Real-time Action Recognition. In Proceedings of the 2006 IEEE 8th Workshop on Multimedia Signal Processing, Victoria, BC, Canada, 3–6 October 2006; pp. 33–36. [Google Scholar]

- Ke, Y.; Sukthankar, R.; Hebert, M. Spatio-temporal shape and flow correlation for action recognition. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007. [Google Scholar]

- Kim, T.K.; Wong, S.F.; Cipolla, R. Tensor canonical correlation analysis for action classification. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007. [Google Scholar]

- Jhuang, H.; Serre, T.; Wolf, L.; Poggio, T. A biologically inspired system for action recognition. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8. [Google Scholar]

- Laptev, I.; Marszalek, M.; Schmid, C.; Rozenfeld, B. Learning realistic human actions from movies. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008. [Google Scholar]

- Rapantzikos, K.; Avrithis, Y.; Kollias, S. Dense saliency-based spatio-temporal feature points for action recognition. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1454–1461. [Google Scholar]

- Bregonzio, M.; Gong, S.; Xiang, T. Recognizing action as clouds of space-time interest points. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1948–1955. [Google Scholar]

- Klaser, A.; Marszałek, M.; Schmid, C. A spatio-temporal descriptor based on 3d-gradients. In Proceedings of the BMVC 2008—19th British Machine Vision Conference, Leeds, UK, 7–10 September 2008. [Google Scholar]

- Fathi, A.; Mori, G. Action recognition by learning mid-level motion features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008. [Google Scholar]

- Le, Q.V.; Zou, W.Y.; Yeung, S.Y.; Ng, A.Y. Learning hierarchical invariant spatio-temporal features for action recognition with independent subspace analysis. In Proceedings of the CVPR 2011, Providence, RI, USA, 20–25 June 2011; pp. 3361–3368. [Google Scholar]

- Kovashka, A.; Grauman, K. Learning a hierarchy of discriminative space-time neighborhood features for human action recognition. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2046–2053. [Google Scholar]

- Yeffet, L.; Wolf, L. Local trinary patterns for human action recognition. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 492–497. [Google Scholar]

- Wang, H.; Klaser, A.; Schmid, C.; Liu, C.L. Dense trajectories and motion boundary descriptors for action Recognition. Int. J. Comput. Vis. 2013, 103, 60–79. [Google Scholar] [CrossRef]

- Grundmann, M.; Meier, F.; Essa, I. 3D shape context and distance transform for action recognition. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–4. [Google Scholar]

- Weinland, D.; Boyer, E. Action recognition using exemplar-based embedding. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–7. [Google Scholar]

- Hoai, M.; Lan, Z.Z.; De la Torre, F. Joint segmentation and classification of human actions in video. In Proceedings of the CVPR 2011, Providence, RI, USA, 20–25 June 2011; pp. 3265–3272. [Google Scholar]

- Ballan, L.; Bertini, M.; Del Bimbo, A.; Seidenari, L.; Serra, G. Recognizing human actions by fusing spatio-temporal appearance and motion descriptors. In Proceedings of the International Conference on Image Processing, Cairo, Egypt, 7–10 November 2009; pp. 3569–3572. [Google Scholar]

- Wang, Y.; Mori, G. Learning a discriminative hidden part model for human action recognition. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–11 December 2009; pp. 1721–1728. [Google Scholar]

- Chen, C.C.; Aggarwal, J.K. Recognizing human action from a far field of view. In Proceedings of the 2009 Workshop on Motion and Video Computing (WMVC), Snowbird, UT, USA, 8–9 December 2009; pp. 1–7. [Google Scholar]

- Vezzani, R.; Baltieri, D.; Cucchiara, R. HMM based action recognition with projection histogram features. In Proceedings of the Recognizing Patterns in Signals, Speech, Images and Videos, Istanbul, Turkey, 23–26 August 2010; pp. 286–293. [Google Scholar]

- Dhillon, P.S.; Nowozin, S.; Lampert, C.H. Combining appearance and motion for human action classification in videos. In Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops, Miami, FL, USA, 20–25 June 2009; pp. 22–29. [Google Scholar]

- Lin, Z.; Jiang, Z.; Davis, L.S. Recognizing actions by shape-motion prototype trees. In Proceedings of the International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 444–451. [Google Scholar]

- Natarajan, P.; Singh, V.K.; Nevatia, R. Learning 3d action models from a few 2d videos for view invariant action recognition. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2006–2013. [Google Scholar]

- Yang, M.; Lv, F.; Xu, W.; Yu, K.; Gong, Y. Human action detection by boosting efficient motion features. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, Kyoto, Japan, 27 September–4 October 2009; pp. 522–529. [Google Scholar]

- Liu, J.; Shah, M. Learning human actions via information maximization. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8. [Google Scholar]

- Ikizler-Cinbis, N.; Sclaroff, S. Object, scene and actions: Combining multiple features for human action Recognition. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 494–507. [Google Scholar]

- Mota, V.F.; Perez, E.D.A.; Maciel, L.M.; Vieira, M.B.; Gosselin, P.H. A tensor motion descriptor based on histograms of gradients and optical flow. Pattern Recognit. Lett. 2014, 39, 85–91. [Google Scholar] [CrossRef]

- Sad, D.; Mota, V.F.; Maciel, L.M.; Vieira, M.B.; De Araujo, A.A. A tensor motion descriptor based on multiple gradient estimators. In Proceedings of theConference on Graphics, Patterns and Images, Arequipa, Peru, 5–8 August 2013; pp. 70–74. [Google Scholar]

- Figueiredo, A.M.; Maia, H.A.; Oliveira, F.L.; Mota, V.F.; Vieira, M.B. A video tensor self-descriptor based on block matching. In Proceedings of the International Conference on Computational Science and Its Applications, Guimarães, Portugal, 30 June–3 July 2014; pp. 401–414.

- Hasan, M.; Roy-Chowdhury, A.K. Incremental activity modeling and recognition in streaming videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 796–803.

- Kihl, O.; Picard, D.; Gosselin, P.H. Local polynomial space-time descriptors for action classification. Mach. Vis. Appl. 2016, 27, 351–361.

- Maia, H.A.; Figueiredo, A.M.D.O.; De Oliveira, F.L.M.; Mota, V.F.; Vieira, M.B. A video tensor self-descriptor based on variable size block matching. J. Mob. Multimed. 2015, 11, 90–102.

- Patel, C.I.; Garg, S.; Zaveri, T.; Banerjee, A.; Patel, R. Human action recognition using fusion of features for unconstrained video sequences. Comput. Electr. Eng. 2018, 70, 284–301.

- Kliper-Gross, O.; Gurovich, Y.; Hassner, T.; Wolf, L. Motion interchange patterns for action recognition in unconstrained videos. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 256–269.

- Can, E.F.; Manmatha, R. Formulating action recognition as a ranking problem. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 23–28 June 2013; pp. 251–256.

- Liu, W.; Zha, Z.J.; Wang, Y.; Lu, K.; Tao, D. p-Laplacian regularized sparse coding for human activity recognition. IEEE Trans. Ind. Electron. 2016, 63, 5120–5129.

- Lan, Z.; Zhu, Y.; Hauptmann, A.G.; Newsam, S. Deep local video feature for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1–7.

- Zhu, J.; Zhu, Z.; Zou, W. End-to-end video-level representation learning for action recognition. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 645–650.

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 568–576.

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Li, F.-F. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

- Donahue, J.; Hendricks, L.A.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Sun, L.; Jia, K.; Yeung, D.-Y.; Shi, B.E. Human action recognition using factorized spatio-temporal convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4597–4605.

- Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1933–1941.

- Zhang, B.; Wang, L.; Wang, Z.; Qiao, Y.; Wang, H. Real-time action recognition with enhanced motion vector CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2718–2726.

- Cherian, A.; Fernando, B.; Harandi, M.; Gould, S. Generalized rank pooling for activity recognition. arXiv 2017, arXiv:1704.02112.

- Seo, J.-J.; Kim, H.-I.; de Neve, W.; Ro, Y.M. Effective and efficient human action recognition using dynamic frame skipping and trajectory rejection. Image Vis. Comput. 2017, 58, 76–85.

- Shi, Y.; Tian, Y.; Wang, Y.; Huang, T. Sequential deep trajectory descriptor for action recognition with three-stream CNN. IEEE Trans. Multimed. 2017, 19, 1510–1520.

- Wang, J.; Cherian, A.; Porikli, F. Ordered pooling of optical flow sequences for action recognition. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 168–176.

- Zhu, Y.; Lan, Z.; Newsam, S.; Hauptmann, A. Hidden two-stream convolutional networks for action recognition. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; pp. 363–378.

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the Kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4724–4733.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).