EEG-Based Emotion Classification for Alzheimer’s Disease Patients Using Conventional Machine Learning and Recurrent Neural Network Models

Abstract

1. Introduction

2. Background

2.1. Dementia

- Definite dementia: 19 or less.

- Questionable dementia: between 20 and 23.

- Definite non-dementia: 24 or more.
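The screening thresholds can be expressed as a small lookup. The sketch below assumes an integer (K-)MMSE total score between 0 and 30 and uses the bins from the participant table (≤19, 20–23, ≥24). The function name `mmse_category` is hypothetical and is not taken from the authors' code.

```python
def mmse_category(score: int) -> str:
    """Map an MMSE total score (0-30) to a screening category.

    Bins follow the participant table: <=19, 20-23, >=24.
    """
    if not 0 <= score <= 30:
        raise ValueError("MMSE total score must be between 0 and 30")
    if score <= 19:
        return "definite dementia"
    if score <= 23:
        return "questionable dementia"
    return "definite non-dementia"


if __name__ == "__main__":
    for s in (15, 19, 20, 23, 24, 30):
        print(s, mmse_category(s))
```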

2.2. Emotion Classification

2.2.1. Emotion Classification on Healthy People

2.2.2. Emotional Classification of Patients with Neurological Disorders

2.2.3. Summary

3. Data Collection

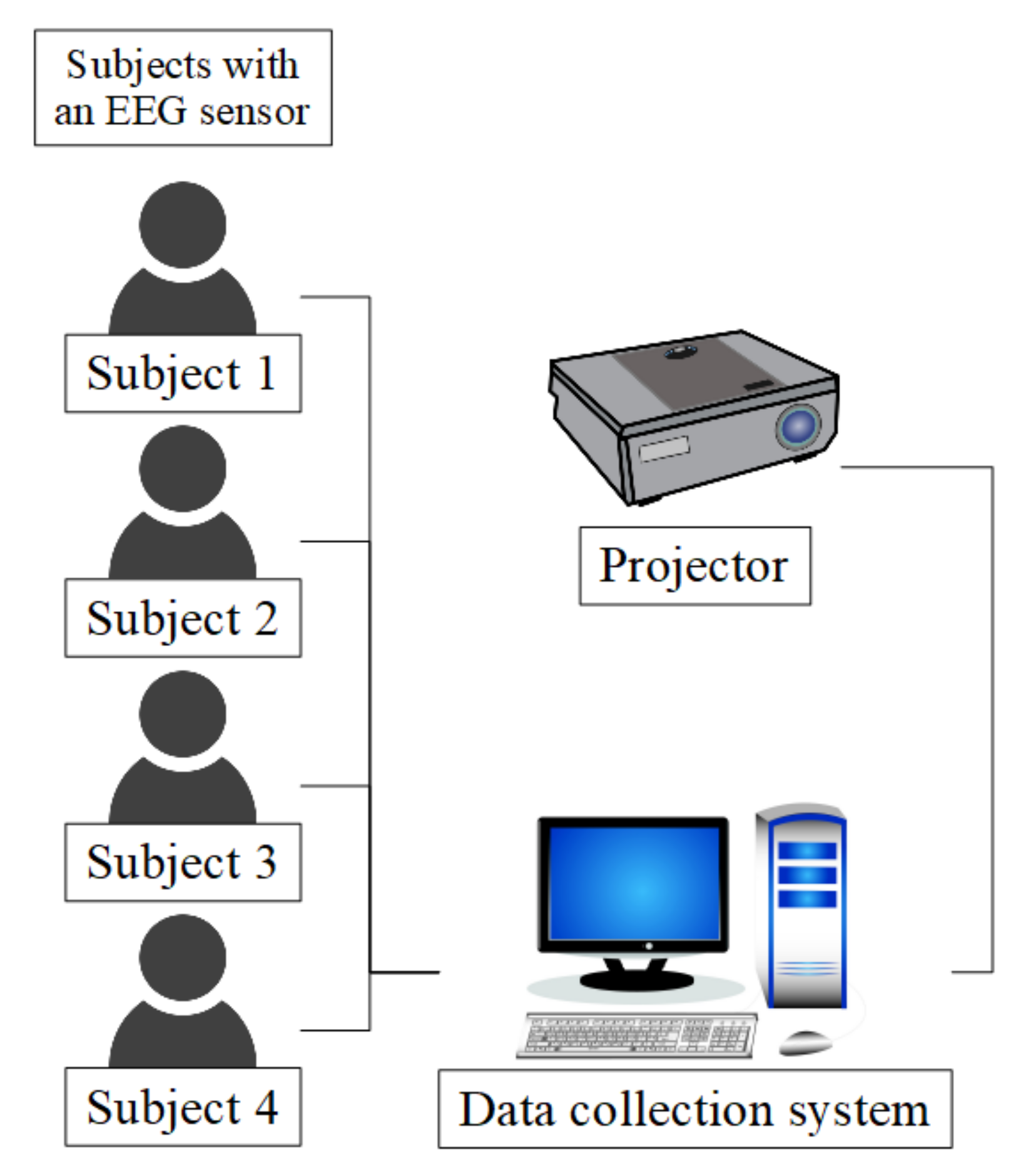

3.1. Participants

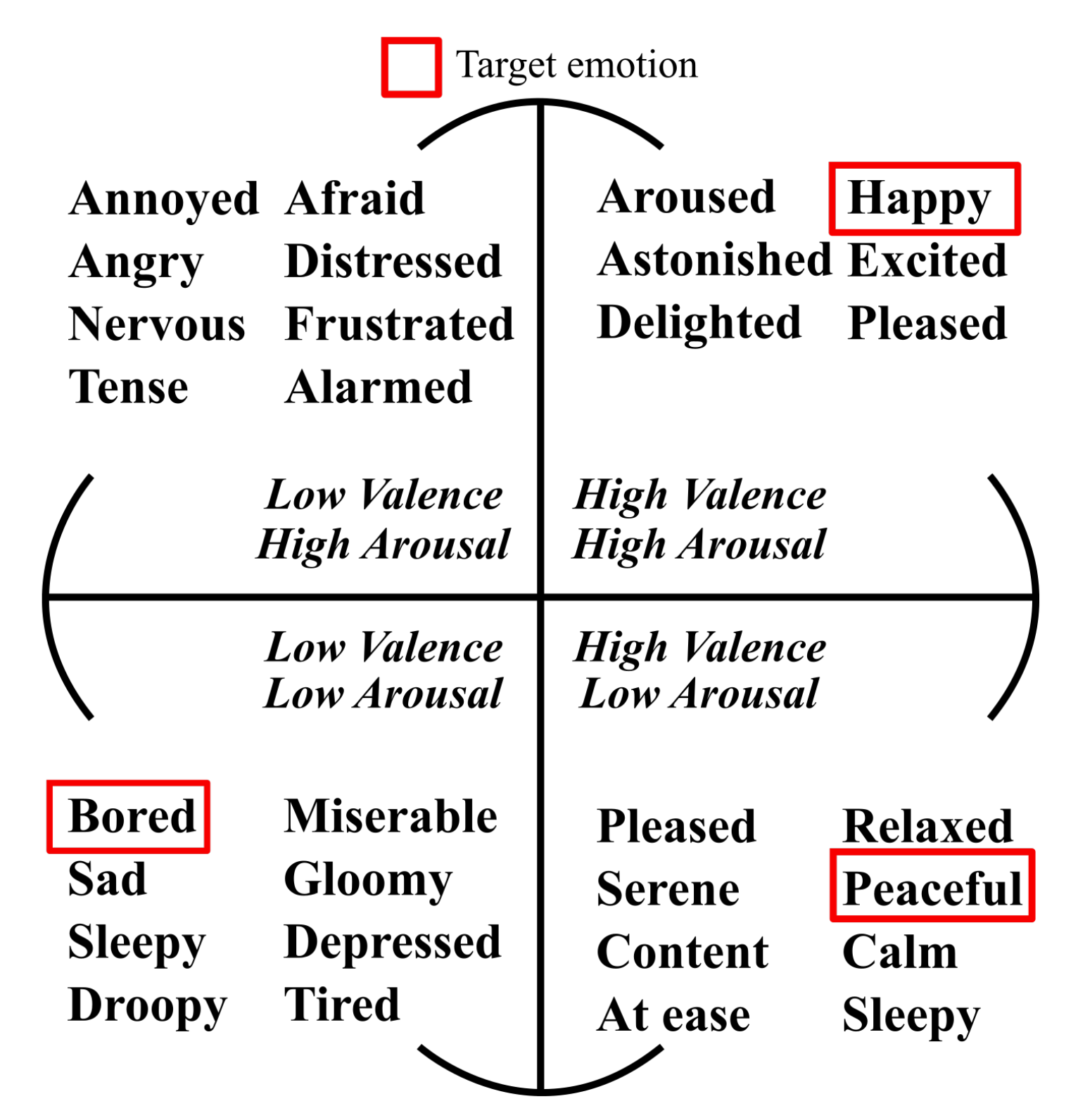

3.2. Target Emotions

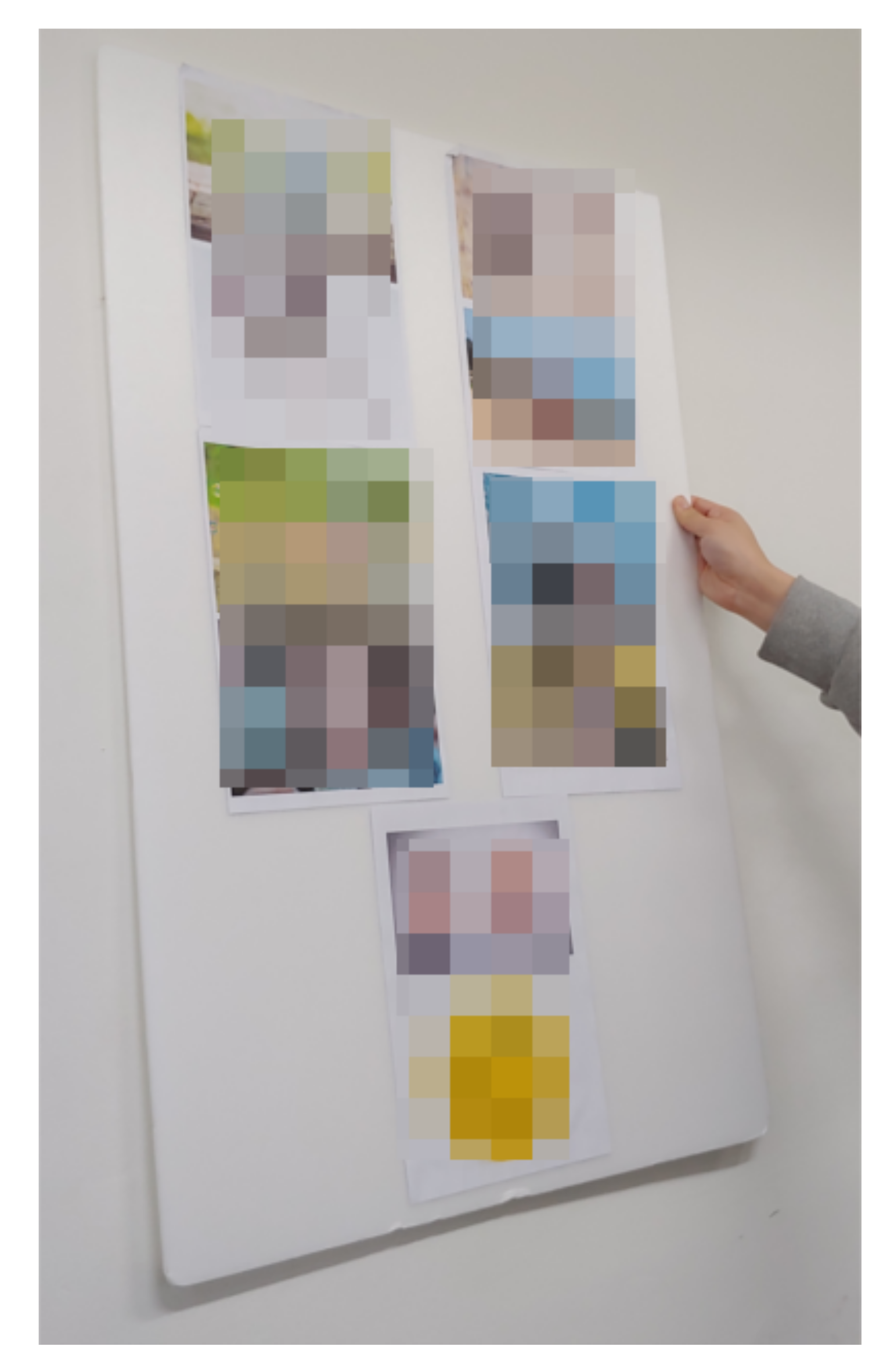

3.3. Stimuli

3.4. Sensor

- Delta (1–4 Hz).

- Theta (4–8 Hz).

- Alpha (7.5–13 Hz).

- Beta (13–30 Hz).

- Gamma (30–44 Hz).
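The sketch below shows how per-band power can be obtained from a single EEG channel, using the band edges listed above. It is a generic periodogram built on a naive DFT so that it needs only the standard library. It is not the algorithm behind the Muse headband's band-power outputs. The 256 Hz sampling rate in the usage example is an assumption. Because alpha (7.5–13 Hz) overlaps theta (4–8 Hz), a frequency bin in the overlap counts toward both bands.

```python
import math

# Band edges (Hz) as listed above; intervals are treated as [low, high).
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (7.5, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 44.0),
}


def band_powers(signal, fs):
    """Sum periodogram power of `signal` (sampled at `fs` Hz) inside each band."""
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]  # remove the DC offset
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        hits = [name for name, (lo, hi) in BANDS.items() if lo <= freq < hi]
        if not hits:
            continue  # skip bins outside 1-44 Hz
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        power = (re * re + im * im) / n
        for name in hits:
            powers[name] += power
    return powers


if __name__ == "__main__":
    fs = 256  # assumed sampling rate
    # One second of a 10 Hz (alpha) tone plus a weaker 20 Hz (beta) tone.
    sig = [math.sin(2 * math.pi * 10 * t / fs) + 0.3 * math.sin(2 * math.pi * 20 * t / fs)
           for t in range(fs)]
    print(band_powers(sig, fs))
```

In practice, Welch averaging or a log transform is often applied on top of this. Those choices are not specified here.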

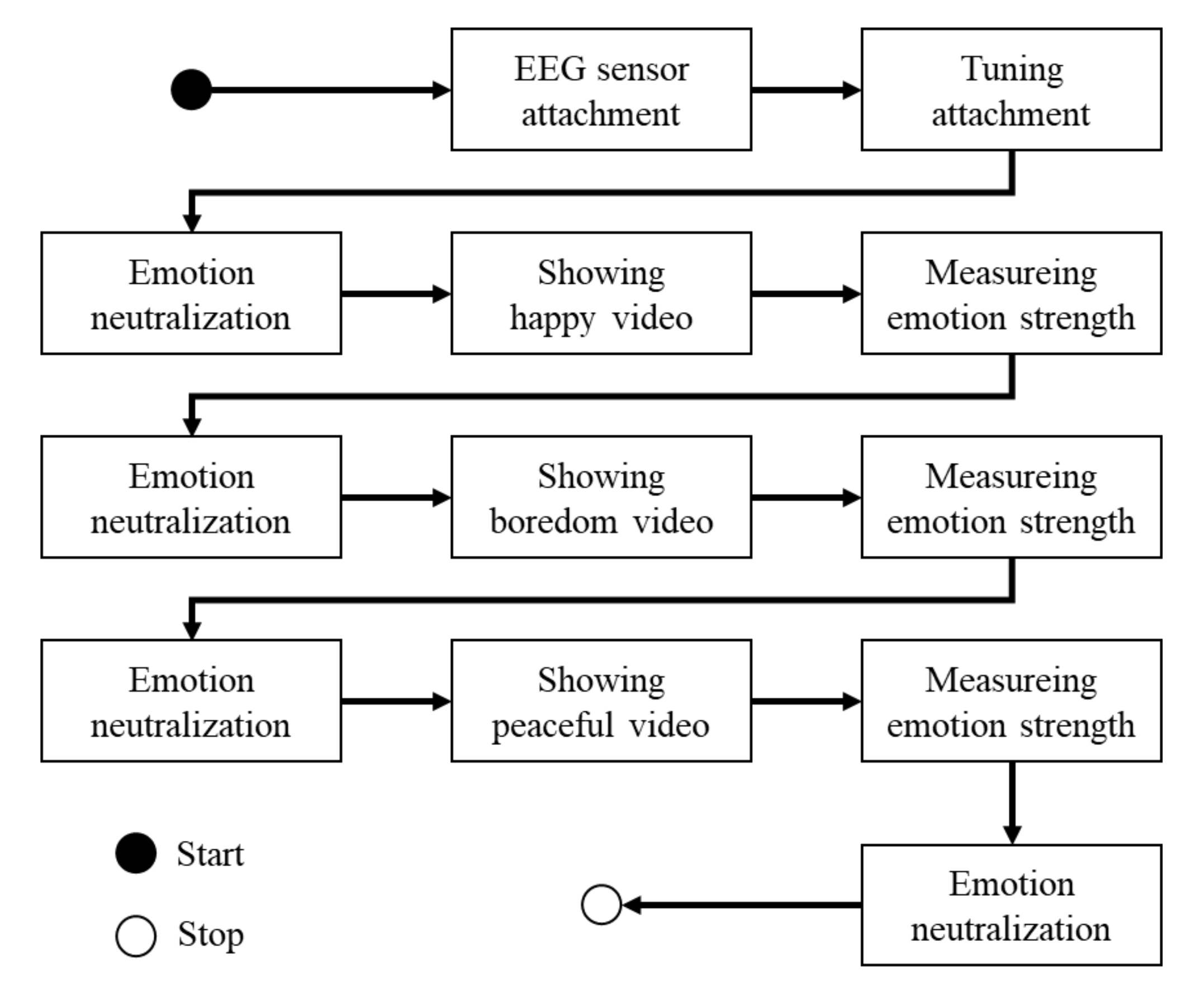

3.5. Protocol

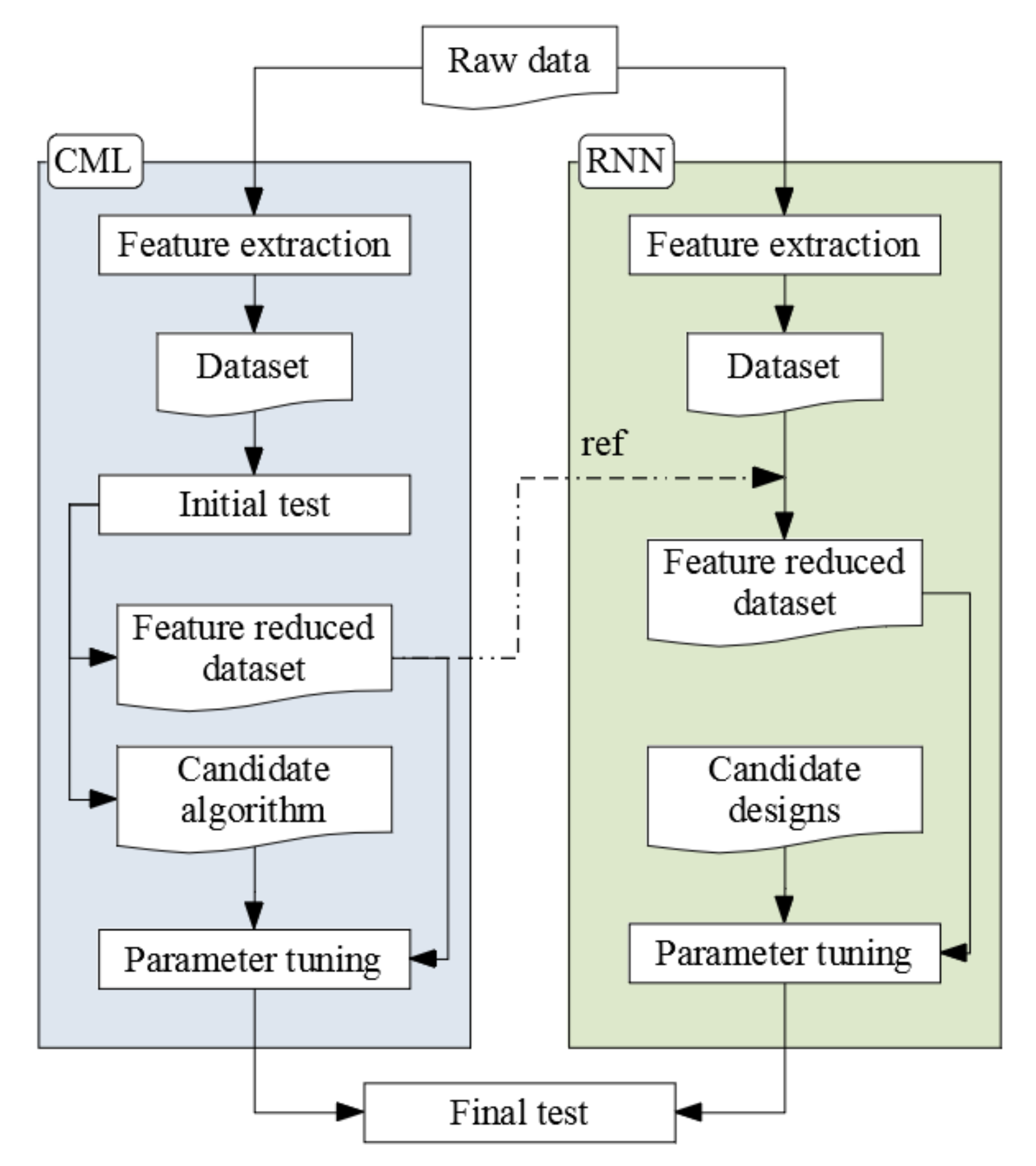

4. Modeling

4.1. Feature Extraction

- Absolute band power (ABP): mean and standard deviation (std) per frequency band and electrode.

- Differential entropy (DE): per frequency band and electrode.

- Rational asymmetry (RASM): per frequency band.

- Differential asymmetry (DASM): per frequency band.
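The DE, DASM, and RASM features can be sketched with the common definitions from Zheng and Lu [27]. The sketch assumes a band-filtered segment is Gaussian, which gives DE = ½ ln(2πeσ²). DASM is taken as DE(left) − DE(right) and RASM as DE(left) / DE(right). Pairing the left and right electrodes as AF7/AF8 is an assumption based on the selected-feature table, and the function names are hypothetical.

```python
import math
import statistics


def differential_entropy(samples):
    """DE of a band-filtered segment under a Gaussian assumption:
    0.5 * ln(2 * pi * e * variance)."""
    var = statistics.pvariance(samples)
    return 0.5 * math.log(2 * math.pi * math.e * var)


def asymmetry_features(left, right):
    """DASM (difference) and RASM (ratio) of DE for one band and one
    left/right electrode pair, e.g. AF7 (left) and AF8 (right)."""
    de_left = differential_entropy(left)
    de_right = differential_entropy(right)
    return {"DASM": de_left - de_right, "RASM": de_left / de_right}


if __name__ == "__main__":
    af7 = [1.0, -1.0, 1.0, -1.0]  # toy gamma-band segment, variance 1
    af8 = [2.0, -2.0, 2.0, -2.0]  # toy gamma-band segment, variance 4
    print(asymmetry_features(af7, af8))
```

Because DE becomes negative when the variance falls below 1/(2πe), the RASM ratio can change sign or blow up for very low-power bands. This is worth checking when such features are used.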

4.2. Initial Test

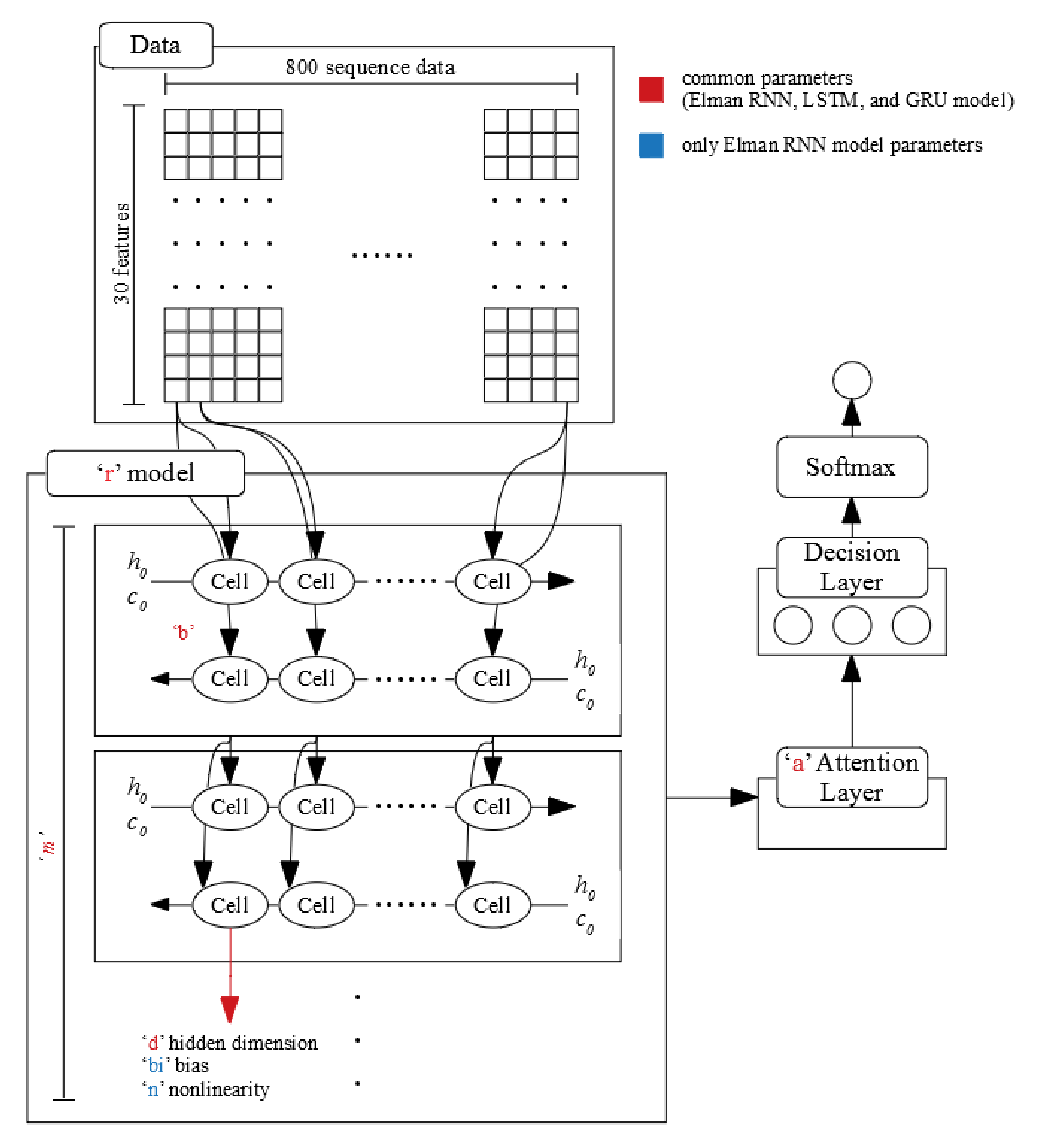

4.3. Design for Deep Learning

4.4. Parameter Tuning

- Learning rate: 0.001–1.000 (step 0.001).

- Epochs: 1–500 (step 1).

- Hidden layer design: a, t, and i (see Table 5).

- Momentum: fixed at 0.2.

- Other parameters: fixed at their default values.

- r (recurrent unit type): LSTM, GRU, and Elman RNN.

- m: 1, 2, and 3.

- b (direction): single-direction and bidirectional.

- d: 2, 4, 8, 16, 32, 64, 128, 256, and 512.

- a: True and False.

- bi (Elman RNN only): True and False.

- n (Elman RNN only, activation): “relu” and “tanh”.

- Epochs: 1–1000 (step 1).

- Learning rate: fixed at 0.0001.
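The first list concerns the MLP, since its hidden-layer designs a, t, and i are MLP options from Table 5. The second list concerns the recurrent models. The sketch below enumerates the size of both search spaces. It does not show how the authors traversed them (grid, random, or manual search), which this section does not state. The epoch range for the RNNs is left out because it is tuned as a separate value.

```python
from itertools import product

# RNN hyperparameter values as listed above.
RNN_TYPES = ["LSTM", "GRU", "Elman RNN"]   # r
M_VALUES = [1, 2, 3]                        # m
DIRECTIONS = ["single", "bidirectional"]    # b
D_VALUES = [2 ** k for k in range(1, 10)]   # d: 2, 4, ..., 512
A_VALUES = [True, False]                    # a
BI_VALUES = [True, False]                   # bi (Elman RNN only)
N_VALUES = ["relu", "tanh"]                 # n (Elman RNN only)


def rnn_configurations():
    """Yield every (r, m, b, d, a, bi, n) tuple; bi and n are None for LSTM/GRU."""
    for r, m, b, d, a in product(RNN_TYPES, M_VALUES, DIRECTIONS, D_VALUES, A_VALUES):
        if r == "Elman RNN":
            for bi, n in product(BI_VALUES, N_VALUES):
                yield (r, m, b, d, a, bi, n)
        else:
            yield (r, m, b, d, a, None, None)


def mlp_grid_size():
    """MLP settings: learning rate 0.001-1.000 (step 0.001) x epochs 1-500 x 3 layer designs."""
    learning_rates = 1000  # 0.001, 0.002, ..., 1.000
    epochs = 500           # 1, 2, ..., 500
    layer_designs = 3      # a, t, i
    return learning_rates * epochs * layer_designs


if __name__ == "__main__":
    print(len(list(rnn_configurations())), "RNN configurations")
    print(mlp_grid_size(), "MLP settings")
```

Each of LSTM and GRU contributes 3 × 2 × 9 × 2 = 108 structural settings. The two Elman-only options multiply the Elman count by 4 to give 432, for 648 in total.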

4.5. Final Test

5. Results

5.1. Interviews

5.2. Initial Test

5.3. Parameter Tuning

5.4. Final Test

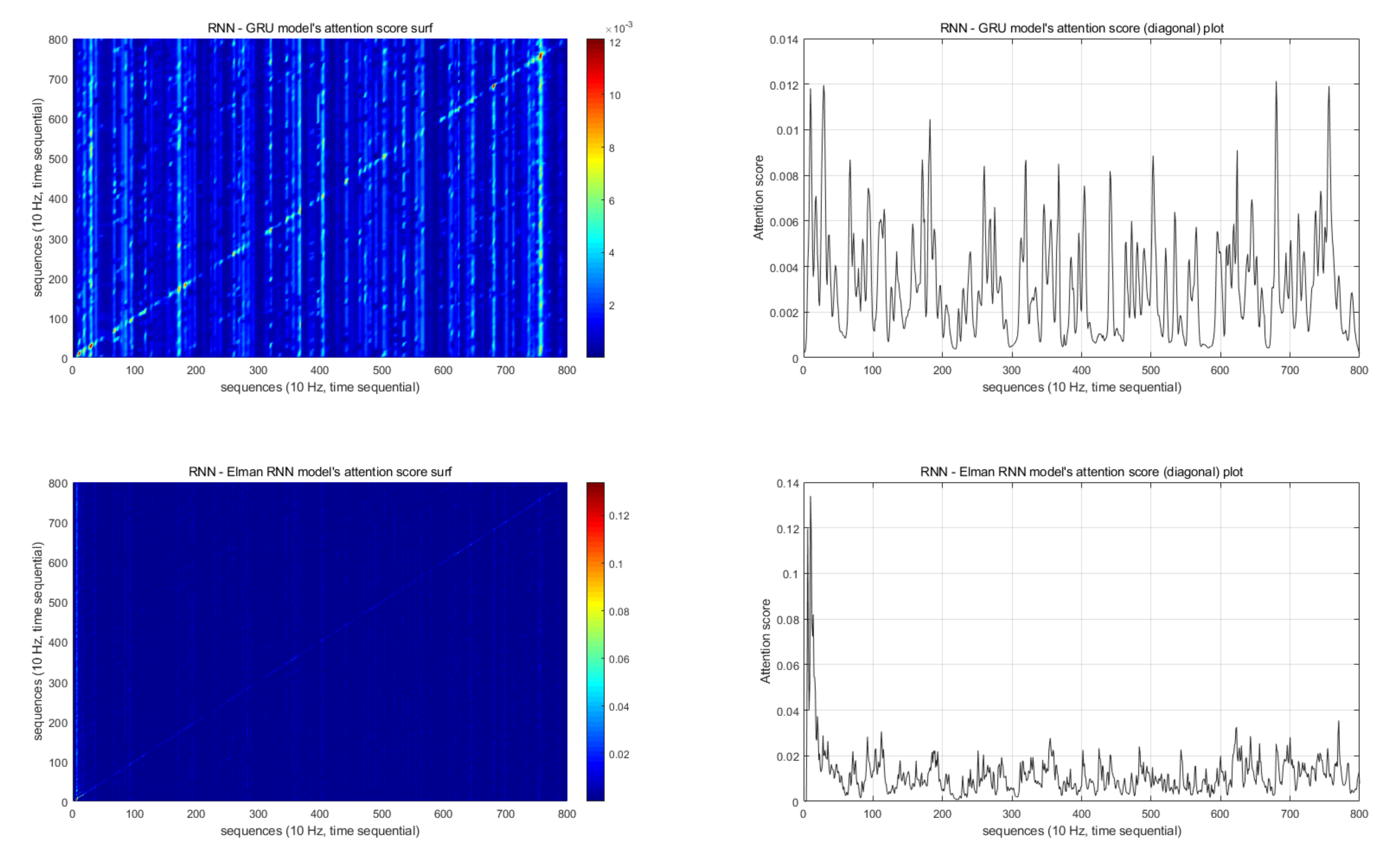

6. Discussion

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Prince, M.; Wimo, A.; Guerchet, M.; Ali, G.C.; Wu, Y.T.; Prina, M. World Alzheimer Report 2015: The Global Impact of Dementia: An Analysis of Prevalence, Incidence, Cost and Trends; Technical Report; Alzheimer’s Disease International: London, UK, 2015.

- Patterson, C. World Alzheimer Report 2018: The State of the Art of Dementia Research: New Frontiers; Technical Report; Alzheimer’s Disease International: London, UK, 2018.

- Blackman, T.; Van Schaik, P.; Martyr, A. Outdoor environments for people with dementia: An exploratory study using virtual reality. Ageing Soc. 2007, 27, 811–825.

- Donovan, R.; Healy, M.; Zheng, H.; Engel, F.; Vu, B.; Fuchs, M.; Walsh, P.; Hemmje, M.; Kevitt, P.M. SenseCare: Using Automatic Emotional Analysis to Provide Effective Tools for Supporting Wellbeing. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2682–2687.

- Lin, C.C.; Chiu, M.J.; Hsiao, C.C.; Lee, R.G.; Tsai, Y.S. Wireless Health Care Service System for Elderly with Dementia. IEEE Trans. Inf. Technol. Biomed. 2006, 10, 696–704.

- Li, M.; Lu, B.L. Emotion classification based on gamma-band EEG. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 2–6 September 2009; pp. 1223–1226.

- Singh, G.; Jati, A.; Khasnobish, A.; Bhattacharyya, S.; Konar, A.; Tibarewala, D.N.; Janarthanan, R. Negative emotion recognition from stimulated EEG signals. In Proceedings of the 2012 Third International Conference on Computing Communication Networking Technologies (ICCCNT), Coimbatore, India, 26–28 July 2012; pp. 1–8.

- Petrantonakis, P.C.; Hadjileontiadis, L.J. A novel emotion elicitation index using frontal brain asymmetry for enhanced EEG-based emotion recognition. IEEE Trans. Inf. Technol. Biomed. 2011, 15, 737–746.

- Kim, H.D.; Kim, K.B. Brain-wave Analysis using fMRI, TRS and EEG for Human Emotion Recognition. J. Korean Inst. Intell. Syst. 2007, 17, 832–837.

- Kim, D.; Kim, Y. Pattern Classification of Four Emotions using EEG. J. Korea Inst. Inf. Electron. Commun. Technol. 2010, 3, 23–27.

- Lee, H.J.; Shin, D.I.K. A Study on an emotion-classification algorithm of users adapting Brainwave. In Proceedings of the Symposium of the Korean Institute of Communications and Information Sciences; Korea Institute of Communication Sciences: Seoul, Korea, 2013; pp. 786–787.

- Logeswaran, N.; Bhattacharya, J. Crossmodal transfer of emotion by music. Neurosci. Lett. 2009, 455, 129–133.

- Nie, D.; Wang, X.W.; Shi, L.C.; Lu, B.L. EEG-based emotion recognition during watching movies. In Proceedings of the 5th International IEEE/EMBS Conference on Neural Engineering, Cancun, Mexico, 27 April–1 May 2011; pp. 667–670.

- Baumgartner, T.; Esslen, M.; Jäncke, L. From emotion perception to emotion experience: Emotions evoked by pictures and classical music. Int. J. Psychophysiol. 2006, 60, 34–43.

- Horlings, R.; Datcu, D.; Rothkrantz, L.J.M. Emotion recognition using brain activity. In Proceedings of the 9th International Conference on Computer Systems and Technologies and Workshop for PhD Students in Computing, Gabrovo, Bulgaria, 12–13 June 2008; p. 6.

- Khalili, Z.; Moradi, M.H. Emotion detection using brain and peripheral signals. In Proceedings of the 2008 Cairo International Biomedical Engineering Conference, Cairo, Egypt, 18–20 December 2008; pp. 1–4.

- Lin, Y.P.; Wang, C.H.; Wu, T.L.; Jeng, S.K.; Chen, J.H. EEG-based emotion recognition in music listening: A comparison of schemes for multiclass support vector machine. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; pp. 489–492.

- Lin, Y.P.; Wang, C.H.; Wu, T.L.; Jeng, S.K.; Chen, J.H. Support vector machine for EEG signal classification during listening to emotional music. In Proceedings of the IEEE 10th Workshop on Multimedia Signal Processing, Cairns, Australia, 8–10 October 2008; pp. 127–130.

- Lin, Y.P.; Wang, C.H.; Jung, T.P.; Wu, T.L.; Jeng, S.K.; Duann, J.R.; Chen, J.H. EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 2010, 57, 1798–1806.

- Petrantonakis, P.C.; Hadjileontiadis, L.J. Emotion Recognition from Brain Signals Using Hybrid Adaptive Filtering and Higher Order Crossings Analysis. IEEE Trans. Affect. Comput. 2010, 1, 81–97.

- Vijayan, A.E.; Sen, D.; Sudheer, A. EEG-Based Emotion Recognition Using Statistical Measures and Auto-Regressive Modeling. In Proceedings of the IEEE International Conference on Computational Intelligence & Communication Technology, Ghaziabad, India, 13–14 February 2015; pp. 587–591.

- Mohammadpour, M.; Hashemi, S.M.R.; Houshmand, N. Classification of EEG-based emotion for BCI applications. In Proceedings of the 2017 Artificial Intelligence and Robotics (IRANOPEN), Qazvin, Iran, 10 April 2017; pp. 127–131.

- Takahashi, K. Remarks on emotion recognition from bio-potential signals. In Proceedings of the 2nd International Conference on Autonomous Robots and Agents, Palmerston North, New Zealand, 13–15 December 2004.

- Chanel, G.; Kronegg, J.; Grandjean, D.; Pun, T. Emotion assessment: Arousal evaluation using EEG’s and peripheral physiological signals. In Multimedia Content Representation, Classification and Security; Gunsel, B., Jain, A.K., Tekalp, A.M., Sankur, B., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 530–537.

- Shen, L.; Wang, M.; Shen, R. Affective e-Learning: Using “Emotional” Data to Improve Learning in Pervasive Learning Environment Related Work and the Pervasive e-Learning Platform. Educ. Technol. Soc. 2009, 12, 176–189.

- Murugappan, M.; Rizon, M.; Nagarajan, R.; Yaacob, S.; Zunaidi, I.; Hazry, D. Lifting scheme for human emotion recognition using EEG. In Proceedings of the 2008 International Symposium on Information Technology, Kuala Lumpur, Malaysia, 26–29 August 2008; Volume 2, pp. 1–6.

- Zheng, W.L.; Lu, B.L. Investigating Critical Frequency Bands and Channels for EEG-Based Emotion Recognition with Deep Neural Networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

- Schmidt, L.A.; Trainor, L.J. Frontal brain electrical activity (EEG) distinguishes valence and intensity of musical emotions. Cogn. Emot. 2001, 15, 487–500.

- Zheng, W.L.; Zhu, J.Y.; Lu, B.L. Identifying Stable Patterns over Time for Emotion Recognition from EEG. IEEE Trans. Affect. Comput. 2017, PP, 1.

- Kim, J.; Seo, J.; Laine, T.H. Detecting Boredom from Eye Gaze and EEG. Biomed. Signal Process. Control. 2018, 46, 302–313.

- Katahira, K.; Yamazaki, Y.; Yamaoka, C.; Ozaki, H.; Nakagawa, S.; Nagata, N. EEG Correlates of the Flow State: A Combination of Increased Frontal Theta and Moderate Frontocentral Alpha Rhythm in the Mental Arithmetic Task. Front. Psychol. 2018, 9, 300.

- Kiefer, P.; Giannopoulos, I.; Kremer, D.; Schlieder, C.; Raubal, M. Starting to get bored: An outdoor eye tracking study of tourists exploring a city panorama. ETRA 2014, 315–318.

- Seo, J.; Laine, T.H.; Sohn, K.A. Machine learning approaches for boredom classification using EEG. J. Ambient. Intell. Humaniz. Comput. 2019, 1, 1–16.

- Seo, J.; Laine, T.H.; Sohn, K.A. An exploration of machine learning methods for robust boredom classification using EEG and GSR data. Sensors 2019, 19, 4561.

- Rosen, H.J.; Perry, R.J.; Murphy, J.; Kramer, J.H.; Mychack, P.; Schuff, N.; Weiner, M.; Levenson, R.W.; Miller, B.L. Emotion comprehension in the temporal variant of frontotemporal dementia. Brain 2002, 125, 2286–2295.

- Yuvaraj, R.; Murugappan, M.; Ibrahim, N.M.; Sundaraj, K.; Omar, M.I.; Mohamad, K.; Palaniappan, R. Optimal set of EEG features for emotional state classification and trajectory visualization in Parkinson’s disease. Int. J. Psychophysiol. 2014, 94, 482–495.

- Yuvaraj, R.; Murugappan, M.; Ibrahim, N.M.; Sundaraj, K.; Omar, M.I.; Mohamad, K.; Palaniappan, R. Detection of emotions in Parkinson’s disease using higher order spectral features from brain’s electrical activity. Biomed. Signal Process. Control. 2014, 14, 108–116.

- Yuvaraj, R.; Murugappan, M.; Acharya, U.R.; Adeli, H.; Ibrahim, N.M.; Mesquita, E. Brain functional connectivity patterns for emotional state classification in Parkinson’s disease patients without dementia. Behav. Brain Res. 2016, 298, 248–260.

- Chiu, I.; Piguet, O.; Diehl-Schmid, J.; Riedl, L.; Beck, J.; Leyhe, T.; Holsboer-Trachsler, E.; Kressig, R.W.; Berres, M.; Monsch, A.U.; et al. Facial emotion recognition performance differentiates between behavioral variant frontotemporal dementia and major depressive disorder. J. Clin. Psychiatry 2018, 79.

- Kumfor, F.; Hazelton, J.L.; Rushby, J.A.; Hodges, J.R.; Piguet, O. Facial expressiveness and physiological arousal in frontotemporal dementia: Phenotypic clinical profiles and neural correlates. Cogn. Affect. Behav. Neurosci. 2019, 19, 197–210.

- Pan, J.; Xie, Q.; Huang, H.; He, Y.; Sun, Y.; Yu, R.; Li, Y. Emotion-Related Consciousness Detection in Patients With Disorders of Consciousness Through an EEG-Based BCI System. Front. Hum. Neurosci. 2018, 12, 198.

- Peter, C.; Waterworth, J.; Waterworth, E.; Voskamp, J. Sensing Mood to Counteract Dementia. In Proceedings of the International Workshop on Pervasive Technologies for the support of Alzheimer’s Disease and Related Disorders, Thessaloniki, Greece, 24 February 2007.

- Larsen, I.U.; Vinther-Jensen, T.; Gade, A.; Nielsen, J.E.; Vogel, A. Do I misconstrue? Sarcasm detection, emotion recognition, and theory of mind in Huntington disease. Neuropsychology 2016, 30, 181–189.

- Balconi, M.; Cotelli, M.; Brambilla, M.; Manenti, R.; Cosseddu, M.; Premi, E.; Gasparotti, R.; Zanetti, O.; Padovani, A.; Borroni, B. Understanding emotions in frontotemporal dementia: The explicit and implicit emotional cue mismatch. J. Alzheimer’s Dis. 2015, 46, 211–225.

- World Health Organization (WHO). Dementia. Available online: https://www.who.int/news-room/fact-sheets/detail/dementia (accessed on 16 December 2020).

- Kim, B.C.; Na, D.L. A clinical approach for patients with dementia. In Dementia: A Clinical Approach, 2nd ed.; Korean Dementia Association: Seoul, Korea, 2011; pp. 67–85.

- Alzheimer’s Association. Frontotemporal Dementia (FTD) | Symptoms & Treatments. Available online: https://www.alz.org/alzheimers-dementia/what-is-dementia/types-of-dementia/frontotemporal-dementia (accessed on 16 December 2020).

- Folstein, M.F.; Folstein, S.E.; McHugh, P.R. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 1975, 12, 189–198.

- Na, H.R.; Park, M.H. Dementia screening test. In Dementia: A Clinical Approach, 2nd ed.; Korean Dementia Association: Seoul, Korea, 2011; pp. 89–98.

- Kang, Y.W.; Na, D.L.; Hahn, S.H. A validity study on the Korean mini-mental state examination (K-MMSE) in dementia patients. J. Korean Neurol. Assoc. 1997, 15, 300–308.

- Kwon, Y.C.; Park, J.H. Korean Version of Mini-Mental State Examination (MMSE-K) Part I: Development of the Test for the Elderly. J. Korean Neuropsychiatr. Assoc. 1989, 28, 125–135.

- Hui, K.T.; Jhoo, J.H.; Park, J.H.; Kim, J.L.; Ryu, S.H.; Moon, S.W.; Choo, I.H.; Lee, D.W.; Yoon, J.C.; Do, Y.J.; et al. Korean version of mini mental status examination for dementia screening and its short form. Psychiatry Investig. 2010, 7, 102–108.

- Park, J.H.; Kwon, Y.C. Standardization of Korean Version of the Mini-Mental State Examination (MMSE-K) for Use in the Elderly. Part II. Diagnostic Validity. J. Korean Neuropsychiatr. Assoc. 1989, 28, 508–513.

- Yoon, S.J.; Park, K.W. Behavioral and psychological symptoms of dementia (BPSD). In Dementia: A Clinical Approach, 2nd ed.; Korean Dementia Association: Seoul, Korea, 2011; pp. 305–312.

- Yang, Y.S.; Han, I.W. Pharmacological treatment and non-pharmacological approach for BPSD. In Dementia: A Clinical Approach, 2nd ed.; Korean Dementia Association: Seoul, Korea, 2011; pp. 315–347.

- Na, C.; Kim, J.; Che, W.; Park, S. The latest development in Dementia. J. Intern. Korean Med. 1998, 19, 291–300.

- Jung, P.; Lee, S.W.; Song, C.G.; Kim, D. Counting Walk-steps and Detection of Phone’s Orientation/Position Using Inertial Sensors of Smartphones. J. KIISE Comput. Pract. Lett. 2013, 19, 45–50.

- Mandryk, R.L.; Atkins, M.S. A fuzzy physiological approach for continuously modeling emotion during interaction with play technologies. Int. J. Hum. Comput. Stud. 2007, 65, 329–347.

- Jang, E.H.; Park, B.J.; Park, M.S.; Kim, S.H.; Sohn, J.H. Analysis of physiological signals for recognition of boredom, pain, and surprise emotions. J. Physiol. Anthropol. 2015, 34, 1–12.

- Giakoumis, D.; Vogiannou, A.; Kosunen, I.; Moustakas, K.; Tzovaras, D.; Hassapis, G. Identifying psychophysiological correlates of boredom and negative mood induced during HCI. In Proceedings of the Bio-inspired Human-Machine Interfaces and Healthcare Applications, Valencia, Spain, 20–23 January 2010; pp. 3–12.

- Giakoumis, D.; Tzovaras, D.; Moustakas, K.; Hassapis, G. Automatic recognition of boredom in video games using novel biosignal moment-based features. IEEE Trans. Affect. Comput. 2011, 2, 119–133.

- D’Mello, S.K.; Craig, S.D.; Gholson, B.; Franklin, S.; Picard, R.; Graesser, A.C. Integrating affect sensors in an intelligent tutoring system. In Proceedings of the Computer in the Affective Loop Workshop at 2005 International Conference on Intelligent User Interfaces, San Diego, CA, USA, 10–13 January 2005; pp. 7–13.

- Timmermann, M.; Jeung, H.; Schmitt, R.; Boll, S.; Freitag, C.M.; Bertsch, K.; Herpertz, S.C. Oxytocin improves facial emotion recognition in young adults with antisocial personality disorder. Psychoneuroendocrinology 2017, 85, 158–164.

- Lee, C.C.; Shih, C.Y.; Lai, W.P.; Lin, P.C. An improved boosting algorithm and its application to facial emotion recognition. J. Ambient. Intell. Humaniz. Comput. 2012, 3, 11–17.

- Jiang, R.; Ho, A.T.; Cheheb, I.; Al-Maadeed, N.; Al-Maadeed, S.; Bouridane, A. Emotion recognition from scrambled facial images via many graph embedding. Pattern Recognit. 2017, 67, 245–251.

- Tan, J.W.; Walter, S.; Scheck, A.; Hrabal, D.; Hoffmann, H.; Kessler, H.; Traue, H.C. Repeatability of facial electromyography (EMG) activity over corrugator supercilii and zygomaticus major on differentiating various emotions. J. Ambient. Intell. Humaniz. Comput. 2012, 3, 3–10.

- Mistry, K.; Zhang, L.; Neoh, S.C.; Lim, C.P.; Fielding, B. A Micro-GA Embedded PSO Feature Selection Approach to Intelligent Facial Emotion Recognition. IEEE Trans. Cybern. 2017, 47, 1496–1509.

- Busso, C.; Deng, Z.; Yildirim, S.; Bulut, M.; Lee, C.M.; Kazemzadeh, A.; Lee, S.; Neumann, U.; Narayanan, S. Analysis of emotion recognition using facial expressions, speech and multimodal information. In Proceedings of the 6th International Conference on Multimodal Interfaces (ICMI ’04), State College, PA, USA, 13–15 October 2004; pp. 205–211.

- Castellano, G.; Kessous, L.; Caridakis, G. Emotion recognition through multiple modalities: Face, body gesture, speech. In Affect and Emotion in Human-Computer Interaction: From Theory to Applications; Peter, C., Beale, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 92–103.

- Gunes, H.; Piccardi, M. Bi-modal emotion recognition from expressive face and body gestures. J. Netw. Comput. Appl. 2007, 30, 1334–1345.

- Jaques, N.; Conati, C.; Harley, J.M.; Azevedo, R. Predicting affect from gaze data during interaction with an intelligent tutoring system. In International Conference on Intelligent Tutoring Systems; Springer International Publishing: Cham, Switzerland, 2014; pp. 29–38.

- Zhou, J.; Yu, C.; Riekki, J.; Karkkainen, E. AmE framework: A model for emotion-aware ambient intelligence. In Proceedings of the Second International Conference on Affective Computing and Intelligent Interaction, Lisbon, Portugal, 12–14 September 2007; p. 171.

- Glowinski, D.; Camurri, A.; Volpe, G.; Dael, N.; Scherer, K. Technique for automatic emotion recognition by body gesture analysis. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Anchorage, AK, USA, 23–28 June; pp. 1–6.

- Healy, M.; Donovan, R.; Walsh, P.; Zheng, H. A machine learning emotion detection platform to support affective well being. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2694–2700.

- Lee, H.J.; Shin, D.I.D.K.; Shin, D.I.D.K. The Classification Algorithm of Users’ Emotion Using Brain-Wave. J. Korean Inst. Commun. Inf. Sci. 2014, 39, 122–129.

- Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

- Baker, R.; D’Mello, S.; Rodrigo, M.; Graesser, A. Better to be frustrated than bored: The incidence and persistence of affect during interactions with three different computer-based learning environments. Int. J. Hum. Comput. Stud. 2010, 68, 223–241.

- Fagerberg, P.; Ståhl, A.; Höök, K. EMoto: Emotionally engaging interaction. Pers. Ubiquitous Comput. 2004, 8, 377–381.

- Feldman, L. Variations in the circumplex structure of mood. Personal. Soc. Psychol. Bull. 1995, 21, 806–817.

- Yang, Y.H.; Chen, H.H. Machine Recognition of Music Emotion. ACM Trans. Intell. Syst. Technol. 2012, 3, 1–30.

- Ekman, P. An Argument for Basic Emotions. Cogn. Emot. 1992, 6, 169–200.

- Lang, P.J.; Bradley, M.M.; Cuthbert, B.N. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual; Technical Report; University of Florida: Gainesville, FL, USA, 2008.

- MUSE. MUSE™ Headband. Available online: https://choosemuse.com/ (accessed on 15 December 2020).

- Jasper, H. Report of the committee on methods of clinical examination in electroencephalography: 1957. Electroencephalogr. Clin. Neurophysiol. 1958, 10, 370–375.

- Allen, J.J.B.; Coan, J.A.; Nazarian, M. Issues and assumptions on the road from raw signals to metrics of frontal EEG asymmetry in emotion. Biol. Psychol. 2004, 67, 183–218.

- Allen, J.J.B.; Kline, J.P. Frontal EEG asymmetry, emotion, and psychopathology: The first, and the next 25 years. Biol. Psychol. 2004, 67, 1–5.

- Gross, J.J.; Levenson, R.W. Emotion elicitation using films. Cogn. Emot. 1995, 9, 87–108.

- Palaniappan, R. Utilizing gamma band to improve mental task based brain-computer interface design. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 299–303.

- Elman, J.L. Finding structure in time. Cogn. Sci. 1990, 14, 179–211.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Muse Research Team. Available online: https://www.mendeley.com/profiles/muse-research-team/publications/ (accessed on 15 December 2020).

- Nie, C.Y.; Li, R.; Wang, J. Emotion Recognition Based on Chaos Characteristics of Physiological Signals. Appl. Mech. Mater. 2013, 380, 3750–3753.

- Schaaff, K.; Schultz, T. Towards an EEG-based emotion recognizer for humanoid robots. In Proceedings of the 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009; pp. 792–796.

- Vivancos, D. The MindBigData. Available online: http://www.mindbigdata.com/ (accessed on 15 December 2020).

- Kim, J.; Seo, J.; Sohn, K.A. Deep learning based recognition of visual digit reading using frequency band of EEG. In Proceedings of the Korea Software Congress 2019, Pyeongchang, Korea, 18–20 December 2019; pp. 569–571.

- Gao, D.; Ju, C.; Wei, X.; Liu, Y.; Chen, T.; Yang, Q. HHHFL: Hierarchical Heterogeneous Horizontal Federated Learning for Electroencephalography. arXiv 2019, arXiv:1909.05784.

- Chien, V.S.; Tsai, A.C.; Yang, H.H.; Tseng, Y.L.; Savostyanov, A.N.; Liou, M. Conscious and non-conscious representations of emotional faces in Asperger’s syndrome. J. Vis. Exp. 2016.

- O’Connor, K.; Hamm, J.P.; Kirk, I.J. The neurophysiological correlates of face processing in adults and children with Asperger’s syndrome. Brain Cogn. 2005, 59, 82–95.

| Study | Target Emotions | Approach | Accuracy (%) | Validation |
|---|---|---|---|---|
| [23] | Joy, anger, sadness, fear, relaxation | Support vector machine (SVM) | 41.68 | LOOCV |
| [25] | Engagement, confusion, boredom, hopefulness | SVM, k-nearest neighbors (KNN) | 67.1 (SVM), 62.5 (KNN) | - |
| [7] | Sadness, disgust | Linear SVM | 78.04 (sadness), 76.31 (disgust) | - |
| [11] | Arousal, valence | SVM, K-means | - | - |
| [33] | Boredom, non-boredom | KNN | 86.73 | 5-fold cross-validation |
| [34] | Boredom, non-boredom | Multilayer Perceptron (MLP) | 79.98 | 5-fold cross-validation |
| [30] | Boredom, frustration | Analysis | - | - |

| Study | Health Status |
|---|---|
| [36,37,38] | Parkinson’s disease |
| [41] | Vegetative state (VS) and minimally conscious state (MCS) |
| [40] | Behavioural-variant FTD (bvFTD) and semantic dementia (SD) |

| Study | Physiological Data Source | Target Emotions | Approach | Validation |
|---|---|---|---|---|
| [36] | EEG (16 electrodes) | Happiness, Sadness, Fear, Anger, Surprise, and Disgust | SVM and Fuzzy KNN | 10-fold cross-validation |
| [37] | EEG (16 electrodes) | Happiness, Sadness, Fear, Anger, Surprise, and Disgust | SVM and KNN | 10-fold cross-validation |
| [38] | EEG (16 electrodes) | Happiness, Sadness, Fear, Anger, Surprise, and Disgust | SVM | 10-fold cross-validation |
| [40] | GSR and facial | Positive, Neutral, and Negative | ANOVA | - |
| [41] | EEG (30 electrodes) | Happiness and Sadness | SVM | Train/test split |

| MMSE Range | Number of Participants |
|---|---|
| ≥24 | 1 |
| 20–23 | 6 |
| ≤19 | 21 |
| Unknown | 2 |

| Classifier | Option | Classifier | Option | Classifier | Option |
|---|---|---|---|---|---|
| IBk | No | LibSVM | Linear | J48 | Default |
| | 1/distance | | Polynomial | JRip | Default |
| | 1-distance | | Radial | Naive Bayes | Default |
| Multilayer Perceptron (MLP) | t | | Sigmoid | KStar | Default |
| | i | Decision Stump | Default | LMT | Default |
| | a | Decision Table | Default | PART | Default |
| | o | Hoeffding Tree | Default | Logistic | Default |
| | t,a | Random Tree | Default | Simple Logistic | Default |
| | t,a,o | Random Forest (RF) | Default | Zero R | Default |
| | t,i,a,o | REP Tree | Default | One R | Default |

MLP hidden-layer options (number of nodes per hidden layer): “i” = number of features, “o” = number of labels, “t” = “i” + “o”, “a” = “t”/2. Comma-separated options define one hidden layer each.
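The MLP options can be expanded into concrete layer sizes. The sketch below assumes integer division for “a”, as in Weka's MultilayerPerceptron hidden-layer wildcards, and handles only the wildcard letters used in this table. The function name is hypothetical. In the usage example, 3 features and 3 labels are illustrative values.

```python
def hidden_layer_sizes(spec, num_features, num_labels):
    """Expand an MLP hidden-layer spec such as "t,a" into nodes per layer.

    i = features, o = labels, t = i + o, a = t // 2 (integer division assumed).
    """
    t = num_features + num_labels
    wildcards = {"i": num_features, "o": num_labels, "t": t, "a": t // 2}
    return [wildcards[token.strip()] for token in spec.split(",")]


if __name__ == "__main__":
    # Illustrative: 3 selected features and 3 emotion labels.
    for spec in ("i", "a", "t", "t,a", "t,a,o", "t,i,a,o"):
        print(spec, hidden_layer_sizes(spec, 3, 3))
```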

| Interview | Result | Count | Label | Count |
|---|---|---|---|---|
| Boredom | Others | 5 | Others | 21 |
| | No | 16 | | |
| | Yes | 6 | Boredom | 9 |
| | Very Much | 3 | | |
| Happy | Others | 3 | Others | 5 |
| | No | 2 | | |
| | Yes | 16 | Happy | 25 |
| | Very Much | 9 | | |
| Peaceful | Others | 2 | Others | 4 |
| | No | 2 | | |
| | Yes | 16 | Peaceful | 26 |
| | Very Much | 10 | | |
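The interview table collapses four response categories into two labels. “Yes” and “Very Much” become the emotion label, and “No” and “Others” become “Others”. The sketch below reproduces that grouping from the counts. The helper names are hypothetical.

```python
from collections import Counter

# Responses treated as the emotion being present, per the table's grouping.
POSITIVE = {"Yes", "Very Much"}


def to_label(emotion, response):
    """Collapse one interview response into the label used for training."""
    return emotion if response in POSITIVE else "Others"


def label_counts(emotion, response_counts):
    """Aggregate response counts (response -> participants) into label counts."""
    counts = Counter()
    for response, n in response_counts.items():
        counts[to_label(emotion, response)] += n
    return counts


if __name__ == "__main__":
    boredom = {"Others": 5, "No": 16, "Yes": 6, "Very Much": 3}
    print(label_counts("Boredom", boredom))
```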

| Trained Algorithm | Window Size (s) | Option | Average Accuracy Before WSE (%) | Average Accuracy After WSE (%) |
|---|---|---|---|---|
| MLP | 80 | i | 37.33 | 68.83 |
| MLP | 80 | a | 38.50 | 68.00 |
| MLP | 80 | t | 36.67 | 66.50 |
| MLP | 80 | t,a | 40.83 | 64.83 |
| MLP | 50 | t,a | 40.67 | 64.17 |
| KStar | 80 | Default | 40.67 | 64.17 |
| PART | 50 | Default | 41.67 | 64.00 |
| MLP | 80 | o | 43.83 | 63.83 |
| KStar | 20 | Default | 31.67 | 63.67 |
| RF | 80 | Default | 45.67 | 63.33 |
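The window sizes in the table (20, 50, and 80 s) set how much signal each feature vector summarizes. This section does not say whether windows overlap. The sketch below therefore defaults to non-overlapping windows and drops a trailing partial window. Both choices, the 256 Hz sampling rate, and the 200 s recording length in the usage example are assumptions.

```python
def segment(signal, fs, window_s, step_s=None):
    """Split a 1-D signal sampled at `fs` Hz into windows of `window_s` seconds.

    step_s defaults to window_s (non-overlapping windows); a trailing partial
    window is dropped.
    """
    size = int(round(window_s * fs))
    step = size if step_s is None else int(round(step_s * fs))
    if size <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


if __name__ == "__main__":
    fs = 256                             # assumed sampling rate
    recording = [0.0] * (fs * 200)       # hypothetical 200 s recording
    for w in (20, 50, 80):
        print(w, "s windows:", len(segment(recording, fs, w)))
```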

| Trained Algorithm | Selected Feature | Related Electrode(s) | Related Frequency Band |
|---|---|---|---|
| MLP-a | AF8 Alpha DE | AF8 | Alpha |
| | Gamma DASM | AF7, AF8 | Gamma |
| | Gamma RASM | AF7, AF8 | Gamma |
| MLP-i | AF8 Alpha DE | AF8 | Alpha |
| | Gamma DASM | AF7, AF8 | Gamma |
| | Gamma RASM | AF7, AF8 | Gamma |
| MLP-t | AF7 Beta ABP mean | AF7 | Beta |
| | AF8 Alpha DE | AF8 | Alpha |
| | Gamma RASM | AF7, AF8 | Gamma |

| Trained Algorithm | Learning Rate | Epochs |
|---|---|---|
| MLP-a | 0.811 | 105 |
| MLP-i | 0.991 | 256 |
| MLP-t | 0.470 | 229 |

| r | m | b | d | a | bi | n | Epochs |
|---|---|---|---|---|---|---|---|
| GRU | 2 | Bi | 256 | True | - | - | 487 |
| LSTM | 1 | Single | 512 | False | - | - | 656 |
| Elman RNN | 3 | Single | 512 | True | False | tanh | 424 |

| Model | Accuracy Average (%) | Accuracy Min (%) | Accuracy Max (%) | AUC Average | AUC Min | AUC Max | Macro F1 | Weighted F1 | Micro F1 |
|---|---|---|---|---|---|---|---|---|---|
| MLP-a | 67.18 | 53.33 | 76.67 | 0.699 | 0.605 | 0.765 | 0.486 | 0.619 | 0.672 |
| MLP-i | 70.97 | 61.67 | 76.67 | 0.697 | 0.630 | 0.750 | 0.516 | 0.657 | 0.710 |
| MLP-t | 63.74 | 55.00 | 71.67 | 0.642 | 0.577 | 0.701 | 0.464 | 0.592 | 0.637 |
| RNN (GRU) | 42.83 | 33.33 | 53.33 | 0.523 | 0.427 | 0.630 | 0.377 | 0.418 | 0.428 |
| RNN (LSTM) | 44.58 | 35.00 | 53.33 | 0.513 | 0.429 | 0.606 | 0.311 | 0.395 | 0.446 |
| RNN (Elman RNN) | 48.18 | 35.00 | 61.67 | 0.592 | 0.439 | 0.721 | 0.483 | 0.482 | 0.482 |

| Model | Actual Class | Classified as B | Classified as H | Classified as P |
|---|---|---|---|---|
| MLP-a | B | 0 | 1272 | 7728 |
| | H | 0 | 17573 | 7427 |
| | P | 29 | 3238 | 22733 |
| MLP-i | B | 15 | 1326 | 7659 |
| | H | 39 | 19325 | 5636 |
| | P | 252 | 2506 | 23242 |
| MLP-t | B | 0 | 2299 | 6701 |
| | H | 187 | 17183 | 7630 |
| | P | 834 | 4103 | 21063 |
| GRU | B | 139 | 276 | 485 |
| | H | 29 | 1169 | 1302 |
| | P | 141 | 1197 | 1262 |
| LSTM | B | 4 | 210 | 686 |
| | H | 21 | 785 | 1694 |
| | P | 12 | 702 | 1886 |
| Elman RNN | B | 396 | 279 | 225 |
| | H | 157 | 1203 | 1140 |
| | P | 166 | 1142 | 1292 |

B: Boredom, H: Happiness, P: Peacefulness. Rows give the actual class, and columns give the class each sample was classified as.
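The F1 columns of the accuracy/AUC/F1 table can be recomputed from these confusion matrices. The sketch below takes rows as actual classes and columns as predicted classes. It computes per-class F1 as 2·TP / (support + predicted count), then averages it without weights (macro) and with class-support weights (weighted), and also pools the counts (micro). For single-label multiclass data, pooled precision and recall both equal accuracy, so micro F1 equals accuracy. This matches the identical accuracy and micro-F1 values in the table. The function name is hypothetical.

```python
def metrics_from_confusion(matrix):
    """Accuracy and macro/weighted/micro F1 from a square confusion matrix
    (rows = actual classes, columns = predicted classes)."""
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    support = [sum(row) for row in matrix]                               # actual counts
    predicted = [sum(matrix[r][c] for r in range(k)) for c in range(k)]  # column sums
    tp = [matrix[c][c] for c in range(k)]
    f1 = [2 * tp[c] / (support[c] + predicted[c]) if support[c] + predicted[c] else 0.0
          for c in range(k)]
    # Micro averaging pools TP/FP/FN over all classes before computing F1.
    micro_p = sum(tp) / sum(predicted)
    micro_r = sum(tp) / sum(support)
    micro_f1 = 2 * micro_p * micro_r / (micro_p + micro_r) if micro_p + micro_r else 0.0
    return {
        "accuracy": sum(tp) / total,
        "macro_f1": sum(f1) / k,
        "weighted_f1": sum(f * s for f, s in zip(f1, support)) / total,
        "micro_f1": micro_f1,
    }


if __name__ == "__main__":
    mlp_a = [[0, 1272, 7728], [0, 17573, 7427], [29, 3238, 22733]]
    print(metrics_from_confusion(mlp_a))
```

For the MLP-a matrix, this gives an accuracy of about 0.672, a macro F1 of about 0.486, and a weighted F1 of about 0.619, which agrees with the MLP-a row. The boredom class is never predicted correctly, so its F1 of 0 pulls the macro average well below the weighted one.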

| Model | Accuracy (%) | Weighted F1-Score |
|---|---|---|
| MLP-a | 68.33 | 0.631 |
| MLP-i | 71.67 | 0.662 |
| MLP-t | 65.00 | 0.603 |

| Task | MLP-i Accuracy (%) | MLP-i Weighted F1 | MLP-a Accuracy (%) | MLP-a Weighted F1 | MLP-t Accuracy (%) | MLP-t Weighted F1 |
|---|---|---|---|---|---|---|
| B vs. NB | 85.00 | 0.781 | 85.00 | 0.781 | 83.33 | 0.833 |
| H vs. NH | 83.33 | 0.831 | 73.33 | 0.726 | 78.33 | 0.784 |
| P vs. NP | 58.33 | 0.585 | 51.67 | 0.518 | 60.00 | 0.601 |
| P vs. H | 84.31 | 0.843 | 80.39 | 0.801 | 76.47 | 0.761 |

B: Boredom, NB: Non-boredom, P: Peacefulness, NP: Non-peacefulness, H: Happiness, NH: Non-happiness.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Seo, J.; Laine, T.H.; Oh, G.; Sohn, K.-A. EEG-Based Emotion Classification for Alzheimer’s Disease Patients Using Conventional Machine Learning and Recurrent Neural Network Models. Sensors 2020, 20, 7212. https://doi.org/10.3390/s20247212