Research on Bearing Fault Diagnosis Method Based on an Adaptive Anti-Noise Network under Long Time Series

Abstract

1. Introduction

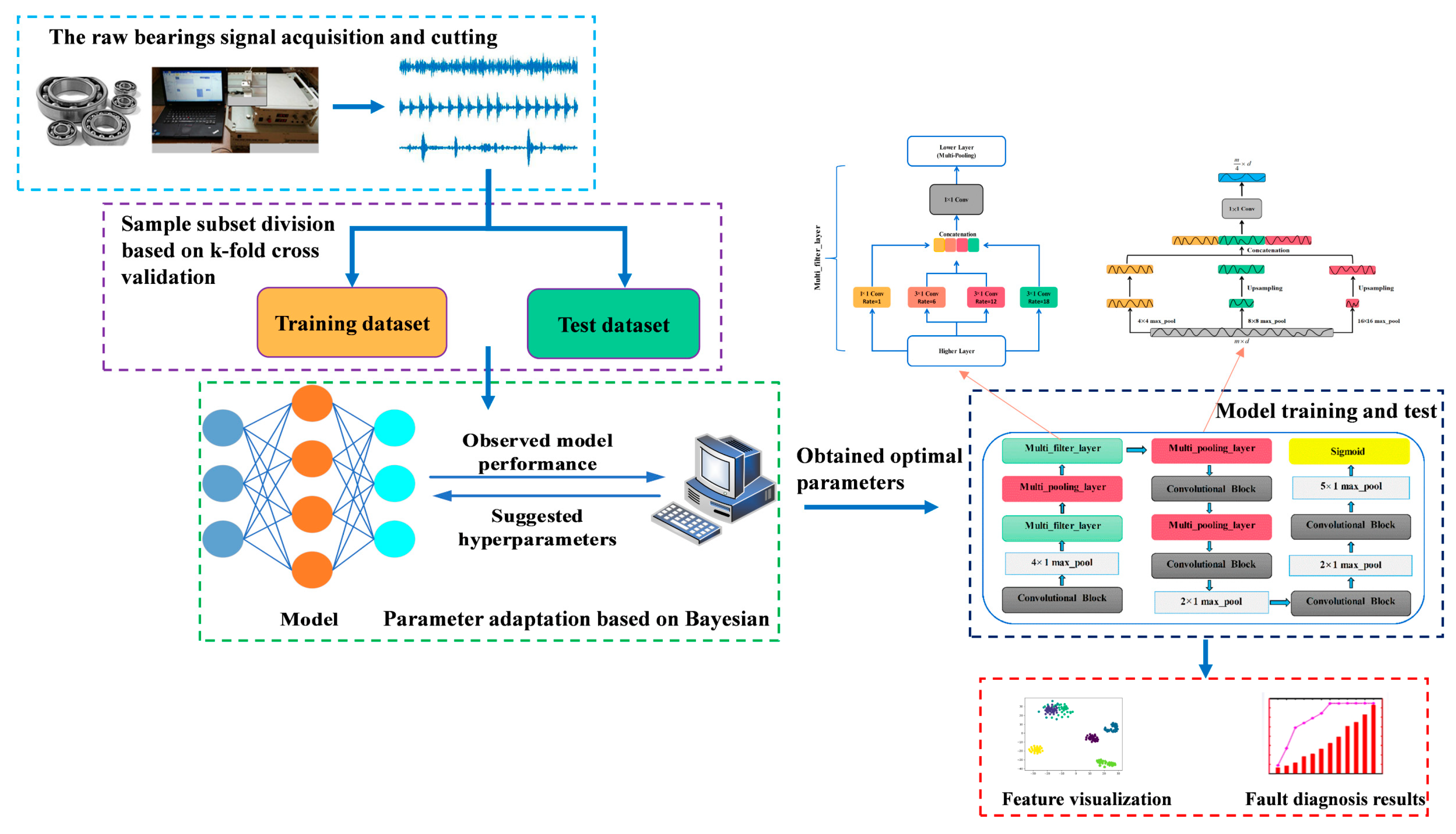

- An end-to-end ALSCN is proposed for bearing fault diagnosis; it performs well under strong noise and varying load conditions simultaneously. In addition, ALSCN can operate directly on longer raw signals, thereby reducing the workload of manual signal preprocessing.

- A multi-filter layer based on an improved atrous spatial pyramid pooling (ASPP) [30] is developed to preserve the spatial correlation of longer raw fault signals under noise, and a multi-scale pooling module is constructed to compensate for the information loss.

- A Bayesian optimization algorithm is applied to optimize the hyperparameters, reducing the time spent on manual parameter tuning. Furthermore, with the fully connected layer removed, ALSCN has a favorable parameter and computation cost.

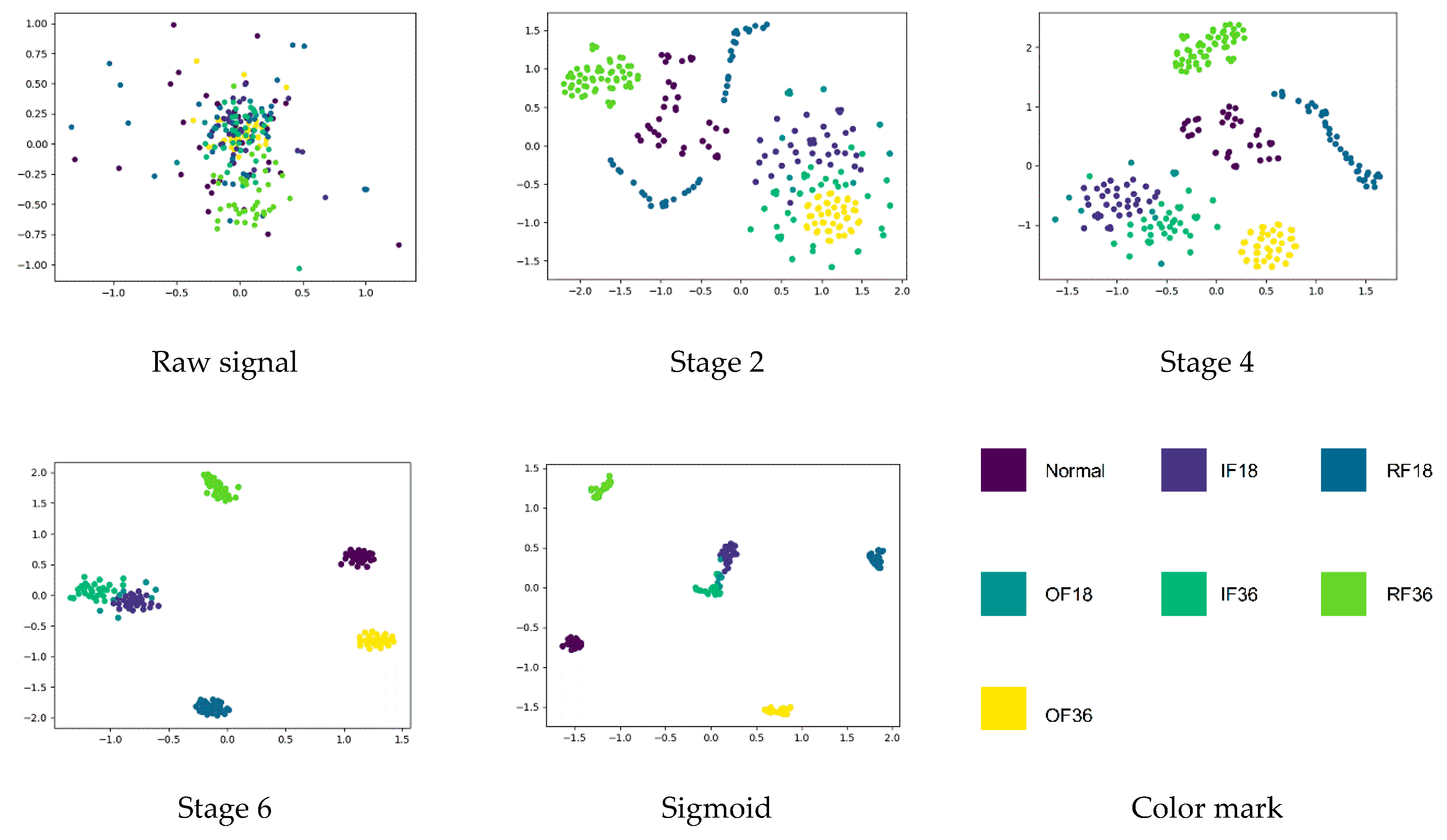

- First, the internal mechanism is explored by visualizing the feature learning process across the layers of the network. Then, the differing results caused by different orderings of the modules and pooling operations are discussed and explained. Finally, the best structure for ALSCN is given.

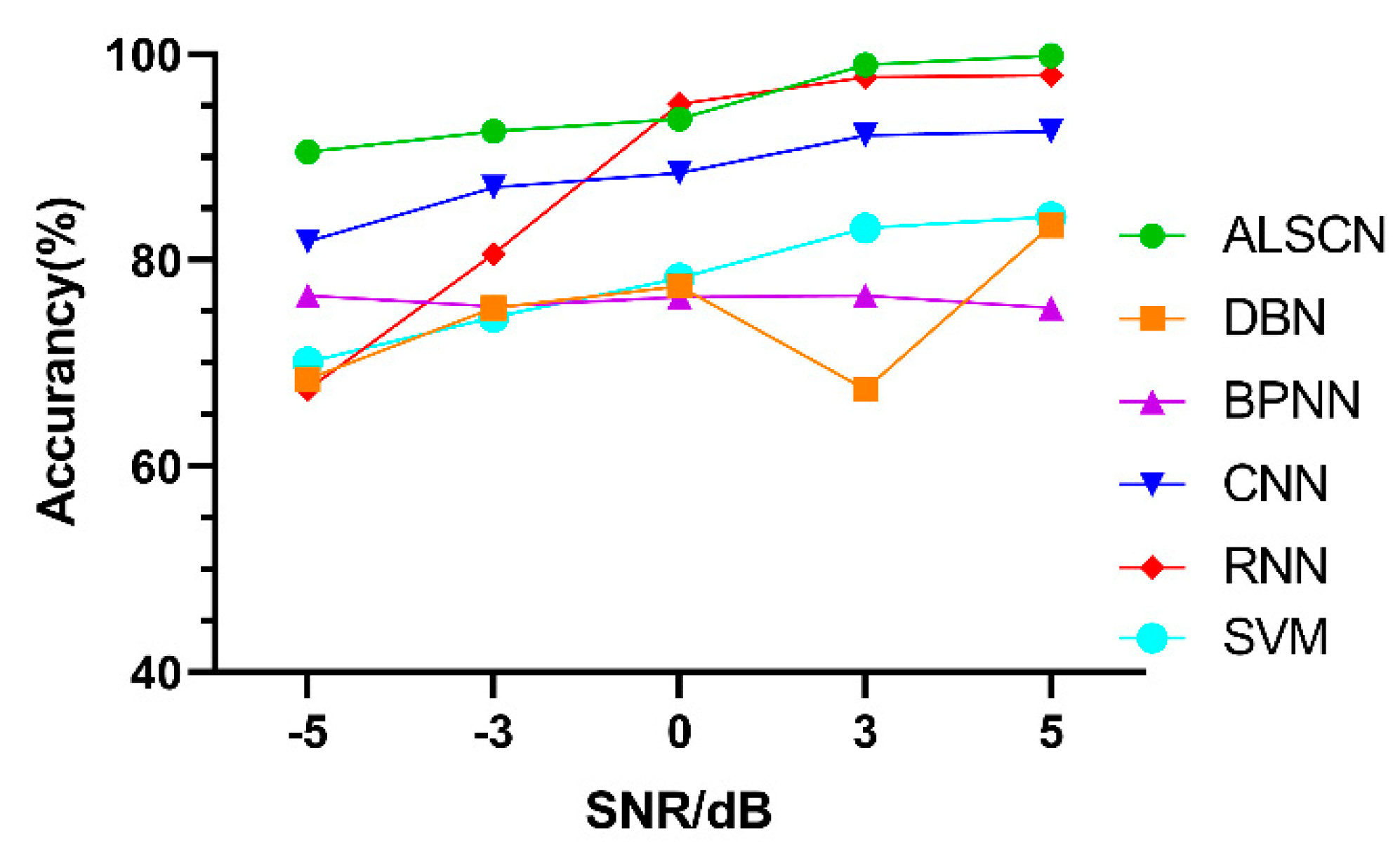

- On the bearing fault data sets of Case Western Reserve University (CWRU) and Machinery Failure Prevention Technology (MFPT), the proposed ALSCN model outperforms SVM, CNN, RNN, DBN, and BP neural network models.
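The multi-filter layer named in the contributions above builds on atrous (dilated) convolution. The following is a minimal NumPy sketch of that general idea only, not the authors' module: the function names, the shared kernel, and the dilation rates (1, 2, 4) are illustrative assumptions. It shows how a dilated kernel of size k with rate d spans k + (k − 1)(d − 1) samples, and how several rates can run in parallel, ASPP-style, on the same 1-D signal.

```python
import numpy as np

def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Number of input samples spanned by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)

def dilated_conv1d(x: np.ndarray, w: np.ndarray, dilation: int = 1) -> np.ndarray:
    """Zero-padded ('same') 1-D cross-correlation with kernel taps spaced `dilation` apart."""
    k = len(w)
    span = effective_kernel_size(k, dilation)
    pad_left = (span - 1) // 2
    pad_right = span - 1 - pad_left
    xp = np.pad(x, (pad_left, pad_right))
    out = np.zeros(len(x), dtype=float)
    for j in range(k):
        # Tap j reads the padded input shifted by j * dilation samples.
        out += w[j] * xp[j * dilation : j * dilation + len(x)]
    return out

def multi_filter(x: np.ndarray, w: np.ndarray, dilations=(1, 2, 4)) -> np.ndarray:
    """Apply the same kernel at several dilation rates and stack the branches (illustrative)."""
    return np.stack([dilated_conv1d(x, w, d) for d in dilations])

x = np.arange(16, dtype=float)          # toy signal, not bearing data
w = np.array([1.0, 1.0, 1.0])           # toy kernel
features = multi_filter(x, w)
print(features.shape)                                      # (3, 16)
print([effective_kernel_size(3, d) for d in (1, 2, 4)])    # [3, 5, 9]
```

Each branch keeps the input length, so branches with different receptive fields can be stacked or concatenated channel-wise without resampling the signal.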

2. Related Theories

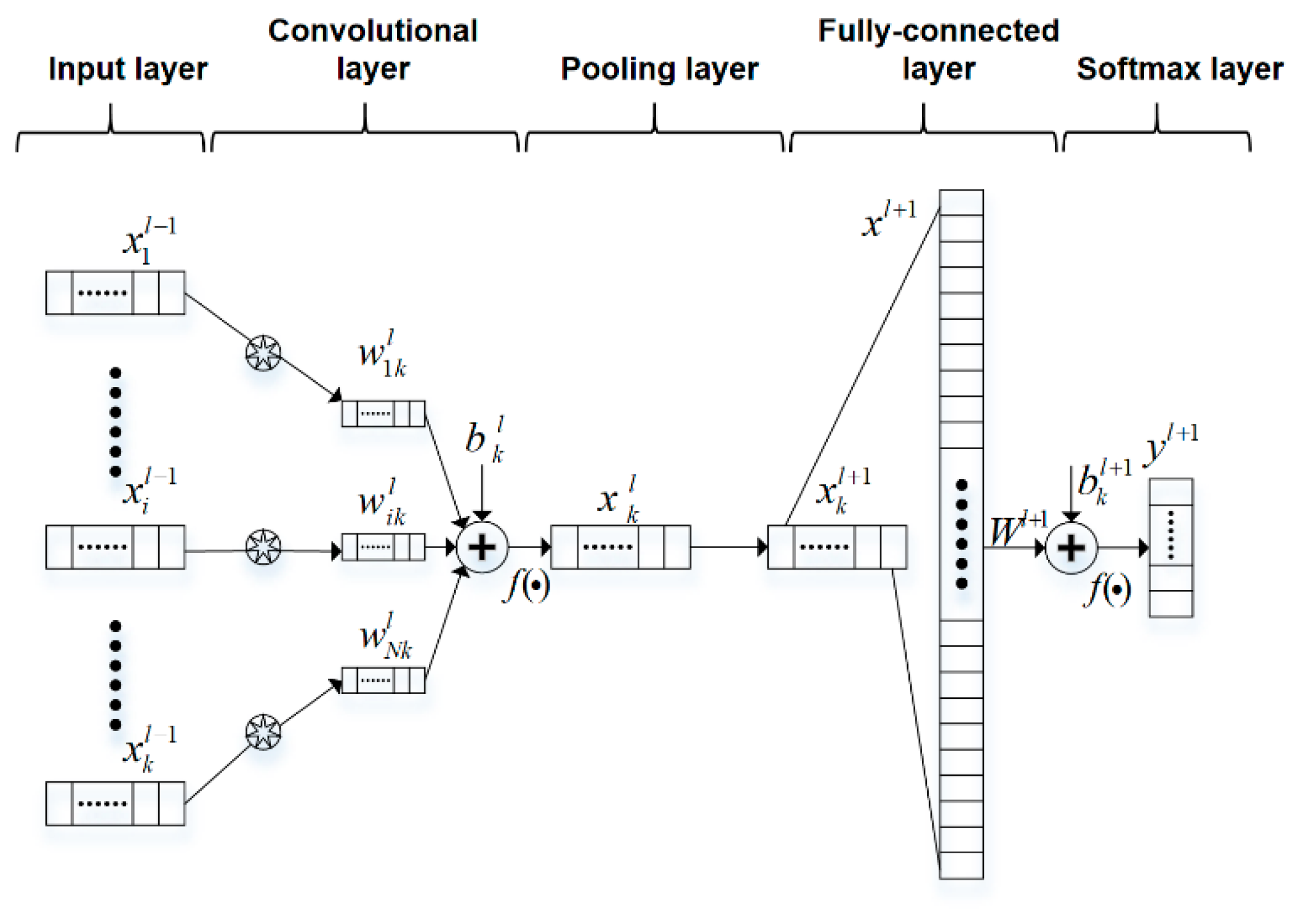

2.1. One-Dimensional Convolutional Network

2.2. Receptive Field

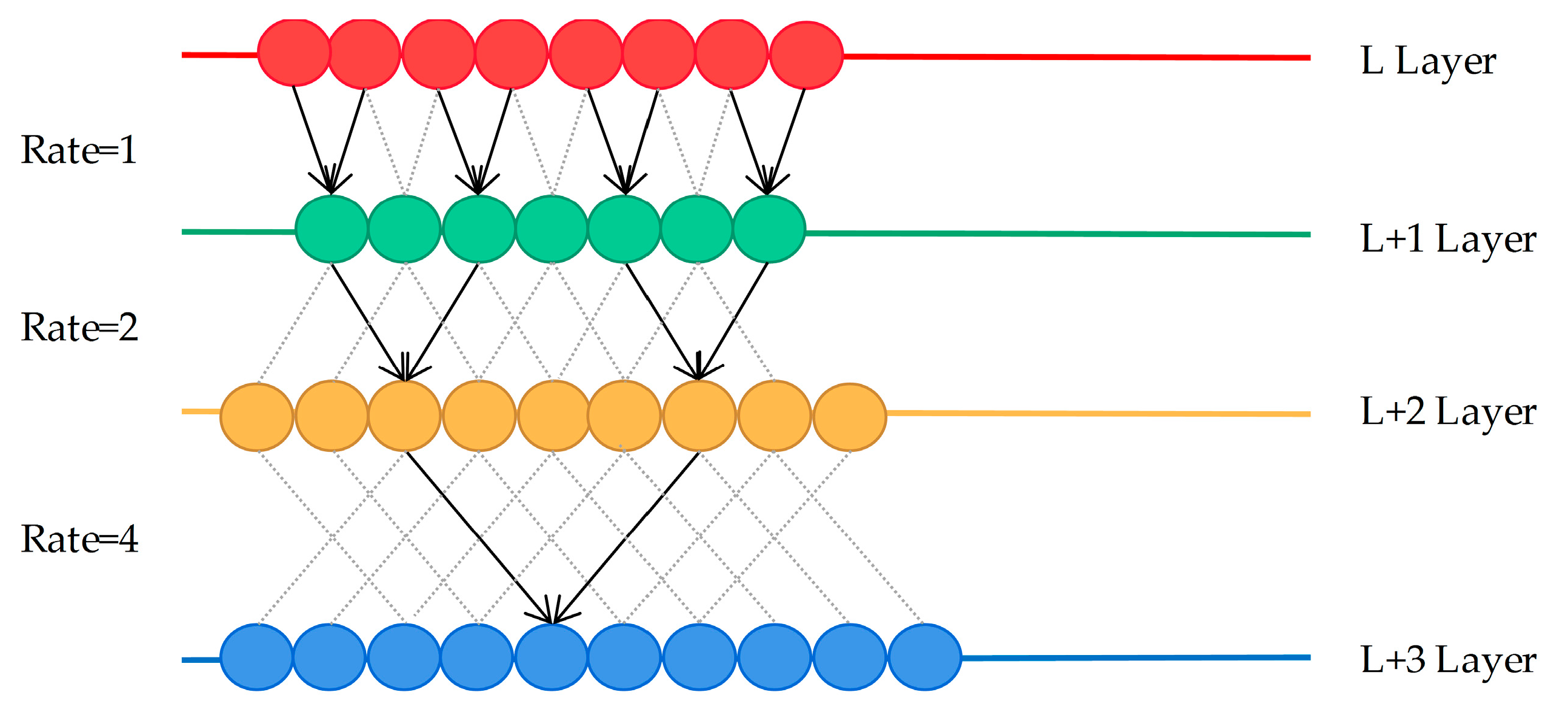

2.3. Dilated Convolution

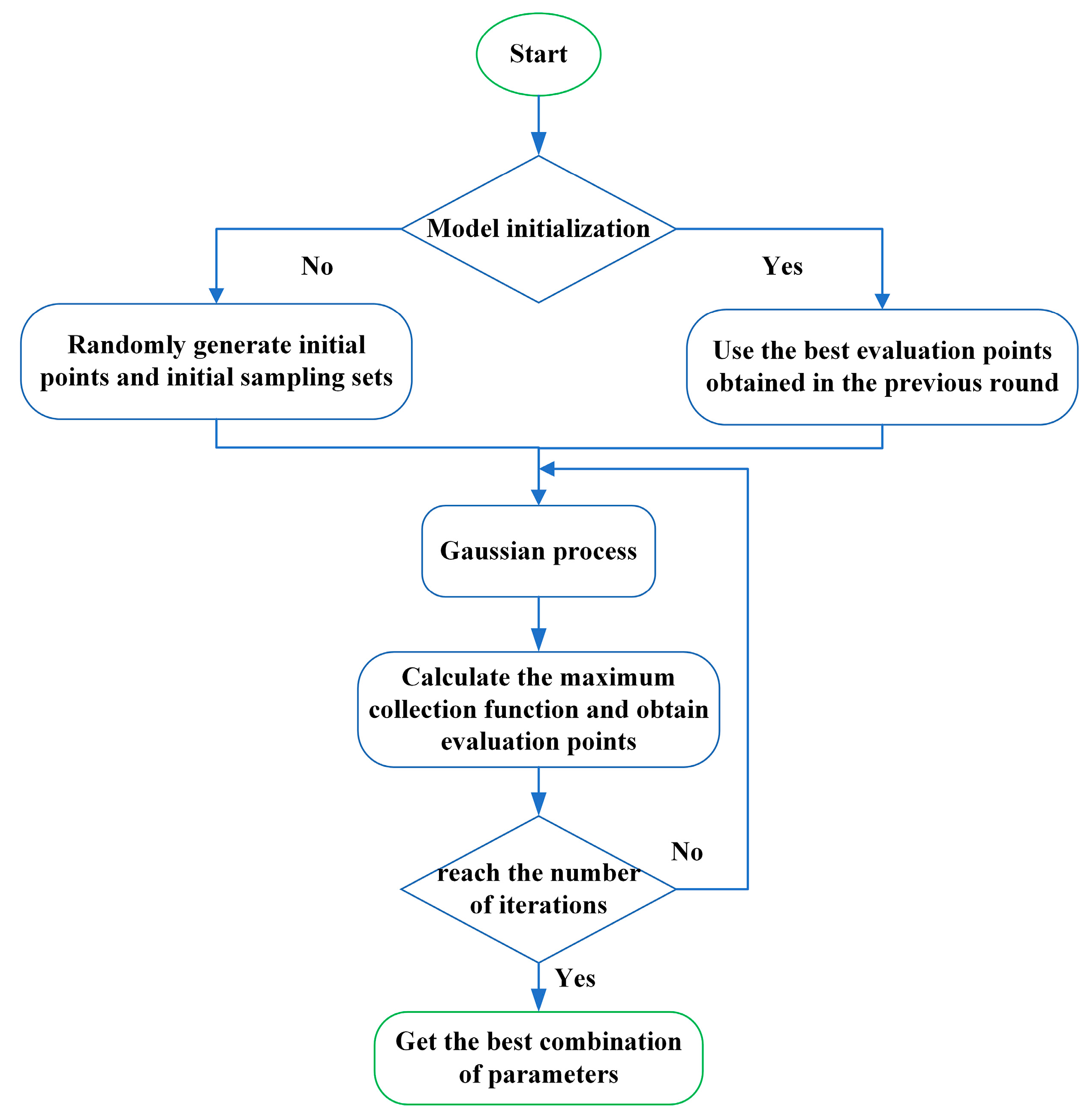

2.4. Bayesian Optimization Algorithm

- Given the objective function, sample randomly in the parameter space.

- Obtain the initial distribution of the objective function, then keep searching for its optimum based on the historical information.

- Iterate until the distribution fitted by the sampled points is roughly the same as the true objective function.

To fit the relationship between parameter selection and the objective function more comprehensively, Bayesian optimization introduces a probabilistic surrogate model. Bayesian optimization thus consists of two parts: the probabilistic surrogate model and the acquisition function.
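The steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the procedure used in the paper: the surrogate is assumed to be a zero-mean Gaussian process with an RBF kernel, the acquisition function is assumed to be expected improvement, and `toy_objective` is a hypothetical stand-in for a validation loss as a function of a single hyperparameter.

```python
import math
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential covariance between two sets of 1-D points."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_query, jitter=1e-6):
    """Probabilistic surrogate: GP posterior mean and standard deviation at x_query."""
    k_oo = rbf_kernel(x_obs, x_obs) + jitter * np.eye(len(x_obs))
    k_oq = rbf_kernel(x_obs, x_query)
    solved = np.linalg.solve(k_oo, np.column_stack([y_obs, k_oq]))
    mean = k_oq.T @ solved[:, 0]
    var = 1.0 - np.sum(k_oq * solved[:, 1:], axis=0)
    return mean, np.sqrt(np.clip(var, 1e-12, None))

def expected_improvement(mean, std, best):
    """Acquisition function (minimization form)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf

def bayes_opt(objective, low, high, n_init=4, n_iter=15, seed=0):
    rng = np.random.default_rng(seed)
    grid = np.linspace(low, high, 501)                 # candidate parameter values
    x_obs = rng.uniform(low, high, size=n_init)        # step 1: random sampling
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):                            # step 3: iterate
        mean, std = gp_posterior(x_obs, y_obs, grid)   # step 2: fit surrogate to history
        ei = expected_improvement(mean, std, y_obs.min())
        x_next = grid[np.argmax(ei)]                   # most promising next point
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    i = int(np.argmin(y_obs))
    return x_obs[i], y_obs[i]

def toy_objective(x):
    """Hypothetical loss curve with its minimum at x = 0.3."""
    return (x - 0.3) ** 2

best_x, best_y = bayes_opt(toy_objective, 0.0, 1.0)
print(best_x, best_y)
```

The surrogate supplies a mean and an uncertainty for every candidate, and the acquisition function trades the two off when choosing where to evaluate next; this is what lets the search reuse historical evaluations instead of sampling blindly.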

3. Bearing Fault Diagnosis Method Based on ALSCN

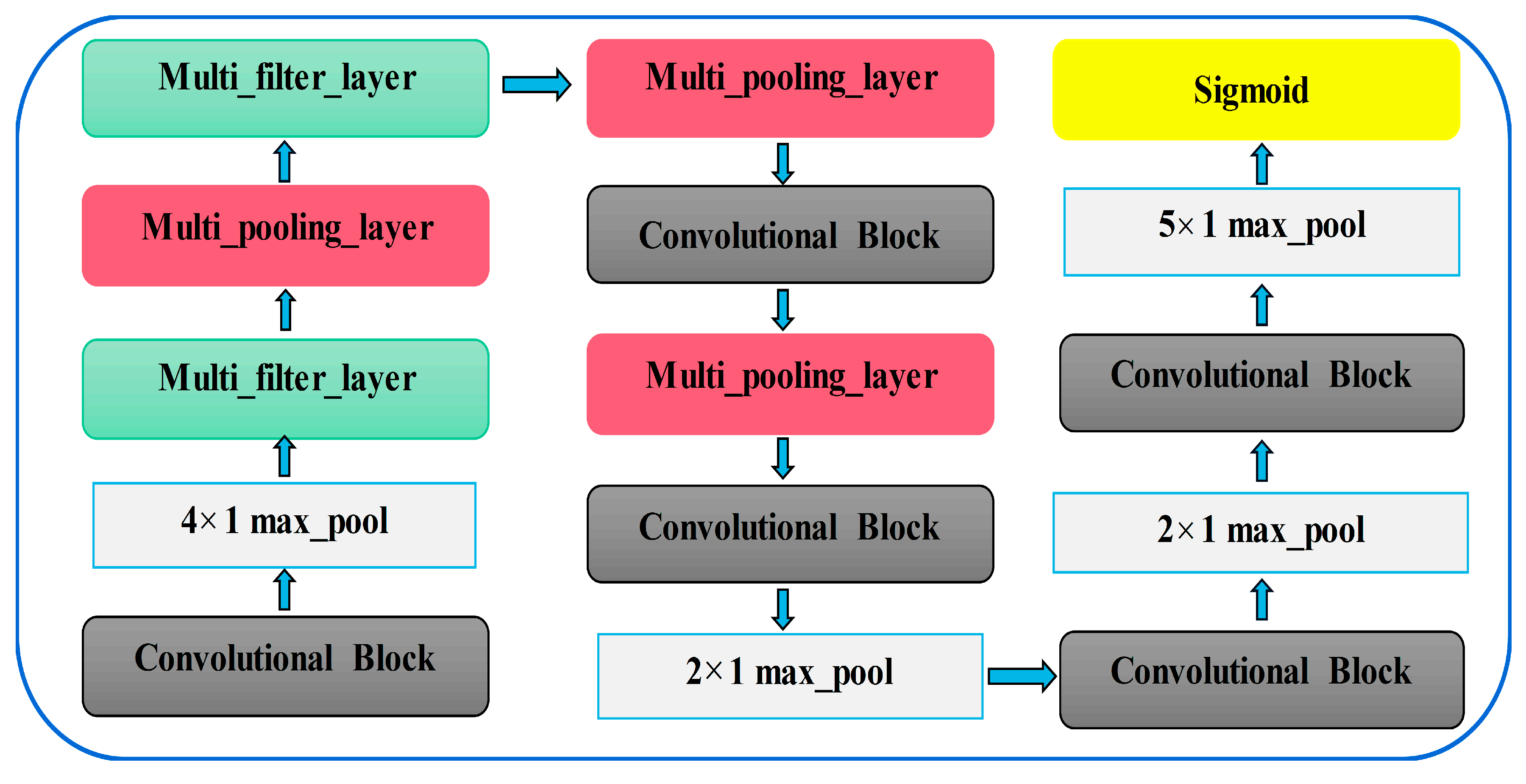

3.1. The Model Structure Proposed in This Paper

3.2. Multi-Scale Feature Extraction Module and Multi-Scale Max Pooling Module

3.2.1. Introduction of Multi-Scale Feature Extraction Module

| Algorithm 1 Multi-scale feature extraction module |

| Input: features , = 1, 2…N; Output: features , = 1, 2…N.

|

3.2.2. Introduction of Multi-Scale Max Pooling Module

| Algorithm 2 Multi-scale max pooling module |

| Input: features , = 1, 2…N; Output: features , = 1, 2…N.

|

4. Experimental Verification

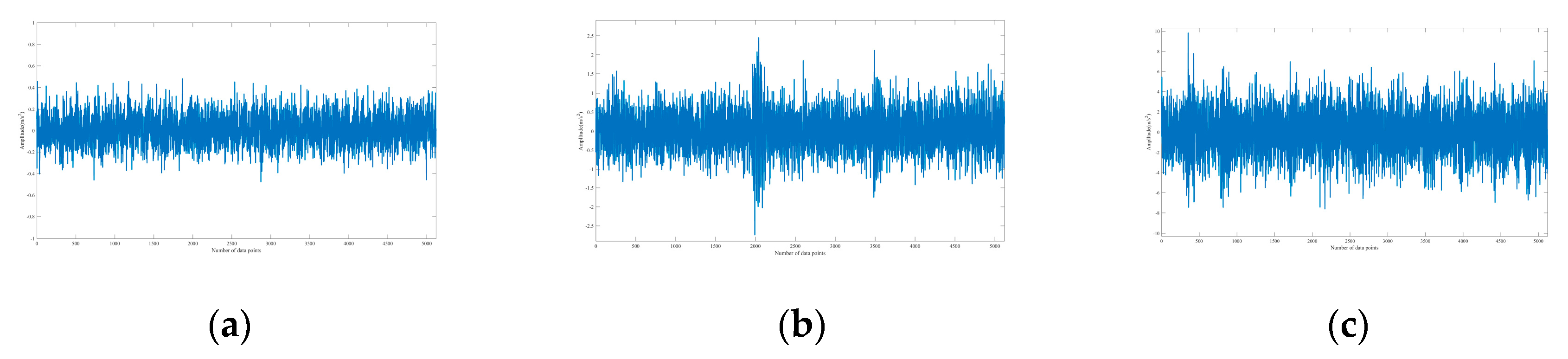

4.1. Experimental Data

4.2. Accuracy Comparison of Different Length Signals

4.3. Ablation Experiment

4.4. Model Optimal Structure Verification Experiment

4.5. Model Time-Consuming Verification Experiment

4.6. Network Performance Verification

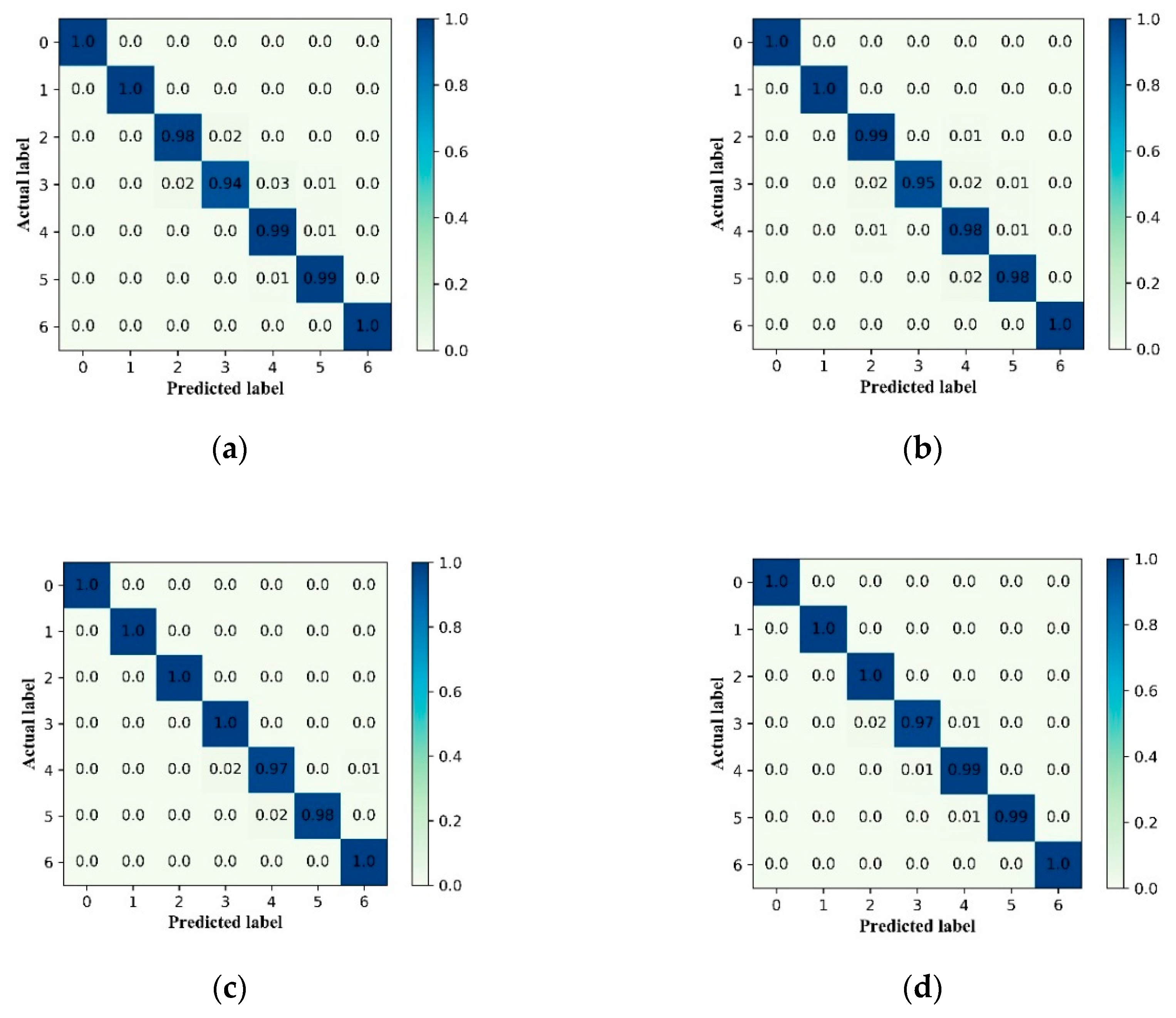

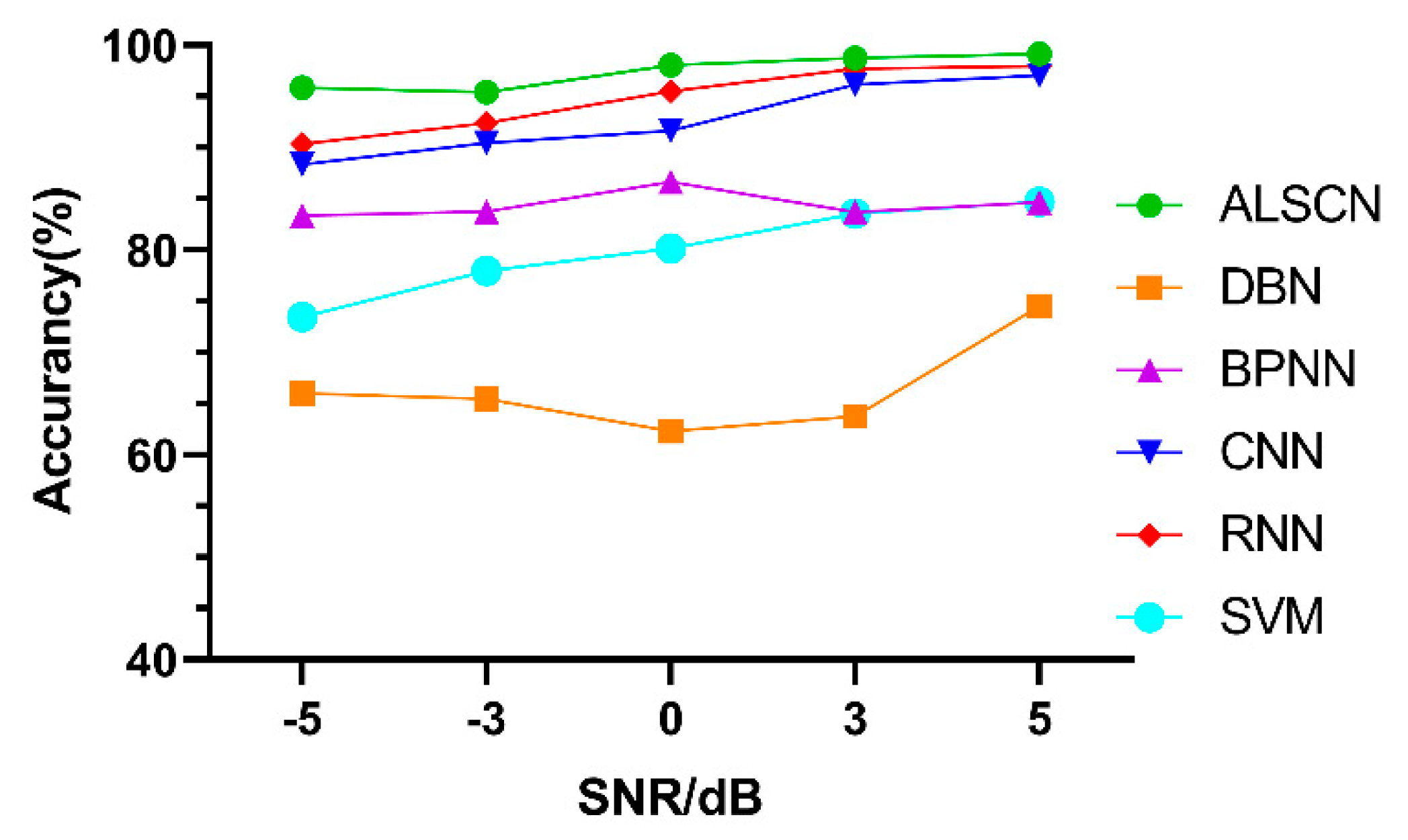

4.6.1. Robustness Verification

4.6.2. Generalization Verification

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Liu, R.; Yang, B.; Zio, E.; Chen, X. Artificial intelligence for fault diagnosis of rotating machinery: A review. Mech. Syst. Signal Process. 2018, 108, 33–47.

2. Jia, F.; Lei, Y.; Lin, J.; Zhou, X.; Lu, N. Deep neural networks: A promising tool for fault characteristic mining and intelligent diagnosis of rotating machinery with massive data. Mech. Syst. Signal Process. 2016, 72–73, 303–315.

3. Smith, W.A.; Randall, R.B. Rolling element bearing diagnostics using the Case Western Reserve University data: A benchmark study. Mech. Syst. Signal Process. 2015, 64–65, 100–131.

4. Zhao, X.P.; Wu, J.X.; Qian, C.S.; Zhang, Y.H.; Wang, L.H. Gearbox multi-fault diagnosis method based on multi-task deep learning. J. Vib. Shock 2019, 38, 271–278.

5. Ma, Y.C. Research on High Speed and Reliable Data Transmission Method in Large-Scale Land Seismic Instruments. Ph.D. Thesis, University of Science and Technology of China, Hefei, China, 2011.

6. Li, G.Q.; Deng, C.; Wu, J. Sensor Data-Driven Bearing Fault Diagnosis Based on Deep Convolutional Neural Networks and S-Transform. Sensors 2019, 19, 2750.

7. Wang, Y.; Ning, D.J.; Feng, S.L. A Novel Capsule Network Based on Wide Convolution and Multi-Scale Convolution for Fault Diagnosis. Appl. Sci. 2020, 10, 3659.

8. Zhou, G.L.; Jiang, Y.Q.; Chen, J.X.; Mei, G.M. Comparison of vibration signal processing methods of fault diagnosis model based on convolutional neural network. China Sci. Technol. Pap. 2020, 15, 729–734.

9. Che, C.C.; Wang, H.W.; Fu, Q. Deep transfer learning for rolling bearing fault diagnosis under variable operating conditions. Adv. Mech. Eng. 2019, 11, 12.

10. Xing, S.W.; Yao, W.K.; Huang, Y. Multi-sensor signal fault diagnosis with unknown compound faults based on deep learning. J. Chongqing Univ. 2020, 43, 93–100.

11. Ince, T.; Kiranyaz, S.; Eren, L.; Askar, M.; Gabbouj, M. Real-Time Motor Fault Detection by 1-D Convolutional Neural Networks. IEEE Trans. Ind. Electron. 2016, 63, 7067–7075.

12. Lei, Y.; Yang, B.; Jiang, X.; Jia, F.; Li, N.; Nandi, A.K. Applications of machine learning to machine fault diagnosis: A review and roadmap. Mech. Syst. Signal Process. 2020, 138, 106587.

13. Heng, L.; Qing, Z.; Rong, Q.X.; Sun, Y. Raw vibration signal pattern recognition with automatic hyper-parameter-optimized convolutional neural network for bearing fault diagnosis. Proc. Inst. Mech. Eng. 2020, 234, 343–360.

14. Kamara, A.F.; Chen, E.; Liu, Q.; Pan, Z. Combining contextual neural networks for time series classification. Neurocomputing 2019, 384, 57–66.

15. Gao, J.H.; Guo, Y.; Wu, X. Fault diagnosis of gearbox bearing based on SANC and one-dimensional convolutional neural network. J. Vib. Shock 2020, 39, 204–209+257.

16. Liu, H.; Zhou, J.; Zheng, Y.; Jiang, W.; Zhang, Y. Fault diagnosis of rolling bearings with recurrent neural network-based autoencoders. ISA Trans. 2018, 77, 167–178.

17. Zhu, J.; Hu, T.Z.; Jiang, B. Intelligent bearing fault diagnosis using PCA–DBN framework. Neural Comput. Appl. 2019, 5, 1–9.

18. Hu, X.Y.; Jing, Y.J.; Song, Z.K.; Hou, Y.Q. Bearing fault identification by using deep convolution neural networks based on CNN-SVM. J. Vib. Shock 2019, 18, 173–178.

19. Cabrera, D.; Sancho, F.; Cerrada, M.; Sánchez, R.-V.; Li, C. Knowledge extraction from deep convolutional neural networks applied to cyclo-stationary time-series classification. Inf. Sci. 2020, 524, 1–14.

20. Li, J.M.; Yao, X.F.; Wang, X.D. Multiscale local features learning based on BP neural network for rolling bearing intelligent fault diagnosis. Measurement 2020, 153, 107419.

21. Fawaz, H.I.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.-A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963.

22. Yang, J.H.; Han, S.; Zhang, S.; Liu, H.G.; Tang, C.Q. The Empirical Mode Decomposition of the Feature Signals of the Weak Faults of Rolling Bearings under Strong Noise. J. Vib. Eng. 2020, 33, 582–589.

23. Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

24. Janssens, O.; Slavkovikj, V.; Vervisch, B.; Stockman, K.; Loccufier, M.; Verstockt, S.; Van de Walle, R.; Hoecke, S.V. Convolutional Neural Network Based Fault Detection for Rotating Machinery. J. Sound Vib. 2016, 377, 331–345.

25. Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. In NIPS 2012, 25, 1097–1105.

26. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. arXiv 2014, arXiv:1409.4842.

27. Abdel-Hamid, O.; Mohamed, A.R.; Jiang, H.; Deng, L.; Penn, G. Convolutional Neural Networks for Speech Recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1533–1545.

28. Yang, R.; Singh, S.K.; Tavakkoli, M.; Amiri, N. CNN-LSTM deep learning architecture for computer vision-based modal frequency detection. Mech. Syst. Signal Process. 2020, 144, 106885.

29. Liu, S.; Huang, D.; Wang, Y. Receptive Field Block Net for Accurate and Fast Object Detection. arXiv 2017, arXiv:1711.07767.

30. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

31. Luo, W.; Li, Y.; Urtasun, R.; Zemel, R. Understanding the Effective Receptive Field in Deep Convolutional Neural Networks. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Curran Associates Inc.: Red Hook, NY, USA, 2016; pp. 4905–4913.

32. He, K.; Zhang, X.; Ren, S. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1904–1916.

33. Deng, S. CNN hyperparameter optimization method based on improved Bayesian optimization algorithm. Appl. Res. Comput. 2019, 36, 1984–1987.

34. Boender, C.G.E. Bayesian Approach to Global Optimization--Theory and Applications by Jonas Mockus. Math. Comput. 1991, 56, 878–879.

35. Wang, X.; Han, T. Transformer fault diagnosis based on Bayesian optimized random forest. Electr. Meas. Instrum. 2020, 10, 1–8.

36. Zhu, H.; Ning, Q.; Lei, Y.J.; Chen, B.C.; Yan, H. Rolling bearing fault classification based on attention mechanism-Inception-CNN model. J. Vib. Shock 2020, 39, 84–93.

37. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. arXiv 2015, arXiv:1512.00567.

38. Shi, L.; Zhou, Z.; Jia, H.; Yu, P.; Huang, Y. Fault Diagnosis of Functional Circuit in Avionics System Based on BPNN. In Proceedings of the 2019 Prognostics and System Health Management Conference (PHM-Qingdao), Qingdao, China, 25–27 October 2019.

39. Zhou, Q.C.; Shen, H.H.; Zhao, J.; Liu, X.C. Bearing fault diagnosis based on improved stacked recurrent neural network. J. Tongji Univ. 2019, 47, 1500–1507.

40. Ma, N.; Ni, Y.Y.; Ge, H.J. Research on Fault Diagnosis Method of Aviation Generator Based on DBN. J. Aviat. Comput. Technol. 2020, 50, 71–75.

41. Loparo, K. Case Western Reserve University Bearing Data Centre Website. Available online: http://csegroups.case.edu/bearingdatacenter/pages/download-data-file (accessed on 20 September 2017).

42. Machinery Failure Prevention Technology (MFPT) Datasets. Available online: https://www.mfpt.org/fault-data-sets/ (accessed on 17 January 2013).

| Stage | Process | Output Size | Stage | Process | Output Size |
|---|---|---|---|---|---|
| Stage 0 | Convolutional block | N × 20 × 5120 | Stage 4 | Convolutional block | N × 20 × 20 |
| | 4 × 1 max_pool | N × 20 × 1280 | | 2 × 1 max_pool | N × 20 × 10 |
| Stage 1 | Multi_filter_layer | N × 40 × 1280 | Stage 5 | Convolutional block | N × 10 × 10 |
| | Multi_pooling_layer | N × 40 × 320 | | 2 × 1 max_pool | N × 10 × 5 |
| Stage 2 | Multi_filter_layer | N × 80 × 320 | Stage 6 | Convolutional block | N × 10 × 5 |
| | Multi_pooling_layer | N × 80 × 80 | | 5 × 1 max_pool | N × 7 × 5 |
| Stage 3 | Convolutional block | N × 40 × 80 | Stage 7 | Sigmoid | N × 7 × 1 |
| | Multi_pooling_layer | N × 40 × 20 | | | |
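The signal lengths in the table above can be checked with a few lines of arithmetic. The sketch below is only a consistency check under two assumptions that are not stated in this table: every pooling step uses a stride equal to its window, and the convolutional and multi-filter blocks preserve length. The pooling factors are read off the table (a 4 × 1 pool in Stage 0, a fourfold reduction in each of the three multi-pooling layers, then 2 × 1, 2 × 1, and 5 × 1 pools); `trace_lengths` is a hypothetical helper name.

```python
def trace_lengths(input_length, pool_sizes):
    """Signal length after each pooling step, assuming stride == window size."""
    lengths = [input_length]
    for p in pool_sizes:
        if lengths[-1] % p != 0:
            raise ValueError(f"length {lengths[-1]} is not divisible by pool size {p}")
        lengths.append(lengths[-1] // p)
    return lengths

# Pooling factors for Stage 0 through Stage 6, as read from the table.
POOLS = [4, 4, 4, 4, 2, 2, 5]

lengths = trace_lengths(5120, POOLS)
print(lengths)  # [5120, 1280, 320, 80, 20, 10, 5, 1]
```

The product of the pooling factors is 5120, so under these assumptions a 5120-sample input collapses to a single position per channel, which agrees with the N × 7 × 1 size listed for the final stage.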

| Layer | Kernel Number | Size | Stride |
|---|---|---|---|
| First convolutional layer | 20 | 1 | 1 |
| 4 × 1 max pooling layer | / | 4 | 4 |
| Multi-filter layer | 200 | / | / |
| Multi-pooling layer | / | / | / |
| Second multi-filter layer | 400 | / | / |
| Second multi-pooling layer | / | / | / |
| Second convolutional layer | 40 | 1 | 1 |
| Third multi-pooling layer | / | / | / |
| Third convolutional layer | 20 | 1 | 1 |
| 2 × 1 pooling layer | / | 2 | 2 |
| Fourth convolutional layer | 10 | 1 | 1 |
| 2 × 1 pooling layer | / | 2 | 2 |
| Fifth convolutional layer | 7 | 1 | 1 |
| 5 × 1 pooling layer | / | 5 | 5 |
| Sigmoid | / | / | / |

| Data Set | Motor Speed/rpm | Load/hp | Number of Training Samples | Number of Test Samples | Fault Type |
|---|---|---|---|---|---|
| A | 1772 | 1 | 530 | 133 | NORMAL, IF18, RF18, OF18, IF36, RF36, OF36 |
| B | 1750 | 2 | 530 | 133 | NORMAL, IF18, RF18, OF18, IF36, RF36, OF36 |
| C | 1730 | 3 | 530 | 133 | NORMAL, IF18, RF18, OF18, IF36, RF36, OF36 |
| D | 1797, 1772, 1750, 1730 | 0, 1, 2, 3 | 1590 | 399 | NORMAL, IF18, RF18, OF18, IF36, RF36, OF36 |

| Data Set | 1024-Dim Test (%) | 5120-Dim Test (%) |
|---|---|---|
| A | 95.00 | 93.23 |
| B | 97.05 | 79.70 |
| C | 97.43 | 90.23 |
| D | 97.51 | 82.22 |
| Average (%) | 96.75 | 86.35 |

| Model 1 | Model 2 | Model 3 | ||||

|---|---|---|---|---|---|---|

| Module 1 | Module 2 | Module 1 | Module 2 | Module 1 | Module 2 | |

| Stage 0 | ||||||

| Stage 1 | √ | √ | √ | √ | ||

| Stage 2 | √ | √ | √ | √ | ||

| Stage 3 | √ | √ | √ | √ | ||

| Stage 4 | √ | √ | ||||

| Stage 5 | √ | |||||

| Stage 6 | ||||||

| Stage 7 | ||||||

| Sigmoid | ||||||

| Accuracy (%) | 99.17 | 95.82 | 97.03 | |||

| Stage | Order 1 | Order 2 | Order 3 |

|---|---|---|---|

| Stage 4 | 2 × 1 | 2 × 1 | 5 × 1 |

| Stage 5 | 2 × 1 | 5 × 1 | 2 × 1 |

| Stage 6 | 5 × 1 | 2 × 1 | 2 × 1 |

| Accuracy (%) | 99.17 | 96.76 | 95.07 |

| SNR/(dB) | ALSCN | DBN | BPNN | CNN | RNN | SVM |

|---|---|---|---|---|---|---|

| −5 | 90.51 ± 1.09 | 68.45 ± 2.41 | 76.60 ± 0.64 | 81.82 ± 0.45 | 67.47 ± 3.52 | 70.11 ± 0.89 |

| −3 | 92.53 ± 0.76 | 75.36 ± 1.99 | 75.51 ± 1.38 | 87.07 ± 0.92 | 80.61 ± 1.97 | 74.48 ± 1.27 |

| 0 | 93.74 ± 0.34 | 77.49 ± 2.08 | 76.41 ± 1.67 | 88.45 ± 1.03 | 95.15 ± 0.85 | 78.26 ± 0.33 |

| 3 | 98.99 ± 1.01 | 67.48 ± 3.44 | 76.57 ± 2.06 | 92.12 ± 0.14 | 97.78 ± 0.39 | 83.14 ± 1.64 |

| 5 | 99.07 ± 0.11 | 83.37 ± 1.78 | 75.36 ± 2.52 | 92.53 ± 0.57 | 97.95 ± 0.77 | 84.20 ± 1.17 |
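The SNR levels in the table above are in decibels, with SNR = 10·log10(P_signal / P_noise). As a minimal sketch of how a test signal could be corrupted to a target SNR, the code below assumes additive white Gaussian noise; the noise model, the function names, the sine-wave test signal, and the sampling rate are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def add_noise_at_snr(signal, snr_db, rng):
    """Add white Gaussian noise whose expected power gives the requested SNR in dB."""
    p_signal = np.mean(signal ** 2)
    p_noise = p_signal / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, np.sqrt(p_noise), size=signal.shape)
    return signal + noise

def measured_snr_db(clean, noisy):
    """Empirical SNR of a noisy signal relative to its clean version."""
    noise = noisy - clean
    return 10.0 * np.log10(np.mean(clean ** 2) / np.mean(noise ** 2))

rng = np.random.default_rng(0)
t = np.arange(5120) / 12000.0            # 5120 samples at a hypothetical 12 kHz rate
clean = np.sin(2 * np.pi * 100.0 * t)    # toy stand-in for a vibration signal

for snr in (-5, -3, 0, 3, 5):
    noisy = add_noise_at_snr(clean, snr, rng)
    print(snr, round(measured_snr_db(clean, noisy), 2))
```

At −5 dB the noise power is roughly three times the signal power, which gives a sense of how heavily the inputs are corrupted in the lowest row of the table.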

| Data Sets | ALSCN | CNN | DBN | BPNN | RNN | SVM |

|---|---|---|---|---|---|---|

| A | 98.18 ± 0.59 | 93.23 ± 0.82 | 74.51 ± 1.67 | 76.95 ± 2.14 | 39.10 ± 2.75 | 90.25 ± 0.37 |

| B | 98.05 ± 0.74 | 79.70 ± 1.96 | 70.06 ± 2.54 | 75.04 ± 1.62 | 38.35 ± 2.16 | 85.46 ± 0.94 |

| C | 99.11 ± 0.12 | 90.23 ± 1.20 | 75.65 ± 1.83 | 77.14 ± 1.99 | 51.35 ± 3.22 | 91.23 ± 0.47 |

| D | 99.12 ± 0.05 | 82.22 ± 0.81 | 77.57 ± 1.08 | 75.00 ± 1.47 | 97.59 ± 0.45 | 90.17 ± 1.03 |

| Type of Fault | Load/lbs | Number of Training Samples | Number of Test Samples | Sampling Rate/sps |

|---|---|---|---|---|

| NORMAL | 270 | 273 | 69 | 97,656 |

| ORF1 | 270 | 273 | 69 | 97,656 |

| ORF2 | 25, 50, 100, 150, 200, 250, 300 | 156 | 40 | 48,828 |

| IRF | 0, 50, 100, 150, 200, 250, 300 | 156 | 40 | 48,828 |

| SNR/(dB) | ALSCN | DBN | BPNN | CNN | RNN | SVM |

|---|---|---|---|---|---|---|

| −5 | 95.87 ± 0.64 | 66.05 ± 3.12 | 83.39 ± 1.01 | 88.36 ± 0.32 | 90.37 ± 0.50 | 73.45 ± 1.25 |

| −3 | 95.4 ± 0.24 | 65.45 ± 2.23 | 83.77 ± 0.92 | 90.45 ± 0.56 | 92.41 ± 0.37 | 77.98 ± 1.41 |

| 0 | 98.08 ± 0.92 | 62.32 ± 2.42 | 86.69 ± 1.01 | 91.66 ± 1.15 | 95.54 ± 0.22 | 80.17 ± 0.44 |

| 3 | 98.76 ± 0.23 | 63.76 ± 1.97 | 83.70 ± 1.74 | 96.17 ± 0.32 | 97.72 ± 0.87 | 83.60 ± 0.97 |

| 5 | 99.17 ± 0.03 | 74.52 ± 1.06 | 84.67 ± 1.49 | 97.06 ± 0.29 | 97.98 ± 0.34 | 84.73 ± 0.31 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, C.; Sun, H.; Zhao, R.; Cao, X. Research on Bearing Fault Diagnosis Method Based on an Adaptive Anti-Noise Network under Long Time Series. Sensors 2020, 20, 7031. https://doi.org/10.3390/s20247031
