A Comprehensive Survey on Local Differential Privacy toward Data Statistics and Analysis

Abstract

1. Introduction

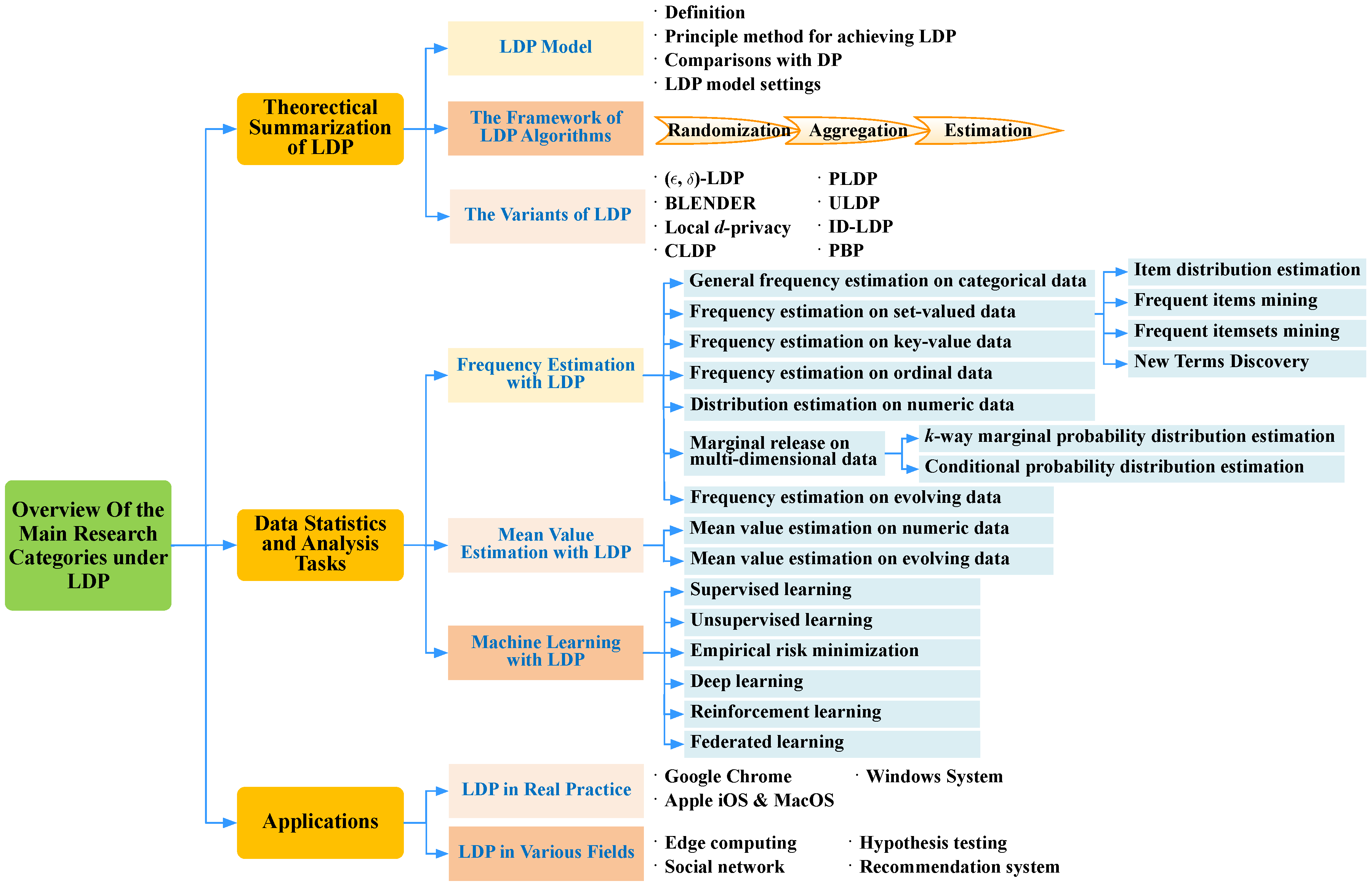

- We first provide a theoretical summary of LDP from the perspectives of LDP models, the general framework of LDP algorithms, and the variants of LDP.

- We systematically investigate and summarize the enabling LDP mechanisms for various data statistics and analysis tasks. In particular, existing state-of-the-art LDP mechanisms are reviewed from the perspectives of frequency estimation, mean value estimation, and machine learning.

- We explore practical applications of LDP to show how it is implemented in various settings, including real systems (e.g., Google Chrome, Apple iOS), edge computing, hypothesis testing, social networks, and recommendation systems.

- We further identify some promising research directions for LDP, which can provide useful guidance for new researchers.

2. Theoretical Summarization of LDP

2.1. LDP Model

2.1.1. Definition

2.1.2. The Principal Method for Achieving LDP

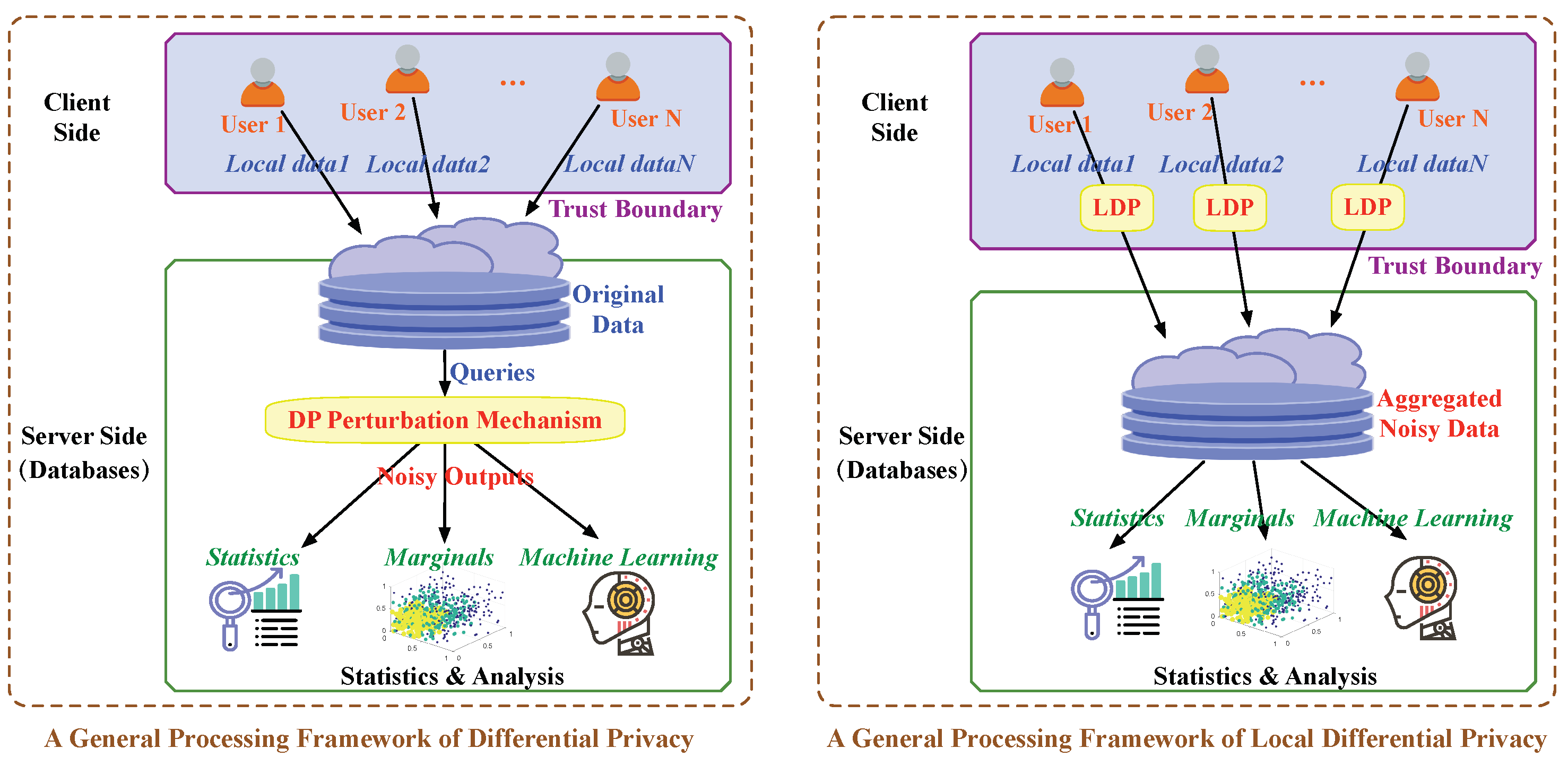

2.1.3. Comparisons with Global Differential Privacy

2.1.4. LDP Model Settings

2.2. The Framework of LDP Algorithm

Algorithm 1: The General Procedure of LDP-based Privacy-preserving Mechanisms

2.3. The Variants of LDP

2.3.1. (ε, δ)-LDP

2.3.2. BLENDER

2.3.3. Local d-Privacy

2.3.4. CLDP

2.3.5. PLDP

2.3.6. ULDP

2.3.7. ID-LDP

2.3.8. PBP

3. Frequency Estimation with LDP

3.1. General Frequency Estimation on Categorical Data

3.1.1. Direct Perturbation

3.1.2. Unary Encoding

3.1.3. Hash Encoding

3.1.4. Transformation

3.1.5. Subset Selection

Algorithm 2: The Randomization of -SM

3.2. Frequency Estimation on Set-Valued Data

3.2.1. Item Distribution Estimation

3.2.2. Frequent Items Mining

3.2.3. Frequent Itemset Mining

3.2.4. New Terms Discovery

3.3. Frequency Estimation on Key-Value Data

3.4. Frequency Estimation on Ordinal Data

3.5. Frequency Estimation on Numeric Data

3.6. Marginal Release on Multi-Dimensional Data

3.6.1. k-Way Marginal Probability Distribution Estimation

3.6.2. Conditional Probability Distribution Estimation

3.7. Frequency Estimation on Evolving Data

4. Mean Value Estimation with LDP

4.1. Mean Value Estimation on Numeric Data

4.2. Mean Value Estimation on Evolving Data

5. Machine Learning with LDP

5.1. Supervised Learning

5.2. Unsupervised Learning

5.3. Empirical Risk Minimization

5.4. Deep Learning

5.5. Reinforcement Learning

5.6. Federated Learning

6. Applications

6.1. LDP in Real Practice

- RAPPOR in Google Chrome. As the first practical deployment of LDP, RAPPOR [27] was proposed by Google in 2014 and integrated into Google Chrome to continuously collect statistics on Chrome usage (e.g., the homepage and search engine settings) while protecting users’ privacy. By analyzing the distribution of these settings, malicious software that tampers with them without user consent can be detected. Furthermore, a follow-up work [87] by the Google team extended RAPPOR to handle more complex statistical tasks when the data dictionary is not known in advance.

- LDP in iOS and macOS. Apple has deployed LDP in iOS and macOS to collect typing statistics (e.g., emoji frequency detection) while providing privacy guarantees for users [26,85,171]. The deployed algorithm uses the Fourier transformation and a sketching technique to achieve a good trade-off between large-scale learning and high utility.

- LDP in Microsoft Systems. Microsoft has also adopted LDP, deploying it first to Windows Insiders in the Windows 10 Fall Creators Update [28]. This deployment is used to collect telemetry data statistics (e.g., histogram and mean estimations) across millions of devices over time. Both α-point rounding and memoization are used to address the problem that privacy leakage accumulates as data are collected repeatedly over time.

- LDP in SAP HANA. SAP announced that its database management system SAP HANA has integrated LDP to provide privacy-enhanced processing capabilities [172] since May 2018. There are two reasons for choosing LDP. One is to provide privacy guarantees when computing counts, sums, and averages on the database. The other is to ensure maximum transparency of the privacy-enhancing methods, since LDP avoids the trouble and overhead of maintaining a privacy budget.
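The deployments above differ in their encodings, but they share the pattern of perturbing each report on the user's device and debiasing the aggregate on the server. The following is a minimal sketch of that pattern using plain binary randomized response; it is not RAPPOR or any vendor's algorithm, and the function names (`perturb_bit`, `estimate_fraction`, `simulate`) are hypothetical names chosen for illustration.

```python
import math
import random


def perturb_bit(bit, epsilon, rng):
    """Report the true bit with probability e^eps / (1 + e^eps); otherwise flip it."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return bit if rng.random() < p_truth else 1 - bit


def estimate_fraction(reports, epsilon):
    """Debias the observed share of 1s to estimate the true share of 1s."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    # E[observed] = f * p_truth + (1 - f) * (1 - p_truth); solve for f.
    return (observed - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)


def simulate(true_bits, epsilon, seed=0):
    """Perturb every user's bit locally, then aggregate on the server side."""
    rng = random.Random(seed)
    reports = [perturb_bit(b, epsilon, rng) for b in true_bits]
    return estimate_fraction(reports, epsilon)
```

For example, `simulate([1] * 6000 + [0] * 14000, epsilon=1.0)` should return a value close to 0.3, up to sampling noise. The estimate is unbiased but not clipped, so it can fall slightly outside [0, 1] for small populations.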

6.2. LDP in Various Fields

6.2.1. Edge Computing

6.2.2. Hypothesis Testing

6.2.3. Social Network

6.2.4. Recommendation System

7. Discussions and Future Directions

7.1. Strengthen Theoretical Underpinnings

- (1) The lower bound on accuracy is not yet clear. Duchi et al. [115] showed a provably optimal estimation procedure under LDP. However, lower bounds on the accuracy of other LDP protocols remain to be rigorously proved.

- (2) Can the sample complexity under LDP be further reduced? One limitation of LDP algorithms is that the number of users must be large to ensure data utility. A general rule of thumb [27] is , where is the domain size of the ith attribute and N is the data size. Some studies [56,67] have focused on scenarios with a small number of users and tried to reduce the sample complexity for all privacy regimes.

- (3) There is relatively little research on the relaxation of LDP (i.e., (ε, δ)-LDP). Theoretical study is needed to show whether adding a relaxation to LDP yields any improvement in utility or other factors [81].

- (4) More general variant definitions of LDP can be further studied. Some novel and stricter definitions based on LDP have been proposed. However, they apply only to specific kinds of datasets. For example, d-privacy is only for location datasets, and decentralized differential privacy (DDP) is only for graph datasets.
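Regarding point (2), a small calculation illustrates why LDP needs many users. This sketch assumes binary randomized response, where each user reports their true bit with probability e^ε / (1 + e^ε). Under that assumption the variance of the debiased frequency estimate is e^ε / (N (e^ε − 1)²), regardless of the true frequency. The function names are hypothetical, and other LDP protocols have different constants.

```python
import math


def rr_standard_error(epsilon, n_users):
    """Standard deviation of the binary randomized-response frequency estimate."""
    e = math.exp(epsilon)
    # Var = e^eps / (N * (e^eps - 1)^2), so std = sqrt(e^eps / N) / (e^eps - 1).
    return math.sqrt(e / n_users) / (e - 1.0)


def users_needed(epsilon, target_std):
    """Smallest N whose standard error is at most target_std."""
    e = math.exp(epsilon)
    return math.ceil(e / ((e - 1.0) ** 2 * target_std ** 2))
```

Because the error shrinks only as 1/√N, halving it requires four times as many users, and a smaller ε inflates the required N further.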

7.2. Overcome the Challenge of Knowing Data Domain

7.3. Focus on Data Correlations

7.4. Address High-Dimensional Data Analysis

7.5. Adopt Personalized/Granular Privacy Constraints

7.6. Develop Prototypical Systems

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Cheng, X.; Fang, L.; Yang, L.; Cui, S. Mobile Big Data: The Fuel for Data-Driven Wireless. IEEE Internet Things J. 2017, 4, 1489–1516.

- Guo, B.; Wang, Z.; Yu, Z.; Wang, Y.; Yen, N.Y.; Huang, R.; Zhou, X. Mobile Crowd Sensing and Computing: The Review of an Emerging Human-Powered Sensing Paradigm. ACM Comput. Surv. 2015, 48, 1–31.

- Shu, J.; Jia, X.; Yang, K.; Wang, H. Privacy-Preserving Task Recommendation Services for Crowdsourcing. IEEE Trans. Serv. Comput. 2018, 1–13.

- Lu, R.; Jin, X.; Zhang, S.; Qiu, M.; Wu, X. A Study on Big Knowledge and Its Engineering Issues. IEEE Trans. Knowl. Data Eng. 2019, 31, 1630–1644.

- Jarrett, J.; Blake, M.B.; Saleh, I. Crowdsourcing, Mixed Elastic Systems and Human-Enhanced Computing - A Survey. IEEE Trans. Serv. Comput. 2018, 11, 202–214.

- Krzywicki, A.; Wobcke, W.; Kim, Y.S.; Cai, X.; Bain, M.; Mahidadia, A.; Compton, P. Collaborative filtering for people-to-people recommendation in online dating: Data analysis and user trial. Int. J. Hum.-Comput. Stud. 2015, 76, 50–66.

- Chen, R.; Li, H.; Qin, A.K.; Kasiviswanathan, S.P.; Jin, H. Private spatial data aggregation in the local setting. In Proceedings of the 2016 IEEE 32nd International Conference on Data Engineering (ICDE), Helsinki, Finland, 16–20 May 2016; pp. 289–300.

- Yao, Y.; Xiong, S.; Qi, H.; Liu, Y.; Tolbert, L.M.; Cao, Q. Efficient histogram estimation for smart grid data processing with the loglog-Bloom-filter. IEEE Trans. Smart Grid 2014, 6, 199–208.

- Liu, Y.; Guo, W.; Fan, C.I.; Chang, L.; Cheng, C. A practical privacy-preserving data aggregation (3PDA) scheme for smart grid. IEEE Trans. Ind. Inform. 2018, 15, 1767–1774.

- Fung, B.C.; Wang, K.; Chen, R.; Yu, P.S. Privacy-preserving data publishing: A survey of recent developments. ACM Comput. Surv. 2010, 42, 1–53.

- Zhu, T.; Li, G.; Zhou, W.; Yu, P.S. Differentially Private Data Publishing and Analysis: A Survey. IEEE Trans. Knowl. Data Eng. 2017, 29, 1619–1638.

- Yang, Y.; Wu, L.; Yin, G.; Li, L.; Zhao, H. A survey on security and privacy issues in Internet-of-Things. IEEE Internet Things J. 2017, 4, 1250–1258.

- Soria-Comas, J.; Domingo-Ferrer, J. Big data privacy: Challenges to privacy principles and models. Data Sci. Eng. 2016, 1, 21–28.

- Yu, S. Big privacy: Challenges and opportunities of privacy study in the age of big data. IEEE Access 2016, 4, 2751–2763.

- Sun, Z.; Strang, K.D.; Pambel, F. Privacy and security in the big data paradigm. J. Comput. Inf. Syst. 2020, 60, 146–155.

- Hino, H.; Shen, H.; Murata, N.; Wakao, S.; Hayashi, Y. A versatile clustering method for electricity consumption pattern analysis in households. IEEE Trans. Smart Grid 2013, 4, 1048–1057.

- Zhao, J.; Jung, T.; Wang, Y.; Li, X. Achieving differential privacy of data disclosure in the smart grid. In Proceedings of the IEEE INFOCOM 2014-IEEE Conference on Computer Communications, Toronto, ON, Canada, 27 April–2 May 2014; pp. 504–512.

- Barbosa, P.; Brito, A.; Almeida, H. A technique to provide differential privacy for appliance usage in smart metering. Inf. Sci. 2016, 370, 355–367.

- Wang, T.; Zhao, J.; Yu, H.; Liu, J.; Yang, X.; Ren, X.; Shi, S. Privacy-preserving Crowd-guided AI Decision-making in Ethical Dilemmas. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 1311–1320.

- General Data Protection Regulation GDPR. Available online: https://gdpr-info.eu/ (accessed on 25 May 2018).

- Privacy Framework. Available online: https://www.nist.gov/privacy-framework (accessed on 11 July 2019).

- Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends® Theor. Comput. Sci. 2014, 9, 211–407.

- Yang, X.; Wang, T.; Ren, X.; Yu, W. Survey on improving data utility in differentially private sequential data publishing. IEEE Trans. Big Data 2017, 1–19.

- Abowd, J.M. The U.S. Census Bureau Adopts Differential Privacy. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 18–23 August 2018; p. 2867.

- Kasiviswanathan, S.P.; Lee, H.K.; Nissim, K.; Raskhodnikova, S.; Smith, A. What can we learn privately? SIAM J. Comput. 2011, 40, 793–826.

- Learning with Privacy at Scale. Available online: https://machinelearning.apple.com/research/learning-with-privacy-at-scale (accessed on 31 December 2017).

- Erlingsson, Ú.; Pihur, V.; Korolova, A. Rappor: Randomized aggregatable privacy-preserving ordinal response. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security, Scottsdale, AZ, USA, 3–7 November 2014; pp. 1054–1067.

- Ding, B.; Kulkarni, J.; Yekhanin, S. Collecting telemetry data privately. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 4–9 December 2017; pp. 3571–3580.

- Wang, N.; Xiao, X.; Yang, Y.; Zhao, J.; Hui, S.C.; Shin, H.; Shin, J.; Yu, G. Collecting and Analyzing Multidimensional Data with Local Differential Privacy. In Proceedings of the 2019 IEEE 35th International Conference on Data Engineering (ICDE), Macao, China, 8–11 April 2019; pp. 638–649.

- Qin, Z.; Yang, Y.; Yu, T.; Khalil, I.; Xiao, X.; Ren, K. Heavy hitter estimation over set-valued data with local differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 192–203.

- Cormode, G.; Kulkarni, T.; Srivastava, D. Marginal release under local differential privacy. In Proceedings of the 2018 International Conference on Management of Data, Houston, TX, USA, 10–15 June 2018; pp. 131–146.

- Wang, D.; Gaboardi, M.; Xu, J. Empirical risk minimization in non-interactive local differential privacy revisited. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 3–8 December 2018; pp. 965–974.

- Wang, Y.; Tong, Y.; Shi, D. Federated Latent Dirichlet Allocation: A Local Differential Privacy Based Framework. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 6283–6290.

- Arachchige, P.C.M.; Bertok, P.; Khalil, I.; Liu, D.; Camtepe, S.; Atiquzzaman, M. Local Differential Privacy for Deep Learning. IEEE Internet Things J. 2019, 7, 5827–5842.

- Wang, T.; Blocki, J.; Li, N.; Jha, S. Locally differentially private protocols for frequency estimation. In Proceedings of the 26th USENIX Security Symposium (USENIX Security 17), Baltimore, MD, USA, 15–17 August 2017; pp. 729–745.

- Ye, Q.; Hu, H. Local Differential Privacy: Tools, Challenges, and Opportunities. In Proceedings of the Workshop of Web Information Systems Engineering (WISE), Hong Kong, SAR, China, 26–30 November 2019; pp. 13–23.

- Bebensee, B. Local differential privacy: A tutorial. arXiv 2019, arXiv:1907.11908.

- Li, N.; Ye, Q. Mobile Data Collection and Analysis with Local Differential Privacy. In Proceedings of the IEEE International Conference on Mobile Data Management (MDM), Hong Kong, SAR, China, 10–13 June 2019; pp. 4–7.

- Zhao, P.; Zhang, G.; Wan, S.; Liu, G.; Umer, T. A survey of local differential privacy for securing internet of vehicles. J. Supercomput. 2019, 76, 1–22.

- Yang, M.; Lyu, L.; Zhao, J.; Zhu, T.; Lam, K.Y. Local Differential Privacy and Its Applications: A Comprehensive Survey. arXiv 2020, arXiv:2008.03686.

- Xiong, X.; Liu, S.; Li, D.; Cai, Z.; Niu, X. A Comprehensive Survey on Local Differential Privacy. Secur. Commun. Netw. 2020, 2020, 8829523.

- Duchi, J.C.; Jordan, M.I.; Wainwright, M.J. Local privacy and statistical minimax rates. In Proceedings of the IEEE Annual Symposium on Foundations of Computer Science, Berkeley, CA, USA, 26–29 October 2013; pp. 429–438.

- Warner, S.L. Randomized response: A survey technique for eliminating evasive answer bias. J. Am. Stat. Assoc. 1965, 60, 63–69.

- Wang, Y.; Wu, X.; Hu, D. Using Randomized Response for Differential Privacy Preserving Data Collection. In Proceedings of the EDBT/ICDT Workshops, Bordeaux, France, 15–16 March 2016; Volume 1558, pp. 1–8.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating Noise to Sensitivity in Private Data Analysis. In Proceedings of the Theory of Cryptography Conference, New York, NY, USA, 4–7 March 2006; pp. 265–284.

- McSherry, F.; Talwar, K. Mechanism Design via Differential Privacy. In Proceedings of the IEEE Symposium on Foundations of Computer Science (FOCS), Providence, RI, USA, 20–23 October 2007; Volume 7, pp. 94–103.

- Duchi, J.C.; Jordan, M.I.; Wainwright, M.J. Local privacy, data processing inequalities, and statistical minimax rates. arXiv 2013, arXiv:1302.3203.

- Joseph, M.; Mao, J.; Neel, S.; Roth, A. The Role of Interactivity in Local Differential Privacy. In Proceedings of the IEEE Annual Symposium on Foundations of Computer Science (FOCS), Baltimore, MD, USA, 9–12 November 2019; pp. 94–105.

- Wang, D.; Xu, J. On sparse linear regression in the local differential privacy model. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6628–6637.

- Dwork, C.; Kenthapadi, K.; McSherry, F.; Mironov, I.; Naor, M. Our data, ourselves: Privacy via distributed noise generation. In Proceedings of the Theory and Applications of Cryptographic Techniques, St. Petersburg, Russia, 28 May–1 June 2006; pp. 486–503.

- Bassily, R. Linear queries estimation with local differential privacy. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Naha, Okinawa, Japan, 16–18 April 2019; pp. 721–729.

- Avent, B.; Korolova, A.; Zeber, D.; Hovden, T.; Livshits, B. BLENDER: Enabling local search with a hybrid differential privacy model. In Proceedings of the USENIX Security Symposium, Vancouver, BC, Canada, 16–18 August 2017; pp. 747–764.

- Andrés, M.; Bordenabe, N.; Chatzikokolakis, K.; Palamidessi, C. Geo-Indistinguishability: Differential Privacy for Location-Based Systems. In Proceedings of the 2013 ACM SIGSAC Conference on Computer & Communications Security, Berlin, Germany, 4–8 November 2013; pp. 901–914.

- Alvim, M.S.; Chatzikokolakis, K.; Palamidessi, C.; Pazii, A. Metric-based local differential privacy for statistical applications. arXiv 2018, arXiv:1805.01456.

- Chatzikokolakis, K.; Andrés, M.E.; Bordenabe, N.E.; Palamidessi, C. Broadening the scope of differential privacy using metrics. In Proceedings of the International Symposium on Privacy Enhancing Technologies Symposium, Bloomington, IN, USA, 10–12 July 2013; pp. 82–102.

- Gursoy, M.E.; Tamersoy, A.; Truex, S.; Wei, W.; Liu, L. Secure and Utility-Aware Data Collection with Condensed Local Differential Privacy. IEEE Trans. Dependable Secur. Comput. 2019, 1–13.

- Nie, Y.; Yang, W.; Huang, L.; Xie, X.; Zhao, Z.; Wang, S. A Utility-Optimized Framework for Personalized Private Histogram Estimation. IEEE Trans. Knowl. Data Eng. 2019, 31, 655–669.

- Murakami, T.; Kawamoto, Y. Utility-optimized local differential privacy mechanisms for distribution estimation. In Proceedings of the USENIX Security Symposium, Santa Clara, CA, USA, 14–16 August 2019; pp. 1877–1894.

- Gu, X.; Li, M.; Xiong, L.; Cao, Y. Providing Input-Discriminative Protection for Local Differential Privacy. In Proceedings of the 2020 IEEE 36th International Conference on Data Engineering (ICDE), Dallas, TX, USA, 20–24 April 2020; pp. 505–516.

- Takagi, S.; Cao, Y.; Yoshikawa, M. POSTER: Data Collection via Local Differential Privacy with Secret Parameters. In Proceedings of the 15th ACM Asia Conference on Computer and Communications Security, Taipei, Taiwan, 5–9 October 2020; pp. 910–912.

- Bassily, R.; Smith, A. Local, private, efficient protocols for succinct histograms. In Proceedings of the Forty-Seventh Annual ACM Symposium on Theory of Computing, Portland, OR, USA, 14–17 June 2015; pp. 127–135.

- Wang, T.; Zhao, J.; Yang, X.; Ren, X. Locally differentially private data collection and analysis. arXiv 2019, arXiv:1906.01777.

- Kairouz, P.; Oh, S.; Viswanath, P. Extremal mechanisms for local differential privacy. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 8–13 December 2014; pp. 2879–2887.

- Kairouz, P.; Bonawitz, K.; Ramage, D. Discrete distribution estimation under local privacy. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2436–2444.

- Wang, S.; Huang, L.; Wang, P.; Deng, H.; Xu, H.; Yang, W. Private Weighted Histogram Aggregation in Crowdsourcing; Springer: Berlin/Heidelberg, Germany, 2016; pp. 250–261.

- Bloom, B.H. Space/time trade-offs in hash coding with allowable errors. Commun. ACM 1970, 13, 422–426.

- Acharya, J.; Sun, Z.; Zhang, H. Hadamard Response: Estimating Distributions Privately, Efficiently, and with Little Communication. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Naha, Okinawa, Japan, 16–18 April 2019; pp. 1120–1129.

- Cormode, G.; Kulkarni, T.; Srivastava, D. Answering range queries under local differential privacy. Proc. VLDB Endow. 2019, 12, 1126–1138.

- Wang, S.; Huang, L.; Wang, P.; Nie, Y.; Xu, H.; Yang, W.; Li, X.Y.; Qiao, C. Mutual information optimally local private discrete distribution estimation. arXiv 2016, arXiv:1607.08025.

- Wang, S.; Huang, L.; Nie, Y.; Zhang, X.; Wang, P.; Xu, H.; Yang, W. Local Differential Private Data Aggregation for Discrete Distribution Estimation. IEEE Trans. Parallel Distrib. Syst. 2019, 30, 2046–2059.

- Ren, X.; Yu, C.M.; Yu, W.; Yang, S.; Yang, X.; McCann, J. High-dimensional crowdsourced data distribution estimation with local privacy. In Proceedings of the IEEE International Conference on Computer and Information Technology (CIT), Nadi, Fiji, 8–10 December 2016; pp. 226–233.

- Ren, X.; Yu, C.M.; Yu, W.; Yang, S.; Yang, X.; McCann, J.A.; Philip, S.Y. LoPub: High-Dimensional Crowdsourced Data Publication with Local Differential Privacy. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2151–2166.

- Yang, X.; Wang, T.; Ren, X.; Yu, W. Copula-Based Multi-Dimensional Crowdsourced Data Synthesis and Release with Local Privacy. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), Singapore, 4–8 December 2017; pp. 1–6.

- Zhang, Z.; Wang, T.; Li, N.; He, S.; Chen, J. CALM: Consistent adaptive local marginal for marginal release under local differential privacy. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 212–229.

- Wang, T.; Li, N.; Jha, S. Locally differentially private heavy hitter identification. IEEE Trans. Dependable Secur. Comput. 2019, 1–12.

- Wang, S.; Huang, L.; Nie, Y.; Wang, P.; Xu, H.; Yang, W. PrivSet: Set-Valued Data Analyses with Locale Differential Privacy. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 1088–1096.

- Zhao, X.; Li, Y.; Yuan, Y.; Bi, X.; Wang, G. LDPart: Effective Location-Record Data Publication via Local Differential Privacy. IEEE Access 2019, 7, 31435–31445.

- Mishra, N.; Sandler, M. Privacy via pseudorandom sketches. In Proceedings of the Twenty-Fifth ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems, Chicago, IL, USA, 26–28 June 2006; pp. 143–152.

- Hsu, J.; Khanna, S.; Roth, A. Distributed private heavy hitters. In Proceedings of the International Colloquium on Automata, Languages, and Programming, Warwick, UK, 9–13 July 2012; pp. 461–472.

- Bassily, R.; Nissim, K.; Stemmer, U.; Thakurta, A.G. Practical locally private heavy hitters. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 4–9 December 2017; pp. 2288–2296.

- Bun, M.; Nelson, J.; Stemmer, U. Heavy hitters and the structure of local privacy. In Proceedings of the ACM SIGMOD-SIGACT-SIGAI Symposium on Principles of Database Systems, Houston, TX, USA, 10–15 June 2018; pp. 435–447.

- Jia, J.; Gong, N.Z. Calibrate: Frequency Estimation and Heavy Hitter Identification with Local Differential Privacy via Incorporating Prior Knowledge. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 2008–2016.

- Sun, C.; Fu, Y.; Zhou, J.; Gao, H. Personalized privacy-preserving frequent itemset mining using randomized response. Sci. World J. 2014, 2014, 686151.

- Wang, T.; Li, N.; Jha, S. Locally differentially private frequent itemset mining. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 21–23 May 2018; pp. 127–143.

- Thakurta, A.G.; Vyrros, A.H.; Vaishampayan, U.S.; Kapoor, G.; Freudinger, J.; Prakash, V.V.; Legendre, A.; Duplinsky, S. Emoji Frequency Detection and Deep Link Frequency. U.S. Patent 9,705,908, 19 June 2017.

- Tang, J.; Korolova, A.; Bai, X.; Wang, X.; Wang, X. Privacy loss in apple’s implementation of differential privacy on macos 10.12. arXiv 2017, arXiv:1709.02753.

- Fanti, G.; Pihur, V.; Erlingsson, Ú. Building a RAPPOR with the unknown: Privacy-preserving learning of associations and data dictionaries. Proc. Priv. Enhancing Technol. 2016, 2016, 41–61.

- Wang, N.; Xiao, X.; Yang, Y.; Hoang, T.D.; Shin, H.; Shin, J.; Yu, G. PrivTrie: Effective frequent term discovery under local differential privacy. In Proceedings of the IEEE ICDE, Paris, France, 16–19 April 2018; pp. 821–832.

- Kim, S.; Shin, H.; Baek, C.; Kim, S.; Shin, J. Learning New Words from Keystroke Data with Local Differential Privacy. IEEE Trans. Knowl. Data Eng. 2020, 32, 479–491.

- Ye, Q.; Hu, H.; Meng, X.; Zheng, H. PrivKV: Key-Value Data Collection with Local Differential Privacy. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019.

- Nguyên, T.T.; Xiao, X.; Yang, Y.; Hui, S.C.; Shin, H.; Shin, J. Collecting and analyzing data from smart device users with local differential privacy. arXiv 2016, arXiv:1606.05053.

- Sun, L.; Zhao, J.; Ye, X.; Feng, S.; Wang, T.; Bai, T. Conditional Analysis for Key-Value Data with Local Differential Privacy. arXiv 2019, arXiv:1907.05014.

- Gu, X.; Li, M.; Cheng, Y.; Xiong, L.; Cao, Y. PCKV: Locally Differentially Private Correlated Key-Value Data Collection with Optimized Utility. arXiv 2019, arXiv:1911.12834.

- Wang, S.; Nie, Y.; Wang, P.; Xu, H.; Yang, W.; Huang, L. Local private ordinal data distribution estimation. In Proceedings of the IEEE INFOCOM, Atlanta, GA, USA, 1–4 May 2017; pp. 1–9.

- Yang, J.; Cheng, X.; Su, S.; Chen, R.; Ren, Q.; Liu, Y. Collecting Preference Rankings Under Local Differential Privacy. In Proceedings of the IEEE ICDE, Macao, China, 8–11 April 2019; pp. 1598–1601.

- Black, D. The Theory of Committees and Elections; Springer Science & Business Media: Berlin, Germany, 2012.

- Reilly, B. Social choice in the south seas: Electoral innovation and the borda count in the pacific island countries. Int. Political Sci. Rev. 2002, 23, 355–372.

- Brandt, F.; Conitzer, V.; Endriss, U.; Lang, J.; Procaccia, A.D. Handbook of Computational Social Choice; Cambridge University Press: Cambridge, UK, 2016.

- Wang, S.; Du, J.; Yang, W.; Diao, X.; Liu, Z.; Nie, Y.; Huang, L.; Xu, H. Aggregating Votes with Local Differential Privacy: Usefulness, Soundness vs. Indistinguishability. arXiv 2019, arXiv:1908.04920.

- Li, C.; Hay, M.; Miklau, G.; Wang, Y. A Data- and Workload-Aware Query Answering Algorithm for Range Queries Under Differential Privacy. Proc. VLDB Endow. 2014, 7, 341–352.

- Alnemari, A.; Romanowski, C.J.; Raj, R.K. An Adaptive Differential Privacy Algorithm for Range Queries over Healthcare Data. In Proceedings of the IEEE International Conference on Healthcare Informatics, Park City, UT, USA, 23–26 August 2017; pp. 397–402.

- Kulkarni, T. Answering Range Queries Under Local Differential Privacy. In Proceedings of the 2019 International Conference on Management of Data, Amsterdam, The Netherlands, 30 June–5 July 2019; pp. 1832–1834.

- Gu, X.; Li, M.; Cao, Y.; Xiong, L. Supporting Both Range Queries and Frequency Estimation with Local Differential Privacy. In Proceedings of the IEEE Conference on Communications and Network Security (CNS), Washington, DC, USA, 10–12 June 2019; pp. 124–132.

- Li, Z.; Wang, T.; Lopuhaä-Zwakenberg, M.; Li, N.; Škoric, B. Estimating Numerical Distributions under Local Differential Privacy. In Proceedings of the ACM SIGMOD International Conference on Management of Data, Portland, OR, USA, 14–19 June 2020; pp. 621–635.

- Wang, T.; Yang, X.; Ren, X.; Yu, W.; Yang, S. Locally Private High-dimensional Crowdsourced Data Release based on Copula Functions. IEEE Trans. Serv. Comput. 2019, 1–14.

- Qardaji, W.; Yang, W.; Li, N. PriView: Practical differentially private release of marginal contingency tables. In Proceedings of the 2014 ACM SIGMOD International Conference on Management of Data, Snowbird, UT, USA, 22–27 June 2014; pp. 1435–1446.

- Wang, T.; Ding, B.; Zhou, J.; Hong, C.; Huang, Z.; Li, N.; Jha, S. Answering multi-dimensional analytical queries under local differential privacy. In Proceedings of the International Conference Management of Data, Amsterdam, The Netherlands, 30 June–5 July 2019; pp. 159–176.

- Xu, M.; Wang, T.; Ding, B.; Zhou, J.; Hong, C.; Huang, Z. DPSAaS: Multi-Dimensional Data Sharing and Analytics as Services under Local Differential Privacy. Proc. VLDB Endow. 2019, 12, 1862–1865.

- Xue, Q.; Zhu, Y.; Wang, J. Joint Distribution Estimation and Naïve Bayes Classification under Local Differential Privacy. IEEE Trans. Emerg. Topics Comput. 2019, 1–11.

- Cao, Y.; Yoshikawa, M.; Xiao, Y.; Xiong, L. Quantifying Differential Privacy under Temporal Correlations. In Proceedings of the IEEE ICDE, San Diego, CA, USA, 19–22 April 2017; pp. 821–832.

- Wang, H.; Xu, Z. CTS-DP: Publishing correlated time-series data via differential privacy. Knowl. Based Syst. 2017, 122, 167–179.

- Cao, Y.; Yoshikawa, M.; Xiao, Y.; Xiong, L. Quantifying Differential Privacy in Continuous Data Release Under Temporal Correlations. IEEE Trans. Knowl. Data Eng. 2019, 31, 1281–1295. [Google Scholar] [CrossRef] [PubMed]

- Joseph, M.; Roth, A.; Ullman, J.; Waggoner, B. Local Differential Privacy for Evolving Data. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 3–8 December 2018; pp. 2381–2390. [Google Scholar]

- Dwork, C.; Naor, M.; Pitassi, T.; Rothblum, G.N. Differential privacy under continual observation. In Proceedings of the ACM Symposium on Theory of Computing, Cambridge, MA, USA, 5–8 June 2010; pp. 715–724. [Google Scholar]

- Duchi, J.C.; Jordan, M.I.; Wainwright, M.J. Minimax optimal procedures for locally private estimation. J. Am. Stat. Assoc. 2018, 113, 182–201. [Google Scholar] [CrossRef]

- Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Membership inference attacks against machine learning models. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 3–18. [Google Scholar]

- Song, C.; Ristenpart, T.; Shmatikov, V. Machine learning models that remember too much. In Proceedings of the ACM SIGSAC CCS, Dallas, TX, USA, 30 October–3 November 2017; pp. 587–601. [Google Scholar]

- Fredrikson, M.; Jha, S.; Ristenpart, T. Model inversion attacks that exploit confidence information and basic countermeasures. In Proceedings of the ACM SIGSAC CCS, Denver, CO, USA, 12–16 October 2015; pp. 1322–1333. [Google Scholar]

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the ACM SIGSAC CCS, Vienna, Austria, 24–28 October 2016; pp. 308–318. [Google Scholar]

- Phan, N.; Wu, X.; Hu, H.; Dou, D. Adaptive laplace mechanism: Differential privacy preservation in deep learning. In Proceedings of the IEEE International Conference on Data Mining (ICDM), New Orleans, LA, USA, 18–21 November 2017; pp. 385–394. [Google Scholar]

- Lee, J.; Kifer, D. Concentrated differentially private gradient descent with adaptive per-iteration privacy budget. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1656–1665.

- Zhao, J.; Chen, Y.; Zhang, W. Differential Privacy Preservation in Deep Learning: Challenges, Opportunities and Solutions. IEEE Access 2019, 7, 48901–48911.

- Jayaraman, B.; Evans, D. Evaluating Differentially Private Machine Learning in Practice. In Proceedings of the USENIX Security Symposium, Santa Clara, CA, USA, 14–16 August 2019; pp. 1895–1912.

- Yilmaz, E.; Al-Rubaie, M.; Chang, J.M. Locally differentially private naive Bayes classification. arXiv 2019, arXiv:1905.01039.

- Berrett, T.; Butucea, C. Classification under local differential privacy. arXiv 2019, arXiv:1912.04629.

- Kung, S.Y. Kernel Methods and Machine Learning; Cambridge University Press: Cambridge, UK, 2014.

- Nissim, K.; Stemmer, U.; Vadhan, S. Locating a small cluster privately. In Proceedings of the ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems, San Francisco, CA, USA, 26 June–1 July 2016; pp. 413–427.

- Su, D.; Cao, J.; Li, N.; Bertino, E.; Jin, H. Differentially Private K-Means Clustering. In Proceedings of the ACM Conf. Data and Application Security and Privacy, New Orleans, LA, USA, 9–11 March 2016; pp. 26–37.

- Feldman, D.; Xiang, C.; Zhu, R.; Rus, D. Coresets for differentially private k-means clustering and applications to privacy in mobile sensor networks. In Proceedings of the ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), Pittsburgh, PA, USA, 18–21 April 2017; pp. 3–16.

- Nissim, K.; Stemmer, U. Clustering Algorithms for the Centralized and Local Models. In Algorithmic Learning Theory; Springer Verlag: Berlin/Heidelberg, Germany, 2018; pp. 619–653.

- Sun, L.; Zhao, J.; Ye, X. Distributed Clustering in the Anonymized Space with Local Differential Privacy. arXiv 2019, arXiv:1906.11441.

- Karapiperis, D.; Gkoulalas-Divanis, A.; Verykios, V.S. Distance-aware encoding of numerical values for privacy-preserving record linkage. In Proceedings of the IEEE ICDE, San Diego, CA, USA, 19–22 April 2017; pp. 135–138.

- Karapiperis, D.; Gkoulalas-Divanis, A.; Verykios, V.S. FEDERAL: A framework for distance-aware privacy-preserving record linkage. IEEE Trans. Knowl. Data Eng. 2018, 30, 292–304.

- Li, Y.; Liu, S.; Wang, J.; Liu, M. A local-clustering-based personalized differential privacy framework for user-based collaborative filtering. In Proceedings of the International Conference on Database Systems for Advanced Applications, Suzhou, China, 27–30 March 2017; pp. 543–558.

- Akter, M.; Hashem, T. Computing aggregates over numeric data with personalized local differential privacy. In Proceedings of the Australasian Conference on Information Security and Privacy, Auckland, New Zealand, 3–5 July 2017; pp. 249–260.

- Xia, C.; Hua, J.; Tong, W.; Zhong, S. Distributed K-Means clustering guaranteeing local differential privacy. Comput. Secur. 2020, 90, 1–11.

- Chaudhuri, K.; Monteleoni, C.; Sarwate, A.D. Differentially private empirical risk minimization. J. Mach. Learn. Res. 2011, 12, 1069–1109.

- Smith, A.; Thakurta, A.; Upadhyay, J. Is interaction necessary for distributed private learning? In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 58–77.

- Zheng, K.; Mou, W.; Wang, L. Collect at once, use effectively: Making non-interactive locally private learning possible. In Proceedings of the International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 4130–4139.

- Wang, D.; Chen, C.; Xu, J. Differentially Private Empirical Risk Minimization with Non-convex Loss Functions. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6526–6535.

- Wang, D.; Zhang, H.; Gaboardi, M.; Xu, J. Estimating Smooth GLM in Non-interactive Local Differential Privacy Model with Public Unlabeled Data. arXiv 2019, arXiv:1910.00482.

- Wang, D.; Xu, J. Principal component analysis in the local differential privacy model. Theor. Comput. Sci. 2020, 809, 296–312.

- Fan, W.; He, J.; Guo, M.; Li, P.; Han, Z.; Wang, R. Privacy preserving classification on local differential privacy in data centers. J. Parallel Distrib. Comput. 2020, 135, 70–82.

- Yin, C.; Zhou, B.; Yin, Z.; Wang, J. Local privacy protection classification based on human-centric computing. Hum.-Centric Comput. Inf. Sci. 2019, 9, 33.

- Van der Hoeven, D. User-Specified Local Differential Privacy in Unconstrained Adaptive Online Learning. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 8–14 December 2019; pp. 14080–14089.

- Jun, K.S.; Orabona, F. Parameter-Free Locally Differentially Private Stochastic Subgradient Descent. arXiv 2019, arXiv:1911.09564.

- Osia, S.A.; Shamsabadi, A.S.; Sajadmanesh, S.; Taheri, A.; Katevas, K.; Rabiee, H.R.; Lane, N.D.; Haddadi, H. A hybrid deep learning architecture for privacy-preserving mobile analytics. IEEE Internet Things J. 2020, 7, 4505–4518.

- Zhao, J. Distributed Deep Learning under Differential Privacy with the Teacher-Student Paradigm. In Proceedings of the Workshops of AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Xu, C.; Ren, J.; She, L.; Zhang, Y.; Qin, Z.; Ren, K. EdgeSanitizer: Locally Differentially Private Deep Inference at the Edge for Mobile Data Analytics. IEEE Internet Things J. 2019, 6, 5140–5151.

- Pan, X.; Wang, W.; Zhang, X.; Li, B.; Yi, J.; Song, D. How you act tells a lot: Privacy-leaking attack on deep reinforcement learning. In Proceedings of the International Conference on Autonomous Agents and MultiAgent Systems, Montreal, QC, Canada, 13–17 May 2019; pp. 368–376.

- Gajane, P.; Urvoy, T.; Kaufmann, E. Corrupt bandits for preserving local privacy. In Algorithmic Learning Theory; Springer Verlag: Berlin/Heidelberg, Germany, 2018; pp. 387–412.

- Basu, D.; Dimitrakakis, C.; Tossou, A. Differential Privacy for Multi-armed Bandits: What Is It and What Is Its Cost? arXiv 2019, arXiv:1905.12298.

- Ono, H.; Takahashi, T. Locally Private Distributed Reinforcement Learning. arXiv 2020, arXiv:2001.11718.

- Ren, W.; Zhou, X.; Liu, J.; Shroff, N.B. Multi-Armed Bandits with Local Differential Privacy. arXiv 2020, arXiv:2007.03121.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. Deep reinforcement learning: A brief survey. IEEE Signal Process. Mag. 2017, 34, 26–38.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated Machine Learning: Concept and Applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

- Yang, Q.; Liu, Y.; Cheng, Y.; Kang, Y.; Chen, T.; Yu, H. Federated learning. Synth. Lect. Artif. Intell. Mach. Learn. 2019, 13, 1–207.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, Methods, and Future Directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- Li, Q.; Wen, Z.; He, B. Federated Learning Systems: Vision, Hype and Reality for Data Privacy and Protection. arXiv 2019, arXiv:1907.09693.

- Zheng, H.; Hu, H.; Han, Z. Preserving User Privacy For Machine Learning: Local Differential Privacy or Federated Machine Learning. IEEE Intell. Syst. 2020, 35, 5–14.

- Cao, H.; Liu, S.; Zhao, R.; Xiong, X. IFed: A novel federated learning framework for local differential privacy in Power Internet of Things. Int. J. Distrib. Sens. Netw. 2020, 16, 1–13.

- Seif, M.; Tandon, R.; Li, M. Wireless federated learning with local differential privacy. arXiv 2020, arXiv:2002.05151.

- Geyer, R.C.; Klein, T.; Nabi, M. Differentially private federated learning: A client level perspective. arXiv 2017, arXiv:1712.07557.

- Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.; Poor, H.V. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

- Truex, S.; Liu, L.; Chow, K.H.; Gursoy, M.E.; Wei, W. LDP-Fed: Federated learning with local differential privacy. In Proceedings of the ACM International Workshop on Edge Systems, Analytics and Networking, Heraklion, Greece, 27 April 2020; pp. 61–66.

- Bhowmick, A.; Duchi, J.; Freudiger, J.; Kapoor, G.; Rogers, R. Protection against reconstruction and its applications in private federated learning. arXiv 2018, arXiv:1812.00984.

- Li, J.; Khodak, M.; Caldas, S.; Talwalkar, A. Differentially private meta-learning. arXiv 2019, arXiv:1909.05830.

- Liu, R.; Cao, Y.; Yoshikawa, M.; Chen, H. FedSel: Federated SGD under Local Differential Privacy with Top-k Dimension Selection. arXiv 2020, arXiv:2003.10637.

- Sun, L.; Qian, J.; Chen, X.; Yu, P.S. LDP-FL: Practical Private Aggregation in Federated Learning with Local Differential Privacy. arXiv 2020, arXiv:2007.15789.

- Naseri, M.; Hayes, J.; De Cristofaro, E. Toward Robustness and Privacy in Federated Learning: Experimenting with Local and Central Differential Privacy. arXiv 2020, arXiv:2009.03561.

- Apple iOS Security. Available online: https://developer.apple.com/documentation/security (accessed on 10 May 2019).

- Kessler, S.; Hoff, J.; Freytag, J.C. SAP HANA goes private: From privacy research to privacy aware enterprise analytics. Proc. VLDB Endow. 2019, 12, 1998–2009.

- Lin, J.; Yu, W.; Zhang, N.; Yang, X.; Zhang, H.; Zhao, W. A Survey on Internet of Things: Architecture, Enabling Technologies, Security and Privacy, and Applications. IEEE Internet Things J. 2017, 4, 1125–1142.

- Usman, M.; Jan, M.A.; Puthal, D. PAAL: A Framework based on Authentication, Aggregation and Local Differential Privacy for Internet of Multimedia Things. IEEE Internet Things J. 2020, 7.

- Ou, L.; Qin, Z.; Liao, S.; Li, T.; Zhang, D. Singular Spectrum Analysis for Local Differential Privacy of Classifications in the Smart Grid. IEEE Internet Things J. 2020, 7, 5246–5255.

- Zhao, Y.; Zhao, J.; Yang, M.; Wang, T.; Wang, N.; Lyu, L.; Niyato, D.; Lam, K.Y. Local differential privacy based federated learning for Internet of Things. arXiv 2020, arXiv:2004.08856.

- Song, Z.; Li, Z.; Chen, X. Local Differential Privacy Preserving Mechanism for Multi-attribute Data in Mobile Crowdsensing with Edge Computing. In Proceedings of the IEEE International Conference on Smart Internet of Things (SmartIoT), Tianjin, China, 9–11 August 2019; pp. 283–290.

- Gaboardi, M.; Lim, H.W.; Rogers, R.M.; Vadhan, S.P. Differentially private chi-squared hypothesis testing: Goodness of fit and independence testing. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2111–2120.

- Cai, B.; Daskalakis, C.; Kamath, G. Priv’IT: Private and sample efficient identity testing. In Proceedings of the International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 635–644.

- Sheffet, O. Differentially private ordinary least squares. In Proceedings of the International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 3105–3114.

- Tong, X.; Xi, B.; Kantarcioglu, M.; Inan, A. Gaussian mixture models for classification and hypothesis tests under differential privacy. In Proceedings of the IFIP Annual Conference on Data and Applications Security and Privacy, Philadelphia, PA, USA, 19–21 July 2017; pp. 123–141.

- Kairouz, P.; Oh, S.; Viswanath, P. Extremal Mechanisms for Local Differential Privacy. J. Mach. Learn. Res. 2016, 17, 1–51.

- Sheffet, O. Locally Private Hypothesis Testing. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4612–4621.

- Gaboardi, M.; Rogers, R. Local Private Hypothesis Testing: Chi-Square Tests. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1612–1621.

- Gaboardi, M.; Rogers, R.; Sheffet, O. Locally Private Mean Estimation: Z-test and Tight Confidence Intervals. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Naha, Okinawa, Japan, 16–18 April 2019; pp. 2545–2554.

- Acharya, J.; Canonne, C.; Freitag, C.; Tyagi, H. Test without Trust: Optimal Locally Private Distribution Testing. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Naha, Okinawa, Japan, 16–18 April 2019; pp. 2067–2076.

- Qin, Z.; Yu, T.; Yang, Y.; Khalil, I.; Xiao, X.; Ren, K. Generating synthetic decentralized social graphs with local differential privacy. In Proceedings of the ACM SIGSAC CCS, Dallas, TX, USA, 30 October–3 November 2017; pp. 425–438.

- Zhang, Y.; Wei, J.; Zhang, X.; Hu, X.; Liu, W. A two-phase algorithm for generating synthetic graph under local differential privacy. In Proceedings of the International Conference on Communication and Network Security, Beijing, China, 30 May–1 June 2018; pp. 84–89.

- Liu, P.; Xu, Y.; Jiang, Q.; Tang, Y.; Guo, Y.; Wang, L.E.; Li, X. Local differential privacy for social network publishing. Neurocomputing 2020, 391, 273–279.

- Gao, T.; Li, F.; Chen, Y.; Zou, X. Preserving local differential privacy in online social networks. In Proceedings of the International Conference on Wireless Algorithms, Systems, and Applications, Guilin, China, 19–21 June 2017; pp. 393–405.

- Gao, T.; Li, F.; Chen, Y.; Zou, X. Local differential privately anonymizing online social networks under HRG-based model. IEEE Trans. Comput. Soc. Syst. 2018, 5, 1009–1020.

- Yang, J.; Ma, X.; Bai, X.; Cui, L. Graph Publishing with Local Differential Privacy for Hierarchical Social Networks. In Proceedings of the IEEE International Conference on Electronics Information and Emergency Communication (ICEIEC), Beijing, China, 17–19 July 2020; pp. 123–126.

- Ye, Q.; Hu, H.; Au, M.H.; Meng, X.; Xiao, X. Towards Locally Differentially Private Generic Graph Metric Estimation. In Proceedings of the IEEE ICDE, Dallas, TX, USA, 20–24 April 2020; pp. 1922–1925.

- Sun, H.; Xiao, X.; Khalil, I.; Yang, Y.; Qin, Z.; Wang, H.; Yu, T. Analyzing subgraph statistics from extended local views with decentralized differential privacy. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 703–717.

- Wei, C.; Ji, S.; Liu, C.; Chen, W.; Wang, T. AsgLDP: Collecting and Generating Decentralized Attributed Graphs With Local Differential Privacy. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3239–3254.

- Jeong, H.; Park, B.; Park, M.; Kim, K.B.; Choi, K. Big data and rule-based recommendation system in Internet of Things. Clust. Comput. 2019, 22, 1837–1846.

- Calandrino, J.A.; Kilzer, A.; Narayanan, A.; Felten, E.W.; Shmatikov, V. You Might Also Like: Privacy Risks of Collaborative Filtering. In Proceedings of the IEEE Symposium on Security and Privacy (SP), Berkeley, CA, USA, 22–25 May 2011; pp. 231–246.

- Liu, R.; Cao, J.; Zhang, K.; Gao, W.; Liang, J.; Yang, L. When Privacy Meets Usability: Unobtrusive Privacy Permission Recommendation System for Mobile Apps Based on Crowdsourcing. IEEE Trans. Serv. Comput. 2018, 11, 864–878.

- Shin, H.; Kim, S.; Shin, J.; Xiao, X. Privacy enhanced matrix factorization for recommendation with local differential privacy. IEEE Trans. Knowl. Data Eng. 2018, 30, 1770–1782.

- Jiang, J.Y.; Li, C.T.; Lin, S.D. Towards a more reliable privacy-preserving recommender system. Inf. Sci. 2019, 482, 248–265.

- Guo, T.; Luo, J.; Dong, K.; Yang, M. Locally differentially private item-based collaborative filtering. Inf. Sci. 2019, 502, 229–246.

- Gao, C.; Huang, C.; Lin, D.; Jin, D.; Li, Y. DPLCF: Differentially Private Local Collaborative Filtering. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, China, 25–30 July 2020; pp. 961–970.

- Wang, S.; Huang, L.; Tian, M.; Yang, W.; Xu, H.; Guo, H. Personalized privacy-preserving data aggregation for histogram estimation. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), San Diego, CA, USA, 6–10 December 2015; pp. 1–6.

| Notation | Explanation |
|---|---|
|  | Data record of user |
|  | Domain of categorical attribute with size |
| v/ | Input value/Perturbed value |
| B | Vector of the encoded value |
| N | Number of users |
| // | The true/reported/estimated number of value v |
| A | Attribute |
| d | Dimension |
| / | Privacy budget/Probability of failure |
|  | Perturbation probability |
| / | The true/estimated frequency of value v |
| /H | Hash function universe/Hash function |

| Notion | Model | Server | Neighboring Datasets | Basic Mechanism | Property | Applications |
|---|---|---|---|---|---|---|
| DP [22,45] | Central | Trusted | Two datasets | Laplace/Exponential Mechanisms [45,46] | Sequential Composition, Post-processing | Data collection, statistics, publishing, analysis |
| LDP [25,42] | Local | No requirement | Two records | Randomized Response [42,43] | Sequential Composition, Post-processing | Data collection, statistics, publishing, analysis |

| LDP Variants | Definition | Purpose | Design Idea | Target Data Type | Main Protocol | = LDP? |
|---|---|---|---|---|---|---|
| LDP [35] |  | - | - | All data types | RR-based method | - |
| -LDP [61,62] | See Formula (5) | A relaxed variant of LDP | LDP fails with a small probability | All data types | RR-based method | When |
| BLENDER [52] | Same as -DP | Improve data utility by combining global DP and LDP | Group user pool | Categorical data | Laplace mechanism | - |
| Local d-privacy [54] |  | Enhance data utility for metric spaces | Metric-based method | Metric data, e.g., location data | Discrete Laplace Geometric mechanisms | - |
| CLDP [56] |  | Solve the problem of a small number of users | Metric-based method | Categorical data | Exponential mechanism | - |
| PLDP [57] |  | Achieve granular privacy constraints | Advanced combination [57]; PCE [7] | Categorical data | RR-based method | When |
| ULDP [58] | See Definition 6 | Optimize data utility | Only provide privacy guarantees for sensitive data | Categorical data | RR-based method | When and |
| ID-LDP [59] |  | Provide input-discriminative protection for different inputs | Quantify indistinguishability | Categorical data | Unary Encoding | When for each value v |
| PBP [60] | See Definition 9 | Achieve privacy amplification of LDP | Keep privacy parameters secret | Categorical data | RR-based method | - |

| Encoding Principle | LDP Algo. | Comm. Cost | Error Bound | Variance | Know Domain? |
|---|---|---|---|---|---|
| Direct Perturbation | BRR [43,63] |  |  |  | Y |
| Direct Perturbation | GRR [64] (or DE/k-RR) |  |  |  | Y |
| Unary Encoding | SUE [35] |  |  |  | Y |
| Unary Encoding | OUE [35] |  |  |  | Y |
| Hash Encoding | RAPPOR [27] |  |  |  | Y |
| Hash Encoding | O-RAPPOR [64] |  |  |  | N |
| Hash Encoding | O-RR [64] |  |  |  | N |
| Hash Encoding | BLH [35] |  |  |  | Y |
| Hash Encoding | OLH [35] |  |  |  | Y |
| Transformation | S-Hist [61] |  |  |  | Y |
| Transformation | HRR [31] |  |  |  | Y |
| Subset Selection | -SM [69,70] |  |  |  | Y |

| Task | LDP Algorithm | Comm. Cost | Key Technique | Know Domain? |
|---|---|---|---|---|
| Item distribution estimation | PrivSet [76] |  | Padding-and-sampling; Subset selection | Y |
| Item distribution estimation | LDPart [77] |  | Tree-based (partition tree); Users grouping | Y |
| Frequent items mining | TreeHist [80] |  | Tree-based (binary prefix tree) | Y |
| Frequent items mining | LDPMiner [30] |  | Padding-and-sampling; Wiser budget allocation | Y |
| Frequent items mining | PEM [75] |  | Tree-based (binary prefix tree); Users grouping | Y |
| Frequent items mining | Calibrate [82] |  | Consider prior knowledge | Y |
| Frequent itemset mining | Personalized [83] |  | Personalized privacy regime | Y |
| Frequent itemset mining | SVSM [84] |  | Padding-and-sampling; Privacy amplification | Y |
| New terms discovery | A-RAPPOR [87] |  | Select n-grams; Construct partite graph | N |
| New terms discovery | PrivTrie [88] |  | Tree-based (trie); Adaptive users grouping; Consistency constraints | N |

| LDP Algorithm | Goal | Address Multiple Pairs | Learn Correlations | Composition | Allocation of |
|---|---|---|---|---|---|
| [90] | Mean value of values; frequency of keys | Simple sampling | Mechanism iteration | Sequential | Fixed |
| CondiFre [92] | Mean value of values; frequency of keys; L-way conditional analysis | Simple sampling | Not considered | Sequential | Fixed |
| PCKV-UE/PCKV-GRR [93] | Mean value of values; frequency of keys | Padding-and-sampling | Correlated perturbation | Tighter bound | Optimal |

| LDP Algorithm | Key Technique | Comm. Cost | Variance | Time Complexity | Space Complexity |
|---|---|---|---|---|---|
| RAPPOR [27] | Equal to naïve method when d>2 |  | Var | High | High |
| Fanti et al. [87] | Expectation Maximization (EM) |  | Var |  |  |
| LoPub [72] | Lasso regression; Dimensionality and sparsity reduction |  | Var | Medium | High |
| Cormode et al. [31] | Hadamard Transformation (HT) |  | Var |  |  |
| LoCop [105] | Lasso-based regression; Attribute correlations learning |  | Var | Low | High |
| CALM [74] | Subset selection; Consistency constraints |  | Var |  |  |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, T.; Zhang, X.; Feng, J.; Yang, X. A Comprehensive Survey on Local Differential Privacy toward Data Statistics and Analysis. Sensors 2020, 20, 7030. https://doi.org/10.3390/s20247030
