The Role of Features Types and Personalized Assessment in Detecting Affective State Using Dry Electrode EEG

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.1.1. Subjects

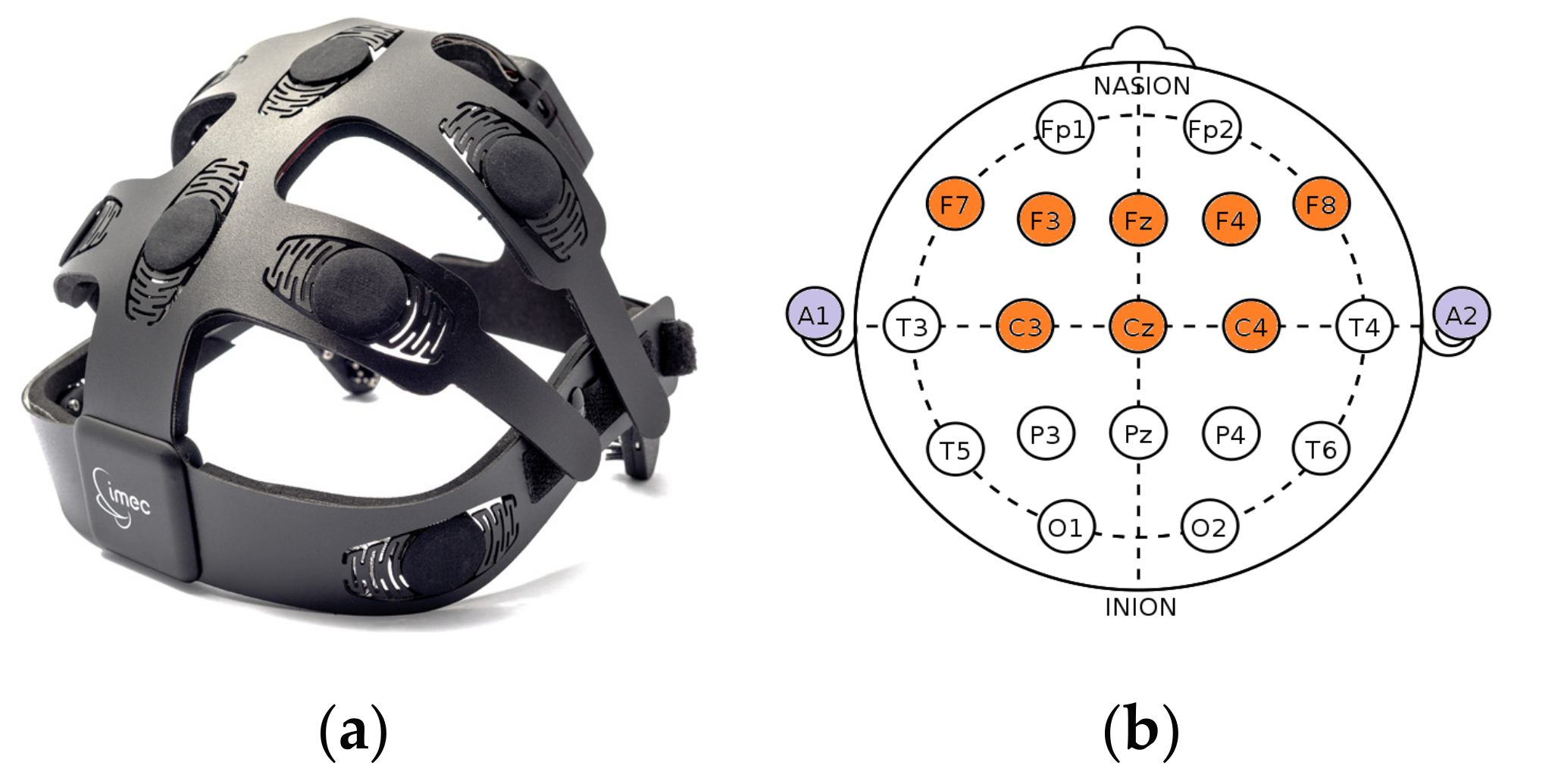

2.1.2. Acquisition Setup

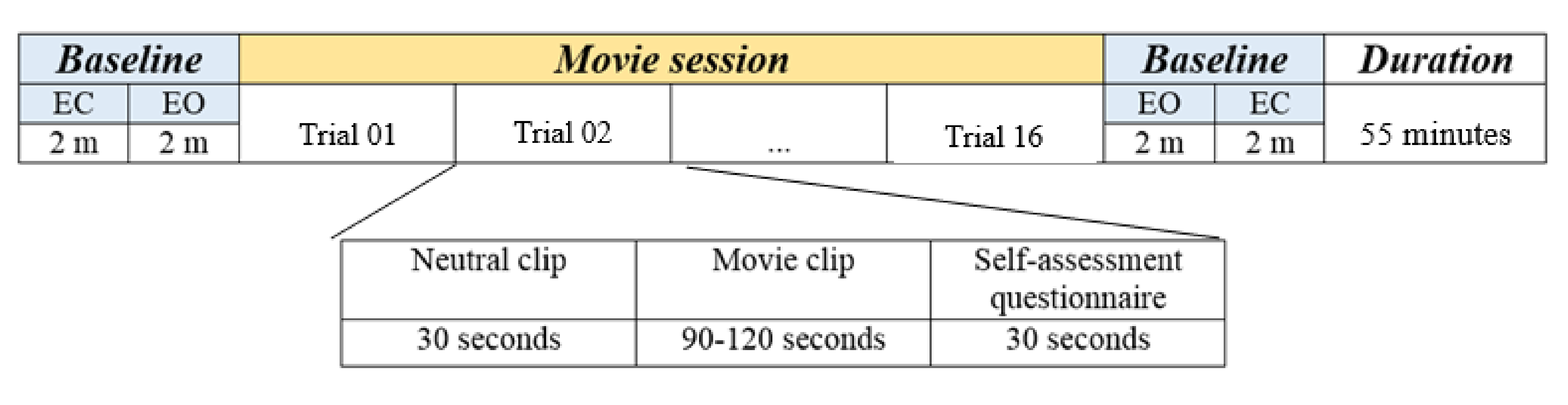

2.1.3. Protocol Description

2.2. Data Analysis

2.2.1. Data Preprocessing

2.2.2. Signal Quality Estimation

2.2.3. Feature Extraction

- Decompose the given signal into intrinsic mode functions (IMFs) using multivariate empirical mode decomposition (MEMD);

- Compute entropy for each IMF.
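The two steps listed above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code. The MEMD step is not reproduced, so the IMFs of one channel are assumed to be available already as a NumPy array of shape (n_imfs, n_samples). Sample entropy is used as the example entropy measure, with the commonly used settings m = 2 and r = 0.2 × standard deviation; these settings are assumptions, not values reported for this study. The function names are hypothetical.

```python
import numpy as np


def sample_entropy(x: np.ndarray, m: int = 2, r_factor: float = 0.2) -> float:
    """Sample entropy SampEn(m, r) of a 1-D signal, with tolerance r = r_factor * std(x)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    r = r_factor * np.std(x)

    def count_matching_pairs(length: int) -> int:
        # Build the same n - m templates for both lengths m and m + 1,
        # as in the usual SampEn definition.
        templates = np.array([x[i:i + length] for i in range(n - m)])
        count = 0
        for i in range(len(templates) - 1):
            # Chebyshev distance to every later template; self-matches are excluded.
            dist = np.max(np.abs(templates[i + 1:] - templates[i]), axis=1)
            count += int(np.count_nonzero(dist <= r))
        return count

    b = count_matching_pairs(m)      # template pairs that match over m points
    a = count_matching_pairs(m + 1)  # pairs that still match over m + 1 points
    if a == 0 or b == 0:
        return float("inf")  # SampEn is undefined when no matches are found
    return float(-np.log(a / b))


def imf_entropies(imfs: np.ndarray, m: int = 2, r_factor: float = 0.2) -> np.ndarray:
    """Step 2: one entropy value per IMF; `imfs` has shape (n_imfs, n_samples)."""
    return np.array([sample_entropy(imf, m, r_factor) for imf in imfs])


if __name__ == "__main__":
    # Placeholder "IMFs" for illustration only: a 10 Hz oscillation and white noise.
    # In the procedure described above, these rows would come from MEMD (step 1).
    fs = 250.0
    t = np.arange(1000) / fs
    rng = np.random.default_rng(0)
    imfs = np.vstack([np.sin(2 * np.pi * 10 * t), rng.standard_normal(t.size)])
    print(imf_entropies(imfs))  # the regular oscillation gets the lower entropy
```

Sample entropy is only one option; other measures, such as the Rényi entropy that also appears among the feature names in Appendix B, could be substituted inside `imf_entropies` without changing the loop over IMFs.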

2.2.4. Performance Evaluation

2.2.5. Subjective Valence and Arousal Scores

3. Results

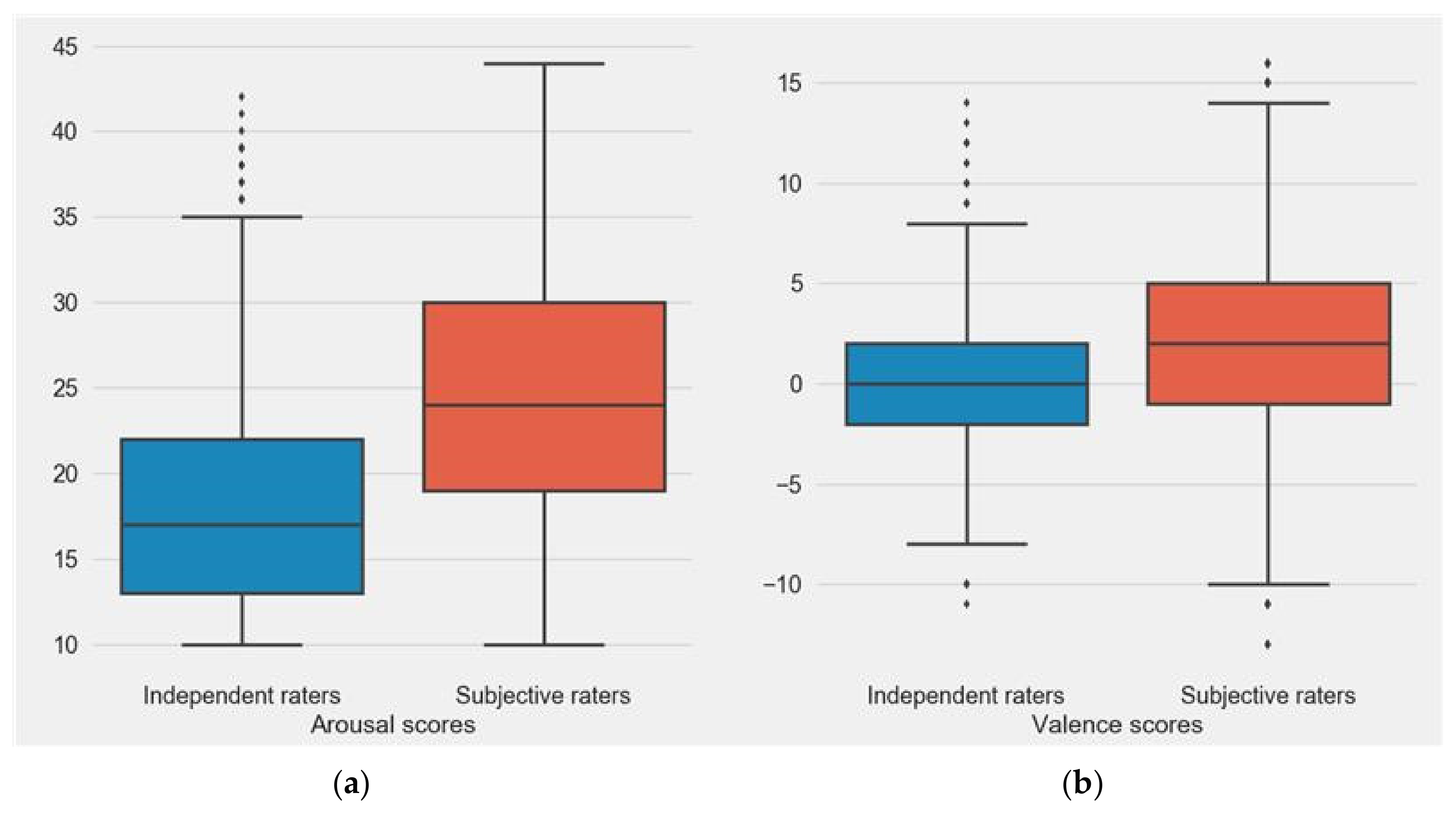

3.1. Comparison between Subjects’ and Independent Rater’s Valence and Arousal Scores

3.2. Signal Quality Estimation

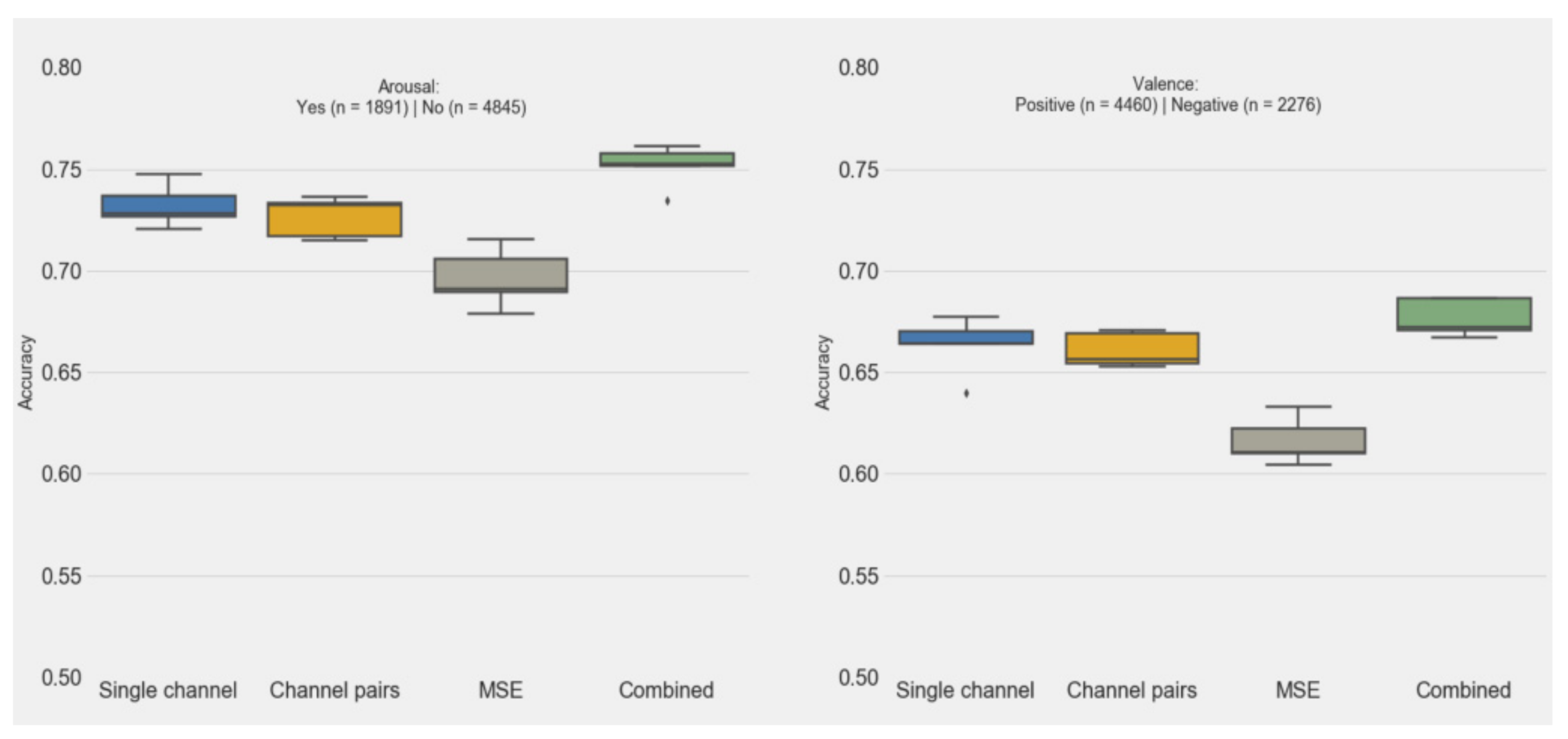

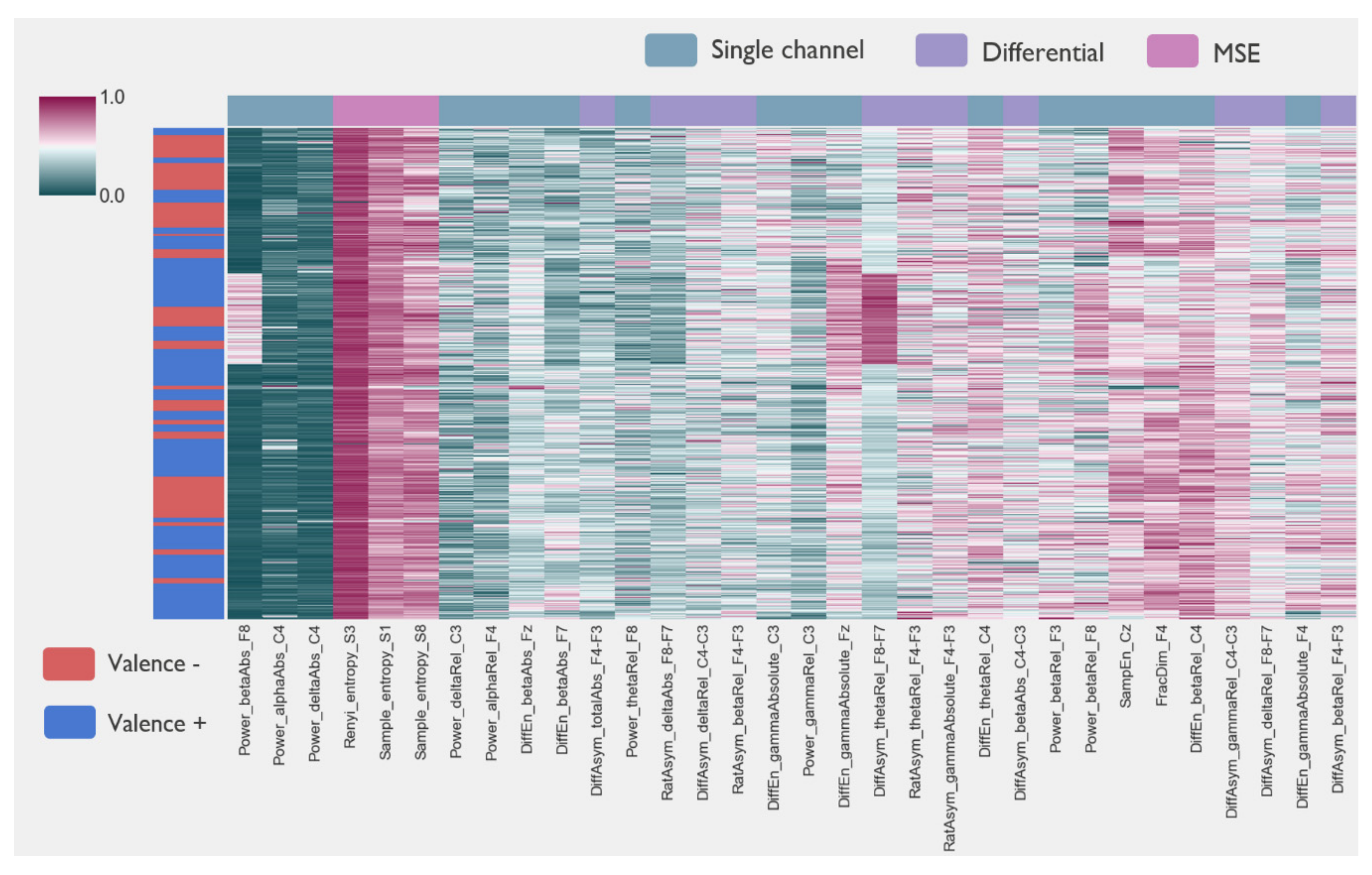

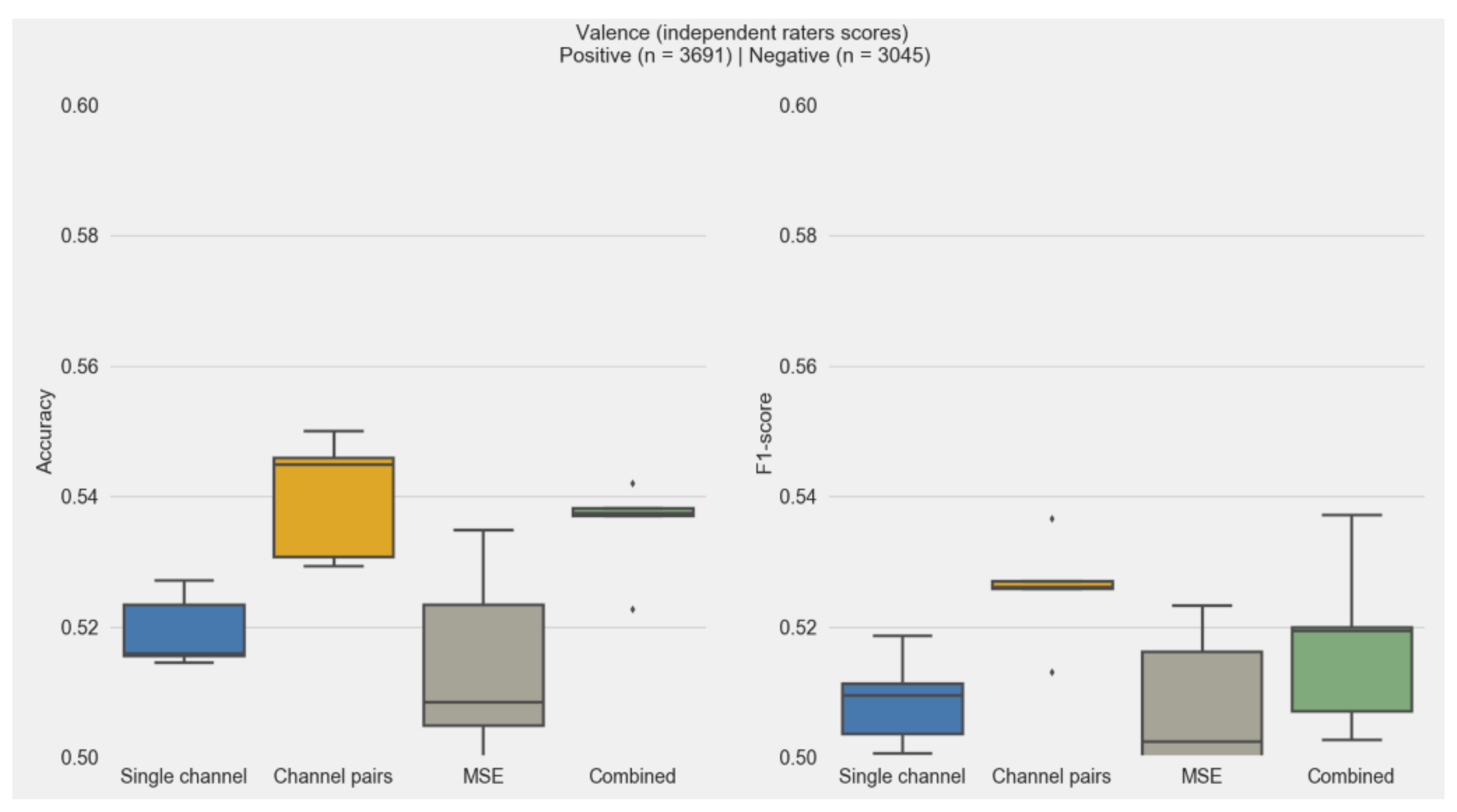

3.3. Features and Classification Performance

3.4. Subjective and Independent Rater Scores

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Movie Clips

| Scene | Start Time | Stop Time | Description of the Start of the Scene |
|---|---|---|---|
| 1 | 00:09:05 | 00:10:09 | Jim (Cillian Murphy) walks through the deserted streets of London with a plastic bag in his hand |
| 2 | 00:13:39 | 00:15:15 | Starts with a close-up of Jim’s face in the church |
| 3 | 00:42:33 | 00:44:55 | The taxi starts ascending the trash dump in the tunnel |
| 4 | 00:49:04 | 00:50:02 | Inside the grocery store at the deserted gas station |
| 5 | 01:08:29 | 01:09:24 | Major Henry West (Christopher Eccleston) and Jim are walking in a corridor |
| 6 | 01:34:58 | 01:35:46 | Hannah (Megan Burns), in a red dress, is sitting frightened in a room |
| 7 | 01:36:09 | 01:37:58 | A fight between the black soldier and a zombie |
| 8 | 01:39:14 | 01:39:48 | Jim opens the taxi’s door facing Major Henry West |

| Scene | Start Time | Stop Time | Description of the Start of the Scene |
|---|---|---|---|
| 1 | 00:07:09 | 00:07:50 | General Bizimungu (Fana Mokoena) and Colonel Oliver (Nick Nolte) are talking in the hotel’s garden (while tourists are in the pool in the background) |
| 2 | 00:09:37 | 00:10:58 | In the house of Paul (Don Cheadle), his son runs into the living room, frightened |
| 3 | 00:23:04 | 00:24:21 | Discussion between Paul and the Rwandan officer, who asks for Paul’s ID |
| 4 | 00:51:04 | 00:54:33 | French soldiers are checking tourists’ passports |
| 5 | 01:07:45 | 01:09:16 | The hotel’s van is passing by burning houses at night |
| 6 | 01:11:41 | 01:12:25 | The hotel’s van is on the road at dawn, in the fog |
| 7 | 01:27:03 | 01:28:40 | Rebels are dancing around the road waiting for the UN trucks |
| 8 | 01:28:15 | 01:29:39 | Rebels are hitting the refugees in the truck |

| Scene | Start Time | Stop Time | Description of the Start of the Scene |
|---|---|---|---|
| 1 | 00:05:46 | 00:06:46 | Uma Thurman is fighting with Vernita Green (Vivica A. Fox) |
| 2 | 00:58:50 | 01:01:00 | Japanese gang members are sitting around a black table |
| 3 | 01:10:13 | 01:11:40 | Japanese gang members are drinking in a room |
| 4 | 01:15:02 | 01:17:07 | Gogo Yubari (Chiaki Kuriyama) starts fighting with Uma Thurman |
| 5 | 01:18:24 | 01:22:50 | Fight scene between Uma Thurman and Japanese fighters in black suits |
| 6 | 01:24:43 | 01:25:40 | Fight scene between Uma Thurman and the bald Japanese fighter (Kenji Ohba) |
| 7 | 01:28:48 | 01:30:53 | The final fight scene between Uma Thurman and O-Ren Ishii (Lucy Liu) |
| 8 | 01:02:10 | 01:03:35 | Motorbikes are escorting a Mercedes through the streets of Tokyo |

| Scene | Start Time | Stop Time | Description of the Start of the Scene |
|---|---|---|---|
| 1 | 00:12:19 | 00:13:57 | Colin Frissell (Kris Marshall) serves at the party |
| 2 | 00:47:37 | 00:49:09 | Aurelia (Lúcia Moniz) is bringing a cup of coffee to Jamie Bennett (Colin Firth) in the garden |
| 3 | 01:18:28 | 01:20:33 | The jewelry salesman (Rowan Atkinson) is wrapping a necklace |
| 4 | 01:23:45 | 01:27:04 | Colin Frissell arrives in Milwaukee |
| 5 | 01:43:19 | 01:44:57 | The old lady opens the door and is surprised to see the prime minister (Hugh Grant) |
| 6 | 01:51:38 | 01:54:14 | The school’s Christmas concert starts |
| 7 | 01:58:15 | 02:05:00 | Jamie Bennett arrives in Portugal |
| 8 | 00:28:26 | 00:29:52 | Daniel (Liam Neeson) and Sam (Thomas Sangster) are sitting on a bench |

| Scene | Start Time | Stop Time | Description of the Start of the Scene |
|---|---|---|---|
| 1 | 00:05:39 | 00:07:22 | Mr. Bean (Rowan Atkinson) takes a taxi at the train station |
| 2 | 00:10:41 | 00:12:59 | Mr. Bean is being served in a French restaurant |
| 3 | 00:31:52 | 00:33:50 | Mr. Bean is trying to raise money by dancing and imitating a singer’s act |
| 4 | 00:37:33 | 00:39:19 | Mr. Bean rides a bike on a road |
| 5 | 00:40:37 | 00:42:35 | Mr. Bean tries to hitchhike |
| 6 | 00:45:45 | 00:47:15 | Mr. Bean wakes up in the middle of a commercial being filmed |
| 7 | 01:08:04 | 01:09:02 | Mr. Bean, dressed as a woman, tries to get into the theater with a fake ID |
| 8 | 01:11:35 | 01:13:22 | Mr. Bean replaces the movie being projected with his webcam videos |

| Scene | Start Time | Stop Time | Description of the Start of the Scene |
|---|---|---|---|
| 1 | 00:05:32 | 00:08:15 | Schoolgirls are frightened on hearing the ringtone |
| 2 | 00:27:39 | 00:29:38 | Reiko (Nanako Matsushima) watches the video alone in an empty room |
| 3 | 00:59:39 | 01:01:52 | Reiko sees the past in black and white |
| 4 | 01:19:10 | 01:21:48 | Reiko is descending into the well |
| 5 | 01:25:24 | 01:27:54 | Ryuji (Hiroyuki Sanada) is writing at home when he notices that the TV is on and showing the terrifying video |
| 6 | 01:29:56 | 01:31:55 | Reiko sits on the sofa in her house |
| 7 | 01:12:05 | 01:14:48 | Reiko and Ryuji are pushing the well’s lid |
| 8 | 00:48:20 | 01:49:42 | Reiko is sleeping in her father’s house |

| Scene | Start Time | Stop Time | Description of the Start of the Scene |
|---|---|---|---|
| 1 | 00:04:29 | 00:06:08 | Boats approach as the words “6 June 1944” appear on screen |
| 2 | 00:06:09 | 00:08:15 | Landing on Omaha Beach |
| 3 | 00:09:33 | 00:12:56 | Combat scene on the beach |
| 4 | 02:13:38 | 02:14:23 | The sniper prays while on a tower |
| 5 | 00:18:51 | 00:21:38 | The commander looks into a mirror to locate the source of the gunfire during a combat scene |
| 6 | 02:26:05 | 02:27:21 | The combat scene in which Capt. John H. Miller (Tom Hanks) is shot |
| 7 | 00:56:04 | 00:57:25 | While the soldiers are searching for Private Ryan, a wall collapses by accident and they face a group of German soldiers on the other side of the destroyed wall |
| 8 | 01:20:00 | 01:20:45 | A group of soldiers is walking across a green field |

| Scene | Start Time | Stop Time | Description of the Start of the Scene |
|---|---|---|---|
| 1 | 00:00:24 | 00:02:09 | Warsaw in 1939 (black-and-white shots) |
| 2 | 00:21:10 | 00:22:34 | Szpilman (Adrien Brody) is playing in a restaurant |
| 3 | 00:24:28 | 00:25:54 | Szpilman walks in the streets of Warsaw |
| 4 | 00:32:57 | 00:34:11 | A crazy man and children on the street |
| 5 | 01:56:12 | 01:58:13 | Szpilman (with long hair and a beard) tries to open a can |
| 6 | 00:44:34 | 00:47:07 | Jewish families are waiting to be sent to concentration camps |
| 7 | 00:58:01 | 01:59:27 | Szpilman at a construction site |
| 8 | 01:50:38 | 01:51:52 | German soldiers are burning everything with flamethrowers |

Appendix B

| Single Channel Features | Value | Channel Pairs | Value | MSE | Value |
|---|---|---|---|---|---|
| DiffEn_gammaAbs_F8 | 0.1 | DiffAsym_totalAbs_F8-F7 | 0.1 | multi_sample_entropy_S4 | 0.25 |
| DiffEn_totalAbs_F8 | 0.08 | DiffAsym_deltaAbs_C4-C3 | 0.1 | multi_renyi_entropy_S5 | 0.25 |
| Power_gammaRel_F3 | 0.08 | DiffAsym_totalAbs_F4-F3 | 0.1 | multi_renyi_entropy_S0 | 0.25 |
| Power_alphaRel_C4 | 0.06 | DiffAsym_betaRel_C4-C3 | 0.1 | multi_sample_entropy_S0 | 0.25 |
| DiffEn_thetaRel_F7 | 0.06 | RatAsym_gammaRel_C4-C3 | 0.1 | | |
| Power_thetaRel_C4 | 0.06 | RatAsym_betaRel_F8-F7 | 0.1 | | |
| DiffEn_betaAbs_F3 | 0.04 | DiffAsym_thetaAbs_F4-F3 | 0.08 | | |
| DiffEn_alphaRel_C4 | 0.04 | DiffAsym_betaAbs_F8-F7 | 0.08 | | |
| DiffEn_totalAbs_F7 | 0.04 | DiffAsym_betaAbs_F4-F3 | 0.08 | | |
| Power_betaAbs_F3 | 0.02 | DiffAsym_deltaRel_F8-F7 | 0.06 | | |
| Power_totalAbs_F4 | 0.02 | DiffAsym_deltaRel_F4-F3 | 0.06 | | |
| Power_betaAbs_F7 | 0.02 | RatAsym_gammaRel_F4-F3 | 0.02 | | |
| DiffEn_deltaAbs_C4 | 0.02 | DiffAsym_betaAbs_C4-C3 | 0.02 | | |
| Power_deltaRel_C4 | 0.02 | | | | |
| Power_gammaAbs_F3 | 0.02 | | | | |
| DiffEn_thetaRel_F3 | 0.02 | | | | |

| Single Channel Features | Value | Channel Pairs | Value | MSE | Value |
|---|---|---|---|---|---|
| DiffEn_totalAbs_F7 | 0.1 | DiffAsym_thetaAbs_F4-F3 | 0.1 | multi_sample_entropy_S4 | 0.25 |
| DiffEn_totalAbs_F8 | 0.1 | DiffAsym_deltaAbs_C4-C3 | 0.1 | multi_renyi_entropy_S5 | 0.25 |
| DiffEn_gammaAbs_F8 | 0.1 | DiffAsym_totalAbs_F4-F3 | 0.1 | multi_renyi_entropy_S0 | 0.25 |
| DiffEn_thetaAbs_F4 | 0.1 | DiffAsym_betaRel_C4-C3 | 0.1 | multi_sample_entropy_S0 | 0.25 |
| Power_gammaRel_F3 | 0.1 | DiffAsym_betaAbs_F4-F3 | 0.1 | | |
| Power_alphaRel_C4 | 0.1 | DiffAsym_totalAbs_F8-F7 | 0.1 | | |
| DiffEn_alphaRel_C4 | 0.1 | DiffAsym_betaAbs_F8-F7 | 0.08 | | |
| DiffEn_deltaAbs_C4 | 0.08 | RatAsym_gammaRel_F4-F3 | 0.08 | | |
| Power_alphaRel_Fz | 0.06 | RatAsym_betaRel_F8-F7 | 0.08 | | |
| DiffEn_deltaAbs_F4 | 0.06 | DiffAsym_betaAbs_C4-C3 | 0.06 | | |
| DiffEn_alphaRel_F7 | 0.04 | DiffAsym_deltaRel_F8-F7 | 0.04 | | |
| Power_thetaRel_C4 | 0.02 | RatAsym_gammaRel_C4-C3 | 0.04 | | |
| DiffEn_thetaRel_C3 | 0.02 | DiffAsym_deltaRel_F4-F3 | 0.02 | | |
| Power_deltaRel_C4 | 0.02 | | | | |
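The feature names in Appendix B appear to combine a feature type (e.g. DiffEn, Power, DiffAsym, RatAsym), a frequency band with an absolute or relative scaling, and a single channel or a channel pair. As a hedged illustration only, not the authors' implementation, the sketch below computes three of these feature types under stated assumptions. The band-limited signal is obtained with an idealized FFT mask, a stand-in for whatever filter was actually used. Differential entropy (DiffEn) uses the closed form for a Gaussian signal, 0.5 ln(2πeσ²). Following a convention common in EEG emotion-recognition work, differential asymmetry (DiffAsym) is taken as the difference, and rational asymmetry (RatAsym) as the ratio, of the DiffEn values of the two channels in the order they are named (e.g. F8 then F7). The absolute/relative scaling is not modeled, and all function names are hypothetical.

```python
import numpy as np


def bandpass_fft(x: np.ndarray, fs: float, lo: float, hi: float) -> np.ndarray:
    """Idealized band-pass: zero all FFT bins outside [lo, hi] Hz (assumed filter, not the paper's)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spectrum[(freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spectrum, n=x.size)


def differential_entropy(x: np.ndarray) -> float:
    """Differential entropy of a signal assumed Gaussian: 0.5 * ln(2 * pi * e * variance)."""
    return float(0.5 * np.log(2.0 * np.pi * np.e * np.var(x)))


def asymmetry_features(first: np.ndarray, second: np.ndarray, fs: float,
                       band: tuple[float, float]) -> dict[str, float]:
    """Hypothetical DiffAsym/RatAsym for a channel pair named 'first-second' (e.g. F8-F7)."""
    lo, hi = band
    de_first = differential_entropy(bandpass_fft(first, fs, lo, hi))
    de_second = differential_entropy(bandpass_fft(second, fs, lo, hi))
    return {
        "DiffAsym": de_first - de_second,  # difference of DiffEn values
        # Ratio of DiffEn values; unstable when de_second is close to zero.
        "RatAsym": de_first / de_second,
    }


if __name__ == "__main__":
    # Synthetic stand-ins for two channels; F8 is exactly twice F7 here.
    fs = 250.0
    t = np.arange(1000) / fs
    rng = np.random.default_rng(1)
    f7 = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
    f8 = 2.0 * f7
    # DiffAsym is ln(2) (about 0.693) because F8 has four times the variance of F7.
    print(asymmetry_features(f8, f7, fs, (8.0, 13.0)))
```

Because DiffEn is a logarithm of variance, a difference of DiffEn values corresponds to a log ratio of band variances, whereas the DiffEn ratio has no such simple reading and can change sign; this is one reason the two asymmetry variants may behave differently as features.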



© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pradhapan, P.; Velazquez, E.R.; Witteveen, J.A.; Tonoyan, Y.; Mihajlović, V. The Role of Features Types and Personalized Assessment in Detecting Affective State Using Dry Electrode EEG. Sensors 2020, 20, 6810. https://doi.org/10.3390/s20236810
