Estimation of Peanut Leaf Area Index from Unmanned Aerial Vehicle Multispectral Images

Abstract

1. Introduction

2. Material and Methods

2.1. Test Design

2.2. Data Acquisition

2.2.1. Multispectral Data Acquisition and Processing

2.2.2. Collection and Processing of the Leaf Area Index

2.3. Selection of the Vegetation Index

2.4. Prediction Model Construction and Verification Accuracy

3. Results

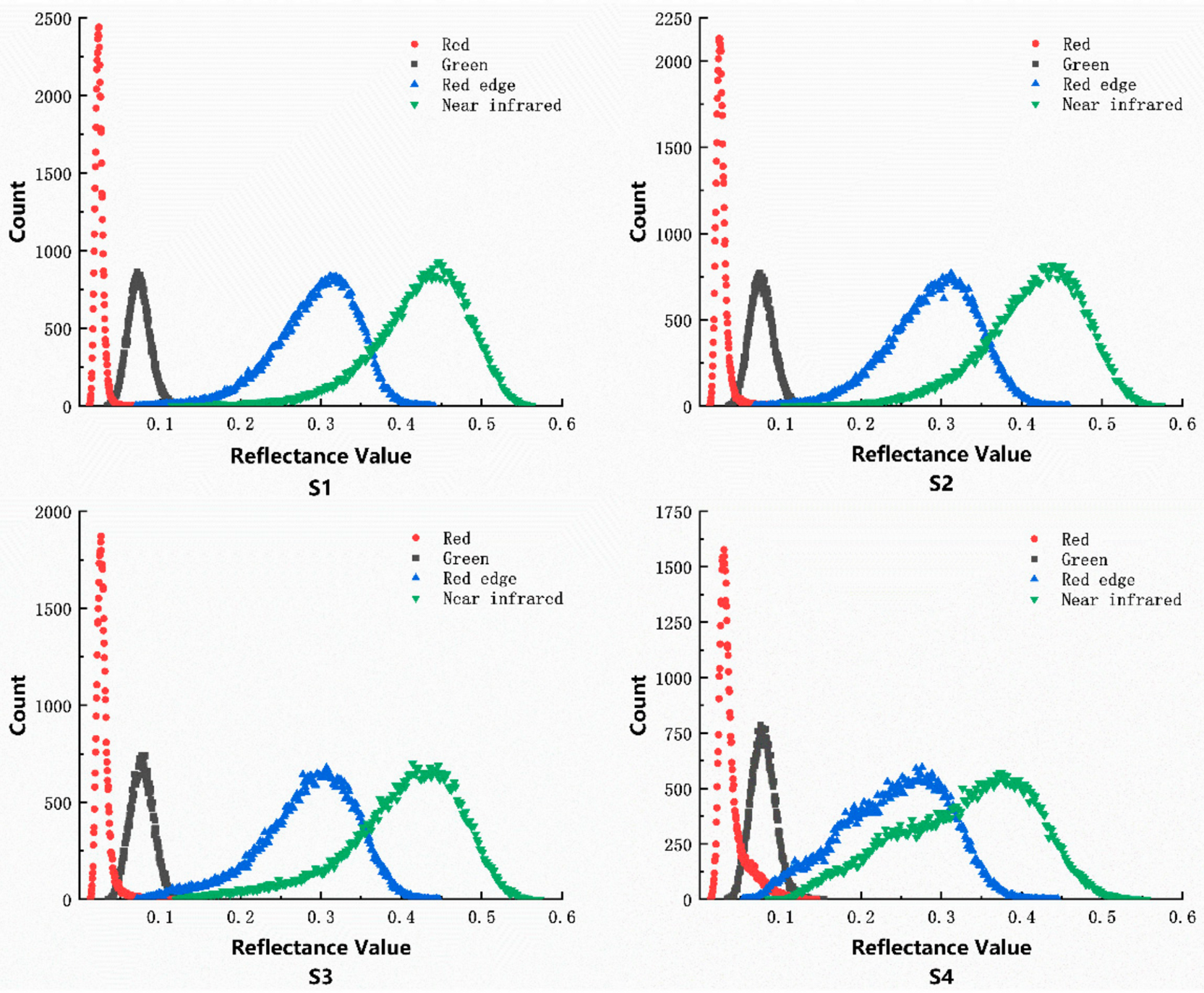

3.1. Spectral Data Processing

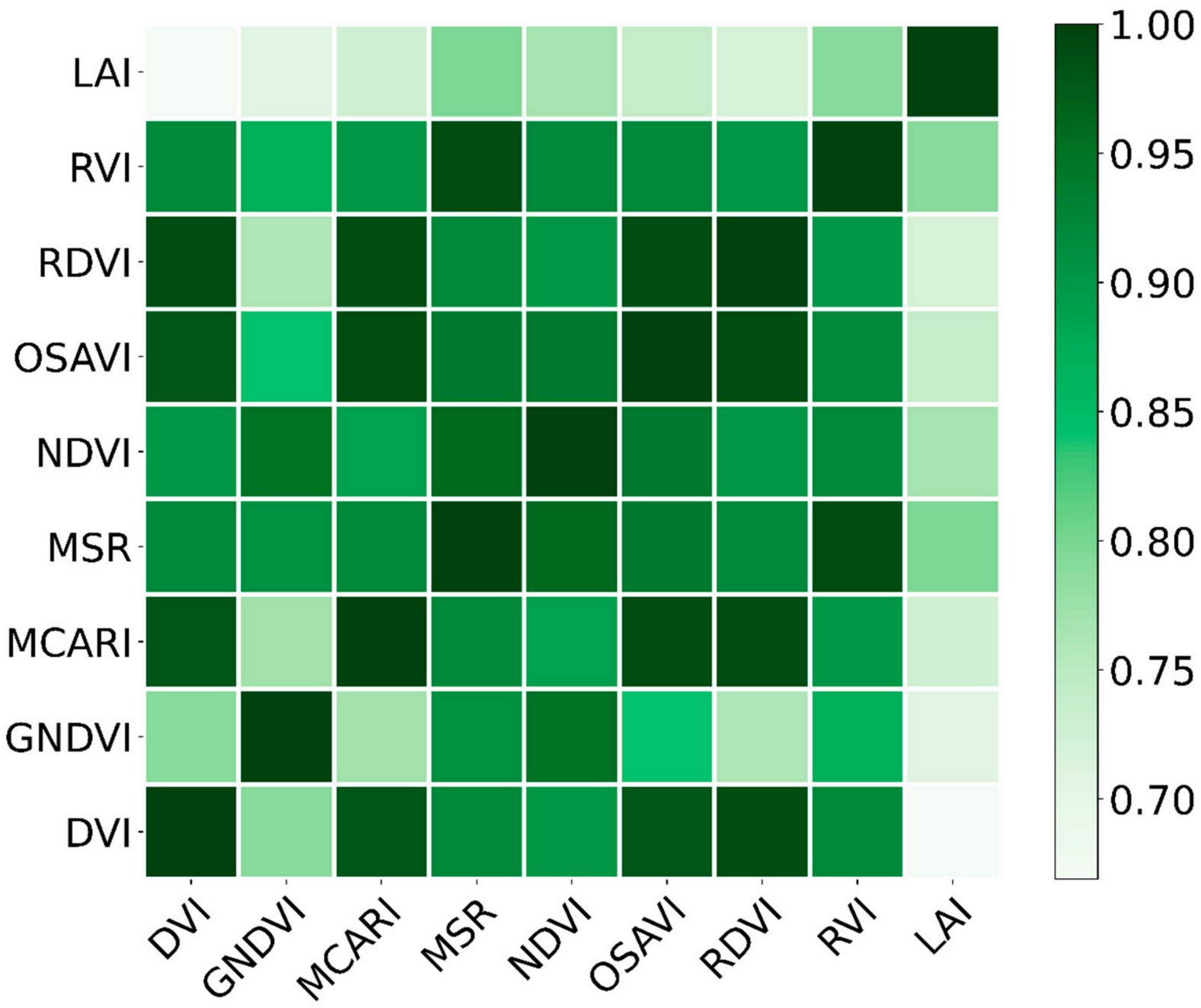

3.2. Correlation Analysis between the Vegetation Indices and Measured LAIs

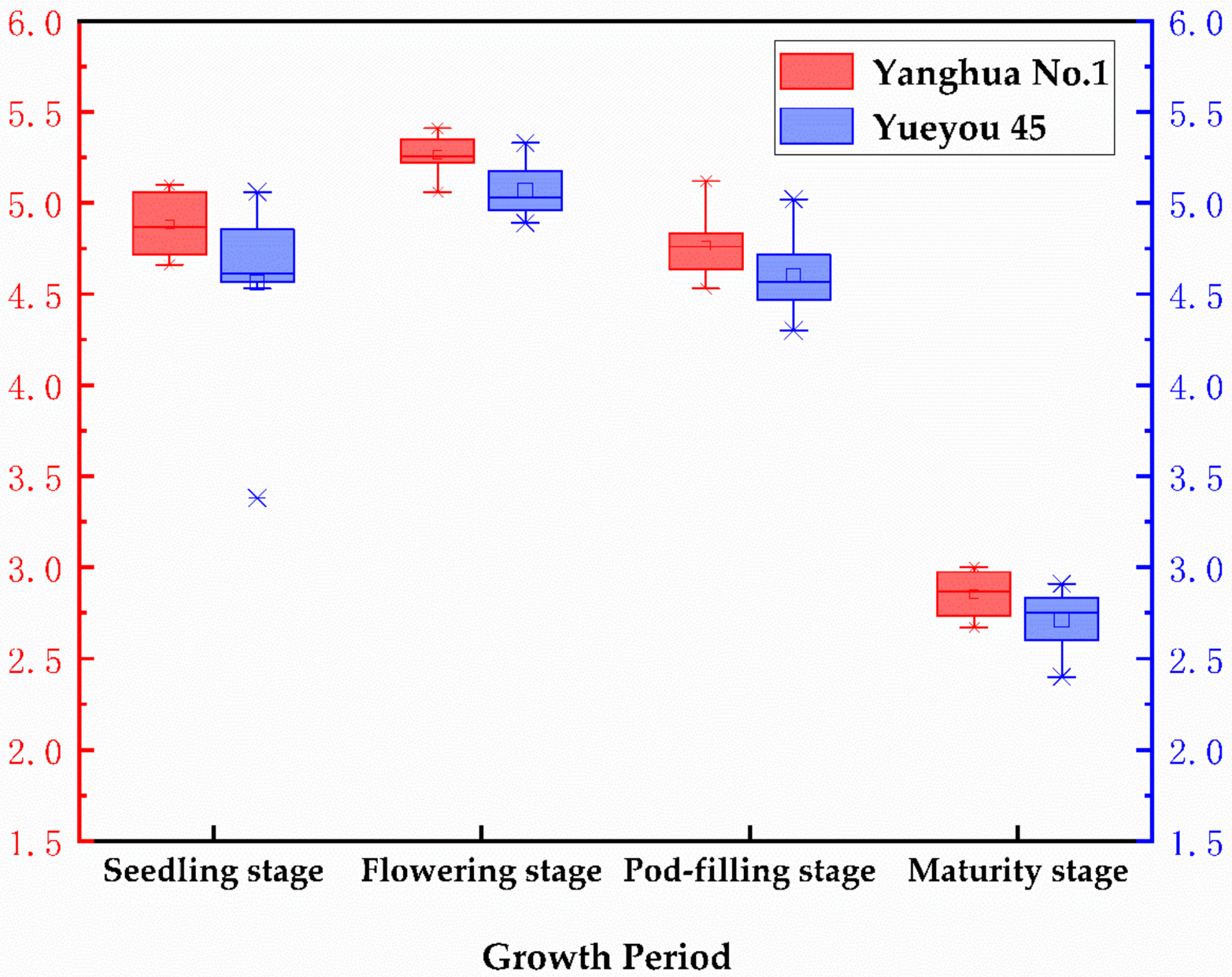

3.3. Peanut Leaf Area Index Analysis

3.4. Simple Regression Model

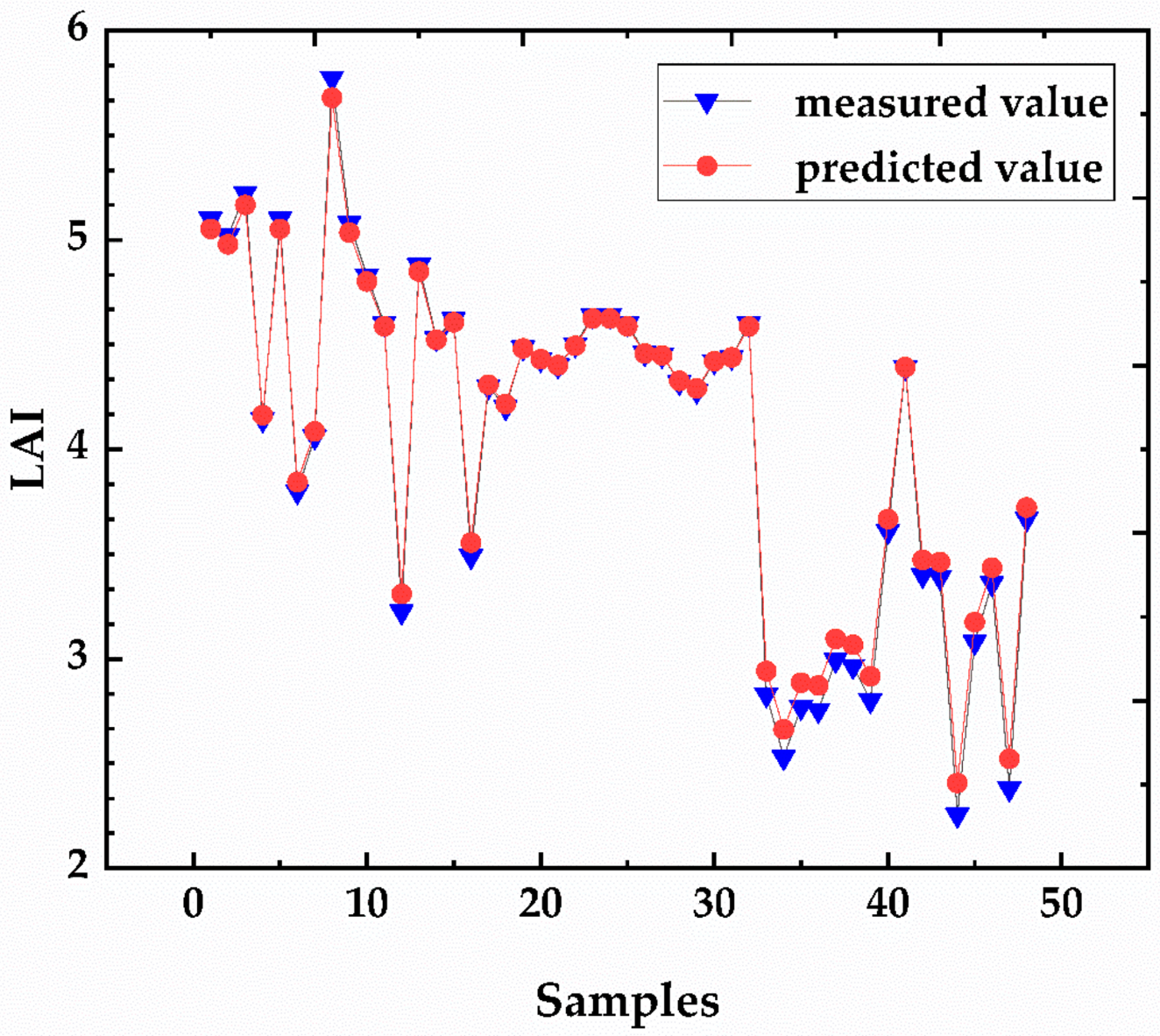

3.5. BP Neural Network Model

3.6. Mapping the LAI Prediction

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Chen, J.M.; Black, T.A. Defining leaf area index for non-flat leaves. Agric. For. Meteorol. 1992, 15, 421–429.

- Yao, X.; Wang, N.; Liu, Y.; Cheng, T.; Tian, Y.; Chen, Q.; Zhu, Y. Estimation of Wheat LAI at Middle to High Levels Using Unmanned Aerial Vehicle Narrowband Multispectral Imagery. Remote Sens. 2017, 9, 1304.

- Casa, R.; Varella, H.; Buis, S.; Guérif, M.; De Solan, B.; Baret, F. Forcing a wheat crop model with LAI data to access agronomic variables: Evaluation of the impact of model and LAI uncertainties and comparison with an empirical approach. Eur. J. Agron. 2012, 37, 1–10.

- Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral Remote Sensing from Unmanned Aircraft: Image Processing Workflows and Applications for Rangeland Environments. Remote Sens. 2011, 3, 2529–2551.

- Dente, L.; Satalino, G.; Mattia, F.; Rinaldi, M. Assimilation of leaf area index derived from ASAR and MERIS data into CERES-Wheat model to map wheat yield. Remote Sens. Environ. 2008, 112, 1395–1407.

- Wang, Y.; Zhang, K.; Tang, C.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Estimation of Rice Growth Parameters Based on Linear Mixed-Effect Model Using Multispectral Images from Fixed-Wing Unmanned Aerial Vehicles. Remote Sens. 2019, 11, 1371.

- Guindin-Garcia, N.; Gitelson, A.A.; Arkebauer, T.J.; Shanahan, J.; Weiss, A. An evaluation of MODIS 8- and 16-day composite products for monitoring maize green leaf area index. Agric. For. Meteorol. 2012, 161, 15–25.

- Jonckheere, I.; Fleck, S.; Nackaerts, K.; Muys, B.; Coppin, P.; Weiss, M.; Baret, F. Review of methods for in situ leaf area index determination. Agric. For. Meteorol. 2004, 121, 19–35.

- Huang, J.; Sedano, F.; Huang, Y.; Ma, H.; Li, X.; Liang, S.; Tian, L.; Zhang, X.; Fan, J.; Wu, W. Assimilating a synthetic Kalman filter leaf area index series into the WOFOST model to improve regional winter wheat yield estimation. Agric. For. Meteorol. 2016, 216, 188–202.

- Lu, D.; Chen, Q.; Wang, G.; Moran, E.; Batistella, M.; Zhang, M.; Vaglio Laurin, G.; Saah, D. Aboveground Forest Biomass Estimation with Landsat and LiDAR Data and Uncertainty Analysis of the Estimates. Int. J. For. Res. 2012, 2012, 1–16.

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Nebiker, S.; Lack, N.; Abächerli, M.; Läderach, S. Light-weight multispectral UAV sensors and their capabilities for predicting grain yield and detecting plant diseases. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 963–970.

- Apolo-Apolo, O.E.; Martínez-Guanter, J.; Egea, G.; Raja, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

- Dalla Corte, A.P.; Rex, F.E.; Almeida, D.R.A.D.; Sanquetta, C.R.; Silva, C.A.; Moura, M.M.; Wilkinson, B.; Zambrano, A.M.A.; Cunha Neto, E.M.D.; Veras, H.F.P.; et al. Measuring Individual Tree Diameter and Height Using GatorEye High-Density UAV-Lidar in an Integrated Crop-Livestock-Forest System. Remote Sens. 2020, 12, 863.

- Guo, T.; Kujirai, T.; Watanabe, T. Mapping Crop Status from an Unmanned Aerial Vehicle for Precision Agriculture Applications. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B1, 485–490.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

- Xie, Y.; Wang, P.; Sun, H.; Zhang, S.; Li, L. Assimilation of Leaf Area Index and Surface Soil Moisture With the CERES-Wheat Model for Winter Wheat Yield Estimation Using a Particle Filter Algorithm. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1303–1316.

- Xiao, Z.; Liang, S.; Wang, J.; Jiang, B.; Li, X. Real-time retrieval of Leaf Area Index from MODIS time series data. Remote Sens. Environ. 2011, 115, 97–106.

- Atzberger, C.; Darvishzadeh, R.; Immitzer, M.; Schlerf, M.; Skidmore, A.; le Maire, G. Comparative analysis of different retrieval methods for mapping grassland leaf area index using airborne imaging spectroscopy. Int. J. Appl. Earth Obs. 2015, 43, 19–31.

- Ganguly, S.; Nemani, R.R.; Zhang, G.; Hashimoto, H.; Milesi, C.; Michaelis, A.; Wang, W.; Votava, P.; Samanta, A.; Melton, F.; et al. Generating global Leaf Area Index from Landsat: Algorithm formulation and demonstration. Remote Sens. Environ. 2012, 122, 185–202.

- Le Maire, G.; Marsden, C.; Nouvellon, Y.; Stape, J.; Ponzoni, F. Calibration of a Species-Specific Spectral Vegetation Index for Leaf Area Index (LAI) Monitoring: Example with MODIS Reflectance Time-Series on Eucalyptus Plantations. Remote Sens. 2012, 4, 3766–3780.

- Baghzouz, M.; Devitt, D.A.; Fenstermaker, L.F.; Young, M.H. Monitoring Vegetation Phenological Cycles in Two Different Semi-Arid Environmental Settings Using a Ground-Based NDVI System: A Potential Approach to Improve Satellite Data Interpretation. Remote Sens. 2010, 2, 990–1013.

- Su, J.; Liu, C.; Coombes, M.; Hu, X.; Wang, C.; Xu, X.; Li, Q.; Guo, L.; Chen, W. Wheat yellow rust monitoring by learning from multispectral UAV aerial imagery. Comput. Electron. Agric. 2018, 155, 157–166.

- Devia, C.A.; Rojas, J.P.; Petro, E.; Martinez, C.; Mondragon, I.F.; Patino, D.; Rebolledo, M.C.; Colorado, J. High-Throughput Biomass Estimation in Rice Crops Using UAV Multispectral Imagery. J. Intell. Robot. Syst. 2019, 96, 573–589.

- Wang, Y.; Chang, K.; Chen, R.; Lo, J.; Shen, Y. Large-area rice yield forecasting using satellite imageries. Int. J. Appl. Earth Obs. 2010, 12, 27–35.

- Zarco-Tejada, P.J.; Guillén-Climent, M.L.; Hernández-Clemente, R.; Catalina, A.; González, M.R.; Martín, P. Estimating leaf carotenoid content in vineyards using high resolution hyperspectral imagery acquired from an unmanned aerial vehicle (UAV). Agric. For. Meteorol. 2013, 171–172, 281–294.

- Muhammad, H.; Mengjiao, Y.; Awais, R.; Xiuliang, J.; Xianchun, X.; Yonggui, X. Time-series multispectral indices from unmanned aerial vehicle imagery reveal senescence rate in bread wheat. Remote Sens. 2018, 10, 809.

- Glenn, E.P.; Huete, A.R.; Nagler, P.L.; Nelson, S.G. Relationship Between Remotely-sensed Vegetation Indices, Canopy Attributes and Plant Physiological Processes: What Vegetation Indices Can and Cannot Tell Us About the Landscape. Sensors 2008, 8, 2136–2160.

- Haboudane, D. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Cao, Y.; Li, G.L.; Luo, Y.K.; Pan, Q.; Zhang, S.Y. Monitoring of sugar beet growth indicators using wide-dynamic-range vegetation index (WDRVI) derived from UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105331.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote 1992, 30, 261–270.

- Jiang, Z.; Huete, A.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Gitelson, A.A. Wide Dynamic Range Vegetation Index for Remote Quantification of Biophysical Characteristics of Vegetation. J. Plant Physiol. 2004, 161, 165–173.

- Viña, A.; Gitelson, A.A.; Nguy-Robertson, A.L.; Peng, Y. Comparison of different vegetation indices for the remote assessment of green leaf area index of crops. Remote Sens. Environ. 2011, 115, 3468–3478.

- Liu, J.; Pattey, E.; Jégo, G. Assessment of vegetation indices for regional crop green LAI estimation from Landsat images over multiple growing seasons. Remote Sens. Environ. 2012, 123, 347–358.

- Qi, H.; Zhu, B.; Kong, L.; Yang, W.; Zou, J.; Lan, Y.; Zhang, L. Hyperspectral Inversion Model of Chlorophyll Content in Peanut Leaves. Appl. Sci. 2020, 10, 2259.

- Delegido, J.; Verrelst, J.; Rivera, J.P.; Ruiz-Verdú, A.; Moreno, J. Brown and green LAI mapping through spectral indices. Int. J. Appl. Earth Obs. 2015, 35, 350–358.

- Chen, L.; Huang, J.F.; Wang, F.M.; Tang, Y.L. Comparison between back propagation neural network and regression models for the estimation of pigment content in rice leaves and panicles using hyperspectral data. Int. J. Remote Sens. 2007, 28, 3457–3478.

- Fortin, J.G.; Anctil, F.; Parent, L.E. Comparison of physically based and empirical models to estimate corn (Zea mays L) LAI from multispectral data in eastern Canada. Can. J. Remote Sens. 2013, 39, 89–99.

- Peng, Y.; Lu, R. Modeling multispectral scattering profiles for prediction of apple fruit firmness. Trans. ASABE 2005, 48, 235–242.

- Zhu, Z.; Bi, J.; Pan, Y.; Ganguly, S.; Anav, A.; Xu, L.; Samanta, A.; Piao, S.; Nemani, R.; Myneni, R. Global Data Sets of Vegetation Leaf Area Index (LAI)3g and Fraction of Photosynthetically Active Radiation (FPAR)3g Derived from Global Inventory Modeling and Mapping Studies (GIMMS) Normalized Difference Vegetation Index (NDVI3g) for the Period 1981 to 2011. Remote Sens. 2013, 5, 927–948.

- Gómez-Candón, D.; De Castro, A.I.; López-Granados, F. Assessing the accuracy of mosaics from unmanned aerial vehicle (UAV) imagery for precision agriculture purposes in wheat. Precis. Agric. 2014, 15, 44–56.

- Kang, Y.; Özdoğan, M.; Zipper, S.; Román, M.; Walker, J.; Hong, S.; Marshall, M.; Magliulo, V.; Moreno, J.; Alonso, L.; et al. How Universal Is the Relationship between Remotely Sensed Vegetation Indices and Crop Leaf Area Index? A Global Assessment. Remote Sens. 2016, 8, 597.

- Pinty, B.; Lavergne, T.; Widlowski, J.L.; Gobron, N.; Verstraete, M.M. On the need to observe vegetation canopies in the near-infrared to estimate visible light absorption. Remote Sens. Environ. 2009, 113, 10–23.

| Band Number | Band Name | Center Wavelength (nm) | Bandwidth FWHM (nm) | Resolution |
|---|---|---|---|---|
| 1 | Green | 550 | 40 | 1.4 MP |
| 2 | Red | 660 | 40 | 1.4 MP |
| 3 | Red-Edge | 735 | 10 | 1.4 MP |
| 4 | Near-Infrared | 790 | 40 | 1.4 MP |

| Vegetation Index Equation | References |
|---|---|
| | Jordan et al., 1969 |
| | Peñuelas et al., 1997 |
| | Haboudane et al., 2004 |
| | Roujean et al., 2005 |
| | Gitelson et al., 1996 |
| - | Chen et al., 1996 |
| | Becker et al., 1988 |
| - | Gitelson et al., 1994 |
| - | Gitelson et al., 2005 |
| | Rondeaux et al., 1996 |
| | Daughtry et al., 2000 |
| | Haboudane et al., 2004 |
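
The table above lists the sources of the vegetation indices used in the study. As a rough illustration, the sketch below computes a handful of the indices named in this article (NDVI, RVI, DVI, GNDVI, NDRE, RDVI) from the four camera bands, using their commonly published definitions. The function name `vegetation_indices` and the sample reflectance values are hypothetical, and the exact formulations used by the authors may differ.

```python
import numpy as np

def vegetation_indices(green, red, red_edge, nir):
    """Compute a few common vegetation indices from band reflectances.

    Inputs are reflectance arrays (or scalars) for the green, red,
    red-edge, and near-infrared bands. The formulas are the commonly
    published definitions, assumed here rather than taken from the article.
    """
    green, red, red_edge, nir = (
        np.asarray(band, dtype=float) for band in (green, red, red_edge, nir)
    )
    return {
        "NDVI": (nir - red) / (nir + red),            # normalized difference
        "RVI": nir / red,                             # simple ratio
        "DVI": nir - red,                             # simple difference
        "GNDVI": (nir - green) / (nir + green),       # green NDVI
        "NDRE": (nir - red_edge) / (nir + red_edge),  # red-edge NDVI
        "RDVI": (nir - red) / np.sqrt(nir + red),     # renormalized difference
    }

# Hypothetical reflectances for a single vegetated pixel (not data from the article).
sample = vegetation_indices(green=0.08, red=0.05, red_edge=0.30, nir=0.45)
for name, value in sample.items():
    print(f"{name}: {float(value):.3f}")
```

Because the function accepts arrays, the same call works on whole reflectance rasters, provided the four bands are co-registered and have the same shape.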

| Growth Stage | Vegetation Index | |||||||||||
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GNDVI | MCARI | MSR | MTVI1 | NDRE | NDVI | OSAVI | RDVI | SAVI | RVI | |||
| Seedling stage | 0.124 | 0.467 | 0.383 | 0.422 | 0.481 | 0.422 | 0.232 | 0.506 | 0.529 | 0.515 | 0.513 | 0.426 |
| Flowering stage | 0.28 | 0.643 | 0.545 | 0.627 | 0.554 | 0.35 | 0.326 | 0.525 | 0.631 | 0.625 | 0.61 | 0.582 |
| Pod-filling stage | 0.548 | 0.389 | 0.524 | 0.377 | 0.644 | 0.377 | 0.506 | 0.918 | 0.481 | 0.443 | 0.421 | 0.641 |
| Maturity stage | 0.062 | 0.178 | 0.231 | 0.182 | 0.319 | 0.156 | 0.064 | 0.281 | 0.2 | 0.203 | 0.188 | 0.341 |
| Whole growth period | 0.394 | 0.669 | 0.709 | 0.725 | 0.796 | 0.659 | 0.438 | 0.767 | 0.739 | 0.72 | 0.714 | 0.789 |
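
Assuming the values in the table above are Pearson correlation coefficients between each vegetation index and the measured LAI (the section heading suggests this, but the table caption is not available), a per-stage coefficient could be computed along the following lines. The helper name `pearson_r` and the sample values are hypothetical.

```python
import numpy as np

def pearson_r(vi_values, lai_values):
    """Pearson correlation coefficient between a vegetation index and measured LAI."""
    vi = np.asarray(vi_values, dtype=float)
    lai = np.asarray(lai_values, dtype=float)
    # np.corrcoef returns the 2x2 correlation matrix; the off-diagonal entry is r.
    return float(np.corrcoef(vi, lai)[0, 1])

# Hypothetical plot-level samples for one growth stage (not data from the article).
ndvi = [0.42, 0.55, 0.61, 0.70, 0.78]
lai = [1.1, 1.6, 2.0, 2.4, 3.1]
print(f"r = {pearson_r(ndvi, lai):.3f}")
```

Repeating the call for each index and each growth stage would fill a table of the same layout as the one above.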

| Model | Equation | R² | RMSE |
|---|---|---|---|
| NDVI-LAI | | 0.773 | 0.407 |
| MSR-LAI | | 0.792 | 0.389 |
| RVI-LAI | | 0.790 | 0.390 |
| 8 VIs | LAI = 52.95DVI − 2.12GNDVI + 0.3MCARI + 7.31MSR + 11.12NDVI − 66.48RDVI − 0.77RVI | 0.830 | 0.376 |
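
As a minimal sketch of how the multivariate equation in the table above can be applied and scored, the code below evaluates the linear model for one set of index values and defines R² and RMSE helpers. The coefficients are copied from the "8 VIs" row, which shows no intercept. The function names, the choice of R² as one minus the ratio of residual to total sum of squares, and all sample values are assumptions for illustration.

```python
import math

# Coefficients copied from the "8 VIs" row of the table above (no intercept shown there).
COEFFICIENTS = {
    "DVI": 52.95,
    "GNDVI": -2.12,
    "MCARI": 0.3,
    "MSR": 7.31,
    "NDVI": 11.12,
    "RDVI": -66.48,
    "RVI": -0.77,
}

def predict_lai(indices):
    """Evaluate the linear model for one sample given a dict of index values."""
    return sum(coef * indices[name] for name, coef in COEFFICIENTS.items())

def rmse(observed, predicted):
    """Root mean square error between observed and predicted values."""
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))

def r_squared(observed, predicted):
    """Coefficient of determination, computed as 1 - SS_res / SS_tot."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot

# Hypothetical measured and predicted LAI values (not data from the article).
measured = [1.2, 2.0, 2.9, 3.5]
estimated = [1.0, 2.2, 3.0, 3.3]
print(f"R2 = {r_squared(measured, estimated):.3f}, RMSE = {rmse(measured, estimated):.3f}")
```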

| Model | R² | RMSE |
|---|---|---|
| DVI-BPN | 0.857 | 0.127 |
| GNDVI-BPN | 0.875 | 0.2 |
| MCARI-BPN | 0.863 | 0.235 |
| MSR-BPN | 0.896 | 0.145 |
| NDVI-BPN | 0.923 | 0.124 |
| OSAVI-BPN | 0.873 | 0.165 |
| RDVI-BPN | 0.885 | 0.144 |
| RVI-BPN | 0.927 | 0.059 |
| All VIs-BPN | 0.968 | 0.165 |
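
To give a sense of what a back-propagation (BP) network of the kind named in the table above does, the sketch below trains a one-hidden-layer regression network with plain gradient descent in NumPy. It is not the authors' model: the architecture (8 tanh hidden units), learning rate, epoch count, function name `train_bp_network`, and the synthetic NDVI-to-LAI data are all assumptions chosen for illustration.

```python
import numpy as np

def train_bp_network(x, y, hidden=8, lr=0.01, epochs=3000, seed=0):
    """Train a one-hidden-layer regression network by back-propagation.

    x has shape (n_samples, n_features); y has shape (n_samples, 1).
    Returns a predict function and the per-epoch mean squared error.
    """
    rng = np.random.default_rng(seed)
    w1 = rng.normal(0.0, 0.5, size=(x.shape[1], hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 0.5, size=(hidden, 1))
    b2 = np.zeros(1)
    losses = []
    for _ in range(epochs):
        # Forward pass: tanh hidden layer, linear output.
        h = np.tanh(x @ w1 + b1)
        pred = h @ w2 + b2
        err = pred - y
        losses.append(float(np.mean(err ** 2)))
        # Backward pass: gradients of the mean squared error.
        grad_pred = 2.0 * err / len(x)
        grad_w2 = h.T @ grad_pred
        grad_b2 = grad_pred.sum(axis=0)
        grad_z = (grad_pred @ w2.T) * (1.0 - h ** 2)  # derivative of tanh
        grad_w1 = x.T @ grad_z
        grad_b1 = grad_z.sum(axis=0)
        # Gradient descent update (in place).
        w1 -= lr * grad_w1
        b1 -= lr * grad_b1
        w2 -= lr * grad_w2
        b2 -= lr * grad_b2

    def predict(x_new):
        x_new = np.asarray(x_new, dtype=float)
        return np.tanh(x_new @ w1 + b1) @ w2 + b2

    return predict, losses

# Synthetic stand-in data: one input index and a noisy nonlinear LAI response.
rng = np.random.default_rng(1)
ndvi = rng.uniform(0.2, 0.9, size=(60, 1))
lai = 1.0 + 4.0 * ndvi ** 2 + rng.normal(0.0, 0.1, size=(60, 1))
predict, losses = train_bp_network(ndvi, lai)
print(f"MSE: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Passing several index columns in `x` instead of one corresponds to the "All VIs" configuration; in practice the inputs would usually be standardized first and the error reported on held-out samples.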

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Qi, H.; Zhu, B.; Wu, Z.; Liang, Y.; Li, J.; Wang, L.; Chen, T.; Lan, Y.; Zhang, L. Estimation of Peanut Leaf Area Index from Unmanned Aerial Vehicle Multispectral Images. Sensors 2020, 20, 6732. https://doi.org/10.3390/s20236732
