Optical and Mass Flow Sensors for Aiding Vehicle Navigation in GNSS Denied Environment

Abstract

1. Introduction

2. System Overview

2.1. Monocular Visual Odometry

2.2. Mass Flow Sensors

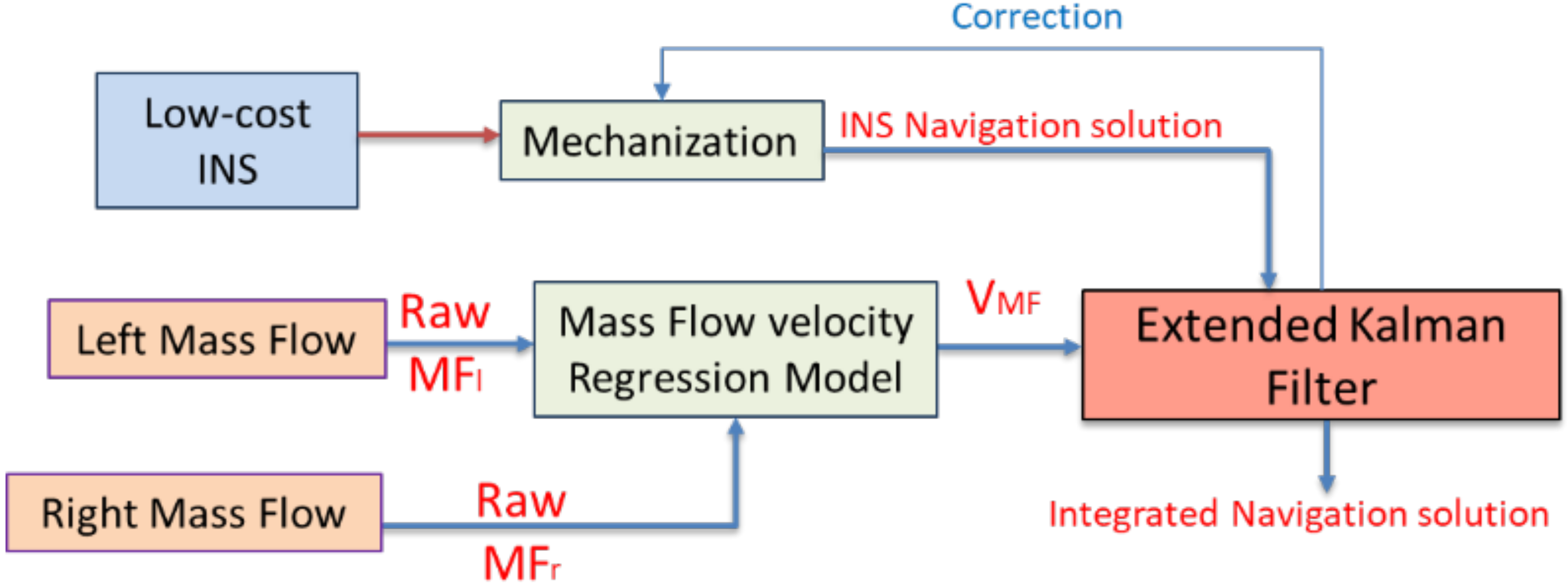

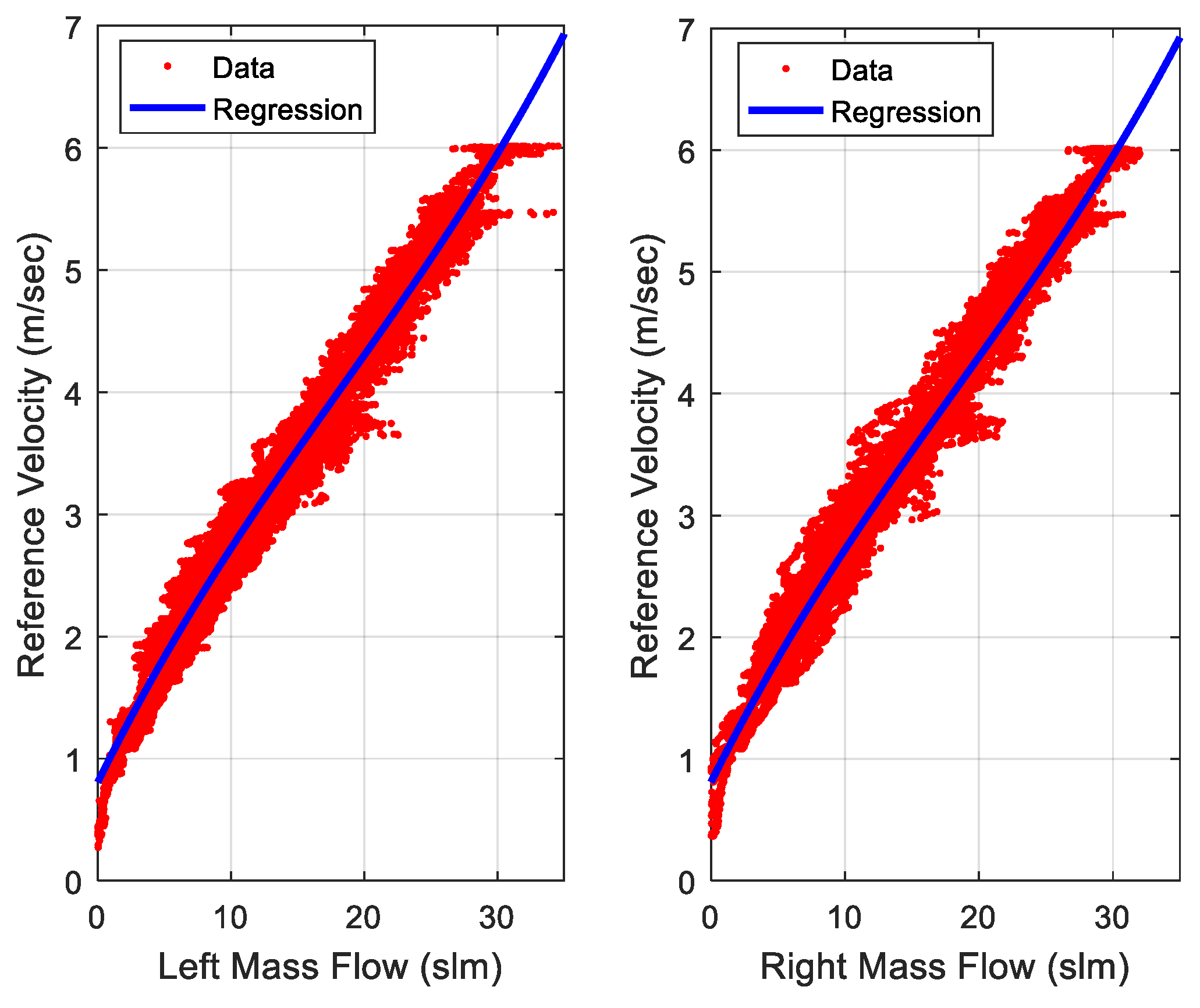

2.2.1. Mass Flow Velocity Estimation

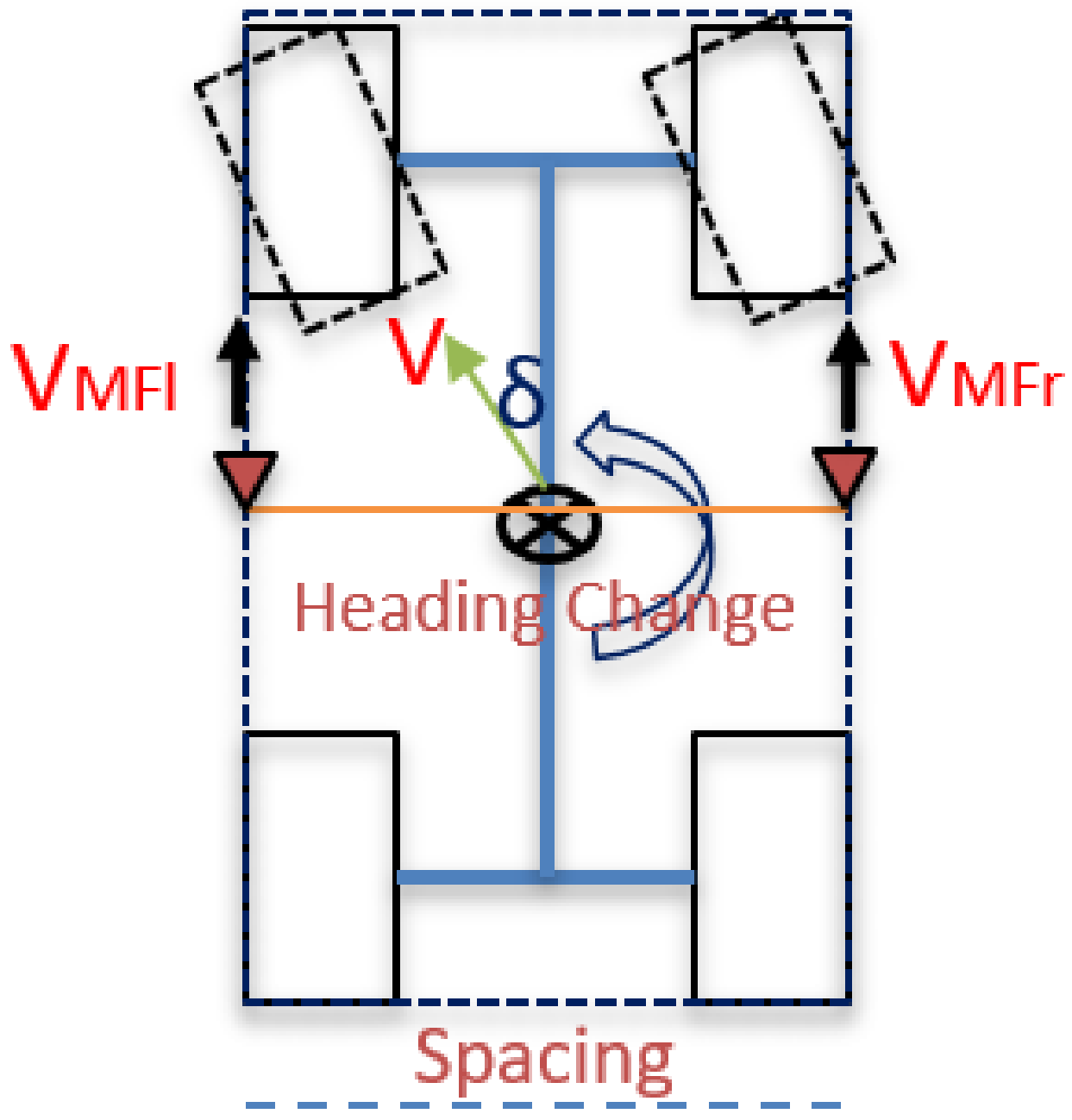

2.2.2. Mass Flow Heading Change Estimation

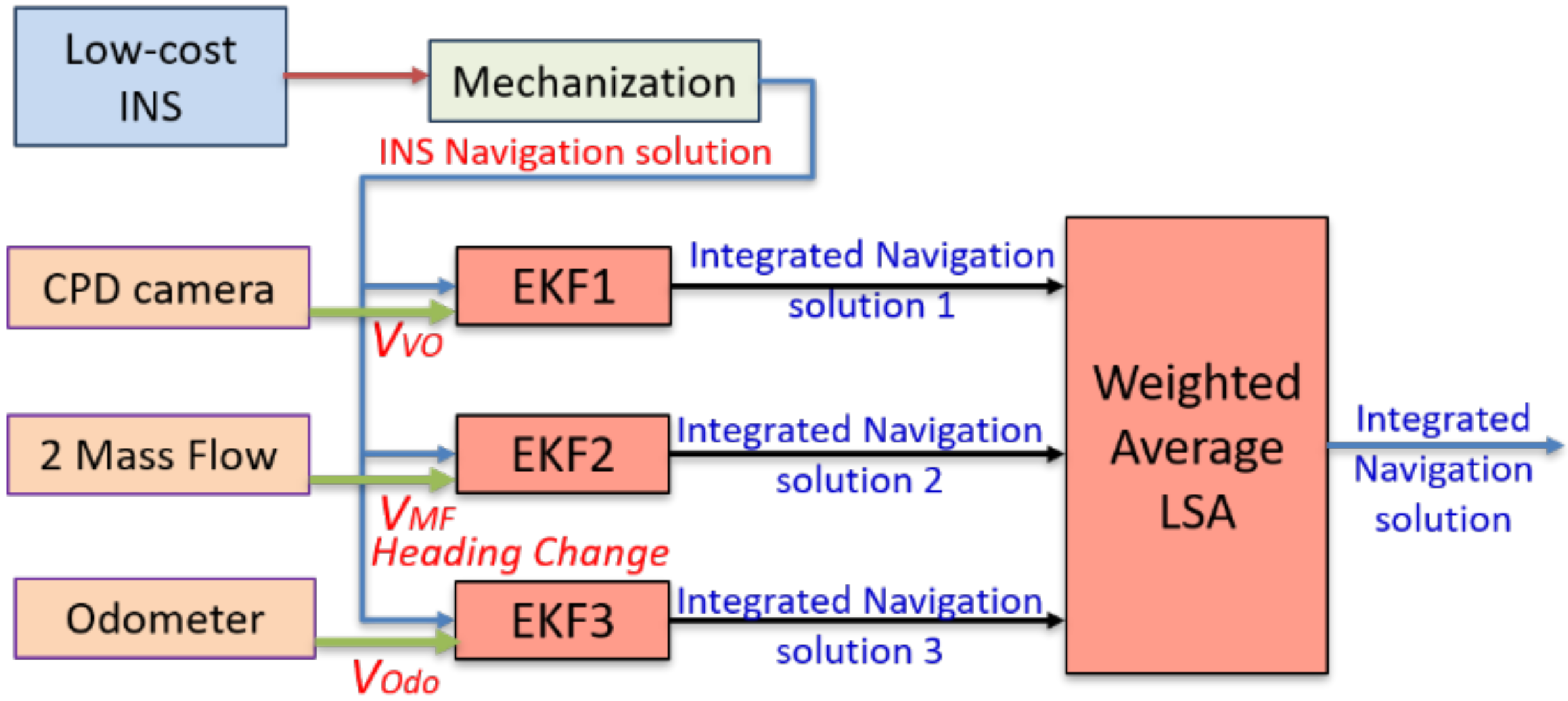

2.3. Integration Scheme

3. Experimental Results

3.1. Velocity Estimation Results

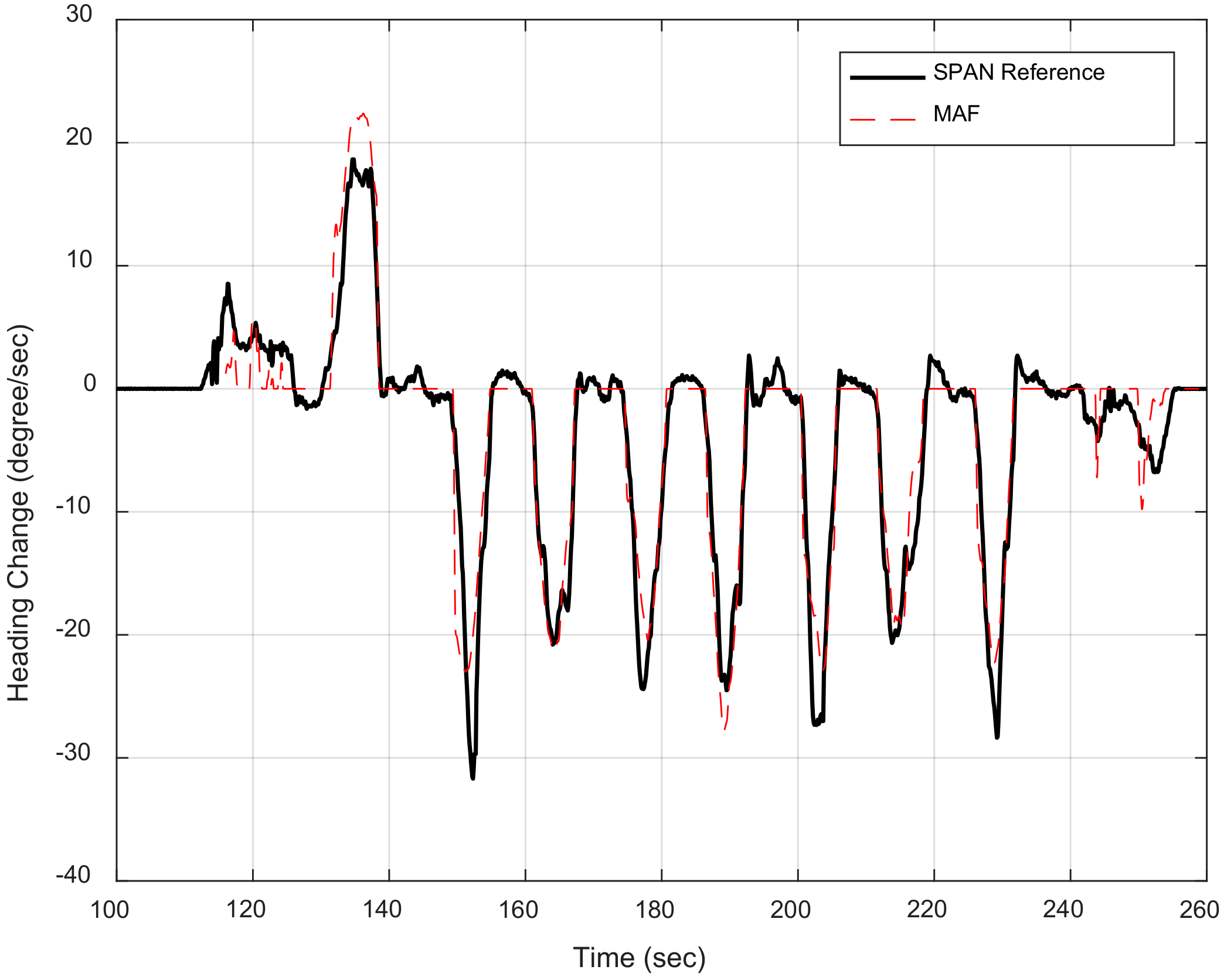

3.2. Heading Change Estimation Results

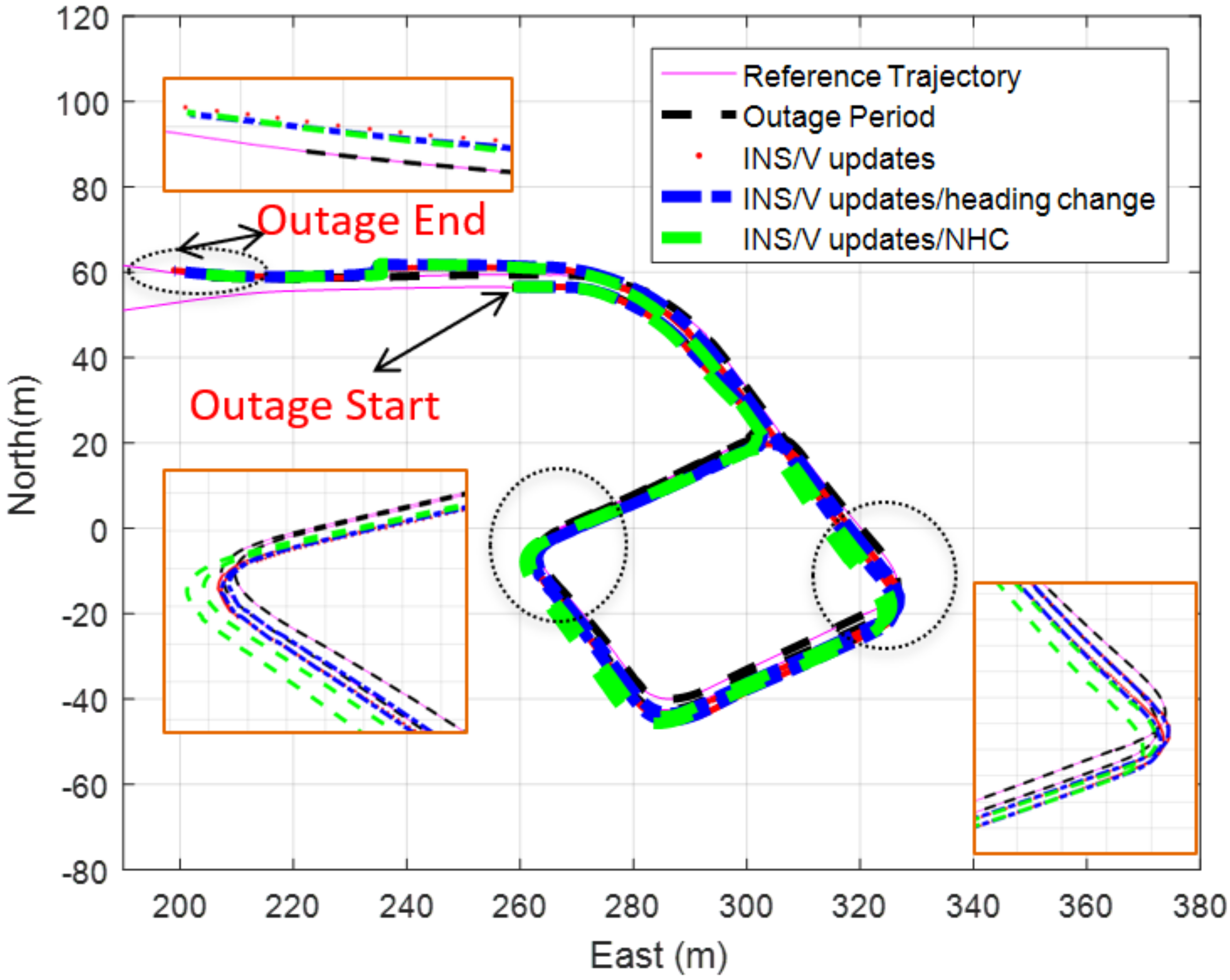

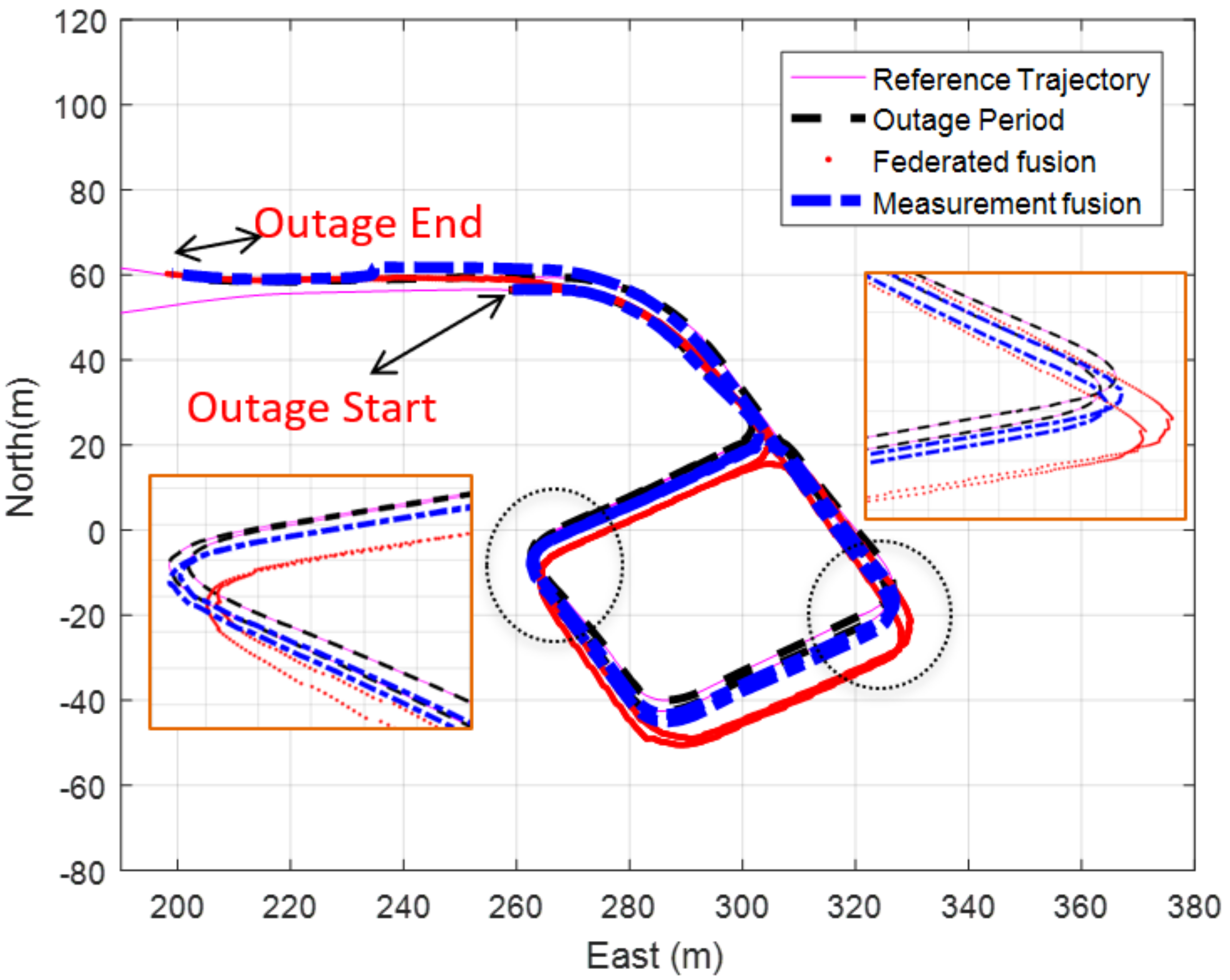

3.3. Navigation States Estimation Results

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Navigation Solution | RMSE (m) |
|---|---|
| INS stand-alone | 1980 |
| INS/non-holonomic constraints (NHC) | 14.30 |
| INS/odometer velocity update | 4.85 |
| INS/velocity update | 3.96 |
| INS/velocity/NHC | 4.00 |
| INS/velocity/heading change | 3.60 |

| Navigation Solution | RMSE (m) |
|---|---|
| INS stand-alone | 1980 |
| INS/NHC | 14.30 |
| INS/velocity update | 7.94 |
| INS/velocity/NHC | 6.07 |
| INS/velocity/heading change | 6.74 |
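
The tables above report one RMSE value in metres per navigation solution. As a minimal sketch of how such a figure could be obtained, the snippet below assumes the RMSE is the root-mean-square of the horizontal position error against a reference trajectory; that definition, the function name `position_rmse`, and the sample coordinates are illustrative assumptions, not details taken from the paper.

```python
import math


def position_rmse(estimated, reference):
    """Root-mean-square horizontal position error in metres.

    Both inputs are sequences of (east, north) tuples in metres,
    sampled at the same epochs. This definition is an assumption.
    """
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    squared_errors = [
        (e_east - r_east) ** 2 + (e_north - r_north) ** 2
        for (e_east, e_north), (r_east, r_north) in zip(estimated, reference)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical positions in metres (not data from the paper).
reference = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
estimated = [(0.0, 0.0), (10.0, 3.0), (20.0, 4.0)]
rmse = position_rmse(estimated, reference)
print(f"RMSE: {rmse:.2f} m")
```

Applied once per navigation solution over the same outage period, a calculation of this kind would yield one value per table row.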


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moussa, M.; Zahran, S.; Mostafa, M.; Moussa, A.; El-Sheimy, N.; Elhabiby, M. Optical and Mass Flow Sensors for Aiding Vehicle Navigation in GNSS Denied Environment. Sensors 2020, 20, 6567. https://doi.org/10.3390/s20226567
