Abstract

Image matching is an essential means of data association in computer vision, photogrammetry and remote sensing. The quality of image matching depends heavily on image details and naturalness. However, complex illuminations, i.e., extreme and changing illuminations, are inevitable in real scenarios and seriously degrade image matching performance because they strongly affect image naturalness and details. In this paper, a spatial-frequency domain associated image-optimization method, comprising two main models, is designed specifically to improve image matching under complex illuminations. First, an adaptive luminance equalization is implemented in the spatial domain to reduce radiometric variations, instead of removing all illumination components. Second, a frequency domain analysis-based feature-enhancement model is proposed to enhance image features while preserving image naturalness and restraining over-enhancement. The proposed method combines the advantages of spatial and frequency domain analyses to achieve illumination equalization, feature enhancement and naturalness preservation, thereby yielding optimized images that are robust to complex illuminations. More importantly, our method is generic and can be embedded in most image-matching schemes to improve image matching. The proposed method was evaluated on two different datasets and compared with four other state-of-the-art methods. The experimental results indicate that the proposed method outperforms the other methods under complex illuminations, both in matching performance and in practical applications such as structure from motion and multi-view stereo.

1. Introduction

Image matching is used to establish the correspondence between two or more images of the same scene taken from different viewpoints by the same or different sensors. In particular, feature-based image matching methods extract distinctive structures from the images, which depend heavily on image details (i.e., physical structures and textures) and naturalness as salient features for image matching [1,2,3,4,5,6]. The technique possesses the merits of high computational efficiency, high theoretical accuracy, and insensitivity to geometric deformations and differences [7]. As a result, feature-based image matching has received a lot of attention in the field of computer vision [8,9,10,11], photogrammetry and remote sensing [12,13,14,15], in applications [16,17,18] such as multiple view 3D reconstruction, remote sensing image fusion and visual localization.

The intensity recorded by a visual sensor combines the scene information with the illumination conditions. More specifically, an image is viewed as the product of irradiance and reflectance [19]. The reflectance is determined by the spectral properties of the imaged scene and is mostly related to image details [20]. The irradiance is essentially the illumination received at each point of the imaged scene and represents the image naturalness [21]. However, complex illuminations can easily be induced by light sources, vignetting, exposure differences and other factors [22], which are inevitable in practical situations. In this paper, complex illuminations are categorized into two classes. The first is extreme illumination, which includes very weak and very bright illumination. In this case, the images may not provide enough distinctive details for feature extraction and matching, owing to inadequate irradiance in weak illumination and excessive irradiance in bright illumination [23]. The second class is changing illumination, in which the overlapping regions of images with the same reflectance present obvious differences in irradiance. Under this condition, the colors of objects and their textures may vary significantly [24]. Consequently, complex illuminations significantly influence image naturalness and details, and thus seriously degrade the performance of image matching methods.

A variety of techniques have been proposed to address the problem of the complex illuminations in image matching. Numerous feature detectors or descriptors are available in the literature [25,26,27,28,29]. The majority of these methods can be used for illumination-invariant image matching, but show low applicability in practical situations [30]. In addition, most existing image-optimization methods [22,31] were applied to remove illumination variations for visual perception, rather than improving image matching. In this paper, a spatial-frequency domain associated image-optimization method, which optimizes the image naturalness and details, is particularly proposed to improve image matching in the presence of the complex illuminations.

2. Related Work

2.1. Illumination-Robust Feature-Based Matching

Various radiation-invariant feature-matching methods have recently been proposed [32], including order-based descriptors, phase congruency (PC)-based algorithms, and self-similarity-based methods. Order-based methods that rely on the relative ordering of pixel values are invariant to most radiometric changes. The census transform was introduced to compute the visual correspondence based on intensity comparisons [33,34]. For tolerance to illumination variation, the local binary pattern operator and its extensions have been investigated [35] to construct order-based features for each pixel by comparing the intensity of pixels with that of adjacent pixels. The ordinal spatial intensity distribution [36] was constructed by ordering the pixel intensities in both the ordinal and spatial spaces. To obtain less sensitivity to illumination differences, PC-based descriptors have also been proposed. For example, the histogram of orientated phase congruency descriptor [14] was constructed for tolerance to the illumination distribution of images based on the physical structure contained in images. Similarly, the PC-based structural descriptor [13] was built based on a PC structural image to obtain less sensitivity to nonlinear variations in illumination. Another recent approach based on local self-similarity (LSS) has been used as an illumination-invariant descriptor. For example, a brightness description method [37] was introduced for quantitatively describing radiation changes based on the geometric moment of the neighboring pixels. The dense LSS [38] was developed based on the robustness of the shape similarity for image correlation under changing illumination. These methods, which seek to develop novel feature-matching algorithms, achieve superior performance in illumination-invariant image matching. However, they may show poor performance under extreme illumination conditions, and cannot be used in practical applications when images contain both geometric and radiometric variations.

2.2. Image Optimization in the Spatial Domain

Image optimization in the spatial domain can be realized based on color constancy, histogram equalization and sharpness (HES), and illumination map estimation. For color constancy [39], the edge-based color constancy (EBCC) algorithm was proposed [40] based on a new gray-edge hypothesis, which assumes that the average edge difference in a scene is achromatic. The EBCC algorithm was improved [41] using an edge weighting scheme that exploits distinct edge types. In [42], a stereo color histogram equalization method was proposed, which combined accurate disparity maps and color-consistent images to process stereo images with changing illumination. In addition, the HES approaches have been widely employed to correct brightness differences. A histogram equalization-based algorithm [43] was designed specifically to improve the quality of stereo image matching with illumination differences. The adaptive histogram equalization technique in [44] was proposed to improve the weak illumination and low contrast of matched images. A modified HE-based contrast enhancement technique was designed to divide the histograms into sub-histograms, which enhances the contrast of non-uniformly illuminated images [45]. Moreover, illumination estimation was used to optimize images with illumination differences [46]. Low-light image enhancement (LIME) was proposed to enhance a low-light image; it estimates only the illumination map in order to shrink the solution space and reduce the computational cost [47]. Overall, these techniques can effectively reduce illumination variations while preserving illumination components. However, they struggle with non-uniform illumination and over-enhancement under complex illuminations. In addition, these approaches mainly concentrate on the consistency of color and brightness and do not effectively enhance image features.

2.3. Image Optimization in the Frequency Domain

The effect of complex illuminations on image matching can also be alleviated in the frequency domain through PC-based image representations. Based on local frequency analysis (LFA) [22], the texture and edge preserving mappings were computed to preserve the texture information and fine edges of the images, respectively. The two mappings were then combined to obtain an illumination-invariant image representation. To develop a multisource image registration method, Li and Man [48] proposed the phase correlation of principal phase congruency based on the magnitude of the PC. This technique was further developed [49], where both the orientation and magnitude of the PC were integrated to construct a structural radiation-invariant image correlation method. PC-based methods achieve high radiometric consistency by removing the illumination, but lose the naturalness of the image. This may reduce the distinctiveness of image features, and hence lead to more incorrect matches. Alternatively, to preserve the naturalness of the image, the naturalness preserved enhancement algorithm (NPEA) was constructed [50] to equalize non-uniform illumination by decomposing an image into reflectance and illumination and then balancing image details against illumination. The aim of the NPEA approach is to optimize non-uniformly illuminated images for perceptual quality, rather than to enhance features effectively for image matching under complex illuminations.

Based on the above brief analysis, the issues resolved in this study for the problem of illumination-robust image matching can be summarized as follows:

- (1) How can we find an effective approach to reduce the radiometric variations while preserving the naturalness of images under complex illuminations?
- (2) How can the approach handle inconspicuous image features caused by extreme illuminations without over-enhancement and loss of naturalness?
- (3) How can the method be applied in practical applications to achieve robust image matching when image sequences contain both geometric and radiometric variations?

3. Contribution

In this paper, a spatial-frequency domain associated image-optimization method is specifically designed to improve the accuracy and efficiency of image matching under complex illuminations, by reducing radiometric variations and enhancing image details. Generally, spatial domain analysis can effectively equalize image illumination components. In addition, image illumination and details can be quantitatively represented in the frequency domain. We explicitly take both the spatial domain and frequency domain analyses into account, and propose a novel image-optimization method. More specifically, two simple yet effective models, a spatial domain analysis-based adaptive luminance equalization model and a frequency domain analysis-based feature enhancement model, are developed to reduce the radiometric variations and enhance the image details, respectively. More importantly, instead of removing all illumination components, the proposed method equalizes the illumination component in both spatial and frequency domains in order to restrain over-enhancement and preserve image naturalness. Therefore, the optimized images, which are robust to complex illuminations, can be used with most image-matching algorithms to achieve accurate and efficient image matching. More precisely, the main contributions of this paper are summarized as follows:

- (1) An adaptive luminance equalization model is proposed based on spatial domain analysis to equalize non-uniform illumination while preserving image naturalness.
- (2) A frequency domain analysis-based feature enhancement model is constructed to enhance image details without over-enhancement or destruction of naturalness.
- (3) A spatial-frequency domain associated image-optimization method is proposed by combining the advantages of the spatial and frequency domain analyses to improve image matching under complex illuminations. A demo of our approach is available at: https://github.com/jiashoujun/image-optimization-for-image-matching.
- (4) A comprehensive performance evaluation and analysis of the proposed method and four other state-of-the-art methods is presented using real-scenario and standard datasets.

4. The Proposed Method

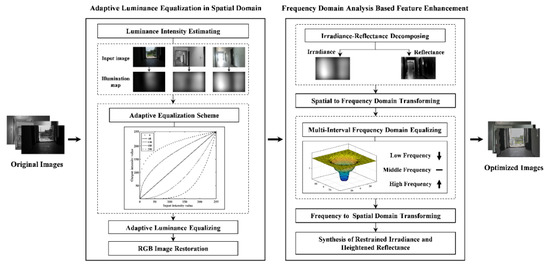

This study aims to provide optimized images that contain distinct details and naturalness in order to improve image matching under complex illuminations. For this purpose, a spatial-frequency domain associated image-optimization method is constructed to achieve illumination equalization, feature enhancement and naturalness preservation. The flowchart of the proposed method is shown in Figure 1. The input of our algorithm is the original images affected by complex illuminations. The adaptive luminance equalization model is applied in the spatial domain to reduce radiometric variations without removing all illumination information. Then, the feature-enhancement model is applied in the frequency domain to enhance the image features while restraining over-enhancement and destruction of naturalness. The outputs are optimized images that are robust to complex illuminations and can then be embedded into image-matching schemes to achieve illumination-robust image matching.

Figure 1.

Flowchart of the proposed image-optimization method, including two steps, namely, adaptive luminance equalization and feature enhancement.

4.1. Adaptive Luminance Equalization in the Spatial Domain

Image features depend heavily on image naturalness and details, which are expressed as gray values in the spatial domain [20]. Radiometric differences between images caused by complex illuminations are inevitable in real applications. These differences seriously affect pixel intensities, image naturalness and image details, and degrade the performance of most image matching methods [23,24]. Therefore, this section proposes an adaptive method that equalizes the differences in pixel intensities caused by complex illuminations and thus reduces their effect on image features.

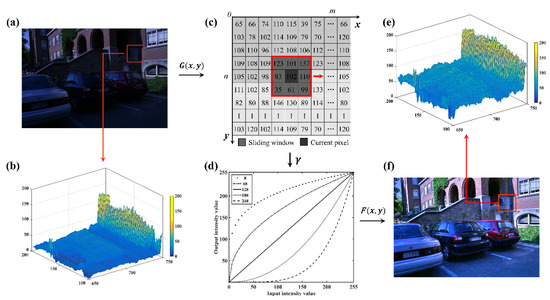

On the basis of our review, spatial domain analysis has been demonstrated to be effective in reducing illumination variation and enhancing image contrast [42,43,47]. In addition, spatial domain analysis-based image optimization focuses on illumination equalization rather than removing all illumination components, and can therefore preserve more image information than other methods such as phase congruency [22,49]. In view of the success of these methods, instead of removing all illumination, this paper adopts spatial domain analysis to equalize the pixel intensities of images under complex illuminations and thereby reduce most radiometric variations. The process of illumination equalization is shown in Figure 2.

Figure 2.

Adaptive luminance equalization: (a) original image G(x, y); (b) the luminance distribution of a local region for original image (red rectangle in (a)); (c) the adaptive equalizing scheme based on illumination map; (d) adaptive luminance equalization model; (e) the luminance distribution of a local region for the transformed image (red rectangle in (f)); and (f) the transformed image F(x, y).

4.1.1. Luminance Intensity Estimation

It has been reported that image component consists of illumination and reflectance components. In addition, the low-pass filter can preserve illumination information and remove reflectance component [51]. Thus, to determine the luminance intensities of input images, the low-pass filter is employed to obtain the illumination maps. The luminance intensity estimation can be expressed as follows:

where is the estimated luminance intensity, is the intensity of original image, is the Euclidean distance from the current point to the center of image, is a shape adjustment parameter, and is the low-frequency range. The illumination maps of different illumination intensities are illustrated in Figure 1.
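As a rough illustration of this step, the sketch below estimates an illumination map by low-pass filtering a grayscale image in the frequency domain. It assumes a Butterworth-type transfer function, which is only one filter consistent with the description above and is not necessarily the one used in this paper. The names `estimate_illumination`, `d0` (standing in for the low-frequency range) and `n` (standing in for the shape adjustment parameter) are hypothetical.

```python
import numpy as np

def estimate_illumination(gray, d0=10.0, n=2):
    """Estimate an illumination map with a frequency-domain low-pass filter.

    gray: 2-D float array. d0 and n are hypothetical parameter names/values.
    """
    rows, cols = gray.shape
    # Distance of every frequency sample from the centre of the shifted spectrum.
    v, u = np.meshgrid(np.arange(cols) - cols // 2,
                       np.arange(rows) - rows // 2)
    dist = np.sqrt(u ** 2 + v ** 2)
    # Assumed Butterworth low-pass shape: gain 1 at the centre, falling off with distance.
    h = 1.0 / (1.0 + (dist / d0) ** (2 * n))
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    # Back to the spatial domain; the imaginary part is numerical noise only.
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum * h)))
```

Because the filter gain at zero frequency is 1, the mean brightness of the image is kept, while fine detail is smoothed away.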

4.1.2. Luminance Equalization Model

Using the estimated illumination map, the adaptive illumination equalization model is constructed in the spatial domain. The model is used to enhance the brightness of low-light images, weaken the luminance of images under bright light, and bring the luminance values of corresponding pixels to the same level. The luminance equalization model can be expressed as follows:

where is the transformed image, is the original image, is an equalization parameter containing the estimated luminance intensity, and denotes the size of image. The illustration of luminance equalization model is as shown in Figure 2d.

4.1.3. Adaptive Equalizing Scheme

Under complex illumination, the brightness level may differ significantly between images. Moreover, the intensity also varies within a single image because of non-uniform illumination, as shown in Figure 2a,b. Consequently, a global illumination equalization with a constant equalization parameter cannot achieve satisfactory performance for images under complex illuminations [52]. Hence, in order to adaptively control the equalization parameter , a sliding window is used to compute the local mean luminance value of the illumination map. The adaptive luminance equalization is then achieved, which is expressed as follows:

where is luminance intensity of illumination map within a sliding window, denotes the mean luminance value within the sliding window, is the size of sliding window, and expresses the adjustment parameter containing the standard deviation (i.e., ) of the original image. The adaptive equalizing scheme is illustrated as shown in Figure 2c.

Figure 2d illustrates how the luminance values of transformed images change when varying the luminance values and mean luminance value of sliding window. The performance of the adaptive luminance equalization model is shown in Figure 2e,f. Here, a rather low mean luminance value indicates that the image is under weak illumination and equalization parameter needs to be decreased. In contrast, a relatively large mean luminance value suggests that the image is under bright illumination. In this case, the equalization parameter is adaptively increased.
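The behaviour described above can be sketched with a local mean and a power-law correction. This is a minimal sketch under stated assumptions, not the model of this paper: the power-law form, the mapping from local mean to exponent, and the names `local_mean`, `adaptive_equalize` and `win` are all hypothetical. It only reproduces the qualitative rule that a low local mean luminance lowers the equalization parameter (brightening) and a high one raises it (darkening).

```python
import numpy as np

def local_mean(img, win=15):
    """Mean of img inside a win x win sliding window (win must be odd)."""
    pad = win // 2
    padded = np.pad(img, pad, mode="edge")
    # Integral image with a leading row and column of zeros for fast window sums.
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    total = (integral[win:, win:] - integral[:-win, win:]
             - integral[win:, :-win] + integral[:-win, :-win])
    return total / (win * win)

def adaptive_equalize(gray, illum, win=15):
    """gray, illum: float arrays scaled to [0, 1]."""
    m = local_mean(illum, win)
    # Hypothetical rule: exponent in [0.5, 2]; below 1 brightens, above 1 darkens.
    gamma = 2.0 ** ((m - 0.5) / 0.5)
    return np.clip(gray, 0.0, 1.0) ** gamma
```

With this rule, a uniformly dark image is brightened and a uniformly bright one is darkened, so both move toward a common luminance level.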

4.2. Frequency Domain Analysis-Based Feature Enhancement

Although most illumination variations can be reduced in the spatial domain, the structures of objects and their textures remain inconspicuous in the images because of complex illuminations, which also creates challenges for feature extraction and matching [23,24]. In this section, an effective feature-enhancement model is proposed to improve the performance of image matching.

As stated above, an image is the product of irradiance and reflectance, which essentially correspond to the illumination and the properties of the imaged scene, respectively [20]. Generally, the irradiance represents the global naturalness, and the reflectance represents the local details of an image [50]. Image features, which depend on image details and naturalness, can be enhanced by heightening the reflectance and restraining the irradiance. In the spatial domain, image enhancement changes the two components simultaneously, and hence such algorithms may not achieve the desired performance because they also strengthen the irradiance. In the frequency domain, in contrast, Fourier transform (FT)-based frequency components have been used to represent the irradiance and reflectance [53,54,55,56]. In addition, the irradiance and reflectance are related to the low-frequency and high-frequency components of the FT-based frequency spectrum, respectively [19,20]. In view of the theories described above, our strategy separates the irradiance and reflectance components and moves the image analysis from the spatial domain to the frequency domain. A frequency domain analysis-based feature enhancement approach is therefore proposed to enhance image details while preserving naturalness and restraining over-enhancement. The aim is to enhance inconspicuous image features, and hence improve feature extraction and matching under complex illuminations.

4.2.1. Irradiance-Reflectance Component Decomposing

Image component is the product of irradiance and reflectance. Starting with the output image of adaptive luminance equalization in the spatial domain , the irradiance and reflectance components can be separated based on a logarithmic transformation, which are expressed as follows [57]:

where denotes the transformed image, and and denote the irradiance and reflectance components, respectively. Moreover, fast Fourier transform (FFT) is used to complete the spatial to frequency domain transformation, which can be expressed as follows:

where is the image component after FFT, and denote the results after FFT of and , respectively.
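The reasoning behind this decomposition can be checked numerically: the logarithm turns the product of irradiance and reflectance into a sum, and the FFT, being linear, keeps the two terms additive. The sketch below uses synthetic arrays, and all variable names are illustrative assumptions rather than the notation of this paper.

```python
import numpy as np

rng = np.random.default_rng(0)
irradiance = 0.2 + rng.random((16, 16))    # synthetic, strictly positive
reflectance = 0.2 + rng.random((16, 16))   # synthetic, strictly positive
image = irradiance * reflectance           # multiplicative image model

# The logarithm turns the product into a sum of two terms.
log_image = np.log(image)

# The FFT is linear, so the spectrum of the sum is the sum of the spectra.
spec_image = np.fft.fft2(log_image)
spec_sum = np.fft.fft2(np.log(irradiance)) + np.fft.fft2(np.log(reflectance))
additive = np.allclose(spec_image, spec_sum)
```

This additivity is what allows the low-frequency and high-frequency parts of the spectrum to be scaled separately in the next step.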

4.2.2. Multi-Interval Frequency Domain Equalization

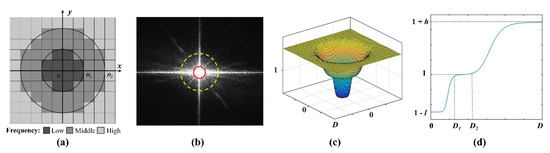

It has been demonstrated that removing all illumination can markedly enhance image details, while the remaining illumination information is useful for preserving image naturalness and restraining over-enhancement [50]. To enhance the image details without losing naturalness or causing over-enhancement, a multi-interval frequency domain dividing strategy is designed in our method. More specifically, the image frequency range is divided into low , high , and middle frequencies in our strategy, as shown in Figure 3a,b. The low and high frequencies are related to the irradiance and reflectance, respectively, and the middle frequency is regarded as the transition between low and high frequencies. Based on the multi-interval frequency domains, a multi-interval frequency domain equalization model is proposed in our strategy, as shown in Figure 3c,d. We restrain the low-frequency component rather than removing all low-frequency information in order to reduce the effect of redundant illumination on image features and preserve the naturalness of the image. In addition, the high-frequency component is heightened to enhance image details. Note that the middle-frequency component is kept constant in order to restrain over-compression of the illumination and over-enhancement of image details. The multi-interval frequency domain equalization model can be presented as:

where is the Euclidean distance from the current point to the center, is an adjustment parameter, is the iteration order, denotes the whole image, and are the compression and enhancement coefficients, respectively.

Figure 3.

A graphical illustration of frequency domain equalizing: (a) the division of frequency domain; (b) an example of FT-based frequency power spectrum and frequency domain division (the low and high frequency regions are displayed within the red and yellow circles, respectively); (c) multi-interval frequency domain equalization model visualized in 3D; and (d) the multi-interval frequency domain equalization model and its performance in theory.

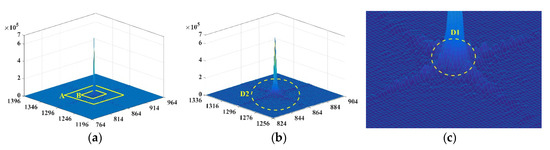

The frequency domain amplitude distribution is used to determine the range of the frequency domain, which can be expressed as follows:

where denotes the frequency domain amplitude. In Figure 4a, the amplitudes are distributed in a cross shape, and the core of the frequency domain lies at the center of the spectrum, with the amplitude decreasing from the center to the periphery. The low and high frequencies occur in the central and outer areas, respectively: the low-frequency amplitude forms the peak, whereas the high-frequency amplitude is the smallest. Figure 4a shows that the peak in the low-frequency region is significantly high, whereas the high-frequency region is relatively flat. Thus, in Figure 4b,c, the boundaries of the high (i.e., ) and low (i.e., ) frequencies can be obtained from the amplitude distribution. The region of the middle frequency can then be uniquely determined from to .

Figure 4.

The frequency domain amplitude spectrum visualized in 3D: (a) the amplitude distribution of the local regions in the frequency domain; (b) a partial enlarged image of region A in (a) (the high-frequency region is depicted beyond the yellow ellipse ); and (c) a partial enlarged image of region B in (a) (the low-frequency region is displayed within the yellow ellipse ).

Subsequently, based on the multi-interval frequency domain equalization model , the frequency domain equalization is implemented, which is expressed as:

where presents the equalized image, and operator denotes dot product of matrices. Hence, the high-frequency component is strengthened, the low-frequency component is compressed, and the middle frequency can remain constant.
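The three-band idea can be sketched as a radial transfer function that compresses the low band, keeps the middle band, and amplifies the high band, applied to the centred spectrum by an element-wise product. This is a simplified, piecewise-constant sketch: the model in this paper also involves an adjustment parameter and an iteration order that are not reproduced here, and the names `multi_interval_filter`, `d_low`, `d_high`, `compress` and `enhance`, as well as their default values, are hypothetical.

```python
import numpy as np

def multi_interval_filter(shape, d_low, d_high, compress=0.5, enhance=2.0):
    """Piecewise-constant radial gain over a centred (fftshift-ed) spectrum."""
    rows, cols = shape
    v, u = np.meshgrid(np.arange(cols) - cols // 2,
                       np.arange(rows) - rows // 2)
    dist = np.sqrt(u ** 2 + v ** 2)
    h = np.ones(shape)             # middle band is left unchanged
    h[dist <= d_low] = compress    # restrain low frequencies (irradiance)
    h[dist >= d_high] = enhance    # heighten high frequencies (reflectance)
    return h

def equalize_spectrum(log_image, h):
    """Apply the gain h to the centred spectrum of a log-transformed image."""
    spectrum = np.fft.fftshift(np.fft.fft2(log_image))
    return spectrum * h            # element-wise product, not a matrix product
```

A smooth transition between the bands would avoid the ringing that hard band edges can introduce, which is one plausible reason for the extra shape parameters in the full model.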

4.2.3. Synthesis of Restrained Irradiance and Heightened Reflectance

As mentioned above, the irradiance and reflectance are related to the low-frequency and high-frequency components in the frequency domain, respectively. To achieve reflectance enhancement and irradiance restraint, the inverse fast Fourier transform (IFFT) is employed for the frequency-to-spatial domain transformation. In addition, the restrained irradiance and heightened reflectance are synthesized to obtain the final optimized image. The processes can be represented as follows:

where is the image component after IFFT, is the optimized image, and and denote the restrained irradiance and heightened reflectance.
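The synthesis step can be sketched as an inverse FFT followed by an exponential, which undoes the earlier logarithm so that the two additive terms multiply again. The function name `synthesize` is hypothetical, and the sketch assumes the spectrum was centred with `fftshift` before equalization.

```python
import numpy as np

def synthesize(shifted_spectrum):
    """Map an equalized, centred log-spectrum back to an intensity image."""
    # Undo the centring, then return to the spatial domain.
    log_image = np.real(np.fft.ifft2(np.fft.ifftshift(shifted_spectrum)))
    # exp reverses the logarithm taken before the forward FFT.
    return np.exp(log_image)
```

As a sanity check, passing an unmodified spectrum through this function should return the original image.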

Therefore, the proposed algorithm can enhance image details while preserving naturalness to improve image matching under complex illuminations. Please note that for color images, this algorithm is implemented in multiple channels after RGB transformation to preserve the color information.

5. Experimental Results and Analysis

To verify the proposed method, we conducted image optimization experiments on two different datasets. The matching performance was assessed both visually and numerically. The results of structure from motion (SFM) and multi-view stereo (MVS) are presented to evaluate the applicability of our method. Moreover, four other state-of-the-art approaches, namely HES [43], LFA [22], LIME [47] and NPEA [50], were used for comparison.

5.1. Experimental Dataset and Implementation

The proposed method was tested on two datasets, covering extreme and changing illumination. The first dataset contained 623 image pairs taken in a long corridor with extreme and uneven illumination. The scene covers an area of 2.5 m × 51.8 m, in which four ground control points and six checkpoints were measured with a total station. All images were collected in RGB format at a resolution of 2592 × 1728 using Canon 600D SLR cameras. Furthermore, the images cover planar objects, including walls, floors, and roofs. Thus, the dataset provides image pairs under extreme illumination. The other dataset was a standard dataset of an outdoor scene under changing illumination, from the Department of Engineering Science, University of Oxford. The image pairs come from public datasets downloaded from the Internet and used in the literature [2,58,59]. The dataset comprises RGB images with a resolution of 921 × 614. The images show the same scenes under varying illumination. Thus, this dataset can be used to validate our method under changing illumination.

Based on the two datasets, the results obtained with the proposed method were compared with those of the other image-optimization methods. The other methods, namely HES [43], LFA [22], LIME [47] and NPEA [50], can be classified into two types: spatial domain analysis and frequency domain analysis. HES is an important family among the spatial domain analysis-based image-optimization approaches and has been proposed for illumination-robust image matching. LIME is also an efficient and standard method for enhancing a low-light image based on spatial domain analysis. Additionally, LFA and NPEA are two popular frequency domain analysis-based methods. LFA has been reported to achieve high performance in illumination-invariant image matching. NPEA can equalize non-uniform illumination in images while preserving naturalness. The implementations of these four methods followed the released code and the original papers. Our method has two groups of important parameters: frequency domain division (i.e., ) and the compression and enhancement coefficients (i.e., ). The parameter settings used in this paper are shown in Table 1.

Table 1.

Parameter setting in this paper.

5.2. Evaluation Criteria

To evaluate the performance of the proposed method, qualitative and numerical matching criteria were introduced following previous important works [51,60]. The number of features (F) and the number of matches (M) were obtained before using Random Sample Consensus (RANSAC) filtering [61]. The number of precise matches (NPM) was computed using RANSAC with a threshold of, for which the matches are retained. The processing time (T) was measured using a computer with an Intel single Core i7-8700K CPU and 32 GB of RAM. Moreover, three relative indicators are introduced to neutralize the effect of absolute factors. The first is the matching precision (MP), which is expressed as the ratio of NPM to M. The second is the ratio of precise matches to the number of features (RPMF), which is the ratio between NPM and the minimum number of features, i.e., the smaller of the numbers of features of the left and right images used for image matching. The third is the ratio of precise matches to the processing time (RPMT), i.e., NPM divided by T, which indicates the computational efficiency. For MP, RPMF and RPMT, a higher value means better performance.
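The three relative indicators can be computed directly from the absolute counts. The sketch below follows the verbal definitions above; it assumes MP is the ratio of NPM to M, and the function and argument names are hypothetical.

```python
def matching_indicators(npm, matches, feats_left, feats_right, seconds):
    """Return (MP, RPMF, RPMT) from the absolute matching statistics."""
    mp = npm / matches                          # precise matches per match (assumed form)
    rpmf = npm / min(feats_left, feats_right)   # precise matches per feature
    rpmt = npm / seconds                        # precise matches per second
    return mp, rpmf, rpmt
```

For example, 50 precise matches out of 100 matches, with 400 and 500 features in the two images and 2 s of processing, give MP = 0.5, RPMF = 0.125 and RPMT = 25.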

In addition, neither accurate camera matrices nor fundamental matrices are available for the image pairs, but accurate homography matrices are provided with the Oxford dataset. Consequently, the matching error (ME) is designed to quantify the accuracy of the image matching. Let be the match after filtering using RANSAC. The ME is defined as follows:

where is a match calculated through known homography matrices between images. The term presents the Euclidean distance between and .
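One way to compute such an error is to project each point of the first image through the known homography and measure its Euclidean distance to the matched point in the second image. The sketch below averages these distances; the averaging is an assumption, since the exact aggregation of the ME is not recoverable from the text above, and the names `project` and `matching_error` are hypothetical.

```python
import math

def project(h, point):
    """Apply a 3x3 homography (nested lists) to a 2-D point."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)

def matching_error(matches, h):
    """matches: list of ((x, y), (x2, y2)) pairs kept after RANSAC."""
    dists = []
    for src, dst in matches:
        px, py = project(h, src)
        # Distance between the homography-predicted and the matched position.
        dists.append(math.hypot(px - dst[0], py - dst[1]))
    return sum(dists) / len(dists)   # mean distance (assumed aggregation)
```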

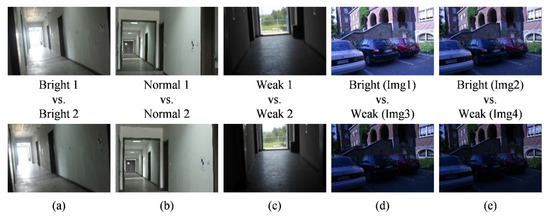

Using these two datasets, the matching experiments are designed as shown in Figure 5. The first three image pairs, from the first dataset, are under normal and extreme (bright and weak) illuminations. The others are from the Oxford dataset. The fourth image pair shows the same scene under changing illumination. The fifth is similar to the fourth but has a decrease in illumination intensity. To determine the matching metrics, image matching was implemented using SIFT and SURF. These two algorithms are robust to geometric differences, which limits the influence of geometric differences on the matching experiments. In addition, these two algorithms have been widely applied in computer vision, photogrammetry and remote sensing, and are representative of most image-matching methods [25,26].

Figure 5.

The experimental images from the datasets along with their description: (a) the first image pair; (b) the second image pair; (c) the third image pair; (d) the fourth image pair; (e) the fifth image pair.

Moreover, the qualitative and numerical metrics of 3D reconstruction were used to evaluate the effectiveness and applicability of the proposed method. For SFM, the success rate of image localization (SRIL) and the mean reprojection error (RE) are calculated to evaluate the effect of sparse reconstruction, where the SRIL is defined as $SRIL = N_s/N$; here, $N_s$ is the number of successful image localizations and $N$ is the number of images calculated. Moreover, to assess the precision of the 3D reconstruction, the total error (TE) for each checkpoint is computed, which is the square sum of errors in the X, Y and Z directions. A higher value of SRIL means better performance. For RE and TE, lower values mean better performance. To analyze the effect of illumination on the precision, four ground control points and six checkpoints under different illumination were selected. The commercial software Pix4D was used for carrying out SFM and MVS.
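A small sketch of the two reconstruction metrics follows. It assumes SRIL is the fraction of successfully localized images. For TE, the text describes a square sum of the axis errors; since the checkpoint errors are reported in millimetres, the sketch takes the square root by default, which is an assumption, and exposes a flag for the plain sum of squares. All names are hypothetical.

```python
import math


def sril(n_localized: int, n_images: int) -> float:
    """Success rate of image localization: localized images / all images."""
    if n_images <= 0:
        raise ValueError("n_images must be positive")
    return n_localized / n_images


def total_error(dx: float, dy: float, dz: float, take_root: bool = True) -> float:
    """Combine per-axis checkpoint errors into one value.

    take_root=True returns the Euclidean norm (assumed, keeps the unit);
    take_root=False returns the literal sum of squares.
    """
    squared_sum = dx * dx + dy * dy + dz * dz
    return math.sqrt(squared_sum) if take_root else squared_sum
```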

5.3. Matching Performance

5.3.1. Performance of Visual Indicators

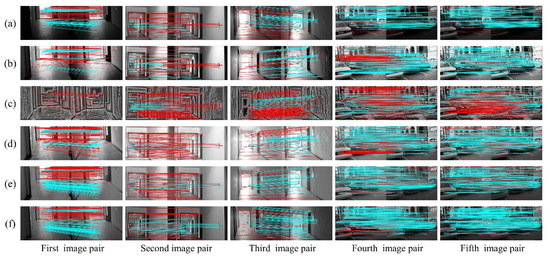

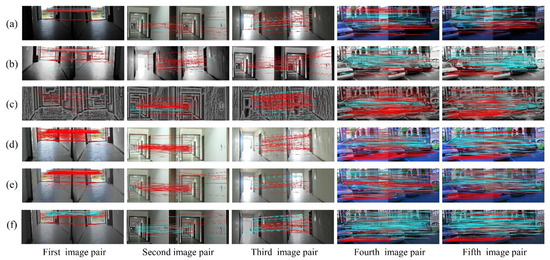

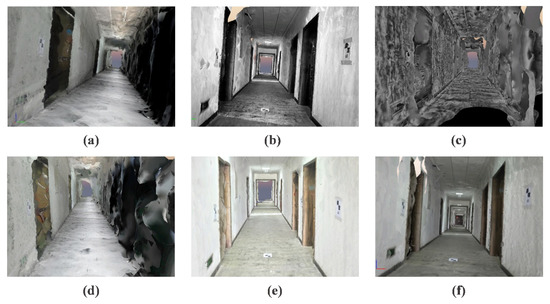

This section presents the results of image optimization for our method and other techniques, as well as their visual matching performances under complex illuminations. In Figure 6 and Figure 7, the blue and red lines represent precise and false matches, respectively. The images transformed with our method exhibit more uniform brightness, and clearer physical structures and textures. The HES approach enhances the edge information of images, but further worsens the uneven distribution of illumination intensity. In contrast to HES, the LFA achieves fine results in illumination invariance at the cost of the naturalness of the image. The LIME and NPEA significantly improve the visualization of images in weak illumination, but provide unsatisfactory results in equalizing bright illumination.

Figure 6.

The visual matching performances when applying SIFT: (a) original images; (b) images transformed with HES; (c) images transformed with LFA; (d) images transformed with LIME; (e) images transformed with NPEA; and (f) images transformed with our method.

Figure 7.

The visual matching performances when applying SURF: (a) original images; (b) images transformed with HES; (c) images transformed with LFA; (d) images transformed with LIME; (e) images transformed with NPEA; and (f) images transformed with our method.

The matching performances are depicted visually in Figure 6 and Figure 7. It is clear from Figure 6 (first image pair) that the precise matches of the original images using SIFT in weak illumination are concentrated on the floor, with very few matches on the wall. The proposed method is more effective than the other methods at increasing matches on the wall. With SURF, the advantage of the proposed algorithm is more significant. For the second and third image pairs, the matches using SIFT are primarily distributed on the wall under normal and bright illuminations. The five algorithms significantly increase the number of matches on the wall and floor, and our method outperforms the others in terms of precise matches. The matching results using SURF, as shown in Figure 7, are similar to those using SIFT. In terms of the matching performance under changing illuminations (fourth and fifth image pairs), most precise matches for the original image are located on the building façade and non-planar objects, as shown in Figure 6 and Figure 7. Other approaches, including HES, LFA and NPEA, increase the precise matches only on the building façade and non-planar objects. For the LIME, the optimized images contain more matches, but also a significant number of false matches located on the non-planar objects. In contrast, our method yields better performance in the number and distribution of precise matches. Moreover, the results of SIFT and SURF are similar. Therefore, our method can be considered satisfactory for improving illumination-robust image matching.

5.3.2. Performance of Numerical Indicators

Using the evaluation criteria, the matching performances of our method and other methods were numerically compared and evaluated, as shown in Table 2, Table 3, Table 4 and Table 5 and in Figure 8 and Figure 9. For the first image pair, Table 2 and Table 3 show that the NPM and MP are small, except for our algorithm. This is because our method effectively enhances image features. The LFA produces many false matches owing to the loss of image naturalness. The LIME and NPEA significantly increase the number of matches but result in lower matching precision than our approach. Moreover, the relatively large RPMF and RPMT of our strategy indicate high effectiveness and efficiency. Thus, the results suggest that our algorithm can significantly improve image matching under weak illumination even when other techniques fail.

Table 2.

Numerical performance indicators of SIFT for the first to third image pairs.

Table 3.

Numerical performance indicators of SURF for the first to third image pairs.

Table 4.

Numerical performance indicators of SIFT for the fourth and fifth image pairs.

Table 5.

Numerical performance indicators of SURF for the fourth and fifth image pairs.

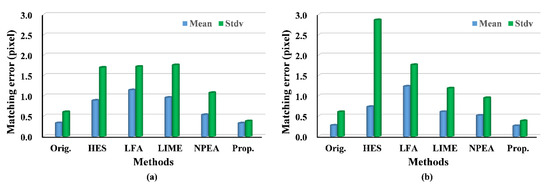

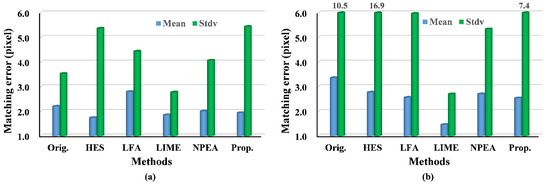

Figure 8.

Histograms of matching error using SIFT: (a) the fourth image pair; (b) the fifth image pair.

Figure 9.

Histograms of matching error using SURF: (a) the fourth image pair; (b) the fifth image pair.

As for the second image pair, the results in Table 2 and Table 3 show that our algorithm and the other approaches all help to improve the image matching. In particular, the NPM of LFA is higher than that of our method when using SIFT. This is owing to the advantage of combining texture preservation and edge preservation in LFA. However, our approach achieves higher MP and RPMF than the other methods. Furthermore, our method significantly outperforms the others in efficiency, as shown by its larger RPMT. Thus, our strategy is demonstrated to be more effective for improving image matching under relatively uniform illumination than traditional methods.

For the third image pair, the results are similar to those of the second image pair. In particular, our method achieves an MP of 0.735 with an NPM of 100, whereas the LFA achieves an MP of 0.374 with an NPM of 120, as shown in Table 2. Using SIFT and SURF, the MP of LFA is lower than that of the original image. Furthermore, the performances associated with the HES, LIME and NPEA seem to be worse in bright illumination conditions, because their M and NPM are significantly lower than those of our technique, and even than those of the original images. Therefore, the results in this experiment indicate the capability of our strategy to improve image matching under bright illumination.

The experiments on the fourth and fifth image pairs are aimed at testing our method under changing illuminations. The results of the fourth image pair show that our method outperforms the others, because the NPM and MP of our approach are larger than those of the other methods. As expected, the results of RPMF and RPMT, as indicated in Table 4, reveal a higher effectiveness of our algorithm, except for the better results of the LFA and NPEA when using SIFT. Moreover, for matching errors, the proposed method provides more accurate matches, because the mean matching errors of our approach are the smallest, except for the result of the LIME. The upper bound of the matching errors can be described with their standard deviation, which is relatively lower in our approach compared with that of the other approaches and original images when using SIFT. For the fifth image pair, the experimental results follow the same pattern as the fourth image pair, and our algorithm achieves better performance than that in the fourth image pair. For example, NPM and RPMT are greater for the fifth image pair, although the matching accuracy is lower when applying SURF owing to the greater illumination variance. Hence, the experimental results show that the proposed method outperforms the others under changing illuminations.

Thus, based on the analysis above, the results demonstrate the better efficiency of the proposed approach for improving the image matching under extreme illumination and higher robustness to illumination variation, compared with other methods.

5.4. Application to SFM and MVS

The results of SFM for the first dataset are presented in Table 6 and Table 7. The results indicate that LFA achieves the highest SRIL and RE, which means that LFA can improve the continuity of the 3D model, but decreases its precision. Moreover, LIME and NPEA also significantly reduce reconstruction precision owing to the few precise matches and high RE. Our approach, which has a relatively high SRIL and the lowest RE, achieves a better performance compared with the other algorithms. This is because of its better matching performance, as shown in Table 2 and Table 3. In terms of the precision of the 3D model, the results in Table 7 indicate the poor results of the other approaches. For example, the other methods significantly increase the errors of the 3D model, and HES and LIME even result in the loss of some checkpoints, marked as “loss” in Table 7. Our method clearly reduces the total error of the 3D model, especially under bright and weak illuminations, and the mean error is as low as 20 mm.

Table 6.

Performance indicators in SFM.

Table 7.

Total error of checkpoints (mm).

Furthermore, the dense reconstruction performances of MVS are visually depicted in Figure 10. It is clear that the 3D models of the original images and of the images transformed with other algorithms are incomplete within the red circle, with indistinct walls and floors as well as missing doors. For LFA and NPEA in particular, the results display very few textures and physical structures in the reconstructed model. The 3D reconstruction model obtained with the proposed approach contains more complete physical structures and richer textural details, as shown in Figure 10f. Thus, the proposed method can significantly improve the integrity and visual quality of the 3D dense model, and outperforms the other state-of-the-art approaches.

Figure 10.

Comparison of the results of MVS with original and optimized images: (a) original; (b) HES; (c) LFA; (d) LIME; (e) NPEA; and (f) our method.

Therefore, the proposed approach shows higher applicability in both SFM and MVS under complex illuminations compared with other approaches. More importantly, it is clear that the proposed algorithm can improve image matching for effective application in the sparse and dense reconstruction of scenarios under complex illuminations.

6. Discussion

6.1. Image Naturalness Assessment

Image naturalness is essential for image optimization to achieve pleasing perceptual quality [3,4,5], which influences the performance of image matching. The spatial-frequency domain associated image-optimization method is proposed to enhance image details while preserving the image naturalness. To quantitatively assess the naturalness preservation performances of the image-optimization methods, we used a quantitative measure, namely, the lightness-order-error (LOE), following previous work [50].

The quantitative measurement results of LOE are shown in Table 8. The results demonstrate that the proposed method achieves a moderate level of naturalness preservation, outperforming LFA and LIME. The reason could be that LFA and LIME restrain the illumination components contained in the images and thus lose the naturalness of the image. However, HES and NPEA achieve better performances than the proposed method. The possible reason is that HES equalizes the illumination components rather than removing all illumination information, while NPEA was particularly designed for naturalness preservation. Based on this analysis, we consider that our method can achieve satisfactory performance in image naturalness preservation.

Table 8.

Quantitative measurement results of LOE.
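A minimal sketch of an LOE computation is given below, following the commonly used definition: lightness is taken as the per-pixel maximum over the colour channels, and the measure counts how often the relative lightness order of a pixel pair flips between the original and the optimized image. The subsampling step and the `max_side` parameter are assumptions made here to keep the pairwise comparison cheap; the exact settings used for Table 8 are not stated in the text.

```python
import numpy as np


def lightness(rgb):
    """Lightness as the per-pixel maximum over the colour channels."""
    return np.asarray(rgb, dtype=float).max(axis=2)


def loe(original_rgb, enhanced_rgb, max_side=50):
    """Lightness-order error between an image and its optimized version."""
    lo = lightness(original_rgb)
    le = lightness(enhanced_rgb)
    # Subsample both maps on the same grid (assumed down-sampling scheme).
    step = max(1, int(np.ceil(max(lo.shape) / max_side)))
    lo = lo[::step, ::step].ravel()
    le = le[::step, ::step].ravel()
    # Relative order of every pixel pair: True where pixel i >= pixel j.
    order_o = lo[:, None] >= lo[None, :]
    order_e = le[:, None] >= le[None, :]
    # Count order flips per pixel, then average over all sampled pixels.
    return float(np.logical_xor(order_o, order_e).sum(axis=1).mean())
```

A lower value indicates that the lightness order, and hence the naturalness, is better preserved; an image compared with itself gives zero.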

6.2. Frequency Domain Division Influence

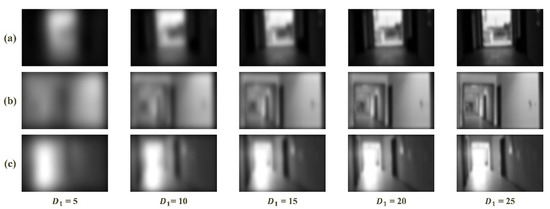

In the frequency domain analysis-based feature-enhancement method, the result of the frequency domain division significantly influences the performance of the method. As stated above, the illumination and textures of a scene are distributed in the low and high frequencies, respectively. To evaluate the influence of the frequency domain division and to test the sensitivity of the visual image, a low-pass filter was executed repeatedly with different radii of the cutoff frequency to acquire visual images with only low-frequency information. Five radius values, 5, 10, 15, 20 and 25, were used.

Figure 11 shows the results of the low-pass filter on images with bright, normal and weak illumination. It is clear that increasing the radius leads to more brightness and textural details. The results under bright, normal and weak illuminations follow the same trend. Moreover, when the radius became larger than 5, the textural details of the three groups of images increased without variations in illumination. As the radius continues to increase, there are no further variations in textures under the bright, normal and weak illuminations once the radius reaches 20, 10 or 15, respectively.

Figure 11.

Visual results of the low-pass filter with a sequence of cutoff radii: (a) weak illumination; (b) normal illumination; and (c) bright illumination.
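The low-pass experiment can be approximated with the sketch below. It assumes an ideal (hard cutoff) circular filter applied to the centred 2D Fourier spectrum of a grayscale image; the type of low-pass filter actually used for Figure 11 is not stated here, so this choice and the function name are assumptions.

```python
import numpy as np


def ideal_low_pass(gray, radius):
    """Keep only frequencies within `radius` of the spectrum centre."""
    gray = np.asarray(gray, dtype=float)
    rows, cols = gray.shape
    # Move the zero-frequency component to the centre before masking.
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    v, u = np.ogrid[:rows, :cols]
    dist = np.hypot(v - rows // 2, u - cols // 2)
    mask = dist <= radius
    # Undo the shift and return to the spatial domain.
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * mask))
    return filtered.real
```

Calling `ideal_low_pass(image, r)` for `r` in `(5, 10, 15, 20, 25)` produces a sequence of low-frequency images comparable in spirit to the one described above.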

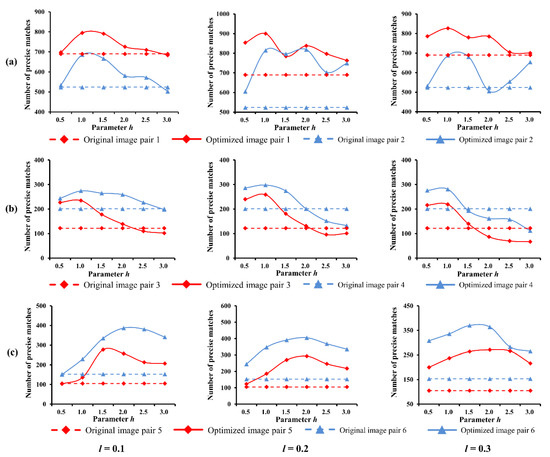

6.3. Parameter Influence

As stated above, the quality of the final image-optimization results strongly depends on the performance of feature enhancement. Specifically, two essential parameters affect the result of feature enhancement in our method: the compression coefficient and the enhancement coefficient. To evaluate the sensitivity to these two parameters, the proposed method was executed repeatedly with different pairwise sets of the two coefficients on six groups of image pairs in the first dataset, which are under bright, normal and weak illuminations. Three values of the compression coefficient (0.1, 0.2 and 0.3) and six values of the enhancement coefficient in the range [0, 3] were used.

The influences on the SIFT-based image matching are shown in Figure 12. The results show that NPM is maintained at a good level within a certain range of the two coefficients. The transformed images generally achieved a higher NPM than the originals, and the two curves under the same conditions followed similar trends. Based on the peaks of the curves, the optimal values of the compression and enhancement coefficients can be found to achieve the best matching performance: for example, 0.2 and 1.0 under weak and normal illumination, and 0.2 and 2.0 under bright illumination.

Figure 12.

Parameter influence on image matching: (a) weak illumination; (b) normal illumination; (c) bright illumination.
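The sensitivity test amounts to a grid search over coefficient pairs. The sketch below assumes a hypothetical callable `evaluate_npm(compression, enhancement)` that optimizes an image pair with the given coefficients, runs the matcher, and returns the NPM; neither this callable nor the function name comes from the original implementation.

```python
from itertools import product


def sweep_parameters(evaluate_npm, compression_values, enhancement_values):
    """Evaluate every coefficient pair and return the best one.

    Returns ((compression, enhancement, npm), results), where `results`
    maps each (compression, enhancement) pair to its NPM.
    """
    results = {
        (c, e): evaluate_npm(c, e)
        for c, e in product(compression_values, enhancement_values)
    }
    # The peak of the NPM surface marks the preferred parameter pair.
    (best_c, best_e), best_npm = max(results.items(), key=lambda kv: kv[1])
    return (best_c, best_e, best_npm), results
```

Plotting `results` per compression value against the enhancement coefficient would give curves of the kind summarized in Figure 12.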

7. Conclusions

In this study, a spatial-frequency domain associated image-optimization method is specially designed to improve illumination-robust image matching. An adaptive luminance equalization model is implemented in the spatial domain to equalize illumination variation instead of removing all illumination information. A frequency domain analysis-based feature-enhancement algorithm is proposed to enhance features without over-enhancement and destruction of naturalness. Our method is then used with image-matching algorithms for illumination-robust image matching. Various experiments were conducted to test the proposed approach. The results show that our method achieves a higher accuracy and efficiency for image matching and a better application in SFM and MVS, compared with the other state-of-the-art methods.

The drawback of the proposed method is that the implementation is dependent on the scenario variation. In the algorithm, the division of the frequency domain and the parameters of feature enhancement are scene dependent, and need to be set adaptively for each scene. Therefore, future studies will improve the parameter setting scheme for automatic applications at high frame rates, and extend our concept to more complex scenarios. In addition, because the influence of the light source position on image intensity is complicated, we will investigate the detailed influence of the variation in illumination direction on the performances of image optimization and image matching, and take more complex illumination into account in future studies.

Author Contributions

Conceptualization and methodology, C.L., S.J.; Validation, H.W., S.J. and D.Z.; Writing—original draft preparation, S.J.; Writing—review and editing, C.L., H.W., and S.J.; Visualization, F.C. and S.Z.; Supervision, H.W.; Funding acquisition, C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science and Technology Major Program (No. 2016YFB0502102, No. 2018YFB1305003), the National Science Foundation of China (No. 41771481, No. 41671451), and the Fundamental Research Funds for the Central Universities of China (No. 22120190195).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cheng, Z.-Q.; Chen, Y.; Martin, R.R.; Lai, Y.-K.; Wang, A. SuperMatching: Feature Matching Using Supersymmetric Geometric Constraints. IEEE Trans. Vis. Comput. Graph. 2013, 19, 1885–1894.

- Xing, J.; Wei, Z.; Zhang, G. A Line Matching Method Based on Multiple Intensity Ordering with Uniformly Spaced Sampling. Sensors 2020, 20, 1639.

- Berman, M.G.; Hout, M.C.; Kardan, O.; Hunter, M.R.; Yourganov, G.; Henderson, J.M.; Hanayik, T.; Karimi, H.; Jonides, J. The Perception of Naturalness Correlates with Low-Level Visual Features of Environmental Scenes. PLoS ONE 2014, 9, e114572.

- Ibarra, F.F.; Kardan, O.; Hunter, M.R.; Kotabe, H.P.; Meyer, F.A.C.; Berman, M.G. Image Feature Types and Their Predictions of Aesthetic Preference and Naturalness. Front. Psychol. 2017, 8, 632.

- Zheng, Z.; Liu, Y.; Huang, B.Q.; Yu, H.W. No-reference stereoscopic images quality assessment method based on monocular superpixel visual features and binocular visual features. J. Vis. Commun. Image Represent. 2020, 71, 102848.

- Yendrikhovskij, S.N.; Blommaert, F.J.J.; de Ridder, H. Color reproduction and the naturalness constraint. Color Res. Appl. 1999, 24, 52–67.

- Leng, C.; Zhang, H.; Li, B.; Cai, G.; Pei, Z.; He, L. Local Feature Descriptor for Image Matching: A Survey. IEEE Access 2019, 7, 6424–6434.

- Kim, Y.; Han, W.; Lee, Y.-H.; Kim, C.G.; Kim, K.J. Object Tracking and Recognition Based on Reliability Assessment of Learning in Mobile Environments. Wirel. Pers. Commun. 2017, 94, 267–282.

- Li, Y.; Hu, Z.; Huang, G.; Li, Z.; Angel Sotelo, M. Image Sequence Matching Using Both Holistic and Local Features for Loop Closure Detection. IEEE Access 2017, 5, 13835–13846.

- Ozyesil, O.; Voroninski, V.; Basri, R.; Singer, A. A survey of structure from motion. Acta Numer. 2017, 26, 305–364.

- Chen, L.; Huang, P.; Cai, J. Extracting and Matching Lines of Low-Textured Region in Close-Range Navigation of Tethered Space Robot. IEEE Trans. Ind. Electron. 2019, 66, 7131–7140.

- Ye, Y.; Shan, J. A local descriptor based registration method for multispectral remote sensing images with non-linear intensity differences. ISPRS J. Photogramm. Remote Sens. 2014, 90, 83–95.

- Fan, J.; Wu, Y.; Li, M.; Liang, W.; Cao, Y. SAR and Optical Image Registration Using Nonlinear Diffusion and Phase Congruency Structural Descriptor. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5368–5379.

- Ye, Y.; Shan, J.; Hao, S.; Bruzzone, L.; Qin, Y. A local phase based invariant feature for remote sensing image matching. ISPRS J. Photogramm. Remote Sens. 2018, 142, 205–221.

- Yu, Q.; Zhou, S.; Jiang, Y.; Wu, P.; Xu, Y. High-Performance SAR Image Matching Using Improved SIFT Framework Based on Rolling Guidance Filter and ROEWA-Powered Feature. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 920–933.

- Trujillo, J.C.; Munguia, R.; Urzua, S.; Guerra, E.; Grau, A. Monocular Visual SLAM Based on a Cooperative UAV-Target System. Sensors 2020, 20, 3531.

- Uygur, I.; Miyagusuku, R.; Pathak, S.; Moro, A.; Yamashita, A.; Asama, H. Robust and Efficient Indoor Localization Using Sparse Semantic Information from a Spherical Camera. Sensors 2020, 20, 4128.

- Yang, Y.; Li, Z.G.; Wu, S.Q. Low-Light Image Brightening via Fusing Additional Virtual Images. Sensors 2020, 20, 4614.

- Voicu, L.I.; Myler, H.R.; Weeks, A.R. Practical considerations on color image enhancement using homomorphic filtering. J. Electron. Imaging 1997, 6, 108–113.

- Bi, G.-L.; Xu, Z.-J.; Zhao, J.; Sun, Q. Multispectral image enhancement based on irradiation-reflection model and bounded operation. Acta Phys. Sin. 2015, 64, 100701.

- Lu, L.; Ichimura, S.; Moriyama, T.; Yamagishi, A.; Rokunohe, T. A System to Detect Small Amounts of Oil Leakage with Oil Visualization for Transformers using Fluorescence Recognition. IEEE Trans. Dielectr. Electr. Insul. 2017, 24, 1249–1255.

- Mouats, T.; Aouf, N.; Richardson, M.A. A Novel Image Representation via Local Frequency Analysis for Illumination Invariant Stereo Matching. IEEE Trans. Image Process. 2015, 24, 2685–2700.

- Rana, A.; Valenzise, G.; Dufaux, F. An Evaluation of HDR Image Matching under Extreme Illumination Changes; Visual Communications & Image Processing IEEE: Piscataway, NJ, USA, 2016.

- Jacobs, D.W.; Belhumeur, P.N.; Basri, R. Comparing Images under Variable Illumination. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Santa Barbara, CA, USA, 25 June 1998; p. 610.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Morel, J.-M.; Yu, G. ASIFT: A New Framework for Fully Affine Invariant Image Comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

- Zhang, S.L.; Tian, Q.; Lu, K.; Huang, Q.M.; Gao, W. Edge-SIFT: Discriminative Binary Descriptor for Scalable Partial-Duplicate Mobile Search. IEEE Trans. Image Process. 2013, 22, 2889–2902.

- Sedaghat, A.; Mohammadi, N. Illumination-Robust remote sensing image matching based on oriented self-similarity. ISPRS J. Photogramm. Remote Sens. 2019, 153, 21–35.

- Wan, X.; Liu, J.G.; Yan, H.S.; Morgan, G.L.K. Illumination-invariant image matching for autonomous UAV localisation based on optical sensing. ISPRS J. Photogramm. Remote Sens. 2016, 119, 198–213.

- Wachinger, C.; Navab, N. Entropy and Laplacian images: Structural representations for multi-modal registration. Med. Image Anal. 2012, 16, 1–17.

- Ma, J.Y.; Jiang, X.Y.; Fan, A.X.; Jiang, J.J.; Yan, J.C. Image Matching from Handcrafted to Deep Features: A Survey; International Journal of Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020.

- Li, Y.F.; Wang, H.J. An Efficient and Robust Method for Detecting Region Duplication Forgery Based on Non-parametric Local Transforms. In Proceedings of the IEEE International Congress on Image & Signal Processing, Chongqing, China, 16–18 October 2012.

- Luan, X.; Yu, F.; Zhou, H.; Li, X.; Dalei, S.; Bingwei, W. Illumination-robust area-based stereo matching with improved census transform. In Proceedings of the IEEE International Conference on Measurement, Harbin, China, 18–20 May 2012.

- Hill, P.R.; Bhaskar, H.; Al-Mualla, M.E.; Bull, D.R.; IEEE. Improved illumination invariant homomorphic filtering using the dual tree complex wavelet transform. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing Proceedings, Shanghai, China, 20–25 March 2016; pp. 1214–1218.

- Tang, F.; Lim, S.H.; Chang, N.L.; Tao, H.; IEEE. A Novel Feature Descriptor Invariant to Complex Brightness Changes. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, 20–25 June 2009.

- Kharbat, M.; Aouf, N.; Tsourdos, A.; White, B. Robust Brightness Description for Computing Optical Flow. In Proceedings of the British Machine Conference, Leeds, UK, 1–4 September 2008.

- Ye, Y.X.; Shen, L.; Hao, M.; Wang, J.C.; Xu, Z. Robust Optical-to-SAR Image Matching Based on Shape Properties. IEEE Geosci. Remote Sens. Lett. 2017, 14, 564–568.

- Gijsenij, A.; Gevers, T.; van de Weijer, J. Computational Color Constancy: Survey and Experiments. IEEE Trans. Image Process. 2011, 20, 2475–2489.

- Van de Weijer, J.; Gevers, T.; Gijsenij, A. Edge-based color constancy. IEEE Trans. Image Process. 2007, 16, 2207–2214.

- Gijsenij, A.; Gevers, T.; van de Weijer, J. Improving Color Constancy by Photometric Edge Weighting. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 918–929.

- Heo, Y.S.; Lee, K.M.; Lee, S.U. Joint Depth Map and Color Consistency Estimation for Stereo Images with Different Illuminations and Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1094–1106.

- Deepa; Jyothi, K.; IEEE. A Robust and Efficient Pre Processing Techniques for Stereo Images. In Proceedings of the 2017 International Conference on Electrical, Electronics, Communication, Computer, and Optimization Techniques (ICEECCOT), Mysuru, India, 15–16 December 2017; pp. 89–92.

- Thamizharasi, A.; Jayasudha, J.S. An Image Enhancement Technique for Poor Illumination Face Images. In International Proceedings on Advances in Soft Computing, Intelligent Systems and Applications, Asisa 2016; Reddy, M.S., Viswanath, K., Prasad, K.M.S., Eds.; Springer: Singapore, 2018; Volume 628, pp. 167–179.

- Tan, S.F.; Isa, N.A.M. Exposure Based Multi-Histogram Equalization Contrast Enhancement for Non-Uniform Illumination Images. IEEE Access 2019, 7, 70842–70861.

- Lecca, M.; Torresani, A.; Remondino, F. On Image Enhancement for Unsupervised Image Description and Matching; Springer: Cham, Switzerland, 2019; pp. 82–92.

- Guo, X.J.; Li, Y.; Ling, H.B. LIME: Low-Light Image Enhancement via Illumination Map Estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

- Li, H.C.; Man, Y.Y. Robust Multi-Source Image Registration for Optical Satellite Based on Phase Information. Photogramm. Eng. Remote Sens. 2016, 82, 865–878.

- Ye, Z.; Tong, X.H.; Zheng, S.Z.; Guo, C.C.; Gao, S.; Liu, S.J.; Xu, X.; Jin, Y.M.; Xie, H.; Liu, S.C.; et al. Illumination-Robust Subpixel Fourier-Based Image Correlation Methods Based on Phase Congruency. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1995–2008.

- Wang, S.H.; Zheng, J.; Hu, H.M.; Li, B. Naturalness Preserved Enhancement Algorithm for Non-Uniform Illumination Images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

- Ali, R.; Szilagyi, T.; Gooding, M.; Christlieb, M.; Brady, M. On the Use of Low-Pass Filters for Image Processing with Inverse Laplacian Models. J. Math. Imaging Vis. 2012, 43, 156–165.

- Lee, S.; Kwon, H.; Han, H.; Lee, G.; Kang, B. A Space-Variant Luminance Map based Color Image Enhancement. IEEE Trans. Consum. Electron. 2010, 56, 2636–2643.

- Lee, S.L.; Tseng, C.C.; IEEE. Image Enhancement Using DCT-Based Matrix Homomorphic Filtering Method. In Proceedings of the 2016 IEEE Asia Pacific Conference on Circuits and Systems (APCCAS), Jeju, Korea, 25–28 October 2016; pp. 1–4.

- Plichoski, G.F.; Chidambaram, C.; Parpinelli, R.S. Optimizing a Homomorphic Filter for Illumination Compensation In Face Recognition Using Population-based Algorithms. In Proceedings of the 2017 Workshop of Computer Vision (WVC), Natal, Brazil, 30 October–1 November 2017; pp. 78–83.

- Orcioni, S.; Paffi, A.; Camera, F.; Apollonio, F.; Liberti, M. Automatic decoding of input sinusoidal signal in a neuron model: High pass homomorphic filtering. Neurocomputing 2018, 292, 165–173.

- Kaur, K.; Jindal, N.; Singh, K. Improved homomorphic filtering using fractional derivatives for enhancement of low contrast and non-uniformly illuminated images. Multimed. Tools Appl. 2019, 78, 27891–27914.

- Zhang, C.M.; Liu, W.B.; Xing, W.W. Color image enhancement based on local spatial homomorphic filtering and gradient domain variance guided image filtering. J. Electron. Imaging 2018, 27, 063026.

- Fan, B.; Wu, F.C.; Hu, Z.Y. Robust line matching through line-point invariants. Pattern Recognit. 2012, 45, 794–805.

- Zhang, L.L.; Koch, R. An efficient and robust line segment matching approach based on LBD descriptor and pairwise geometric consistency. J. Vis. Commun. Image Represent. 2013, 24, 794–805.

- Hossein-Nejad, Z.; Nasri, M. An adaptive image registration method based on SIFT features and RANSAC transform. Comput. Electr. Eng. 2017, 62, 524–537.

- Mohammed, H.M.; El-Sheimy, N. A Descriptor-less Well-Distributed Feature Matching Method Using Geometrical Constraints and Template Matching. Remote Sens. 2018, 10, 747.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).