Unsupervised End-to-End Deep Model for Newborn and Infant Activity Recognition

Abstract

1. Introduction

2. Related Works

3. Data Gathering and Proposed Method

3.1. Data Gathering

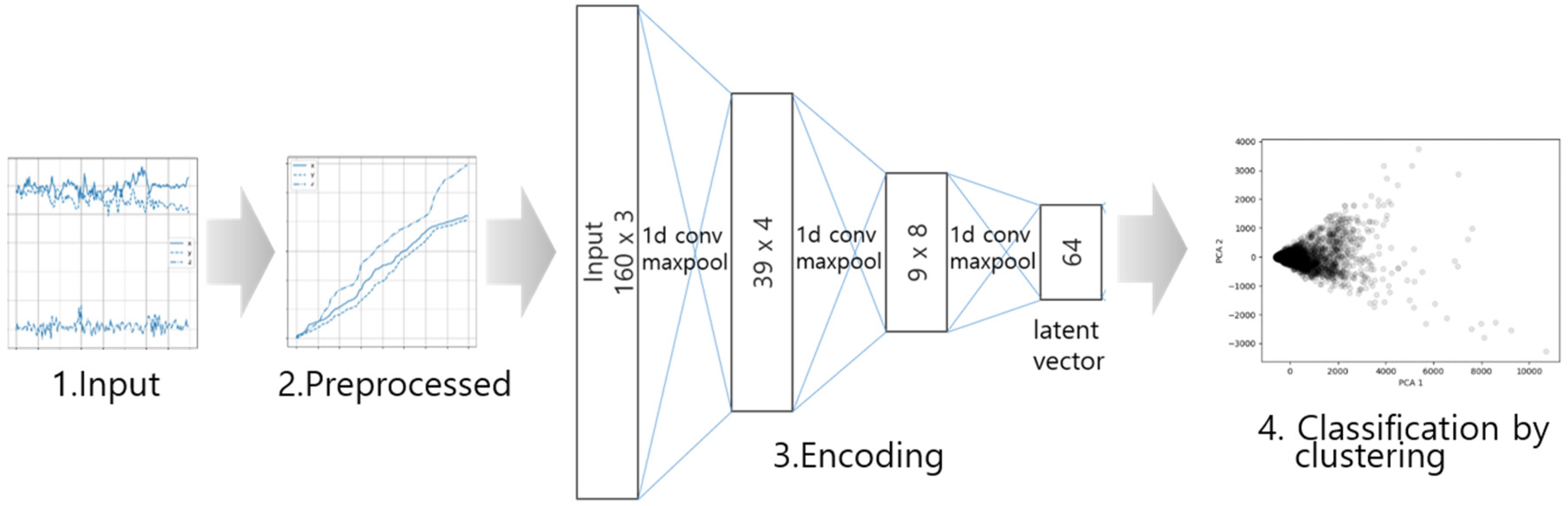

3.2. Proposed Method Using Autoencoder and K-Means Clustering

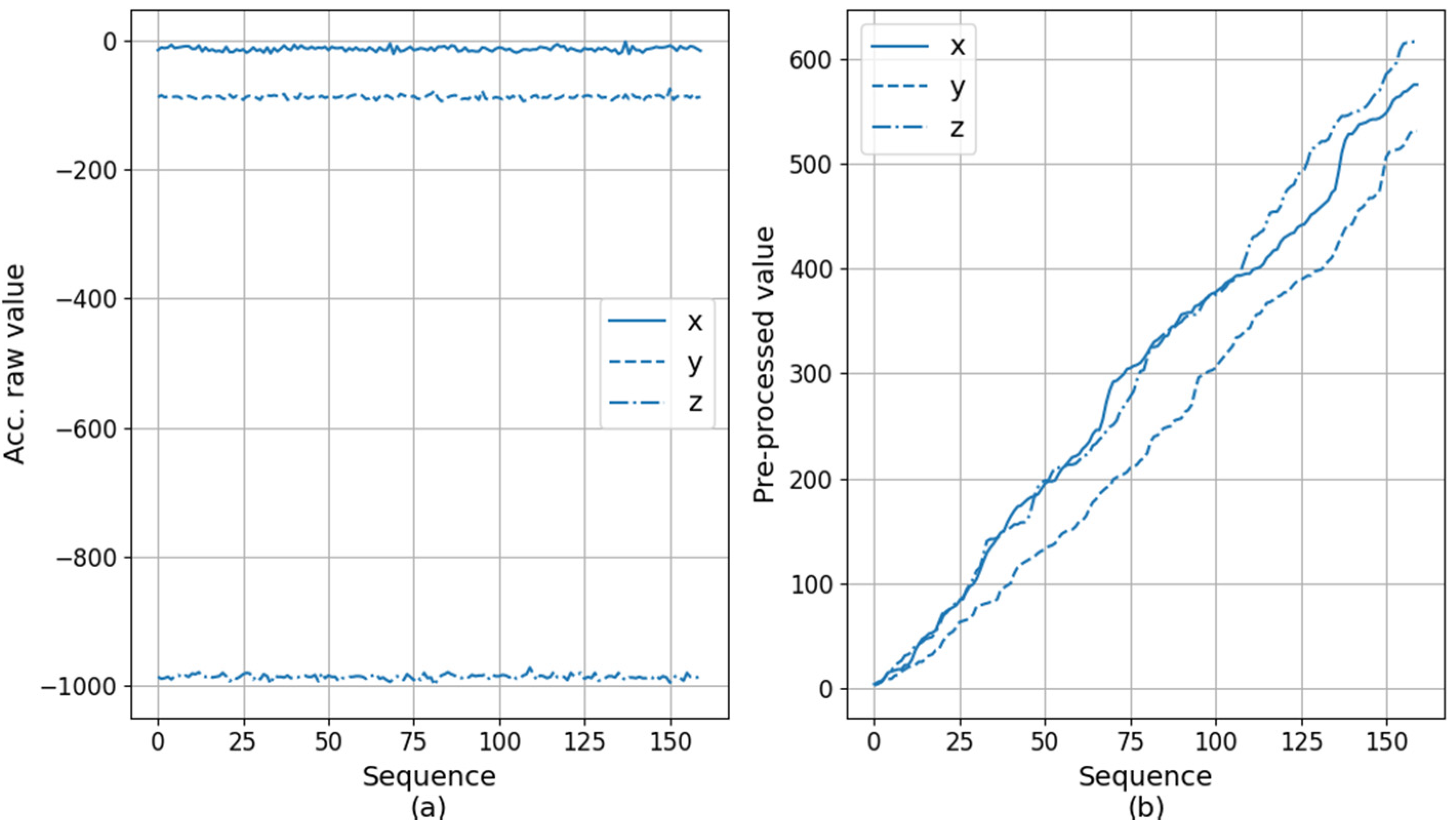

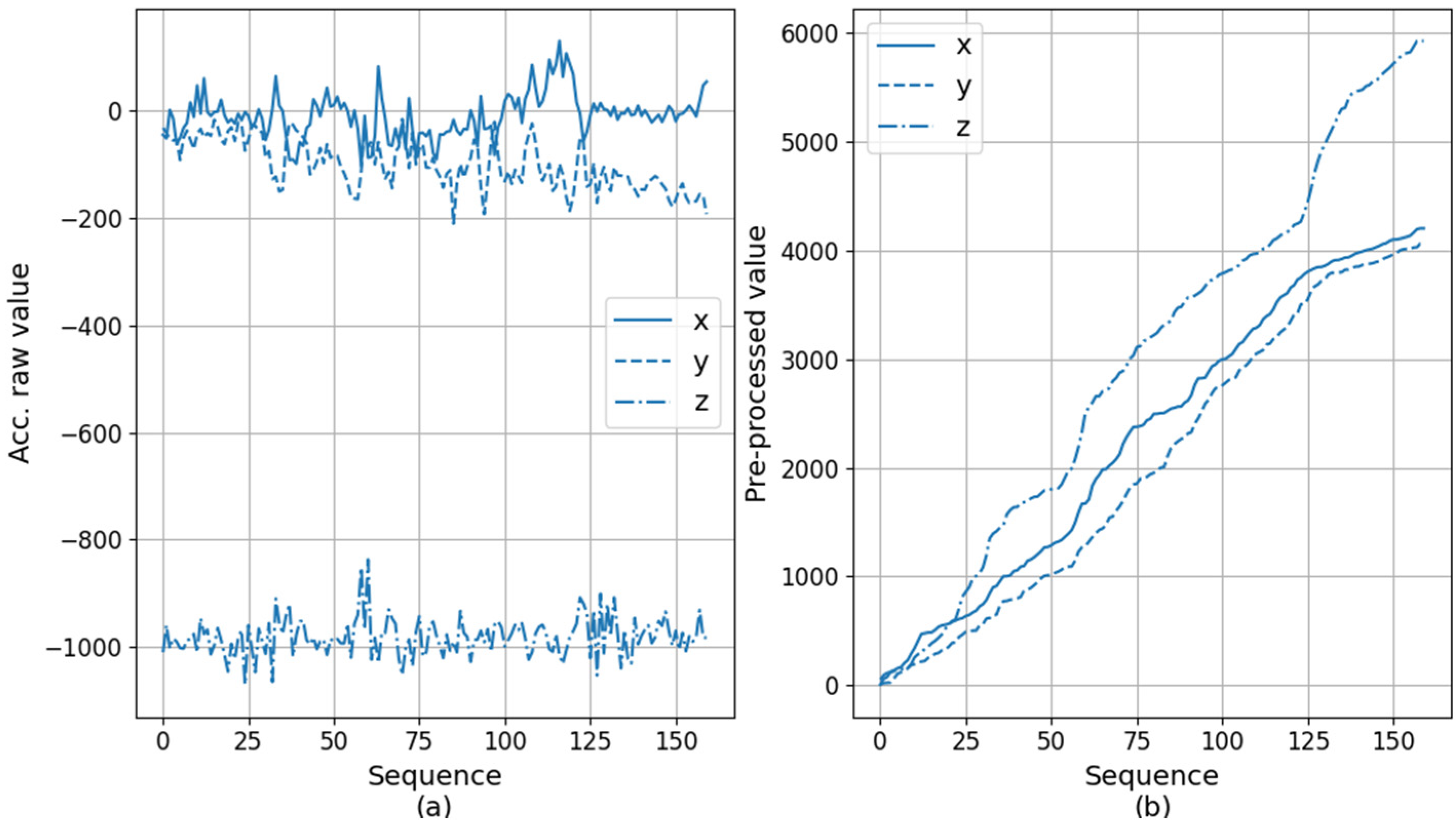

3.2.1. Preprocessing

3.2.2. Autoencoder

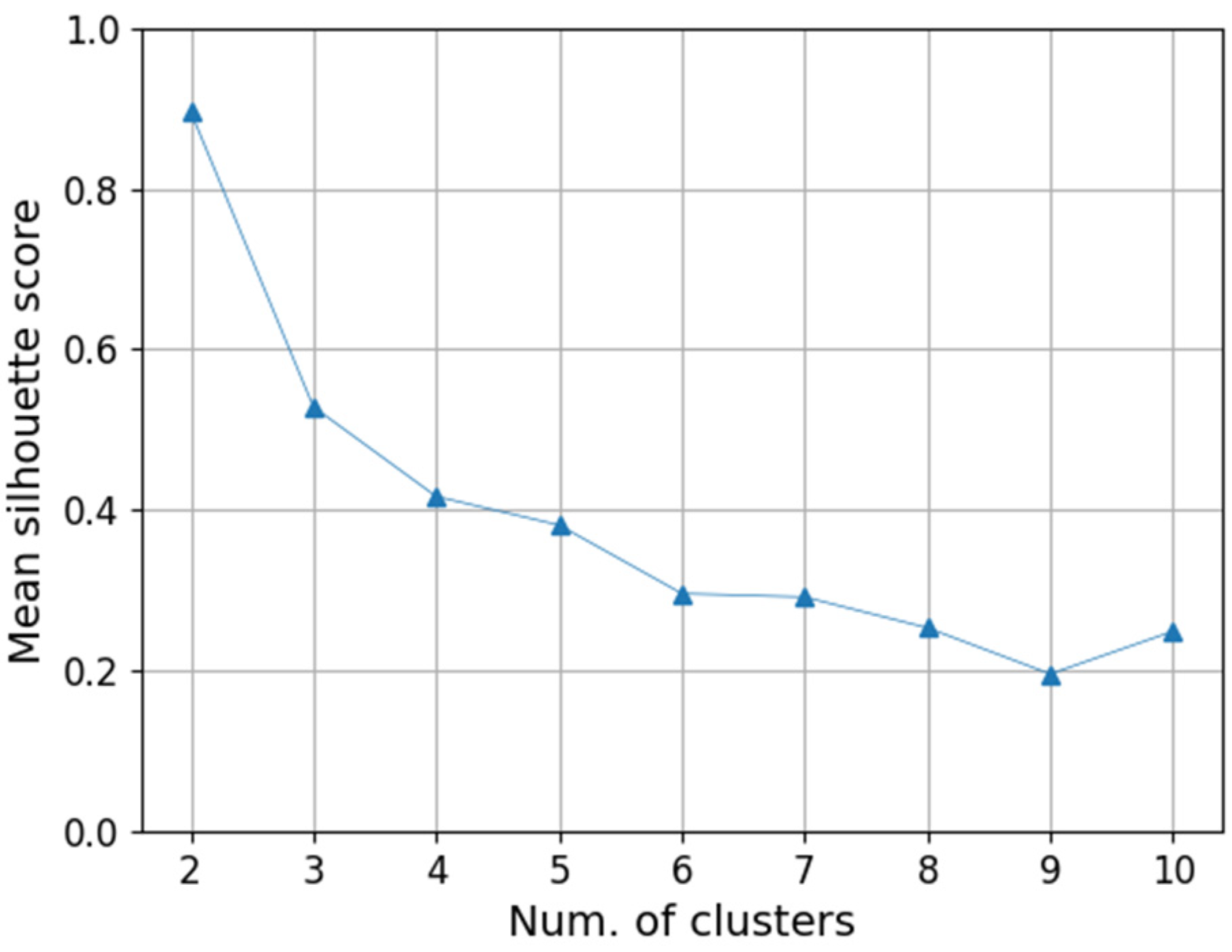

3.2.3. Clustering
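The section title names k-means as the clustering step. The sketch below shows Lloyd's algorithm in plain Python. It assumes the inputs are the autoencoder's latent feature vectors given as lists of floats. The function names, the random initialization, the seed, and the iteration cap are illustrative choices, not settings reported here.

```python
import random
from typing import List, Sequence, Tuple


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    # Squared Euclidean distance between two feature vectors
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Sequence[float]], k: int,
           max_iter: int = 100, seed: int = 0) -> Tuple[List[int], List[List[float]]]:
    """Lloyd's k-means. Returns (cluster index per point, centroids)."""
    rng = random.Random(seed)
    # Initialize the centroids with k randomly chosen input points
    centroids = [list(p) for p in rng.sample(points, k)]
    assignments: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # converged: no point changed cluster
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(axis) / len(members) for axis in zip(*members)]
    return assignments, centroids


# Hypothetical 2-D latent vectors forming two well-separated groups
latent = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (4.9, 5.2)]
labels, centers = kmeans(latent, k=2)
print(labels)
```

Cluster indices are arbitrary. Naming a cluster (for example, as sleeping) still requires inspecting which windows it contains.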

4. Experiments for Performance Evaluation

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Ethical Statement


| Sample | Gender | Age (Days) | Video Length (min.) | Video Size (MB) | Sensor Data Size (MB) | Note |
|---|---|---|---|---|---|---|
| (a) | F | 360 | 20 | 341 | 2.05 | |
| (b) | M | 720 | 95 | 840 | 9.06 | |
| (c) | M | 180 | 10 | 75 | 1 | |
| (d) | F | 9 | 125 | 622 | 12.2 | |
| (e) | F | 2 | 95 | 601 | 9.81 | |
| (f) | M | 2 | 105 | 836 | 10.2 | |
| (g) | M | 16 | 135 | 923 | 13.6 | |
| (h) | M | 3 | 150 | 972 | 14.9 | |
| (i) | M | 4 | 85 | 459 | 8.62 | |
| (j) | M | 180 | 5 | 40 | 0.5 | Same as (c) |
| Total ((d)–(j)) | | | 700 | 4453 | 69.83 | |
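The Total row can be checked against the per-sample rows. The short script below uses only the values printed in the table and sums the three numeric columns over a chosen set of samples. The helper name `column_totals` is illustrative. Summing all ten rows gives 825 min, 5709 MB, and 81.94 MB. The printed totals equal the sums over samples (d) to (j).

```python
# (sample, video length [min], video size [MB], sensor data size [MB]),
# copied from the dataset table above
ROWS = [
    ("a", 20, 341, 2.05),
    ("b", 95, 840, 9.06),
    ("c", 10, 75, 1.0),
    ("d", 125, 622, 12.2),
    ("e", 95, 601, 9.81),
    ("f", 105, 836, 10.2),
    ("g", 135, 923, 13.6),
    ("h", 150, 972, 14.9),
    ("i", 85, 459, 8.62),
    ("j", 5, 40, 0.5),
]


def column_totals(samples):
    """Sum the three numeric columns over the given sample letters."""
    wanted = set(samples)
    subset = [row for row in ROWS if row[0] in wanted]
    length = sum(row[1] for row in subset)
    video = sum(row[2] for row in subset)
    sensor = round(sum(row[3] for row in subset), 2)  # drop floating-point noise
    return length, video, sensor


print(column_totals("abcdefghij"))  # all ten rows: (825, 5709, 81.94)
print(column_totals("defghij"))     # rows (d) to (j): (700, 4453, 69.83)
```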

| Parameter | Meaning |
|---|---|
| | Original signal vector of accelerometer [x, y, z] |
| | Mean-subtracted signal vector of [x, y, z] |
| | Absolute difference between neighboring signal vectors of [x, y, z] |
| A | Cumulative sum vector |
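The table lists the signals derived from each accelerometer window but not how they are chained. The sketch below assumes one plausible chain: subtract the per-axis window mean, take the absolute difference between neighboring readings, then accumulate those differences into a cumulative sum. The function names and this ordering are assumptions for illustration, not the authors' stated procedure.

```python
from typing import List, Sequence

Window = Sequence[Sequence[float]]  # readings [x, y, z], oldest first


def mean_subtract(window: Window) -> List[List[float]]:
    # Remove the constant per-axis offset of the window
    n = len(window)
    means = [sum(axis) / n for axis in zip(*window)]
    return [[v - m for v, m in zip(reading, means)] for reading in window]


def abs_neighbor_diff(window: Window) -> List[List[float]]:
    # |s[t] - s[t-1]| per axis; the output is one reading shorter than the input
    return [[abs(cur - prev) for prev, cur in zip(a, b)]
            for a, b in zip(window, window[1:])]


def cumulative_sum(window: Window) -> List[List[float]]:
    # Running per-axis total over time
    total = [0.0] * len(window[0])
    out = []
    for reading in window:
        total = [t + v for t, v in zip(total, reading)]
        out.append(total)
    return out


def preprocess(window: Window) -> List[List[float]]:
    # Assumed chain: mean-subtracted -> absolute differences -> cumulative sum
    return cumulative_sum(abs_neighbor_diff(mean_subtract(window)))


print(preprocess([[0, 0, 0], [2, 0, 0], [1, 0, 3]]))
# [[2.0, 0.0, 0.0], [3.0, 0.0, 3.0]]
```

Because the cumulative sum of absolute differences never decreases, its final value acts as a rough measure of total movement within the window, under this assumed ordering.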

| No. | Layer | # of Param. |
|---|---|---|
| 1 | Conv1d | 28 |
| 2 | ReLU/MaxPool1d | - |
| 3 | Conv1d | 72 |
| 4 | ReLU/MaxPool1d | - |
| 5 | Conv1d | 272 |
| 6 | BatchNorm1d | 32 |
| 7 | ReLU/MaxPool1d | - |
| 8 | ConvTranspose1d | 520 |
| 9 | ReLU | - |
| 10 | ConvTranspose1d | 99 |
| 11 | ReLU | - |
| 12 | Linear | 10,400 |
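The parameter counts in the layer table follow from the standard weight-plus-bias formulas for these PyTorch-style layers. The arithmetic below checks one set of channel and kernel sizes that reproduces every count, assuming bias terms are enabled and groups=1. These shapes are inferred, not reported. The Linear count in particular is not unique: 103 inputs by 100 outputs and 99 inputs by 104 outputs both give 10,400.

```python
def conv1d_params(c_in: int, c_out: int, k: int) -> int:
    # weight (c_out, c_in, k) plus one bias per output channel
    return c_out * c_in * k + c_out


def conv_transpose1d_params(c_in: int, c_out: int, k: int) -> int:
    # weight (c_in, c_out, k) plus one bias per output channel
    return c_in * c_out * k + c_out


def batchnorm1d_params(features: int) -> int:
    # learnable scale and shift per feature (running statistics are not parameters)
    return 2 * features


def linear_params(n_in: int, n_out: int) -> int:
    # weight (n_out, n_in) plus one bias per output
    return n_in * n_out + n_out


# Hypothetical shapes, chosen only because they reproduce the table's counts
LAYERS = [
    ("1 Conv1d(3 -> 4, k=2)", conv1d_params(3, 4, 2), 28),
    ("3 Conv1d(4 -> 8, k=2)", conv1d_params(4, 8, 2), 72),
    ("5 Conv1d(8 -> 16, k=2)", conv1d_params(8, 16, 2), 272),
    ("6 BatchNorm1d(16)", batchnorm1d_params(16), 32),
    ("8 ConvTranspose1d(16 -> 8, k=4)", conv_transpose1d_params(16, 8, 4), 520),
    ("10 ConvTranspose1d(8 -> 3, k=4)", conv_transpose1d_params(8, 3, 4), 99),
    ("12 Linear(103 -> 100)", linear_params(103, 100), 10_400),
]

for name, computed, reported in LAYERS:
    print(f"{name}: {computed} (table: {reported})")
print("total:", sum(computed for _, computed, _ in LAYERS))  # total: 11423
```

Under these assumed shapes the encoder maps the three accelerometer axes to 16 channels and the decoder maps them back to three, which is consistent with an autoencoder that reconstructs its [x, y, z] input.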

| Label | 1st Classification | 2nd Classification | Final Classification | Description |
|---|---|---|---|---|
| 0 | Sleeping | Sleeping | Sleeping | Subjects are sleeping without substantial movement. |
| 1 | Non-sleeping | Active movement | Strong movement | Subjects are crying and struggling, or are in agony. |
| 2 | Non-sleeping | Active movement | Weak movement | Subjects are awake and moving in a comfortable state. |
| 3 | Non-sleeping | External force movement | External force movement | An external force from a nurse or caregiver is applied to the subject. |
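The label table describes a tree: a sleeping/non-sleeping split, then active versus external-force movement, then strong versus weak movement. A small lookup structure such as the one below (all names hypothetical) encodes that tree and makes it easy to merge final labels back into a coarser level, for instance to score only the first-level sleeping/non-sleeping decision.

```python
from typing import Dict, List, Sequence, Tuple

# Path through the classification columns -> final label, as laid out in the table
HIERARCHY: Dict[Tuple[str, ...], int] = {
    ("Sleeping",): 0,
    ("Non-sleeping", "Active movement", "Strong movement"): 1,
    ("Non-sleeping", "Active movement", "Weak movement"): 2,
    ("Non-sleeping", "External force movement"): 3,
}


def label_for(path: Sequence[str]) -> int:
    """Final label for a complete path through the hierarchy."""
    return HIERARCHY[tuple(path)]


def labels_under(prefix: Sequence[str]) -> List[int]:
    """All final labels that share a coarser-level decision, e.g. 'Non-sleeping'."""
    key = tuple(prefix)
    return sorted(label for path, label in HIERARCHY.items()
                  if path[:len(key)] == key)


print(labels_under(["Non-sleeping"]))                     # [1, 2, 3]
print(labels_under(["Non-sleeping", "Active movement"]))  # [1, 2]
```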

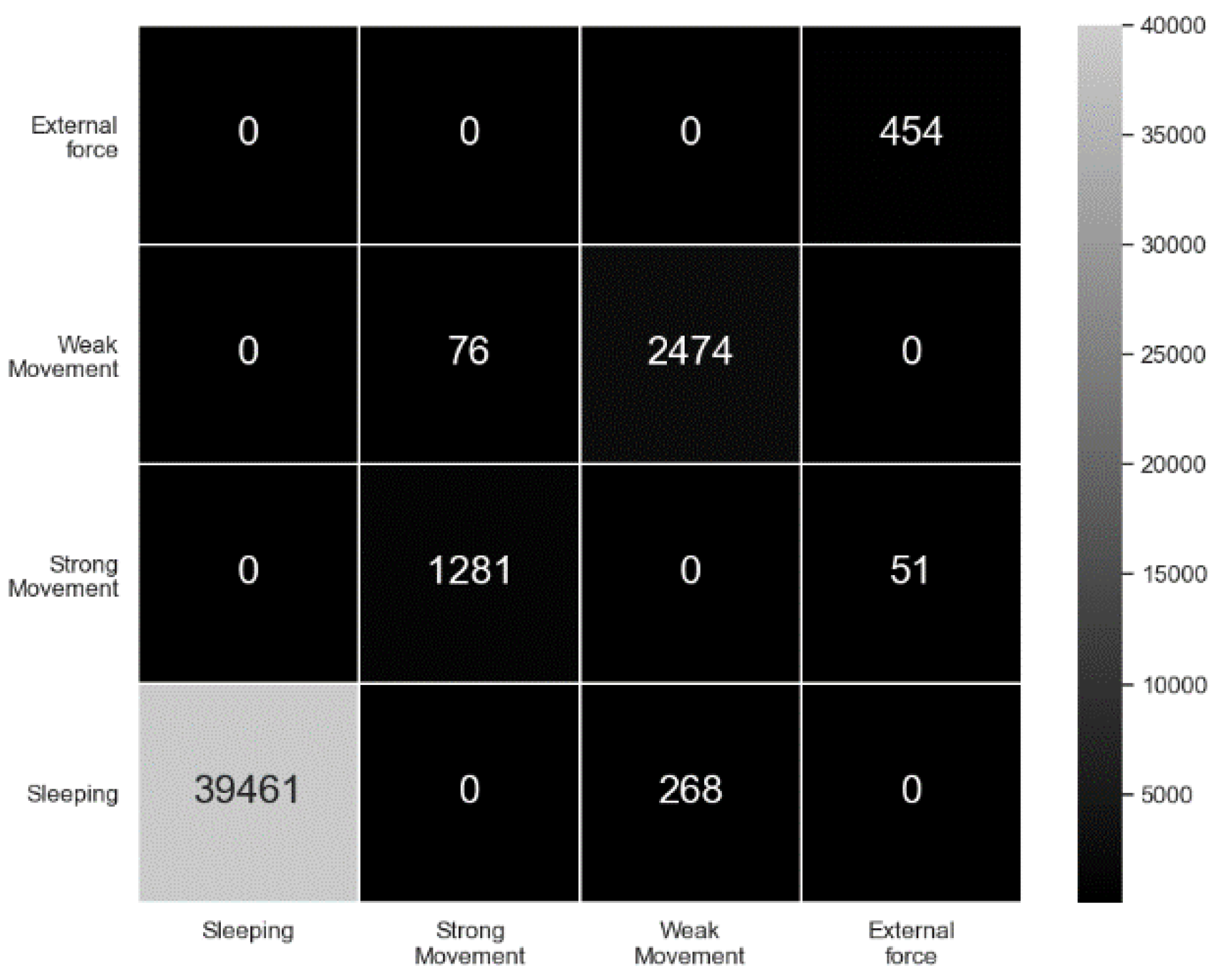

| Activity | Precision | Recall | Specificity | Balanced Accuracy | F1-Score |
|---|---|---|---|---|---|
| Sleeping | 0.99 | 1 | 0.94 | 0.97 | 0.9966 |
| Strong movement | 0.96 | 0.94 | 0.99 | 0.97 | 0.9527 |
| Weak movement | 0.97 | 0.90 | 0.99 | 0.95 | 0.9349 |
| External force | 1 | 0.89 | 1 | 0.95 | 0.9468 |
| Avg. (std.) | 0.98 (0.018) | 0.93 (0.049) | 0.98 (0.027) | 0.96 (0.011) | 0.95 (0.026) |
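The per-activity columns of the results table are presumably one-vs-rest statistics computed from a multi-class confusion matrix. The sketch below, with hypothetical function names and an illustrative matrix rather than the study's data, shows the formulas. Balanced accuracy is the mean of recall and specificity, which agrees with the table (for sleeping, (1 + 0.94) / 2 = 0.97). The last lines reproduce the precision entry of the Avg. (std.) row as a mean and a sample (n - 1) standard deviation.

```python
import statistics
from typing import Dict, List


def _ratio(num: float, den: float) -> float:
    # Return 0.0 instead of raising when a class is never predicted or never present
    return num / den if den else 0.0


def per_class_metrics(cm: List[List[int]], cls: int) -> Dict[str, float]:
    """One-vs-rest metrics; cm[i][j] counts windows of true class i predicted as j."""
    total = sum(map(sum, cm))
    tp = cm[cls][cls]
    fn = sum(cm[cls]) - tp                  # true cls, predicted as another class
    fp = sum(row[cls] for row in cm) - tp   # predicted cls, true class differs
    tn = total - tp - fn - fp
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)            # also called sensitivity
    specificity = _ratio(tn, tn + fp)
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "balanced_accuracy": (recall + specificity) / 2,
        "f1": _ratio(2 * precision * recall, precision + recall),
    }


# Hypothetical two-class confusion matrix (not the paper's data)
print(per_class_metrics([[8, 2], [1, 9]], cls=0))

# "Avg. (std.)" row: mean and sample standard deviation of the per-class values,
# shown here for the precision column of the table
precision_column = [0.99, 0.96, 0.97, 1.0]
print(round(statistics.mean(precision_column), 2),
      round(statistics.stdev(precision_column), 3))  # 0.98 0.018
```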


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jun, K.; Choi, S. Unsupervised End-to-End Deep Model for Newborn and Infant Activity Recognition. Sensors 2020, 20, 6467. https://doi.org/10.3390/s20226467
