1. Introduction

Traditional Chinese sport has been a compulsory component of Physical Education (PE) in universities in China since 2002 [1]. Although there are various traditional Chinese sports to choose from, 76.7% of universities taught martial arts in their PE curriculum [2]. In 2016, the Communist Party of China and the Chinese government adopted the ‘Healthy China 2030’ national health plan [3]. In this plan, Baduanjin was identified as a traditional Chinese sport that was promoted and supported by the government. This resulted in increased Baduanjin teaching and research in universities throughout the country [4].

Although universities in China must incorporate traditional Chinese sports into their PE curriculum, there have been problems with implementation. These include a high student-teacher ratio, unengaging teaching and learning resources, and an incomplete assessment system. These three problems have made it harder to meet the teaching quality requirements set by the Ministry of Education of the People’s Republic of China [5,6]. Although the high student-teacher ratio has been a problem since 2005, it has yet to be resolved [7,8]. Because of the large number of students in each class, teachers are not able to provide individual guidance to each student. As a result, teachers cannot correct all the students’ mistakes, and students remain unaware of their incorrect movements [9].

In recent years, motion capture (Mocap) has been widely applied in fields such as clinical and sports biomechanics to distinguish between different types of motion or to analyze differences between motions [10,11]. Studies have also applied Mocap in PE for adaptive motion analysis, evaluating the motion quality of learners and feeding the information back to help them detect and correct inaccurate motions. Koji Yamada et al. [12] developed a Mocap-based system for Frisbee learners. The researchers used a Kinect device to obtain 3D motion data of learners during exercise, detect their pre-motion, motion, and post-motion phases, and display feedback to improve their motions. The results showed that the system could effectively improve the motions of learners [12]. Chen et al. [13] applied Kinect in Taichi courses in universities. The 3D motion data of novice students captured by Kinect were compared with those of an expert to evaluate motion quality, and students were informed of their results. The research showed that the Kinect-based motion evaluation system accelerated learning by novice Taichi students. More recently, Amani Elaoud et al. [14] used Kinect V2 to obtain red, green, blue and depth (RGB-D) motion data. They used these data to compare novice students and experts on the central angles of points that affect throwing performance in handball. These studies illustrate how researchers have used various categories of Mocap.

Based on their technical characteristics, Mocap applications in PE can be divided into four categories: optoelectronic systems (OMS) [15], electromagnetic systems (EMS), image processing systems (IMS), and inertial sensor measurement systems (IMU) [16]. Of these four categories, OMS is the most accurate and is considered the gold standard in motion capture [16,17]. However, OMS requires a large number of high-precision, high-speed cameras, which inevitably raises issues of cost, coordination, and manual operation [18]. Moreover, OMS cannot capture the movement of objects when the marker is obscured [19]. These deficiencies have limited the practical application of OMS in PE. The advantage of EMS over OMS is that it can measure motion data of a specific point of the body regardless of visual occlusion [20]. However, EMS is susceptible to interference from the electromagnetic environment, which distorts measurement data [21]. Also, EMS has to be kept within a certain distance of the base station, which limits its range of use [22]. IMS has better accuracy than EMS and an improved range compared to OMS [16]. Most studies have used low-cost IMS (such as the Kinect device) to capture and analyze motion in PE. However, low-cost IMS has some disadvantages, namely low accuracy, insufficient environmental adaptability, and a limited range of motion because the Kinect sensor has a small field of view [16]. High-performance IMS does not have these shortcomings: it generally has favorable accuracy and a good measurement range. However, it requires expensive high-quality and/or high-speed cameras, which has limited its application [16].

Given the disadvantages of low-cost IMS in PE, applying IMU (a motion capture device consisting of an accelerometer, a gyroscope, and a magnetometer) may mitigate these problems [23]. In recent years, technological development has reduced the cost of IMU, making its use in PE feasible. The validity of IMUs for assessing motion accuracy has been confirmed. Poitras et al. [24] confirmed the criterion validity of a commercial IMU system (MVN Awinda system, Xsens) by comparing it to a gold standard optoelectronic system (Vicon). Compared to low-cost IMS, IMU has advantages in environmental adaptability and offers a sufficient range of motion. The IMU does not require any base station to work, which means it is the most mobile of the available motion capture systems [16]. Moreover, IMU can measure high-speed movements and is non-invasive for the user, making it attractive for PE [16,25].

However, there are a few issues with capturing motions using IMU. First, IMU sensors are sensitive to nearby metal objects, which distort the measurement data [16]. Therefore, participants should wear as few metal objects as possible when capturing motion using IMU. Fortunately, in traditional Chinese martial arts such as Baduanjin, exercisers should, in principle, wear traditional Chinese costumes without any metal, which minimizes the impact of metal on IMU sensors. Second, our research used a common IMU system, Perception Neuron 2.0, which uses data cables to connect all sensors to a transmitter. Although users cannot put on this wired IMU system by themselves, this does not affect the accuracy of the data. Moreover, the latest IMU systems overcome this problem by having each sensor transmit its data directly to the external receiving terminal [26].

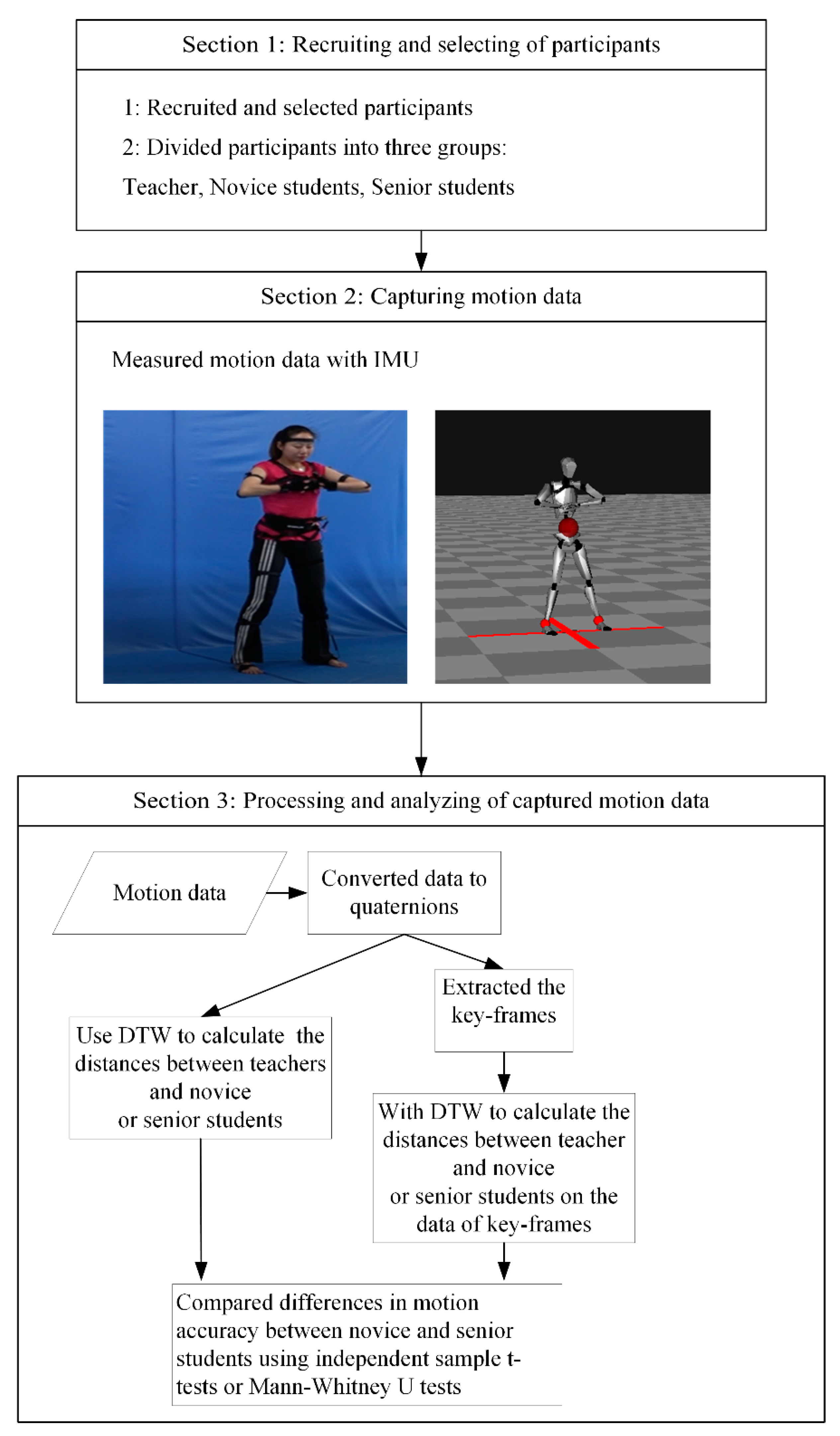

Therefore, we propose applying IMU in Baduanjin by developing a system that assesses and records the quality of motions to help teachers and students identify inaccurate motions. Using IMU, students can learn Baduanjin independently after class and teachers can evaluate students’ progress, which is useful for formative assessment. Such a system may alleviate the current problems faced in PE classes in Chinese universities. For this purpose, we explored the feasibility of using an IMU to distinguish the difference in motion accuracy of Baduanjin between novice and senior students.

4. Discussion

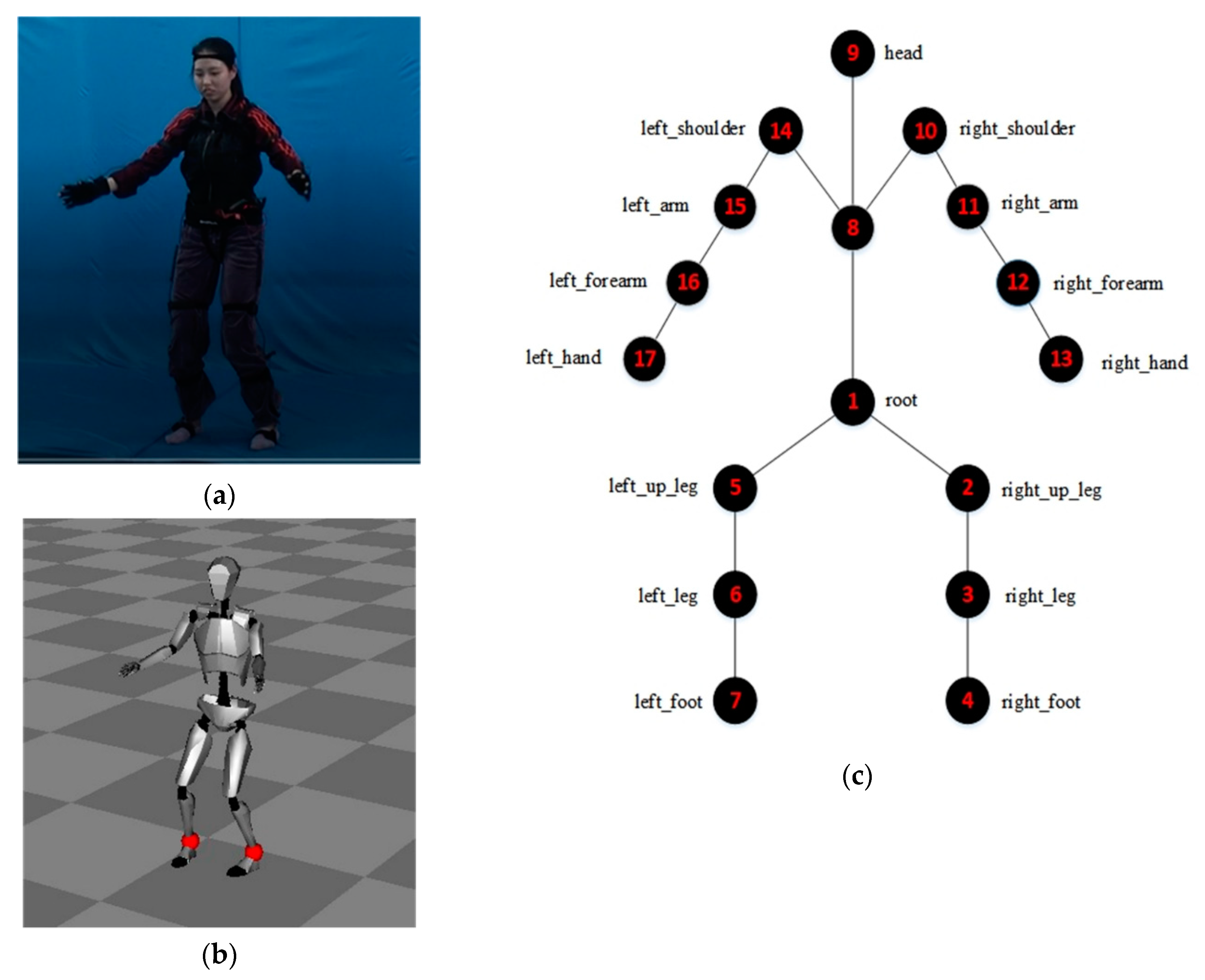

When using mathematical methods, the overall differences between the motion data of novice students and the teacher were larger than those between the motion data of senior students and the teacher on the eight motions of Baduanjin. Because the motion data analyzed in the experiment are the rotation data of specific skeleton points measured by the IMU, taking the teacher’s motions as the standard, the results show that the motions of senior students were closer to the standard motions. Therefore, IMU can effectively distinguish the differences in motion accuracy in Baduanjin between novice and senior students.
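
To make the comparison above concrete, the following is a minimal sketch of how a student's rotation data at one skeleton point could be scored against the teacher's. It is an illustration under stated assumptions, not the method used in this study: it assumes each frame is a unit quaternion `(w, x, y, z)`, uses the rotation angle between quaternions as the per-frame distance, and aligns sequences of different lengths with dynamic time warping. The function names and the sample angles are hypothetical.

```python
import math


def quat_angle(q1, q2):
    """Rotation angle in radians between two unit quaternions (w, x, y, z)."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    # Clamp to 1.0 to guard against floating-point overshoot before acos.
    return 2.0 * math.acos(min(1.0, dot))


def dtw_distance(seq_a, seq_b, dist=quat_angle):
    """Accumulated dynamic time warping cost between two frame sequences."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def rot_z(deg):
    """Unit quaternion for a rotation of `deg` degrees about the z axis."""
    half = math.radians(deg) / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))


# Hypothetical single-joint recordings (made-up angles, not study data).
teacher = [rot_z(a) for a in (0, 30, 60, 90)]
senior = [rot_z(a) for a in (0, 28, 45, 62, 88)]
novice = [rot_z(a) for a in (0, 15, 35, 50, 60)]

senior_gap = dtw_distance(senior, teacher)
novice_gap = dtw_distance(novice, teacher)
print(f"senior vs teacher: {senior_gap:.3f} rad")
print(f"novice vs teacher: {novice_gap:.3f} rad")
```

In this toy example the novice sequence never reaches the teacher's final rotation, so its accumulated distance is larger than the senior's. With real recordings the same score would be computed per skeleton point and per motion.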

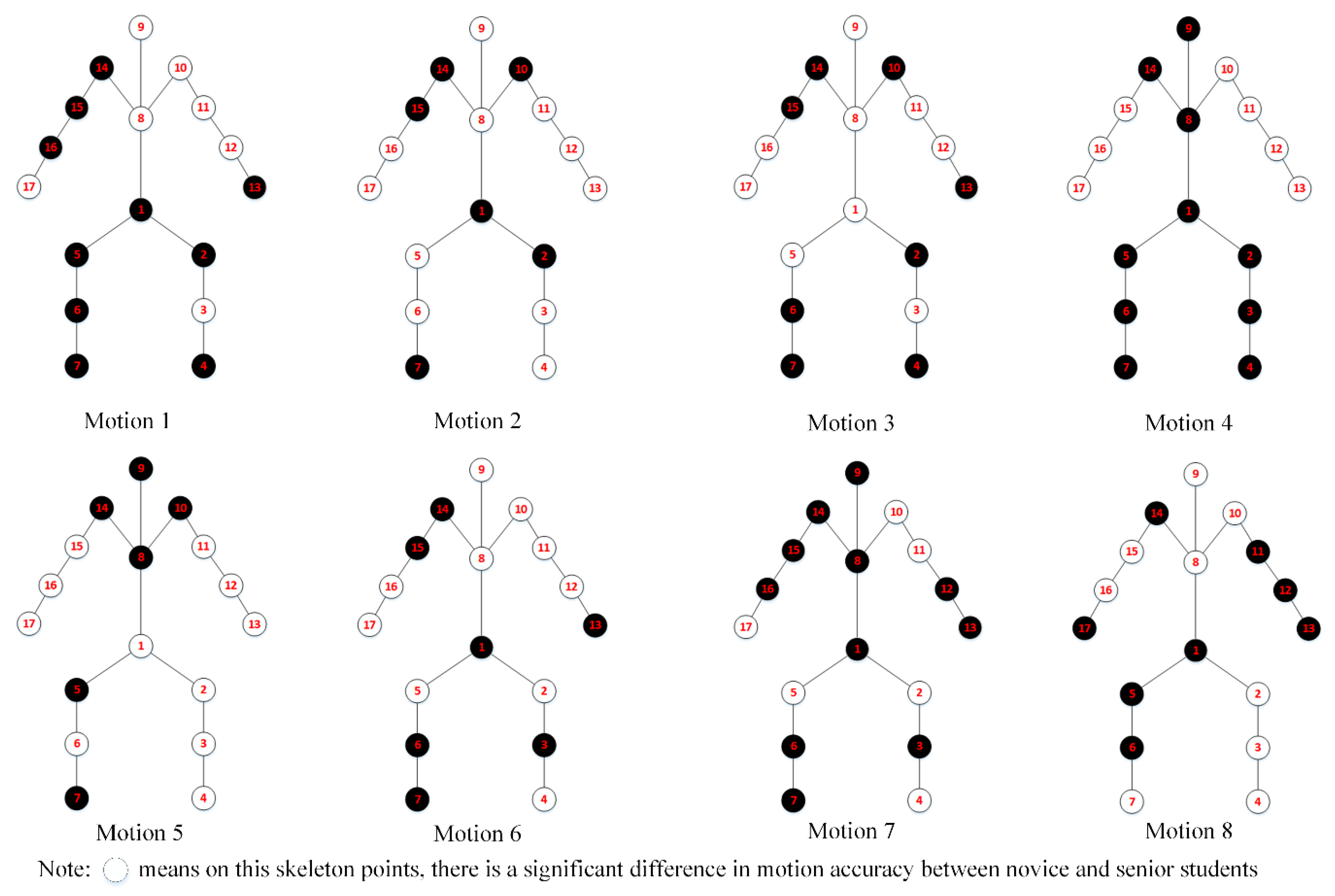

When using the original frames to evaluate the differences at 17 skeleton points in the eight motions between novice and senior students, the results show that the skeleton points at which the two groups differed in motion accuracy varied by motion. For Motion 1, the differences between the two groups were mainly concentrated in the head-spine segment and upper limbs, especially the right upper limb. This means that the motion errors of novice students relative to senior students were mainly concentrated at these joints. The results are consistent with the common motion errors described in the official book: “When holding the palms up, the head is not raised enough, or the arms are not raised enough” [30]. For Motion 4, however, the official book describes the common motion errors as: “Rotating head and arm are insufficient” [30]. This description indicates that the main errors occur in the head-spine segment and bilateral upper limbs, yet significant differences were found at skeleton points in the bilateral upper limbs but not in the head-spine segment. This discrepancy may be related to the small number of participants in this study.

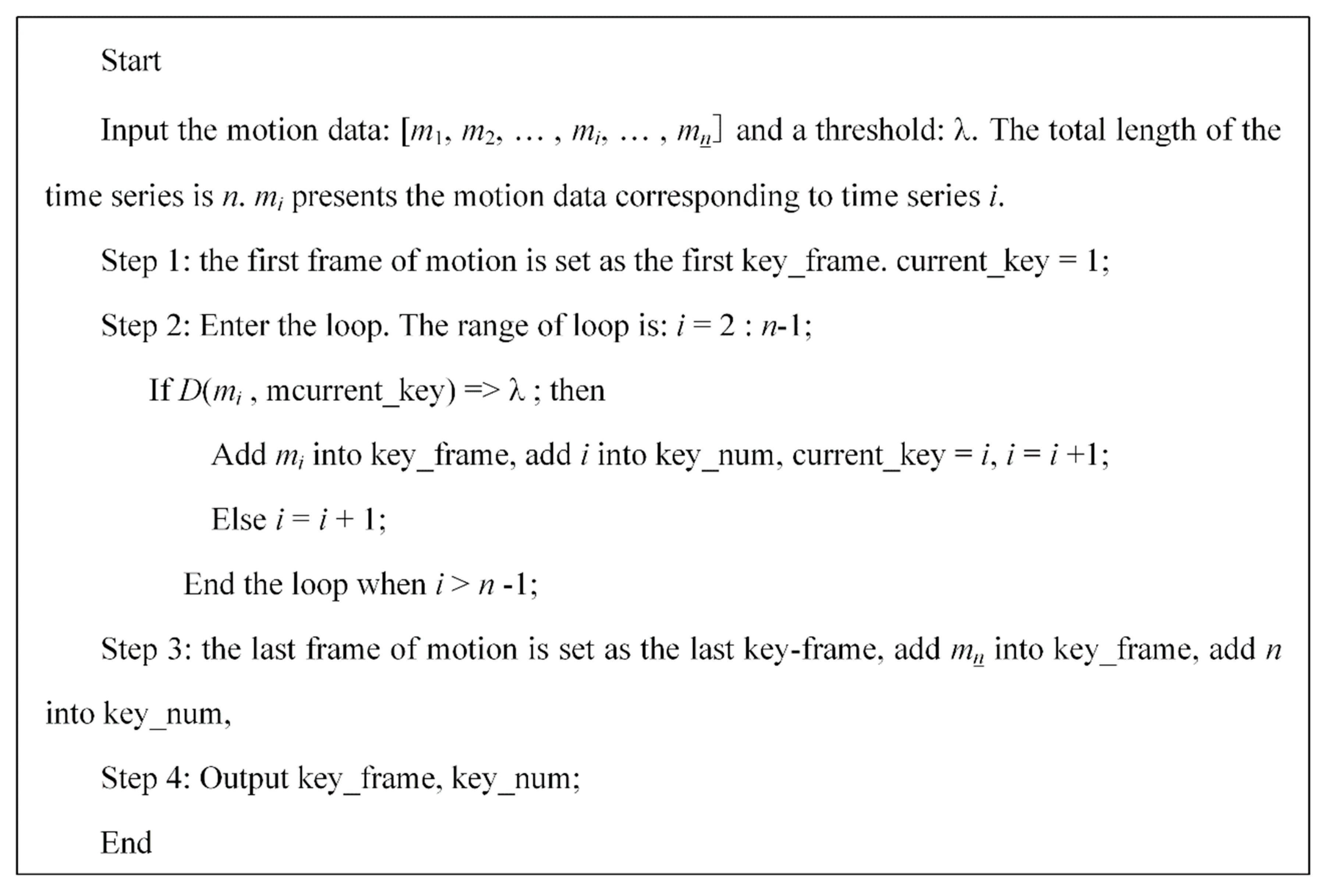

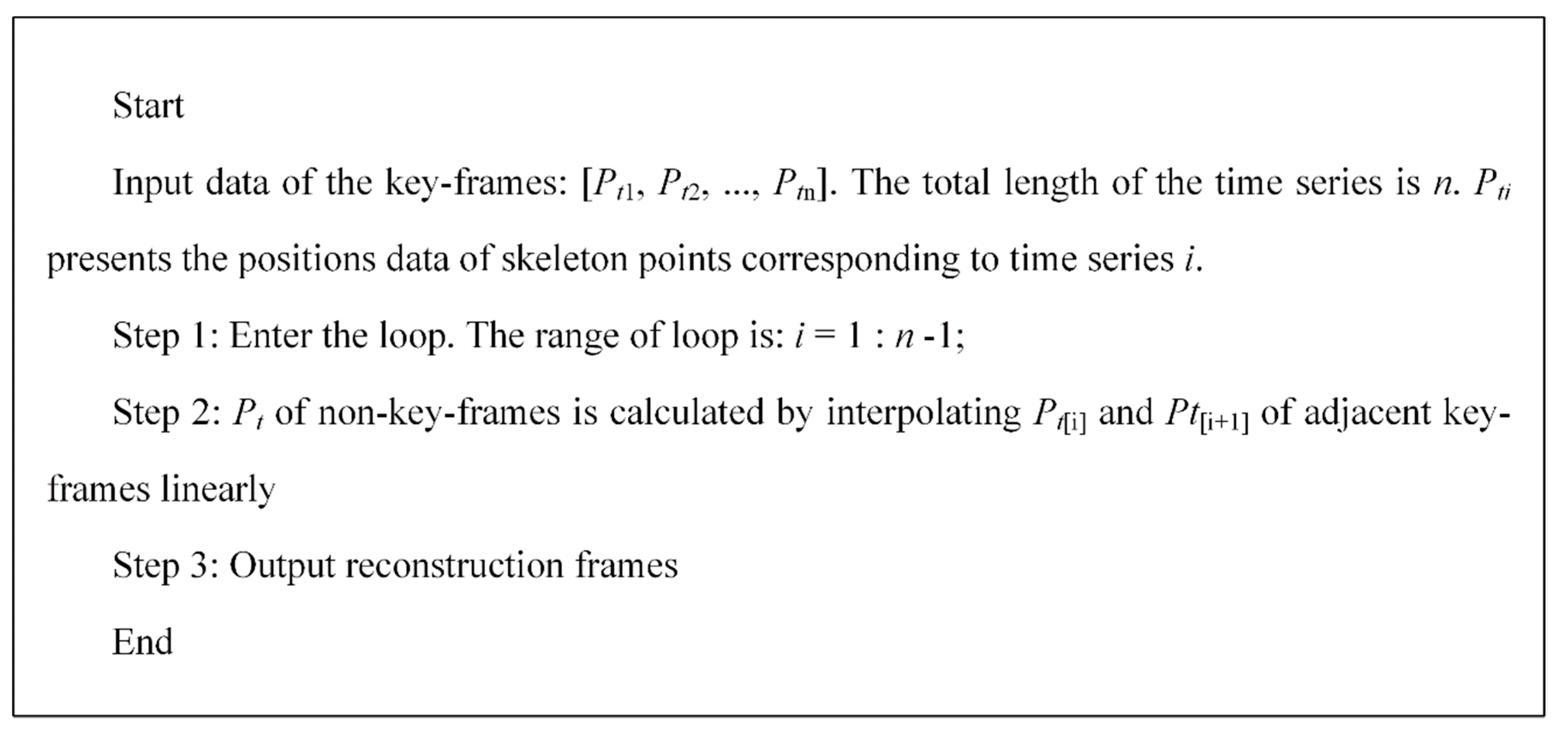

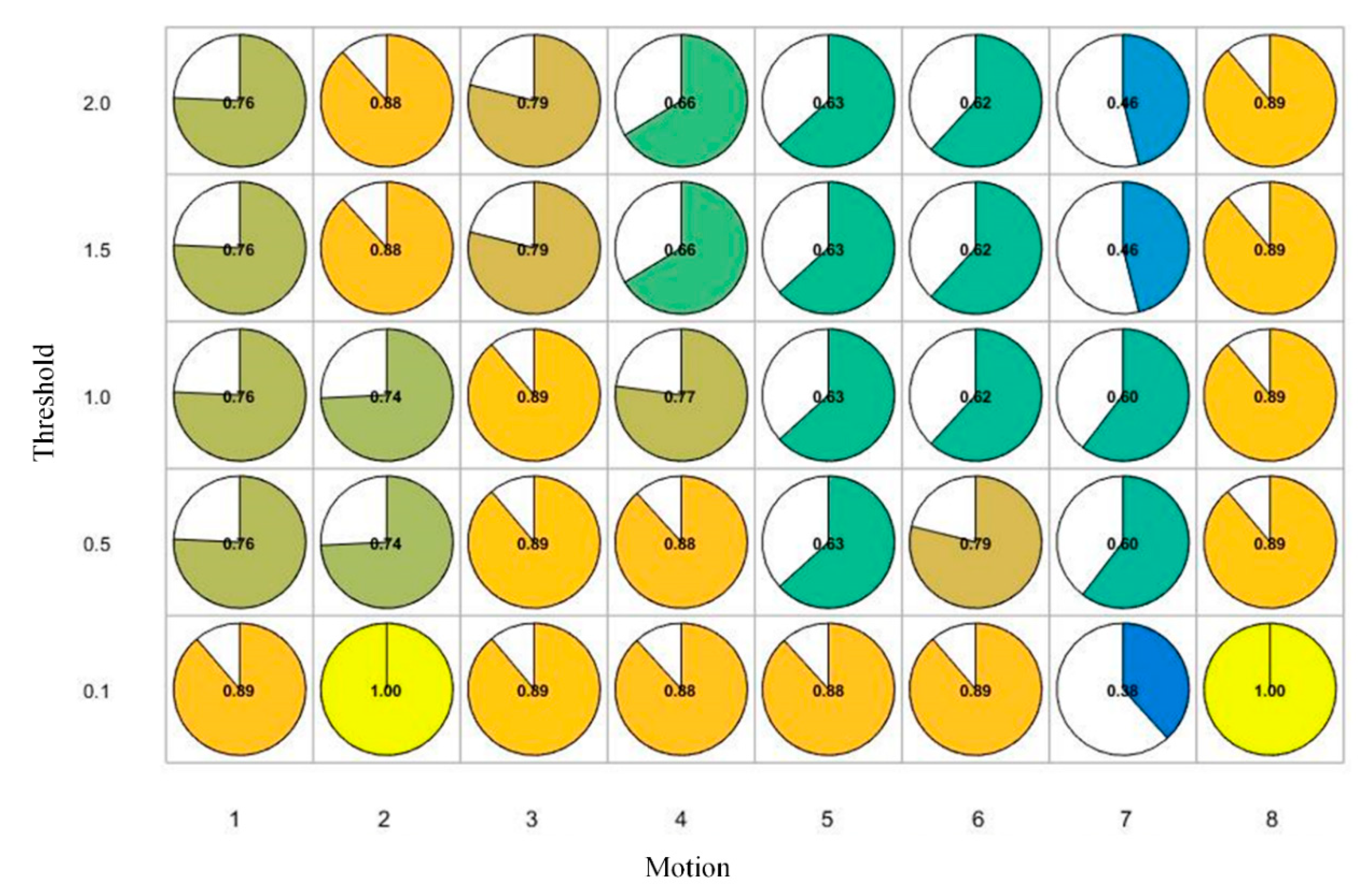

In this study, we also used two methods to extract key-frames. Extracting key-frames can effectively compress the raw data and reduce the required storage space [40,41]. This matters because the repetitiveness of exercises in the teaching process generates an extremely large amount of raw data. Our results show that both key-frame extraction methods can effectively compress the raw data. We also found that data processing was faster on key-frames. However, with key-frames based on inter-frame pitch, the compression rates differed across motions, and the differences at skeleton points were not consistent with the results on the original frames. In contrast, there was high consistency between the results on key-frames based on clustering and the results on the original frames, especially when the compression rate was 15%. Therefore, key-frames based on clustering can replace the original frames when evaluating the motion accuracy of Baduanjin, decreasing data storage space and processing time.
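
As an illustration of clustering-based key-frame extraction at a target compression rate, the sketch below picks one representative frame per cluster. It is a simplified stand-in under stated assumptions, not the extraction method used in this study: it assumes each frame is a tuple of numeric features, uses plain k-means with evenly spaced initial centers, and sets the number of clusters to `rate` times the number of frames. The function names and the synthetic motion are hypothetical.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two frames."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def keyframes_by_clustering(frames, rate, iters=20):
    """Return sorted indices of key-frames, at most round(rate * len(frames))."""
    n = len(frames)
    k = max(1, round(rate * n))
    # Deterministic initialisation: evenly spaced frames as starting centers.
    centers = [frames[(2 * c + 1) * n // (2 * k)] for c in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for f in frames:
            nearest = min(range(k), key=lambda c: sq_dist(f, centers[c]))
            groups[nearest].append(f)
        # Recompute each center as the mean of its group; an empty group
        # keeps its previous center.
        centers = [
            tuple(sum(col) / len(g) for col in zip(*g)) if g else centers[c]
            for c, g in enumerate(groups)
        ]
    # The key-frame for a cluster is the real frame closest to its center.
    keys = {min(range(n), key=lambda i: sq_dist(frames[i], ctr)) for ctr in centers}
    return sorted(keys)


# Synthetic two-feature motion with 40 frames (made-up data, not study data).
frames = [
    (90 * math.sin(math.pi * i / 39), 45 * math.cos(math.pi * i / 39))
    for i in range(40)
]
keys = keyframes_by_clustering(frames, rate=0.15)
achieved_rate = len(keys) / len(frames)
print(keys, f"{achieved_rate:.0%}")
```

Because two clusters can map to the same nearest frame, the achieved rate can be slightly below the target. Any accuracy analysis would then run on `[frames[i] for i in keys]` instead of the full recording.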

However, the small number of participants in our study limits the application of the results. As the participants were from a university in China, the results might only be suitable for university students in China because different populations have variations in anatomical characteristics, physiological characteristics, and athletic ability.

Based on our results, IMU can effectively distinguish the difference in the motion accuracy of Baduanjin between novice and senior students. Therefore, in future work, we can develop a system using IMU to evaluate the motion quality of students and provide feedback to teachers and students. Such a system would be able to assist teachers in correcting errors in students’ motions immediately.