Dual Arm Co-Manipulation Architecture with Enhanced Human–Robot Communication for Large Part Manipulation

Abstract

1. Introduction

2. Related Work

3. Proposed Architecture

- When humans transport large parts together, both actors agree (implicitly or explicitly) on an approximate trajectory, which becomes the basis for the transportation process. Following this premise, the robots manage a nominal trajectory that can be defined manually by users or generated automatically (e.g., using artificial vision).

- When humans transport large parts along a previously agreed path, either of them can deform this nominal trajectory to adapt the process to an unexpected event. In these cases, both actors adapt their movements in a coordinated way without any prior knowledge, based only on the sensed forces. Impedance control [23,24] is proposed to mimic this behavior.

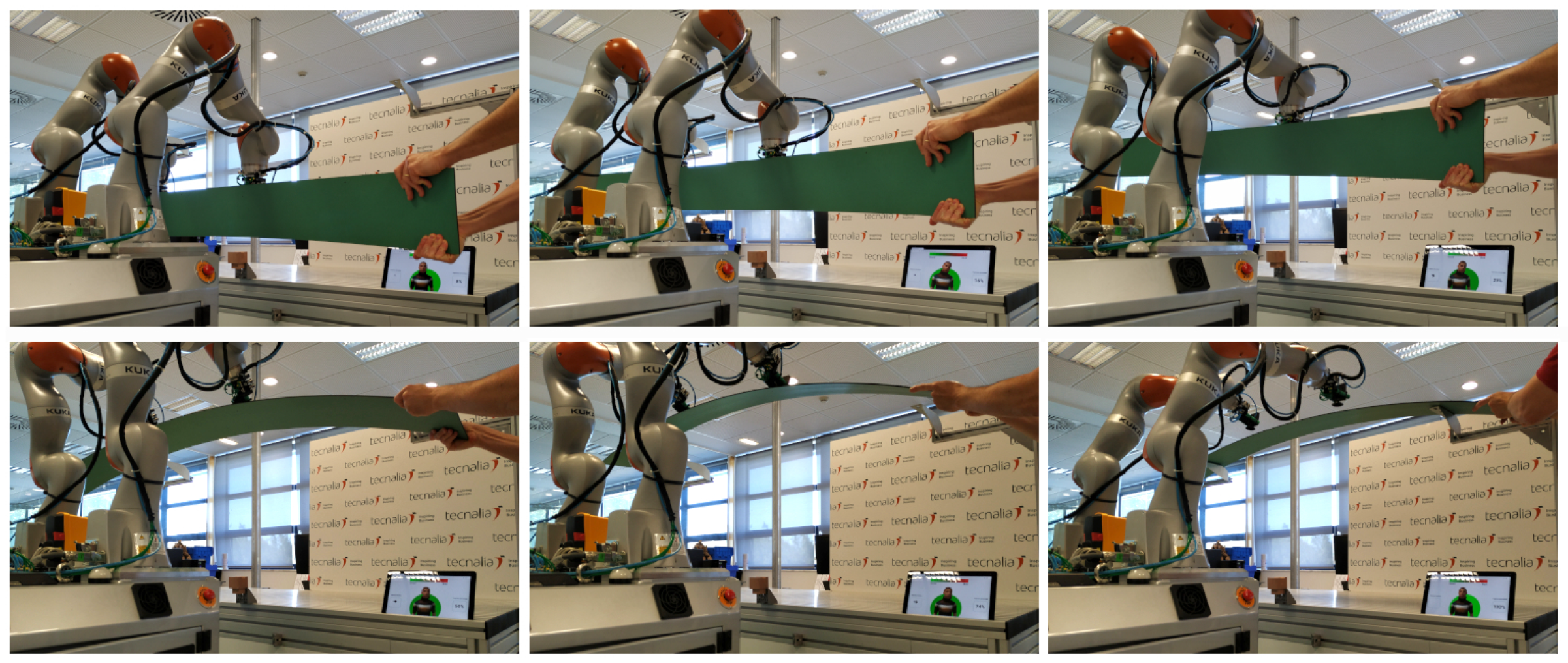

- The premise of this implementation is that the robots act as assistants to the human. Accordingly, the robots advance along the trajectory only when the operator moves the part along the defined path, so the operator always plays the master role in the co-manipulation task.

- As the system is a dual arm robot, both arms need to move with a degree of coordination. This coordination is nevertheless looser than in traditional robotics, because large part manipulation may require each robot to adapt to uncertainties such as object deformation or the human factor.

- During part manipulation, humans exchange feedback beyond force, such as voice commands or gestures. How to include these cues in the robotic system needs to be investigated.
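The compliant behavior described in the premises above is commonly modeled in the impedance control literature [23,24] as a virtual mass-spring-damper that links the deviation e from the nominal setpoint to the sensed external force, M·ë + D·ė + K·e = F_ext. The one-dimensional sketch below illustrates this generic relation only. The gains, the time step and the function name `simulate_impedance` are illustrative assumptions, not the parameters or the controller of the system described here.

```python
def simulate_impedance(forces, mass=2.0, damping=40.0, stiffness=200.0, dt=0.001):
    """Integrate M*e'' + D*e' + K*e = f_ext with semi-implicit Euler.

    forces: external force samples [N], one per time step of length dt [s].
    Returns the deviation e [m] from the nominal setpoint at each step.
    All gains are hypothetical values chosen only for illustration.
    """
    e, e_dot = 0.0, 0.0
    deviations = []
    for f in forces:
        # Acceleration of the virtual mass under spring, damper and external force.
        e_ddot = (f - damping * e_dot - stiffness * e) / mass
        e_dot += e_ddot * dt
        e += e_dot * dt
        deviations.append(e)
    return deviations


# The operator pushes with 10 N for 3 s, then releases the part for 3 s.
forces = [10.0] * 3000 + [0.0] * 3000
deviation = simulate_impedance(forces)
print(f"deviation while pushed:  {deviation[2999]:.3f} m")
print(f"deviation after release: {deviation[-1]:.3f} m")
```

With these assumed gains the virtual system is critically damped: the setpoint yields by roughly F/K while the force is applied and returns to the nominal trajectory once the force disappears, which is the qualitative behavior the premises call for.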

- Guidance Control Layer: This initial layer is in charge of the low-level control of the robots, implementing a Trajectory Driven Guidance with Impedance Control.

- Guidance Information Management Layer: This second layer collects real-time data from the Guidance Control Layer and turns it into meaningful information to be used as robot-to-human feedback.

- User Interface Layer: This last layer is the one in charge of presenting the guidance feedback to operators, using different cues to this end.
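The data flow between the three layers can be pictured with a small sketch. Everything in it is hypothetical: the `GuidanceState` and `Feedback` types, the three-level scale, the threshold values and the text rendering are assumptions made for illustration, and the real layers exchange richer data and drive richer cues.

```python
from dataclasses import dataclass


@dataclass
class GuidanceState:
    """Real-time data assumed to be exposed by the Guidance Control Layer."""
    deviation: float      # distance from the nominal trajectory (unit left open)
    progress_pct: float   # percentage of the trajectory covered


@dataclass
class Feedback:
    """Robot-to-human information produced by the management layer."""
    level: str            # "good", "warning" or "bad" (hypothetical scale)
    message: str


def manage_guidance_information(state, warn=20.0, bad=50.0):
    """Guidance Information Management Layer stand-in: classify the deviation."""
    if state.deviation >= bad:
        level = "bad"
    elif state.deviation >= warn:
        level = "warning"
    else:
        level = "good"
    message = (f"{state.progress_pct:.0f}% of trajectory, "
               f"deviation {state.deviation:.0f}")
    return Feedback(level, message)


def render(feedback):
    """User Interface Layer stand-in: one text line instead of visual or audio cues."""
    return f"[{feedback.level.upper()}] {feedback.message}"


print(render(manage_guidance_information(GuidanceState(35.0, 62.0))))
```

Keeping the classification in the middle layer means the interface can swap one cue for another without touching the low-level control.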

4. Guidance Control Layer

4.1. Guidance Module

- Project the current robot pose onto the vector that runs from the current setpoint to the end of the current segment of the trajectory.

- At this step, the corrected advance vector is calculated from the projection vector and a correction factor. The correction factor reduces the advance when the robot’s trajectory coverage is above the other robot’s and increases it otherwise. It is calculated from three values: the percentage of the trajectory covered by the robot arm, the percentage of the trajectory covered by the other robot arm, and a tuning parameter that adjusts the increase and decrease rate. If the tuning parameter takes high values, the robot that has covered less of its trajectory is boosted (up to a maximum of 2), while the robot that has covered more is dampened (down to a minimum of 0). If the tuning parameter is set to 0, there is no coordination between the robots and the correction factor is always 1. Additionally, the direction of the projection vector is checked; if the vector points backwards, the correction factor is set to 0 to avoid reverse movements.

- The new translation vector is calculated from the corrected advance, while the orientation quaternion is interpolated between the rotations of the two poses involved using spherical linear interpolation [26] with an interpolation weight w.

- Finally, the desired setpoint is composed from the new translation vector and the rotation matrix created from the interpolated quaternion.
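The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the formulas of the Guidance Module: the correction factor `1 + k * (other - own)` clamped to [0, 2] is an assumed form that merely satisfies the properties listed above, the weight `w` is assumed to be the corrected fraction of the segment, coverage is expressed as a fraction in [0, 1], and all function names are hypothetical. Quaternions are stored as (x, y, z, w).

```python
import math


def sub(a, b): return [x - y for x, y in zip(a, b)]
def add(a, b): return [x + y for x, y in zip(a, b)]
def scale(a, s): return [x * s for x in a]
def dot(a, b): return sum(x * y for x, y in zip(a, b))


def project_fraction(robot_pos, setpoint_pos, end_pos):
    """Signed fraction of the segment covered by projecting the robot position."""
    seg = sub(end_pos, setpoint_pos)
    seg_len2 = dot(seg, seg)
    return 0.0 if seg_len2 == 0.0 else dot(sub(robot_pos, setpoint_pos), seg) / seg_len2


def correction_factor(own, other, k, fraction):
    """ASSUMED form: 1 + k*(other - own) clamped to [0, 2]; 0 for backward motion."""
    if fraction < 0.0:
        return 0.0
    return min(2.0, max(0.0, 1.0 + k * (other - own)))


def slerp(q0, q1, w):
    """Spherical linear interpolation between two unit quaternions."""
    d = dot(q0, q1)
    if d < 0.0:                      # follow the shorter arc
        q1, d = scale(q1, -1.0), -d
    if d > 0.9995:                   # nearly parallel: fall back to linear blend
        q = add(scale(q0, 1.0 - w), scale(q1, w))
    else:
        theta = math.acos(d)
        q = add(scale(q0, math.sin((1.0 - w) * theta) / math.sin(theta)),
                scale(q1, math.sin(w * theta) / math.sin(theta)))
    return scale(q, 1.0 / math.sqrt(dot(q, q)))


def quat_to_matrix(q):
    x, y, z, w = q
    return [[1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
            [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
            [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)]]


def guidance_step(robot_pos, setpoint, segment_end, own, other, k):
    """Return the desired setpoint as a 4x4 homogeneous transform (nested lists)."""
    (p_s, q_s), (p_e, q_e) = setpoint, segment_end
    fraction = project_fraction(robot_pos, p_s, p_e)
    c = correction_factor(own, other, k, fraction)
    w = max(0.0, min(1.0, c * fraction))          # assumed interpolation weight
    translation = add(p_s, scale(sub(p_e, p_s), w))
    rotation = quat_to_matrix(slerp(q_s, q_e, w))
    return [rotation[i] + [translation[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


# Segment of 1 m along x, ending with a 90 degree rotation about z.
start = ([0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0])
end = ([1.0, 0.0, 0.0], [0.0, 0.0, math.sin(math.pi / 4), math.cos(math.pi / 4)])
pose = guidance_step([0.2, 0.05, 0.0], start, end, own=0.3, other=0.4, k=2.0)
print([round(v, 3) for v in pose[0]])
```

In this example the arm lags behind its partner, so its advance of 0.2 along the segment is scaled up by the assumed factor and the orientation is interpolated by the same weight. A robot position behind the setpoint produces a factor of 0 and leaves the setpoint unchanged.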

4.2. Impedance Control Module

5. Guidance Information Management Layer

6. User Interface Layer

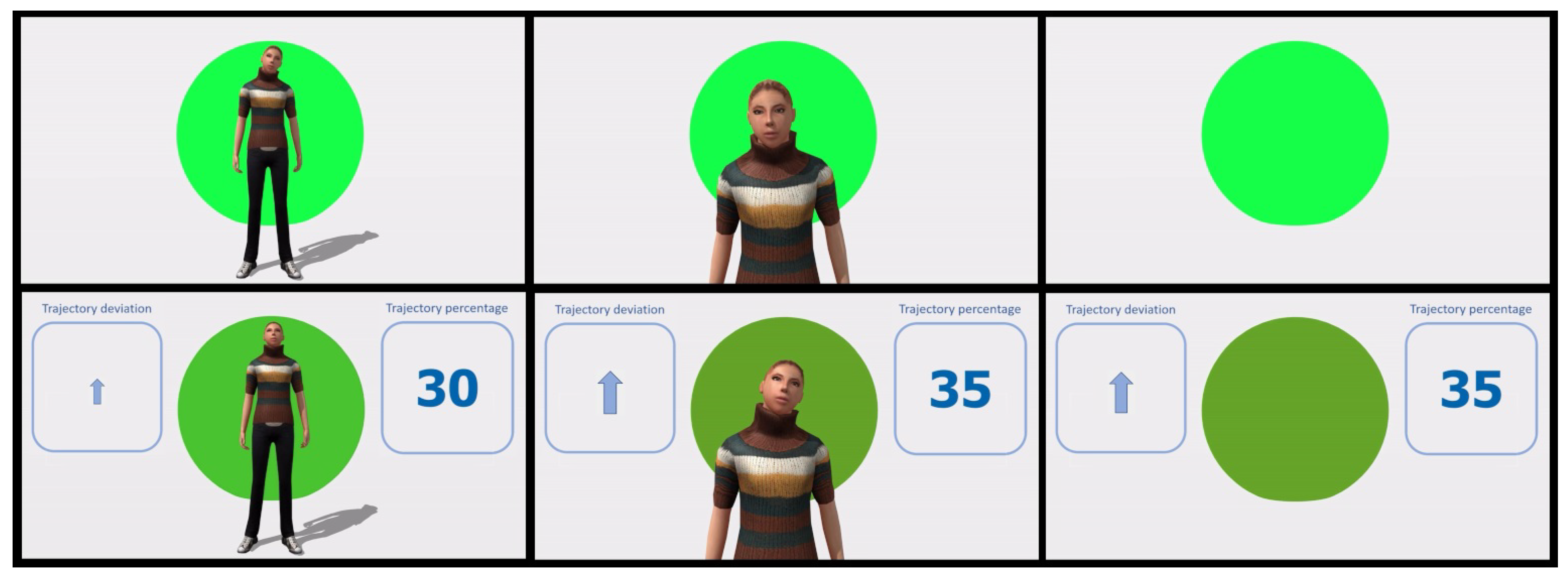

6.1. User Study 1

- Full body avatar and background color (A)

- Avatar torso and background color (B)

- Background color (C)

- Dashboard, full body avatar and background color (D)

- Dashboard, avatar torso and background color (E)

- Dashboard and background color (F)

- Participants’ opinions about the use of the avatar were split. Some appreciated that the avatar “makes (it) more comfortable to interact with a robot”, while others expressed dissatisfaction with it: “I already have my partner that bosses me around at home, I don’t need another one in the workshop”. Participants reported that the avatar torso alone was more useful than the full body avatar, as the legs do not convey any task-related information. Furthermore, the avatar’s head movements were subtle and not all participants noticed or understood them; more pronounced head movements were therefore suggested for future development.

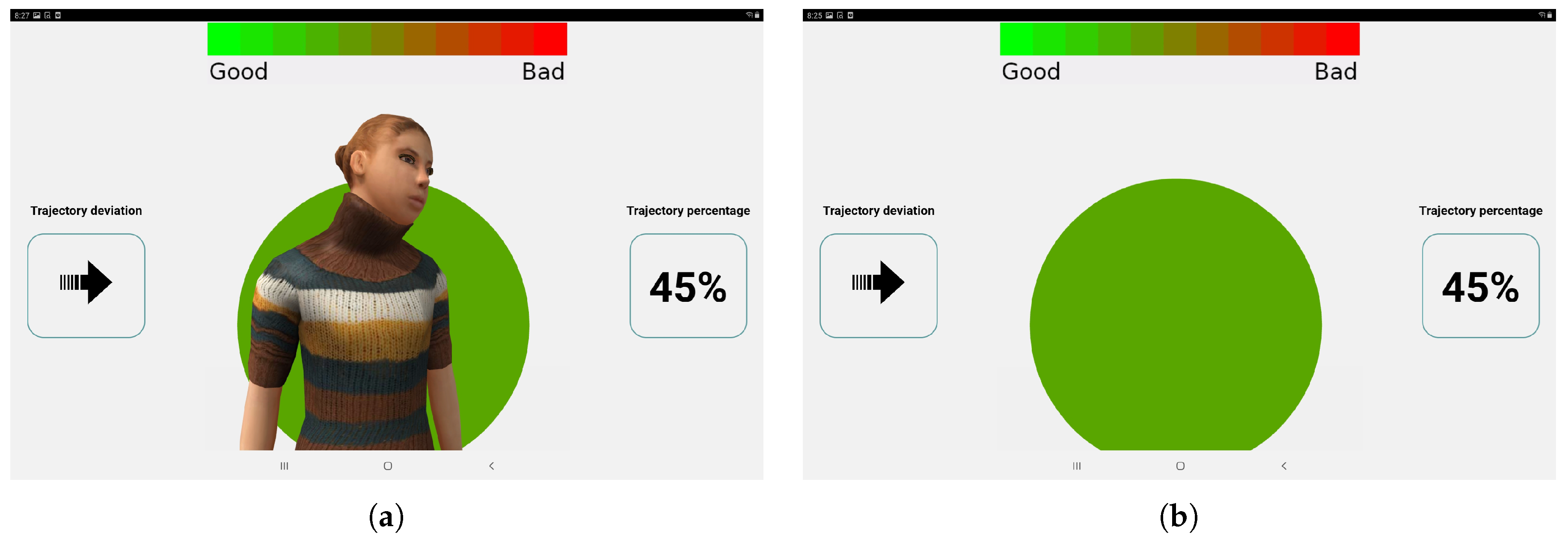

- Regarding the other user interface features, participants indicated that the background circle should be accompanied by a benchmark scale rating the trajectory from “good” to “bad”.

- Participants also commented that it might be useful to introduce commands or a feedback display to make the communication less ambiguous. Some participants indicated that this could be done via audio feedback to the user.

6.2. User Study 2: Laboratory Results

- Dashboard with the deviation from the trajectory and trajectory percentage.

- Avatar with head movements to indicate the deviation from the trajectory.

- Background color indicating the deviation from the trajectory.

- Voice commands indicating deviations from the trajectory.

7. Implementation

8. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Roveda, L.; Castaman, N.; Ghidoni, S.; Franceschi, P.; Boscolo, N.; Pagello, E.; Pedrocchi, N. Human-Robot Cooperative Interaction Control for the Installation of Heavy and Bulky Components. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 339–344.

2. Roveda, L.; Haghshenas, S.; Caimmi, M.; Pedrocchi, N.; Molinari Tosatti, L. Assisting Operators in Heavy Industrial Tasks: On the Design of an Optimized Cooperative Impedance Fuzzy-Controller With Embedded Safety Rules. Front. Robot. AI 2019, 6, 75.

3. Lichiardopol, S.; van de Wouw, N.; Nijmeijer, H. Control scheme for human-robot co-manipulation of uncertain, time-varying loads. In Proceedings of the 2009 American Control Conference, St. Louis, MO, USA, 10–12 June 2009; pp. 1485–1490.

4. Hayes, B.; Scassellati, B. Challenges in shared-environment human-robot collaboration. Learning 2013, 8, 14217706.

5. Dimeas, F.; Aspragathos, N. Reinforcement learning of variable admittance control for human-robot co-manipulation. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1011–1016.

6. Otani, K.; Bouyarmane, K.; Ivaldi, S. Generating Assistive Humanoid Motions for Co-Manipulation Tasks with a Multi-Robot Quadratic Program Controller. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 3107–3113.

7. Dimeas, F.; Aspragathos, N. Online Stability in Human-Robot Cooperation with Admittance Control. IEEE Trans. Haptics 2016, 9, 267–278.

8. Peternel, L.; Kim, W.; Babič, J.; Ajoudani, A. Towards ergonomic control of human-robot co-manipulation and handover. In Proceedings of the 2017 IEEE-RAS 17th International Conference on Humanoid Robotics (Humanoids), Birmingham, UK, 15–17 November 2017; pp. 55–60.

9. Su, H.; Hu, Y.; Karimi, H.R.; Knoll, A.; Ferrigno, G.; De Momi, E. Improved recurrent neural network-based manipulator control with remote center of motion constraints: Experimental results. Neural Netw. 2020, 131, 291–299.

10. Roveda, L.; Maskani, J.; Franceschi, P.; Abdi, A.; Braghin, F.; Tosatti, L.; Pedrocchi, N. Model-Based Reinforcement Learning Variable Impedance Control for Human-Robot Collaboration. J. Intell. Robot. Syst. 2020, 100, 417–433.

11. Su, H.; Qi, W.; Yang, C.; Sandoval, J.; Ferrigno, G.; De Momi, E. Deep Neural Network Approach in Robot Tool Dynamics Identification for Bilateral Teleoperation. IEEE Robot. Autom. Lett. 2020, 5, 2943–2949.

12. Gan, Y.; Duan, J.; Chen, M.; Dai, X. Multi-Robot Trajectory Planning and Position/Force Coordination Control in Complex Welding Tasks. Appl. Sci. 2019, 9, 924.

13. Jlassi, S.; Tliba, S.; Chitour, Y. An Online Trajectory generator-Based Impedance control for co-manipulation tasks. In Proceedings of the 2014 IEEE Haptics Symposium (HAPTICS), Houston, TX, USA, 23–26 February 2014; pp. 391–396.

14. Raiola, G.; Lamy, X.; Stulp, F. Co-manipulation with multiple probabilistic virtual guides. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 7–13.

15. Sanders, T.L.; Wixon, T.; Schafer, K.E.; Chen, J.Y.; Hancock, P. The influence of modality and transparency on trust in human-robot interaction. In Proceedings of the 2014 IEEE International Inter-Disciplinary Conference on Cognitive Methods in Situation Awareness and Decision Support (CogSIMA), San Antonio, TX, USA, 3–6 March 2014; pp. 156–159.

16. Wortham, R.H.; Theodorou, A. Robot transparency, trust and utility. Connect. Sci. 2017, 29, 242–248.

17. Lakhmani, S.G.; Wright, J.L.; Schwartz, M.R.; Barber, D. Exploring the Effect of Communication Patterns and Transparency on Performance in a Human-Robot Team. Proc. Hum. Factors Ergon. Soc. Annu. 2019, 63, 160–164.

18. Hayes, B.; Shah, J.A. Improving robot controller transparency through autonomous policy explanation. In Proceedings of the 2017 12th ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6 March 2017; pp. 303–312.

19. Wright, J.L.; Chen, J.Y.; Barnes, M.J.; Hancock, P.A. Agent Reasoning Transparency: The Influence of Information Level on Automation Induced Complacency; Technical Report; US Army Research Laboratory Aberdeen Proving Ground: Aberdeen Proving Ground, MD, USA, 2017.

20. Chen, J.; Ren, B.; Song, X.; Luo, X. Revealing the “Invisible Gorilla” in Construction: Assessing Mental Workload through Time-frequency Analysis. In Proceedings of the 32nd International Symposium on Automation and Robotics in Construction, Oulu, Finland, 15–18 June 2015; Volume 32, p. 1.

21. Saxby, D.J.; Matthews, G.; Warm, J.S.; Hitchcock, E.M.; Neubauer, C. Active and passive fatigue in simulated driving: Discriminating styles of workload regulation and their safety impacts. J. Exp. Psychol. Appl. 2013, 19, 287.

22. Weiss, A.; Huber, A.; Minichberger, J.; Ikeda, M. First Application of Robot Teaching in an Existing Industry 4.0 Environment: Does It Really Work? Societies 2016, 6, 20.

23. Hogan, N. Impedance Control: An Approach to Manipulation. In Proceedings of the 1984 American Control Conference, San Diego, CA, USA, 6–8 June 1984; pp. 304–313.

24. Hogan, N. Impedance Control: An Approach to Manipulation: Part I—Theory. J. Dyn. Syst. Meas. Control. 1985, 107, 1–7. Available online: https://asmedigitalcollection.asme.org/dynamicsystems/article-pdf/107/1/1/5492345/1_1.pdf (accessed on 28 October 2020).

25. Ibarguren, A.; Daelman, P.; Prada, M. Control Strategies for Dual Arm Co-Manipulation of Flexible Objects in Industrial Environments. In Proceedings of the 2020 IEEE International Conference on Industrial Cyber Physical Systems (ICPS), Tampere, Finland, 9–12 June 2020.

26. Shoemake, K. Animating Rotation with Quaternion Curves. In Proceedings of the 12th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH ’85, San Francisco, CA, USA, 22–26 July 1985; Association for Computing Machinery: New York, NY, USA, 1985; pp. 245–254.

27. Albu-Schaffer, A.; Hirzinger, G. Cartesian impedance control techniques for torque controlled light-weight robots. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No.02CH37292), Washington, DC, USA, 11–15 May 2002; Volume 1, pp. 657–663.

28. Albu-Schaffer, A.; Ott, C.; Frese, U.; Hirzinger, G. Cartesian impedance control of redundant robots: Recent results with the DLR-light-weight-arms. In Proceedings of the 2003 IEEE International Conference on Robotics and Automation (Cat. No.03CH37422), Taipei, Taiwan, 14–19 September 2003; Volume 3, pp. 3704–3709.

29. Selkowitz, A.R.; Lakhmani, S.G.; Larios, C.N.; Chen, J.Y. Agent transparency and the autonomous squad member. Hum. Factors Ergon. Soc. Annu. Meet. 2016, 60, 1319–1323.

30. Tufte, E. The Visual Display of Quantitative Information; Graphics Press: Cheshire, CT, USA, 1973.

31. Lamont, D.; Kenyon, S.; Lyons, G. Dyslexia and mobility-related social exclusion: The role of travel information provision. J. Transp. Geogr. 2013, 26, 147–157.

32. Ben-Bassat, T.; Shinar, D. Ergonomic guidelines for traffic sign design increase sign comprehension. Hum. Factors 2006, 48, 182–195.

33. Maurer, B.; Lankes, M.; Tscheligi, M. Where the eyes meet: Lessons learned from shared gaze-based interactions in cooperative and competitive online games. Entertain. Comput. 2018, 27, 47–59.

34. Wahn, B.; Schwandt, J.; Krüger, M.; Crafa, D.; Nunnendorf, V.; König, P. Multisensory teamwork: Using a tactile or an auditory display to exchange gaze information improves performance in joint visual search. Ergonomics 2016, 59, 781–795.

35. Charalambous, G.; Fletcher, S.; Webb, P. The development of a scale to evaluate trust in industrial human-robot collaboration. Int. J. Soc. Robot. 2016, 8, 193–209.

36. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

37. Schrepp, M.; Hinderks, A.; Thomaschewski, J. Applying the user experience questionnaire (UEQ) in different evaluation scenarios. In Proceedings of the International Conference of Design, User Experience, and Usability, Heraklion, Crete, Greece, 22–27 June 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 383–392.

| | Avatar Torso and Background | Dashboard, Avatar Torso and Background | Dashboard and Background | Background | Full Body Avatar and Background | Dashboard, Full Body Avatar and Background |
|---|---|---|---|---|---|---|
| Avatar torso and background | p = 0.043 | p = 0.007 | p = 0.025 | p = 0.072 | | |
| Dashboard, avatar torso and background | p = 0.021 | p = 0.463 | | | | |
| Dashboard and background | p = 0.018 | p = 0.034 | | | | |
| Background | p = 0.017 | | | | | |
| Full body avatar and background | p = 0.378 | | | | | |
| Mean (SD) | 20.63 (4.11) | 31.42 (4.95) | 45.85 (6.82) | 42.39 (7.63) | 21.87 (4.28) | 31.27 (4.49) |

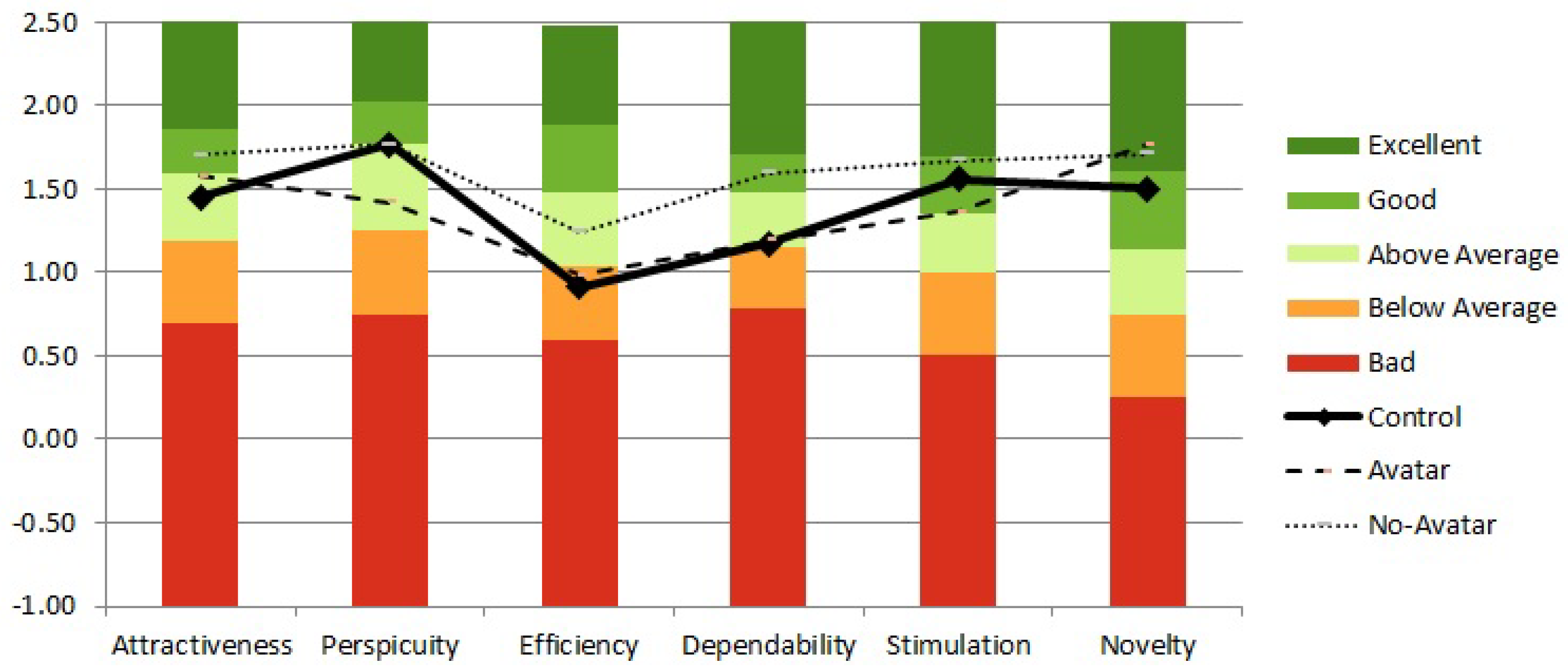

| | Control (Mean) | Control (SD) | Avatar (Mean) | Avatar (SD) | No-Avatar (Mean) | No-Avatar (SD) |
|---|---|---|---|---|---|---|
| Behavioral data | | | | | | |
| Completion time (s) | 42.71 | 10.74 | 45.57 | 12.56 | 43.85 | 11.68 |
| Mean deviation | 46.58 | 12.08 | 39.69 | 7.30 | 42.15 | 13.18 |
| User Experience Questionnaire | | | | | | |
| Attractiveness | 1.45 | 0.69 | 1.58 | 0.98 | 1.70 | 0.78 |
| Perspicuity | 1.77 | 0.85 | 1.42 | 0.97 | 1.77 | 0.75 |
| Efficiency | 0.92 | 0.71 | 0.98 | 0.88 | 1.24 | 0.86 |
| Dependability | 1.17 | 0.70 | 1.19 | 0.74 | 1.60 | 0.67 |
| Stimulation | 1.56 | 0.46 | 1.3 | 1.10 | 1.67 | 0.74 |
| Novelty | 1.50 | 0.87 | 1.77 | 0.89 | 1.71 | 0.97 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ibarguren, A.; Eimontaite, I.; Outón, J.L.; Fletcher, S. Dual Arm Co-Manipulation Architecture with Enhanced Human–Robot Communication for Large Part Manipulation. Sensors 2020, 20, 6151. https://doi.org/10.3390/s20216151
