A Novel Method with Stacking Learning of Data-Driven Soft Sensors for Mud Concentration in a Cutter Suction Dredger

Abstract

1. Introduction

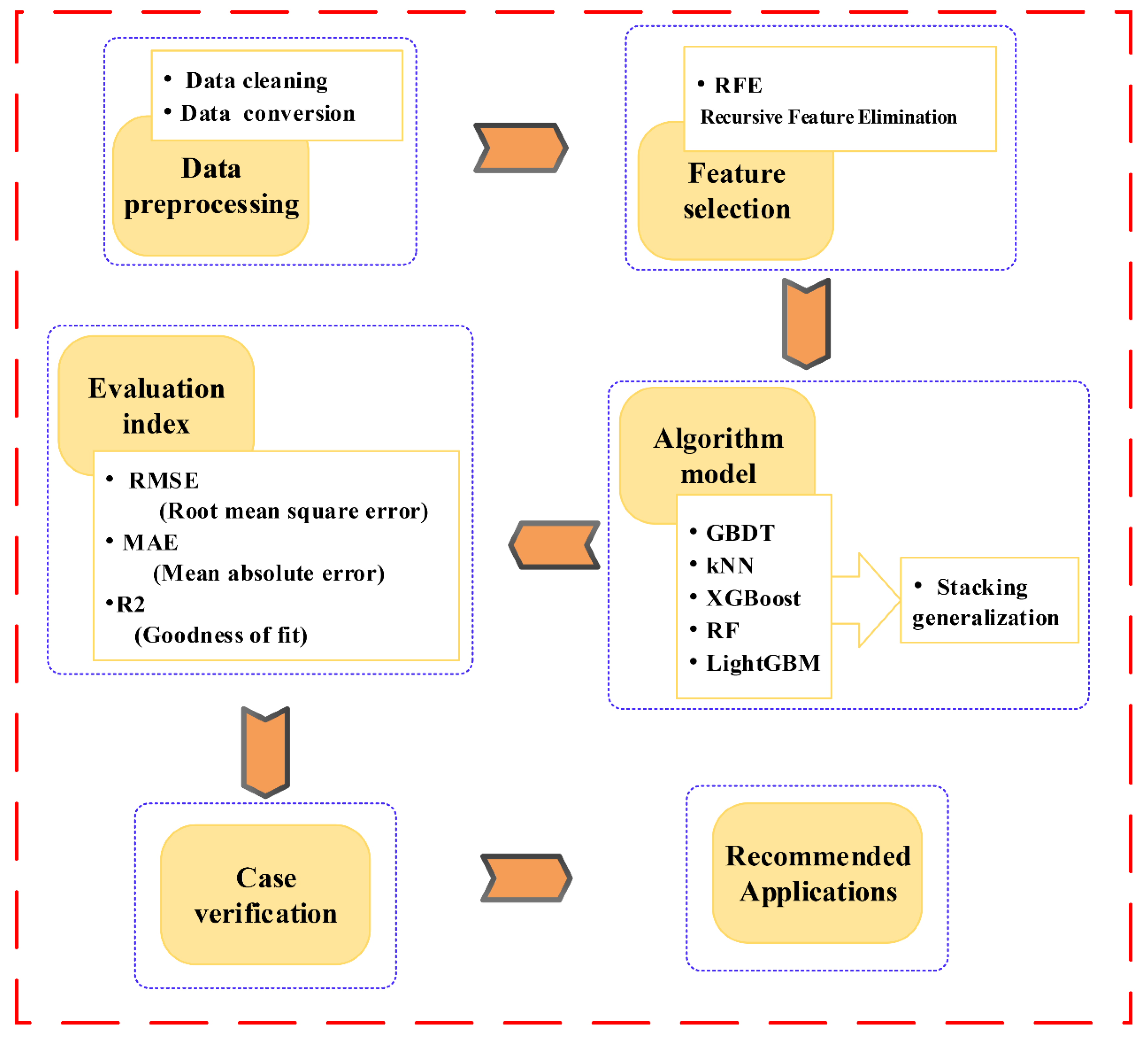

2. Analysis and Methods

2.1. Data Preprocessing

2.1.1. Data Cleaning

2.1.2. Data Conversion

2.2. Feature Selection with RFE

2.3. Stacking Generalization

2.3.1. Stacking Generalization (SG)

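Algorithm 1. Stacking algorithm.

Inputs: Training set $D = \{(x_1, y_1), (x_2, y_2), \ldots, (x_m, y_m)\}$; base models $L_1, L_2, \ldots, L_T$; meta-model $L$

Process:

1: for $t = 1, 2, \ldots, T$ do

2: $h_t = L_t(D)$

3: end for

4: $D' = \varnothing$

5: for $i = 1, 2, \ldots, m$ do

6: for $t = 1, 2, \ldots, T$ do

7: $z_{it} = h_t(x_i)$

8: end for

9: $D' = D' \cup \{((z_{i1}, z_{i2}, \ldots, z_{iT}), y_i)\}$

10: end for

11: $h' = L(D')$

Outputs: $H(x) = h'(h_1(x), h_2(x), \ldots, h_T(x))$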
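The procedure in Algorithm 1 can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: the helper names `fit_linear`, `single_feature` and `stack`, the toy data, and the choice of least-squares lines as base and meta models are all assumptions made for the example. As in the listing, the meta-model is trained on in-sample base predictions; practical stacking often uses out-of-fold predictions instead to limit overfitting.

```python
def fit_linear(X, y, ridge=1e-9):
    """Least squares with intercept via Gauss-Jordan on the normal equations."""
    A = [[1.0] + [float(v) for v in row] for row in X]
    n = len(A[0])
    # Augmented system [A^T A + ridge*I | A^T y]; the tiny ridge avoids singularity.
    M = [[sum(r[i] * r[j] for r in A) + (ridge if i == j else 0.0) for j in range(n)]
         + [sum(r[i] * t for r, t in zip(A, y))] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))  # partial pivoting
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    w = [M[i][n] / M[i][i] for i in range(n)]
    return lambda x: w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


def single_feature(j):
    """Hypothetical base learner: a least-squares line on feature j only."""
    def learner(X, y):
        h = fit_linear([[row[j]] for row in X], y)
        return lambda x: h([x[j]])
    return learner


def stack(X, y, base_learners, meta_learner):
    # Steps 1-3: train every base model on the full training set.
    base = [learn(X, y) for learn in base_learners]
    # Steps 4-10: the base-model outputs become the meta-model's inputs.
    Z = [[h(x) for h in base] for x in X]
    # Step 11: train the meta-model on the new data set.
    meta = meta_learner(Z, y)
    # Output: the meta-model applied to the base-model predictions.
    return lambda x: meta([h(x) for h in base])


# Toy data (assumed): the target is linear in both features.
X = [[0, 1], [1, 0], [2, 2], [3, 1], [4, 3], [5, 0]]
y = [2 * a + 3 * b + 1 for a, b in X]
H = stack(X, y, [single_feature(0), single_feature(1)], fit_linear)
```

Each base model here sees only one feature, so neither fits the target alone; the meta-model recovers the full relationship by combining their outputs.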

2.3.2. Gradient Boosting Decision Tree (GBDT)

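Algorithm 2. Gradient boosting decision tree (GBDT) algorithm.

Inputs: Training data set $\{(x_i, y_i)\}_{i=1}^{N}$; loss function $L(y, f(x))$

(1) Initialize: $f_0(x) = \arg\min_{\gamma} \sum_{i=1}^{N} L(y_i, \gamma)$

(2) For m = 1, 2, …, M

(a) For i = 1, 2, …, N, compute $r_{im} = -\left[\dfrac{\partial L(y_i, f(x_i))}{\partial f(x_i)}\right]_{f = f_{m-1}}$

(b) Fit a regression tree to the targets $r_{im}$, giving terminal regions $R_{jm}$, j = 1, 2, …, $J_m$.

(c) For j = 1, 2, …, $J_m$, compute $\gamma_{jm} = \arg\min_{\gamma} \sum_{x_i \in R_{jm}} L(y_i, f_{m-1}(x_i) + \gamma)$

(d) Update $f_m(x) = f_{m-1}(x) + \sum_{j=1}^{J_m} \gamma_{jm} I(x \in R_{jm})$

Outputs: $\hat{f}(x) = f_M(x)$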
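A minimal sketch of the GBDT loop follows, under two stated assumptions: the loss is the squared error $\frac{1}{2}(y - f)^2$, so the negative gradient in step (a) is simply the residual, and each tree is a depth-1 stump. The names `fit_stump` and `gbdt_fit`, the shrinkage factor `eta`, and the toy data are illustrative and not taken from the paper.

```python
from statistics import mean


def fit_stump(X, r):
    """Depth-1 regression tree: the single split with the lowest squared error."""
    best = None
    for k in range(len(X[0])):
        values = sorted({row[k] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [ri for row, ri in zip(X, r) if row[k] <= t]
            right = [ri for row, ri in zip(X, r) if row[k] > t]
            gl, gr = mean(left), mean(right)
            sse = sum((v - gl) ** 2 for v in left) + sum((v - gr) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, k, t, gl, gr)
    if best is None:  # every feature is constant: fall back to a single leaf
        c = mean(r)
        return lambda x: c
    _, k, t, gl, gr = best
    return lambda x: gl if x[k] <= t else gr


def gbdt_fit(X, y, M=50, eta=0.5):
    f0 = mean(y)  # (1) the constant that minimises squared loss
    pred = [f0] * len(y)
    trees = []
    for _ in range(M):  # (2)
        r = [yi - pi for yi, pi in zip(y, pred)]  # (a) negative gradient = residual
        tree = fit_stump(X, r)  # (b) and (c): for squared loss the leaf mean is optimal
        trees.append(tree)
        pred = [p + eta * tree(x) for p, x in zip(pred, X)]  # (d) update with shrinkage
    return lambda x: f0 + eta * sum(t(x) for t in trees)


# Toy data (assumed): a quadratic in one feature.
X = [[float(i)] for i in range(10)]
y = [row[0] ** 2 for row in X]
model = gbdt_fit(X, y)
```

With squared loss, steps (b) and (c) collapse into one because the mean residual in each leaf is already the loss-minimising leaf value; other losses need a separate line search per leaf.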

2.3.3. K-Nearest Neighbor (KNN)

Algorithm 3. K-nearest neighbor (KNN) algorithm.

Input: Labelled data set (l samples); unlabelled data set to be pseudo-labelled (u samples); the parameter K in the KNN algorithm

Process:

1. for j = 1: u

2. for i = 1: l

3. Calculate the Euclidean distance between the j-th unlabelled sample and the i-th labelled sample

4. end

5. Sort the labelled data set in ascending order according to the distance

6. Select the first K data with tags, record their distance and tag information

7. for k = 1: K

8.

9. end

11. end

12. Get pseudo-labelled data set

Outputs: Pseudo-labelled data set
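The KNN pseudo-labelling loop can be illustrated with a short Python sketch. The formula used in step 8 is not recoverable from the listing, so this example assumes the pseudo-label is the unweighted mean of the K nearest tags; the function name `knn_pseudo_label` and the toy data are likewise hypothetical.

```python
import math


def knn_pseudo_label(labelled, unlabelled, K):
    """labelled: list of (features, tag) pairs; unlabelled: list of feature lists."""
    result = []
    for x in unlabelled:  # step 1: loop over the unlabelled samples
        # Steps 2-4: Euclidean distance to every labelled sample.
        dists = [
            (math.sqrt(sum((a - b) ** 2 for a, b in zip(x, xi))), yi)
            for xi, yi in labelled
        ]
        dists.sort(key=lambda d: d[0])  # step 5: ascending by distance
        nearest = dists[:K]  # step 6: keep the K closest tagged samples
        # Steps 7-9 (assumed): average the K neighbouring tags.
        label = sum(yi for _, yi in nearest) / len(nearest)
        result.append((x, label))
    return result  # step 12: the pseudo-labelled data set


# Toy data (assumed): one feature, three labelled samples.
labelled = [([0.0], 1.0), ([1.0], 2.0), ([10.0], 50.0)]
pseudo = knn_pseudo_label(labelled, [[0.4]], K=2)
```

A distance-weighted average is a common alternative to the plain mean when the K neighbours lie at very different distances.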

2.3.4. Extreme Gradient Boosting (XGBoost)

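Algorithm 4. Extreme gradient boosting (XGBoost) algorithm.

Input: I, instance set of current node

Input: d, feature dimension

Gain ← 0

$G \leftarrow \sum_{i \in I} g_i$, $H \leftarrow \sum_{i \in I} h_i$

for k = 1 to m do

$G_L \leftarrow 0$, $H_L \leftarrow 0$

for j in sorted(I, by $x_{jk}$) do

$G_L \leftarrow G_L + g_j$, $H_L \leftarrow H_L + h_j$

$G_R \leftarrow G - G_L$, $H_R \leftarrow H - H_L$

Gain ← $\max\left(\text{Gain}, \dfrac{G_L^2}{H_L + \lambda} + \dfrac{G_R^2}{H_R + \lambda} - \dfrac{G^2}{H + \lambda}\right)$

end

end

Output: Split with max score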
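The exact greedy split search of Algorithm 4 can be sketched as below. Here `g` and `h` hold the first- and second-order gradient statistics of the loss for each instance and `lam` is the L2 regularisation weight; the function name `best_split`, the midpoint threshold convention, and the toy data are assumptions for illustration, not the XGBoost library's API.

```python
def best_split(X, g, h, lam=1.0):
    """Return (gain, feature index, threshold) of the best split, or (0.0, None, None)."""
    G, H = sum(g), sum(h)
    best = (0.0, None, None)  # Gain starts at 0, so only improving splits are kept
    for k in range(len(X[0])):  # loop over features
        GL = HL = 0.0
        order = sorted(range(len(X)), key=lambda j: X[j][k])
        for pos, j in enumerate(order[:-1]):
            GL += g[j]  # accumulate left-child statistics
            HL += h[j]
            nxt = order[pos + 1]
            if X[j][k] == X[nxt][k]:
                continue  # no threshold can separate equal feature values
            GR, HR = G - GL, H - HL
            gain = GL ** 2 / (HL + lam) + GR ** 2 / (HR + lam) - G ** 2 / (H + lam)
            if gain > best[0]:
                # Assumed convention: threshold halfway between neighbouring values.
                best = (gain, k, (X[j][k] + X[nxt][k]) / 2)
    return best


# Toy data (assumed): squared loss with initial prediction 0, so g = -y and h = 1.
X = [[1.0], [2.0], [3.0], [4.0]]
y = [0.0, 0.0, 10.0, 10.0]
g = [0.0 - t for t in y]
h = [1.0] * len(y)
gain, feature, threshold = best_split(X, g, h)
```

Because the running sums are updated as the sorted instances are scanned, each feature costs one sort plus one linear pass rather than a fresh evaluation per candidate threshold.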

2.3.5. Random Forest (RF)

2.3.6. Light Gradient Boosting Machine (LightGBM)

2.4. Evaluation Index

- (1) Root mean square error (RMSE)

- (2) Mean absolute error (MAE)

- (3) Goodness of fit ($R^2$)
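The three indices can be computed as in the sketch below. It assumes the standard textbook definitions, since the equations themselves are not reproduced here: RMSE is the square root of the mean squared error, MAE is the mean absolute error, and $R^2$ is one minus the ratio of the residual sum of squares to the total sum of squares. The function names and sample values are illustrative.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Goodness of fit: 1 - SS_res / SS_tot."""
    y_bar = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - y_bar) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot


# Toy values (assumed): one prediction is off by 2, the rest are exact.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 6.0]
scores = (rmse(y_true, y_pred), mae(y_true, y_pred), r2(y_true, y_pred))
```

Lower RMSE and MAE indicate a better fit, while $R^2$ approaches 1 as the predictions explain more of the variance in the measured values.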

3. Case Study

3.1. Case 1

3.1.1. Method

3.1.2. Evaluation

3.2. Case 2

3.2.1. Method

3.2.2. Evaluation

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| ID | F0 | F1 | F2 | F3 | F4 | F5 | … | F238 |
|---|---|---|---|---|---|---|---|---|
| | Water Level (m) | 1 Main Pump Efficiency (%) | Left Trunnion Draft (m) | Right Trunnion Draft (m) | Trunnion Average Draft (m) | Ladder Length (Projection) (m) | … | Main Hydraulic Oil Tank Temperature (°C) |
| 1 | 2.6863 | 21.3508 | 2.7771 | 2.5987 | 2.6879 | 34.5083 | … | 30.8449 |
| 2 | 1.9 | 21.3411 | 2.7641 | 2.6012 | 2.6826 | 34.5103 | … | 30.8449 |
| 3 | 1.9 | 21.3347 | 2.7608 | 2.6136 | 2.6872 | 34.5117 | … | 30.8449 |
| 4 | 1.9 | 21.3573 | 2.7594 | 2.5871 | 2.6733 | 34.5069 | … | 30.8449 |
| 5 | 1.9 | 21.3573 | 2.7594 | 2.5871 | 2.6733 | 34.5069 | … | 30.8449 |
| … | … | … | … | … | … | … | … | … |

| Model | Parameters |
|---|---|
| GBDT | eta = 0.05; max_depth = 4; n_estimators = 3000 |
| KNN | n_neighbors = 1; leaf_size = 1; p = 1 |
| XGBoost | eta = 0.5; max_depth = 5; n_estimators = 2200 |
| RF | n_estimators = 100; max_depth = 100; random_state = 55 |
| LightGBM | eta = 0.05; n_estimators = 720; max_bin = 55; num_leaves = 5 |

| Model | $R^2$ | MAE | RMSE |
|---|---|---|---|
| KNN | 0.4825 | 3.481 | 4.755 |
| LightGBM | 0.6887 | 2.745 | 3.686 |
| RF | 0.9106 | 1.286 | 1.975 |
| GBDT | 0.9113 | 1.276 | 1.968 |
| XGBoost | 0.9468 | 0.966 | 1.524 |

| | SG Model 1 | SG Model 2 | SG Model 3 | SG Model 4 | SG Model 5 |
|---|---|---|---|---|---|
| KNN | Meta model | Base model | Base model | Base model | Base model |
| LightGBM | Base model | Meta model | Base model | Base model | Base model |
| RF | Base model | Base model | Meta model | Base model | Base model |
| GBDT | Base model | Base model | Base model | Meta model | Base model |
| XGBoost | Base model | Base model | Base model | Base model | Meta model |
| $R^2$ | 0.9023 | 0.9549 | 0.9745 | 0.9774 | 0.9330 |
| MAE | 1.362 | 0.924 | 0.626 | 0.595 | 1.152 |
| RMSE | 1.956 | 1.402 | 1.048 | 0.987 | 1.699 |

| Model | Parameters |
|---|---|
| GBDT | eta = 0.05; max_depth = 3; n_estimators = 2000 |
| KNN | n_neighbors = 5; leaf_size = 50; p = 1 |
| XGBoost | eta = 0.01; max_depth = 5; n_estimators = 2000 |
| RF | n_estimators = 100; max_depth = 50; random_state = 55 |
| LightGBM | eta = 0.1; n_estimators = 720; max_bin = 55; num_leaves = 5 |

| Model | $R^2$ | MAE | RMSE |
|---|---|---|---|
| LightGBM | 0.9309 | 1.9911 | 2.9591 |
| GBDT | 0.9367 | 1.7354 | 2.8323 |
| KNN | 0.9402 | 1.1952 | 2.7514 |
| XGBoost | 0.9649 | 1.1782 | 1.7856 |
| RF | 0.9681 | 1.0974 | 1.7651 |

| | SG Model 1 | SG Model 2 | SG Model 3 | SG Model 4 | SG Model 5 |
|---|---|---|---|---|---|
| KNN | Meta model | Base model | Base model | Base model | Base model |
| LightGBM | Base model | Meta model | Base model | Base model | Base model |
| RF | Base model | Base model | Meta model | Base model | Base model |
| GBDT | Base model | Base model | Base model | Meta model | Base model |
| XGBoost | Base model | Base model | Base model | Base model | Meta model |
| $R^2$ | 0.9691 | 0.9904 | 0.9791 | 0.9919 | 0.9909 |
| MAE | 1.1030 | 0.6802 | 0.9731 | 0.6200 | 0.6491 |
| RMSE | 1.7963 | 1.107 | 1.6245 | 1.0205 | 1.075 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, B.; Fan, S.-d.; Jiang, P.; Zhu, H.-h.; Xiong, T.; Wei, W.; Fang, Z.-l. A Novel Method with Stacking Learning of Data-Driven Soft Sensors for Mud Concentration in a Cutter Suction Dredger. Sensors 2020, 20, 6075. https://doi.org/10.3390/s20216075
