Mapping Utility Poles in Aerial Orthoimages Using ATSS Deep Learning Method

Abstract

1. Introduction

2. Material and Methods

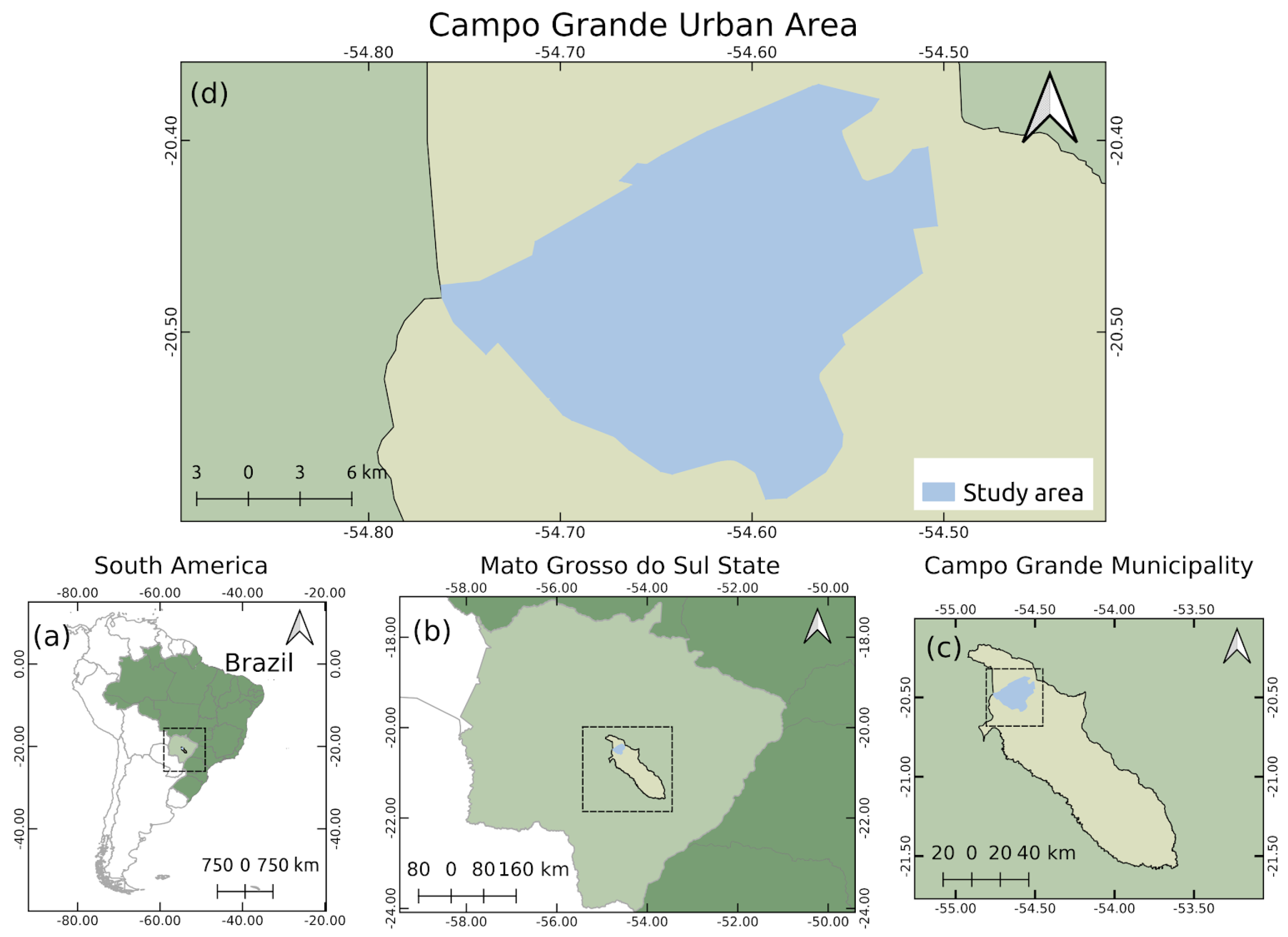

2.1. Study Area

2.2. Pole Detection Approach

2.3. Experimental Setup

2.4. Method Assessment

3. Results

3.1. Quantitative Analysis

3.2. Qualitative Analysis

3.3. Computational Costs

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Dataset | Number of Patches | Number of Orthoimages |
|---|---|---|
| Training | 60,124 | 634 |
| Validation | 19,869 | 212 |
| Testing | 19,480 | 211 |
| Total | 99,473 | 1,057 |
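The totals in the dataset table can be checked with a few lines of arithmetic. The sketch below uses only the counts shown in the table; the names `SPLITS` and `split_summary` are illustrative and do not come from the paper. It sums the splits and reports each split's approximate share of the patches.

```python
# Counts copied from the dataset table: (patches, orthoimages) per split.
SPLITS = {
    "Training": (60_124, 634),
    "Validation": (19_869, 212),
    "Testing": (19_480, 211),
}


def split_summary(splits):
    """Return total patches, total orthoimages, and each split's share of patches."""
    total_patches = sum(patches for patches, _ in splits.values())
    total_images = sum(images for _, images in splits.values())
    shares = {name: patches / total_patches for name, (patches, _) in splits.items()}
    return total_patches, total_images, shares


total_patches, total_images, shares = split_summary(SPLITS)
print(total_patches, total_images)
for name, share in shares.items():
    print(f"{name}: {share:.1%} of patches")
```

The sums agree with the Total row, and the shares work out to roughly a 60/20/20 split between training, validation, and testing.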

| Method | | |
|---|---|---|
| Faster R-CNN | 0.875 | 0.682 |
| RetinaNet | 0.874 | 0.673 |
| ATSS | 0.913 | 0.749 |

| Size | | |
|---|---|---|
| | 0.879 | 0.623 |
| | 0.913 | 0.749 |
| | 0.931 | 0.815 |
| | 0.944 | 0.854 |
| | 0.944 | 0.853 |

| Method | Average Time (s) | Area Scan Speed (m²/s) |
|---|---|---|
| Faster R-CNN | 0.048 | 13,651.14 |
| RetinaNet | 0.047 | 13,943.82 |
| ATSS | 0.048 | 13,651.14 |
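The two columns of the computational-cost table appear to be linked by a fixed ground area per processed image. The sketch below assumes, without confirmation from the table itself, that the area scan speed equals the ground area covered per image divided by the average time per image. Under that assumption it back-computes the implied area for each method. The names `COSTS` and `implied_area_m2` are hypothetical.

```python
# Values copied from the computational-cost table:
# (average time in seconds, area scan speed in m^2/s).
COSTS = {
    "Faster R-CNN": (0.048, 13_651.14),
    "RetinaNet": (0.047, 13_943.82),
    "ATSS": (0.048, 13_651.14),
}


def implied_area_m2(avg_time_s, speed_m2_per_s):
    """Assumption (not stated in the table): speed = area / time, so area = time * speed."""
    return avg_time_s * speed_m2_per_s


areas = {method: implied_area_m2(t, v) for method, (t, v) in COSTS.items()}
for method, area in areas.items():
    print(f"{method}: about {area:.1f} m^2 per image")
```

All three rows give roughly 655 m² per image. The small differences between rows are consistent with the average times having been rounded to three decimals.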

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gomes, M.; Silva, J.; Gonçalves, D.; Zamboni, P.; Perez, J.; Batista, E.; Ramos, A.; Osco, L.; Matsubara, E.; Li, J.; et al. Mapping Utility Poles in Aerial Orthoimages Using ATSS Deep Learning Method. Sensors 2020, 20, 6070. https://doi.org/10.3390/s20216070

Gomes, M., Silva, J., Gonçalves, D., Zamboni, P., Perez, J., Batista, E., Ramos, A., Osco, L., Matsubara, E., Li, J., Marcato Junior, J., & Gonçalves, W. (2020). Mapping Utility Poles in Aerial Orthoimages Using ATSS Deep Learning Method. Sensors, 20(21), 6070. https://doi.org/10.3390/s20216070