Planetary-Scale Geospatial Open Platform Based on the Unity3D Environment

Abstract

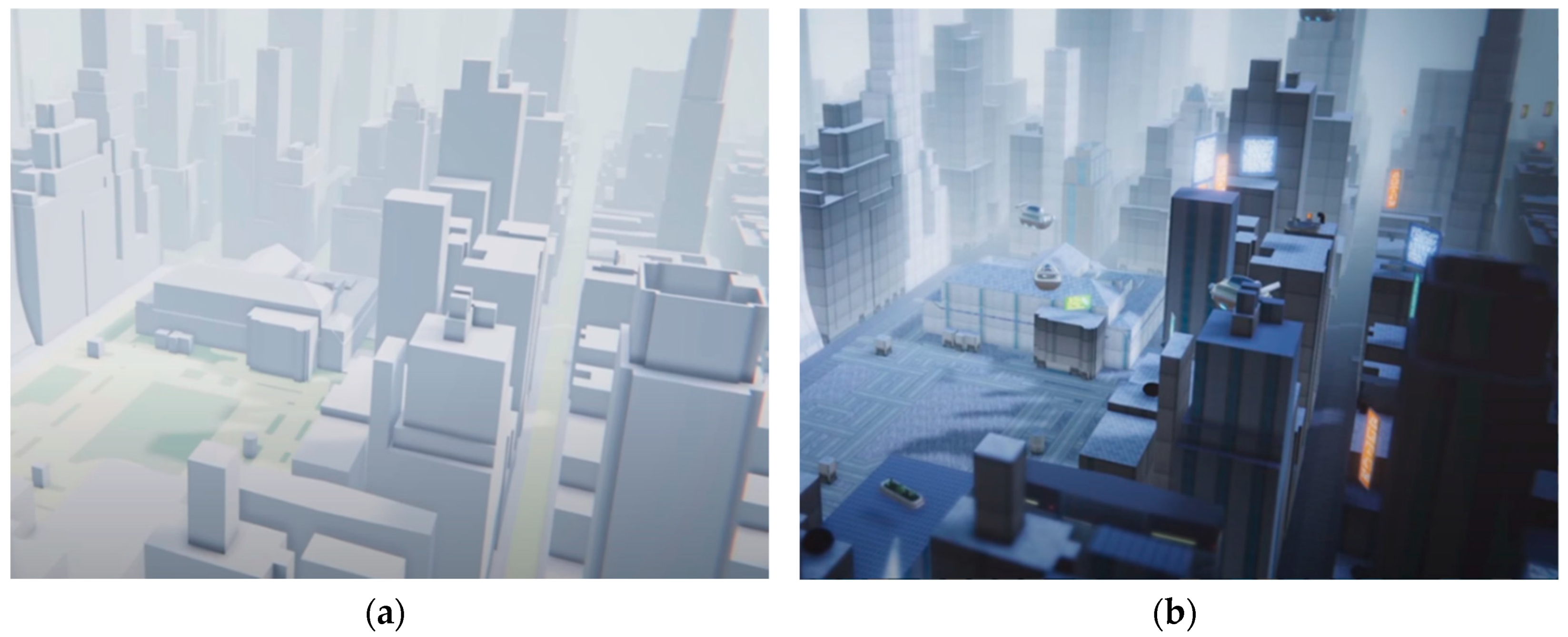

1. Introduction

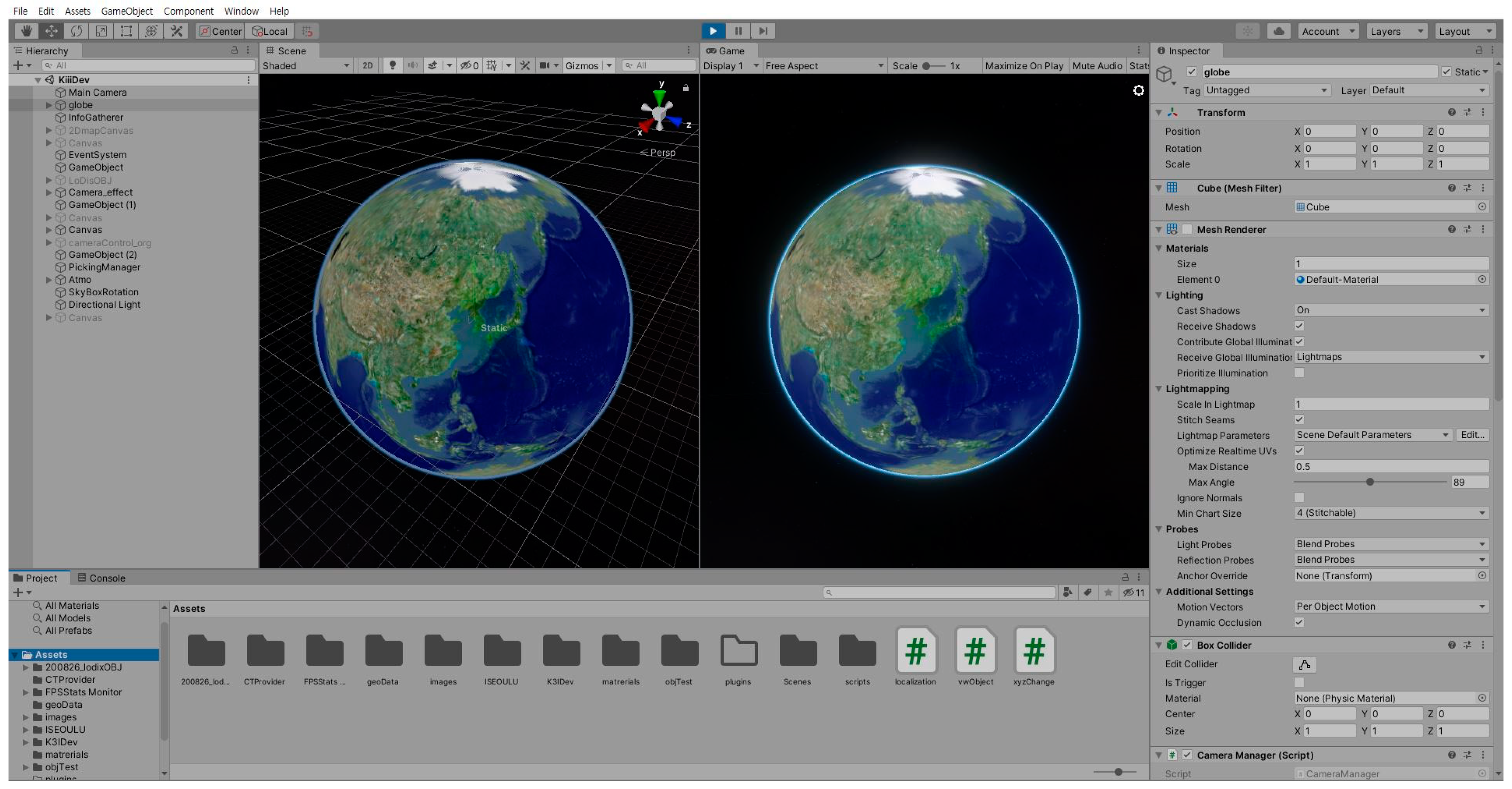

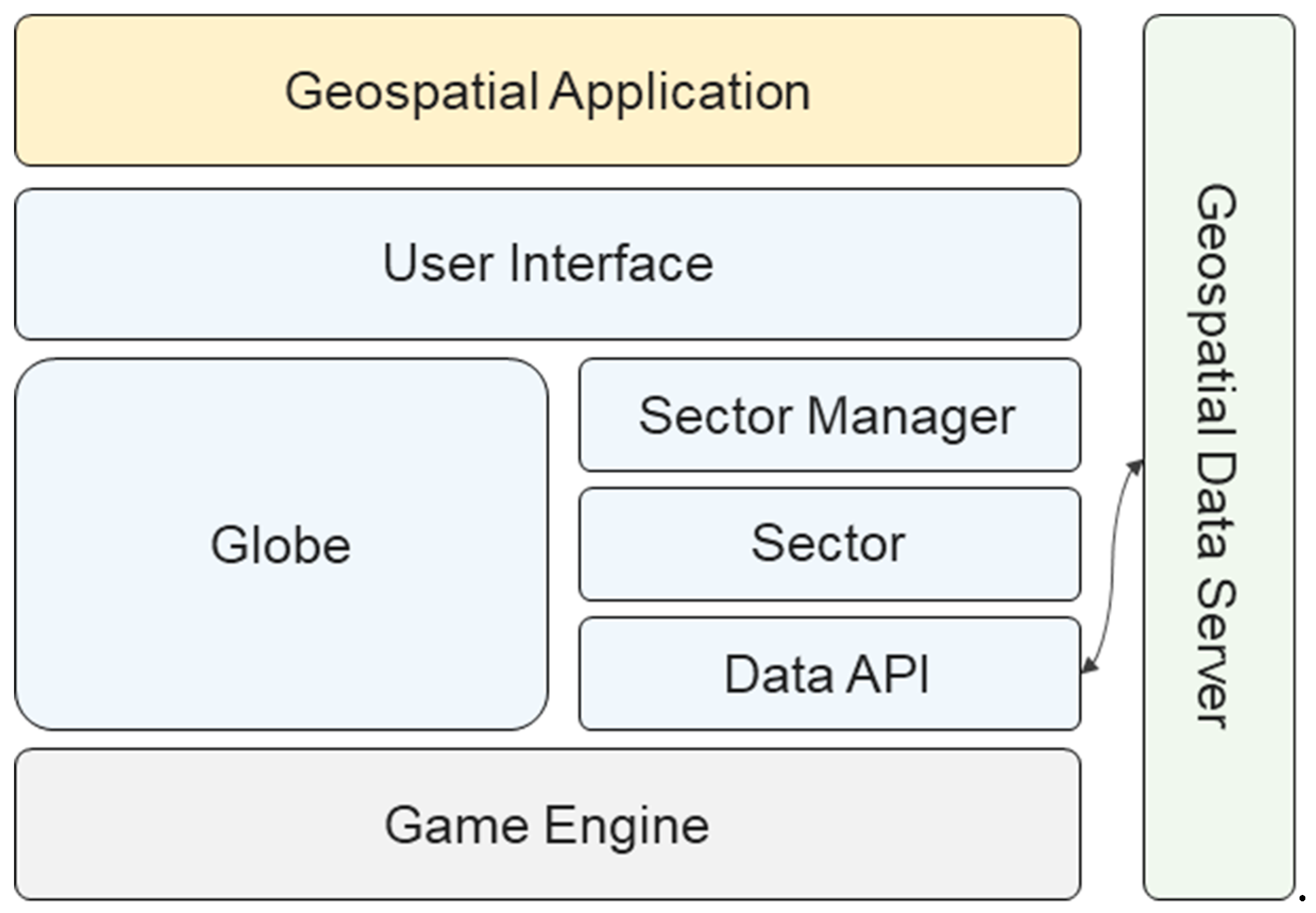

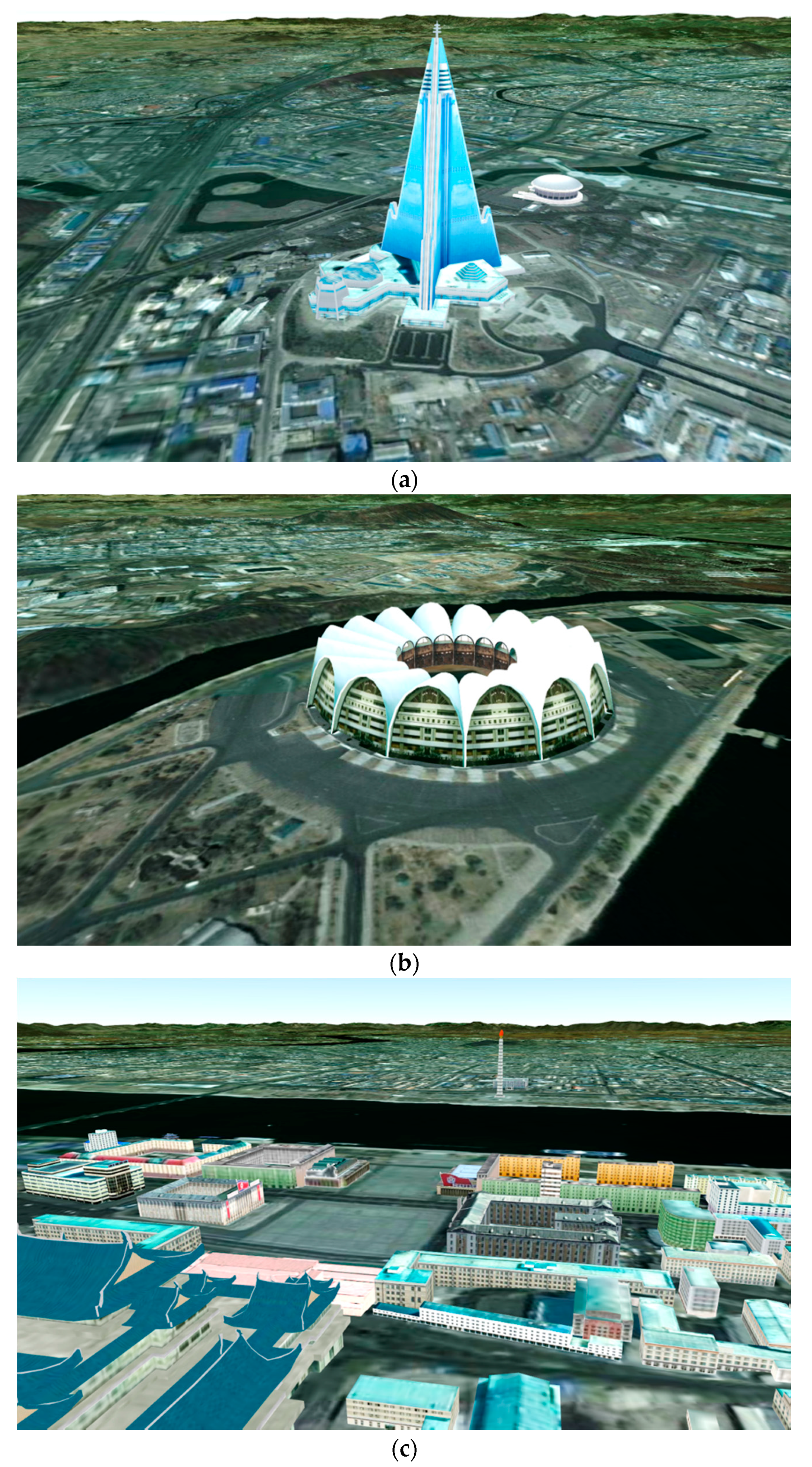

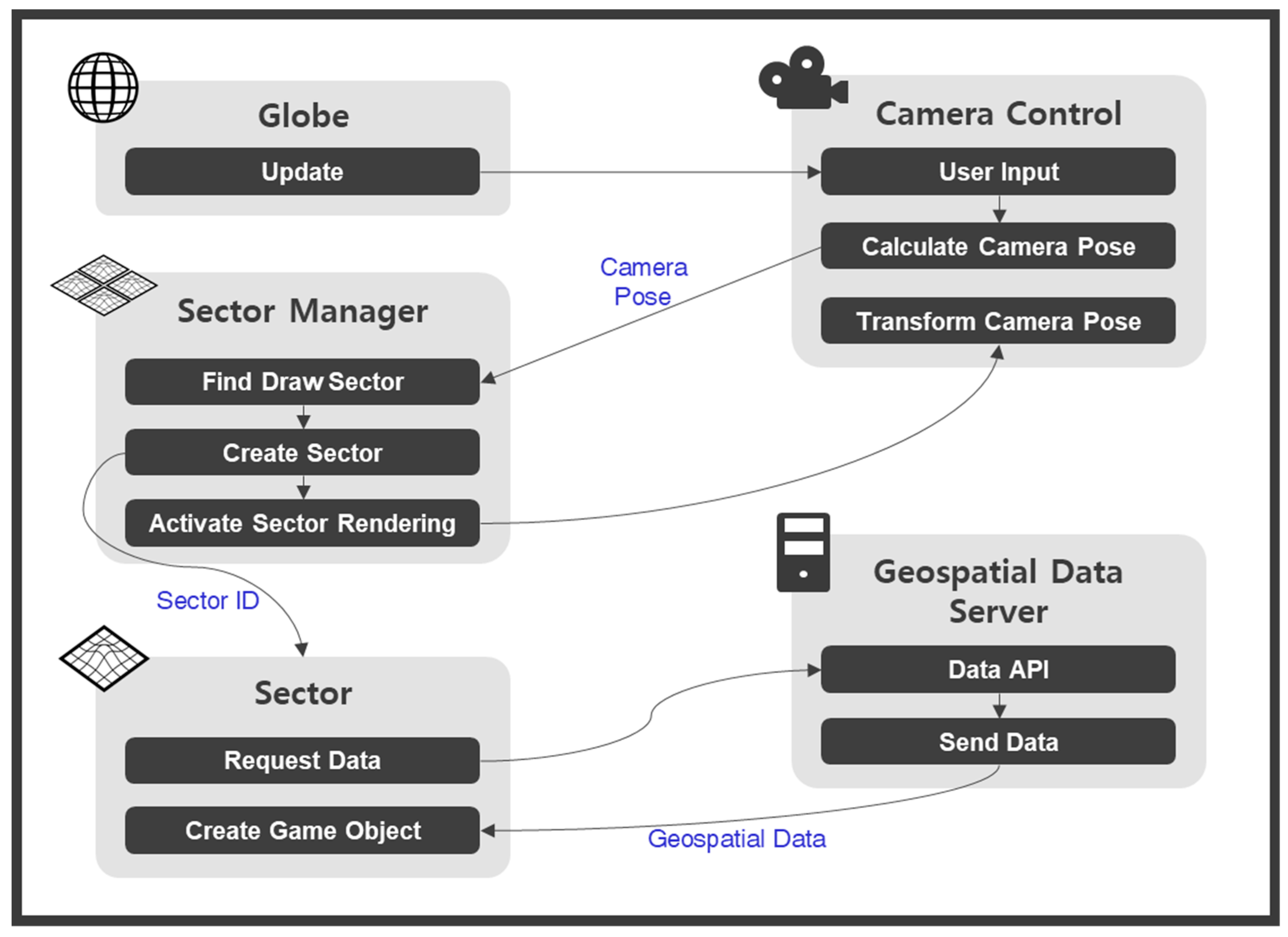

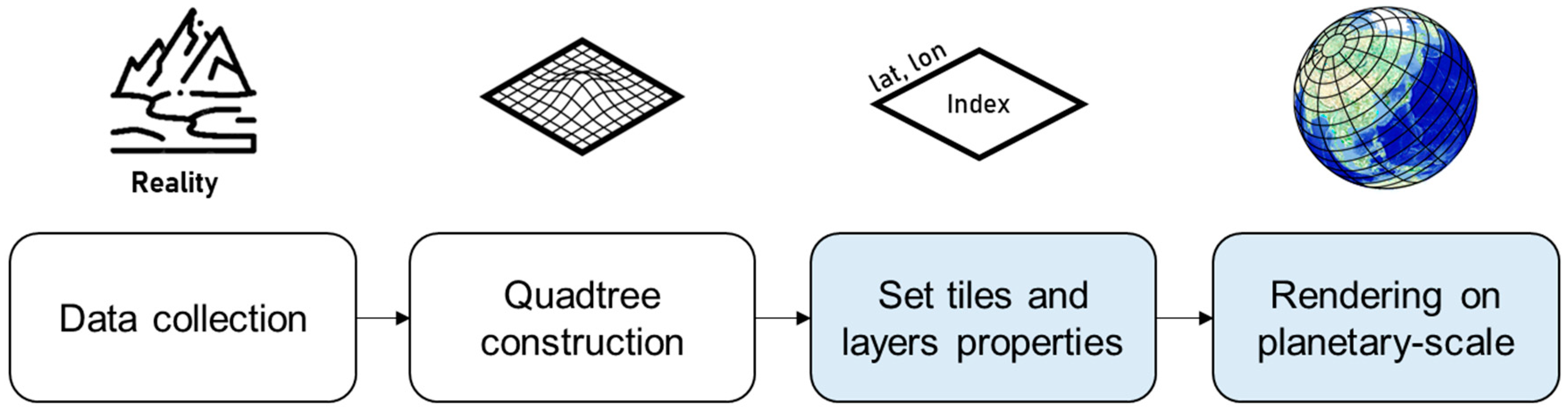

2. Methods: Unity3D-Based Platform

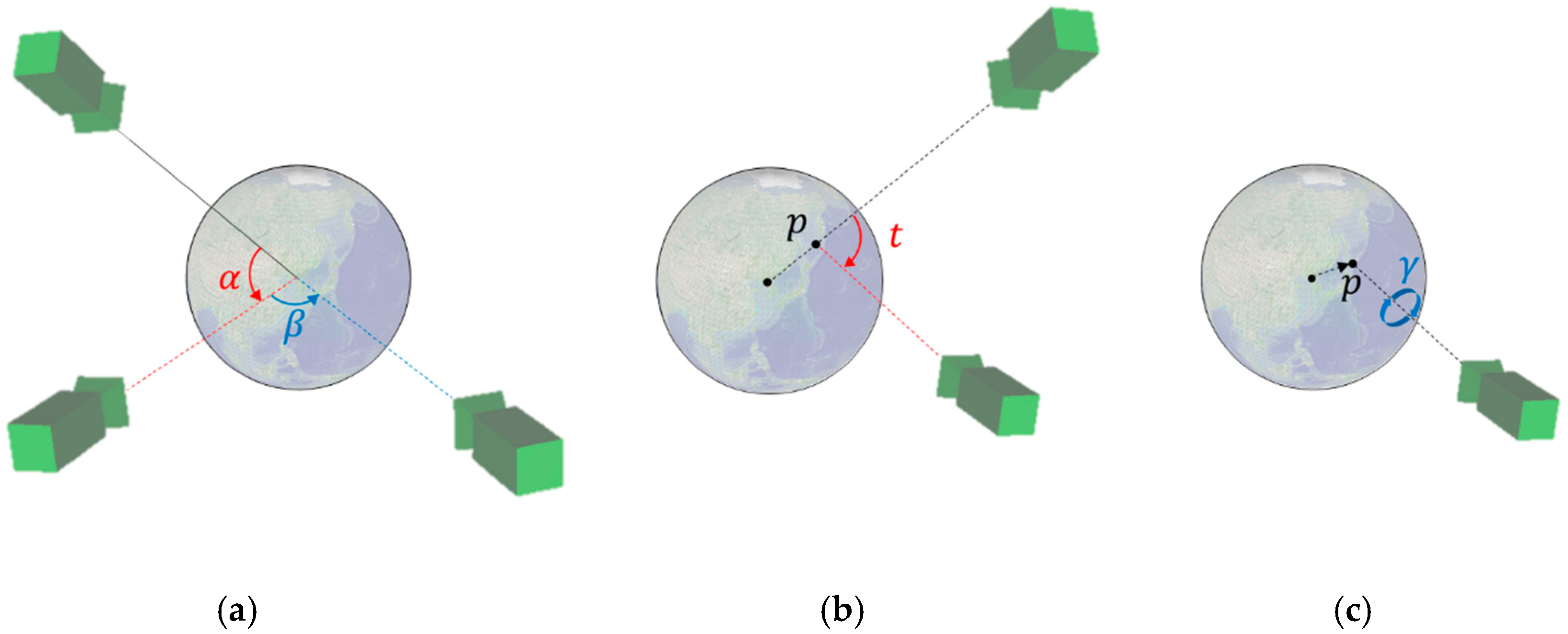

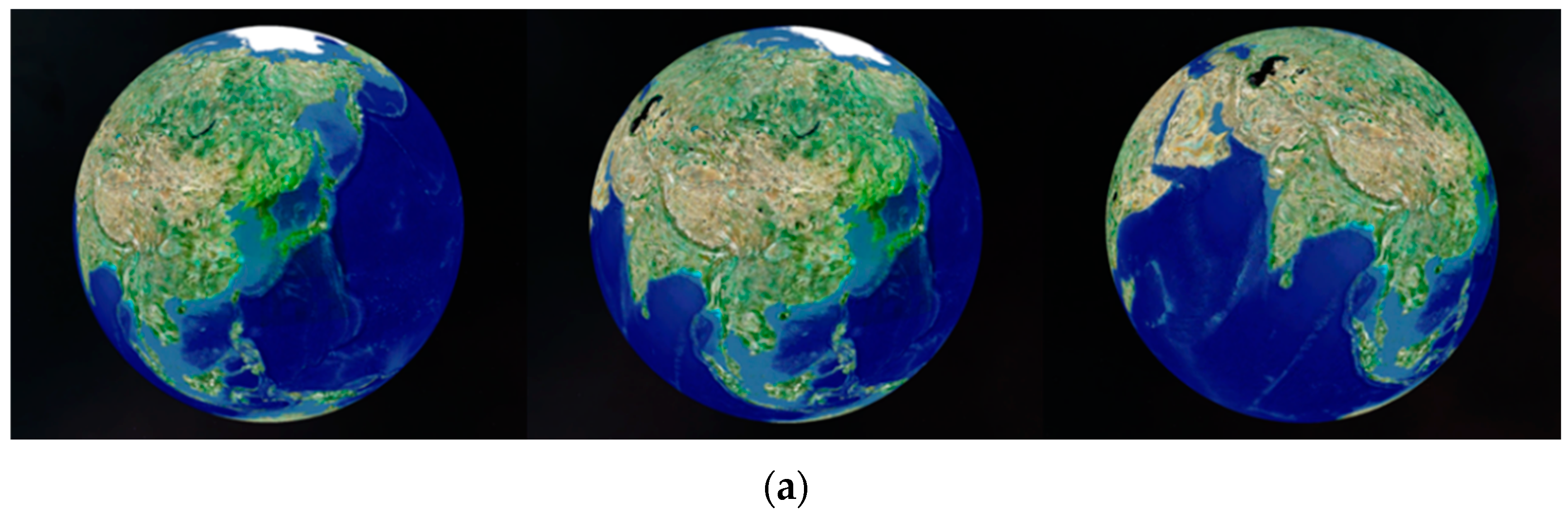

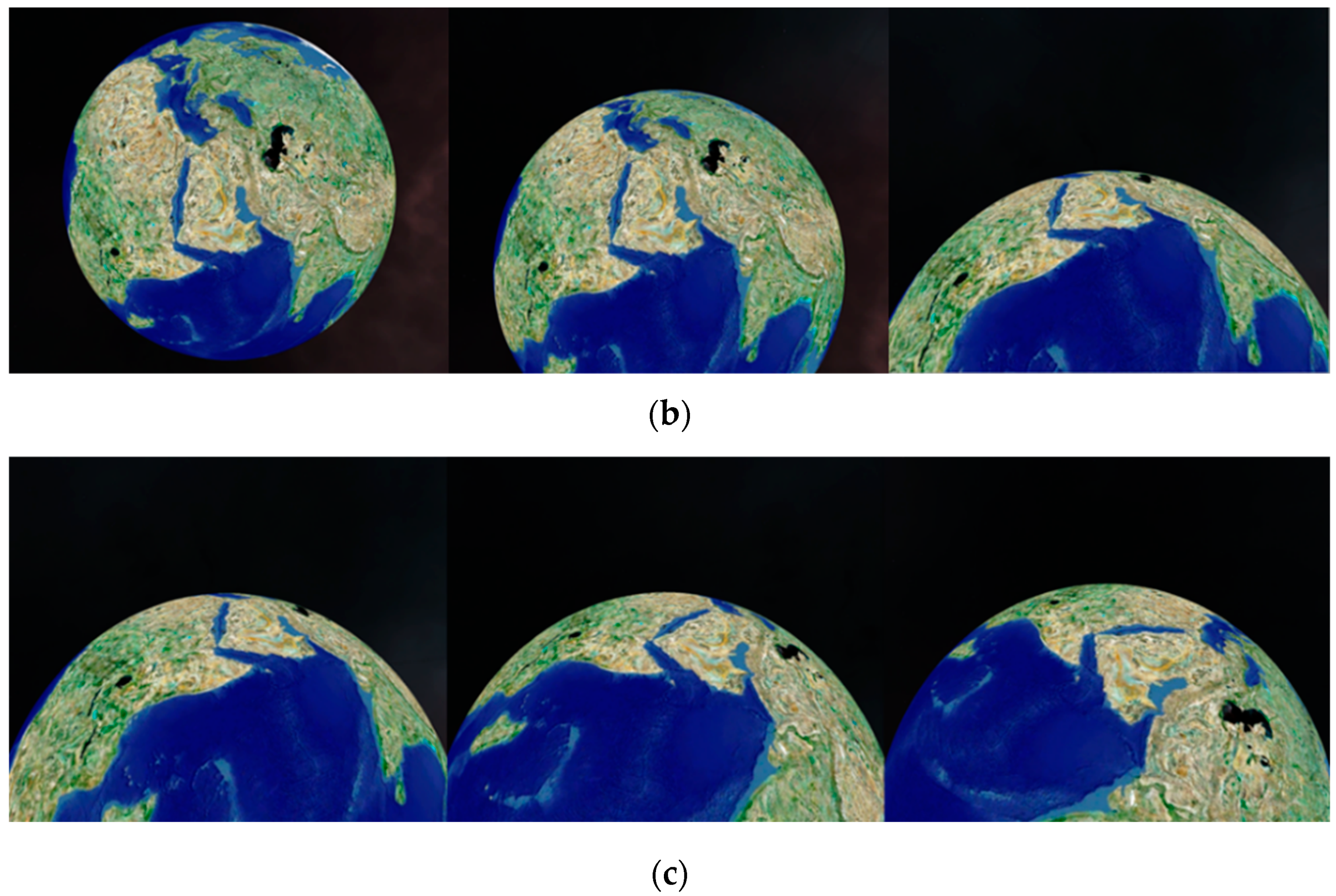

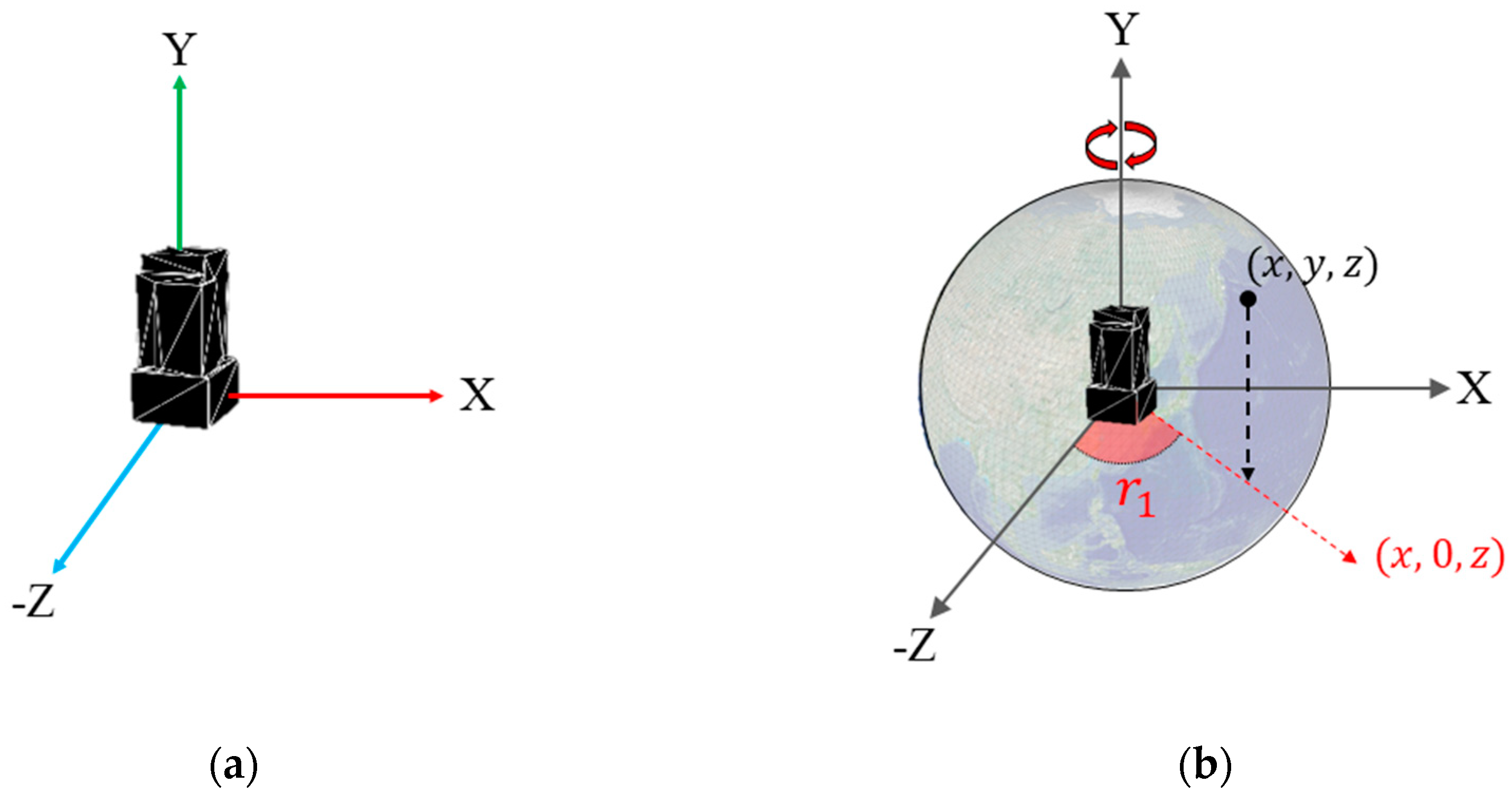

2.1. Camera Control

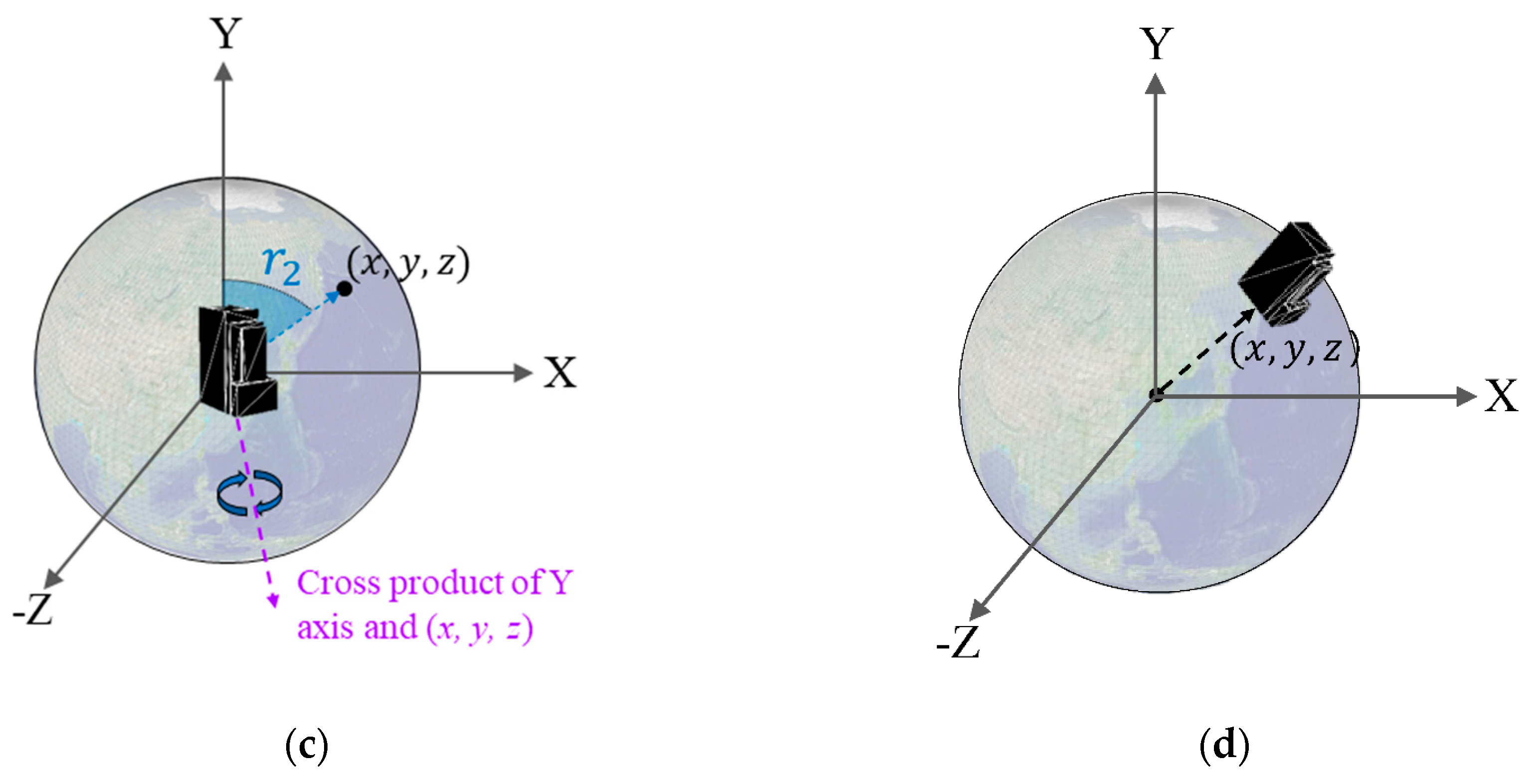

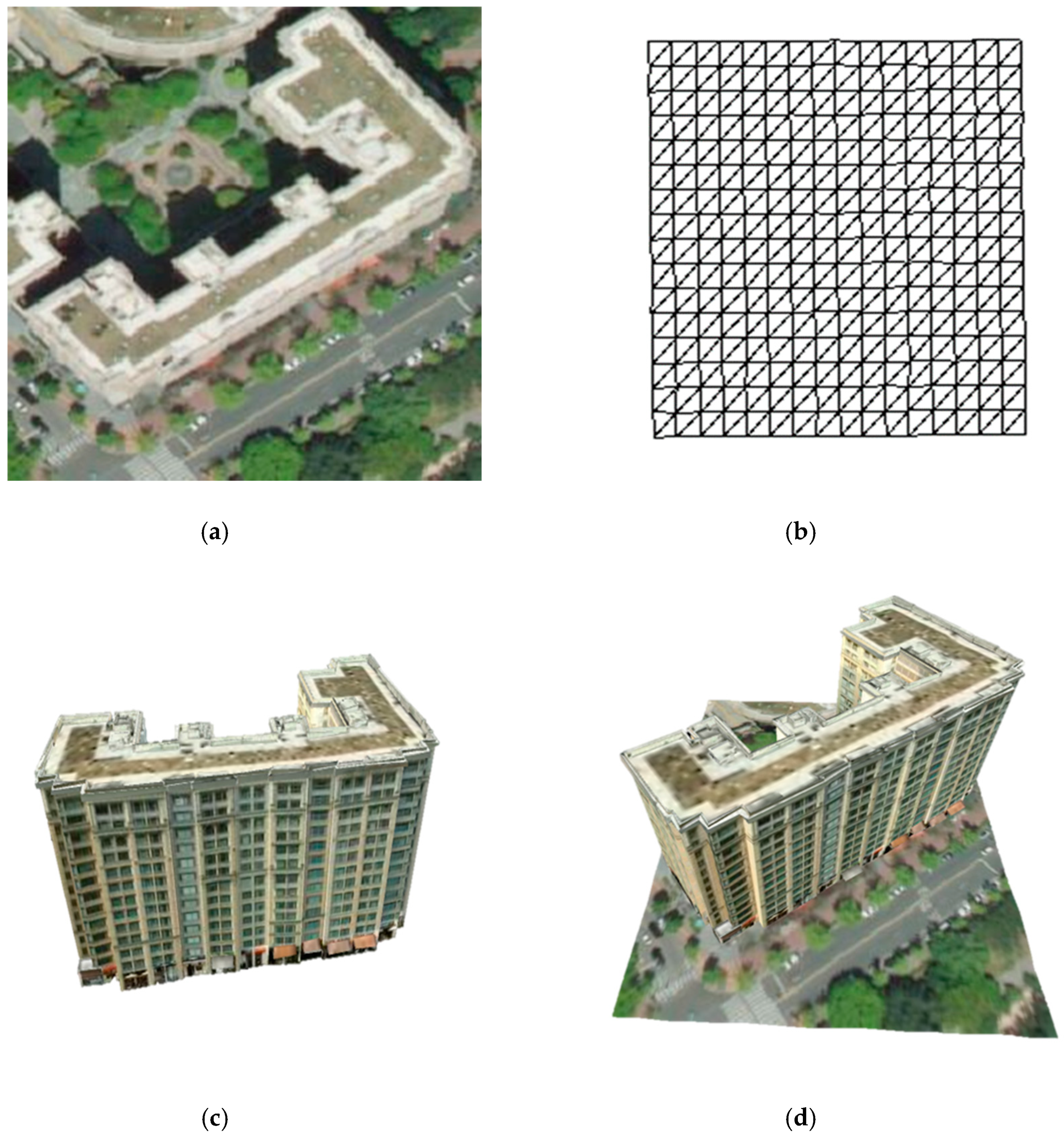

2.2. Positioning Geospatial Model

3. Methods and Results: Data Management and Rendering

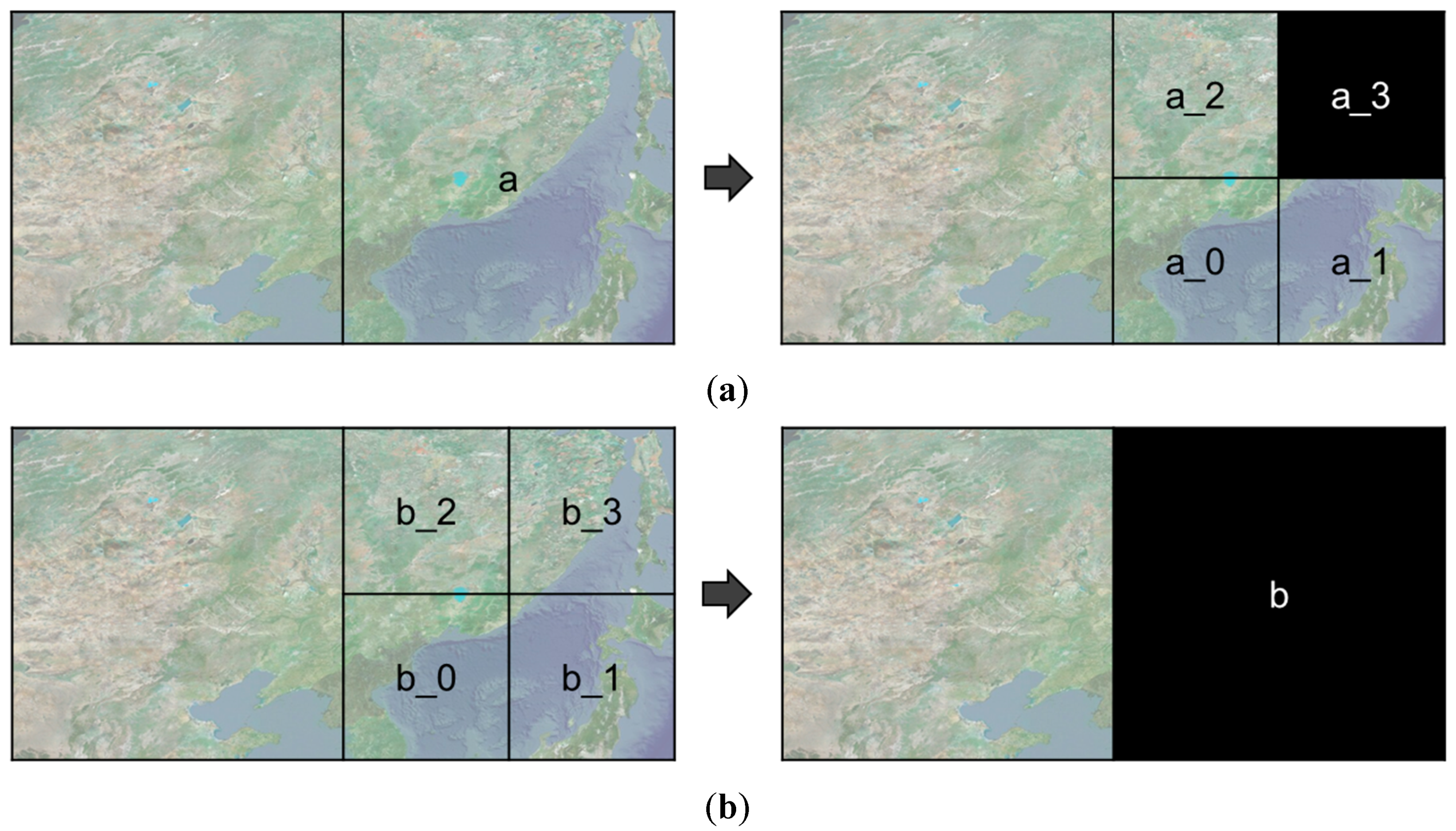

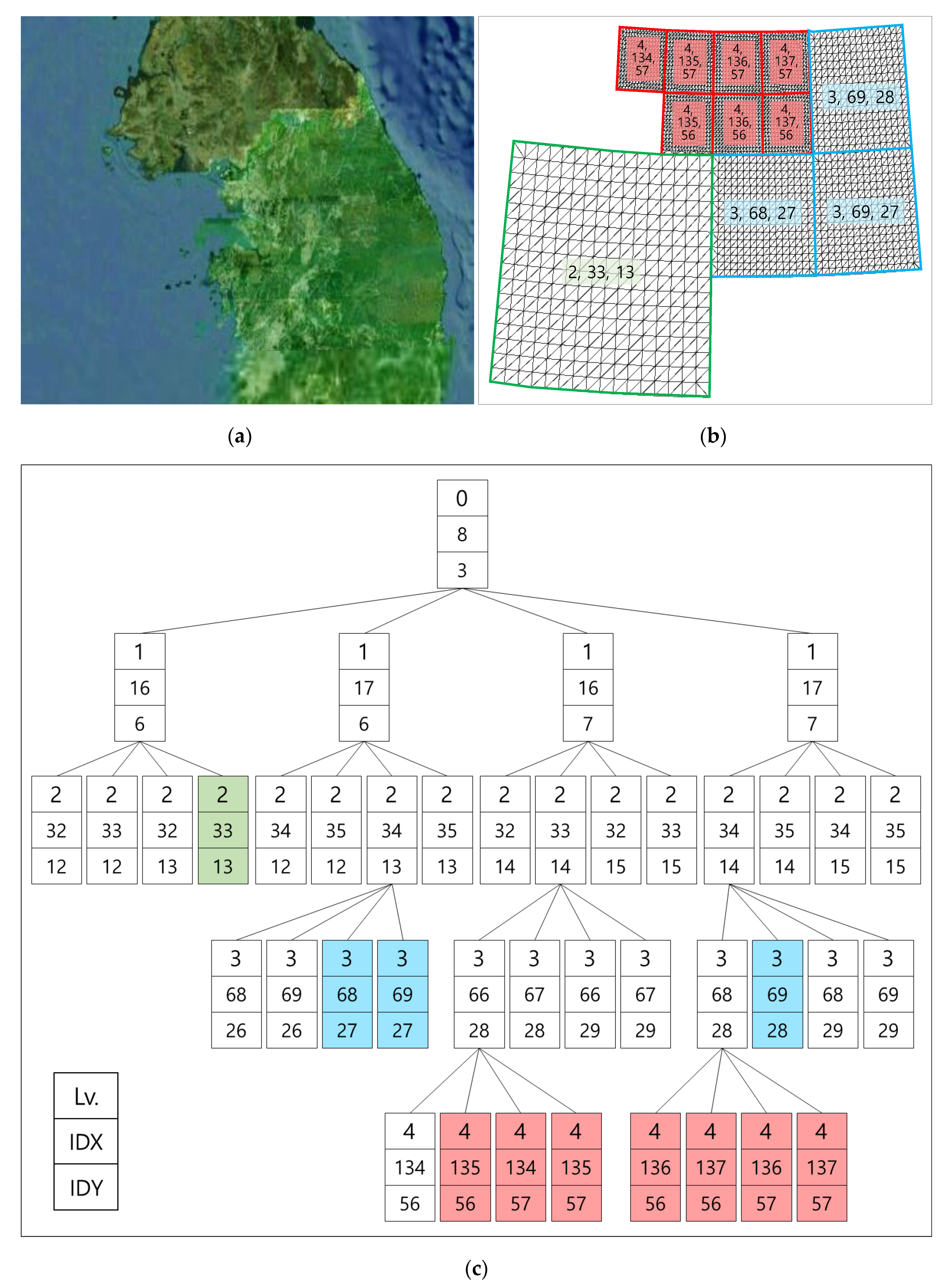

3.1. Sector

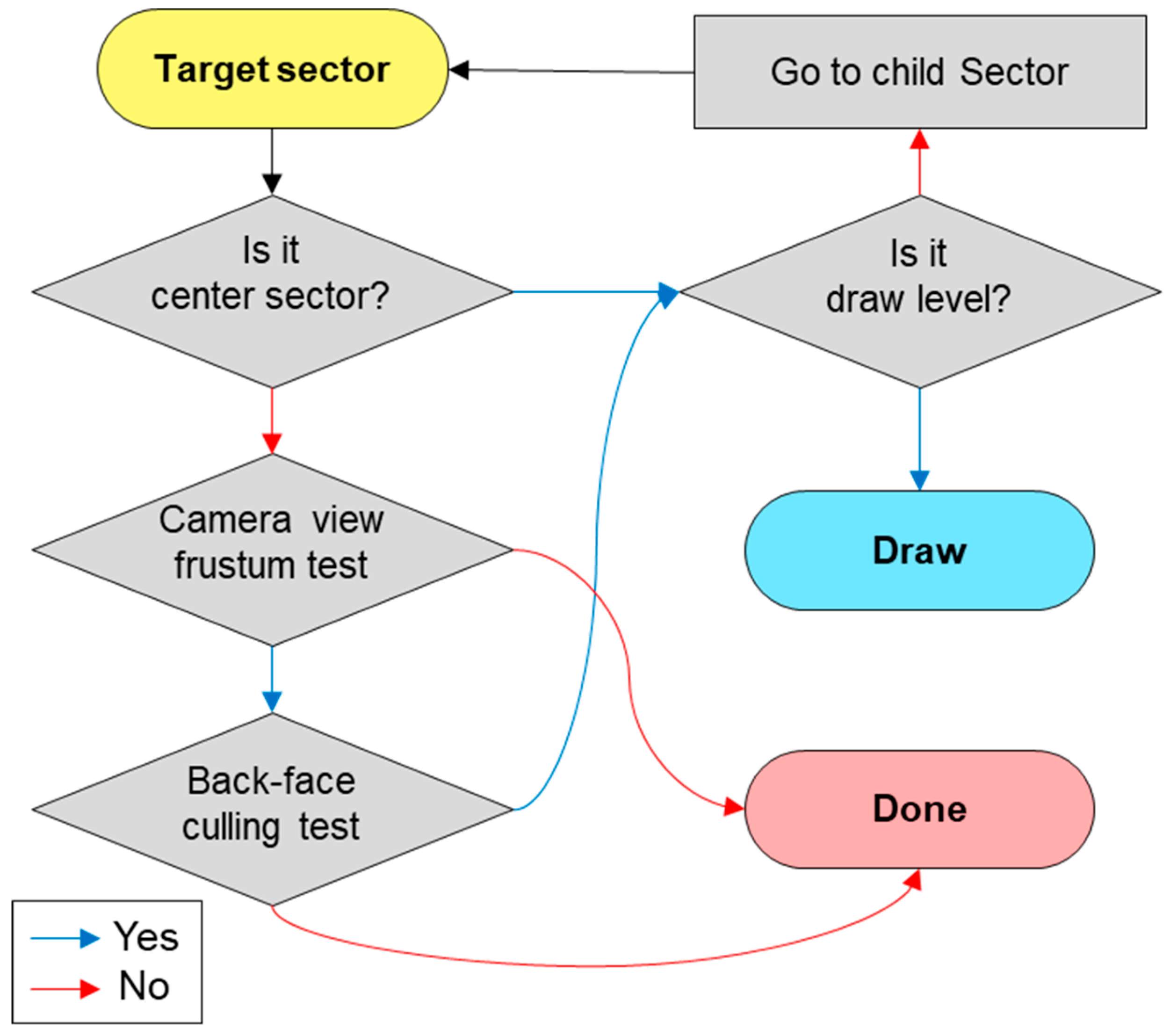

3.2. Determination of the Rendering Sectors

- A parent sector is one level lower than its child sectors and covers the area of its four child sectors.

- Child sectors are one level higher than their parent sector; they are obtained by dividing the parent sector into four.

- A sector is "created" once its data have been requested and downloaded and the sector has been instantiated as a game object in Unity3D.

- A child sector can be created only after its parent sector has been created. Accordingly, every created sector except the level 0 sectors has a created parent sector.

- When a new layer is added, every created sector reverts to a state in which its creation is incomplete. The sectors are then completed again by requesting data for the newly added layer, starting from level 0.

- A sector other than a level 0 sector can be rendered only if its sibling sectors, that is, the child sectors that share its parent sector, have also been created. If no child sector has been created, the parent sector is the rendering target.
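The rules above can be illustrated with a minimal Python sketch of a quadtree of sectors. It is not the platform's Unity3D implementation: the `Sector` class, the `(level, x, y)` key, and the functions `subdivide`, `create`, and `rendering_sectors` are hypothetical names chosen for illustration. The sketch also assumes that every created child is wanted for display, so it ignores any camera-dependent level selection and the layer-reset rule.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical addressing scheme: (level, x, y). Not taken from the paper.
SectorKey = Tuple[int, int, int]


@dataclass(eq=False)
class Sector:
    key: SectorKey
    created: bool = False
    parent: Optional["Sector"] = field(default=None, repr=False)
    children: List["Sector"] = field(default_factory=list, repr=False)

    def subdivide(self) -> List["Sector"]:
        """Attach the four child sectors, one level higher than this sector."""
        level, x, y = self.key
        self.children = [
            Sector((level + 1, 2 * x + dx, 2 * y + dy), parent=self)
            for dy in (0, 1)
            for dx in (0, 1)
        ]
        return self.children

    def create(self) -> None:
        """Mark the sector as created (data downloaded, object instantiated)."""
        # A child may be created only after its parent; level 0 has no parent.
        if self.parent is not None and not self.parent.created:
            raise ValueError("parent sector must be created first")
        self.created = True


def rendering_sectors(sector: Sector) -> List[Sector]:
    """Return the sectors to draw for the subtree rooted at a created sector."""
    kids = sector.children
    # Descend only when all four siblings are created; otherwise draw the parent.
    if len(kids) == 4 and all(k.created for k in kids):
        result: List[Sector] = []
        for kid in kids:
            result.extend(rendering_sectors(kid))
        return result
    return [sector]


if __name__ == "__main__":
    root = Sector((0, 0, 0))
    root.create()
    children = root.subdivide()
    for child in children[:3]:
        child.create()
    # Only three of four siblings exist, so the parent is still drawn.
    print([s.key for s in rendering_sectors(root)])
    children[3].create()
    # All four siblings exist, so they replace the parent.
    print([s.key for s in rendering_sectors(root)])
```

Requiring all four siblings before switching away from the parent means the area covered by the parent is never drawn with a missing quadrant while child data are still downloading.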

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Boschert, S.; Rosen, R. Digital twin—The simulation aspect. In Mechatronic Futures; Springer: Cham, Switzerland; New York, NY, USA, 2016; pp. 59–74.

- Schleich, B.; Anwer, N.; Mathieu, L.; Wartzack, S. Shaping the digital twin for design and production engineering. CIRP Ann. 2017, 66, 141–144.

- Grieves, M.; Vickers, J. Digital twin: Mitigating unpredictable, undesirable emergent behavior in complex systems. In Transdisciplinary Perspectives on Complex Systems; Springer: Cham, Switzerland; New York, NY, USA, 2016; pp. 85–113.

- Mohammadi, N.; Taylor, J. Knowledge discovery in smart city digital twins. In Proceedings of the 53rd Hawaii International Conference on System Sciences, Hawaii, HI, USA, 7–10 January 2020.

- Alam, K.M.; Saddik, A.E. C2PS: A digital twin architecture reference model for the cloud-based cyber-physical systems. IEEE Access 2017, 5, 2050–2062.

- Ignatius, M.; Martin, M.; Wong, N.H. Developing Bescam: Integration of virtual Singapore with building energy simulation and urban canopy modeling for climate and district energy demand assessment. In Proceedings of the AMS International Conference on Urban Climate/14th Symposium on the Urban Environment, New York, NY, USA, 6–10 August 2018.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Tian, F.; Wu, B.; Zeng, H.; Zhang, X.; Xu, J. Efficient identification of corn cultivation area with multitemporal synthetic aperture radar and optical images in the Google Earth engine cloud platform. Remote Sens. 2019, 11, 629.

- He, B.; Mo, W.X.; Hu, J.X.; Yang, G.; Lu, G.J.; Liu, Y.Q. Development of power grid Web3D GIS based on Cesium. In Proceedings of the 2016 IEEE PES Asia-Pacific Power and Energy Engineering Conference (APPEEC), Xi’an, China, 25–28 October 2016; pp. 2465–2469.

- Müller, R.D.; Qin, X.; Sandwell, D.T.; Dutkiewicz, A.; Williams, S.E.; Flament, N.; Seton, M. The GPlates portal: Cloud-based interactive 3D visualization of global geophysical and geological data in a web browser. PLoS ONE 2016, 11, e0150883.

- Gutbell, R.; Pandikow, L.; Kuijper, A. Web-based visualization component for geo-information. In International Conference on Human Interface and the Management of Information; Springer: Cham, Switzerland; New York, NY, USA, 2018; pp. 23–35.

- Wood, L. Programming the Web: The W3C DOM specification. IEEE Internet Comput. 1999, 3, 48–54.

- Zhu, C.; Tan, E.C.; Chan, T.K.Y. 3D Terrain visualization for Web GIS. Geospat. World 2009. Available online: https://www.geospatialworld.net/article/3d-terrain-visualization-for-web-gis/ (accessed on 20 October 2020).

- Angel, E.; Shreiner, D. Geometric objects and transformations. In Interactive Computer Graphics with WebGL, 7th ed.; Addison-Wesley Professional: Boston, MA, USA, 2014; pp. 165–244.

- McQuire, S. One map to rule them all? Google Maps as digital technical object. Commun. Public 2019, 4, 150–165.

- Van Maren, G.; Shephard, N.; Schubiger, S. Developing with Esri CityEngine. In Proceedings of the ESRI Developer Summit, San Diego, CA, USA, 26–29 March 2012.

- Buyuksalih, I.; Bayburt, S.; Buyuksalih, G.; Baskaraca, A.P.; Karim, H.; Rahman, A.A. 3D modelling and visualization based on the unity game engine—Advantages and challenges. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, IV-4/W4, Safranbolu, Karabuk, Turkey, 14–15 October 2017; pp. 161–166.

- Wang, S.; Mao, Z.; Zeng, C.; Gong, H.; Li, S.; Chen, B. A new method of virtual reality based on Unity3D. In Proceedings of the IEEE International Conference on Geoinformatics, Beijing, China, 18–20 June 2010; pp. 1–5.

- Xie, J. Research on key technologies base Unity3D game engine. In Proceedings of the IEEE International Conference on Computer Science & Education (ICCSE), Melbourne, Australia, 14–17 July 2012; pp. 695–699.

- Koh, E.; Park, G.; Lee, B.; Kim, D.; Sung, S. Performance Validation and Comparison of range/INS integrated system in urban navigation environment using Unity3D and PILS. In Proceedings of the 2020 IEEE/ION Position, Location and Navigation Symposium (PLANS), Portland, OR, USA, 20–23 April 2020; pp. 788–792.

- Szajna, A.; Stryjski, R.; Woźniak, W.; Chamier-Gliszczyński, N.; Kostrzewski, M. Assessment of Augmented Reality in Manual Wiring Production Process with Use of Mobile AR Glasses. Sensors 2020, 20, 4755.

- Lee, A.; Jang, I. Implementation of an open platform for 3D spatial information based on WebGL. ETRI J. 2019, 41, 277–288.

- Jang, H.S.; Go, J.H.; Jung, W.R.; Jang, I.S. Performance evaluation of CDN method in V-World web service as spatial information open platform service. Spat. Inf. Res. 2016, 24, 355–364.

- Lavoué, G.; Chevalier, L.; Dupont, F. Streaming compressed 3D data on the web using JavaScript and WebGL. In Proceedings of the 18th International Conference on 3D Web Technology, San Sebastian, Spain, 20–22 June 2013; pp. 19–27.

- Suárez, J.P.; Trujillo, A.; De La Calle, M.; Gómez-Deck, D.D.; Santana, J.M. An open source virtual globe framework for iOS, Android and WebGL compliant browser. In Proceedings of the 3rd International Conference on Computing for Geospatial Research and Applications, New York, NY, USA, 1–3 July 2012; pp. 1–10.

- Congote, J.; Segura, A.; Kabongo, L.; Moreno, A.; Posada, J.; Ruiz, O. Interactive visualization of volumetric data with WebGL in real-time. In Proceedings of the 16th International Conference on 3D Web Technology, New York, NY, USA, 20–22 June 2011; pp. 137–146.

- Ham, Y.; Kim, J. Participatory Sensing and digital twin city: Updating virtual city models for enhanced risk-informed decision-making. J. Manag. Eng. 2020, 36, 04020005.

- Gregory, J. The game loop and real-time simulation. In Game Engine Architecture, 3rd ed.; CRC Press: Danvers, MA, USA, 2018; pp. 525–558.

- Li, C.; Zhao, Z.; Sun, W.; Liu, Z. A fast quadtree-based terrain crack locating method that accounts for adjacency relationships. Trans. GIS 2019, 23, 1374–1392.

- Pajarola, R. Large scale terrain visualization using the restricted quadtree triangulation. In Proceedings of the Visualization ’98, Research Triangle Park, NC, USA, 18–23 October 1998; pp. 19–26.

- Wu, J.; Yang, Y.; Gong, S.R.; Cui, Z. A new quadtree-based terrain LOD algorithm. J. Softw. 2010, 5, 769–776.

- Kraak, M.J.; Ormeling, F. Map characteristics. In Cartography: Visualization of Geospatial Data, 2nd ed.; CRC Press: Danvers, MA, USA, 2020; pp. 51–84.

- Chen, Q.; Liu, G.; Ma, X.; Mariethoz, G.; He, Z.; Tian, Y.; Weng, Z. Local curvature entropy-based 3D terrain representation using a comprehensive Quadtree. ISPRS J. Photogramm. Remote Sens. 2018, 139, 30–45.

| Step | Time on desktop with GPU (graphics processing unit) (ms) | Time on notebook with GPU (ms) | Time on notebook without GPU (ms) |
|---|---|---|---|
| Camera control | 0.0092 | 0.0123 | 0.0375 |
| Find draw sectors | 0.2202 | 0.2551 | 0.5930 |
| Activate draw sectors | 0.6741 | 0.8201 | 2.8769 |
| Frame time in ms (frame rate in fps) | 6.0959 (164.04) | 8.8838 (112.56) | 15.4913 (64.55) |
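
The last row of the timing table pairs two numbers per machine. Reading the first as the total time per frame in milliseconds and the parenthesized value as the resulting frame rate is an interpretation rather than something stated in the table, but the arithmetic supports it, since fps = 1000 / (ms per frame). A short Python check, with `frame_rate_fps` as a hypothetical helper name:

```python
def frame_rate_fps(frame_time_ms: float) -> float:
    """Convert a per-frame time in milliseconds to frames per second."""
    if frame_time_ms <= 0:
        raise ValueError("frame time must be positive")
    return 1000.0 / frame_time_ms


# First number of the last table row for each machine (assumed ms per frame).
frame_times_ms = {
    "desktop with GPU": 6.0959,
    "notebook with GPU": 8.8838,
    "notebook without GPU": 15.4913,
}

for machine, ms in frame_times_ms.items():
    print(f"{machine}: {frame_rate_fps(ms):.2f} fps")
# desktop with GPU: 164.04 fps
# notebook with GPU: 112.56 fps
# notebook without GPU: 64.55 fps
```

Under the same reading, the three listed steps add up to about 0.90 ms on the desktop (0.0092 + 0.2202 + 0.6741), a small part of the 6.0959 ms frame.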

| | Google Earth [7] | VWorld Service Site [22] | Game Solution of Google Maps [15] | Proposed Platform |
|---|---|---|---|---|
| Development environment | web | web | Unity3D | Unity3D |
| Scale | planetary-scale | planetary-scale | city scale | planetary-scale |
| Provides geospatial data | yes | yes | yes | no (VWorld) |
| Texture resolution of buildings | low | high | low | - |
| Supported locations | global | South Korea, North Korea, and global (only terrains) | global | - |
| Tiled data with LOD management | yes | yes | no | yes |
| External tiled data usage | limited | limited | limited | supported |
| Price | free | free | USD 200 (monthly usage) | free |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, A.; Chang, Y.-S.; Jang, I. Planetary-Scale Geospatial Open Platform Based on the Unity3D Environment. Sensors 2020, 20, 5967. https://doi.org/10.3390/s20205967
