3.1. Find Gaps of the Environment

The sensor employed in the proposed approach is a 2D LIDAR. The LIDAR is located at the center of the robot with a field of view (FOV) of

(

Figure 2). The LIDAR emits beams evenly spaced across its entire FOV. The angle between two adjacent beams is referred to as the LIDAR resolution, denoted in this paper as

.

is the farthest detection distance of the LIDAR. For each beam with the beam number

i,

and

are the measured angle and the distance respectively.

in

Figure 2 is the measured distance of beam number

.

Define the heading of the robot as 0 degrees, then

is calculated as:

where

, with

N being the total number of the LiDAR beams calculated as:
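As a minimal sketch of this beam convention, the snippet below generates the beam angles under two assumptions that are not taken from the text: the beams are spread symmetrically about the heading (0 degrees), and the beam count is FOV / resolution + 1. The function name `beam_angles` and its parameters are hypothetical.

```python
def beam_angles(fov_deg, resolution_deg):
    """Return (n, angles) for beams evenly spaced over the FOV.

    Assumptions (not from the paper): beam 0 points at -FOV/2, the last
    beam at +FOV/2, and the robot heading corresponds to 0 degrees.
    """
    n = int(round(fov_deg / resolution_deg)) + 1  # assumed beam count
    start = -fov_deg / 2.0
    angles = [start + i * resolution_deg for i in range(n)]
    return n, angles
```

For example, a 180 degree FOV with a 1 degree resolution gives 181 beams under these assumptions, with the middle beam aligned with the heading.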

Similar methods that navigate a robot by identifying gaps using sensor data have been proposed in [

17,

18]. However, these methods fail when faced with dense obstacles. This paper presents a more general method for identifying gaps, which can find suitable gaps between densely interlaced obstacles.

Figure 2 shows that the values of

and

are very close, but there is a large difference between

and

. Because the adjacent beams

m and

are directed at the same object, the smaller the LIDAR resolution is, the closer the values of

and

will be. Therefore, set a constant value

. When the difference between

and

is greater than

, we consider the adjacent beams

m and

to be directed at two different objects, and we call the points where the two beams hit the objects discontinuous points. In this case, the gap between these two objects may allow the robot to pass. Therefore, the first step is to find all the discontinuous points.

(1) Finding out the discontinuous points

As mentioned above, there are discontinuous points when:

where

is constant. Obviously,

should be larger than the diameter of the robot if the robot is to pass between two discontinuous points. In theory, the value of

should be as close to the robot diameter as possible because a larger

may cause the loss of some discontinuities that allow the robot to pass. However, the LIDAR data

has deviations. If

is equal to or close to the robot diameter, even though the discontinuous points satisfy

, the real distance between the two discontinuous points may be less than the robot diameter. On the other hand, the LIDAR beams are not continuous. The farther the obstacle is from the robot, the larger the difference between

is. This effect is more pronounced when the robot is near a wall, as illustrated in

Figure 3.

For clarity, the number of LIDAR beams drawn in

Figure 3 is much smaller than the actual number of beams. We can see that the difference between

and

is greater than the robot diameter. However, the adjacent beams

m and

are directed at the same object, so there is no opening that allows the robot to pass. In this case, setting

to a larger value avoids mistakenly identifying discontinuous points. Therefore, when setting the value of

, there is a trade-off between avoiding misidentification of discontinuities and avoiding loss of discontinuities. Denser LIDAR beams also help avoid misidentification. In the following experiments, the value of

is obtained considering the error, resolution and farthest detection distance of the LIDAR.
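Step (1) can be sketched as a single pass over the scan. This is a minimal illustration rather than the authors' implementation; the names `find_discontinuities`, `distances`, and `threshold` are hypothetical, and `threshold` stands for the constant discussed above.

```python
def find_discontinuities(distances, threshold):
    """Return index pairs (m, m + 1) of adjacent beams whose measured
    distances differ by more than `threshold`.

    `distances[i]` is the range measured by beam i; `threshold` is the
    constant that should exceed the robot diameter.
    """
    pairs = []
    for m in range(len(distances) - 1):
        if abs(distances[m] - distances[m + 1]) > threshold:
            pairs.append((m, m + 1))
    return pairs
```

A larger `threshold` suppresses false discontinuities on distant walls, at the price of missing some narrow but passable openings, which is the trade-off described above.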

(2) Making sure the discontinuous position is passable

Taking into account the size of the robot, the robot can pass only if the free space at the discontinuity is large enough. As shown in

Figure 4, points A and B are discontinuous points that we found in the first step. There is a semicircle centered on point A on the right side of the line

. If any points detected by the LIDAR beams lie within the semicircle (shown in

Figure 4b), we consider that the robot cannot pass through the gap between point A and point B. As the figure shows, there is another semicircle centered at point B. The purpose of this semicircle is to eliminate the effects of erroneous LIDAR data and extremely small obstacles. In

Figure 4c, if there is a very small obstacle or an erroneous LIDAR reading at point A, checking whether any points detected by the LIDAR beams lie within the semicircle at B eliminates the interference. One LIDAR point is found in the semicircle centered on B, so there is no valid gap there.

There are no points detected by LIDAR beams within the two semicircles in

Figure 4a. Therefore, the robot can pass through the gap between point A and point B. Point C is used to represent this gap, whose coordinates can be obtained by the fact that

is the perpendicular bisector of the semicircle’s radius. Besides, the value of

is set to be slightly larger than the diameter of the robot for safety considerations. If point A or point B exceeds the maximum range of LIDAR detection, then the semicircle corresponding to this point does not need to be considered, as shown in

Figure 5.
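The semicircle test of step (2) can be sketched as follows. The geometry here is an assumption made for illustration: each semicircle is taken to be bounded by the line from the robot center through its own center (A or B) and to open toward the other discontinuous point. The function names, the `radius` parameter, and the default robot position are hypothetical, and the special case for points beyond the maximum range is omitted.

```python
import math

def in_semicircle(p, center, radius, line_from, side_ref):
    """True if p lies within `radius` of `center` and on the same side of
    the line line_from -> center as `side_ref` (assumed geometry)."""
    if math.hypot(p[0] - center[0], p[1] - center[1]) > radius:
        return False

    def side(q):
        # Sign of the 2D cross product tells which side of the line q is on.
        return ((center[0] - line_from[0]) * (q[1] - line_from[1])
                - (center[1] - line_from[1]) * (q[0] - line_from[0]))

    return side(p) * side(side_ref) > 0

def gap_is_passable(a, b, scan_points, radius, robot=(0.0, 0.0)):
    """True if no scan point (other than A and B themselves) falls inside
    either semicircle."""
    for p in scan_points:
        if p == a or p == b:
            continue
        if in_semicircle(p, a, radius, robot, b):
            return False
        if in_semicircle(p, b, radius, robot, a):
            return False
    return True
```

Checking both semicircles mirrors the argument above: a stray point at A does not by itself produce a valid gap if real obstacle points lie near B.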

(3) Making sure the passageway to the gap is not blocked

In the previous step, a gap that allows the robot to pass was found. However, moving directly toward the gap may be infeasible. As shown in

Figure 5a, we find the gap by step (2). Nevertheless, after adding two point-like obstacles (

Figure 5b), point C cannot be reached directly. Therefore, we cannot regard gap C in

Figure 5b as a valid gap. To rule out this situation, we define two sets of points

M and

N. Point set

M contains all the points detected by the LIDAR in the rectangle

, and point set N contains all the points detected by the LIDAR in the rectangle

. Checking the gaps found in step (2) against the following conditions yields the valid gaps that are not blocked:

where

is the distance between

and

. The method to obtain the elements of set M and N is to find the points that meet the following two conditions among the points detected by the LIDAR: (1) The distance from this point to the straight line

is less than

. (2) The distance from this point to the robot center

O is less than the length of the

. The sets determined by this method differ slightly from the sets M and N defined above, but this does not affect the results of this paper.
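The two membership conditions can be sketched as below. Two things are assumptions made for illustration: the straight line is taken to run from the robot center O (placed at the origin) to the gap point C, and the two sets are distinguished by which side of that line a point lies on. The names `corridor_points` and `half_width` are hypothetical.

```python
import math

def corridor_points(scan_points, c, half_width):
    """Collect scan points near the segment O -> C (robot at the origin).

    A point is kept when (1) its distance to the line through O and C is
    less than `half_width` and (2) its distance to O is less than |OC|.
    Points are split by side of the line (assumed meaning of M and N).
    """
    length = math.hypot(c[0], c[1])
    left, right = [], []
    for (x, y) in scan_points:
        signed_dist = (c[0] * y - c[1] * x) / length  # signed distance to line OC
        if abs(signed_dist) < half_width and math.hypot(x, y) < length:
            (left if signed_dist > 0 else right).append((x, y))
    return left, right
```

Because condition (2) uses the distance to O rather than the projection onto OC, the selected region is bounded by a circular arc instead of a straight edge, which is why the resulting sets differ slightly from the rectangles.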

With the above three steps, all valid gaps can be found. The next part shows how to choose one of these gaps as a sub-goal.

3.2. Selecting Sub-Goal from Gaps

Local navigation approaches are challenged by complex environments. For example, most of the local approaches cannot detour a big U-shaped obstacle. Some solutions [

19,

20] develop navigation strategies based on an empirical evaluation of trap situations, but they may still get stuck in some special environments. In [

17], the author also adopted the concept of the gap, but the sub-goal was selected from gaps by the cost function method, which sometimes produced strange trajectories. Other local approaches, such as [

21,

22,

23], did not solve the problem of navigation in complex environments. These local approaches share a common feature: they keep no memory of previously acquired sensor data. Our findings show that if a local planner does not take past sensor data into account and no global planner is available, then no improvement to the local planner can prevent it from being trapped by obstacles while still pursuing the optimal path. Therefore, many existing local approaches cannot completely solve the trapping problem, even if they claim to avoid local minima. This is illustrated in

Figure 6.

In

Figure 6, the red point and green point are the robot position and the target position, respectively. Circles drawn with dotted lines represent the identified gaps. First, consider the two images independently. Assuming that the robot can select a gap with human-level intelligence, the optimal gap and motion direction are as shown in

Figure 6. However, the problem arises when

Figure 6a is followed by

Figure 6b. In

Figure 6b, the robot arrives at the gap that was selected in

Figure 6a. However, the manually selected optimal gap is now close to the start point of

Figure 6a, causing the robot to repeat its movement. Even if the new sub-goal is derived by also taking the current motion direction into account, the problem persists.

To eliminate this defect, we improve on traditional local approaches. When selecting a new sub-goal, the proposed approach combines the current sensor data with the previous sub-goals, which effectively prevents the robot from being trapped. The method is simple: the new sub-goal avoids gaps that the robot has already passed. The remainder of this section describes how the sub-goal is selected from these gaps.

The previous part described how to find valid gaps. As shown in

Figure 4a, there is a gap (indicated by point C). This gap is found because point A, point B, and the nearby LIDAR points satisfy the three conditions above. Of points A and B, the one closer to the robot is referred to as the origin of gap C. To prevent the robot from being trapped in continuous circular motion, we create a blacklist that stores the global coordinates of the origins of previously visited sub-goals (gaps). When selecting a new sub-goal, gaps whose origin is close to a point in the blacklist are not taken into account. Furthermore, the origin of a sub-goal is stored in the list only when the robot has reached this sub-goal and then moved away from it by a certain distance of

. In this way, the robot will not return to gaps that it has already visited.
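The blacklist bookkeeping can be sketched as a small class. This is a minimal illustration under stated assumptions: `leave_distance` stands for the distance the robot must move away before an origin is stored, and `match_radius` is a hypothetical tolerance for what counts as "close to" a blacklisted point. The class and method names are also hypothetical.

```python
import math

class GapBlacklist:
    """Remembers origins of sub-goals the robot has reached and then left."""

    def __init__(self, leave_distance, match_radius):
        self.leave_distance = leave_distance  # distance the robot must move away
        self.match_radius = match_radius      # hypothetical "close to" tolerance
        self.entries = []                     # stored origins (global coordinates)
        self._pending = None                  # (origin, subgoal) reached, not yet left

    def on_subgoal_reached(self, origin, subgoal):
        """Record that the robot has arrived at a sub-goal."""
        self._pending = (origin, subgoal)

    def update(self, robot_pos):
        """Store the pending origin once the robot has left the sub-goal."""
        if self._pending is None:
            return
        origin, subgoal = self._pending
        if math.dist(robot_pos, subgoal) > self.leave_distance:
            self.entries.append(origin)
            self._pending = None

    def is_blacklisted(self, origin):
        """True if `origin` is close to any stored origin."""
        return any(math.dist(origin, e) < self.match_radius for e in self.entries)
```

Deferring the insertion until the robot has left the sub-goal keeps gaps that are still adjacent to the robot eligible, which is the behaviour the text relies on in the example that follows.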

In

Figure 7a, the optimal sub-goal that a human would select is gap A. However, our robot does not have such a high level of intelligence. If a formula is used to calculate the optimal sub-goal, a new problem arises. For example, we can select the gap with the smallest value of

as the sub-goal:

where

is the distance from the gap to the robot,

is the distance from the gap to the goal. Under this criterion, the selected sub-goal in

Figure 7a is gap B. Since the distance from the robot to gap B or D is less than

, the origins of gaps B and D are not stored in the blacklist, so gap D cannot be excluded. Consequently, once the robot reaches gap B, gap D is selected as the new sub-goal because it has the smallest value of

, see

Figure 7b. The new sub-goal D is close to the starting point, causing the robot to repeat the movement.

To solve this problem, we add a new restriction to the sub-goal: the distance between the new sub-goal and the last sub-goal should be greater than the distance between the current position and the last sub-goal. For example, in

Figure 7a, the starting point is treated as the first sub-goal, and the new sub-goal is gap B. In

Figure 7b, the robot reaches gap B. The distance between gap D and the first sub-goal (the last sub-goal) is smaller than the distance between the current position B and the first sub-goal. Therefore, gap D cannot be selected as the second sub-goal. Among the remaining gaps, gap A is selected as the second sub-goal because it has the minimum value of

.

In short, selecting a sub-goal from the gaps involves three steps:

- (1)

Exclude gaps whose origins are close to a point in the blacklist.

- (2)

Exclude gaps that are closer to the last sub-goal than the robot's current position is.

- (3)

Select the gap with the smallest value as the new sub-goal.
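The three steps above can be sketched as one function. The cost used in step (3) is an assumption made for illustration only: it is taken as the plain sum of the gap-to-robot and gap-to-goal distances, which may differ from the paper's formula. The name `select_subgoal` and the `match_radius` tolerance are hypothetical.

```python
import math

def select_subgoal(gaps, blacklist, robot, goal, last_subgoal, match_radius):
    """Pick a sub-goal from `gaps`, a list of (position, origin) tuples
    in global coordinates. Returns the chosen position or None."""
    d_last = math.dist(robot, last_subgoal)
    best, best_cost = None, float("inf")
    for position, origin in gaps:
        # Step (1): skip gaps whose origin is close to a blacklisted point.
        if any(math.dist(origin, b) < match_radius for b in blacklist):
            continue
        # Step (2): skip gaps that are not farther from the last sub-goal
        # than the robot currently is.
        if math.dist(position, last_subgoal) <= d_last:
            continue
        # Step (3): assumed cost, distance to robot plus distance to goal.
        cost = math.dist(position, robot) + math.dist(position, goal)
        if cost < best_cost:
            best, best_cost = position, cost
    return best
```

Returning `None` when every gap is excluded leaves the fallback behaviour to the caller, since the text does not specify what happens in that case.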

3.3. Motion Control

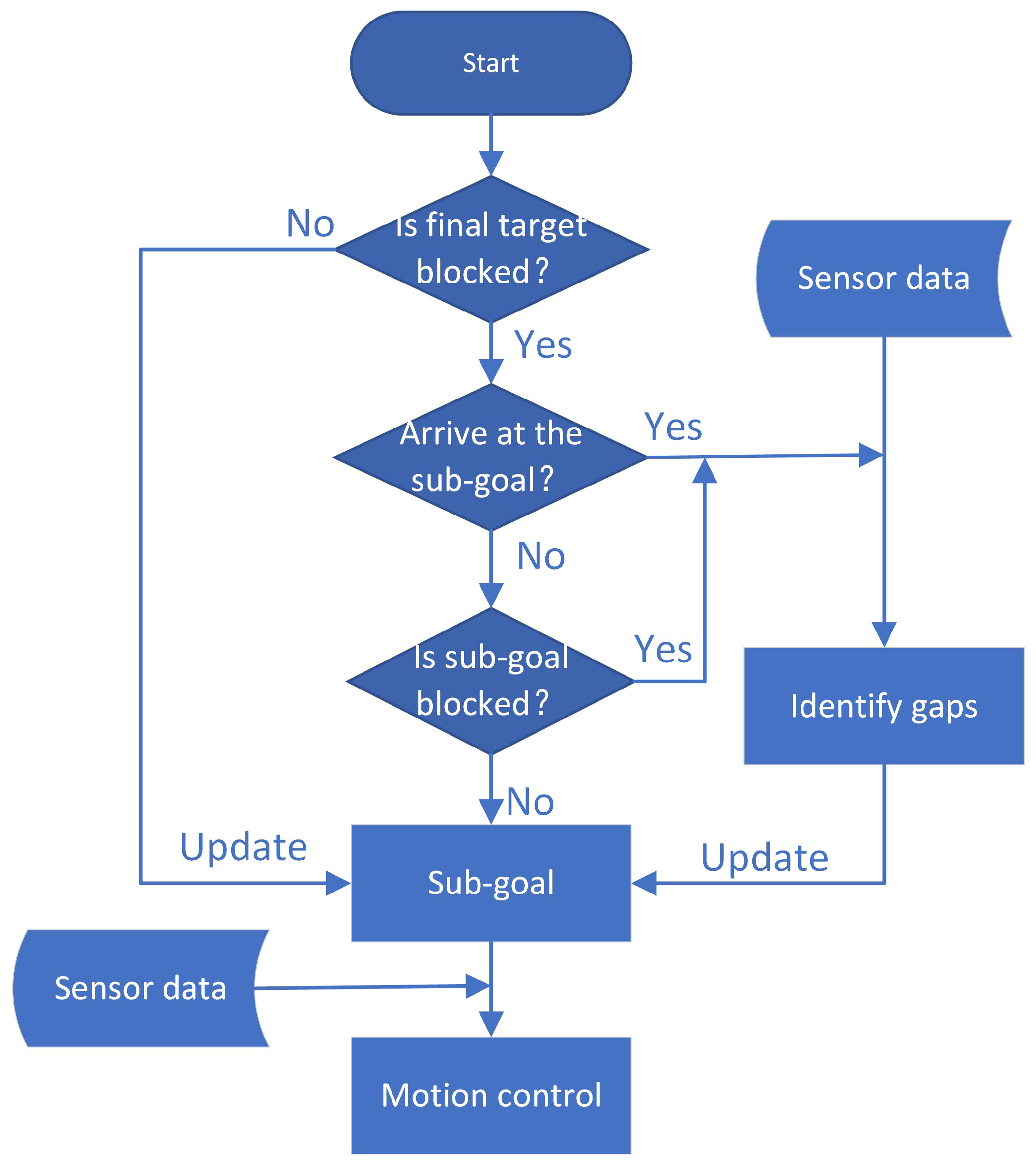

Looking back at the flow chart in

Figure 1, we can see that the sub-goal is not recalculated in every time interval. The sub-goal is updated only if the current sub-goal has been reached or is blocked. Methods for finding gaps and selecting a sub-goal from them have been described in detail above. The location of the robot can be obtained from the robot's encoder. The cumulative error has little effect on the proposed method, because previous sub-goals that are far from the current position usually do not need to be considered, while previous sub-goals close to the current position have small cumulative coordinate errors. Therefore, the global coordinates of the sub-goal can be derived, and it is easy to judge whether the sub-goal has been reached. The method of determining whether a sub-goal is blocked is described in Equation (

4). Therefore, the last problem in

Figure 1 is how to derive robot control commands based on the current sub-goal and LIDAR data.

This paper considers a wheeled mobile robot of unicycle type, in which the state variables are

x,

y (the world coordinates of the robot’s middle point), and

(angle of the vehicle with the world X-axis). The kinematics of the robot can be modeled by:
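For reference, the standard unicycle kinematic model is written out below. The symbols are assumptions chosen to match common usage: $\theta$ for the angle of the vehicle with the world X-axis, $v$ for the linear velocity, and $\omega$ for the angular velocity.

```latex
\begin{aligned}
\dot{x} &= v \cos\theta \\
\dot{y} &= v \sin\theta \\
\dot{\theta} &= \omega
\end{aligned}
```

In this model the control inputs are $v$ and $\omega$, which is why the controller described next is stated in terms of a linear and an angular velocity.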

First, we design a piecewise control input. Once the current sub-goal is updated, a turning angle is then produced to drive the robot to face the direction of the sub-goal. At this time, the linear velocity

is set to zero to avoid collisions:

When

is close to zero, we can set the linear velocity

to a fixed value and keep the robot moving towards the sub-goal:

where

are three positive gains,

is the angle of the sub-goal in the robot coordinate system.

In Equation (

7), the robot only rotates in place and therefore does not collide. However, Equation (

8) may cause collisions because of small obstacles and previously undetected obstacles. Therefore Equation (

8) should be adjusted according to real-time LIDAR data.

First of all, we define a region

D (

Figure 8) in the robot coordinate system. When obstacles are detected in area

D, the control input (Equation (

8)) should be changed accordingly.

Here,

r is the actual radius of the robot,

R is the safe radius, which is larger than

r to maintain a safe distance from the obstacle. The yellow area is

D. The method of determining whether point

belongs to area D is given by:

Then, consider a point-like obstacle, as shown in

Figure 9.

Here, point A is the detected obstacle, with polar coordinates

. If the robot passes from the left side of the point-like obstacle A and the trajectory is approximated as an arc, we can derive the radius

(Equation (

10)) of the arc where the robot center is located according to the right triangle

. Since the robot’s linear velocity is a fixed value

, the value of the angular velocity

(Equation (11)) is derived for the situation in

Figure 9.

Similarly, when the robot passes from the right side of the obstacle A, the angular velocity

is calculated by:

In general, real obstacles cannot be treated as points. As shown in

Figure 10, there is a black object in area

D and multiple points

are detected by LIDAR beams.

According to these detected points in area

D, the distance from the obstacle to the left and right borders of area

D can be calculated by:

For every point detected by LIDAR in area

D, two angular velocities can be calculated using Equations (11) and (

12). Therefore, the maximum value

and the minimum value

among these angular velocities can be derived. According to Equations (11) and (

12), we can find that the value of

can be very large when the distance

d to the obstacle is close to the value of

R. However, the angular velocity

of the robot is bounded, and its maximum and minimum values are represented by

, respectively. In this case, the linear velocity

of the robot should be reduced to prevent the angular velocity

from exceeding its bounds. In short, to detour around obstacles such as the one shown in

Figure 10, the following changes are made in contrast to Equation (

8):

correspond to the angular velocities of the robot just passing the left side and the right side of the obstacle. Is it possible that area D in the sub-goal direction is entirely blocked by the obstacle causing close to 0? No, because in each time interval we check whether the present sub-goal is blocked. If the current sub-goal is blocked, a new sub-goal is selected.

To sum up, the steps of motion control in each time interval are as follows:

- (1)

If there are no obstacles in area D, the control input is obtained from Equations (

7) and (

8). Otherwise, go to steps (2) and (3).

- (2)

Calculate values of using the points detected in area D.

- (3)

Replace Equation (

8) with Equation (

15).