Coaxiality Evaluation of Coaxial Imaging System with Concentric Silicon–Glass Hybrid Lens for Thermal and Color Imaging

Abstract

1. Introduction

2. Materials and Methods

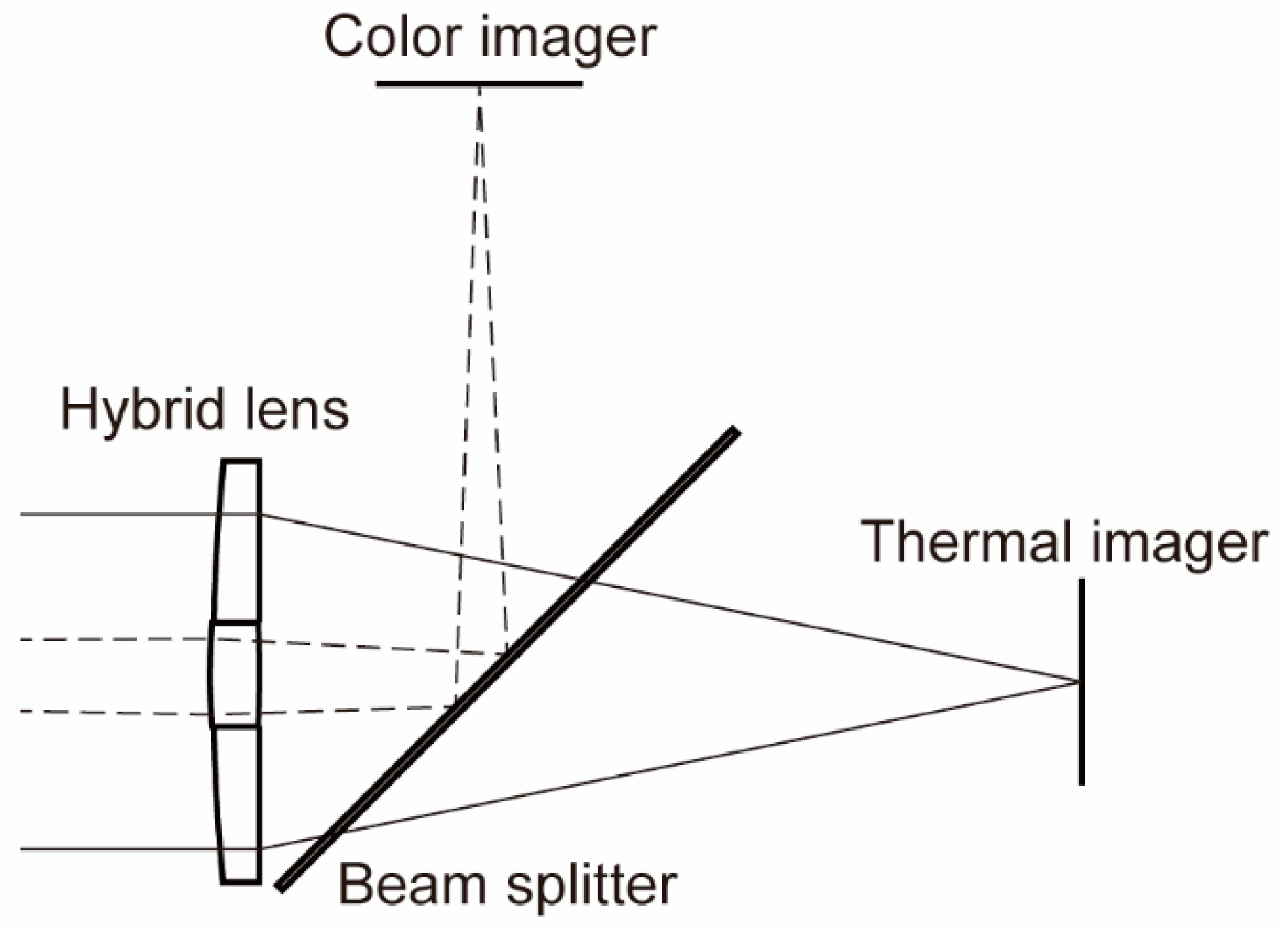

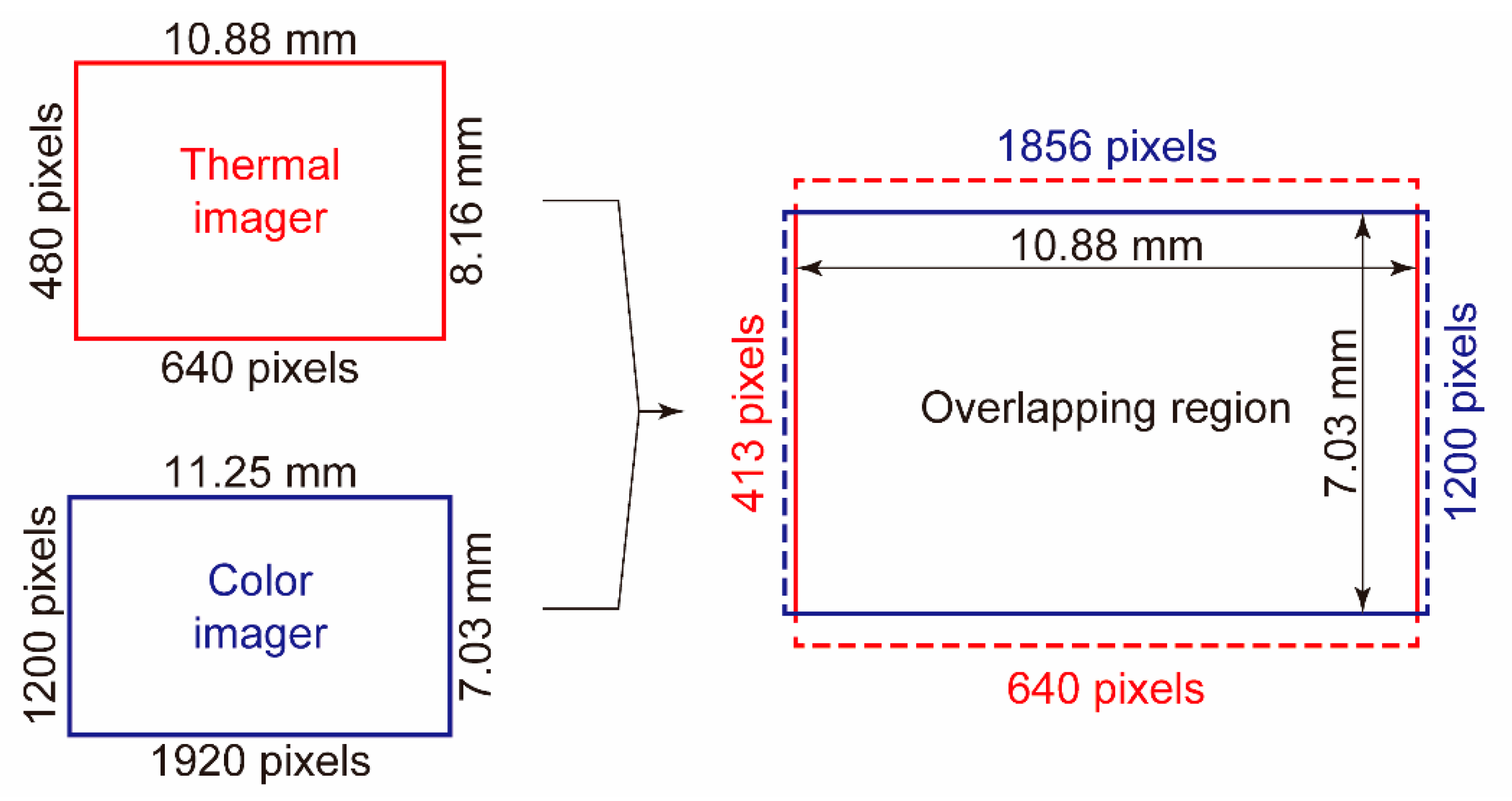

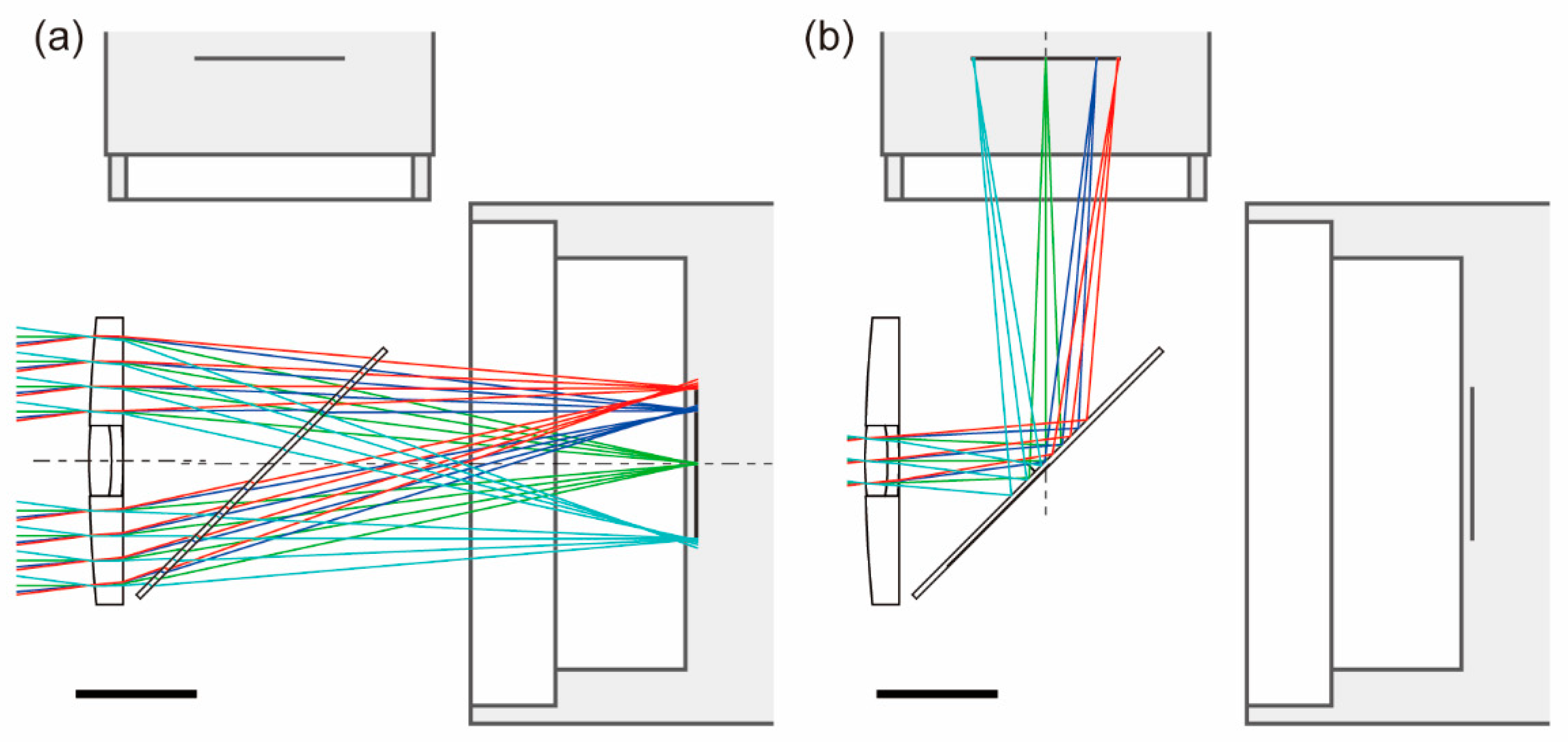

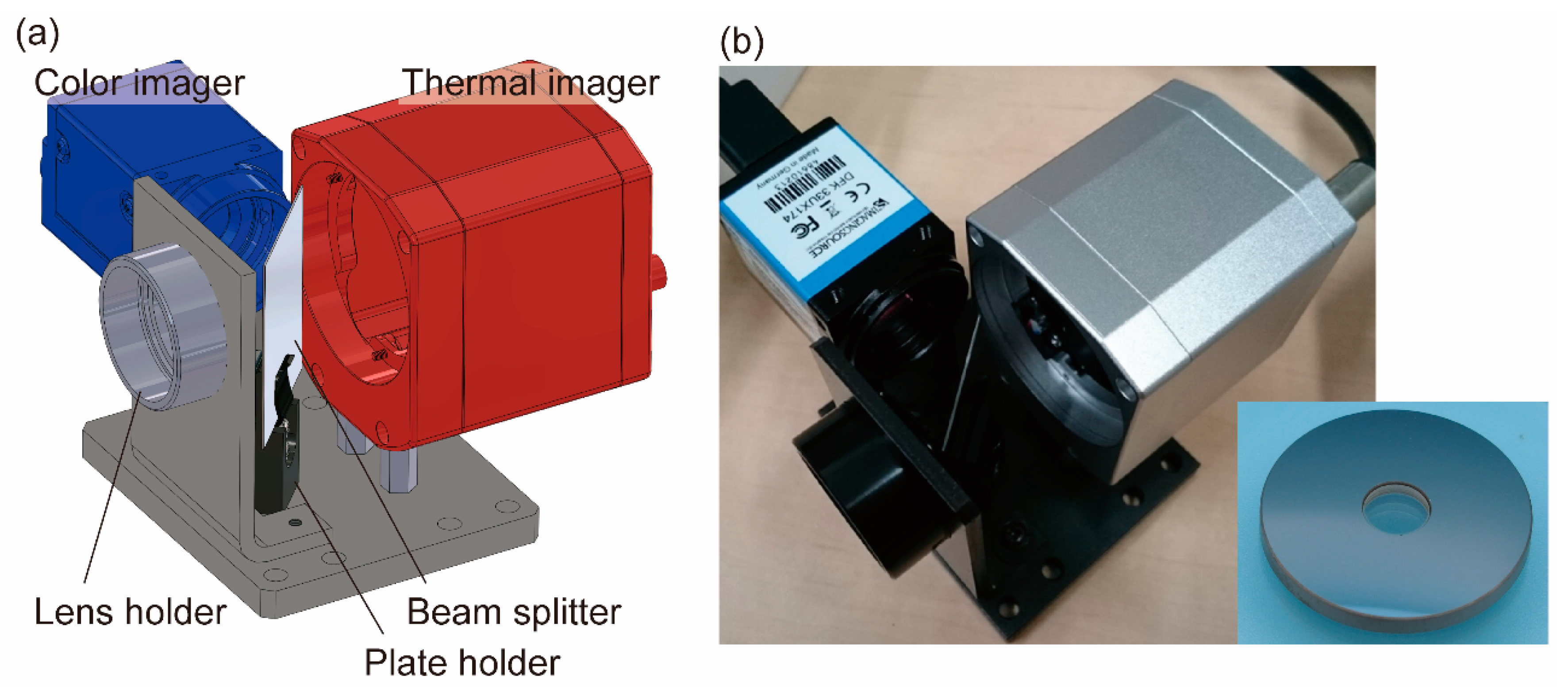

2.1. Overview of Coaxial Imaging System

2.2. Design and Implementation

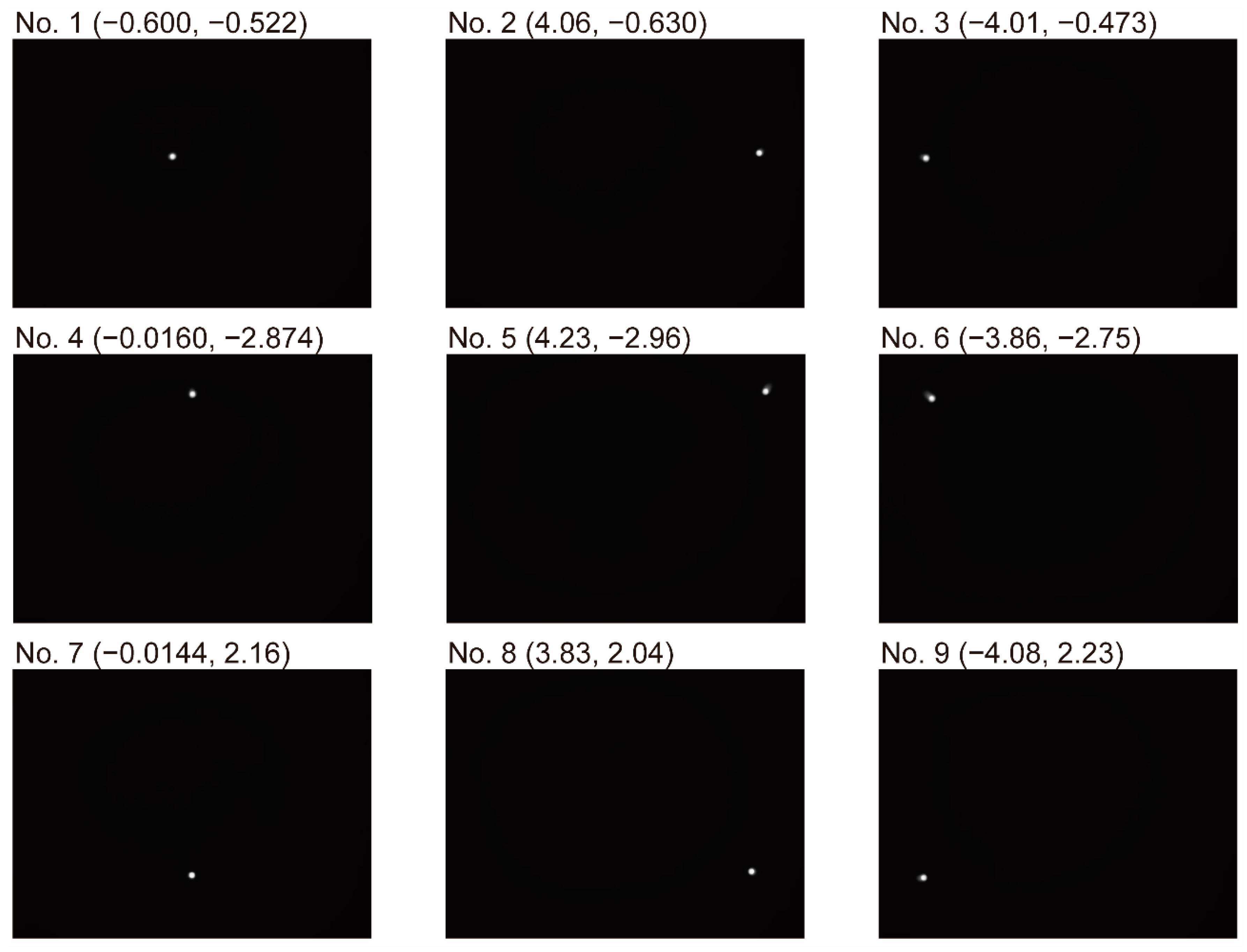

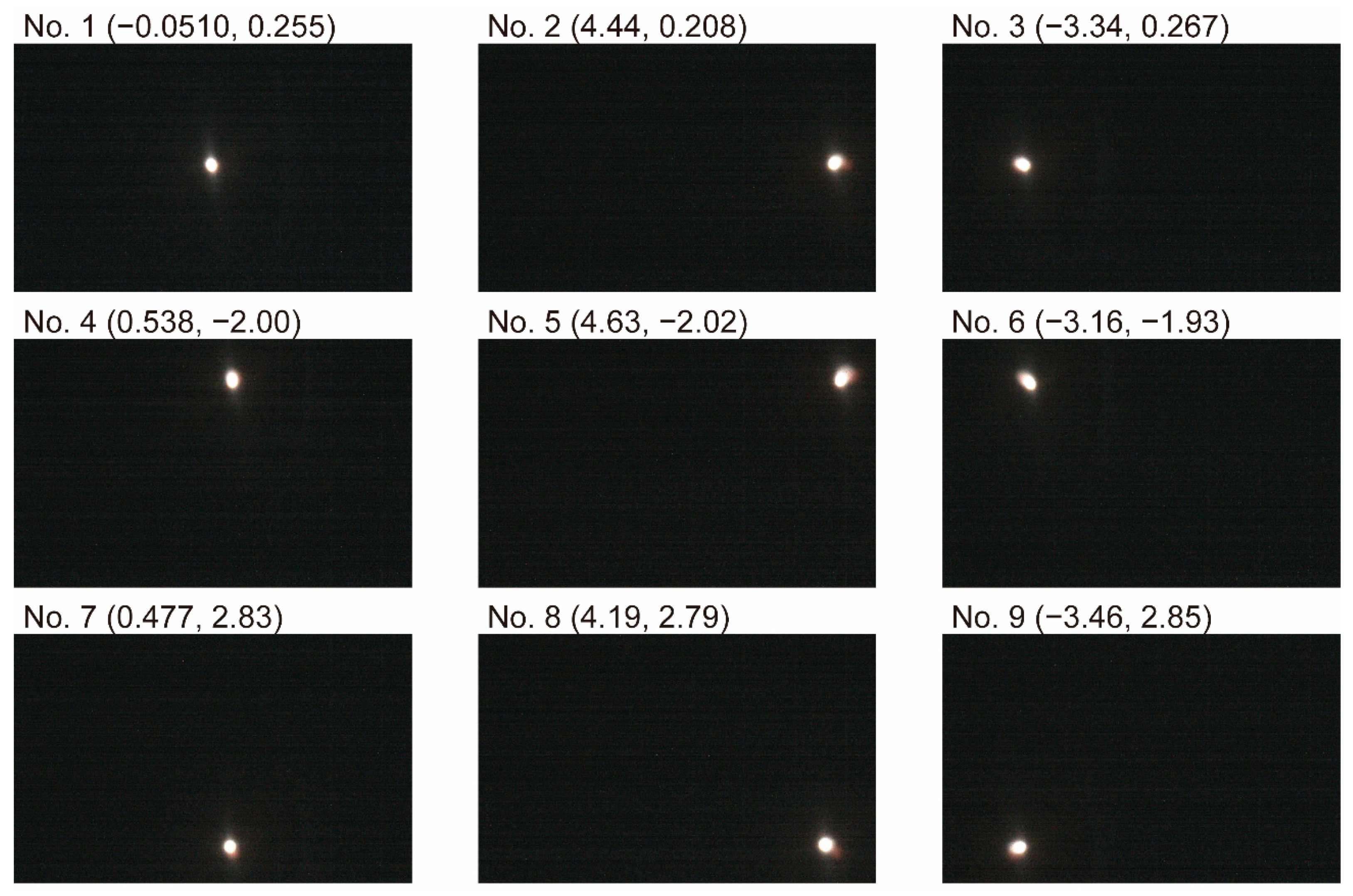

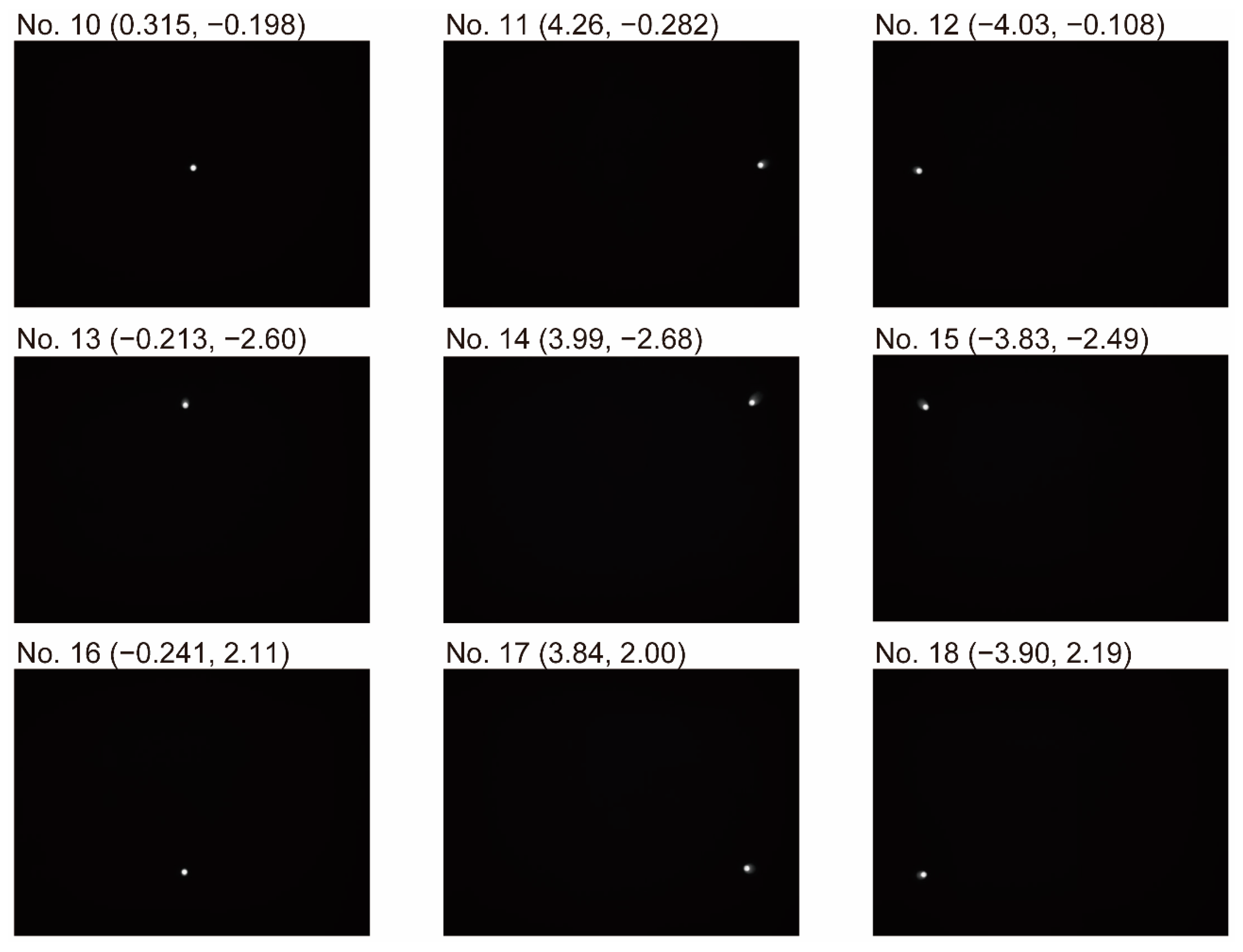

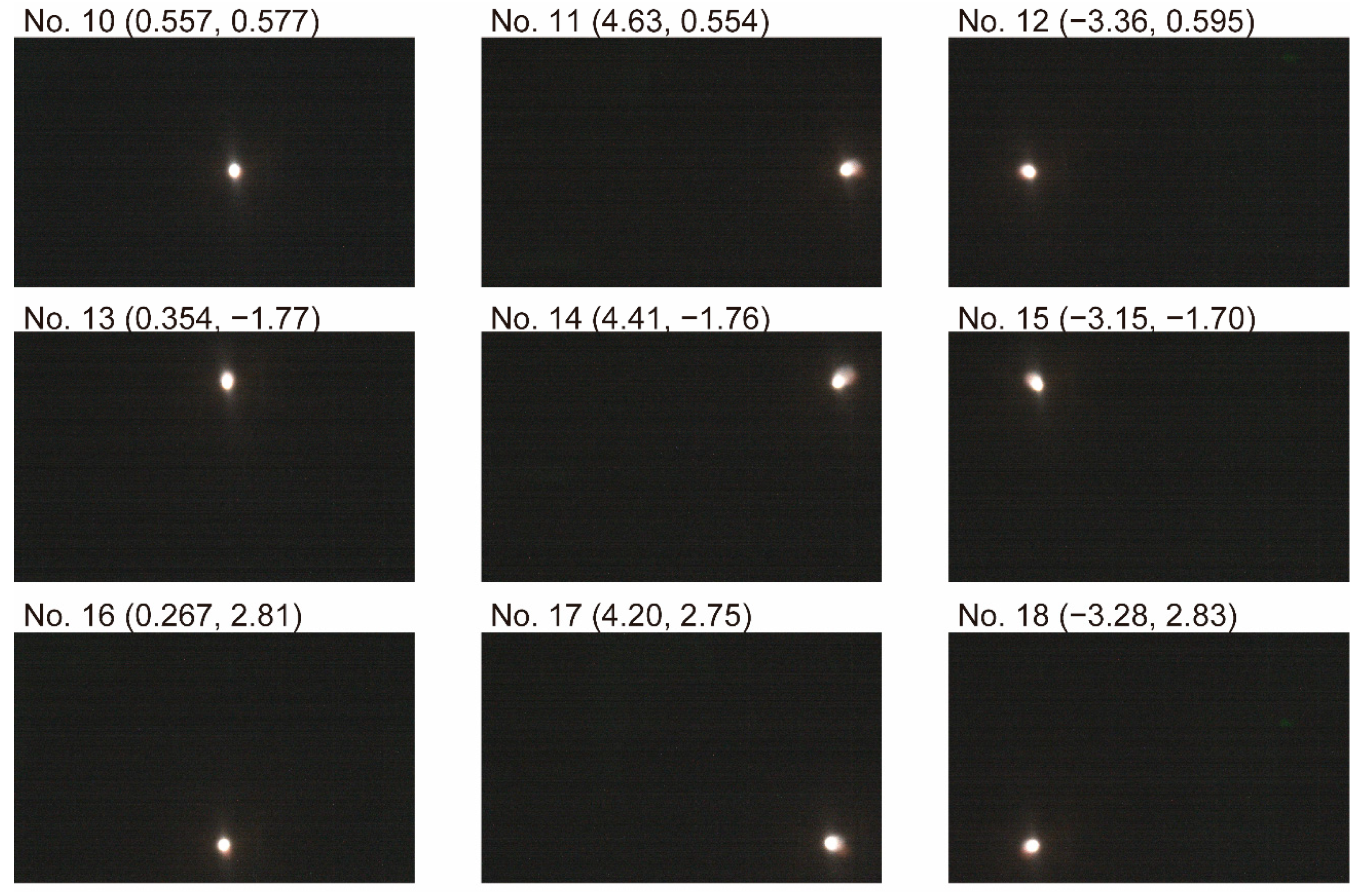

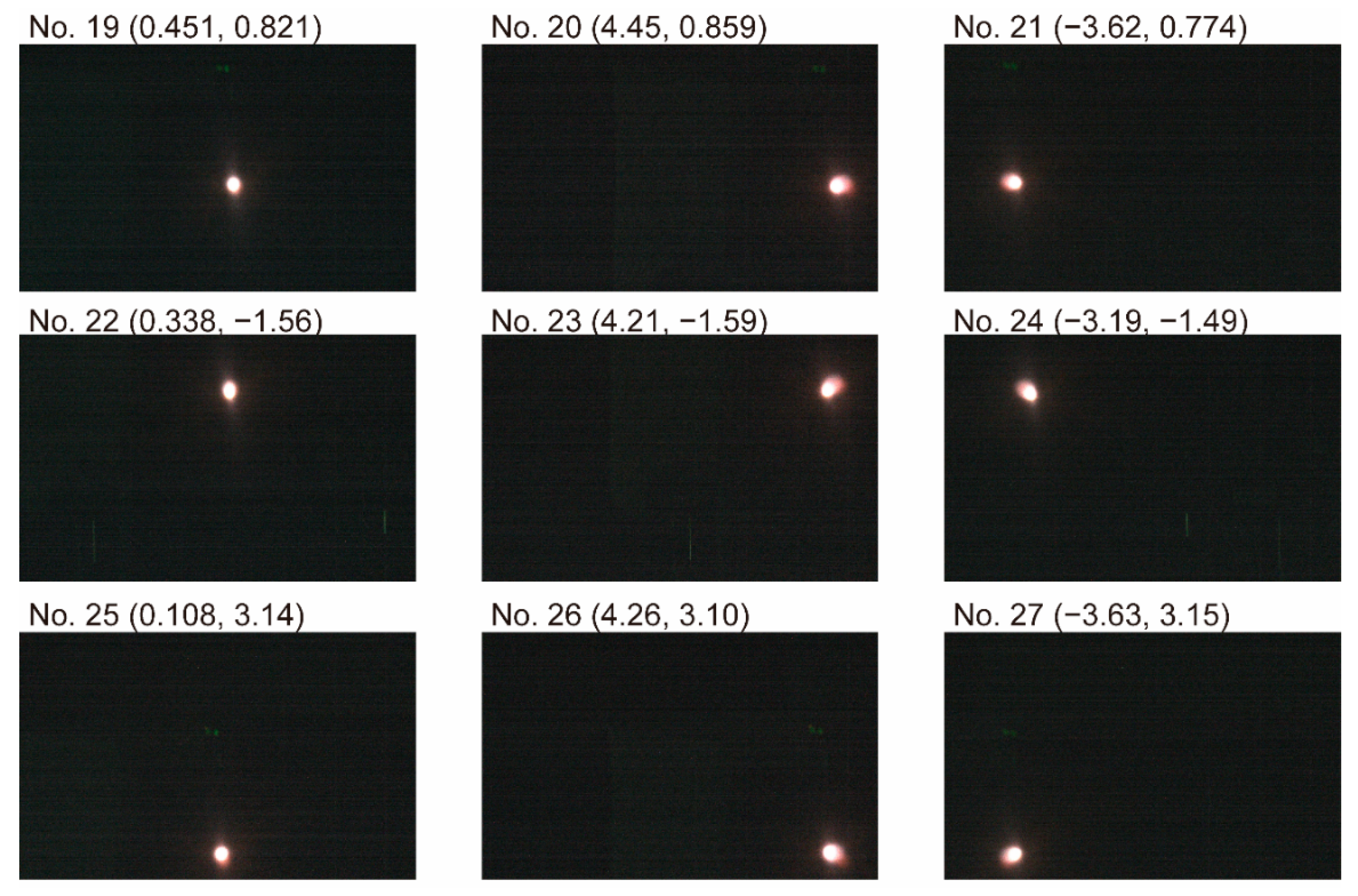

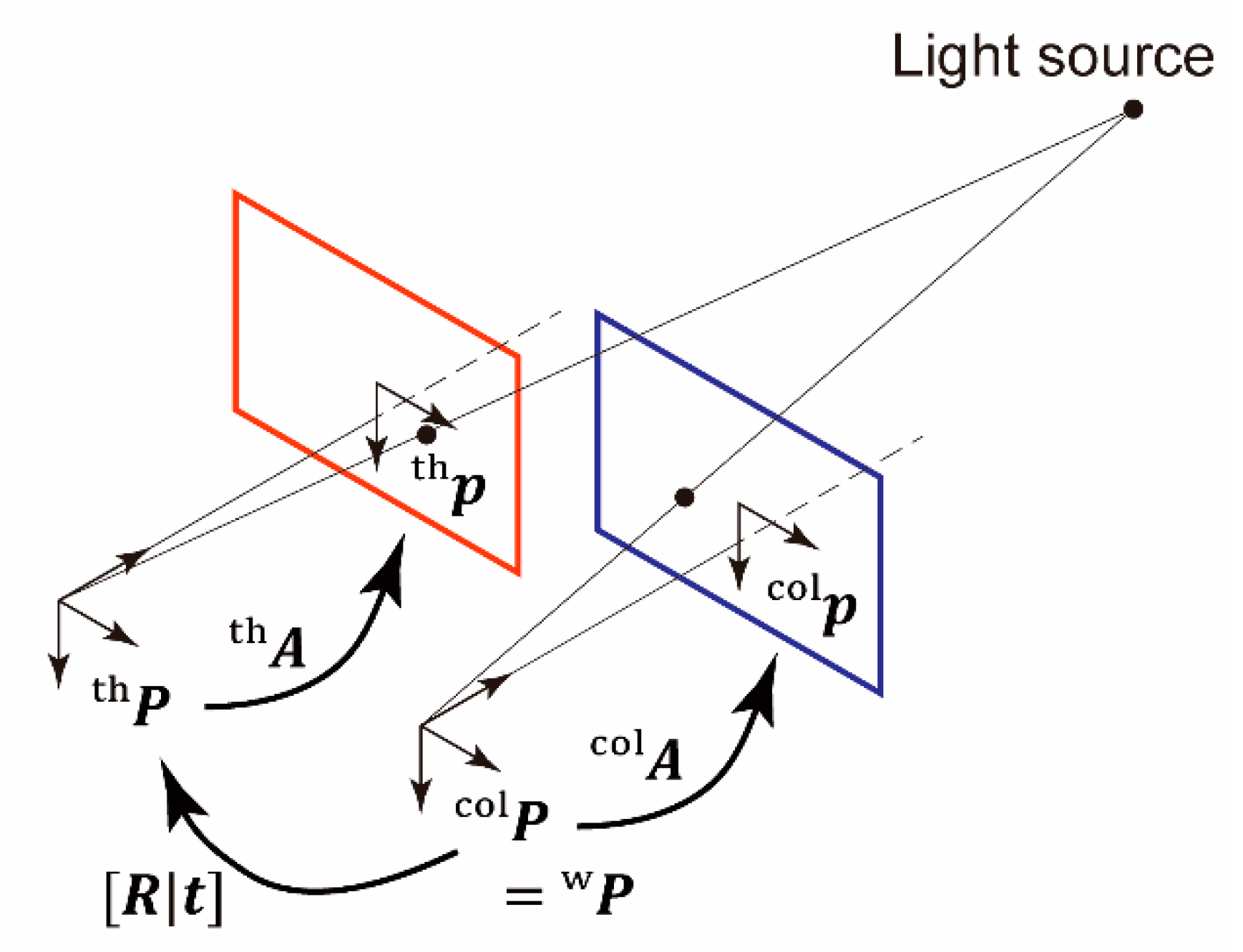

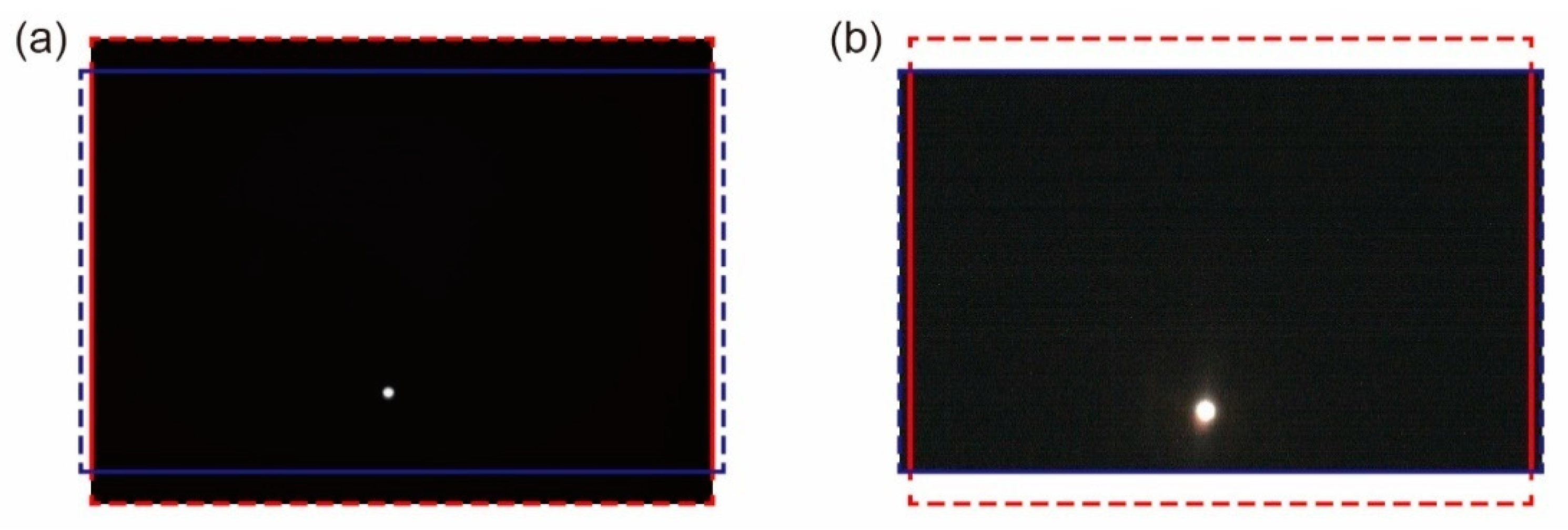

2.3. Capturing Light Source Images

2.4. Camera Parameters for Thermal and Color Cameras

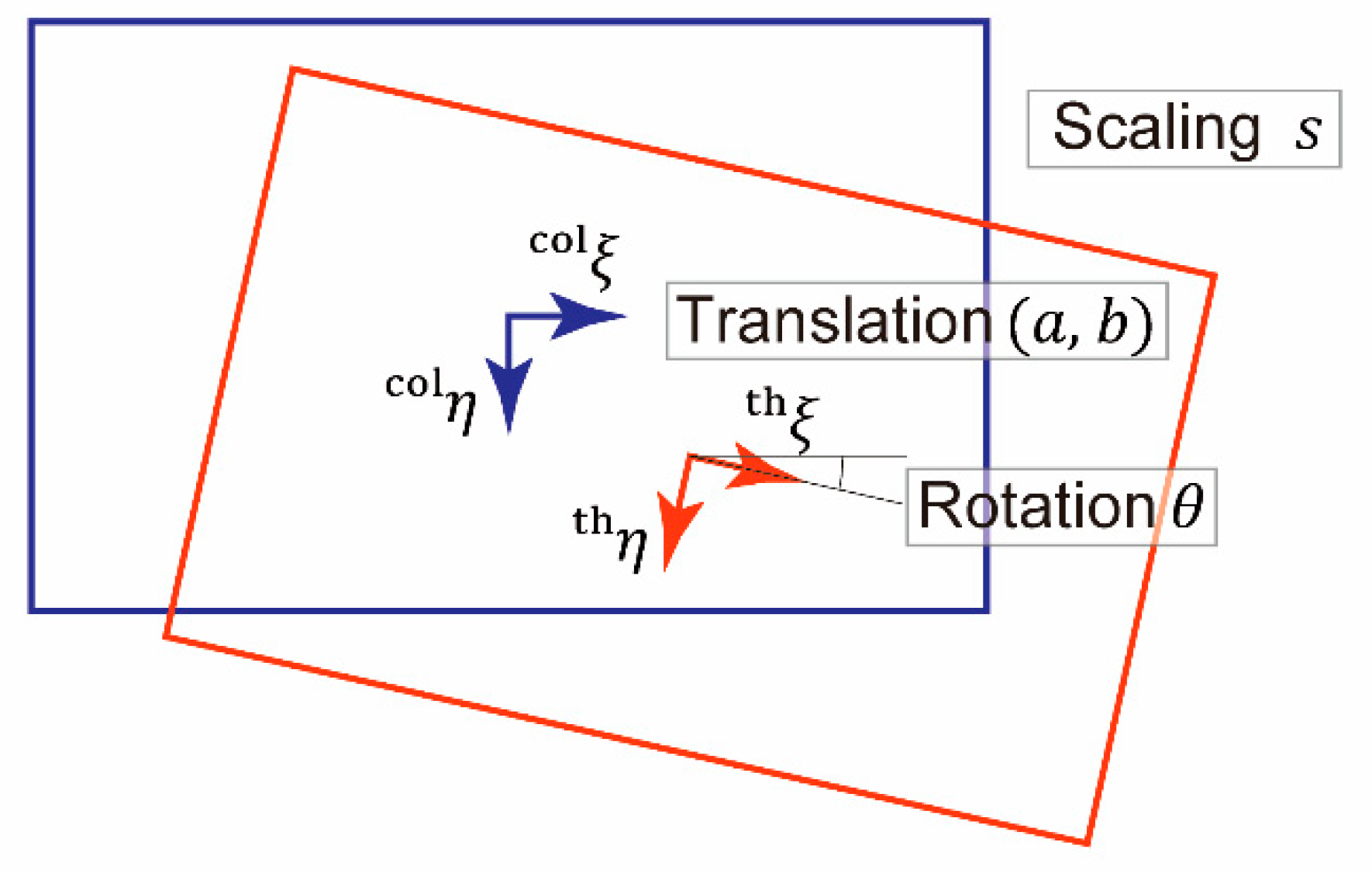

2.5. Mapping from Thermal to Color Images

3. Results

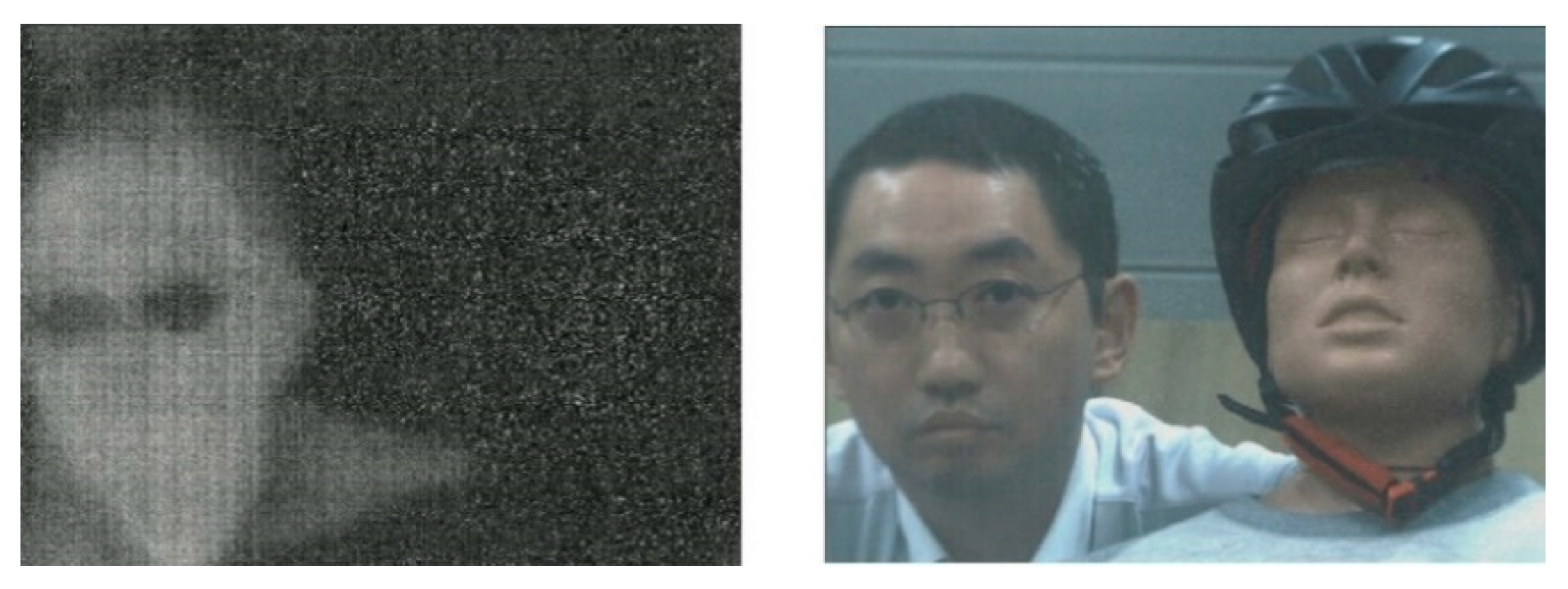

3.1. Captured Thermal and Color Images

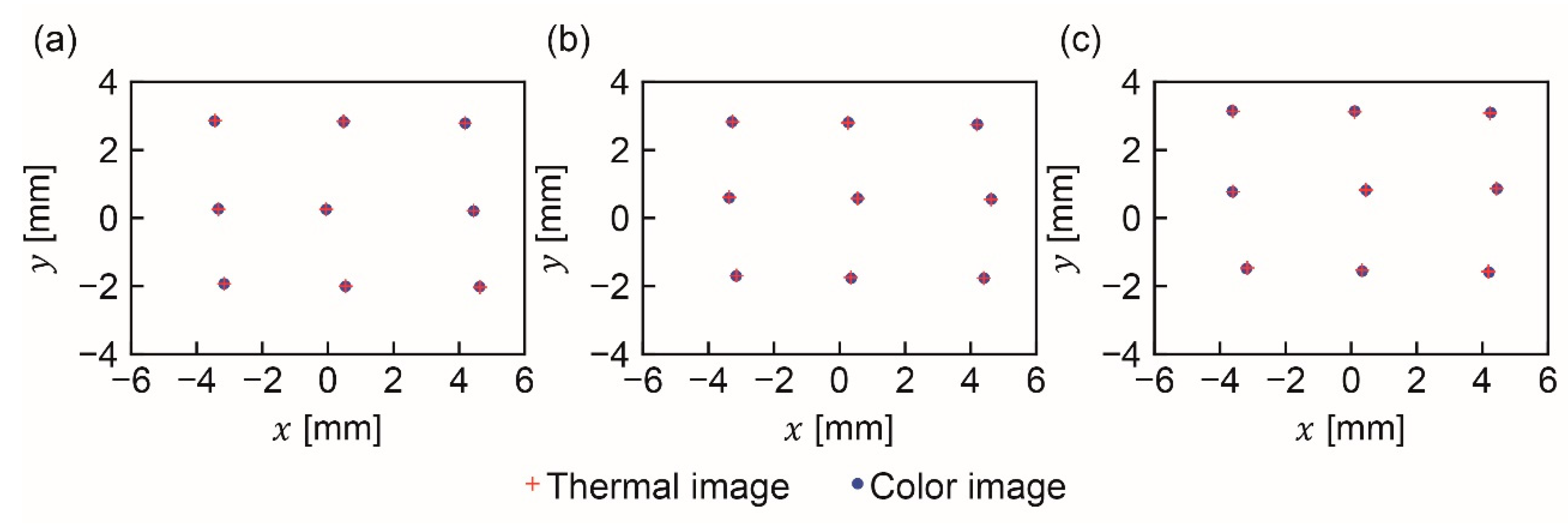

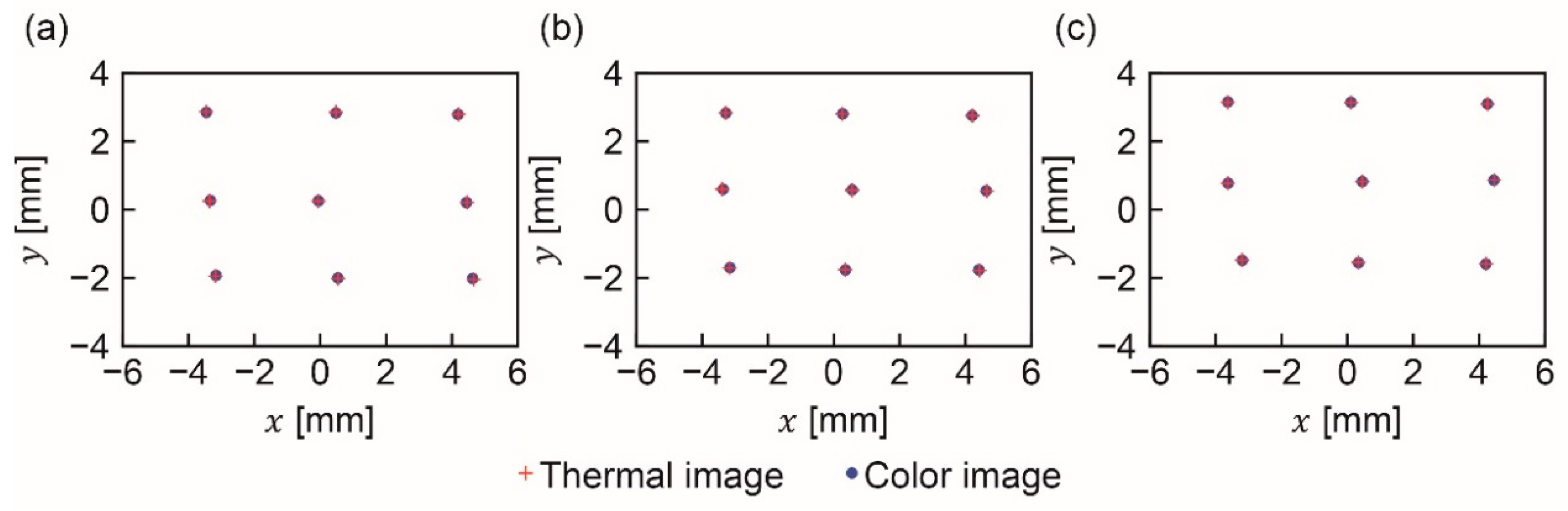

3.2. Estimated Camera Parameters

3.3. Estimated Mapping from Thermal to Color Images

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

Appendix B

| Image Pair Number | Estimated x | Estimated y | Estimated z | Image Pair Number | Estimated x | Estimated y | Estimated z |
|---|---|---|---|---|---|---|---|
| 1 | −0.5268 | 2.543 | 500.0 | 15 | −67.30 | −34.07 | 1000 |
| 2 | 44.45 | 2.083 | 500.0 | 16 | 7.011 | 56.16 | 1000 |
| 3 | −33.37 | 2.634 | 500.0 | 17 | 88.23 | 55.17 | 1000 |
| 4 | 5.370 | −20.08 | 500.0 | 18 | −65.58 | 56.59 | 1000 |
| 5 | 46.32 | −20.27 | 500.0 | 19 | 17.94 | 32.89 | 2000 |
| 6 | −31.63 | −19.31 | 500.0 | 20 | 178.0 | 34.46 | 2000 |
| 7 | 4.760 | 28.35 | 500.0 | 21 | −145.0 | 30.93 | 2000 |
| 8 | 41.88 | 27.90 | 500.0 | 22 | 13.36 | −62.32 | 2000 |
| 9 | −34.52 | 28.54 | 500.0 | 23 | 168.3 | −63.94 | 2000 |
| 10 | 11.07 | 11.52 | 1000 | 24 | −127.7 | −59.48 | 2000 |
| 11 | 92.68 | 11.04 | 1000 | 25 | 4.243 | 125.7 | 2000 |
| 12 | −67.30 | 11.95 | 1000 | 26 | 170.3 | 124.1 | 2000 |
| 13 | 5.259 | −35.35 | 1000 | 27 | −145.2 | 126.1 | 2000 |
| 14 | 84.1 | −35.5 | 1000 | | | | |

Appendix C

References

1. Ciric, I.T.; Cojbasic, Z.M.; Nikolic, V.D.; Igic, T.S.; Tursnek, B.A.J. Intelligent optimal control of thermal vision-based person-following robot platform. Therm. Sci. 2014, 18, 957–966.
2. Gade, R.; Moeslund, T.B. Thermal cameras and applications: A survey. Mach. Vision. Appl. 2014, 25, 245–262.
3. Grant, O.M.; Tronina, L.; Jones, H.G.; Chaves, M.M. Exploring thermal imaging variables for the detection of stress responses in grapevine under different irrigation regimes. J. Exp. Bot. 2007, 58, 815–825.
4. Guoa, J.; Tiana, G.; Zhoub, Y.; Wanga, M.; Linga, N.; Shena, Q.; Guoa, S. Evaluation of the grain yield and nitrogen nutrient status of wheat (Triticum aestivum L.) using thermal imaging. Field Crops Res. 2016, 196, 463–472.
5. Vidas, S.; Moghadam, P.; Bosse, M. 3D thermal mapping of building interiors using an RGB-D and thermal camera. In Proceedings of the IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 2311–2318.
6. Borrmann, D.; Nuchter, A.; Dakulovic, M.; Maurovic, I.; Petrovic, I.; Osmankovic, D.; Velagic, J. A mobile robot based system for fully automated thermal 3D mapping. Adv. Eng. Inform. 2014, 28, 425–440.
7. Prakash, S.; Lee, P.Y.; Caelli, T.; Raupach, T. Robust thermal camera calibration and 3D mapping of object surface temperatures. Proc. SPIE 2016, 6205.
8. Yasuda, K.; Naemura, T.; Harashima, H. Thermo-Key: Human region segmentation from video. IEEE Comput. Graph. 2004, 24, 26–30.
9. Treptow, A.; Cielniak, G.; Duckett, T. Active people recognition using thermal and grey images on a mobile security robot. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 3610–3615.
10. Cheng, S.Y.; Park, S.; Trivedi, M. Multiperspective thermal IR and video arrays for 3D body tracking and driver activity analysis. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05) - Workshops, San Diego, CA, USA, 21–23 September 2005; pp. 1–8.
11. Morris, N.J.W.; Avidan, S.; Matusik, W.; Pfister, H. Statistics of infrared images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.
12. Pham, V.Q.; Takahashi, K.; Naemura, T. Live video segmentation in dynamic backgrounds using thermal vision. IPSJ Trans. Comput. Vis. Appl. 2010, 2, 84–93.
13. Akhloufi, M.A.; Porcher, C.; Bendada, A. Fusion of thermal infrared and visible spectrum images for robust pedestrian tracking. Proc. SPIE 2014, 9076.
14. Loveday, M.; Breckon, T.P. On the impact of parallax free colour and infrared image co-registration to fused illumination invariant adaptive background modelling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 126700–126709.
15. Susperregi, L.; Sierra, B.; Castrillon, M.; Lorenzo, J.; Martinez-Otzeta, J.M.; Lazkano, E. On the use of a low-cost thermal sensor to improve kinect people detection in a mobile robot. Sensors 2013, 13, 14687–14713.
16. Hwang, S.; Park, J.; Kim, N.; Choi, Y.; Kweon, I.S. Multispectral pedestrian detection benchmark dataset and baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1037–1045.
17. Choi, Y.; Kim, N.; Hwang, S.; Park, K.; Yoon, J.S.; An, K.; Kweon, I.S. KAIST multi-spectral day/night data set for autonomous and assisted driving. IEEE Trans. Intell. Transp. Syst. 2018, 19, 934–948.
18. Okazawa, A.; Takahata, T.; Harada, T. Simultaneous transparent and non-transparent objects segmentation with multispectral scenes. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS2019), Macao, China, 4–8 November 2019; pp. 4977–4984.
19. Osroosh, Y.; Khot, L.; Peters, R. Economical thermal-RGB imaging system for monitoring agricultural crops. Comput. Electron. Agr. 2018, 147, 34–43.
20. Ogino, Y.; Shibata, T.; Tanaka, M.; Okutomi, M. Coaxial visible and FIR camera system with accurate geometric calibration. Proc. SPIE 2017, 10214.
21. Shibata, T.; Tanaka, M.; Okutomi, M. Accurate joint geometric camera calibration of visible and far-infrared cameras. In Proceedings of the IS&T International Symposium on Electronic Imaging 2017 (EI2017), Burlingame, CA, USA, 29 January–2 February 2017; pp. 7–13.
22. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.
23. OpenCV: Camera Calibration and 3D Reconstruction. Available online: https://docs.opencv.org/4.4.0/d9/d0c/group__calib3d.html#details (accessed on 29 September 2020).
24. Takahata, T.; Matsumoto, K.; Shimoyama, I. A silicon-glass hybrid lens for simultaneous color-and-thermal imaging. In Proceedings of the 17th International Conference on Solid-State Sensors, Actuators and Microsystems (TRANSDUCERS & EUROSENSORS XXVII), Barcelona, Spain, 16–20 June 2013; pp. 1408–1411.
25. Takahata, T.; Matsumoto, K.; Shimoyama, I. Compact coaxial thermal and color imaging system with silicon-glass hybrid lens. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA 2016), Stockholm, Sweden, 16–21 May 2016; pp. 5555–5560.
26. Optris PI 640 The Smallest Measuring VGA Thermal Imager Worldwide. Available online: http://www.optris.com/thermal-imager-optris-pi-640 (accessed on 22 June 2020).
27. DFK 33UX174 - USB 3.0 Color Industrial Camera. Available online: https://www.theimagingsource.com/products/industrial-cameras/usb-3.0-color/dfk33ux174/ (accessed on 22 June 2020).
28. uvc_camera - ROS Wiki. Available online: http://wiki.ros.org/uvc_camera (accessed on 20 July 2020).
29. optris_drivers - ROS Wiki. Available online: http://wiki.ros.org/optris_drivers (accessed on 20 July 2020).
30. Kraft, D. A software package for sequential quadratic programming. In Technical Report DFVLR-FB 88-28; Institut für Dynamik der Flugsysteme: Oberpfaffenhofen, Germany, 1988.
31. Minimize (method=‘SLSQP’) - SciPy v1.5.2 Reference Guide. Available online: https://docs.scipy.org/doc/scipy/reference/optimize.minimize-slsqp.html (accessed on 29 September 2020).

| | Thermal Camera [26] | Color Camera [27] |
|---|---|---|
| Manufacturer | Optris | The Imaging Source |
| Model | PI640 | DFK 33UX174 |
| Number of pixels | 640 × 480 | 1920 × 1200 |
| PC interface | USB 2.0 | USB 3.0 |
| Spectral range | 7.5–13 μm | 0.40–0.65 μm (with IR cut filter) |
| Frame rate | 32 fps | 54 fps (RGB24 ²) |
| Imager manufacturer and model | (not available) | Sony IMX174LQ |
| Pixel size | 17 μm × 17 μm | 5.86 μm × 5.86 μm |
| Imager size ¹ | 10.88 mm × 8.16 mm | 11.25 mm × 7.03 mm |
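The imager sizes in the last row are consistent with the pixel counts multiplied by the pixel pitch. The short Python check below reproduces that arithmetic from the table values only; the dictionary layout and the function name `imager_size_mm` are illustrative choices, not part of the original work.

```python
# Pixel counts and pixel pitch copied from the camera table above.
cameras = {
    "thermal": {"pixels": (640, 480), "pitch_um": 17.0},
    "color": {"pixels": (1920, 1200), "pitch_um": 5.86},
}


def imager_size_mm(pixels, pitch_um):
    """Return (width, height) of the active area in millimetres."""
    return tuple(n * pitch_um / 1000.0 for n in pixels)


sizes = {
    name: imager_size_mm(cam["pixels"], cam["pitch_um"])
    for name, cam in cameras.items()
}

for name, (width, height) in sizes.items():
    print(f"{name}: {width:.2f} mm x {height:.2f} mm")
```

The two imagers have nearly the same width (10.88 mm and 11.25 mm), while the color pixels are roughly one third the pitch of the thermal pixels.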

| Distance to the Light Source for the Images Under Evaluation (rows) / Used for Estimation (columns) | 0.5 m | 1 m | 2 m |
|---|---|---|---|
| 0.5 m | 0.00964 mm | 0.0157 mm | 0.0264 mm |
| 1 m | 0.0153 mm | 0.00947 mm | 0.0169 mm |
| 2 m | 0.0252 mm | 0.0163 mm | 0.00823 mm |
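To get a feel for these magnitudes, the errors can be compared with the pixel pitches listed in the camera table. The sketch below assumes the values are distances on the imager plane, which the table does not state explicitly; the variable names are illustrative.

```python
# Pixel pitch from the camera table, converted to millimetres.
THERMAL_PITCH_MM = 0.017
COLOR_PITCH_MM = 0.00586

# errors_mm[evaluation distance][estimation distance], copied from the table above.
errors_mm = {
    "0.5 m": {"0.5 m": 0.00964, "1 m": 0.0157, "2 m": 0.0264},
    "1 m": {"0.5 m": 0.0153, "1 m": 0.00947, "2 m": 0.0169},
    "2 m": {"0.5 m": 0.0252, "1 m": 0.0163, "2 m": 0.00823},
}

# Largest and smallest entries in the table.
all_errors = [e for row in errors_mm.values() for e in row.values()]
worst_mm = max(all_errors)
best_mm = min(all_errors)

for label, err in (("worst", worst_mm), ("best", best_mm)):
    print(
        f"{label}: {err} mm = "
        f"{err / THERMAL_PITCH_MM:.2f} thermal px = "
        f"{err / COLOR_PITCH_MM:.2f} color px"
    )
```

Under that assumption, the largest entry (estimation at 2 m, evaluation at 0.5 m) is about 1.6 thermal pixels, and every diagonal entry, where estimation and evaluation use the same distance, is below one thermal pixel.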

| Distance to the Light Source for the Images Used for Estimation | 0.5 m | 1 m | 2 m |
|---|---|---|---|
| Rotation angle [rad] | 0.0139 | 0.0147 | 0.0136 |
| Lateral translation [mm] | 0.536 | 0.539 | 0.535 |
| Vertical translation [mm] | 0.794 | 0.790 | 0.786 |
| Scaling factor | 0.963 | 0.966 | 0.969 |
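A rotation angle, two translations, and a scaling factor together describe a 2D similarity transform. The following sketch shows one way such a mapping from thermal to color image coordinates could be applied with NumPy, using the 1 m column of the table above. The composition order (scale and rotate about the origin, then translate), the choice of origin, and the use of millimetres on the imager plane are assumptions made for illustration; the function names are hypothetical and this is not necessarily the parameterization used by the author.

```python
import numpy as np


def similarity_matrix(angle_rad, tx_mm, ty_mm, scale):
    """Build a 3x3 homogeneous matrix: scale and rotate, then translate (assumed order)."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array(
        [
            [scale * c, -scale * s, tx_mm],
            [scale * s, scale * c, ty_mm],
            [0.0, 0.0, 1.0],
        ]
    )


def map_points(matrix, points_mm):
    """Apply the transform to an (N, 2) array of points given in millimetres."""
    pts = np.asarray(points_mm, dtype=float)
    homogeneous = np.column_stack([pts, np.ones(len(pts))])
    return (homogeneous @ matrix.T)[:, :2]


# Parameters from the 1 m column of the table above.
M = similarity_matrix(angle_rad=0.0147, tx_mm=0.539, ty_mm=0.790, scale=0.966)

# Hypothetical thermal-image points on the imager plane (mm).
thermal_points = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
color_points = map_points(M, thermal_points)
print(color_points)
```

With this convention the origin maps to the translation vector itself, and distances between points shrink by the scaling factor regardless of the rotation angle.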

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Takahata, T. Coaxiality Evaluation of Coaxial Imaging System with Concentric Silicon–Glass Hybrid Lens for Thermal and Color Imaging. Sensors 2020, 20, 5753. https://doi.org/10.3390/s20205753
