2.3.2. The Tilt Correction Taking Account of Error from the Barcode

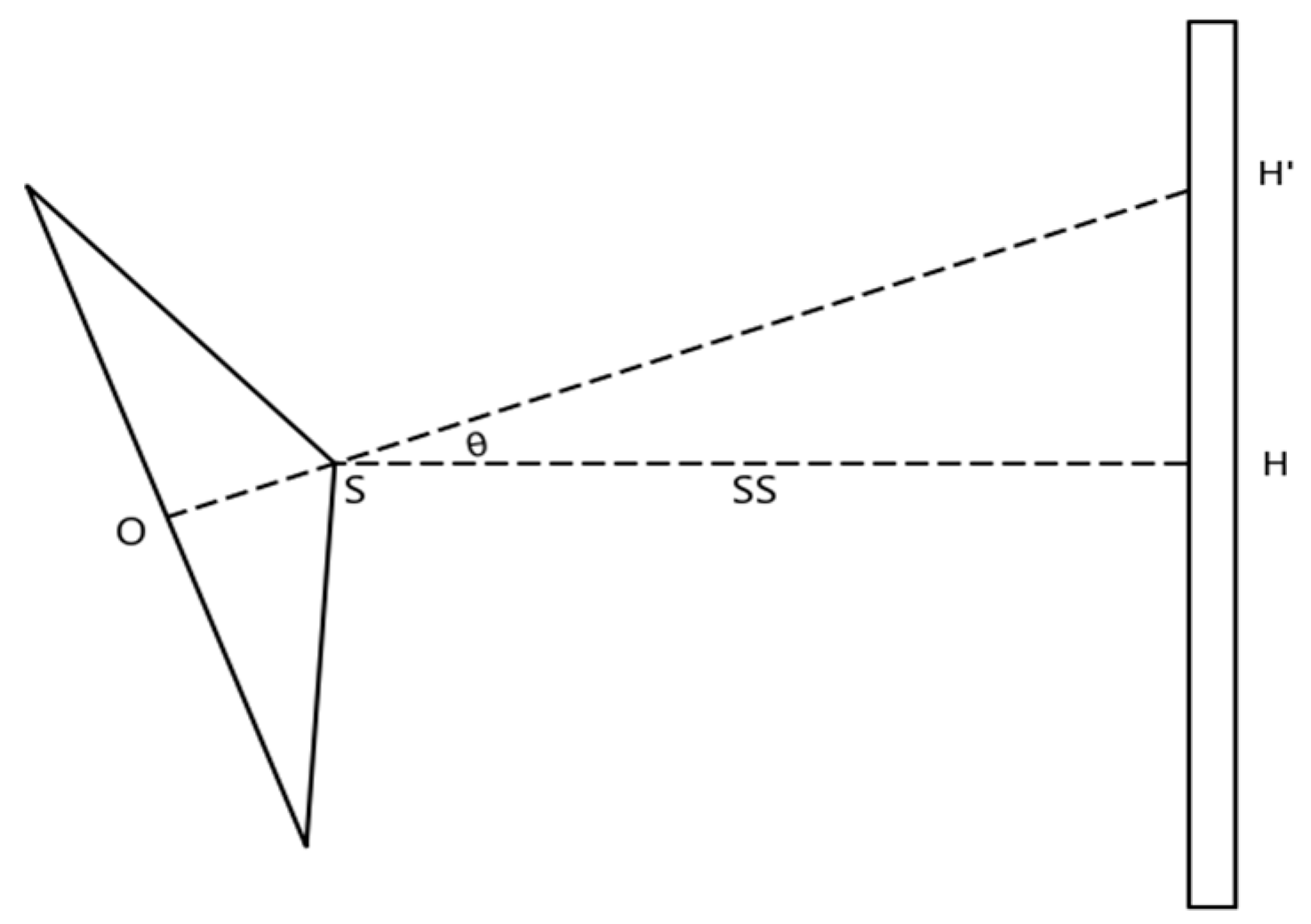

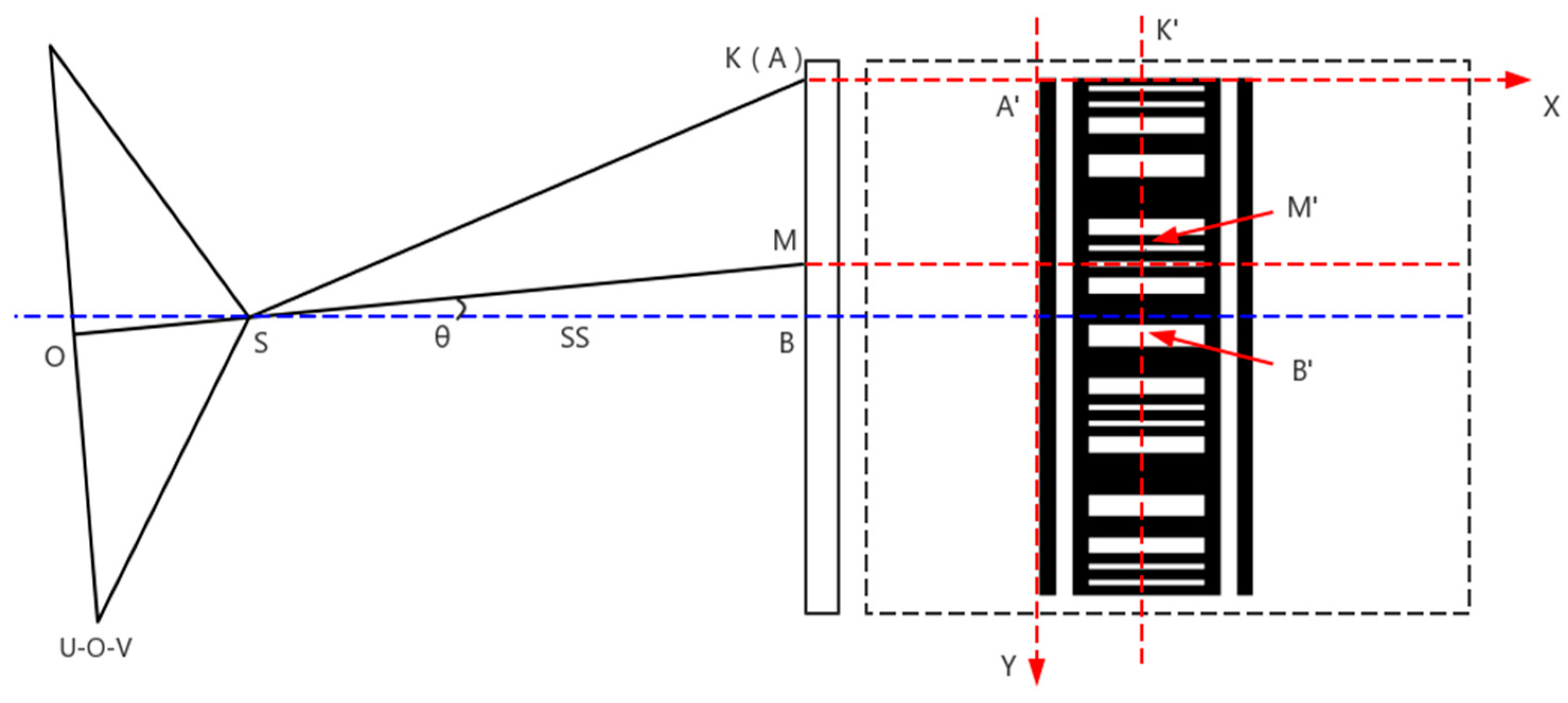

When the measuring mark is installed, the plane of mark cannot be strictly vertical, which will influence the result of the settlement. As shown in

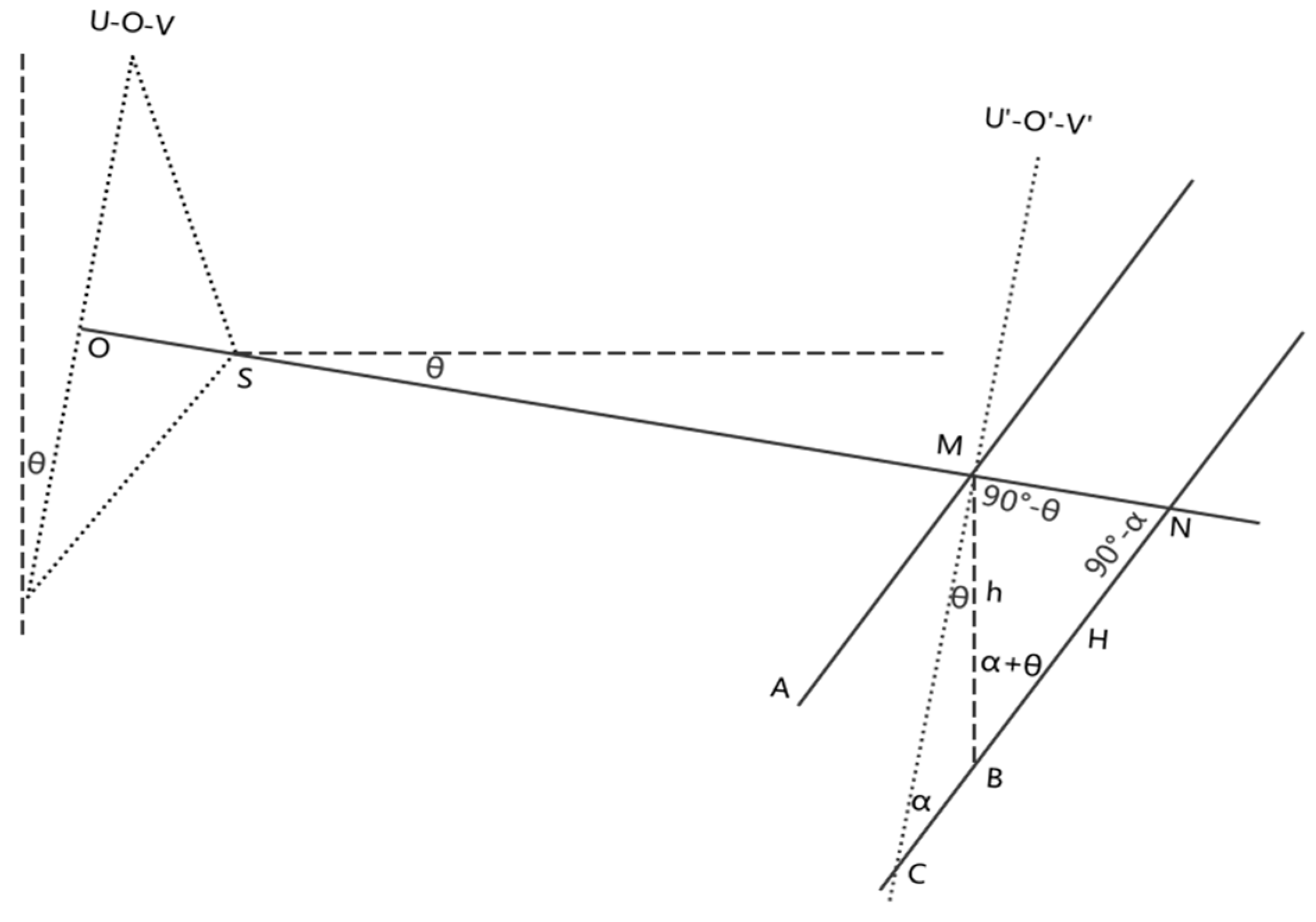

Figure 7, Plane U-O-V is the image plane and O is the principal point on Plane U-O-V, S is the projection center and the angle between the photographic base and the horizontal direction is

. Plane U′-O′-V′ is the plane which is parallel to the Plane U-O-V and contains Point M. AM shows the starting position of the measuring mark and it is not vertical; there is an angle

between AM and Plane U′-O′-V′, then the angle between AM and the vertical direction equals to

. CN shows the position of the measuring mark after settlement. As shown in

Figure 7, Point M moves to Point B after settlement, the actual settlement value is h. However, the settlement value measured by the image is H since the readings from the barcode on the image are M(B) and N respectively.

As shown in Figure 7, a triangle is formed by the lines joining points BMH. Based on the geometric relation and the sine law, the following equation is satisfied:

The rearranged equation is as follows:

Since angle

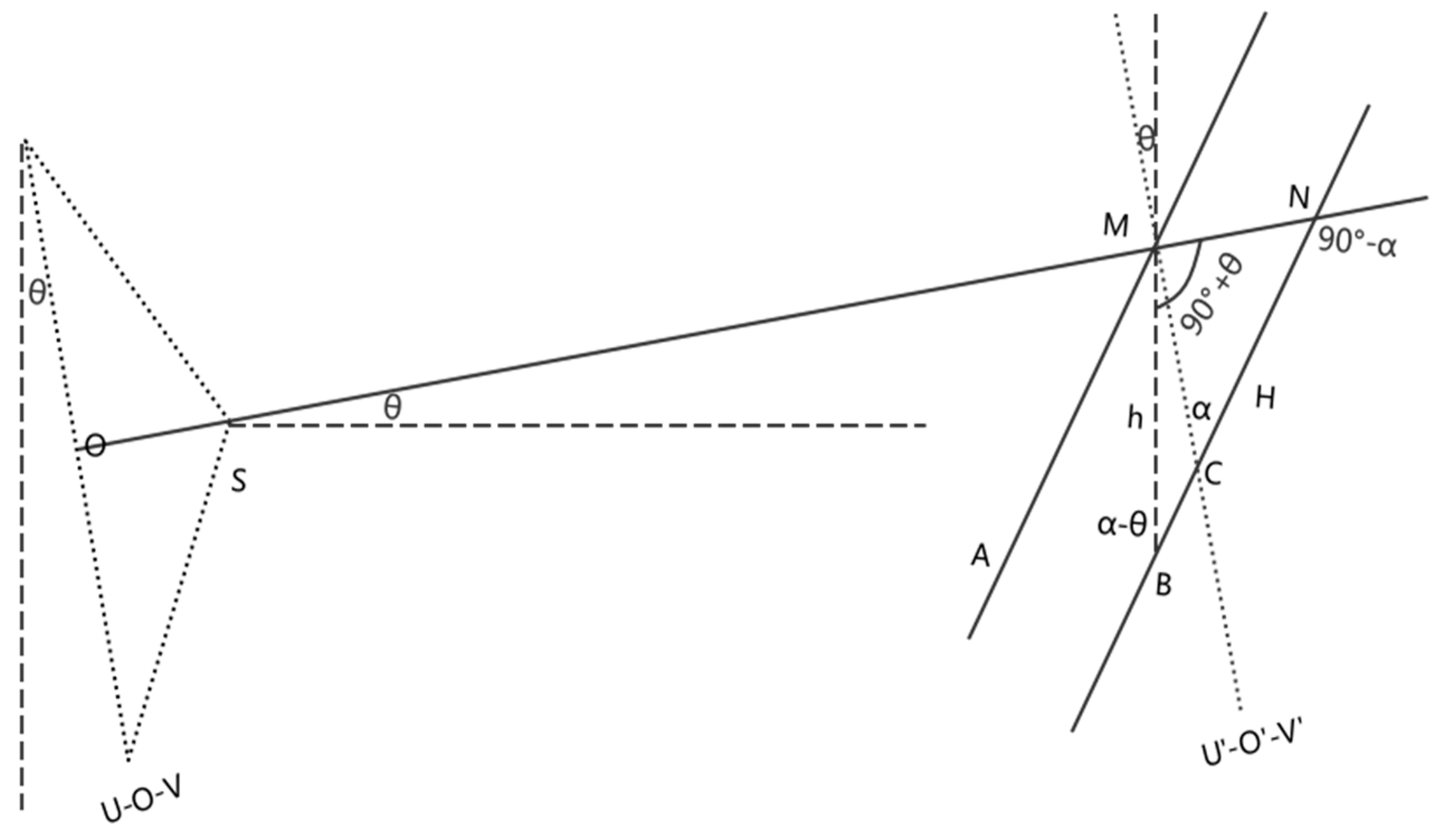

. can be the angle of depression or elevation and angle α angle also can be the angle with two different directions, and either angle can be larger than the other, there are several other conditions of the geometric relations. As shown in

Figure 8, it is another condition that angle

is angle of elevation and angle

is larger than angle

. In

Figure 8, a triangle is also formed by lines joining points BMH and based on the geometric relation and the sine law, the following equation is satisfied:

The rearranged equation is the same as Equation (8). It can be deduced that in the other conditions, the relationship between the actual settlement value h and the measured settlement value H is also the same as Equation (8). Thus, given the angle between the photographic baseline and the horizontal direction and the angle α between the measuring mark and the image plane, the actual settlement value h can be calculated by correcting the measured settlement value H using Equation (8).
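The correction can be illustrated numerically. The sketch below assumes that the sine-law relation reduces to $h = H\cos\alpha / \cos\beta$, where $\beta$ is a symbol introduced here for the tilt angle of the photographic base. This form is inferred from the geometry described above, under the further assumption that the line of sight through M and N is perpendicular to the image plane: the angle opposite h in the triangle is then 90° ∓ α and the angle opposite H is 90° ∓ β, so the sign choices drop out. It is not quoted from Equation (8). The function name `correct_settlement` and the sample values are hypothetical.

```python
import math


def correct_settlement(H, alpha_deg, beta_deg):
    """Return the actual settlement h from the measured settlement H.

    Assumed relation (inferred, not quoted from the paper):
        h = H * cos(alpha) / cos(beta)
    alpha_deg: angle between the measuring mark and the image plane, in degrees.
    beta_deg:  tilt angle of the photographic base, in degrees (symbol assumed).
    """
    alpha = math.radians(alpha_deg)
    beta = math.radians(beta_deg)
    return H * math.cos(alpha) / math.cos(beta)


# Hypothetical readings: H in millimetres, angles in degrees.
h = correct_settlement(10.0, 3.0, 5.0)
print(f"corrected settlement: {h:.4f} mm")
```

Because only cosines appear, the result under this assumed form does not depend on the sign of either angle, which is consistent with the statement that every geometric condition leads to the same rearranged equation.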

In Equation (8), h is the unknown quantity to be evaluated and H is determined from the difference between the readings before and after settlement. The tilt angle is measured using the electronic tilt compensator introduced in Section 2.3.1. Angle α is determined from the elements of exterior orientation of the camera, based on the central projection theory of photogrammetry. The method and theory used to determine α are described below.

According to the pinhole camera model and fundamental projective geometry, the relationship between point Q in the physical world and its projection, point q, on the image is given by Equation (10):

$$s\,\tilde{q} = M\,[\,r_1\;\; r_2\;\; r_3\;\; t\,]\,\tilde{Q} \qquad (10)$$

where $\tilde{q}$ is the homogeneous coordinates of point q on the image plane, which are measured from the image; $\tilde{Q}$ is the homogeneous coordinates of point Q in the physical world, which are known once the physical world coordinate system has been established from the design of the barcode; $s$ is an arbitrary scale factor; and $M$ is the matrix of the camera intrinsic parameters, as shown in Formula (11):

$$M = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix} \qquad (11)$$

where $f_x$ and $f_y$ are the focal distances in pixels. The physical focal distance of the camera in millimeters is fixed, but each picture element is rectangular rather than square, so there are two focal distances, one in the x direction and one in the y direction. Parameters $c_x$ and $c_y$ are the coordinates of the principal point, which indicate the offset of the camera's principal optical axis from the center of the imager [28].
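The projection described above can be sketched in a few lines. The example assumes the standard pinhole form with zero skew, an intrinsic matrix built from two focal distances `fx`, `fy` and a principal point `(cx, cy)`, and a rotation matrix `R` and translation vector `t` that map object coordinates into the camera frame. The function name `project_point` and all numerical values are hypothetical.

```python
def project_point(fx, fy, cx, cy, R, t, Q):
    """Project an object point Q = (X, Y, Z) to pixel coordinates (u, v).

    R is a 3x3 rotation matrix (list of rows) and t a 3-vector, both
    mapping object coordinates into the camera coordinate system.
    """
    # Camera-frame coordinates: Xc = R * Q + t
    Xc = [sum(R[i][j] * Q[j] for j in range(3)) + t[i] for i in range(3)]
    # The third camera-frame coordinate acts as the scale factor s
    s = Xc[2]
    u = fx * Xc[0] / s + cx
    v = fy * Xc[1] / s + cy
    return u, v


# Hypothetical setup: barcode plane facing the camera at 1000 mm.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
u, v = project_point(2000.0, 2100.0, 960.0, 540.0,
                     identity, [0.0, 0.0, 1000.0], (10.0, 20.0, 0.0))
print(u, v)
```

A point at the object origin projects onto the principal point in this setup, and points lying in the barcode plane have Z = 0, so only the first two columns of the rotation matrix and the translation vector contribute to their image positions.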

In Equation (10), $t$ is the translation vector, which gives the offset from the origin of the camera coordinate system, in which point q lies, to the origin of the object coordinate system, in which point Q lies. $R = [\,r_1\;\; r_2\;\; r_3\,]$ is the rotation matrix, where $r_1$, $r_2$ and $r_3$ are three vectors, each with three rows and one column. Applying the rotation matrix $R$ rotates the object coordinate system about the three axes in succession, so that its three axes become parallel to the corresponding axes of the camera coordinate system. As shown in Figure 9, point q is on the image, and the x axis and y axis of the photo coordinate system are parallel to the x axis and y axis of the camera coordinate system, respectively. When plane XOY of the object coordinate system is established in the plane of the barcode, the angle through which the coordinate system rotates about the x axis is the angle between the image plane and the barcode plane, which is exactly the required angle α.

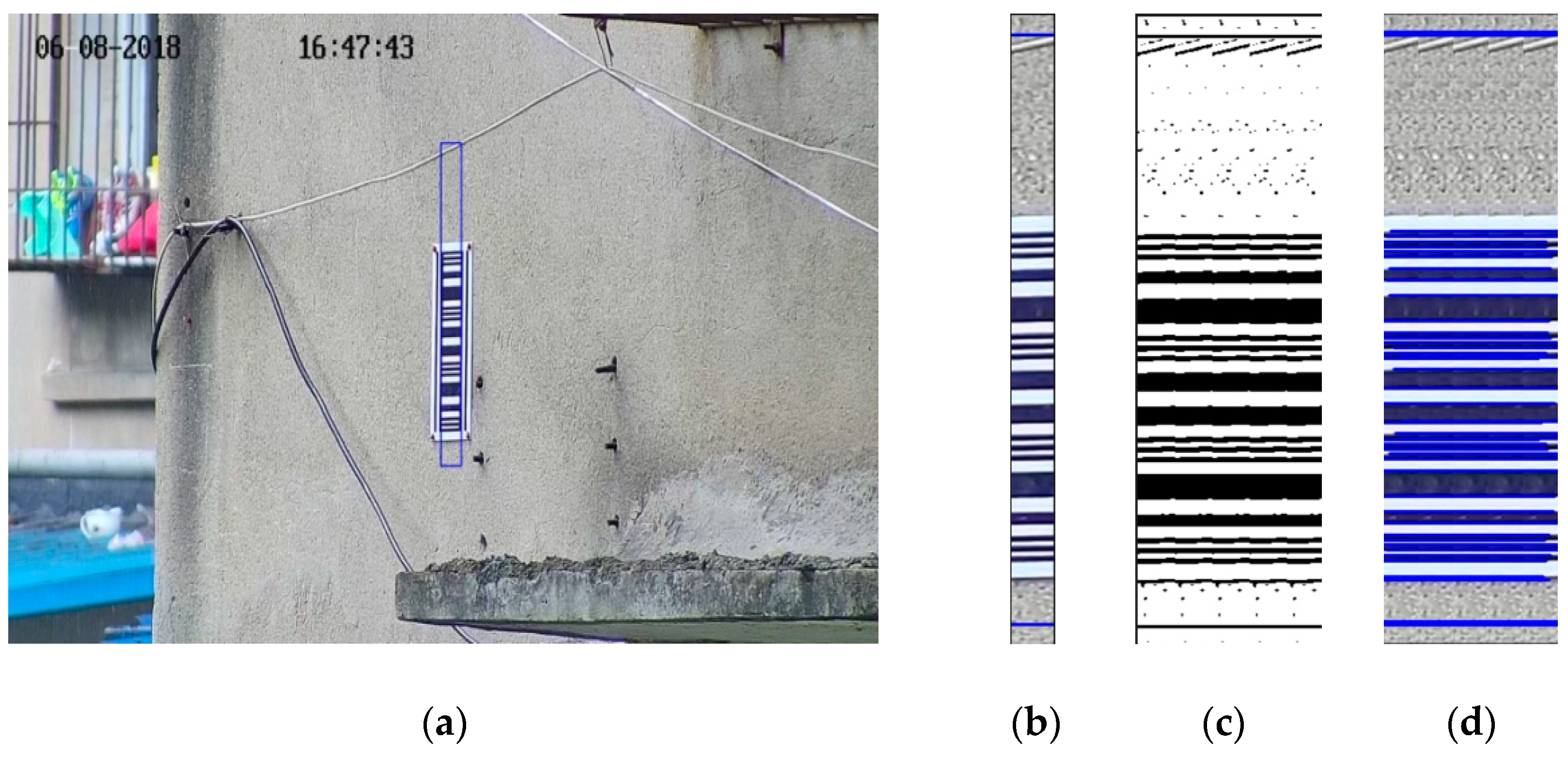

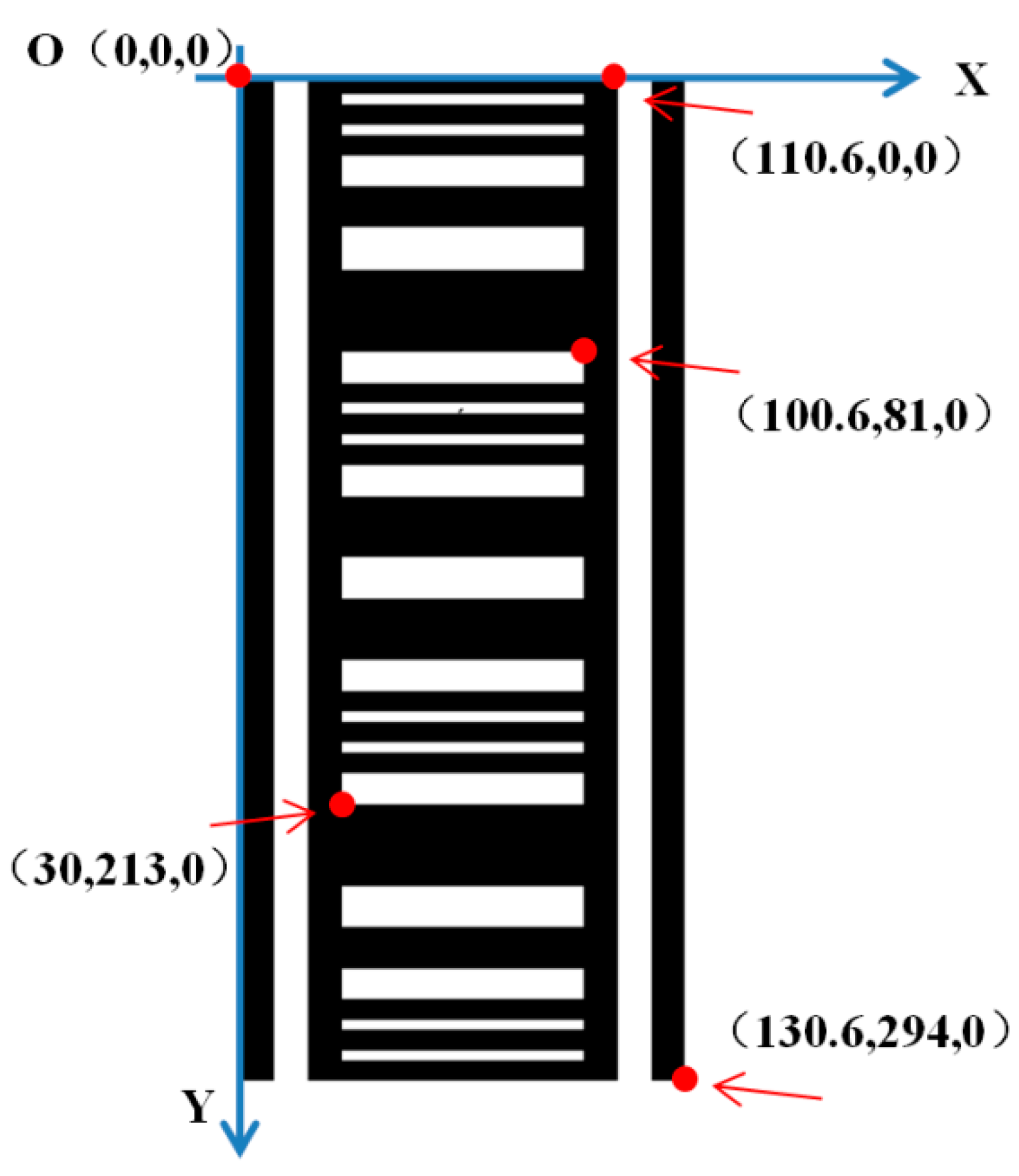

As shown in Figure 10, the first point in the top left corner of the barcode is set as the origin of the object coordinate system, with coordinates (0, 0, 0). The object coordinate system is established using OX shown in Figure 10 as the x axis and OY as the y axis; the z axis is then the direction perpendicular to both. According to the spatial relationships and the design data of the points on the barcode, the object coordinates of the 80 points on the barcode can be obtained. Since they all lie in plane XOY, the z coordinate of each point is 0. The object coordinates of some points are shown in Figure 10 as an example. The photo coordinates of the 80 points can be collected from the image of the barcode. Since the x axis and y axis of the photo coordinate system are parallel to those of the camera coordinate system, the x and y coordinates are the same in both systems. By substituting the coordinates of the 80 points in the two coordinate systems into Equation (10), the unknown quantities, namely the matrix of intrinsic parameters, the rotation matrix and the translation vector, can be solved. From the rotation matrix, the angle through which the coordinate system rotates about the x axis can be calculated, which is exactly angle α. Equation (10) actually contains 11 unknown quantities: two focal distances, two coordinates of the principal point, three rotation angles, three translation parameters and one scale parameter, so at least four points are necessary to solve the equation.

With the rotation matrix written out element by element, the angles through which the coordinate system rotates about the axes are calculated from it as shown in Formula (12):

where the three angles are those through which the coordinate system rotates about the x axis, y axis and z axis, respectively, and atan2 is the two-argument arctangent function of C++, which computes its result from the ratio a/b when the absolute value of b is larger than the absolute value of a and from the ratio b/a when the absolute value of a is larger, so that the function value is stable.
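The angle extraction can be sketched as follows. The code assumes one common convention, $R = R_z R_y R_x$ (rotation about the x axis applied first), which may differ from the convention behind Formula (12). The function names are hypothetical. Python's `math.atan2` takes its arguments in the same order as the C++ `atan2` and likewise remains defined when its second argument is zero.

```python
import math


def rotation_from_angles(ax, ay, az):
    """Build R = Rz(az) * Ry(ay) * Rx(ax); angles in radians (assumed convention)."""
    cx, sx = math.cos(ax), math.sin(ax)
    cy, sy = math.cos(ay), math.sin(ay)
    cz, sz = math.cos(az), math.sin(az)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def angles_from_rotation(R):
    """Recover (ax, ay, az) from R under the same convention.

    Valid when the rotation about the y axis lies strictly between
    -90 and +90 degrees, which avoids the gimbal-lock case.
    """
    ax = math.atan2(R[2][1], R[2][2])
    ay = math.atan2(-R[2][0], math.hypot(R[2][1], R[2][2]))
    az = math.atan2(R[1][0], R[0][0])
    return ax, ay, az


# Round trip with hypothetical angles (radians).
R = rotation_from_angles(0.10, -0.20, 0.30)
print(angles_from_rotation(R))

# atan2 stays well defined where a plain ratio would divide by zero.
print(math.atan2(1.0, 0.0))
```

Under this convention the first returned value is the rotation about the x axis, which corresponds to the angle α between the image plane and the barcode plane.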