The Influence of Camera and Optical System Parameters on the Uncertainty of Object Location Measurement in Vision Systems

Abstract

1. Introduction

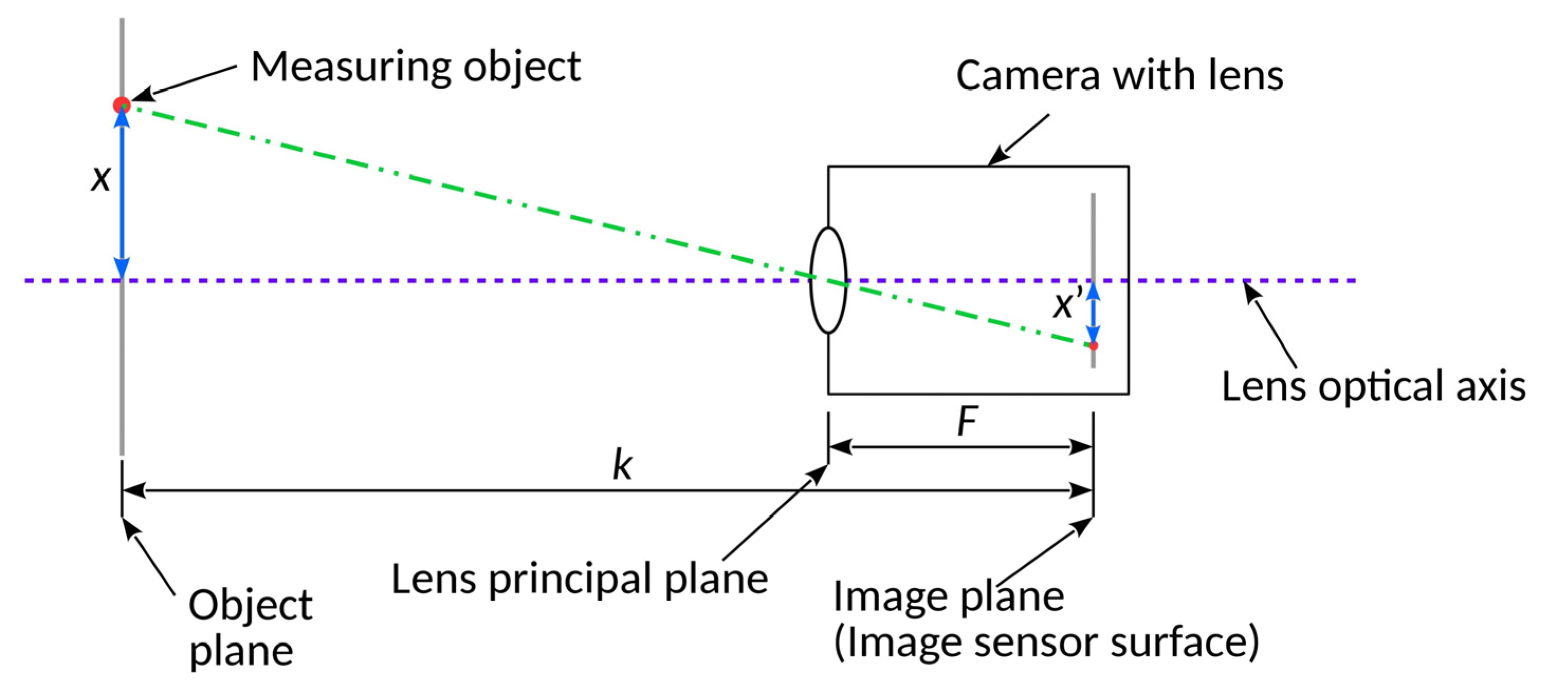

2. Principle of Measuring

The measuring principle rests on the following simplifying assumptions:

- the same factors influence the accuracy of the point displacement on the image sensor in both axes,

- constant mapping scale,

- the camera is not affected by any external factors such as changes in temperature or humidity, or vibrations.
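Under these assumptions, and in particular with a constant mapping scale, the object displacement follows from the image position by similar triangles. The sketch below assumes an ideal pinhole model in which the image plane lies F from the lens optical centre and the object plane lies k − F from it, so that x = x′(k − F)/F (and likewise y = y′(k − F)/F). Lens distortion and the inversion of the image are ignored, and the function names are hypothetical.

```python
def object_displacement(x_img_mm: float, k_mm: float, F_mm: float) -> float:
    """Object displacement x [mm] from image position x' [mm] (same for y, y').

    Pinhole model (assumption): the image plane is F from the optical centre
    and the object plane is k - F from it, so x / x' = (k - F) / F.
    """
    if not 0.0 < F_mm < k_mm:
        raise ValueError("expected 0 < F < k")
    return x_img_mm * (k_mm - F_mm) / F_mm


def pixels_to_mm(n_pix: float, l_pix_um: float) -> float:
    """Image-plane length covered by n_pix pixels of pitch l_pix [um], in mm."""
    return n_pix * l_pix_um / 1000.0
```

For example, with the first optical set in the lens table (k = 839.46 mm, F = 33.370 mm), a hypothetical 5.5 µm pixel and an image shift of 10 pixels correspond to an object displacement of roughly 1.33 mm.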

3. Uncertainty of Measuring Objects with Imaging Camera

4. Factors Determining Uncertainty of Object Location Measurement

The uncertainty of object location measurement is determined by two groups of factors:

- image registration parameters, which determine the brightness level of the recorded images,

- features of the camera and the optical system, such as the sensitivity of the image sensor and the focal length of the lens.

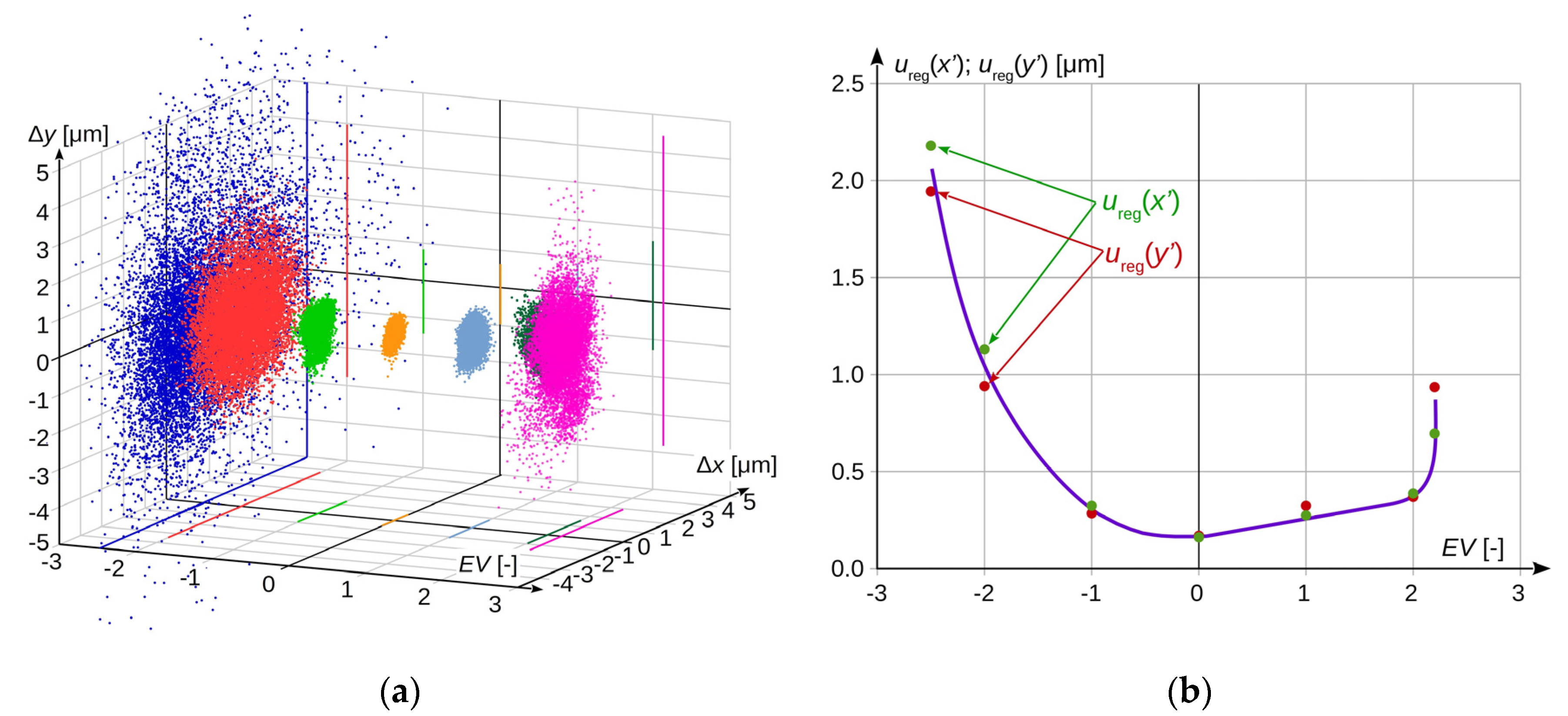

4.1. Uncertainties of the Camera Measurement Related to the Image Registration Parameters ureg(x′)

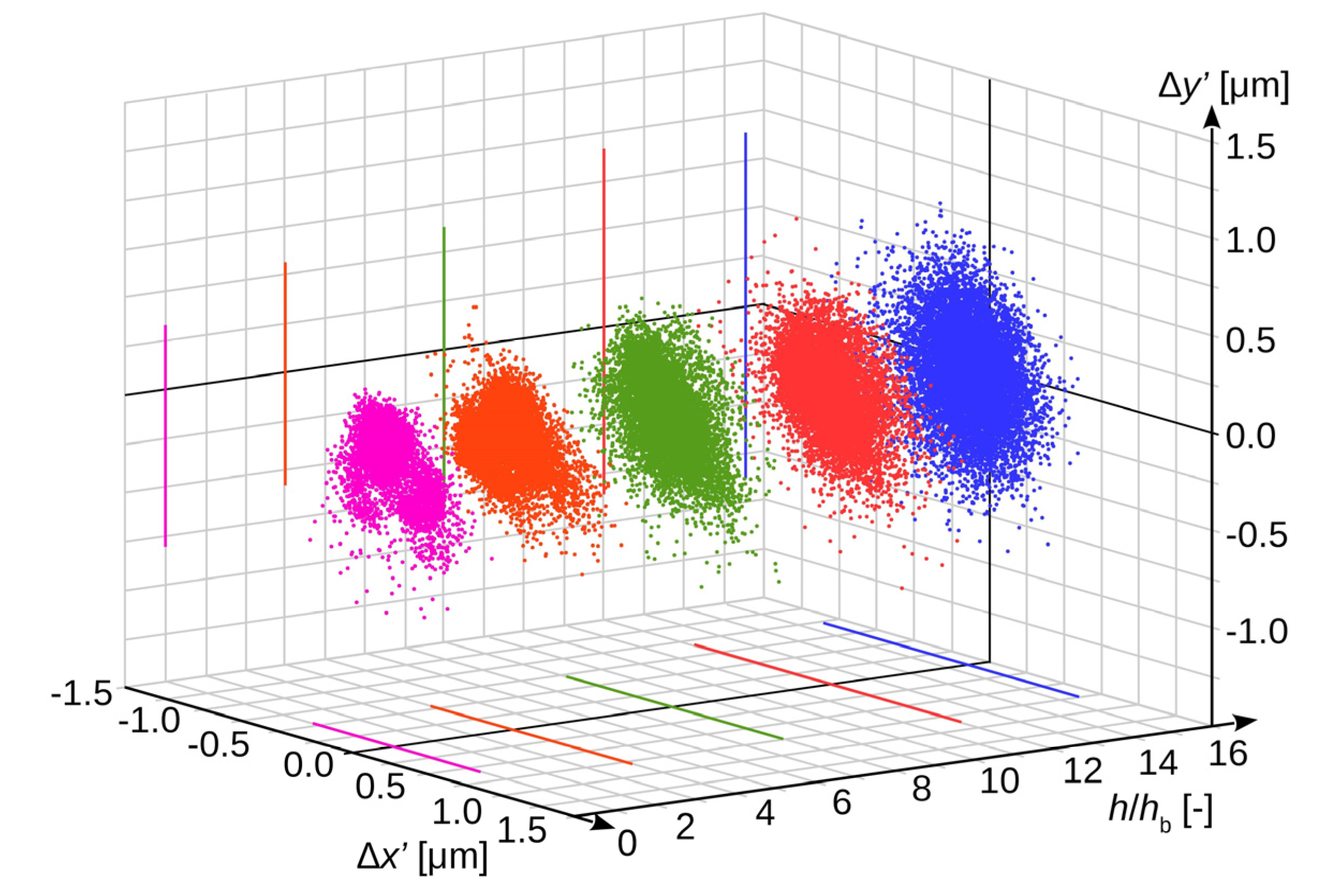
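The Nomenclature defines Δx as the deviation of each reading in a static series from the mean of that series, which points to a Type A evaluation in the sense of the GUM (JCGM 100:2008). A minimal sketch, assuming ureg(x′) is taken as the experimental standard deviation of a single reading; the authors may instead use the standard deviation of the mean or a limit value of Δx, and `u_reg` is a hypothetical name.

```python
import math
from typing import List, Sequence


def deviations(series: Sequence[float]) -> List[float]:
    """Δx for each reading: the reading minus the mean of the whole static series."""
    mean = sum(series) / len(series)
    return [value - mean for value in series]


def u_reg(series: Sequence[float]) -> float:
    """Experimental standard deviation of a single reading (Type A evaluation)."""
    n = len(series)
    if n < 2:
        raise ValueError("need at least two readings")
    dx = deviations(series)
    return math.sqrt(sum(d * d for d in dx) / (n - 1))
```

The series would be image positions x′ (or y′) recorded for a stationary object under fixed registration settings, so that the spread reflects only the registration-related scatter.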

4.2. Uncertainty of Measuring the Object Image Position on Image Sensor, Resulting from Parameters of Camera and Optical System ucam(x′)

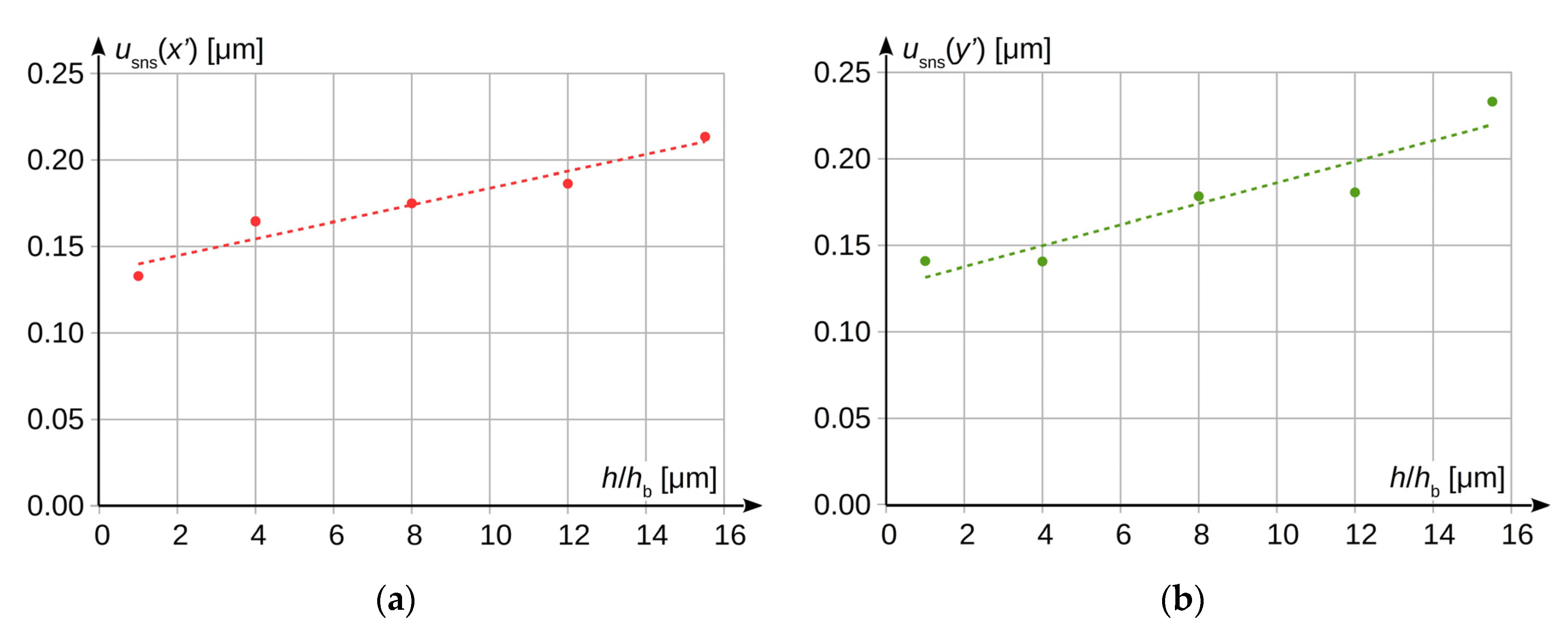

4.2.1. Uncertainty of Measuring the Object Image Position on Camera Sensor due to Actual Sensor Sensitivity usns(x′)

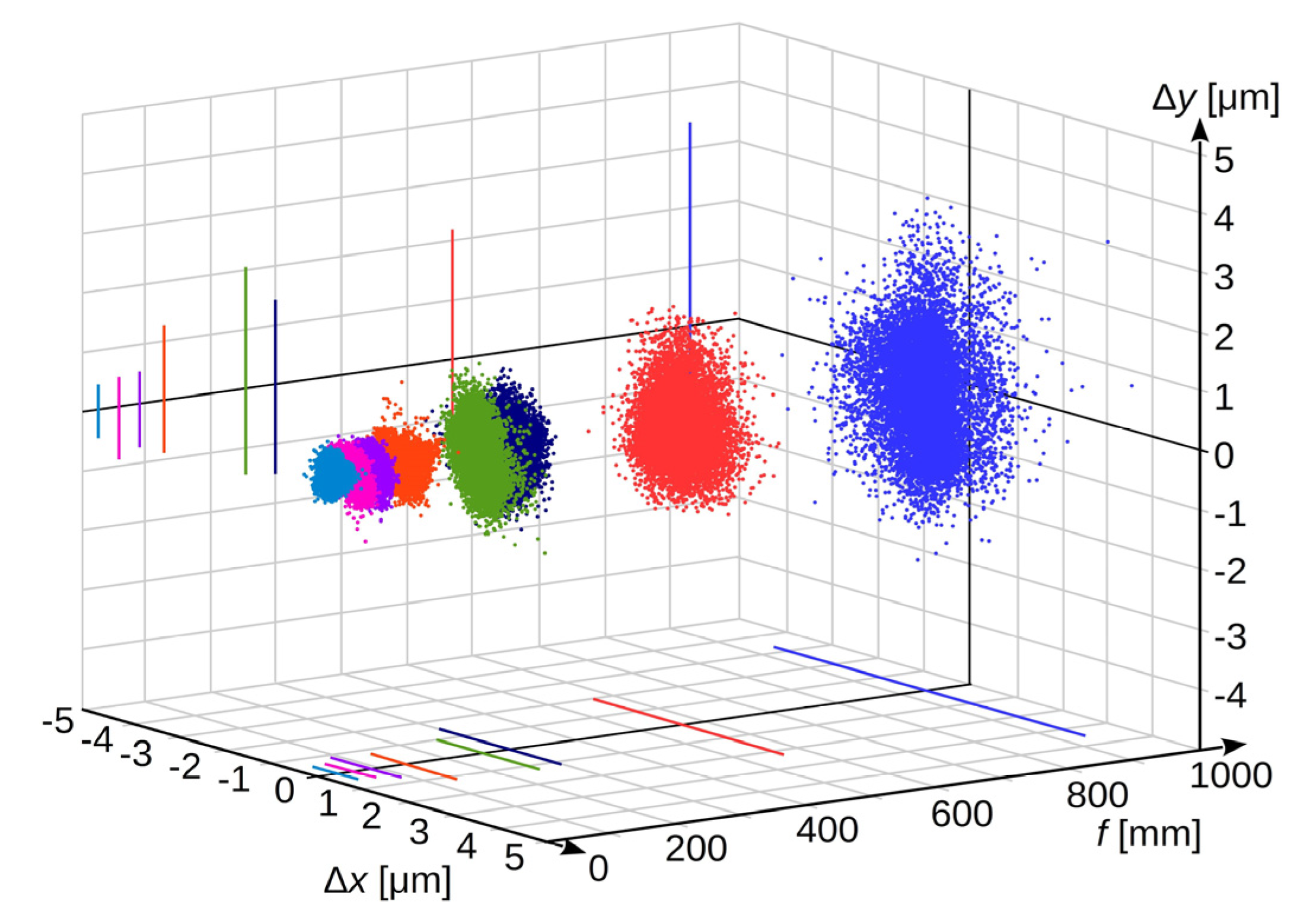

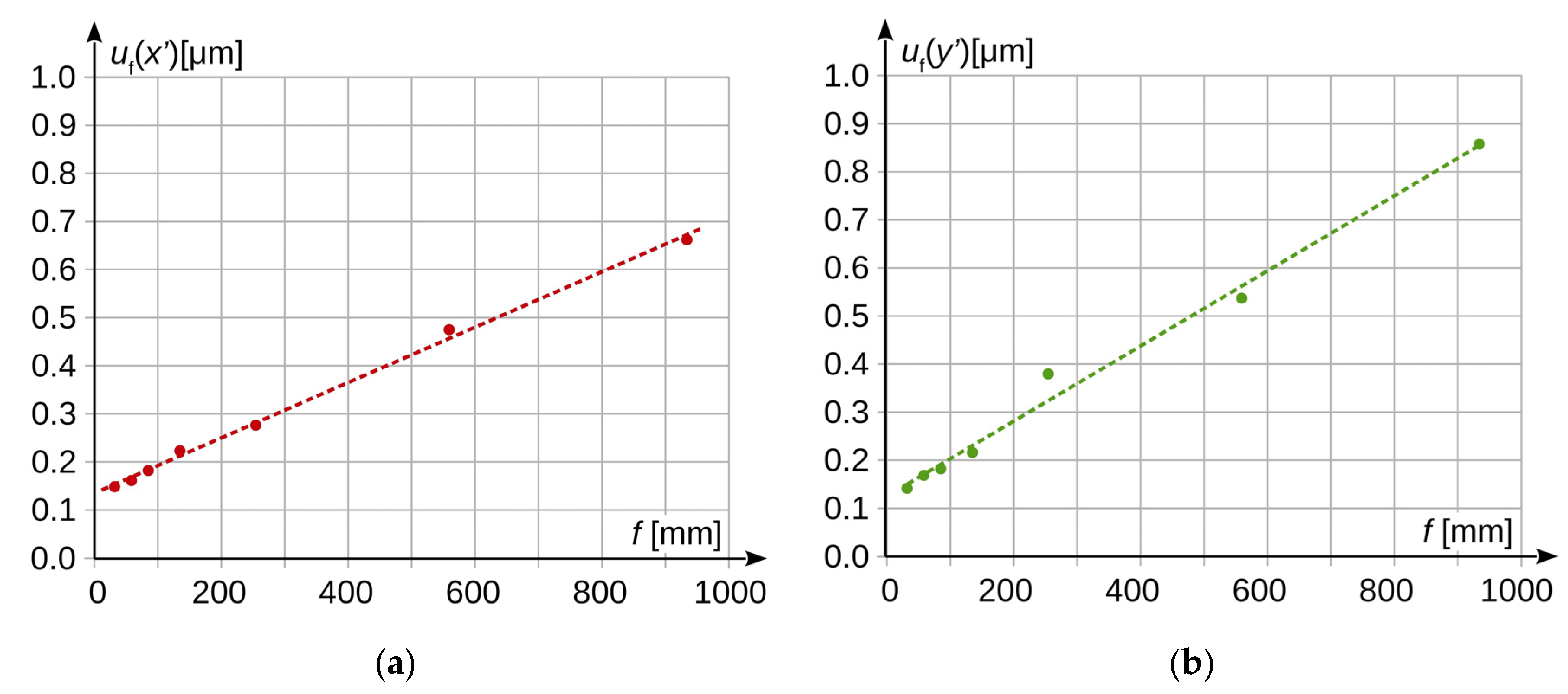

4.2.2. Uncertainty of Measuring the Object Image Position on Camera Sensor, Resulting from Lens Focal Length uf(x′)

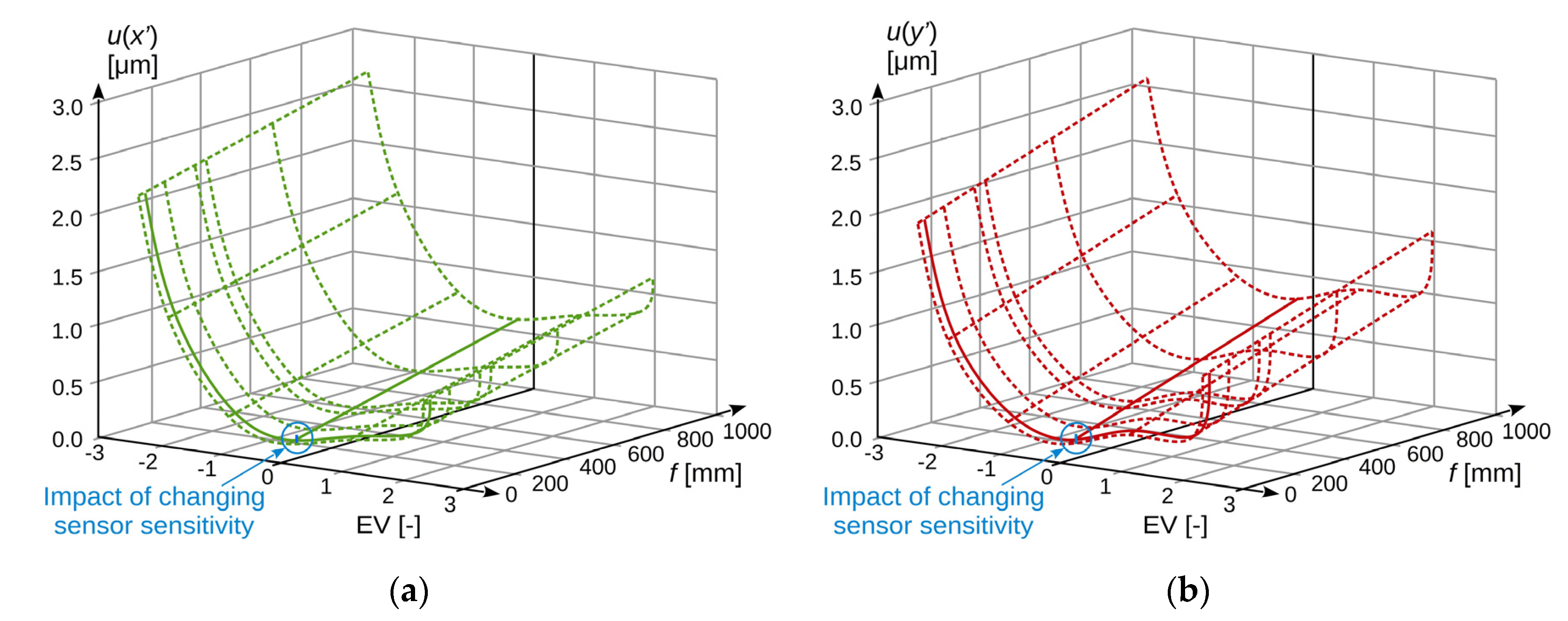
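The focal-length component depends on how well the distance F is known. One way the Nomenclature quantities k, xw and x′w = npix·lpix, with their limit errors, could yield F and u(F) is sketched below. It assumes the pinhole relation x′w/xw = F/(k − F), hence F = k·x′w/(xw + x′w), first-order propagation of uncorrelated inputs, and a rectangular distribution for each maximum limit error Δ (so u = Δ/√3). These are modelling assumptions, not necessarily the authors' procedure.

```python
import math


def image_distance_F(k: float, x_w: float, x_w_img: float) -> float:
    """F from the reference object: x'w / xw = F / (k - F)  =>  F = k*x'w / (xw + x'w)."""
    return k * x_w_img / (x_w + x_w_img)


def u_F(k: float, x_w: float, x_w_img: float,
        dk: float, dx_w: float, dx_w_img: float) -> float:
    """Standard uncertainty u(F) by first-order propagation of uncorrelated inputs.

    Each maximum limit error (Δk, Δxw, Δx'we) is treated as the half-width of a
    rectangular distribution, so its standard uncertainty is Δ / sqrt(3).
    """
    s = x_w + x_w_img
    c_k = x_w_img / s              # sensitivity coefficient ∂F/∂k
    c_xw = -k * x_w_img / s ** 2   # sensitivity coefficient ∂F/∂xw
    c_xwi = k * x_w / s ** 2       # sensitivity coefficient ∂F/∂x'w
    u_k, u_xw, u_xwi = (d / math.sqrt(3.0) for d in (dk, dx_w, dx_w_img))
    return math.sqrt((c_k * u_k) ** 2 + (c_xw * u_xw) ** 2 + (c_xwi * u_xwi) ** 2)
```

In use, x′w would be npix·lpix converted to millimetres, and Δx′we would be set to lpix, as the Nomenclature states.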

4.3. Combined Standard Uncertainty u(x′) and u(y′)
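If the components are uncorrelated and enter with unit sensitivity coefficients (an assumption), the GUM root-sum-square rule first combines usns(x′) and uf(x′) into ucam(x′), and then ureg(x′) and ucam(x′) into u(x′). Because the same factors are assumed to act in both axes, u(y′) follows in the same way. The function names are hypothetical.

```python
import math


def rss(*components: float) -> float:
    """Root-sum-square of uncorrelated standard uncertainties."""
    return math.sqrt(sum(c * c for c in components))


def u_image_position(u_reg: float, u_sns: float, u_f: float) -> float:
    """u(x') (or u(y')) from its components, assuming no correlation."""
    u_cam = rss(u_sns, u_f)   # ucam(x'): camera and optical-system component
    return rss(u_reg, u_cam)  # combined with the registration component ureg(x')
```

Numerically this equals the root-sum-square of all three components; keeping ucam(x′) as an explicit intermediate simply mirrors the split between Sections 4.1 and 4.2.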

5. Influence of Optical System Parameters on Real Measurement Results, Illustrated by the Vertical Displacement of the Overhead Power Line

6. Summary and Conclusions

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| k | distance between the object plane and the image plane (image sensor); |
| F | distance between the plane of the lens optical centre and the image plane; |
| x | displacement of the object measured along the horizontal axis relative to the optical axis of the lens; |
| y | displacement of the object measured along the vertical axis relative to the optical axis of the lens; |

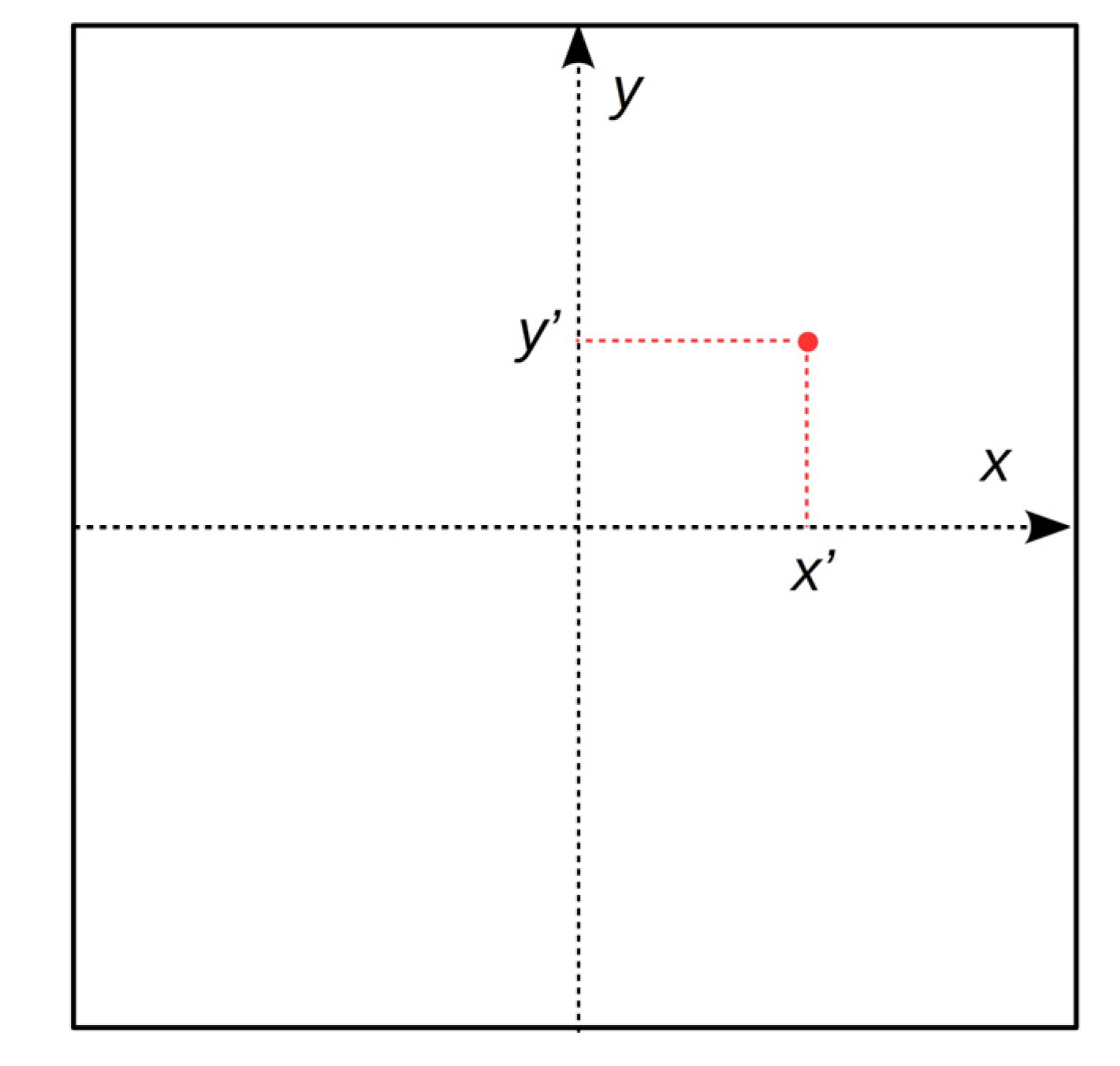

| Symbol | Description |
|---|---|
| x′ | location of the image of the object measured along the horizontal axis; |
| y′ | location of the image of the object measured along the vertical axis; |
| u(F) | uncertainty of determining F; |
| u(x) | uncertainty of the position measurement along the x-axis; |
| u(y) | uncertainty of the position measurement along the y-axis; |
| Δx | difference between the result of a single measurement in the series and the mean result of the entire static series of measurements along the x-axis; |
| Δy | difference between the result of a single measurement in the series and the mean result of the entire static series of measurements along the y-axis; |
| f | focal length of the lens; |
| xw | dimension of the reference object located at the distance k from the image sensor [mm]; |
| x′w | image dimension of the reference object on the image sensor [mm]; |
| u(k) | standard uncertainty in measuring the distance k; |
| u(xw) | standard uncertainty in measuring the dimension xw; |
| Δk | maximum limit error of the instruments used to determine the distance k; |
| Δxw | maximum limit error of the instruments used to determine the dimension xw; |
| npix | number of pixels [–]; |
| lpix | dimension of a single pixel [μm]; |
| Δx′we | experimenter's uncertainty in determining the image dimension x′w of the reference object on the image sensor, taken as equal to the dimension of a single pixel lpix; |
| u(x′) | combined standard uncertainty of the measurement of the image position on the image sensor along the horizontal axis x; |
| ureg(x′) | component of the camera measurement uncertainty related to the image registration parameters; |
| ucam(x′) | component of the uncertainty associated with the characteristics of the camera and the optical system; |
| usns(x′) | uncertainty of measuring the object image position on the camera image sensor due to its actual sensitivity; |
| uf(x′) | uncertainty of measuring the object image position on the camera sensor due to the lens focal length; |
| h | actual image sensor sensitivity; |
| hb | basic image sensor sensitivity. |


| No. | Lens Data (Optical Set) | Focal Length f, Declared by Producer [mm] | Focal Length f, Measured [mm] | Distance k [mm] | Distance F [mm] |
|---|---|---|---|---|---|
| 1 | Lydith 3.5/30 | 30 | 32.044 ± 0.036 | 839.46 ± 0.87 | 33.370 ± 0.039 |
| 2 | Helios 44-2 2/58 | 58 | 58.41 ± 0.04 | 1534.06 ± 0.87 | 60.827 ± 0.044 |
| 3 | Jupiter 9 2/85 | 85 | 84.988 ± 0.046 | 2214.26 ± 0.87 | 88.53 ± 0.05 |
| 4 | Jupiter 37 3.5/135 | 135 | 134.89 ± 0.06 | 3527.26 ± 0.87 | 140.485 ± 0.066 |
| 5 | Telemegor 5.5/250 | 250 | 254.1 ± 0.1 | 6620.76 ± 0.87 | 264.70 ± 0.11 |
| 6 | MC Sonnar 4/300 | 300 | 297.57 ± 0.12 | 7809.86 ± 0.87 | 309.86 ± 0.13 |
| 7 | MC Sonnar 4/300 + K-6B 2x converter | 600 | 559.12 ± 0.22 | 14,549.46 ± 0.87 | 582.43 ± 0.23 |
| 8 | MC MTO-11 CA 10/1000 | 1000 | 933.84 ± 0.36 | 24,404.46 ± 0.87 | 972.61 ± 0.39 |
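The tabulated values appear consistent with the thin-lens relation 1/f = 1/F + 1/(k − F), taking k − F as the object distance and F as the image distance, which gives f = F(k − F)/k. The sketch below recomputes f from the measured k and F for each optical set. It is a consistency check of our own under that thin-lens assumption, not necessarily how the authors derived the measured focal lengths.

```python
# (lens, measured f, stated ± of f, k, F) copied from the table above; all in mm
ROWS = [
    ("Lydith 3.5/30", 32.044, 0.036, 839.46, 33.370),
    ("Helios 44-2 2/58", 58.41, 0.04, 1534.06, 60.827),
    ("Jupiter 9 2/85", 84.988, 0.046, 2214.26, 88.53),
    ("Jupiter 37 3.5/135", 134.89, 0.06, 3527.26, 140.485),
    ("Telemegor 5.5/250", 254.1, 0.1, 6620.76, 264.70),
    ("MC Sonnar 4/300", 297.57, 0.12, 7809.86, 309.86),
    ("MC Sonnar 4/300 + K-6B 2x converter", 559.12, 0.22, 14549.46, 582.43),
    ("MC MTO-11 CA 10/1000", 933.84, 0.36, 24404.46, 972.61),
]


def thin_lens_f(k: float, F: float) -> float:
    """Focal length from 1/f = 1/F + 1/(k - F), i.e. f = F*(k - F)/k."""
    return F * (k - F) / k


def check_rows(rows=ROWS):
    """Return (lens, recomputed f, measured f, difference) for each table row."""
    out = []
    for lens, f_meas, _tol, k, F in rows:
        f_calc = thin_lens_f(k, F)
        out.append((lens, f_calc, f_meas, f_calc - f_meas))
    return out


if __name__ == "__main__":
    for lens, f_calc, f_meas, diff in check_rows():
        print(f"{lens:38s} f_calc={f_calc:9.3f} f_meas={f_meas:9.3f} diff={diff:+.3f}")
```

Every recomputed f falls within the stated ± interval of the measured value; the largest difference, for the Telemegor 5.5/250, is under 0.02 mm.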

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Skibicki, J.; Golijanek-Jędrzejczyk, A.; Dzwonkowski, A. The Influence of Camera and Optical System Parameters on the Uncertainty of Object Location Measurement in Vision Systems. Sensors 2020, 20, 5433. https://doi.org/10.3390/s20185433
