In this section, we conduct two groups of experiments to evaluate the proposed algorithm. In the first group, we compare the proposed solution with an MSE-based method on the recovery of simulated sparse signals and on image denoising under Gaussian and non-Gaussian noise, to illustrate the advantage of using negentropy as the error measure. In the second group, the effectiveness and practicality of the proposed algorithm are verified by comparing it with several robust sparse recovery algorithms on the recovery of simulated sparse signals and real-world MRI images under non-Gaussian noise.

3.1. Comparison with MSE-Based Method

(1) Sparse Signal Recovery

In this subsection, we evaluate the proposed algorithm on recovering sparse signals from noisy measurements; for comparison, the results of ISTA are also presented. The parameter settings are summarized in Table 1.

The experiments were performed on a 100-dimensional, 5-sparse signal whose non-zero entries had random positions and values drawn from a uniform distribution. The entries of the measurement matrix were drawn randomly from a normal distribution. We generated 100 samples of sparse signals and measurement matrices, and the corresponding measurements were obtained from Equation (1). The measurements were further corrupted by Gaussian or non-Gaussian noise. In the Gaussian case, the noise was drawn from a Gaussian distribution. In the non-Gaussian case, impulse noise was adopted, generated by a mixed Gaussian distribution model as suggested in [36]. The reconstruction performance is evaluated by the relative norm error between the reconstructed signal and the original signal, which is defined as

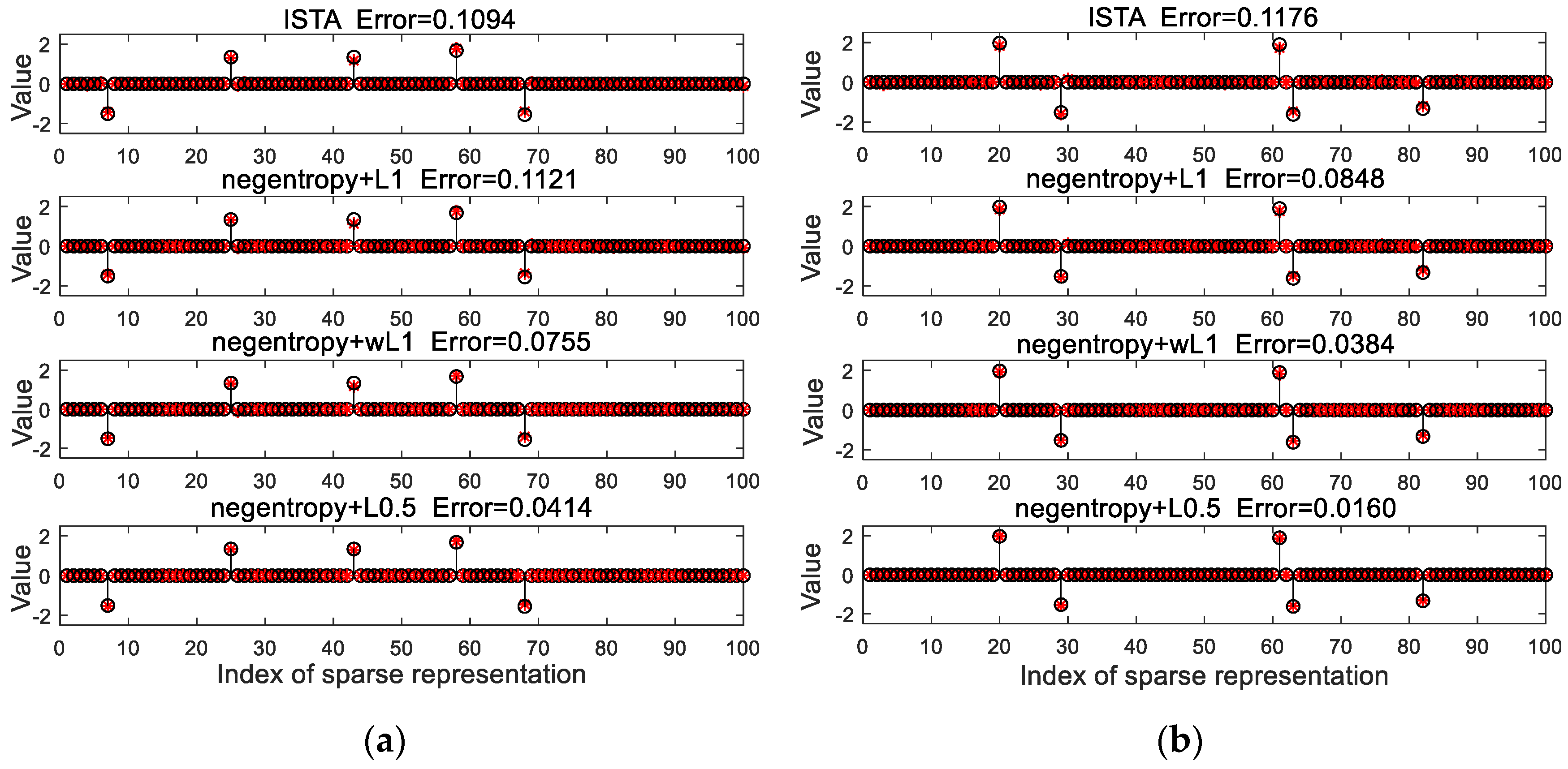
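The data generation described above can be sketched with NumPy as follows. This is a minimal sketch, not the authors' code: it assumes Equation (1) has the form $y = Ax + e$, and because the uniform range, the variance of the measurement matrix, the number of measurements and the mixture parameters are not given in this section, it uses hypothetical placeholders (amplitudes on $[-1, 1]$, entries of $A$ drawn from $\mathcal{N}(0, 1/M)$, $M = 60$, and 10% outliers with 100 times the nominal variance). The relative error is assumed to be $\|\hat{x} - x\|_2 / \|x\|_2$, and the noise is rescaled so that $10\log_{10}(\|Ax\|_2^2/\|e\|_2^2)$ equals the target SNR.

```python
import numpy as np

def make_problem(n=100, m=60, k=5, snr_db=16.0, impulsive=False, rng=None):
    """Draw one test problem y = A x + e with a k-sparse x.

    Placeholder assumptions (not stated in the text): amplitudes uniform on
    [-1, 1], A with i.i.d. N(0, 1/m) entries, m = 60, and impulse noise from
    a two-component Gaussian mixture with 10% outliers of 100x variance.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.zeros(n)
    support = rng.choice(n, size=k, replace=False)   # random positions
    x[support] = rng.uniform(-1.0, 1.0, size=k)       # placeholder range
    A = rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n))
    clean = A @ x
    if impulsive:
        p, kappa = 0.1, 100.0                          # hypothetical mixture
        outlier = rng.random(m) < p
        e = rng.normal(size=m) * np.where(outlier, np.sqrt(kappa), 1.0)
    else:
        e = rng.normal(size=m)
    # Rescale so that 10*log10(||A x||^2 / ||e||^2) equals snr_db.
    e *= np.linalg.norm(clean) / (np.linalg.norm(e) * 10 ** (snr_db / 20))
    return x, A, clean + e

def relative_error(x_hat, x):
    """Relative l2 error ||x_hat - x||_2 / ||x||_2 (assumed definition)."""
    return np.linalg.norm(x_hat - x) / np.linalg.norm(x)
```

Averaging `relative_error` over 100 independent draws from `make_problem` mirrors the averaging protocol used for the SNR sweeps; the recovery algorithm itself is left out.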

Figure 1 presents the performance of ISTA and the proposed algorithms under Gaussian and non-Gaussian noise with SNR = 16 dB. As shown in Figure 1a, the relative error of ISTA is slightly smaller than that of the proposed negentropy algorithm with norm constraint, which indicates that the negentropy objective function has no obvious advantage when the noise is Gaussian. With non-Gaussian noise, however, Figure 1b shows that the negentropy-based algorithms achieve higher recovery accuracy, which indicates that the negentropy objective function adapts better to non-Gaussian noise. Moreover, when the weighted norm and the norm were adopted as sparse constraints, the error decreased further, which implies that the norm as a sparse constraint can improve the accuracy of the algorithm.

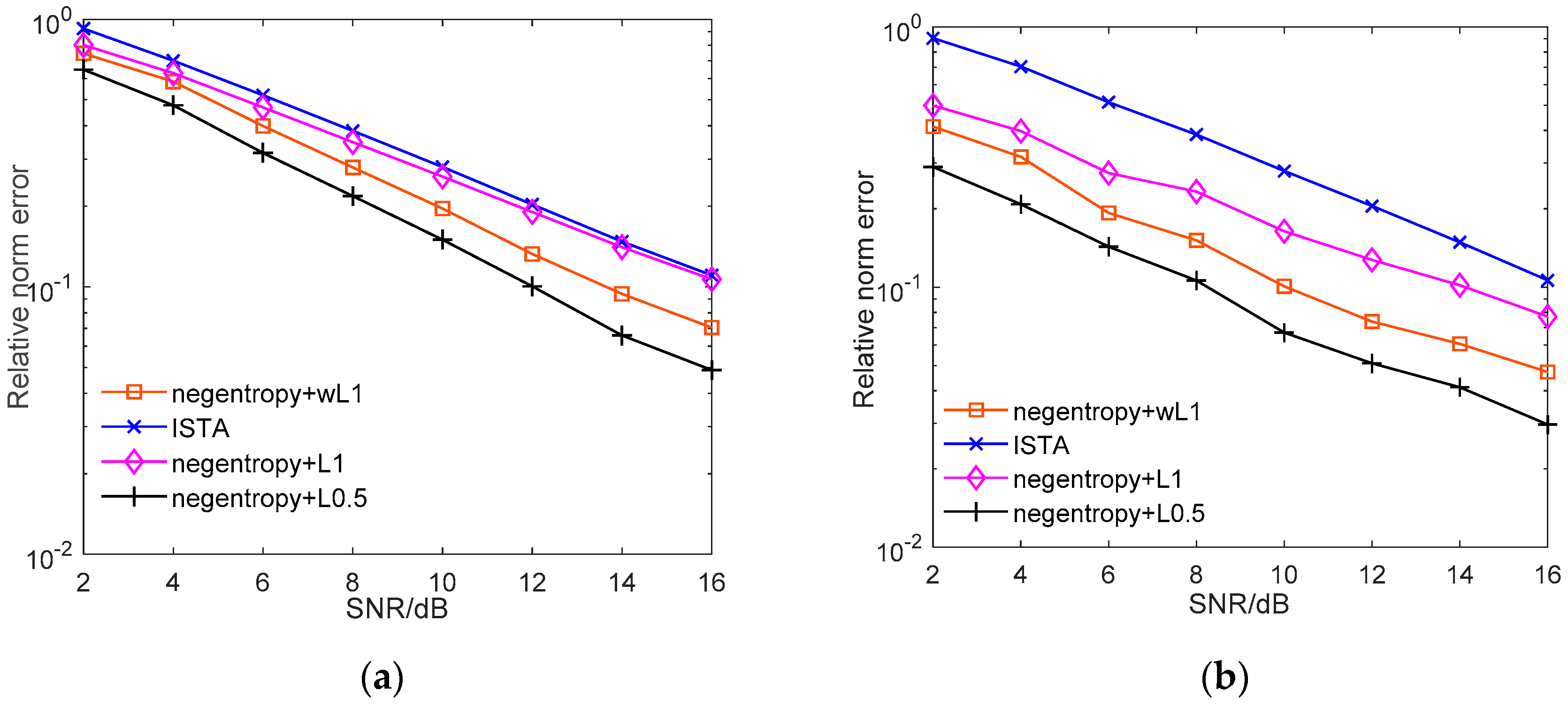

Figure 2 presents how the average reconstruction error varies with the signal-to-noise ratio (SNR); the average relative norm error is obtained over the 100 samples of signals and measurement matrices. As Figure 2a shows, the proposed negentropy-based methods achieve higher reconstruction accuracy across the range of SNRs. The performance gains increase further when the measurements are contaminated by non-Gaussian noise: as Figure 2b shows, the relative error of ISTA stays on the order of $10^{-1}$, whereas that of the proposed algorithm reaches the order of $10^{-2}$, which means that the proposed algorithm is more robust to non-Gaussian noise than the MSE-based ISTA. Moreover, when the weighted norm or the norm was adopted as the sparse constraint, the recovery accuracy increased further, and the norm case performs best, achieving a lower reconstruction error with high probability.

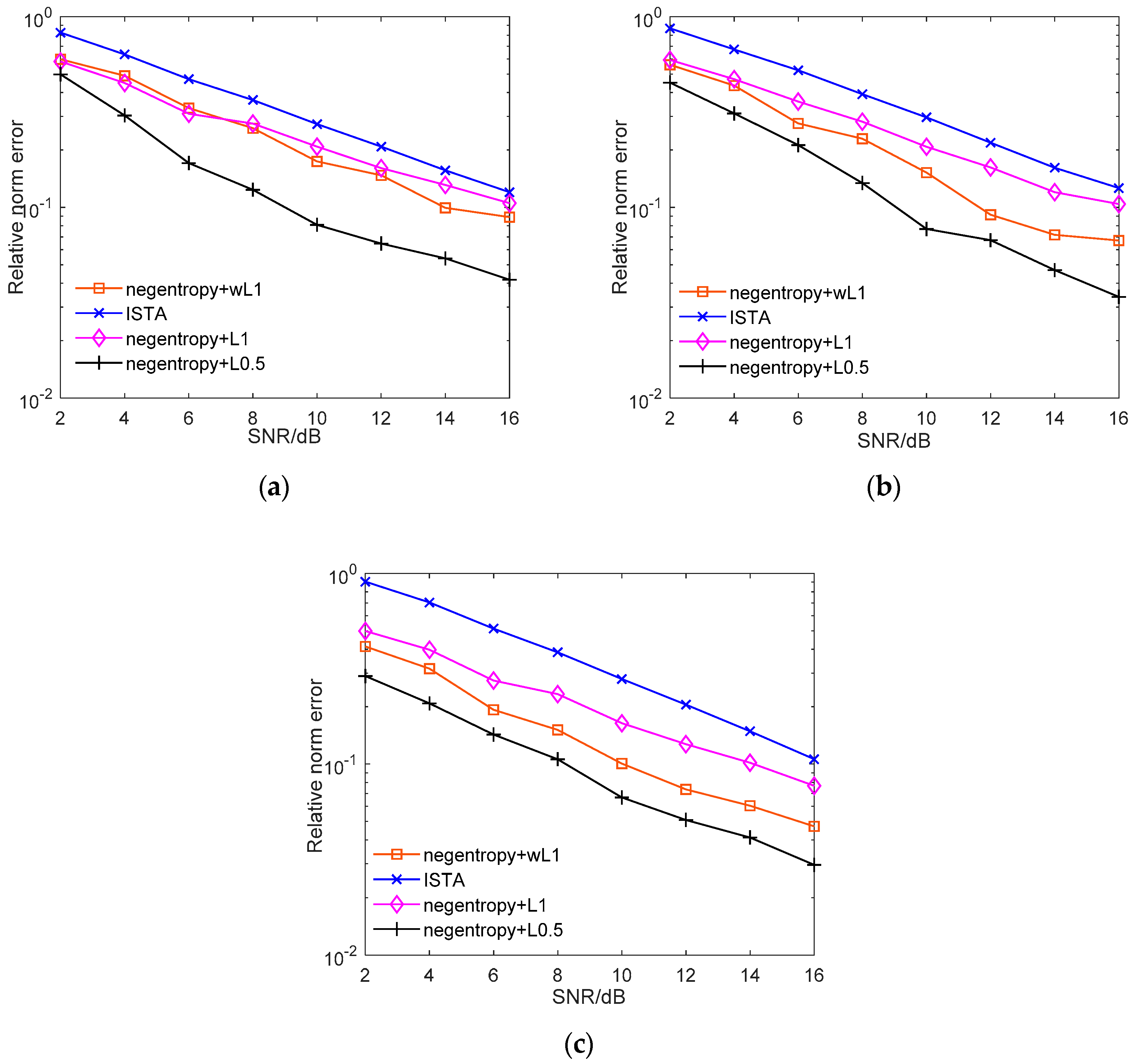

Figure 3 further illustrates the reconstruction performance with non-Gaussian noisy measurements under various compression ratios. As shown in Figure 3, although the performance of all algorithms deteriorates as the compression ratio decreases, the negentropy algorithms always maintain a lower relative error than ISTA. The norm-based negentropy algorithm performs best, which indicates that the negentropy algorithm performs more stably across compression ratios and suggests that the norm is a more effective sparse constraint than the norm.

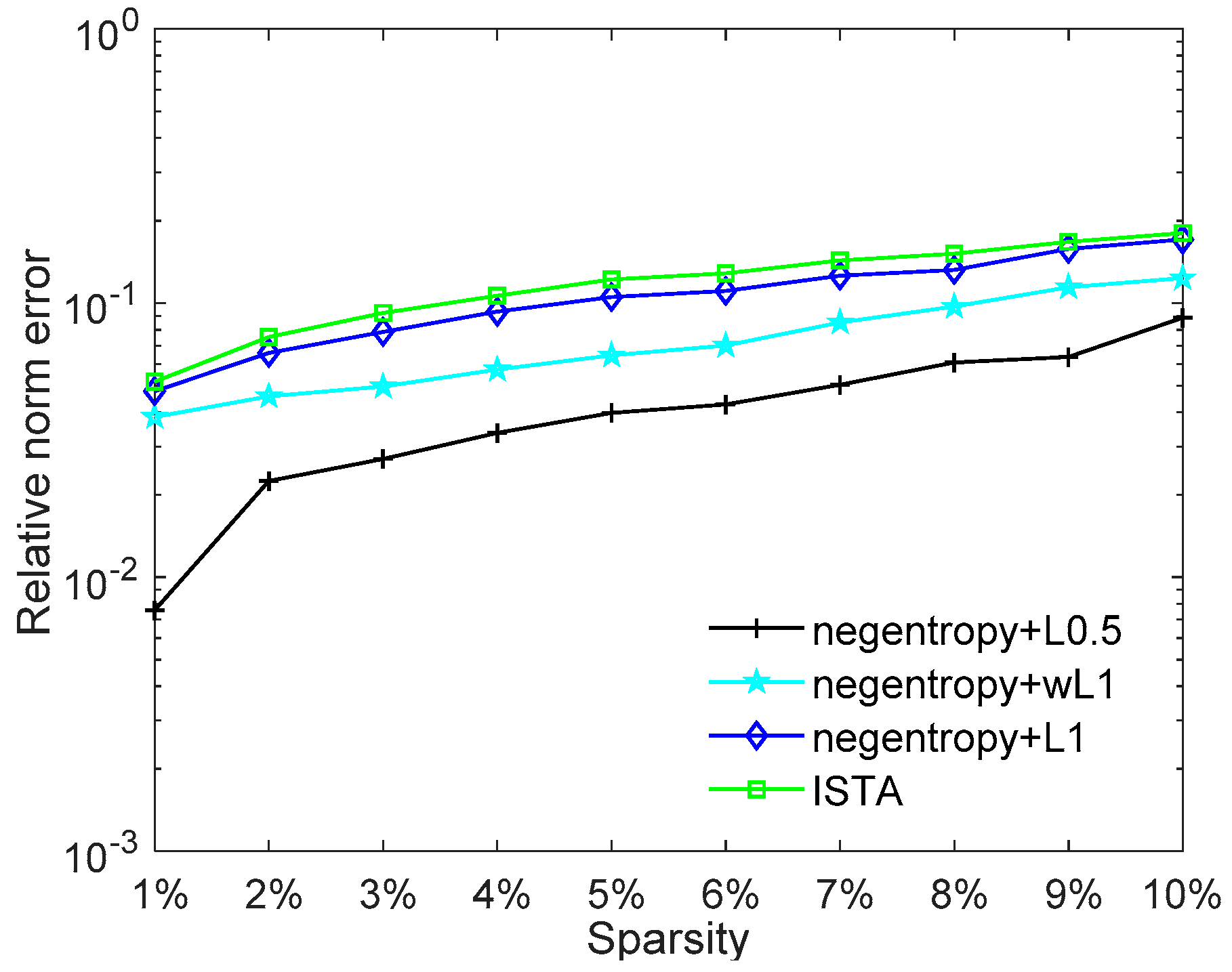

The impact of sparsity on the reconstruction performance is presented in Figure 4. As Figure 4 shows, the reconstruction error increases with the sparsity level, and the negentropy algorithms outperform ISTA. The reconstruction error of the negentropy algorithm based on the norm or the weighted norm reaches only the order of $10^{-1}$ when the signal has low sparsity levels, whereas the negentropy algorithm based on the norm performs best: its relative error always stays on the order of $10^{-2}$, which shows that it is better suited to signals with low sparsity.

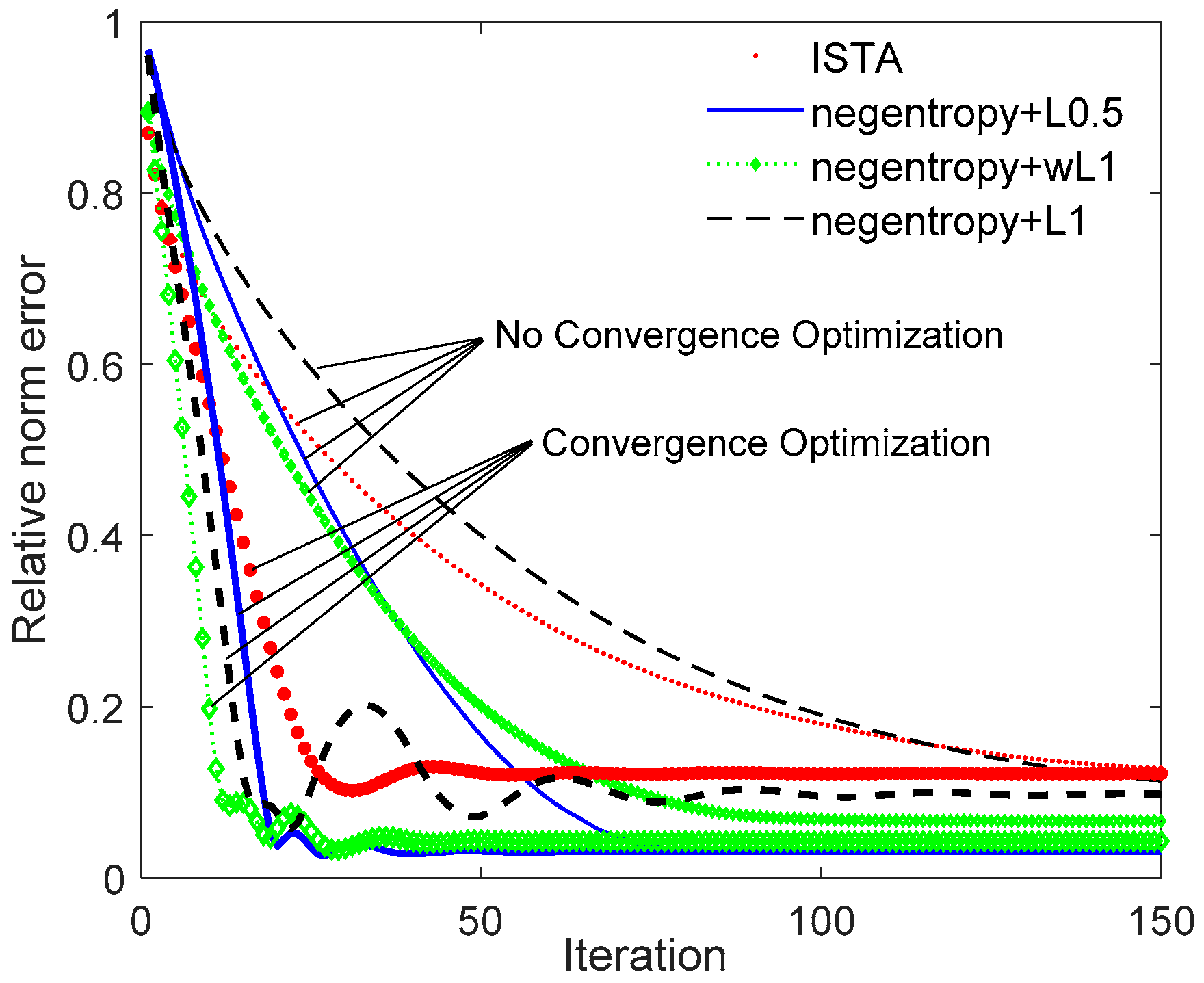

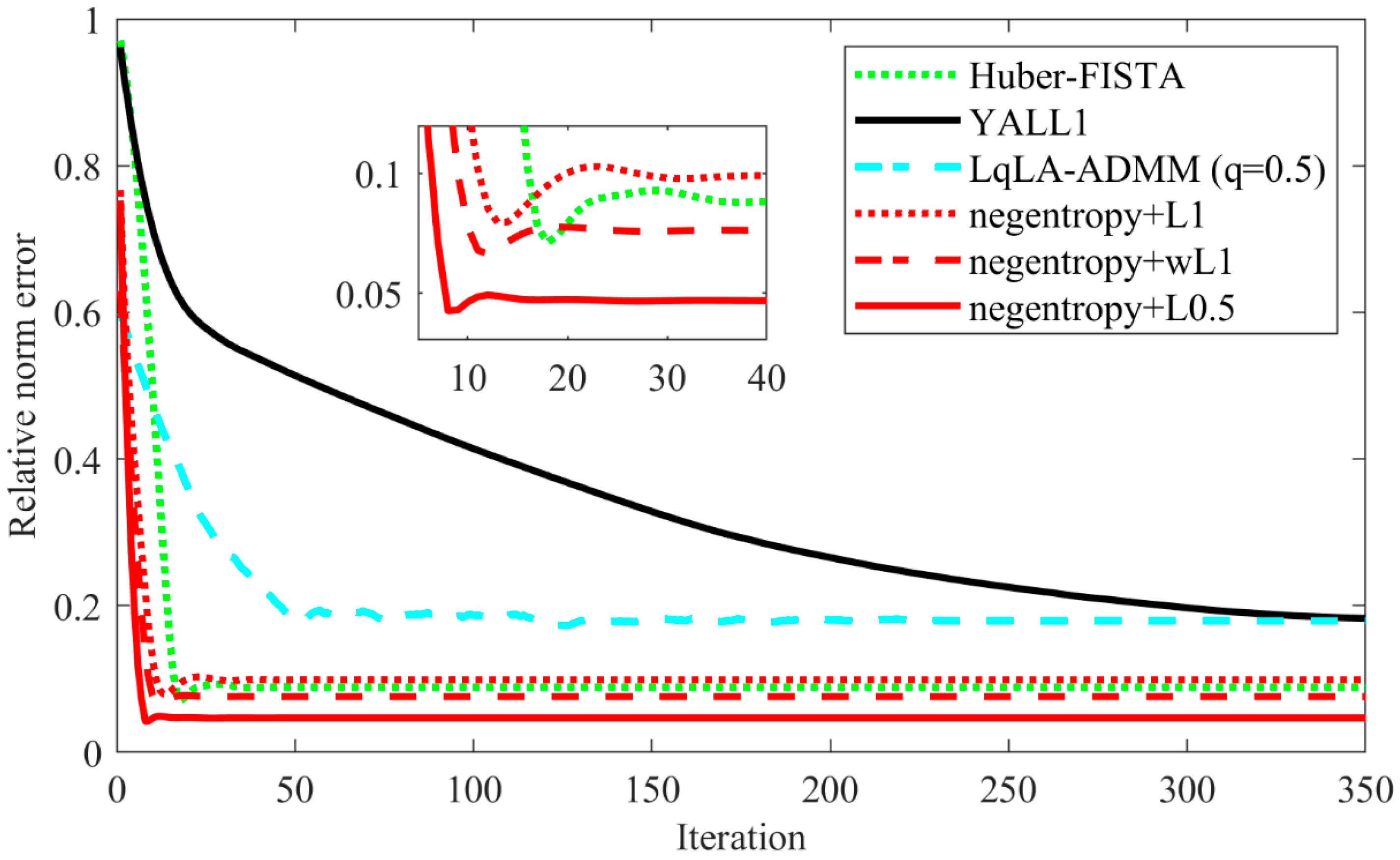

Figure 5 depicts the relative error versus the number of iterations. As the number of iterations increases, the relative error gradually decreases and eventually converges. As Figure 5 shows, the convergence speed improves significantly with convergence acceleration. Taking the negentropy algorithm based on the norm as an example, without acceleration the curve flattens only after about 75 iterations, whereas with acceleration convergence is reached in about 40 iterations. Thus, with the FISTA-based acceleration, the negentropy algorithm also converges faster than ISTA in this experiment.
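The acceleration discussed above follows FISTA. Because the negentropy objective is not restated in this section, the sketch below applies ISTA and its FISTA-accelerated variant to the standard MSE-based LASSO problem $\min_x \tfrac{1}{2}\|y - Ax\|_2^2 + \lambda\|x\|_1$, i.e. the ISTA baseline; it is not the proposed negentropy algorithm. The only difference between the two modes is the momentum (extrapolation) step, which is the ingredient a FISTA-based acceleration adds.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t*||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(A, y, lam, n_iter=200, accelerate=False):
    """ISTA (accelerate=False) or FISTA (accelerate=True) for
    min_x 0.5*||y - A x||_2^2 + lam*||x||_1 (MSE-based baseline)."""
    L = np.linalg.norm(A, 2) ** 2          # Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    z, t = x.copy(), 1.0                   # FISTA extrapolation point and weight
    for _ in range(n_iter):
        point = z if accelerate else x
        grad = A.T @ (A @ point - y)       # gradient of the quadratic data term
        x_new = soft_threshold(point - grad / L, lam / L)
        if accelerate:
            t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
            z = x_new + ((t - 1.0) / t_new) * (x_new - x)   # momentum step
            t = t_new
        x = x_new
    return x
```

The step size $1/L$ uses the squared spectral norm of $A$; with a non-quadratic data-fidelity term, $L$ would instead be a Lipschitz constant of that term's gradient.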

(2) Image Denoising Experiments

To test the proposed algorithms in a practical application, we applied them to image denoising and compared them with ISTA. The details of the image denoising experiment are as follows. The test image is 'Cameraman', a 512 × 512-pixel grayscale photograph. Gaussian noise or salt-and-pepper noise was added to the image, which was then divided into overlapping 12 × 12-pixel patches, with a step of 2 pixels between adjacent patches, to form the input set . Following the signal model in Equation (1), we generated a 144 × 256 overcomplete discrete cosine transform (DCT) dictionary for the denoising task. The sparse representation of each patch over this dictionary was recovered by the proposed algorithms and by ISTA, which removes the noise from the image. The parameter settings of the algorithms are summarized in Table 2. The peak signal-to-noise ratio (PSNR) of the recovered images and the structural similarity (SSIM) index between the recovered and original images were used to evaluate the denoising performance.
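The 144 × 256 dictionary matches 12 × 12 patches (144 pixels) with 16 × 16 separable atoms. The sketch below builds an overcomplete DCT dictionary in the separable form popularized by K-SVD denoising and extracts overlapping patches with a step of 2 pixels. The exact construction used by the authors is not specified here, so treat this as one plausible choice; the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def overcomplete_dct(patch=12, atoms_per_dim=16):
    """Separable overcomplete DCT dictionary of size patch^2 x atoms_per_dim^2
    (144 x 256 for the defaults), K-SVD style construction."""
    i = np.arange(patch)[:, None]
    k = np.arange(atoms_per_dim)[None, :]
    d1 = np.cos(np.pi * i * k / atoms_per_dim)    # 1-D cosine atoms
    d1[:, 1:] -= d1[:, 1:].mean(axis=0)           # zero-mean non-DC atoms
    d1 /= np.linalg.norm(d1, axis=0)              # unit-norm columns
    return np.kron(d1, d1)                        # 2-D atoms, row-major vec

def extract_patches(img, patch=12, step=2):
    """Stack overlapping patch x patch blocks taken every `step` pixels
    as the columns of a (patch^2, n_patches) matrix."""
    windows = sliding_window_view(img, (patch, patch))[::step, ::step]
    return windows.reshape(-1, patch * patch).T
```

For a 512 × 512 image, `extract_patches` yields (512 − 12)/2 + 1 = 251 patch positions per dimension, i.e. a 144 × 63,001 matrix whose columns can be coded independently over `overcomplete_dct()`.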

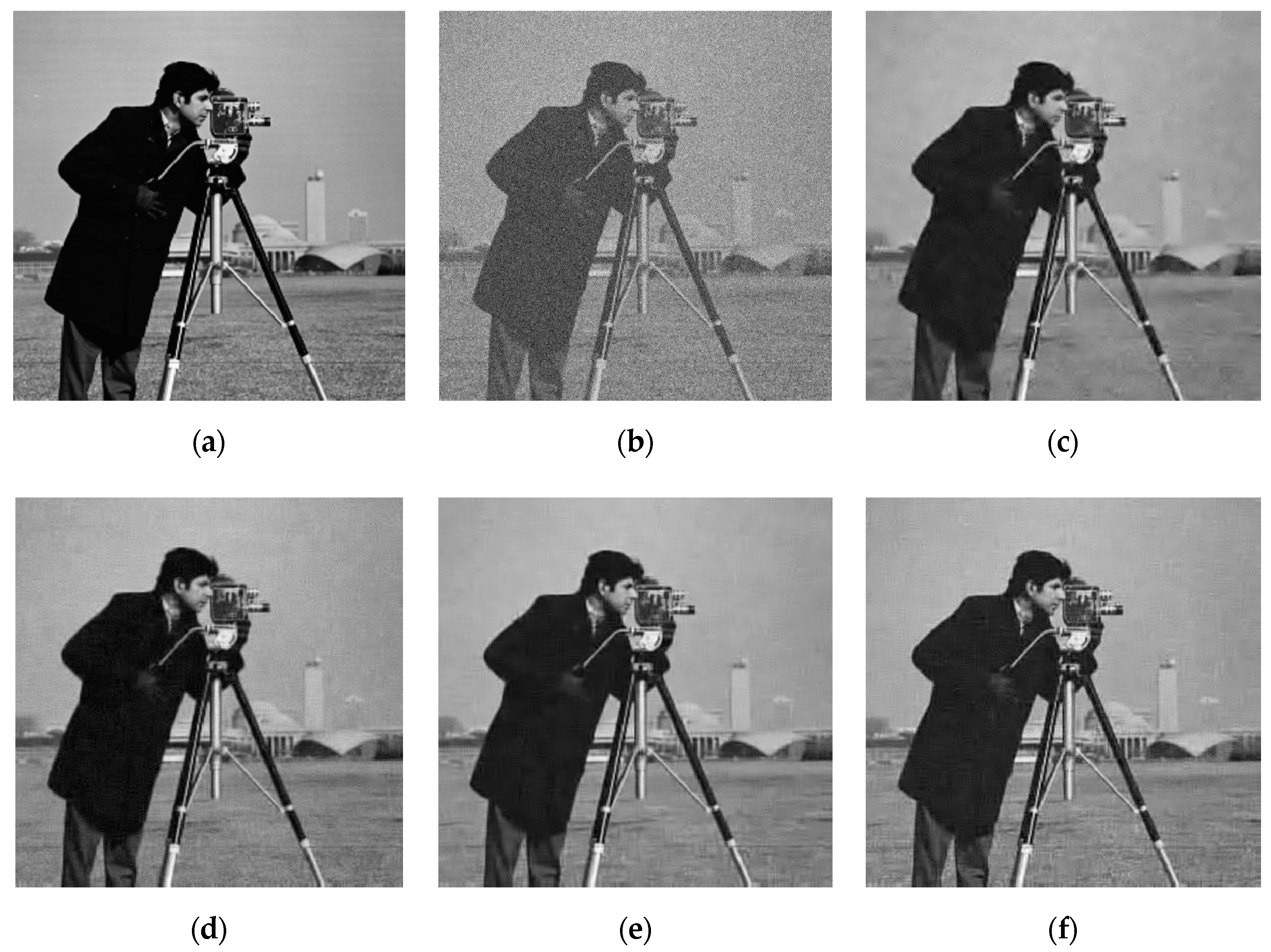

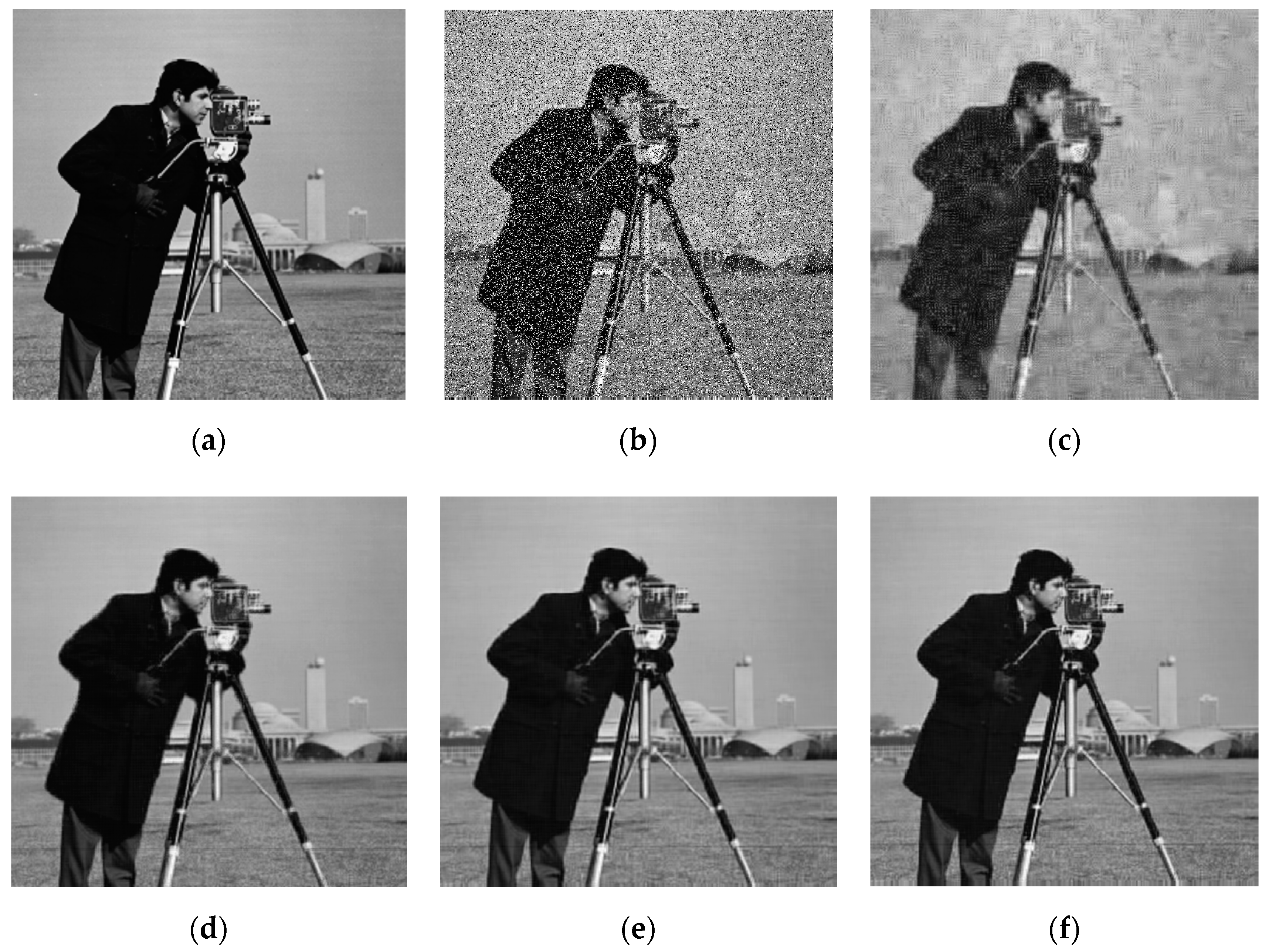

Figure 6 shows the images recovered by ISTA and the proposed algorithms from an image corrupted by 20.17 dB Gaussian noise. Comparing (c) and (d), the PSNR of the image recovered by ISTA is 1 dB higher than that of the negentropy algorithm. Visually, however, the image recovered by the negentropy algorithm based on the norm is relatively sharp, whereas the image recovered by ISTA is somewhat blurred. Therefore, the negentropy algorithm denoises images with Gaussian noise to some extent, but it has no obvious advantage over ISTA.
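PSNR is computed from the mean squared error on the 8-bit intensity scale. For additive Gaussian noise with standard deviation $\sigma$ and no clipping, $\mathrm{MSE} \approx \sigma^2$, so $\mathrm{PSNR} \approx 20\log_{10}(255/\sigma)$; $\sigma = 25$ gives $20\log_{10}(10.2) \approx 20.17$ dB, which is consistent with, though not stated as, a noise standard deviation of about 25 for the input in Figure 6. A minimal sketch assuming a peak value of 255:

```python
import numpy as np

def psnr(reference, estimate, peak=255.0):
    """PSNR in dB between two images on a [0, peak] intensity scale."""
    diff = np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")                      # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

# Gaussian noise with standard deviation sigma gives MSE ~ sigma^2 (no clipping),
# so the PSNR of the noisy input is about 20*log10(peak / sigma).
sigma = 25.0
print(round(20 * np.log10(255.0 / sigma), 2))    # 20.17
```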

Figure 7 shows the results of recovering an image corrupted by salt-and-pepper noise with 30% density using ISTA and the proposed algorithms. The image recovered by ISTA is very blurred, with a PSNR of only 20.24 dB, indicating that ISTA can hardly recover images corrupted by strong salt-and-pepper noise. The proposed algorithms, however, reconstruct the original image clearly, with PSNR values above 30 dB, which shows that the negentropy-based algorithms can cope with non-Gaussian noise. Comparing (d–f), the PSNR of the image recovered by the negentropy algorithm based on the norm is 1.3 dB higher than that recovered by the negentropy algorithm based on the norm, and its visual quality is better, which demonstrates that the norm, as a sparse constraint, improves the reconstruction accuracy and plays a key role in restoring image details. For convenience of comparison, the PSNR results under both noise conditions are listed in Table 3.
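Salt-and-pepper noise of density $d$ replaces a fraction $d$ of the pixels with the extreme values 0 or 255, producing the sparse, large-magnitude errors that an MSE criterion handles poorly. The generator used for Figure 7 is not specified; the sketch below follows one common convention (the one used by MATLAB's `imnoise`), in which each corrupted pixel becomes salt or pepper with equal probability.

```python
import numpy as np

def add_salt_and_pepper(img, density, rng=None, low=0.0, high=255.0):
    """Corrupt about a fraction `density` of pixels: each corrupted pixel is
    set to `low` (pepper) or `high` (salt) with equal probability."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = np.array(img, dtype=float, copy=True)
    u = rng.random(noisy.shape)                        # one uniform draw per pixel
    noisy[u < density / 2] = low                       # pepper
    noisy[(u >= density / 2) & (u < density)] = high   # salt
    return noisy
```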

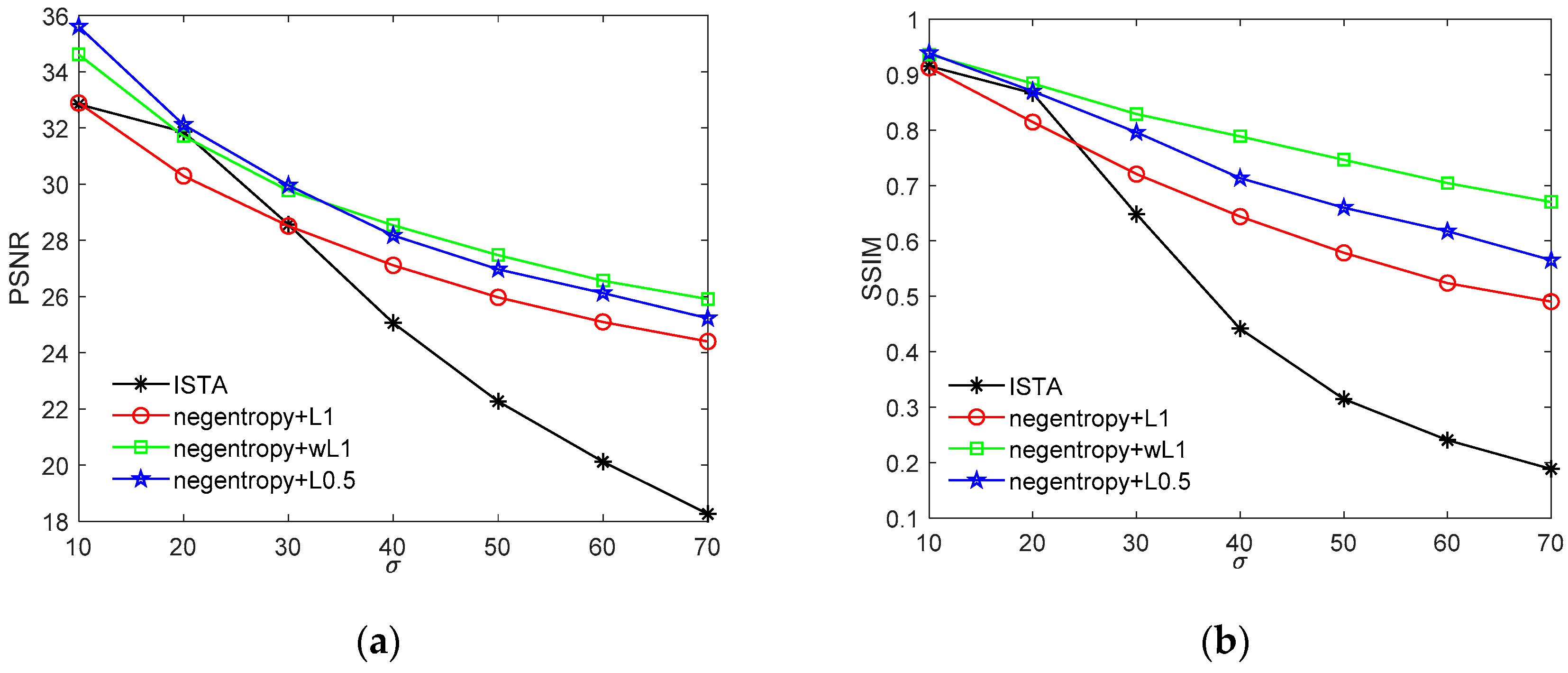

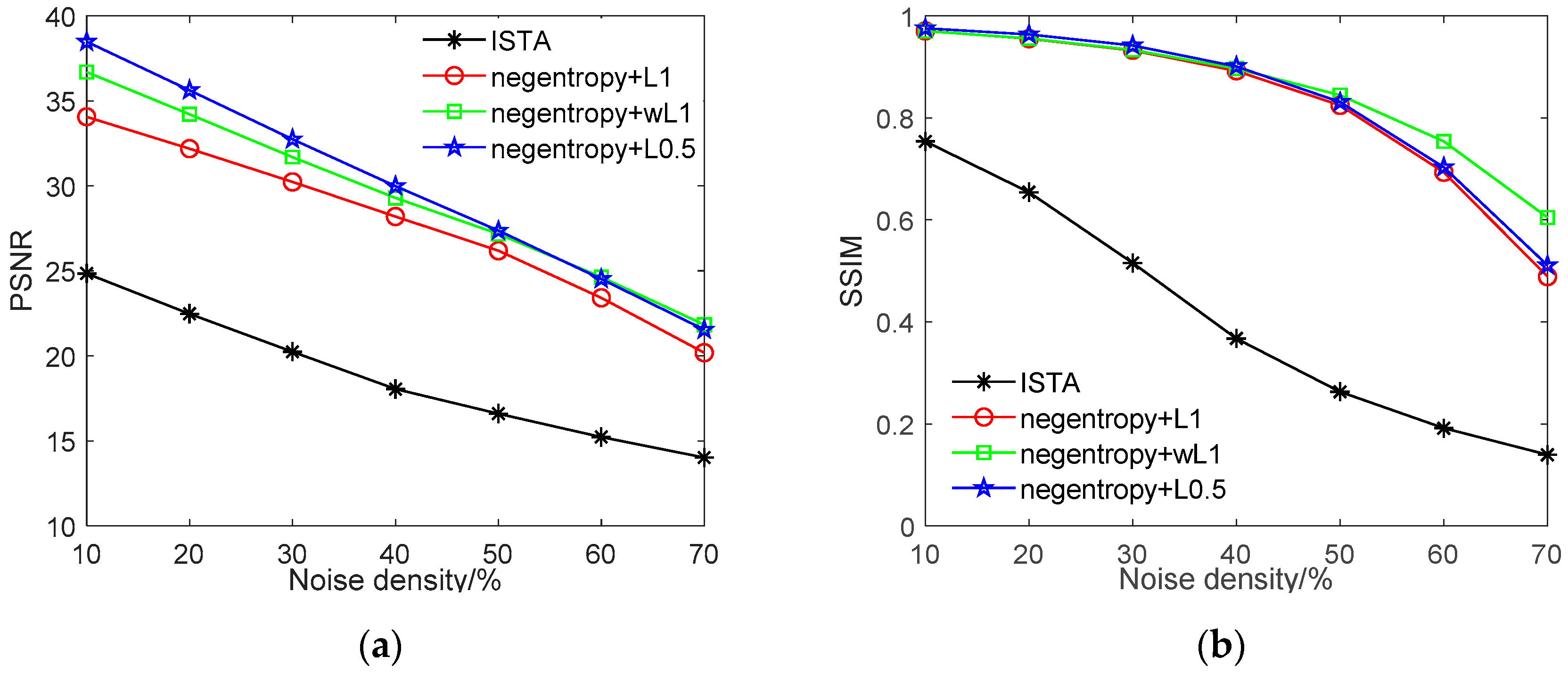

The PSNR and SSIM values of the denoised images are presented in Figure 8. Both the PSNR and the SSIM decrease gradually as the Gaussian noise intensity increases. Although ISTA performs comparably to the proposed algorithms when the standard deviation of the Gaussian noise is around 20, its performance degrades rapidly as the noise intensity increases. Under strong Gaussian noise, the proposed algorithms achieve much higher PSNR and SSIM, which indicates that the negentropy algorithms adapt well to different noise intensities.

Figure 9 presents the denoising performance under various densities of salt-and-pepper noise. As Figure 9 shows, ISTA can recover images only when the salt-and-pepper noise density is below 20%, and even then the recovered image is extremely blurred, with a maximum SSIM of only 0.74; at higher noise densities, ISTA is unable to recover a clear image. The negentropy algorithms, on the other hand, recover the original image with much higher accuracy. For instance, when the noise density is below 30%, all three negentropy-based methods achieve PSNR values above 30 dB and SSIM values above 0.9. The negentropy algorithm with the norm achieves the best performance, with PSNR = 38 dB and SSIM = 0.97 at a noise density of 10%. With the weighted norm as the constraint, although the PSNR is lower than with the norm, the structural similarity between the recovered and original images is higher, leading to better visual quality.

3.2. Comparison with Robust Methods

(1) Sparse Signal Recovery

In this part, we conduct sparse signal recovery experiments under non-Gaussian noise and compare against several well-known robust sparse recovery algorithms, including Huber-FISTA [20], YALL1 [21], and LqLA-ADMM [22]. The simulated k-sparse signal is constructed as in Section 3.1. The length of the sparse signal and the number of measurements are set to N = 100 and M = 60. An orthonormal Gaussian random matrix is used as the measurement matrix, and the measurements are corrupted by impulse noise with SNR = 16 dB. Since the original sparse signal is unknown, we choose (as proposed in [37]) with for all algorithms.
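An orthonormal Gaussian random matrix can be obtained by orthonormalizing an i.i.d. Gaussian matrix. The sketch below shows one standard way to do this (the section does not say which was used): take a reduced QR factorization of an N × M Gaussian matrix and transpose the orthonormal factor, so that the resulting M × N matrix satisfies $AA^{\top} = I_M$. The function name is hypothetical.

```python
import numpy as np

def orthonormal_gaussian(m, n, rng=None):
    """M x N matrix (m <= n) with orthonormal rows, built from a Gaussian draw."""
    rng = np.random.default_rng() if rng is None else rng
    g = rng.standard_normal((n, m))      # i.i.d. Gaussian, N x M
    q, _ = np.linalg.qr(g)               # reduced QR: q is N x M with q.T @ q = I
    return q.T                           # rows of the result are orthonormal

A = orthonormal_gaussian(60, 100)        # M = 60, N = 100 as in this experiment
```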

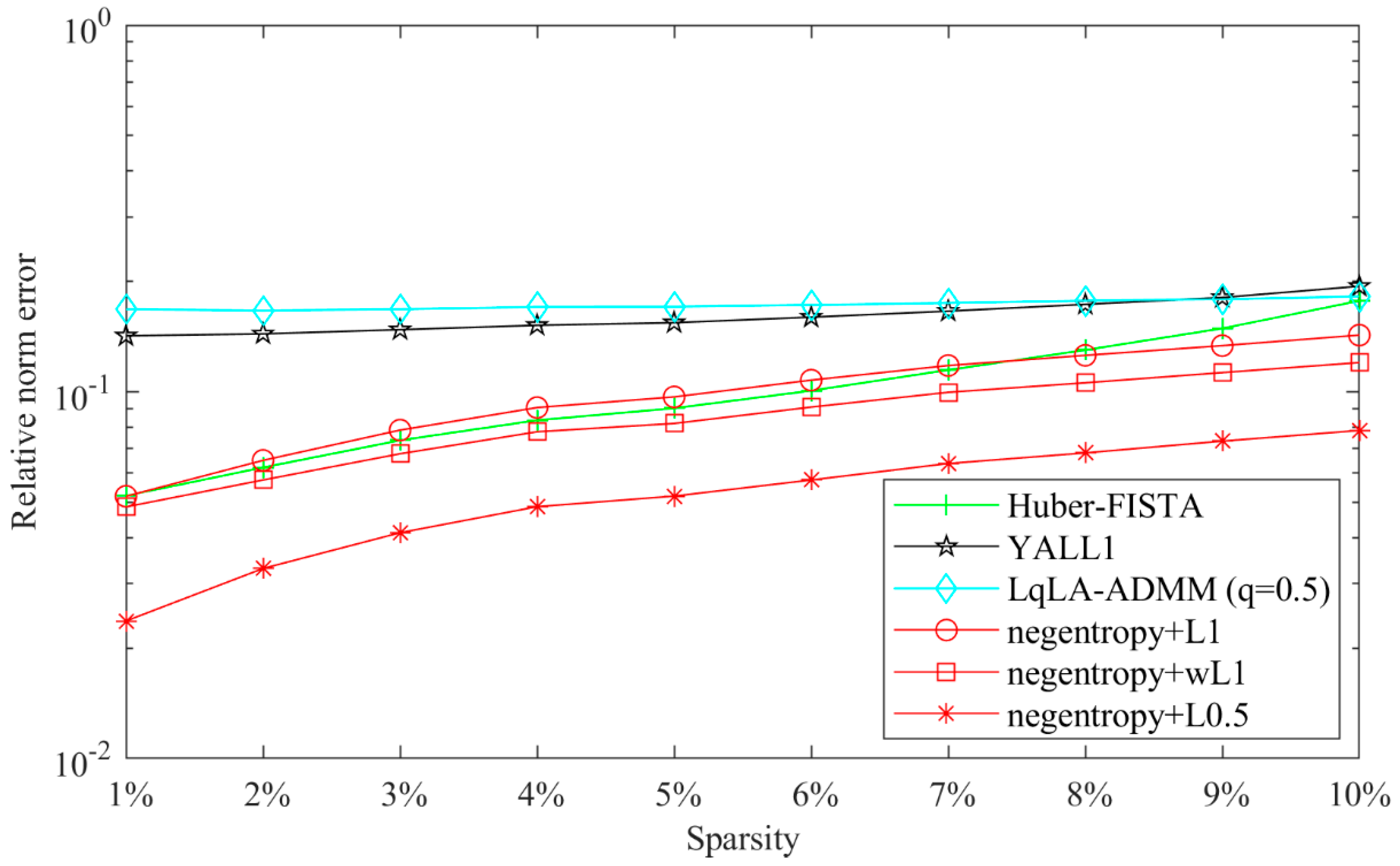

Figure 10 presents the recovery performance of the compared algorithms versus sparsity with . Each algorithm is run 300 times for each k. Figure 11 shows the relative error versus the number of iterations for fixed k = 5. As these figures show, the negentropy algorithms (except the norm-based negentropy algorithm at low sparsity levels) outperform the other compared algorithms in both recovery performance and convergence speed, especially with the norm.

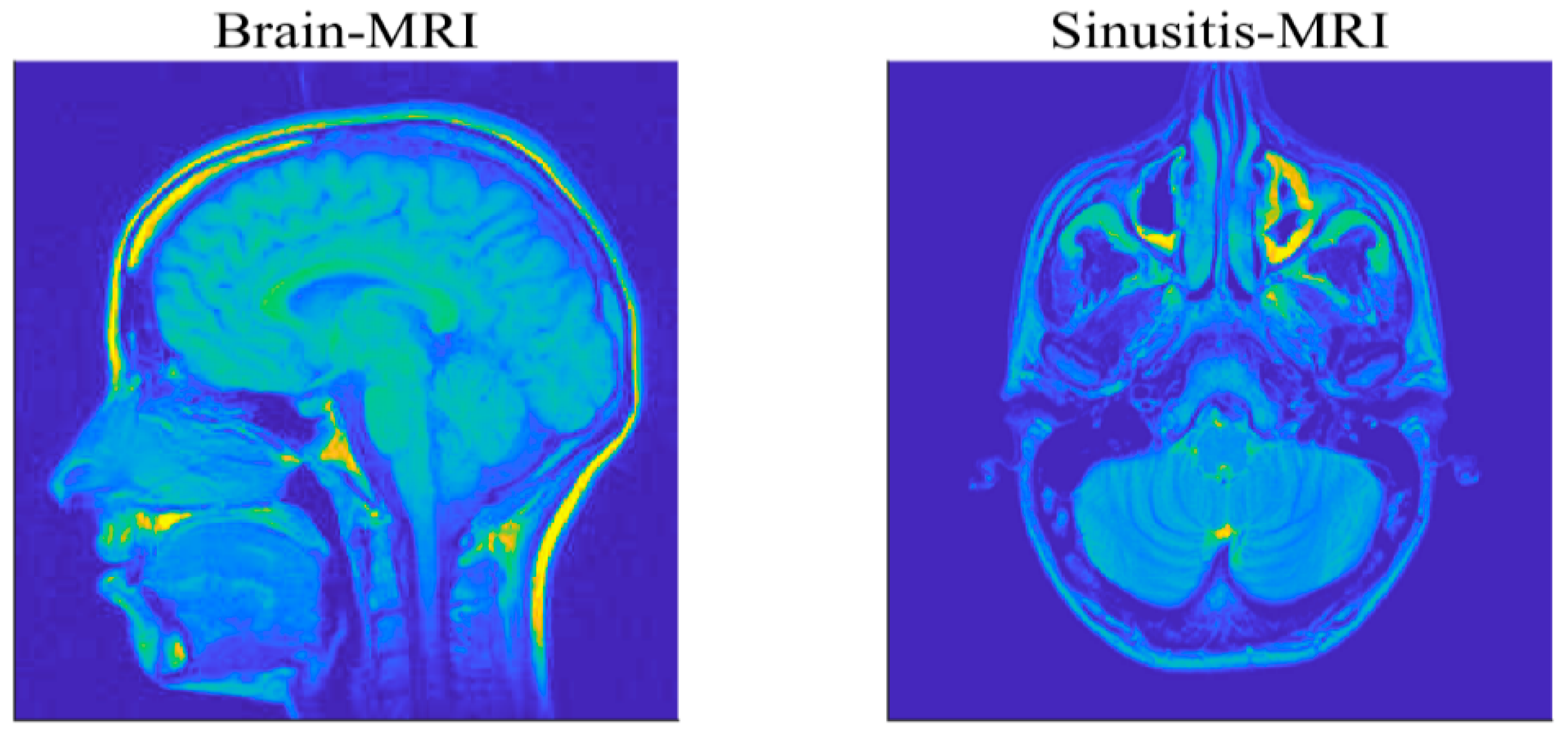

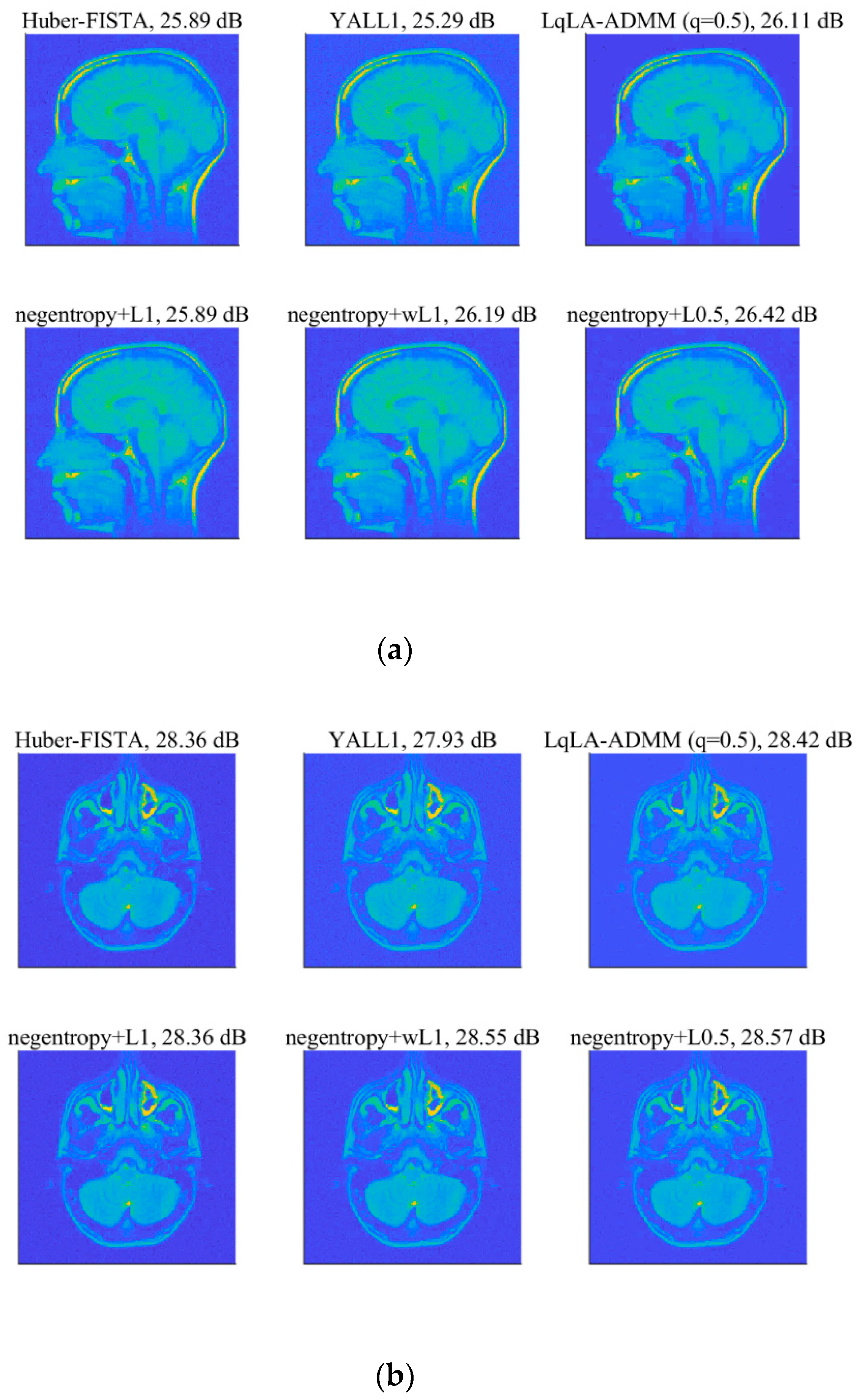

(2) Magnetic Resonance Imaging Example

To further verify the effectiveness and practicality of the proposed algorithms, this part focuses on the reconstruction of medical images. Two MRI images of size are used, a brain MRI and a sinusitis MRI, as shown in Figure 12, and the recovery performance is evaluated by PSNR. Haar wavelets are used as the basis for the sparse representation of the images. The compression ratio M/N is set to 0.4, and the measurement matrix is a partial DCT matrix. A Gaussian mixture model (GMM) is used to model the impulsive noise, where the parameters and respectively control the proportion and the strength of outliers in the noise [19]. To ensure a fair comparison, the in each algorithm is selected to give the best performance in terms of relative recovery error [22].
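The measurement setup for the MRI example can be sketched as a partial DCT matrix (M randomly selected rows of the orthonormal DCT-II matrix, with M/N = 0.4) plus impulsive noise drawn from a two-component Gaussian mixture. The mixture form $(1-\xi)\,\mathcal{N}(0,\sigma^2) + \xi\,\mathcal{N}(0,\kappa\sigma^2)$, the parameter names `xi` (outlier proportion) and `kappa` (outlier strength), and their default values are assumptions for illustration; the section only states that two parameters control the proportion and strength of the outliers. Forming the DCT matrix explicitly is practical only for small N; for full-size images a fast DCT would be applied instead.

```python
import numpy as np

def partial_dct_matrix(n, ratio=0.4, rng=None):
    """M x N partial DCT: M = round(ratio * N) random rows of the orthonormal
    DCT-II matrix, so the selected rows are orthonormal."""
    rng = np.random.default_rng() if rng is None else rng
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    C[0, :] /= np.sqrt(2.0)                       # DC row scaling: C @ C.T = I
    rows = np.sort(rng.choice(n, size=int(round(ratio * n)), replace=False))
    return C[rows]

def gmm_noise(size, sigma, xi=0.1, kappa=100.0, rng=None):
    """Samples from (1 - xi) N(0, sigma^2) + xi N(0, kappa * sigma^2);
    xi and kappa are hypothetical names for outlier proportion and strength."""
    rng = np.random.default_rng() if rng is None else rng
    outlier = rng.random(size) < xi               # which samples are outliers
    scale = np.where(outlier, np.sqrt(kappa), 1.0)
    return sigma * rng.standard_normal(size) * scale
```

To target a given SNR such as 20 dB, the noise vector can be rescaled afterwards so that $10\log_{10}(\|Ax\|_2^2/\|e\|_2^2)$ equals the desired value.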

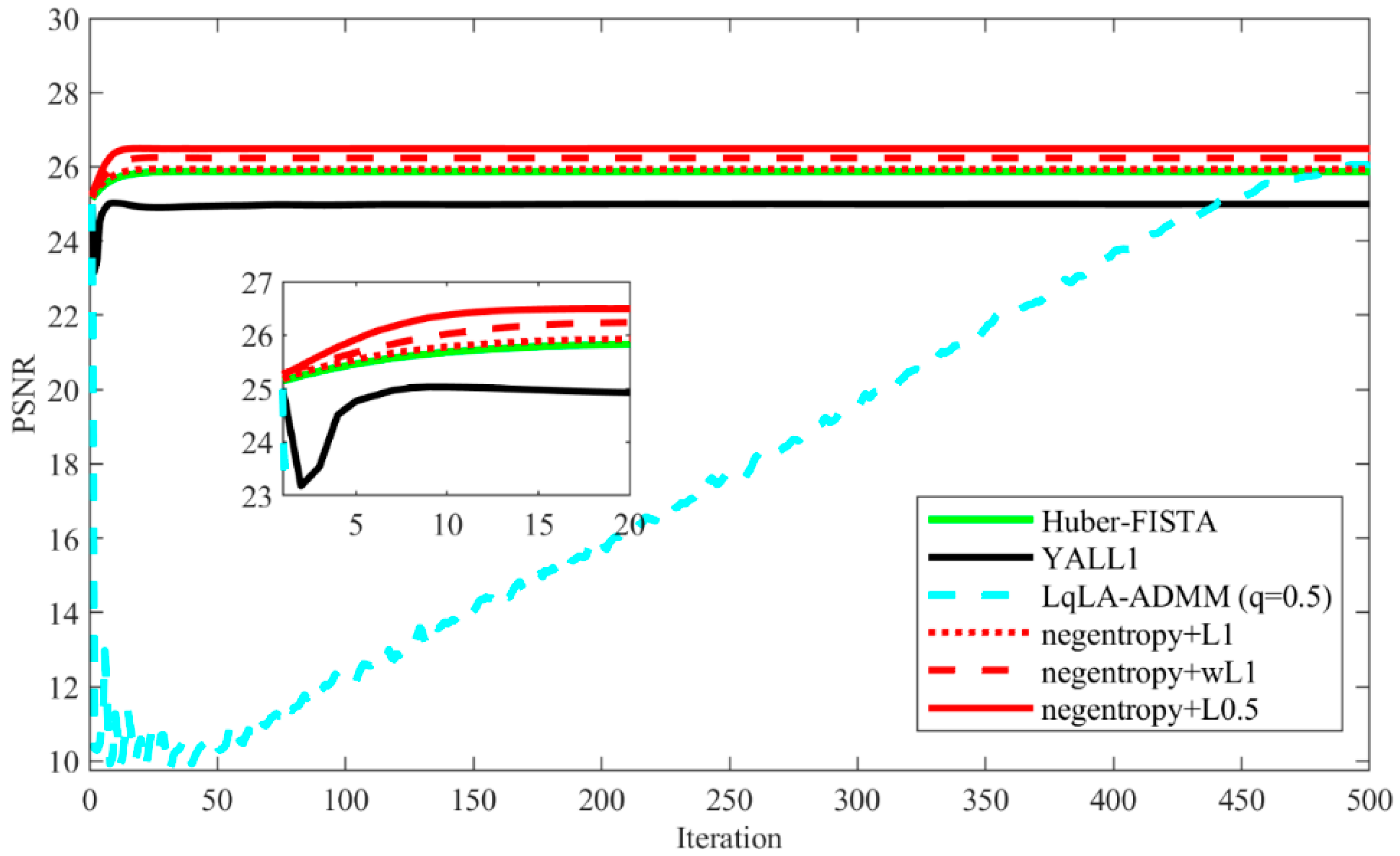

Figure 13 shows the MRI images recovered by all algorithms under GMM noise with and SNR = 20 dB, and the PSNR results are listed in Table 4. Every algorithm successfully reconstructs both MRI images. Quantitatively, the MRI images recovered by the negentropy algorithms based on the norm achieve higher PSNR than those recovered by the other compared algorithms, and the negentropy algorithm based on the norm obtains the best recovery performance. In particular, for the brain MRI image, the PSNR gains of negentropy + over Huber-FISTA, YALL1, and LqLA-ADMM (q = 0.5) are 0.53 dB, 1.13 dB, and 0.31 dB, respectively. Furthermore, Figure 14 presents the PSNR convergence curves versus iterations for the brain MRI image: compared with LqLA-ADMM (q = 0.5) and Huber-FISTA, the negentropy algorithm requires fewer iterations to converge. Thus, the proposed algorithm is more efficient for MRI image recovery in terms of both accuracy and convergence speed.