Remote Insects Trap Monitoring System Using Deep Learning Framework and IoT

Abstract

1. Introduction

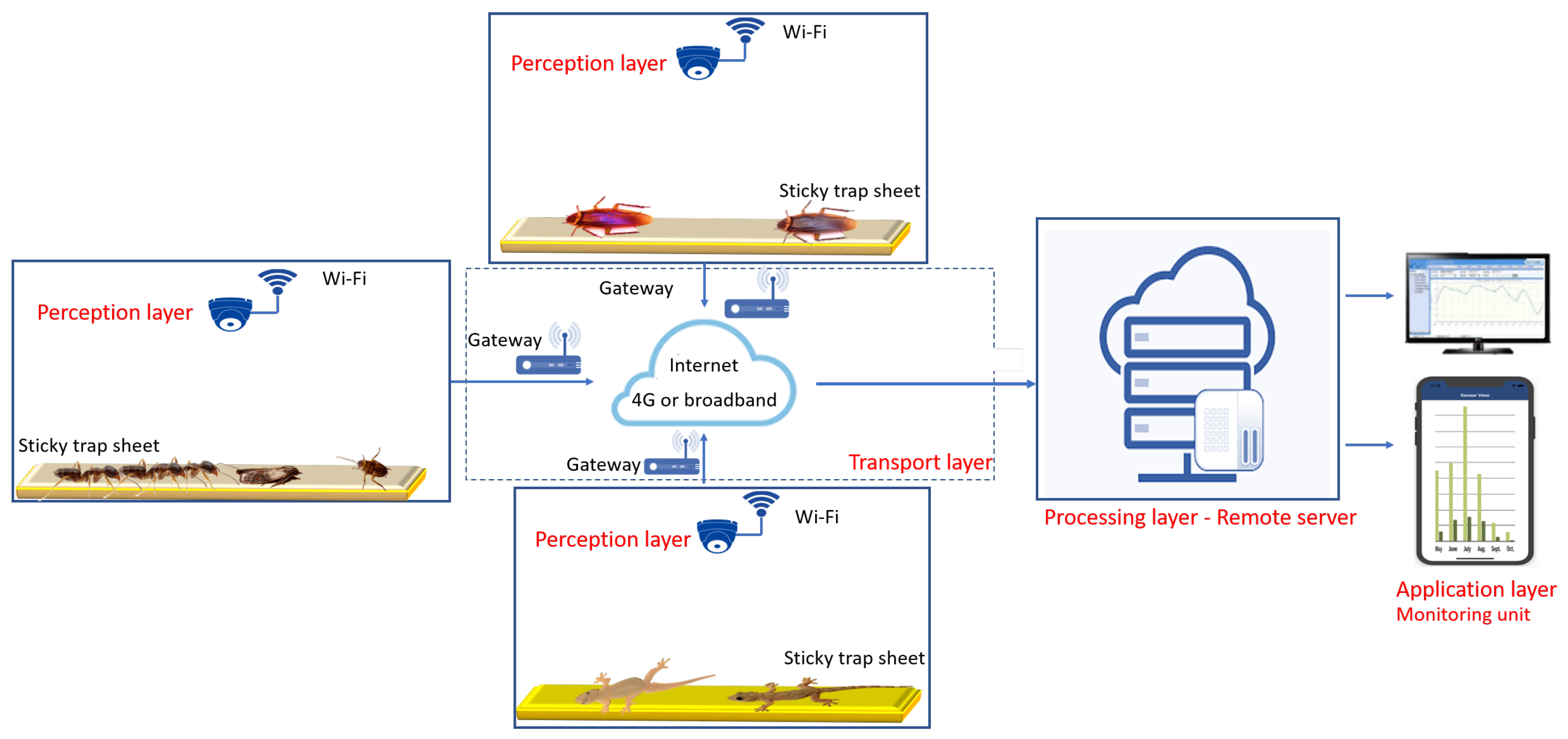

2. Proposed System

2.1. Perception Layer

2.2. Transport Layer

2.3. Processing Layer

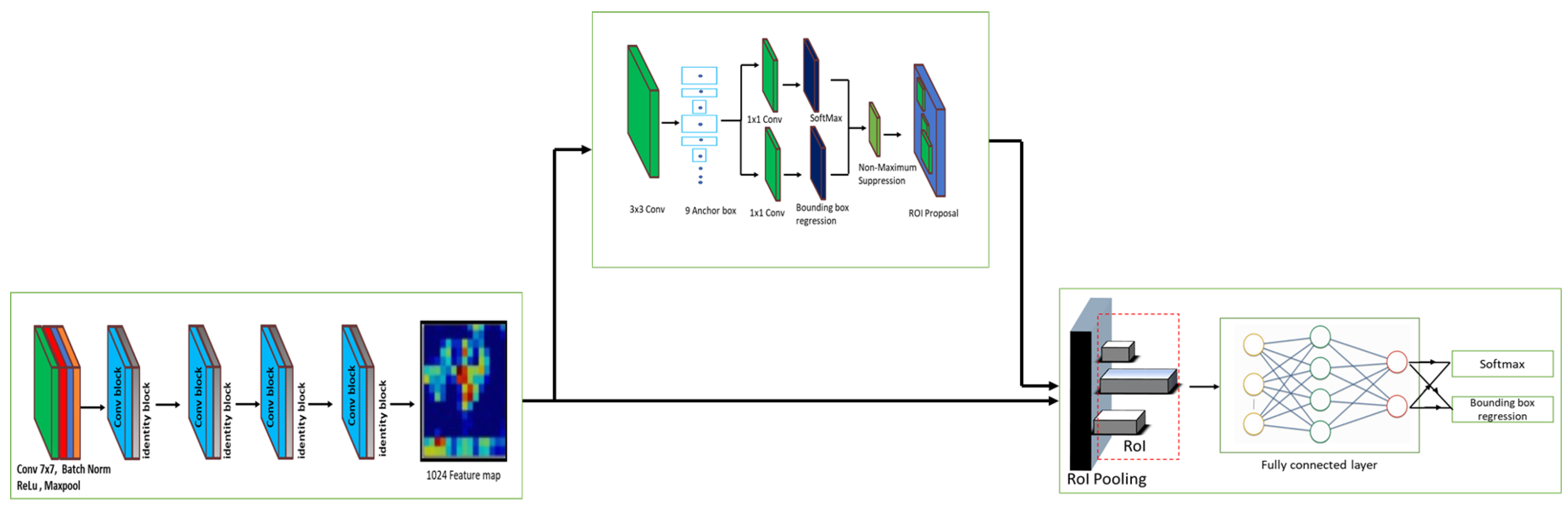

Insect Detection Module

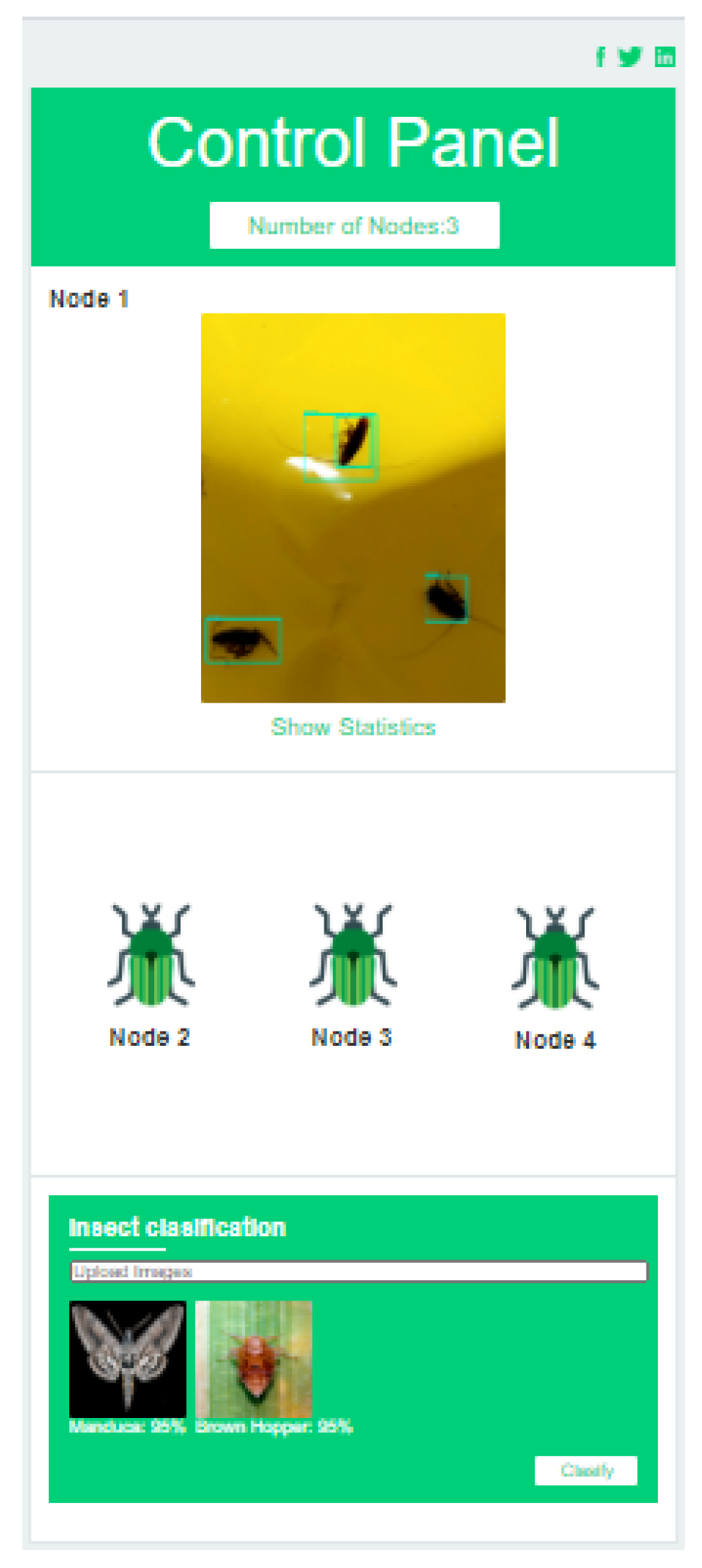

2.4. Application Layer

3. Experimental Results

3.1. Dataset Preparation and Annotations

3.2. Hardware Details

3.3. Training

3.4. Evaluation Metrics

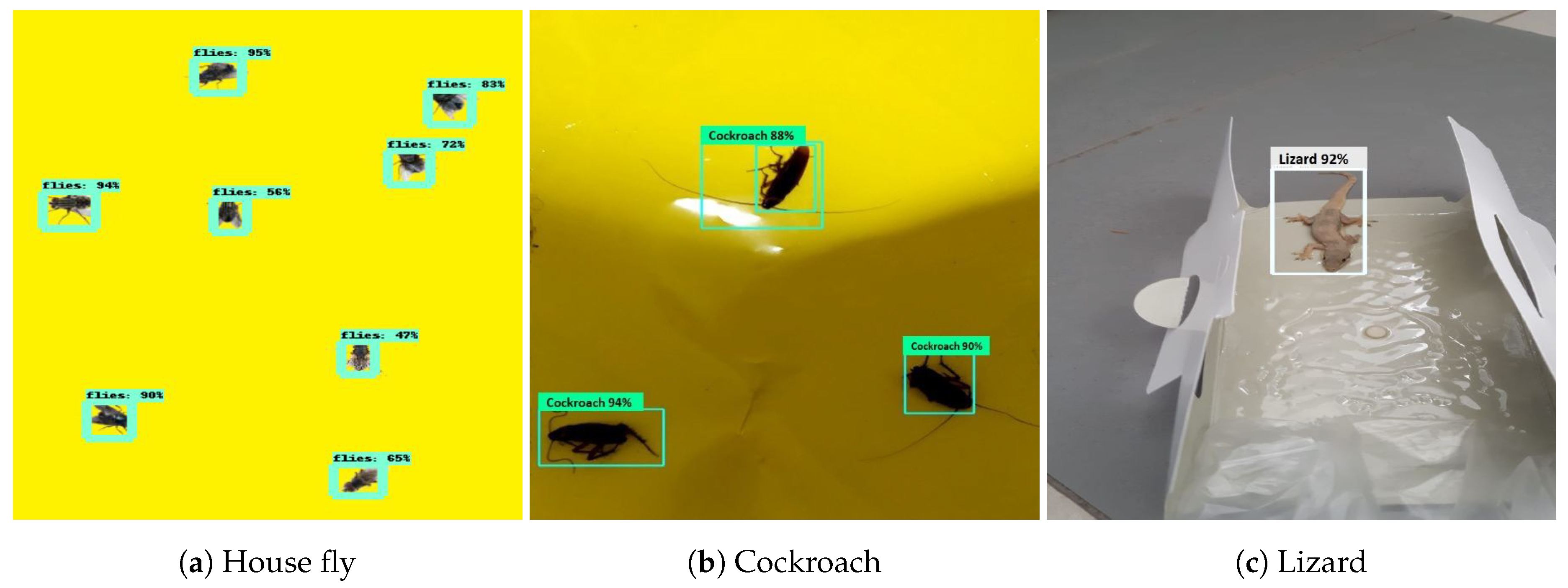

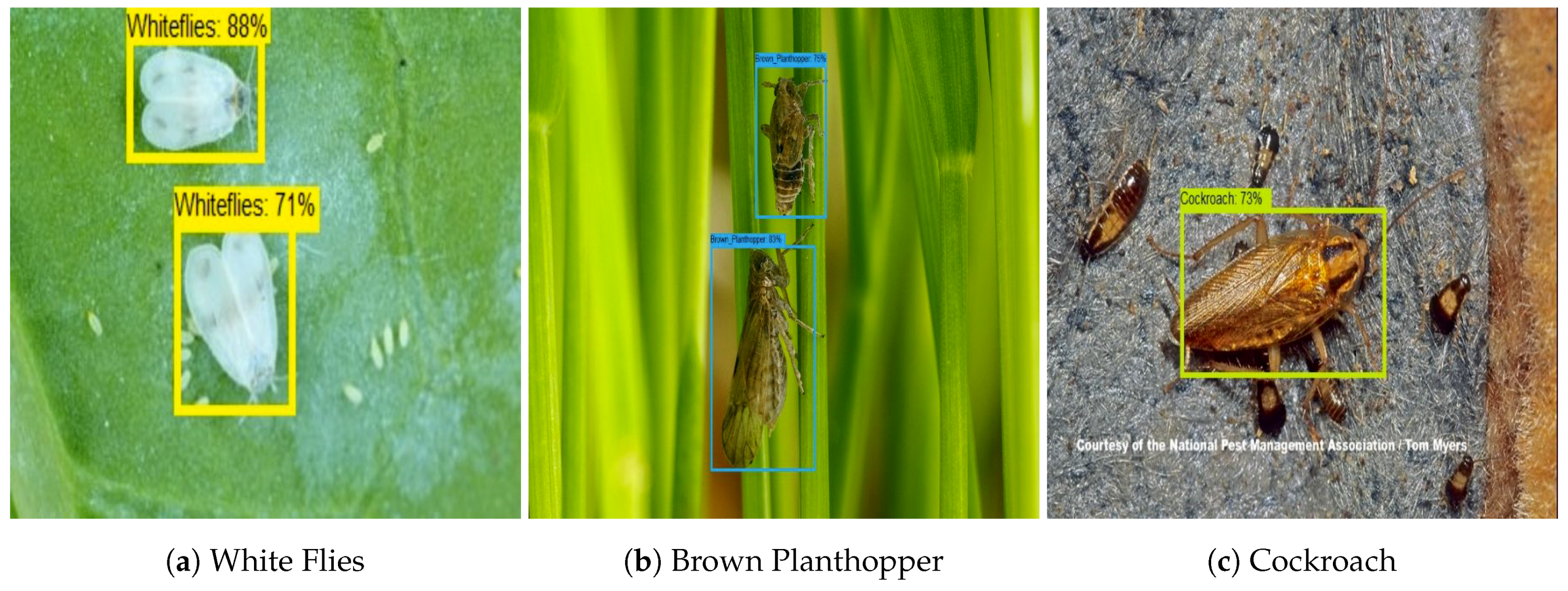

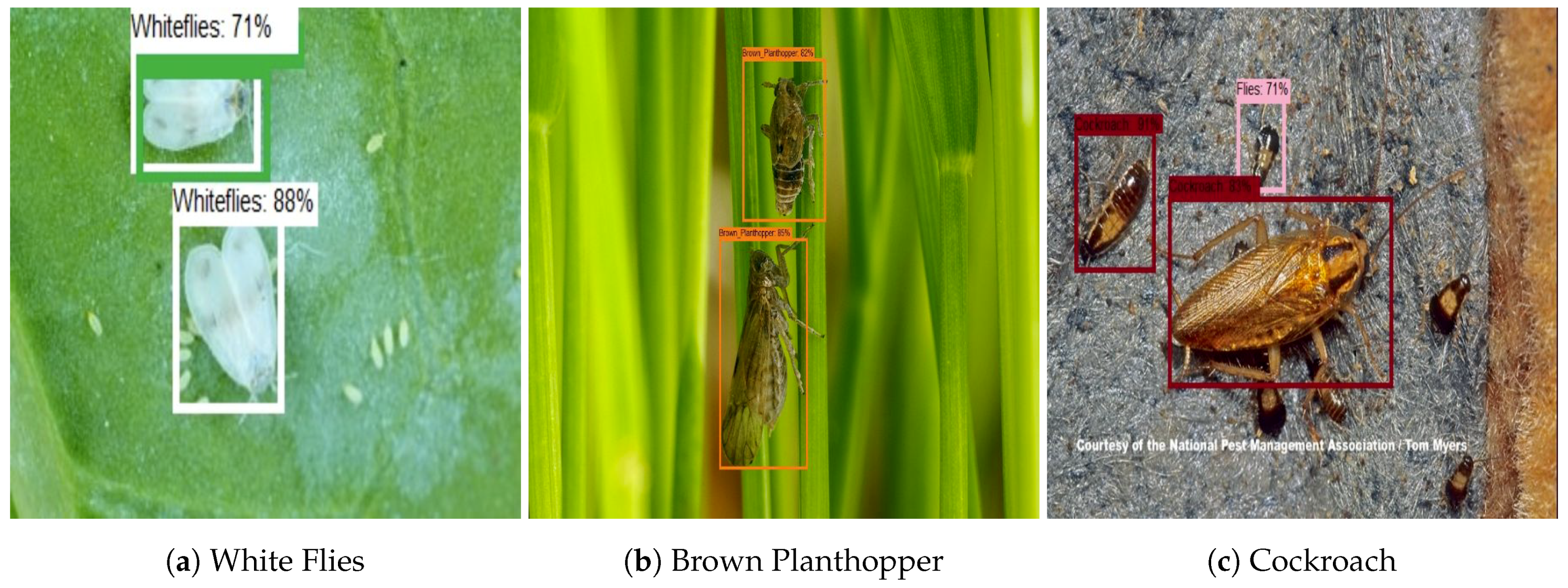
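The metric definitions themselves are not reproduced in this text. The following is a minimal sketch of the standard confusion-matrix formulas behind the precision, recall, F1 and accuracy columns in the result tables. It assumes one-vs-rest counting per insect class. The `Counts` type, the `metrics` function and the example counts are hypothetical illustrations, not the authors' evaluation code. The sketch computes F1 as the harmonic mean of pooled precision and recall. Several tabulated F1 values (for example, 92.11 for planthoppers, with precision 93.25 and recall 92.39) fall below both precision and recall, which a single harmonic mean never does. The reported figures were therefore presumably aggregated in some other way, for instance averaged over images.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Counts:
    """One-vs-rest confusion-matrix counts for a single insect class (hypothetical)."""
    tp: int  # insects of this class detected with the correct label
    fp: int  # detections wrongly given this label
    fn: int  # insects of this class missed or given another label
    tn: int  # samples correctly not given this label


def _ratio(num: float, den: float) -> float:
    """num / den, defined as 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def metrics(c: Counts) -> Dict[str, float]:
    """Standard precision, recall, F1 and accuracy as fractions in [0, 1]."""
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)
    f1 = _ratio(2 * precision * recall, precision + recall)  # harmonic mean
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


if __name__ == "__main__":
    # Hypothetical counts for illustration only; not data from the paper.
    result = metrics(Counts(tp=90, fp=10, fn=5, tn=95))
    print({k: round(100 * v, 2) for k, v in result.items()})
    # {'precision': 90.0, 'recall': 94.74, 'f1': 92.31, 'accuracy': 92.5}
```

Multiplying by 100 gives values on the same percentage scale as the tables.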

3.5. Offline and Real-Time Tests

3.6. Farm Field Insect Detection

3.7. Comparison with Other Object Detection Frameworks

3.8. Comparison with Existing Work

3.9. Application and Future Work

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Pest Management Sector to Be Integrated with Environmental Services Industry Transformation Map. Available online: https://www.nea.gov.sg/media/news/news/index/pest-management-sector-to-be-integrated-with-environmental-services-industry-transformation-map (accessed on 16 July 2020).

2. On the Job as a Pest Control Professional: More than Just Killing Bugs, It Requires Expert Knowledge. Available online: https://www.channelnewsasia.com/news/singapore/on-the-job-as-a-pest-control-professional-more-than-just-killing-9832686 (accessed on 16 July 2020).

3. Manpower or Productivity Woes? Pest Control Firms Can Turn to Tech. Available online: https://www.straitstimes.com/singapore/manpower-or-productivity-woes-pest-control-firms-can-turn-to-tech (accessed on 16 July 2020).

4. Marques, G.; Pitarma, R.; Garcia, N.M.; Pombo, N. Internet of Things Architectures, Technologies, Applications, Challenges, and Future Directions for Enhanced Living Environments and Healthcare Systems: A Review. Electronics 2019, 8, 1081.

5. Oliveira, A., Jr.; Resende, C.; Pereira, A.; Madureira, P.; Gonçalves, J.; Moutinho, R.; Soares, F.; Moreira, W. IoT Sensing Platform as a Driver for Digital Farming in Rural Africa. Sensors 2020, 20, 3511.

6. Visconti, P.; de Fazio, R.; Velázquez, R.; Del-Valle-Soto, C.; Giannoccaro, N.I. Development of Sensors-Based Agri-Food Traceability System Remotely Managed by a Software Platform for Optimized Farm Management. Sensors 2020, 20, 3632.

7. Ullo, S.L.; Sinha, G.R. Advances in Smart Environment Monitoring Systems Using IoT and Sensors. Sensors 2020, 20, 3113.

8. Xu, G.; Shi, Y.; Sun, X.; Shen, W. Internet of Things in Marine Environment Monitoring: A Review. Sensors 2019, 19, 1711.

9. Zhang, H.; Zhang, Z.; Zhang, L.; Yang, Y.; Kang, Q.; Sun, D. Object Tracking for a Smart City Using IoT and Edge Computing. Sensors 2019, 19, 1987.

10. Barthélemy, J.; Verstaevel, N.; Forehead, H.; Perez, P. Edge-Computing Video Analytics for Real-Time Traffic Monitoring in a Smart City. Sensors 2019, 19, 2048.

11. Potamitis, I.; Eliopoulos, P.; Rigakis, I. Automated Remote Insect Surveillance at a Global Scale and the Internet of Things. Robotics 2017, 6, 19.

12. Rustia, D.J.; Chao, J.J.; Chung, J.Y.; Lin, T.T. An Online Unsupervised Deep Learning Approach for an Automated Pest Insect Monitoring System. In Proceedings of the 2019 ASABE Annual International Meeting, Boston, MA, USA, 7–10 July 2019; pp. 1–5.

13. Kajol, R.; Akshay, K.K. Automated Agricultural Field Analysis and Monitoring System Using IOT. Int. J. Inform. Eng. Electron. Bus. 2018, 10, 17–24.

14. Severtson, D.; Congdon, B.; Valentine, C. Apps, traps and LAMP’s: ‘Smart’ improvements to pest and disease management. In Proceedings of the 2018 Grains Research Update, Perth, Australia, 26–27 February 2018.

15. Eliopoulos, P.; Tatlas, N.A.; Rigakis, I.; Potamitis, I. A “Smart” Trap Device for Detection of Crawling Insects and Other Arthropods in Urban Environments. Electronics 2018, 7, 161.

16. Potamitis, I.; Rigakis, I.; Vidakis, N.; Petousis, M.; Weber, M. Affordable Bimodal Optical Sensors to Spread the Use of Automated Insect Monitoring. J. Sens. 2018, 2018, 1–25.

17. Teng, T.W.; Veerajagadheswar, P.; Ramalingam, B.; Yin, J.; Elara Mohan, R.; Gómez, B.F. Vision Based Wall Following Framework: A Case Study With HSR Robot for Cleaning Application. Sensors 2020, 20, 3298.

18. Yin, J.; Apuroop, K.G.S.; Tamilselvam, Y.K.; Mohan, R.E.; Ramalingam, B.; Le, A.V. Table Cleaning Task by Human Support Robot Using Deep Learning Technique. Sensors 2020, 20, 1698.

19. Ramalingam, B.; Yin, J.; Rajesh Elara, M.; Tamilselvam, Y.K.; Mohan Rayguru, M.; Muthugala, M.A.V.J.; Félix Gómez, B. A Human Support Robot for the Cleaning and Maintenance of Door Handles Using a Deep-Learning Framework. Sensors 2020, 20, 3543.

20. Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018, 1–13.

21. Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48.

22. Solis-Sánchez, L.O.; Castañeda-Miranda, R.; García-Escalante, J.J.; Torres-Pacheco, I.; Guevara-González, R.G.; Castañeda-Miranda, C.L.; Alaniz-Lumbreras, P.D. Scale invariant feature approach for insect monitoring. Comput. Electron. Agric. 2011, 75, 92–99.

23. Wen, C.; Guyer, D. Image-based orchard insect automated identification and classification method. Comput. Electron. Agric. 2012, 89, 110–115.

24. Xie, C.; Zhang, J.; Li, R.; Li, J.; Hong, P.; Xia, J.; Chen, P. Automatic classification for field crop insects via multiple-task sparse representation and multiple-kernel learning. Comput. Electron. Agric. 2015, 119, 123–132.

25. Yao, Q.; Lv, J.; Liu, Q.J.; Diao, G.Q.; Yang, B.J.; Chen, H.M.; Tang, J. An Insect Imaging System to Automate Rice Light-Trap Pest Identification. J. Integr. Agric. 2012, 11, 978–985.

26. Rustia, D.J.; Lin, T.T. An IoT-based Wireless Imaging and Sensor Node System for Remote Greenhouse Pest Monitoring. Chem. Eng. Trans. 2017, 58.

27. Ding, W.; Taylor, G. Automatic moth detection from trap images for pest management. Comput. Electron. Agric. 2016, 123, 17–28.

28. Liu, Z.; Gao, J.; Yang, G.; Zhang, H.; He, Y. Localization and Classification of Paddy Field Pests using a Saliency Map and Deep Convolutional Neural Network. Sci. Rep. 2016, 6, 20410.

29. Liu, L.; Wang, R.; Xie, C.; Yang, P.; Wang, F.; Sudirman, S.; Liu, W. PestNet: An End-to-End Deep Learning Approach for Large-Scale Multi-Class Pest Detection and Classification. IEEE Access 2019, 7, 45301–45312.

30. Nam, N.T.; Hung, P.D. Pest Detection on Traps Using Deep Convolutional Neural Networks. In Proceedings of the 2018 International Conference on Control and Computer Vision (ICCCV ’18), Singapore, 18–21 November 2018; pp. 33–38.

31. Xia, D.; Chen, P.; Wang, B.; Zhang, J.; Xie, C. Insect Detection and Classification Based on an Improved Convolutional Neural Network. Sensors 2018, 18, 4169.

32. Gutierrez, A.; Ansuategi, A.; Susperregi, L.; Tubío, C.; Rankić, I.; Lenža, L. A Benchmarking of Learning Strategies for Pest Detection and Identification on Tomato Plants for Autonomous Scouting Robots Using Internal Databases. J. Sens. 2019, 2019, 1–15.

33. Burhan, M.; Rehman, R.; Khan, B.; Kim, B.S. IoT Elements, Layered Architectures and Security Issues: A Comprehensive Survey. Sensors 2018, 18, 2796.

34. Laubhan, K.; Talaat, K.; Riehl, S.; Morelli, T.; Abdelgawad, A.; Yelamarthi, K. A four-layer wireless sensor network framework for IoT applications. In Proceedings of the 2016 IEEE 59th International Midwest Symposium on Circuits and Systems (MWSCAS), Abu Dhabi, UAE, 16–19 October 2016; pp. 1–4.

35. Dorsemaine, B.; Gaulier, J.; Wary, J.; Kheir, N.; Urien, P. A new approach to investigate IoT threats based on a four layer model. In Proceedings of the 2016 13th International Conference on New Technologies for Distributed Systems (NOTERE), Paris, France, 18 July 2016; pp. 1–6.

36. Pham, P.; Nguyen, D.; Do, T.; Duc, T.; Le, D.D. Evaluation of Deep Models for Real-Time Small Object Detection. In International Conference on Neural Information Processing; Springer: Cham, Switzerland, 2017; pp. 516–526.

37. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

38. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-Based Convolutional Networks for Accurate Object Detection and Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 142–158.

39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

40. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

41. Insect Images. Available online: https://www.insectimages.org/ (accessed on 14 July 2020).

42. Wu, X.; Zhan, C.; Lai, Y.; Cheng, M.M.; Yang, J. IP102: A Large-Scale Benchmark Dataset for Insect Pest Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8787–8796.

43. Rice Knowledge Bank. Available online: http://www.knowledgebank.irri.org/ (accessed on 14 July 2020).

44. Bugwood Center for Invasive Species and Ecosystem Health, University of Georgia. Available online: https://www.bugwood.org/ (accessed on 14 July 2020).

45. Ning, C.; Zhou, H.; Song, Y.; Tang, J. Inception Single Shot MultiBox Detector for object detection. In Proceedings of the 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Hong Kong, China, 10–14 July 2017; pp. 549–554.

46. Sandler, M.; Howard, A.G.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

47. Ramalingam, B.; Lakshmanan, A.K.; Ilyas, M.; Le, A.V.; Elara, M.R. Cascaded Machine-Learning Technique for Debris Classification in Floor-Cleaning Robot Application. Appl. Sci. 2018, 8, 2649.

48. Hung, P.D.; Kien, N.N. SSD-Mobilenet Implementation for Classifying Fish Species. In Intelligent Computing and Optimization; Vasant, P., Zelinka, I., Weber, G.W., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 399–408.

49. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

50. Cui, J.; Zhang, J.; Sun, G.; Zheng, B. Extraction and Research of Crop Feature Points Based on Computer Vision. Sensors 2019, 19, 2553.

51. Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small Object Detection on Unmanned Aerial Vehicle Perspective. Sensors 2020, 20, 2238.

| Specification | Details |
|---|---|
| View angle | 60° |
| Output image format | VGA 640 × 480, QVGA 320 × 240, QQVGA 160 × 120 |
| Output video format | Motion JPEG |
| Frames per second (FPS) | 30 |
| Wireless interface | IEEE 802.11b/g, 2.4 GHz ISM band |
| Wireless range | 20 m |
| Dimensions/weight | 30 mm (diameter) × 35 mm (length)/100 g |
| Power supply | Voltage: 3.0 V; capacity: 750 mAh |
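The trap camera delivers Motion JPEG over IEEE 802.11b/g. How the processing layer ingests that stream is not described here. One common approach, sketched below under that assumption, is to cut individual JPEG frames out of the byte stream at the standard JPEG start-of-image (`FF D8`) and end-of-image (`FF D9`) markers, then hand each frame to the detector. `split_mjpeg` is a hypothetical helper, not part of the described system. A production reader would more robustly parse the multipart boundaries and `Content-Length` headers, since embedded EXIF thumbnails can carry their own markers.

```python
from typing import Iterable, Iterator

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_mjpeg(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Yield complete JPEG frames from a Motion JPEG byte stream (hypothetical helper).

    `chunks` may split frames, and even the two-byte markers, at arbitrary
    points, as happens when a socket is read in fixed-size blocks.
    """
    buf = bytearray()
    for chunk in chunks:
        buf.extend(chunk)
        while True:
            start = buf.find(SOI)
            if start < 0:
                # No frame start yet: drop junk such as multipart headers,
                # but keep the last byte in case it is the first half of SOI.
                del buf[:-1]
                break
            end = buf.find(EOI, start + 2)
            if end < 0:
                # Frame still incomplete: discard bytes before SOI and wait.
                del buf[:start]
                break
            yield bytes(buf[start:end + 2])
            del buf[:end + 2]


if __name__ == "__main__":
    frame = SOI + b"fake-jpeg-payload" + EOI
    stream = b"--frame\r\nContent-Type: image/jpeg\r\n\r\n" + frame + b"\r\n"
    # Feed the stream in 4-byte pieces to mimic small socket reads.
    pieces = [stream[i:i + 4] for i in range(0, len(stream), 4)]
    print(len(list(split_mjpeg(pieces))))  # 1
```

Because the function is a generator, frames can be passed to detection as soon as they complete, without buffering the whole stream.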

| Test | Cockroach Prec. | Cockroach Recall | Cockroach F1 | Cockroach Accuracy | Lizard Prec. | Lizard Recall | Lizard F1 | Lizard Accuracy | Housefly Prec. | Housefly Recall | Housefly F1 | Housefly Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Offline (database) | 97.21 | 97.12 | 97.04 | 97.00 | 98.64 | 98.18 | 98.25 | 98.31 | 95.77 | 95.44 | 95.29 | 95.33 |
| Real-time (trap sheet) | 96.45 | 96.22 | 96.17 | 96.29 | 96.72 | 96.17 | 96.03 | 96.43 | 94.89 | 94.38 | 94.04 | 94.27 |

| Insect Name | Precision | Recall | F1 | Accuracy |
|---|---|---|---|---|
| Planthoppers | 93.25 | 92.39 | 92.11 | 93.13 |
| Colorado | 95.23 | 94.89 | 94.22 | 94.88 |
| Empoasca | 94.34 | 93.90 | 93.54 | 94.08 |
| Mole-cricket | 94.65 | 94.24 | 94.19 | 94.33 |
| Manduca | 93.05 | 92.93 | 92.74 | 92.97 |
| Rice Hispa | 94.21 | 93.82 | 93.77 | 93.88 |
| Stink-bug | 95.42 | 95.13 | 95.17 | 95.00 |
| Whiteflies | 94.80 | 94.23 | 94.01 | 94.60 |

| Framework | Detection Prec. (IoU > 0.5) | Detection Recall (IoU > 0.5) | Detection mAP (IoU > 0.5) | Classification Prec. | Classification Recall | Classification F1 | Classification Accuracy |
|---|---|---|---|---|---|---|---|
| YOLO v2 | 79.15 | 84.13 | 78.65 | 89.33 | 87.55 | 87.11 | 87.66 |
| SSD MobileNet | 84.34 | 87.78 | 82.31 | 92.31 | 92.07 | 92.00 | 92.12 |
| SSD Inception | 85.61 | 88.13 | 86.52 | 93.74 | 93.16 | 93.05 | 93.47 |
| Proposed | 90.10 | 89.78 | 88.79 | 96.22 | 95.98 | 95.79 | 96.08 |
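The detection columns of the framework comparison count a predicted box as correct only when its intersection over union (IoU) with a ground-truth box exceeds 0.5. The following is a minimal sketch of that criterion together with an average-precision (AP) calculation. It assumes axis-aligned `(x_min, y_min, x_max, y_max)` pixel boxes and greedy highest-score-first matching, and it uses all-point interpolated AP (the PASCAL VOC 2010+ convention). The paper does not state which matching or interpolation rule it used, and `iou`, `match` and `average_precision` are hypothetical names.

```python
from typing import List, Sequence, Tuple

# Axis-aligned box as (x_min, y_min, x_max, y_max) in pixels (assumed convention).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(preds: Sequence[Tuple[Box, float]], gts: Sequence[Box],
          thr: float = 0.5) -> Tuple[List[bool], int]:
    """Greedily match (box, score) predictions to ground truths of one class.

    Predictions are visited from highest to lowest score. Each ground truth
    can be claimed once, and only when IoU is strictly greater than thr.
    Returns a TP/FP flag per prediction (in score order) and the number of
    unmatched ground truths (false negatives).
    """
    claimed = set()
    flags: List[bool] = []
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in claimed and overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            claimed.add(best_j)
        flags.append(best_j >= 0)
    return flags, len(gts) - len(claimed)


def average_precision(flags: Sequence[bool], n_gt: int) -> float:
    """All-point interpolated AP from TP/FP flags sorted by descending score."""
    if n_gt == 0:
        return 0.0
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for hit in flags:
        tp += int(hit)
        fp += int(not hit)
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [((0, 0, 10, 10), 0.9), ((50, 50, 60, 60), 0.8), ((21, 21, 31, 31), 0.7)]
    flags, fn = match(preds, gts)
    print(flags, fn)  # [True, False, True] 0
    print(round(average_precision(flags, len(gts)), 4))  # 0.8333
```

The mAP column would then be the mean of the per-class AP values. When a class spans several images, the (score, flag) pairs from all images are pooled and re-sorted by score before `average_precision` is called.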

| Algorithm | Computational Cost for Training (h:min) | Computational Cost for Testing (s) |
|---|---|---|
| YOLO v2 | 7:20 | 20.22 |
| SSD MobileNet | 7:50 | 15.88 |
| SSD Inception | 8:35 | 26.03 |
| Proposed | 9:30 | 31.66 |

| Case Study | Application | Algorithm | Detection Accuracy |
|---|---|---|---|
| Xia et al. [31] | Farm field | VGG19 + RPN | 0.89 |
| Nguyen et al. [30] | Pest detection on traps | SSD + VGG16 | 0.86 |
| Liu et al. [29] | Agricultural pest identification | PestNet | 0.75 |
| Rustia et al. [12] | Built-environment insects | YOLO v3 | 0.92 |
| Ding et al. [27] | Farm-field moths | ConvNet | 0.93 |
| Proposed system | Built environment and farm field | Faster R-CNN ResNet-50 | 0.94 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ramalingam, B.; Mohan, R.E.; Pookkuttath, S.; Gómez, B.F.; Sairam Borusu, C.S.C.; Wee Teng, T.; Tamilselvam, Y.K. Remote Insects Trap Monitoring System Using Deep Learning Framework and IoT. Sensors 2020, 20, 5280. https://doi.org/10.3390/s20185280
