Author Contributions

Conceptualization, X.C.; Data curation, X.L.; Formal analysis, D.F. and X.C.; Investigation, F.M.; Methodology, D.F. and Y.L. (Yunhui Liu); Software, D.F. and Z.U.; Supervision, Y.L. (Yunhui Liu) and Q.H.; Validation, Y.L. (Yanyang Liu), F.M. and W.C.; Visualization, Y.L. (Yanyang Liu) and X.L.; Writing – original draft, D.F.; Writing – review and editing, X.C., Z.U., Y.L. (Yunhui Liu) and Q.H. All authors have read and agreed to the published version of the manuscript.

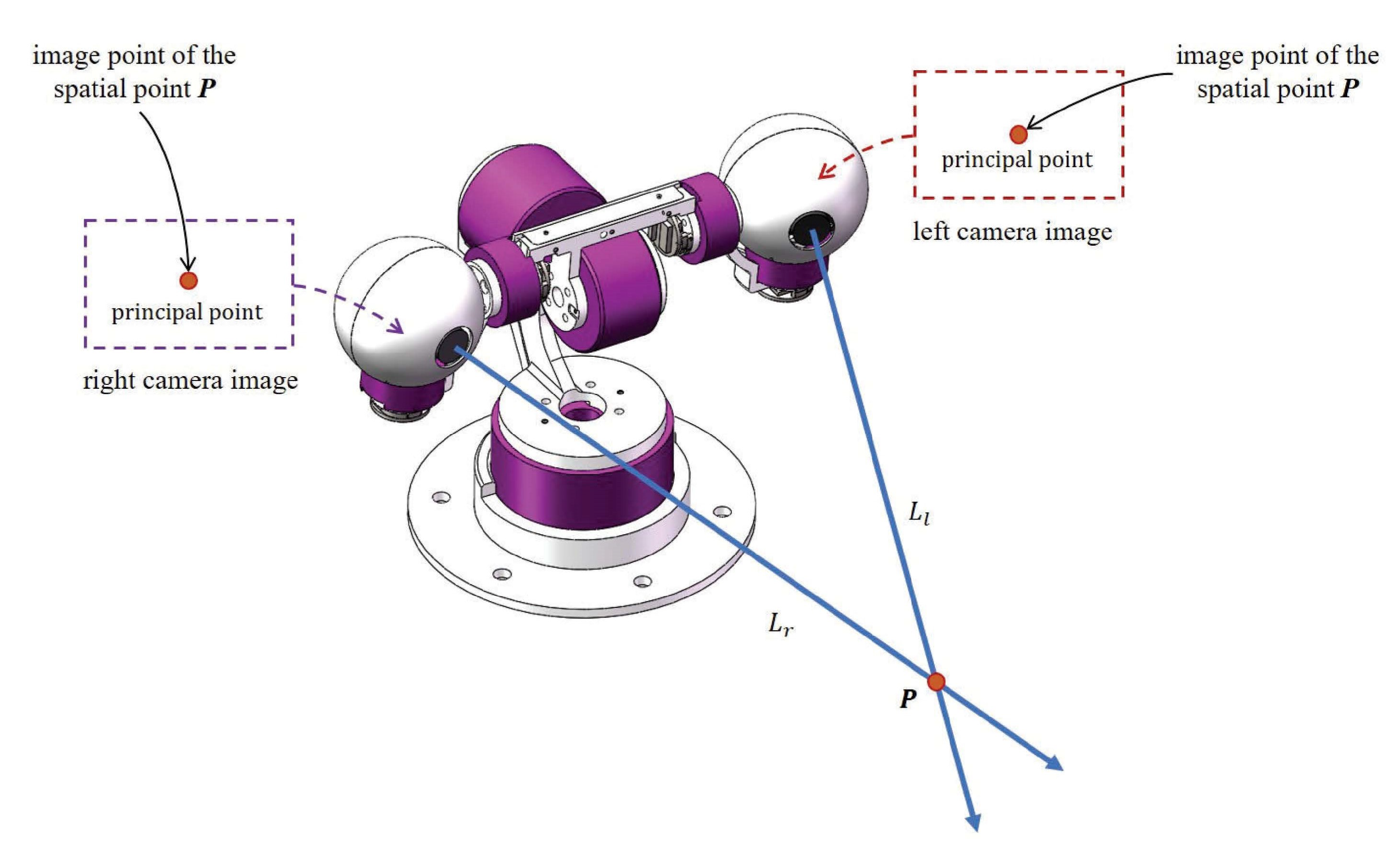

Figure 1.

In our proposed method, we use eye gazing or visual servoing to actively adjust the view direction of each camera so that the 2D image of a three-dimensional (3D) point stays at the principal point. In other words, the 3D point is located at the fixation point. 3D triangulation can then be performed by intersecting the two cameras’ optical axes.
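
The intersection of two optical axes described in this caption can be sketched as follows. This is a minimal illustration, not the authors' implementation: because two measured axes rarely meet exactly, it returns the midpoint of the shortest segment joining them. The function name, the argument names, and the camera positions in the usage lines are hypothetical.

```python
import numpy as np

def intersect_optical_axes(c_left, d_left, c_right, d_right):
    """Midpoint of the shortest segment joining two 3D optical axes.

    c_* are the optical centres and d_* the optical-axis directions,
    all expressed in one common frame (hypothetical inputs).
    """
    c_left = np.asarray(c_left, dtype=float)
    c_right = np.asarray(c_right, dtype=float)
    d_left = np.asarray(d_left, dtype=float)
    d_right = np.asarray(d_right, dtype=float)
    d_left = d_left / np.linalg.norm(d_left)
    d_right = d_right / np.linalg.norm(d_right)

    # Least-squares solve of c_left + s*d_left = c_right + t*d_right for (s, t).
    # For parallel axes the system is rank deficient and lstsq returns the
    # minimum-norm solution, which is not a meaningful fixation point.
    a = np.stack([d_left, -d_right], axis=1)  # shape (3, 2)
    b = c_right - c_left
    (s, t), *_ = np.linalg.lstsq(a, b, rcond=None)

    p_left = c_left + s * d_left     # closest point on the left axis
    p_right = c_right + t * d_right  # closest point on the right axis
    return 0.5 * (p_left + p_right)

# Illustrative values only: two cameras 100 mm apart, both fixating one point.
target = np.array([0.0, -100.0, 500.0])
left_centre = np.array([-50.0, 0.0, 0.0])
right_centre = np.array([50.0, 0.0, 0.0])
estimate = intersect_optical_axes(
    left_centre, target - left_centre, right_centre, target - right_centre
)
```

With noise-free axes, as in the usage lines, the midpoint coincides with the fixated point. With noisy joint readings the two closest points differ, and their separation gives a rough indication of how consistent the two gaze directions are.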

Figure 2.

The anthropomorphic robotic bionic eyes.

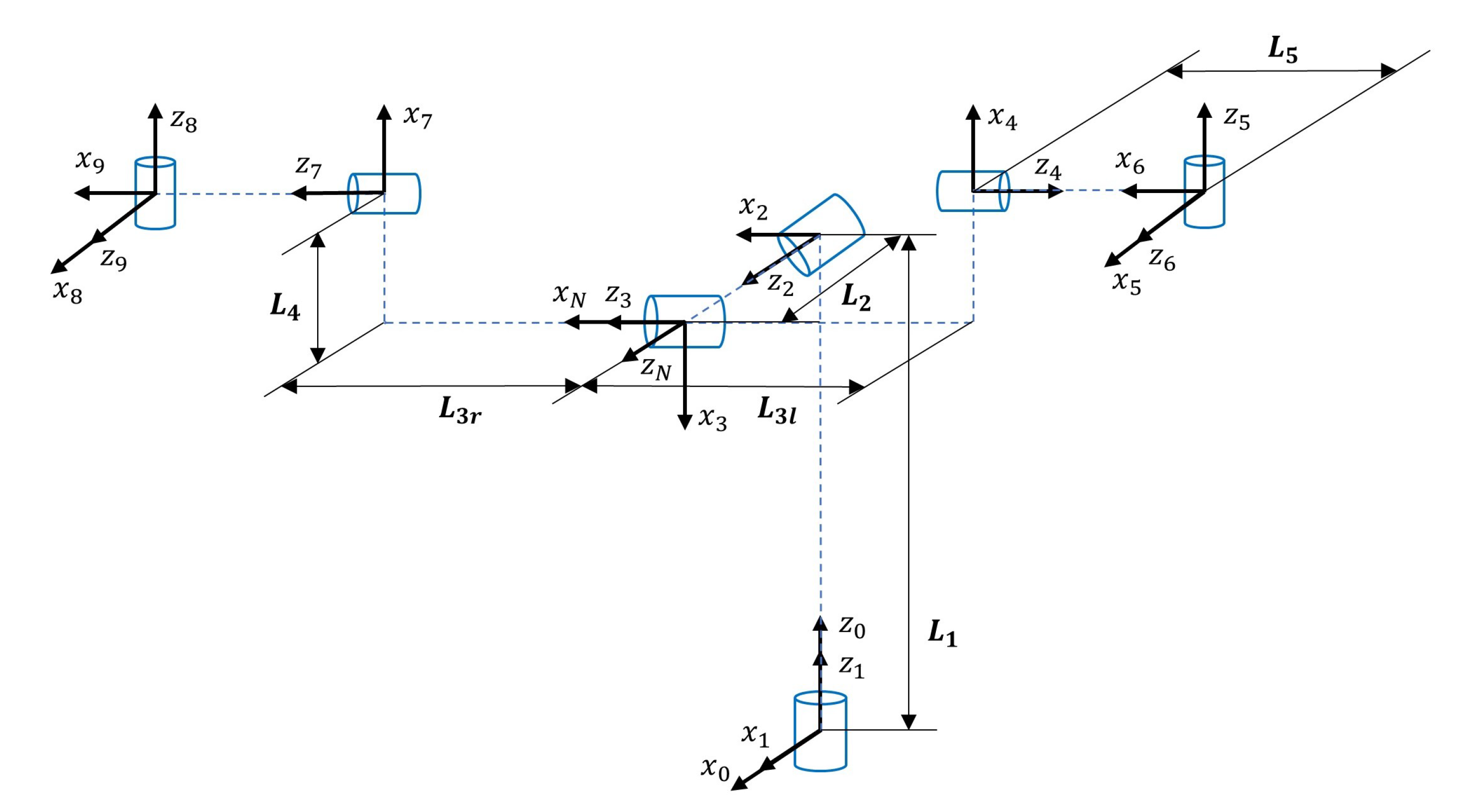

Figure 3.

The definition of the robot bionic eyes’ coordinate system.

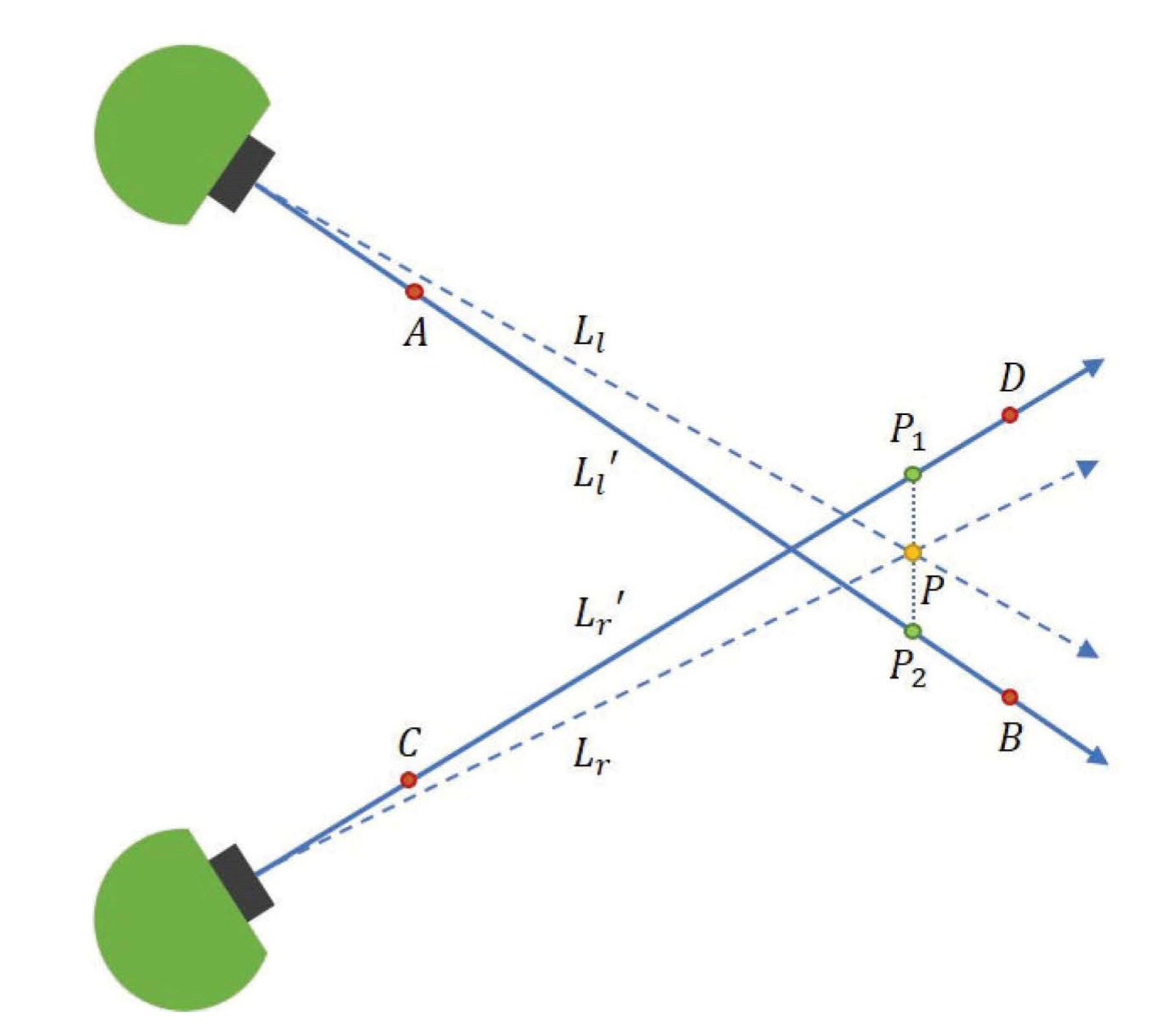

Figure 4.

The actual model of our proposed method.

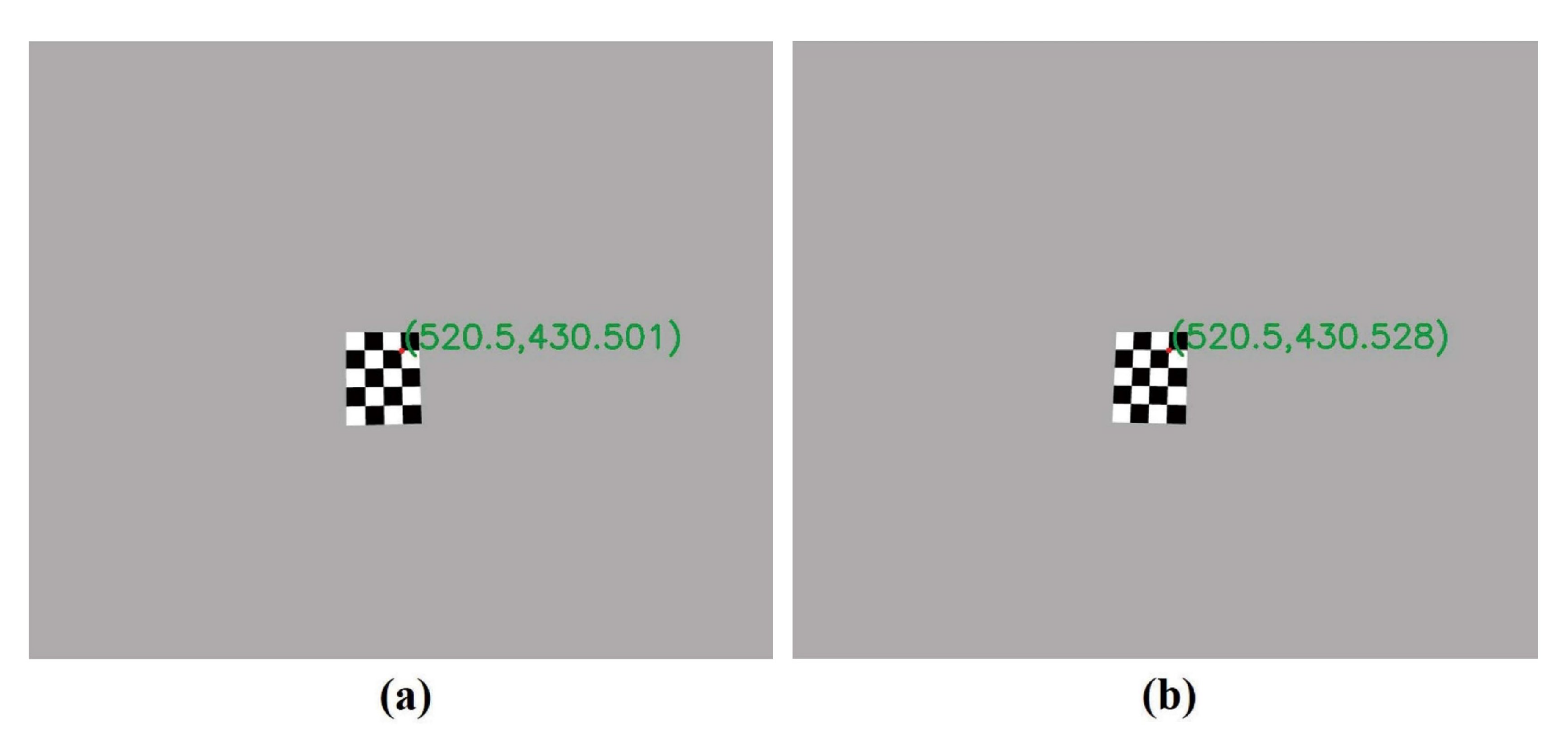

Figure 5.

The images of the target point in the left and right simulated cameras. (a) left image; (b) right image.

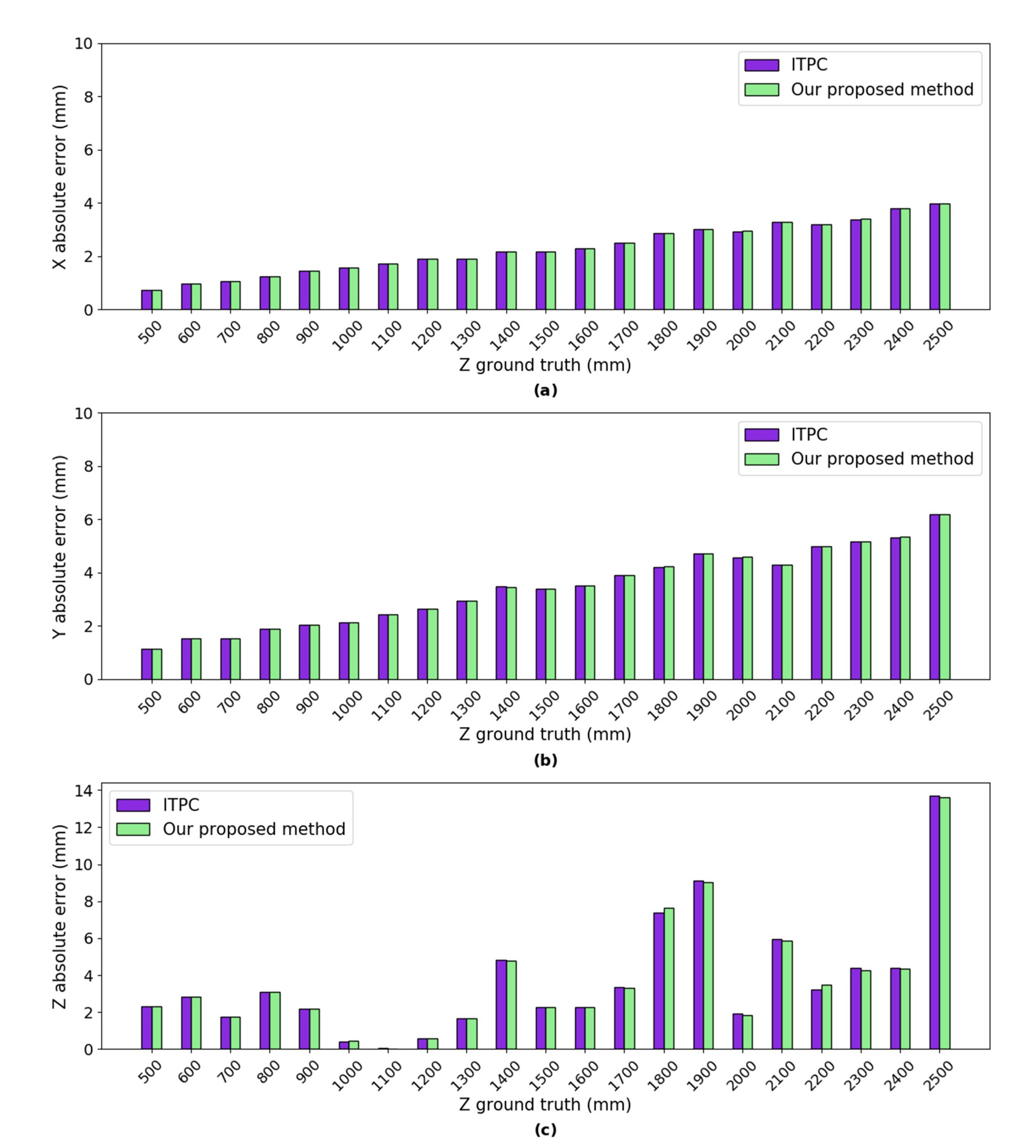

Figure 6.

The absolute error of the mean of the estimated 3D coordinates using our proposed method and the integrated two-pose calibration (ITPC) method w.r.t. the ground truth, respectively. (a) absolute error in the X axis; (b) absolute error in the Y axis; and (c) absolute error in the Z axis.

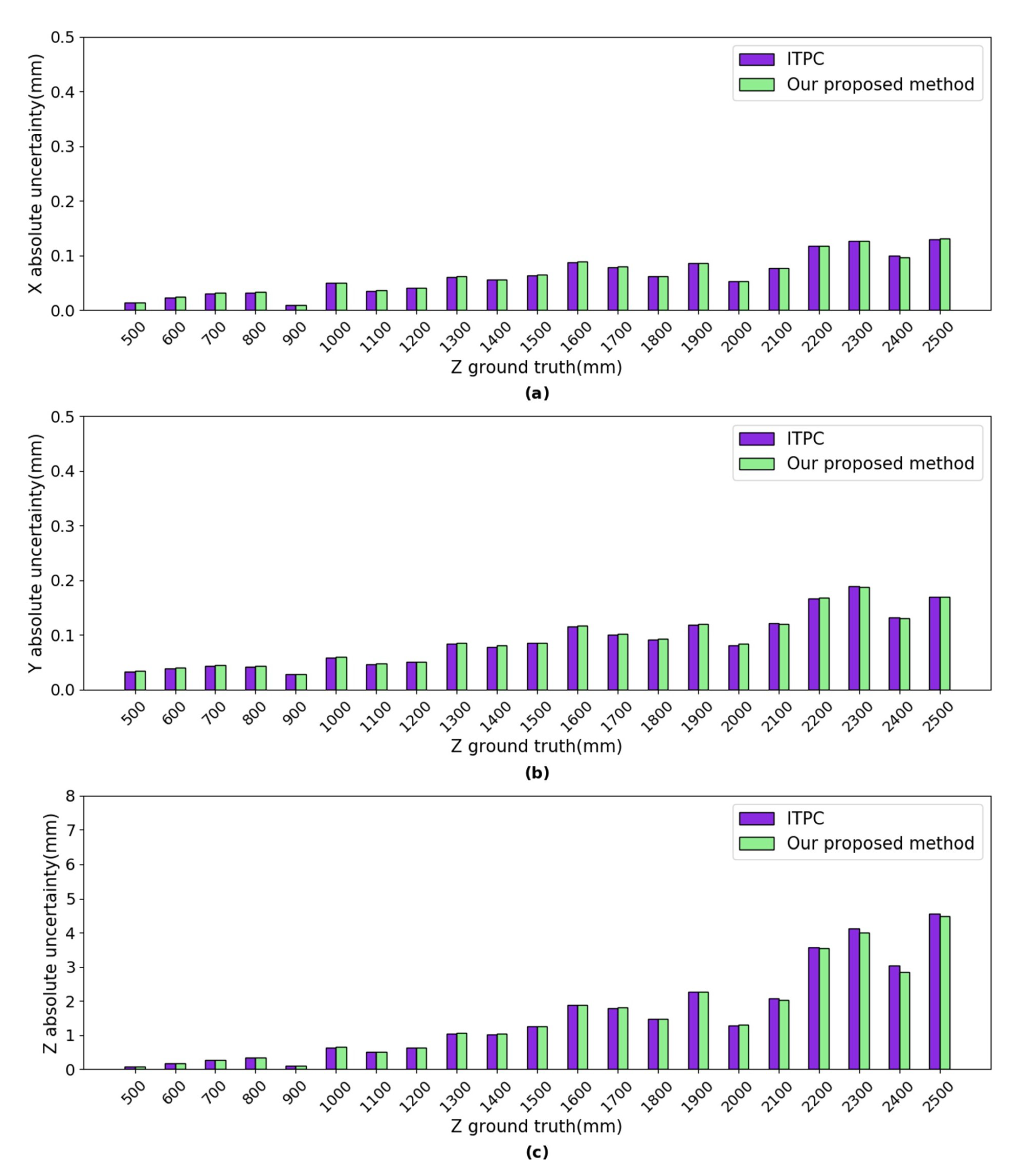

Figure 7.

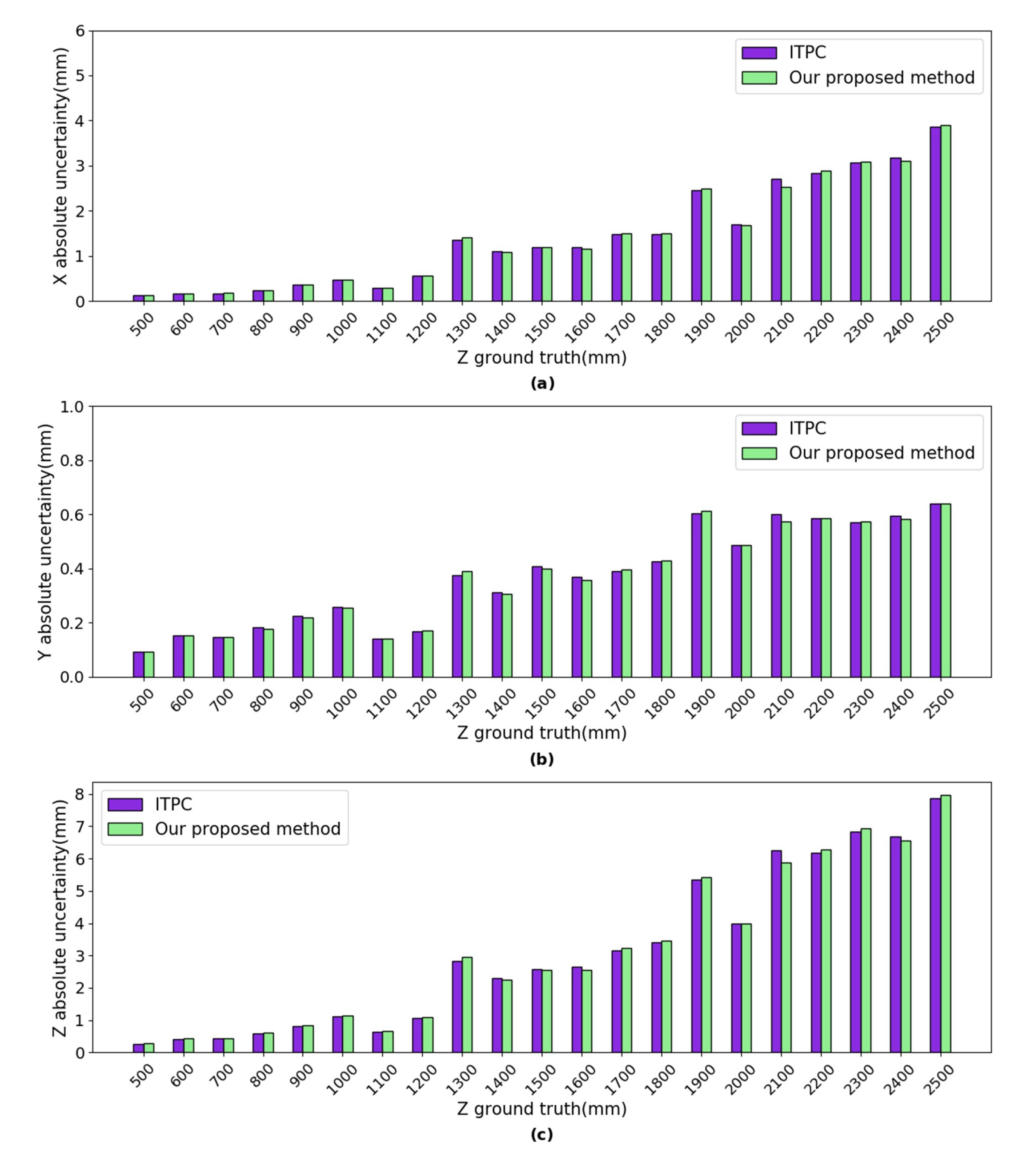

The uncertainties of our proposed method and the ITPC method. (a) absolute uncertainty in the X axis; (b) absolute uncertainty in the Y axis; and (c) absolute uncertainty in the Z axis.

Figure 8.

The absolute error of the mean of the estimated 3D coordinates using our proposed method and the ITPC method w.r.t. the ground truth, respectively. (a) absolute error in the X axis; (b) absolute error in the Y axis; and (c) absolute error in the Z axis.

Figure 9.

The images of the left and right cameras. (a) left image; (b) right image.

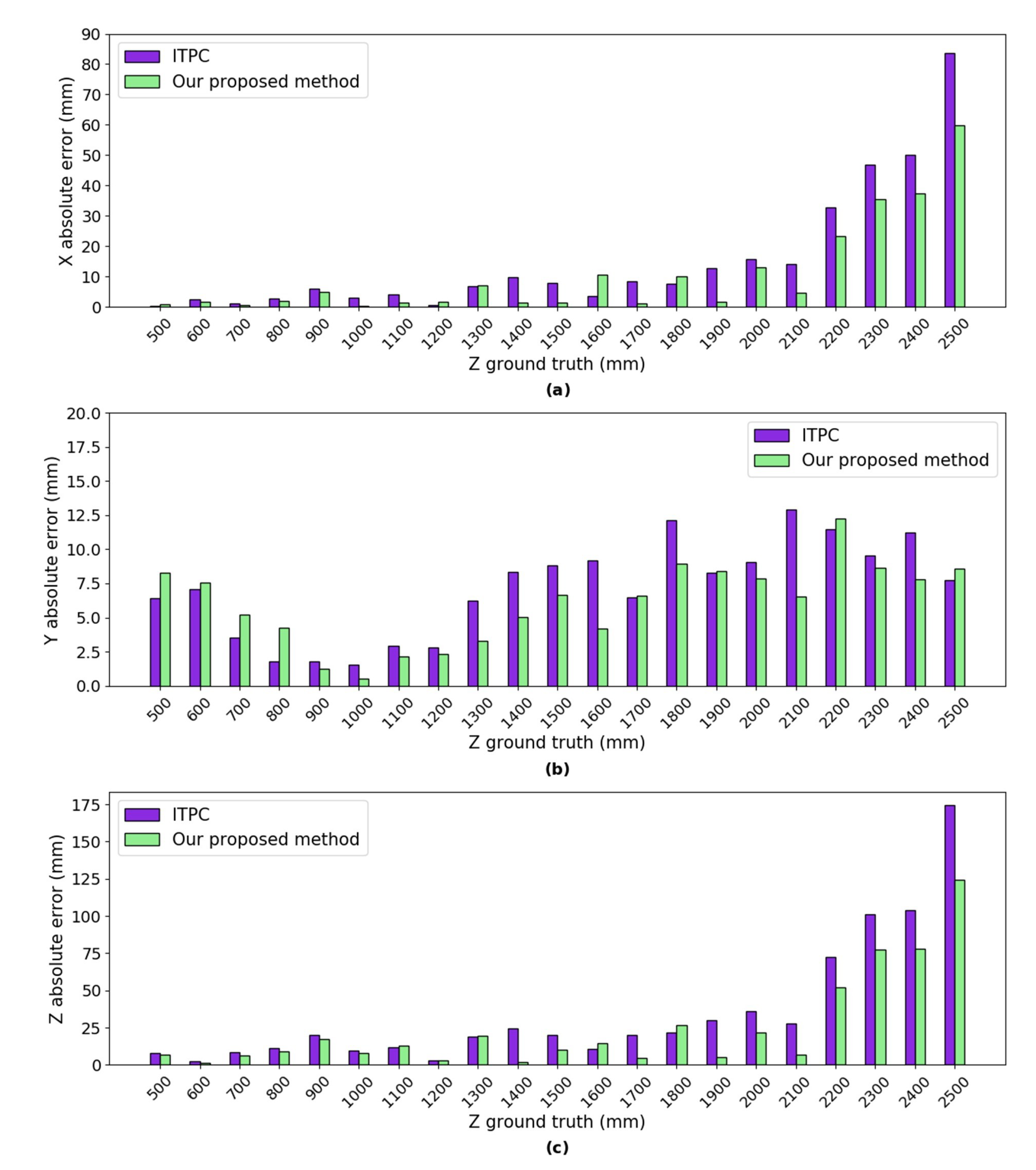

Figure 10.

The absolute error of the mean of the estimated 3D coordinates using our proposed method and the ITPC method w.r.t. the ground truth, respectively. (a) absolute error in the X axis; (b) absolute error in the Y axis; and (c) absolute error in the Z axis.

Figure 11.

The uncertainties of our proposed method and the ITPC method. (a) absolute uncertainty in the X axis; (b) absolute uncertainty in the Y axis; and (c) absolute uncertainty in the Z axis.

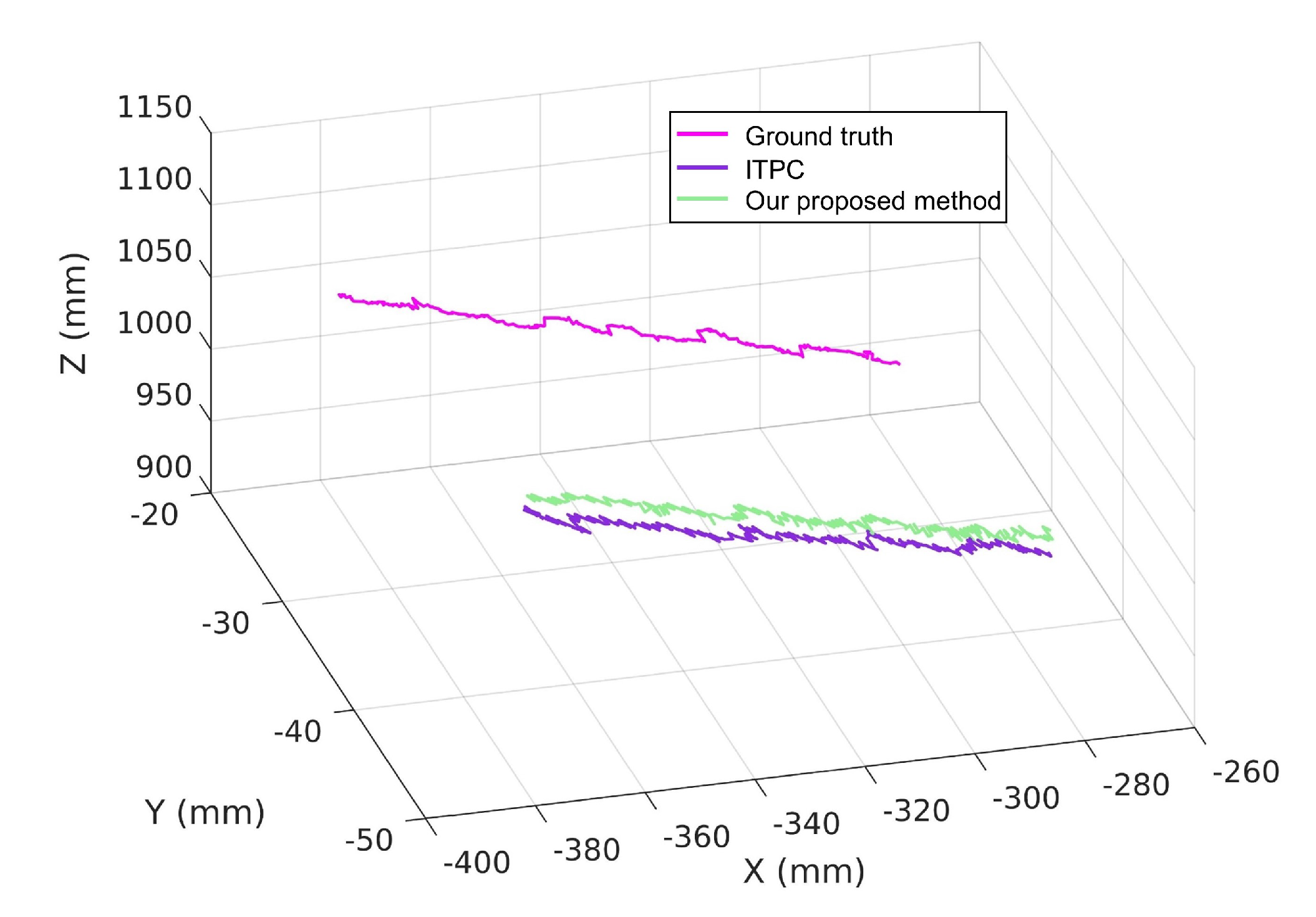

Figure 12.

The trajectory of the ground truth and the estimated point using our proposed method and ITPC method.

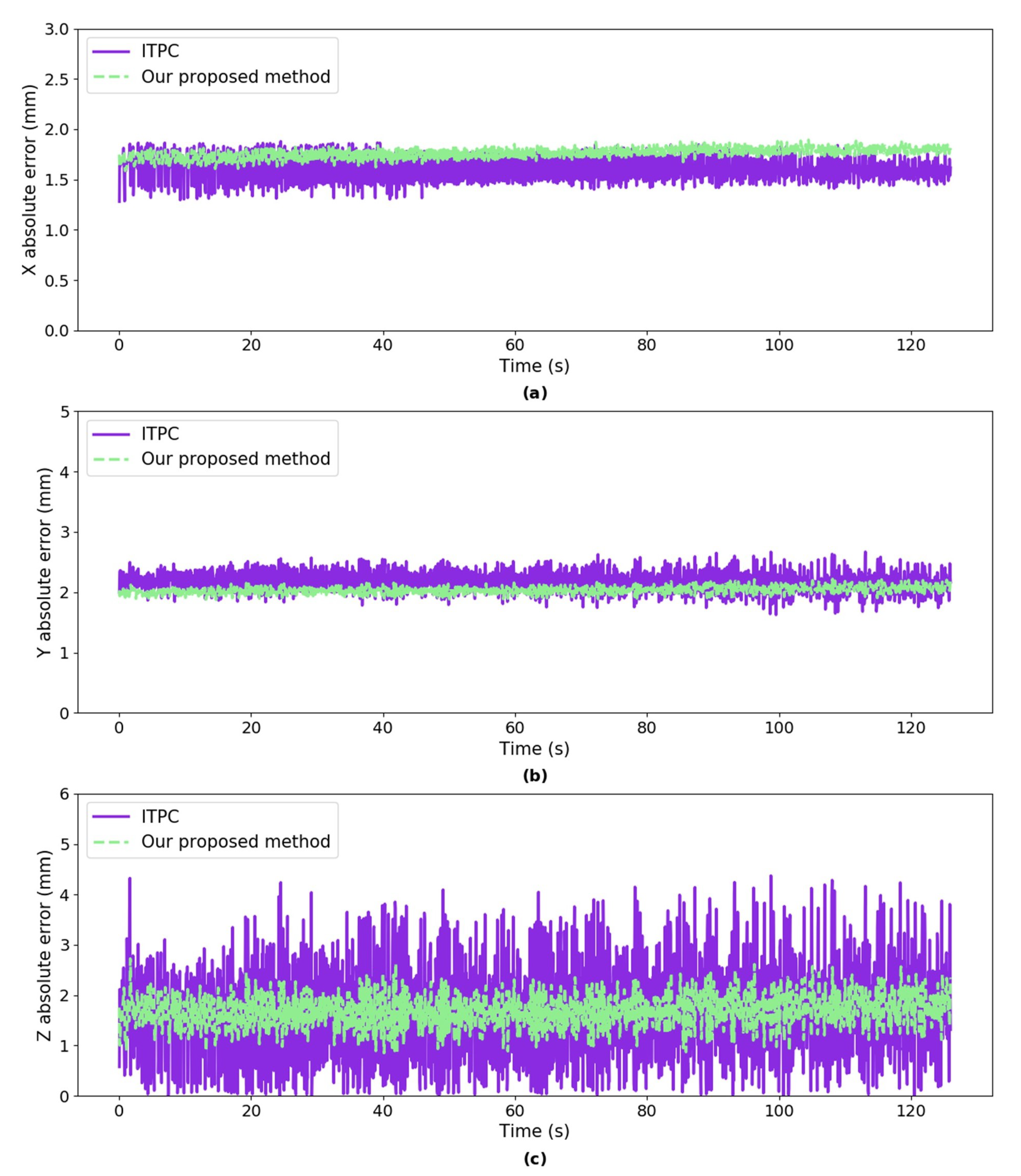

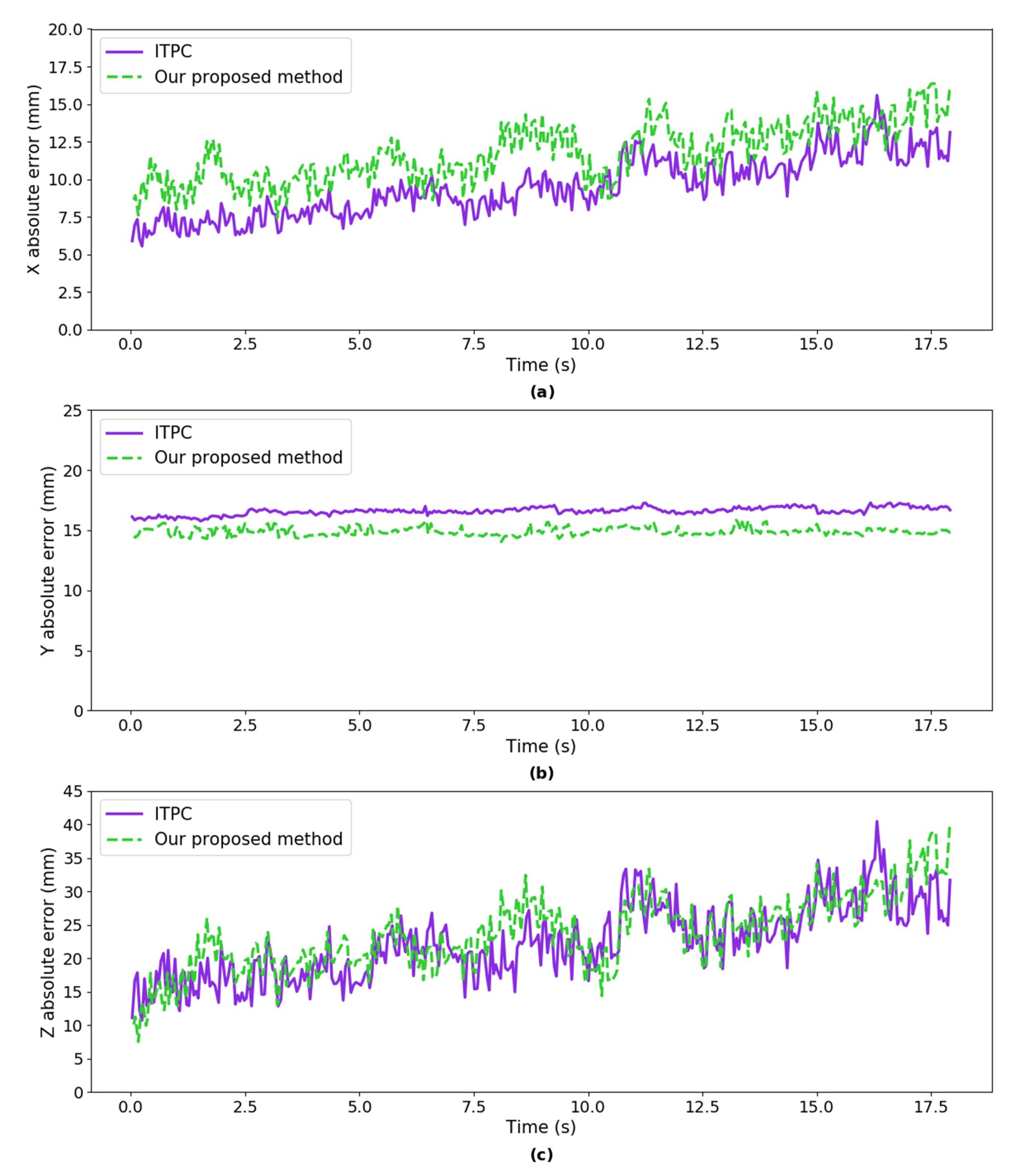

Figure 13.

The absolute error of our proposed method and the ITPC method w.r.t. the ground truth, respectively. (a) absolute error in the X axis; (b) absolute error in the Y axis; and (c) absolute error in the Z axis.

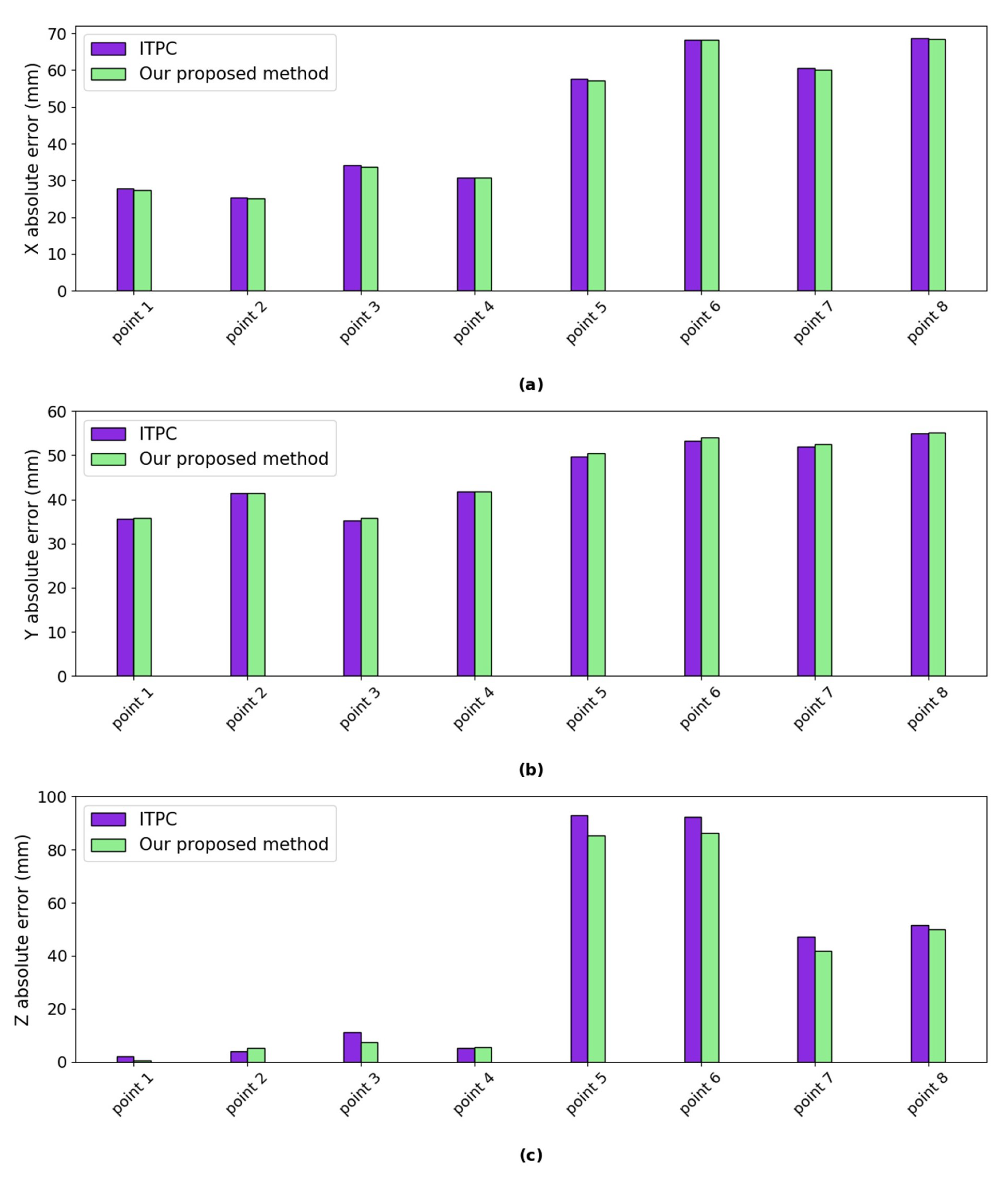

Figure 14.

The absolute error of our proposed method and the ITPC method w.r.t. the ZED Mini. (a) absolute error in the X axis; (b) absolute error in the Y axis; and (c) absolute error in the Z axis.

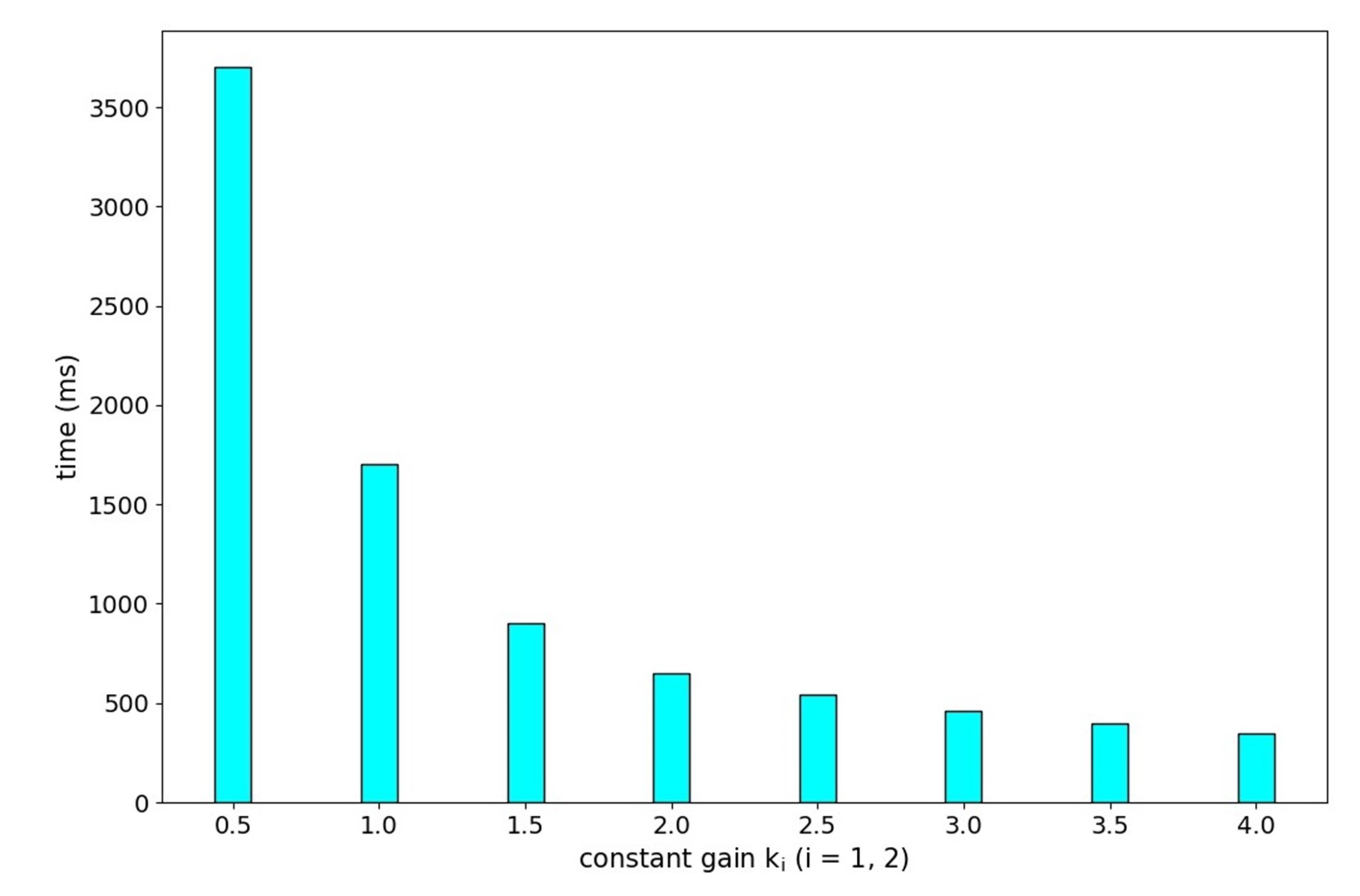

Figure 15.

The time it takes for eye gazing affected by and .

Table 1.

The D-H parameters of the robotic bionic eyes. (Here the parameters d_i, θ_i, a_i, and α_i are the link offset, joint angle, link length, and link twist, respectively. The i-th joint offset is the value of θ_i in the initial state. The six length constants are 64.27 mm, 11.00 mm, 44.80 mm, 47.20 mm, 13.80 mm, and 30.33 mm.)

| i | d_i/mm | θ_i/rad | a_i/mm | α_i/rad | i-th joint offset/rad |
|---|---|---|---|---|---|
| 1 | 0 | | 0 | 0 | 0 |
| 2 | | | 0 | − | − |
| 3 | | | 0 | | |
| 4 | | | | | |
| 5 | | | 0 | | |
| 6 | 0 | 0 | 0 | | |
| 7 | | | | 0 | |
| 8 | | | 0 | | |
| 9 | 0 | 0 | 0 | | |
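
As an illustration of how one row of such a table (link offset, joint angle, link length, link twist) becomes a homogeneous transform, the sketch below uses the classic D-H convention. Whether the paper uses the classic or the modified convention is an assumption here, and the function names and numeric values are hypothetical rather than taken from Table 1.

```python
import numpy as np

def dh_transform(d, theta, a, alpha):
    """Homogeneous transform of one link (classic D-H convention, assumed).

    d: link offset, theta: joint angle, a: link length, alpha: link twist.
    """
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ])

def chain(rows):
    """Multiply the transforms of successive (d, theta, a, alpha) rows."""
    t = np.eye(4)
    for d, theta, a, alpha in rows:
        t = t @ dh_transform(d, theta, a, alpha)
    return t

# Hypothetical two-link example: lengths in mm, angles in rad.
example_rows = [
    (10.0, np.pi / 2, 0.0, 0.0),  # pure offset along z plus a 90 degree joint rotation
    (0.0, 0.0, 20.0, 0.0),        # pure link length along the rotated x axis
]
base_to_tip = chain(example_rows)
```

Under this convention the joint angle passed to `dh_transform` would be the measured joint value plus the i-th joint offset listed in the last column.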

Table 2.

The intrinsic parameters of the left and right simulated cameras.

| Camera | Focal length (x)/pixel | Focal length (y)/pixel | Principal point (x)/pixel | Principal point (y)/pixel |
|---|---|---|---|---|
| Left | 619.71 | 619.71 | 520.50 | 430.50 |
| Right | 619.71 | 619.71 | 520.50 | 430.50 |
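
To show how these four intrinsic values are typically used, the sketch below builds a pinhole camera matrix and projects a point given in the camera frame. It assumes the columns are ordered as focal lengths followed by the principal point, and that skew and lens distortion are zero; the helper names and the test points are hypothetical.

```python
import numpy as np

def intrinsic_matrix(fx, fy, cx, cy):
    """3x3 pinhole camera matrix with zero skew (assumed)."""
    return np.array([
        [fx, 0.0, cx],
        [0.0, fy, cy],
        [0.0, 0.0, 1.0],
    ])

def project(k, point_cam):
    """Project a 3D point in the camera frame (z > 0) to pixel coordinates."""
    u, v, w = k @ np.asarray(point_cam, dtype=float)
    return np.array([u / w, v / w])

# Values of the simulated cameras, under the assumed column order.
K_SIM = intrinsic_matrix(619.71, 619.71, 520.50, 430.50)

# A point on the optical axis projects onto the principal point,
# which is the condition that eye gazing tries to maintain.
on_axis = project(K_SIM, [0.0, 0.0, 1000.0])

# A point 100 mm off the axis at 1000 mm depth lands away from it.
off_axis = project(K_SIM, [100.0, 0.0, 1000.0])
```

The pixel offset of `off_axis` from the principal point is the kind of image error that a gaze controller would drive to zero.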

Table 3.

The ground truth of the 3D coordinates of the 21 static target points.

| Number | X/mm | Y/mm | Z/mm | Number | X/mm | Y/mm | Z/mm |
|---|---|---|---|---|---|---|---|
| 1 | −0.00 | −99.81 | 499.98 | 12 | −0.01 | −99.84 | 1599.99 |
| 2 | −0.00 | −99.81 | 599.99 | 13 | −0.01 | −99.85 | 1699.99 |
| 3 | −0.00 | −99.81 | 699.99 | 14 | −0.01 | −99.85 | 1799.99 |
| 4 | −0.00 | −99.82 | 799.99 | 15 | −0.01 | −99.84 | 1899.99 |
| 5 | −0.00 | −99.82 | 899.99 | 16 | −0.01 | −99.86 | 1999.99 |
| 6 | −0.01 | −99.83 | 999.99 | 17 | −0.01 | −99.86 | 2099.99 |
| 7 | −0.00 | −99.83 | 1099.99 | 18 | −0.01 | −99.85 | 2199.99 |
| 8 | −0.01 | −99.83 | 1199.99 | 19 | −0.01 | −99.86 | 2299.99 |
| 9 | −0.00 | −99.84 | 1299.99 | 20 | −0.01 | −99.86 | 2399.99 |
| 10 | −0.01 | −99.84 | 1399.99 | 21 | −0.01 | −99.87 | 2499.99 |
| 11 | −0.01 | −99.85 | 1499.99 | | | | |

Table 4.

The intrinsic parameters of the left and right cameras of the designed robotic bionic eyes.

| Camera | Focal length (x)/pixel | Focal length (y)/pixel | Principal point (x)/pixel | Principal point (y)/pixel |
|---|---|---|---|---|
| Left | 521.66 | 521.83 | 362.94 | 222.53 |
| Right | 521.20 | 521.51 | 388.09 | 220.82 |

Table 5.

The ground truth of the static target points.

| Number | X/mm | Y/mm | Z/mm | Number | X/mm | Y/mm | Z/mm |
|---|---|---|---|---|---|---|---|
| 1 | −208.73 | −177.64 | 508.04 | 12 | −721.54 | −180.49 | 1603.42 |
| 2 | −232.55 | −177.53 | 605.69 | 13 | −754.63 | −182.21 | 1704.31 |
| 3 | −255.58 | −176.02 | 699.53 | 14 | −779.88 | −181.77 | 1798.82 |
| 4 | −324.48 | −179.81 | 800.92 | 15 | −816.46 | −182.52 | 1909.67 |
| 5 | −407.39 | −178.42 | 899.89 | 16 | −842.48 | −182.31 | 2004.67 |
| 6 | −452.88 | −179.90 | 1001.11 | 17 | −909.75 | −171.71 | 2097.57 |
| 7 | −486.87 | −180.58 | 1102.26 | 18 | −984.94 | −168.96 | 2200.87 |
| 8 | −563.40 | −179.15 | 1198.49 | 19 | −1029.85 | −171.79 | 2296.25 |
| 9 | −607.89 | −181.18 | 1308.29 | 20 | −1144.02 | −168.11 | 2407.59 |
| 10 | −649.50 | −179.74 | 1404.37 | 21 | −1229.31 | −166.47 | 2506.59 |
| 11 | −688.94 | −182.54 | 1501.91 | | | | |

Table 6.

The intrinsic parameters of the left and right cameras of the ZED Mini.

| Camera | Focal length (x)/pixel | Focal length (y)/pixel | Principal point (x)/pixel | Principal point (y)/pixel |
|---|---|---|---|---|
| Left | 676.21 | 676.21 | 645.09 | 368.27 |
| Right | 676.21 | 676.21 | 645.09 | 368.27 |

Table 7.

The estimated 3D coordinates of the spatial points w.r.t. the base frame using the ZED Mini.

| Point | X/mm | Y/mm | Z/mm | Point | X/mm | Y/mm | Z/mm |
|---|---|---|---|---|---|---|---|
| 1 | −52.97 | −52.35 | 995.14 | 5 | −53.83 | −55.49 | 1493.80 |
| 2 | −51.75 | −104.14 | 1004.50 | 6 | −47.30 | −93.07 | 1498.50 |
| 3 | −106.29 | −49.44 | 1002.60 | 7 | −99.29 | −55.39 | 1478.30 |
| 4 | −103.80 | −112.04 | 989.98 | 8 | −104.57 | −99.60 | 1495.60 |