Fabric Defect Detection Based on Illumination Correction and Visual Salient Features

Abstract

1. Introduction

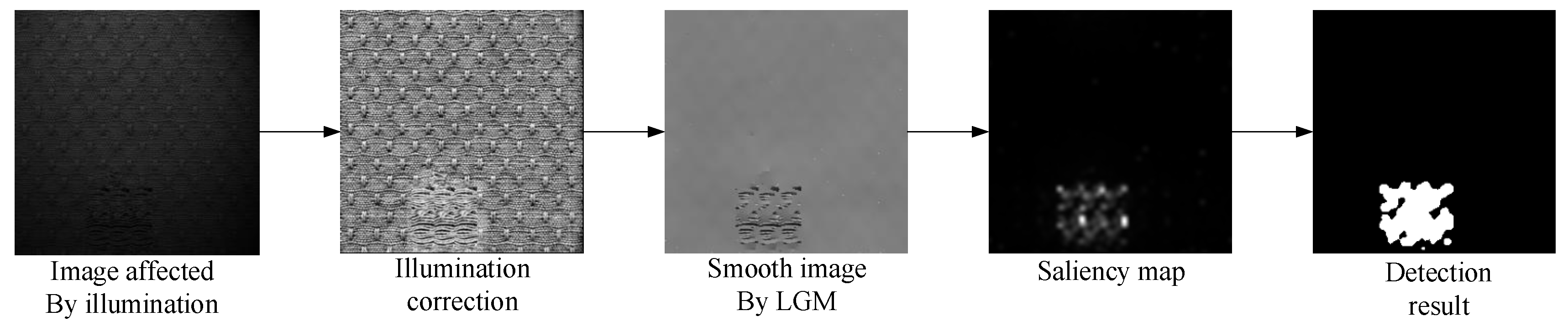

- Unlike traditional methods that perform illumination correction only locally or only globally, our method corrects the illumination of the fabric image from both the global and the local angle.

- Unlike the traditional way of constructing quaternion images, we choose a color space better suited to fabric images, improve the robustness of the intensity feature channel, and replace the motion feature channel with an edge feature channel.

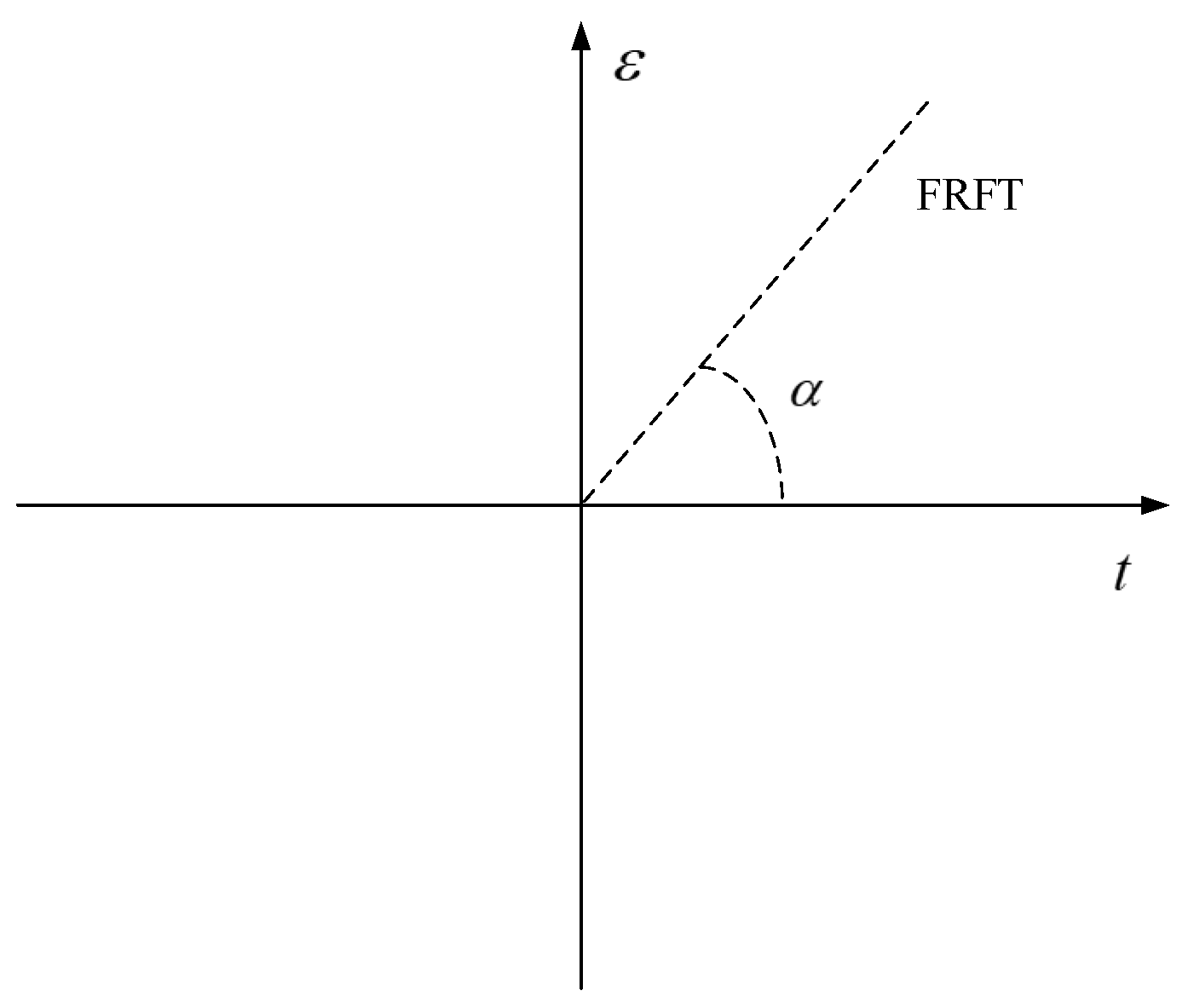

- Unlike traditional frequency-domain methods that use a simple Fourier transform to obtain the saliency map, we use the two-dimensional fractional Fourier transform to obtain the saliency map of the quaternion image.
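To make the frequency-domain idea in the last contribution concrete, the following is a minimal sketch of phase-spectrum saliency on a single 2-D feature channel. It deliberately swaps in the ordinary 2-D FFT from NumPy; it is not the quaternion two-dimensional fractional Fourier transform used in this paper, and the function names, the `sigma` parameter, and the toy image are all hypothetical.

```python
import numpy as np


def phase_spectrum_saliency(channel, sigma=2.0):
    """Phase-only saliency of one 2-D channel (plain FFT, NOT fractional/quaternion)."""
    channel = np.asarray(channel, dtype=float)
    spectrum = np.fft.fft2(channel)
    # Keep only the phase: every magnitude becomes 1, so the few strong
    # spectral peaks of a periodic texture no longer dominate.
    phase_only = np.exp(1j * np.angle(spectrum))
    energy = np.abs(np.fft.ifft2(phase_only)) ** 2
    # Gaussian smoothing applied in the frequency domain (circular boundary).
    fy = np.fft.fftfreq(channel.shape[0])[:, None]
    fx = np.fft.fftfreq(channel.shape[1])[None, :]
    transfer = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))
    smooth = np.fft.ifft2(np.fft.fft2(energy) * transfer).real
    # Rescale to [0, 1]; a constant map carries no saliency.
    span = smooth.max() - smooth.min()
    if span == 0:
        return np.zeros_like(smooth)
    return (smooth - smooth.min()) / span


def toy_fabric(size=64, period=8, defect_at=(40, 20)):
    """Hypothetical test image: sinusoidal weave plus a 3x3 bright defect."""
    y, x = np.mgrid[0:size, 0:size]
    image = np.sin(2 * np.pi * x / period) + np.sin(2 * np.pi * y / period)
    r, c = defect_at
    image[r - 1:r + 2, c - 1:c + 2] += 3.0
    return image


saliency = phase_spectrum_saliency(toy_fabric())
peak = np.unravel_index(np.argmax(saliency), saliency.shape)
print("most salient pixel:", peak)
```

On this toy image the periodic weave occupies only a handful of frequency bins, so flattening the magnitudes suppresses it and the reconstruction concentrates around the defect. A fractional transform adds an order parameter that this sketch does not model.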

2. Related Work

2.1. Illumination Correction

2.2. Visual Salient Feature

3. Methods

3.1. Illumination Correction

3.1.1. Illumination Correction in the Global Angle

3.1.2. Enhance the Contrast in the Local Angle

3.2. Extract Visual Salient Features of Image

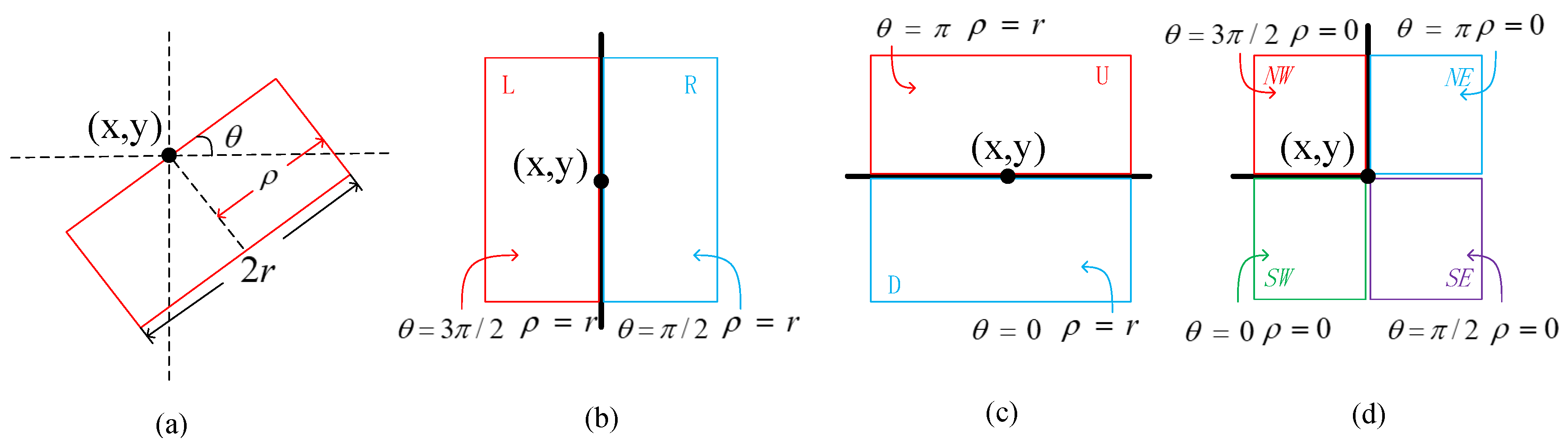

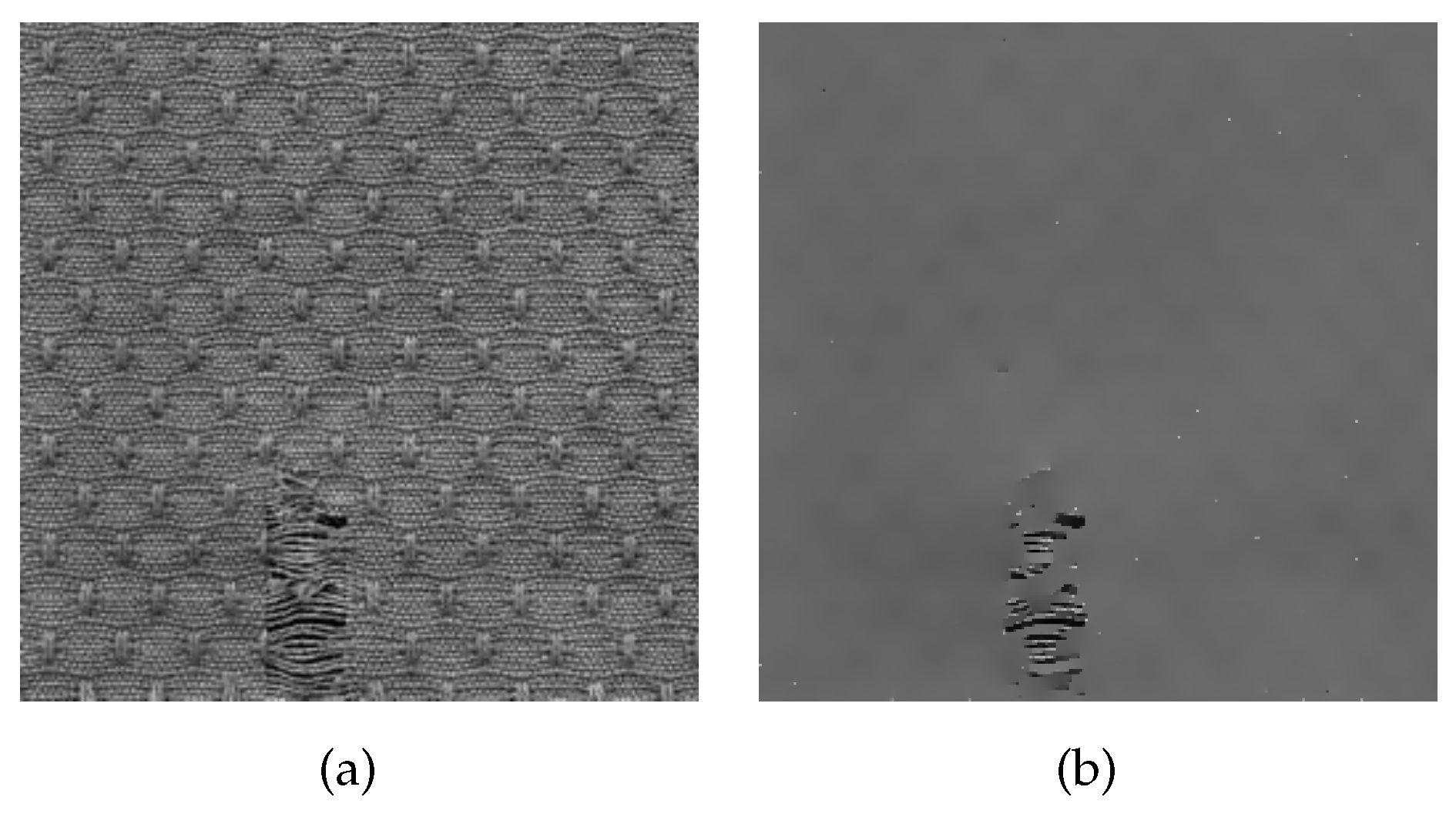

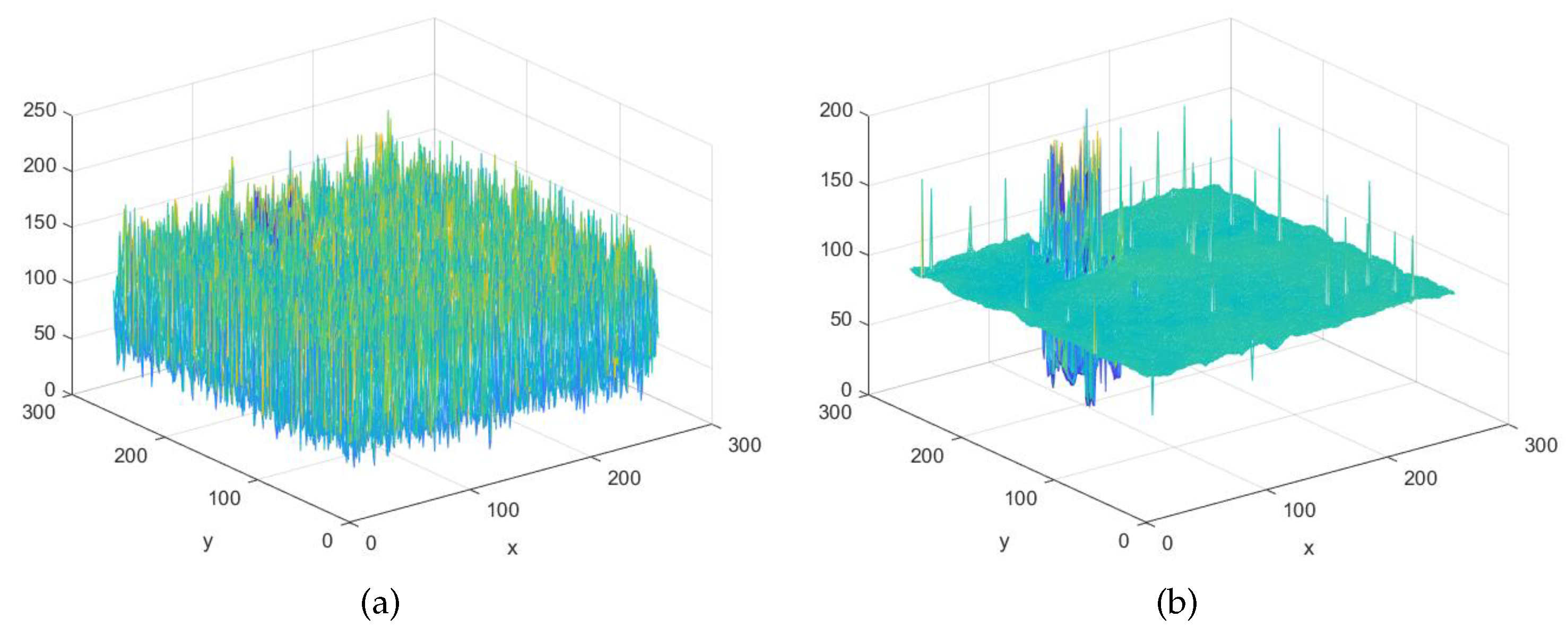

3.2.1. Background Texture Smoothing by Gradient Minimization (LGM)

3.2.2. Creation of a Quaternion Image

3.2.3. Using 2-D Fractional Fourier Transform to Obtain Saliency Map

3.2.4. Generation of Saliency Map

3.2.5. Computation Cost Analysis

4. Experiments and Performance Evaluation

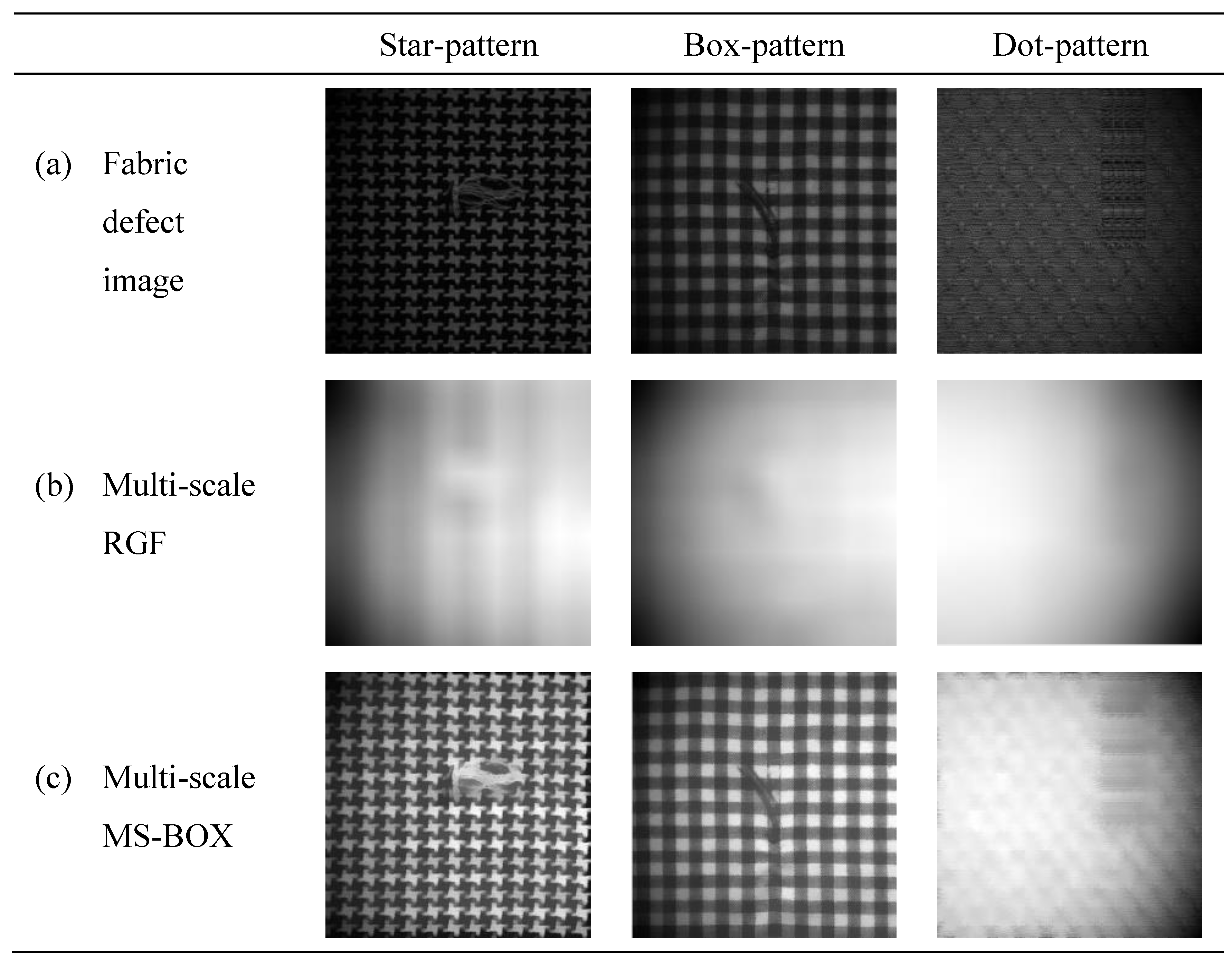

4.1. Analysis of Experimental Results of Different Illumination Correction Methods

4.2. Parameter Selection of the Gradient Minimization Method

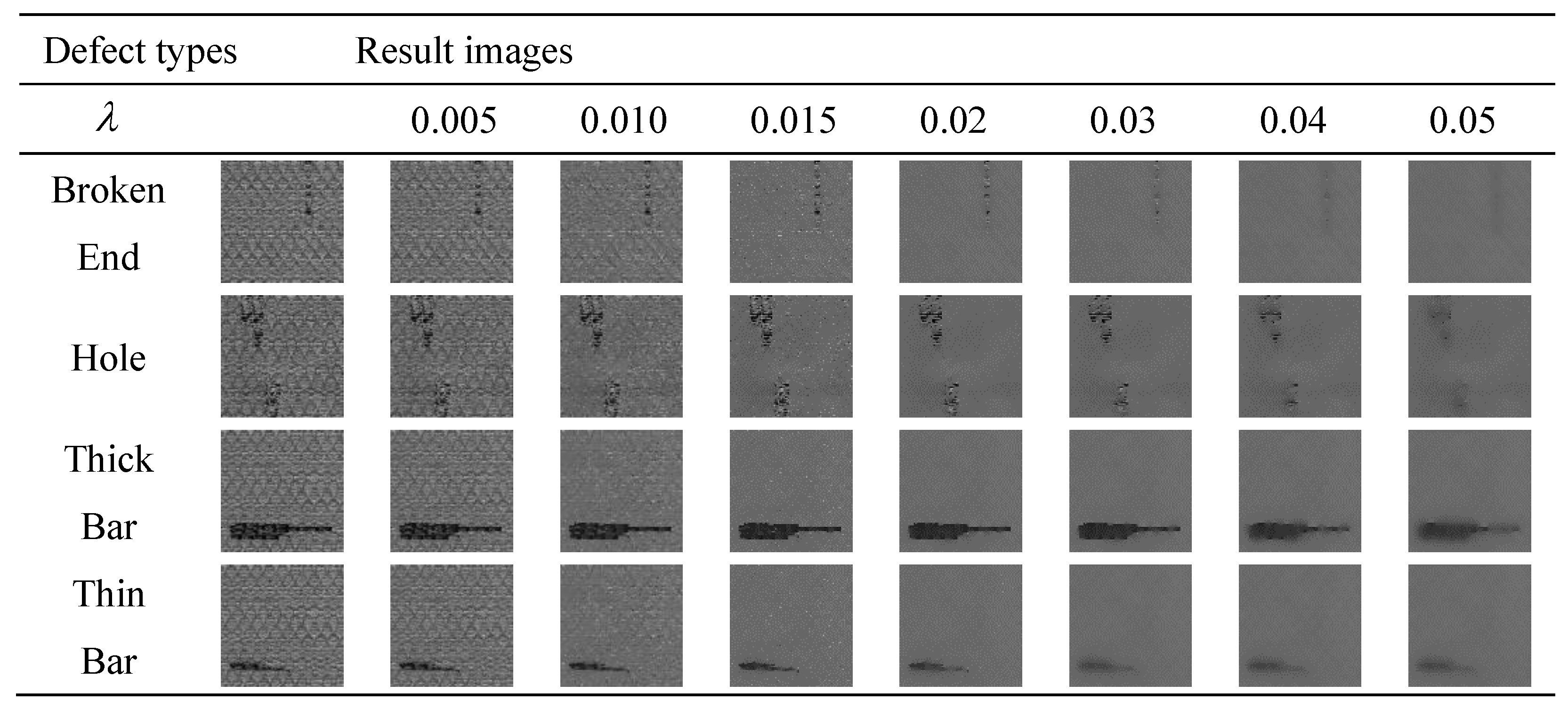

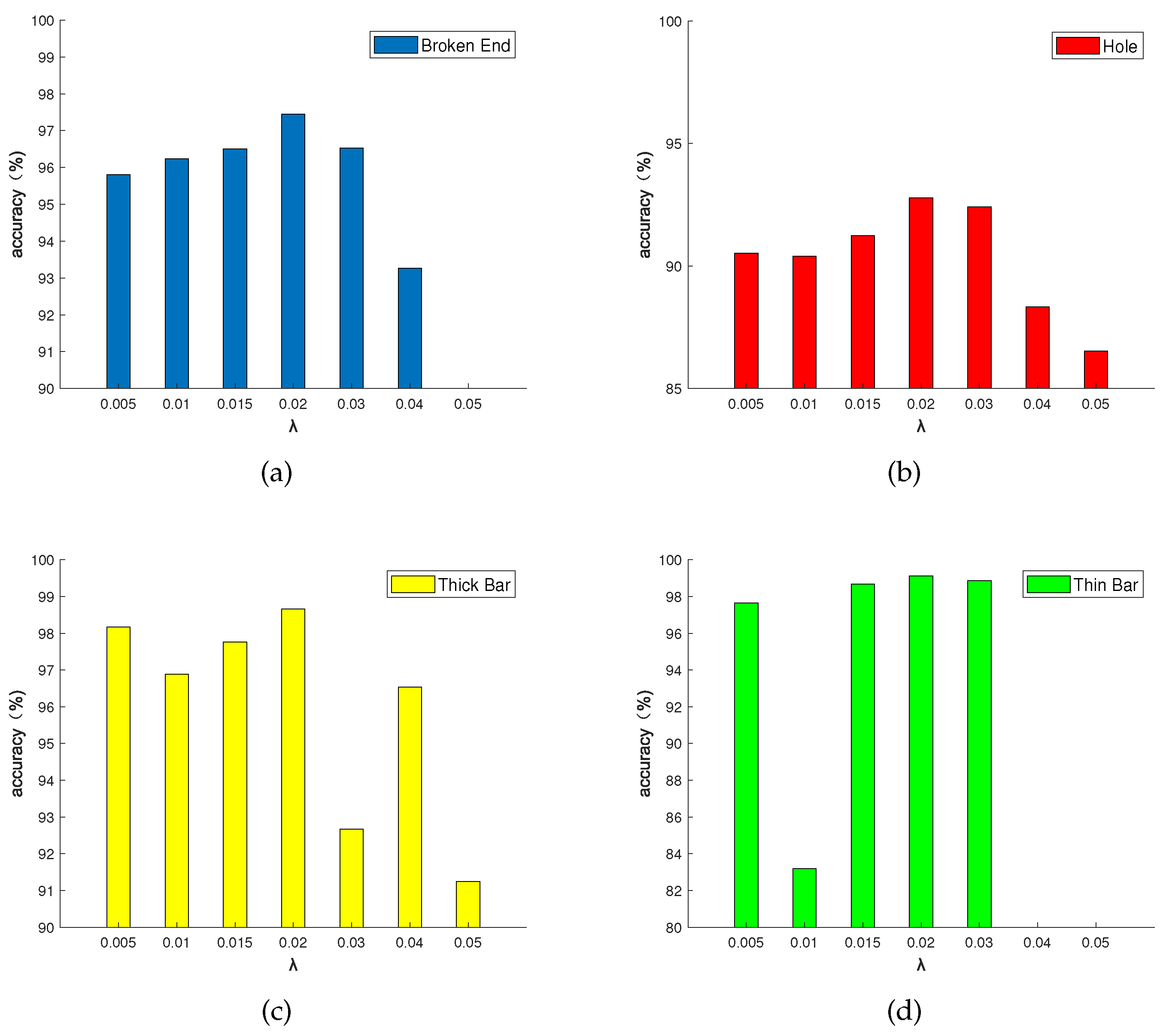

4.3. Generation of the Saliency Map

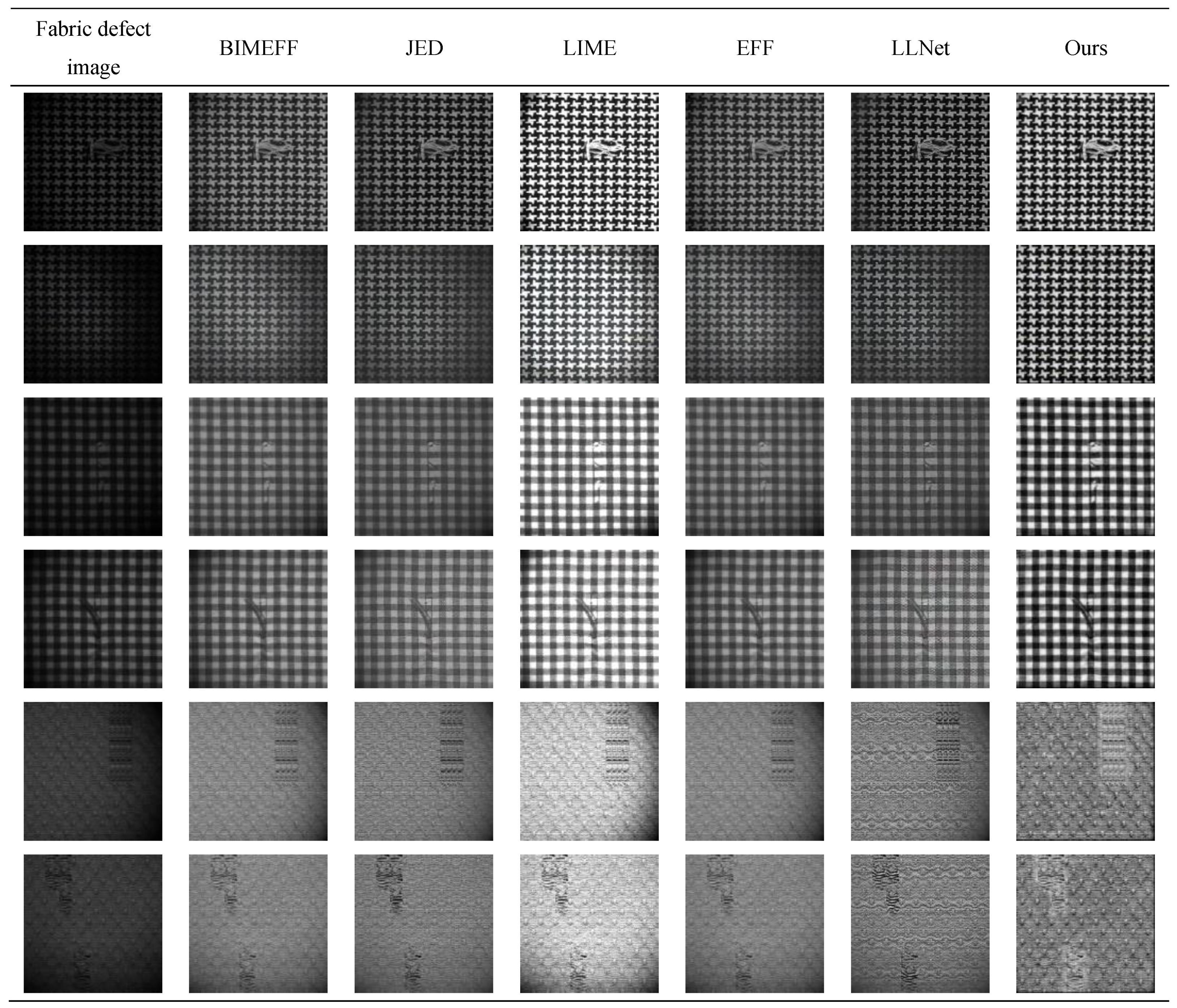

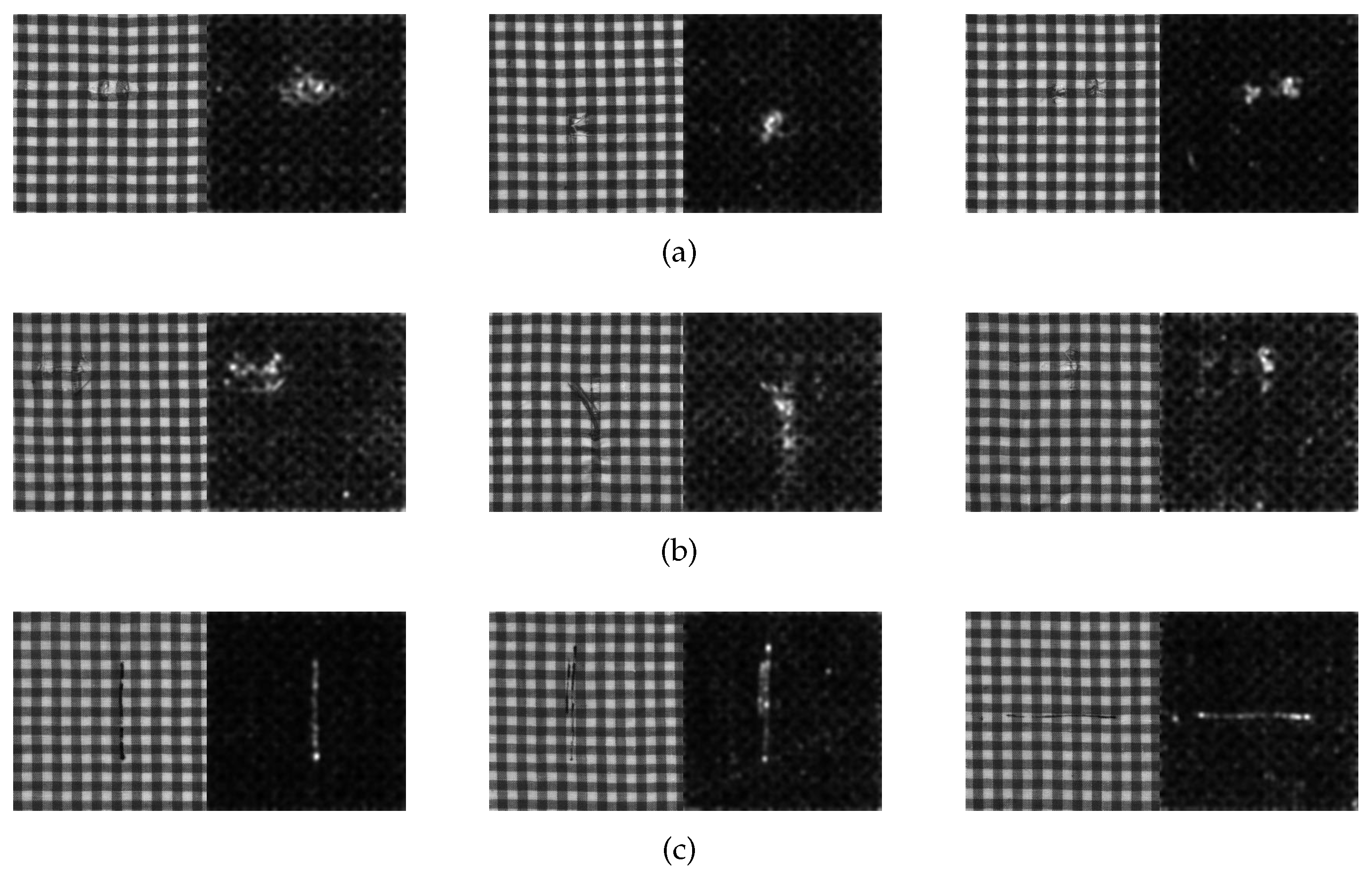

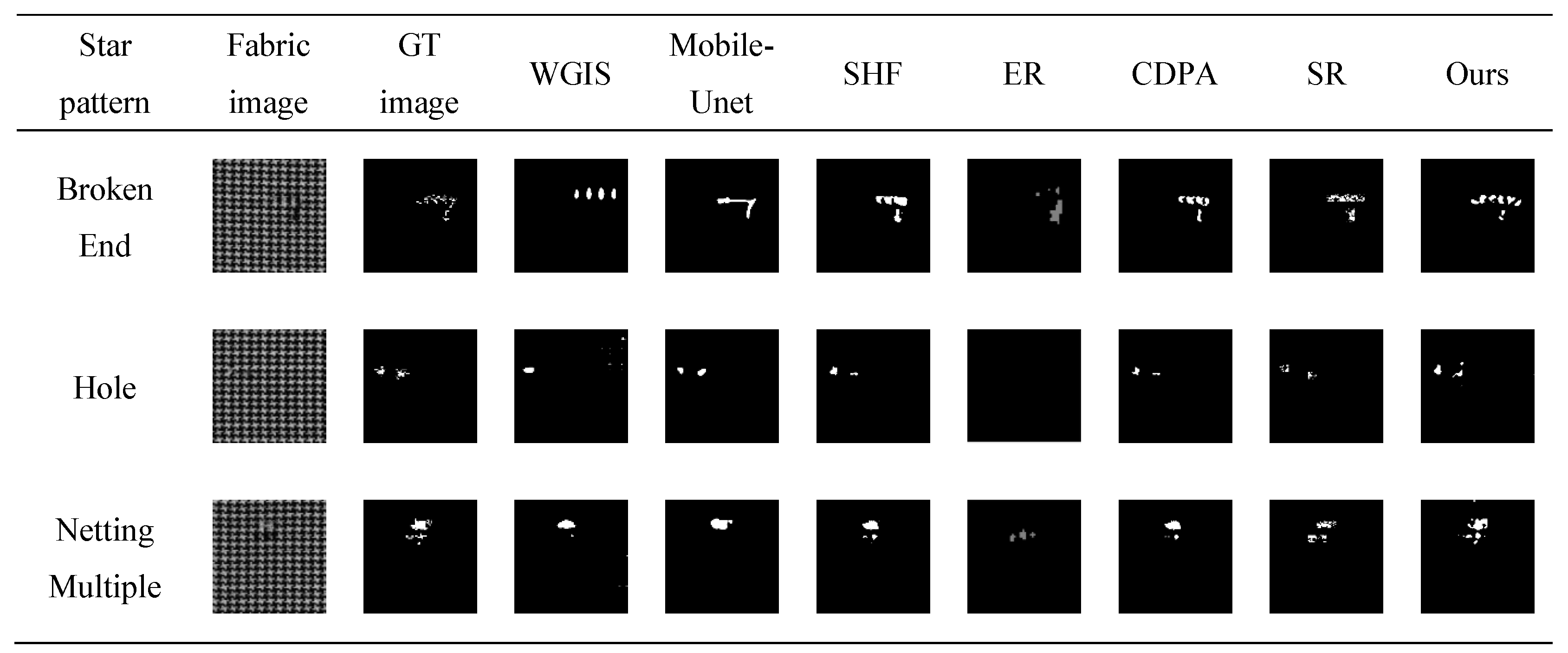

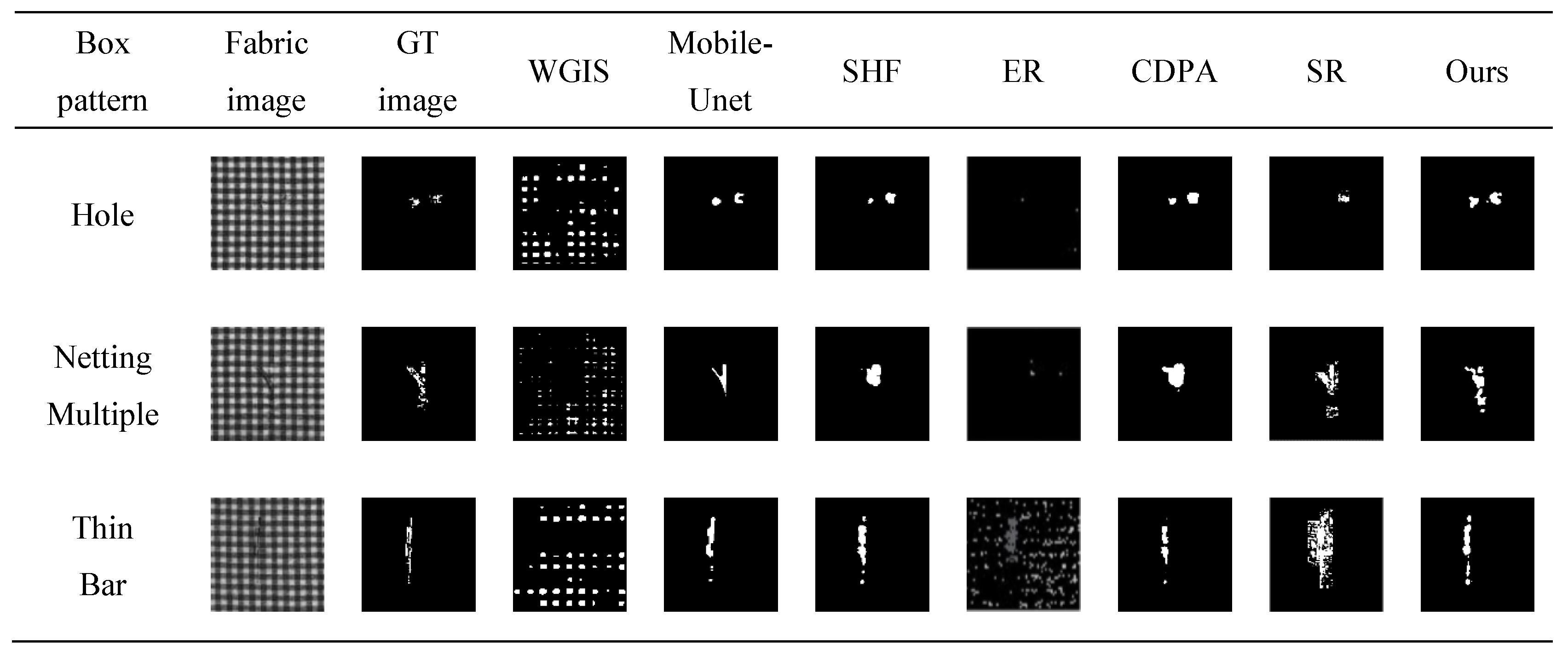

4.4. Result Comparison

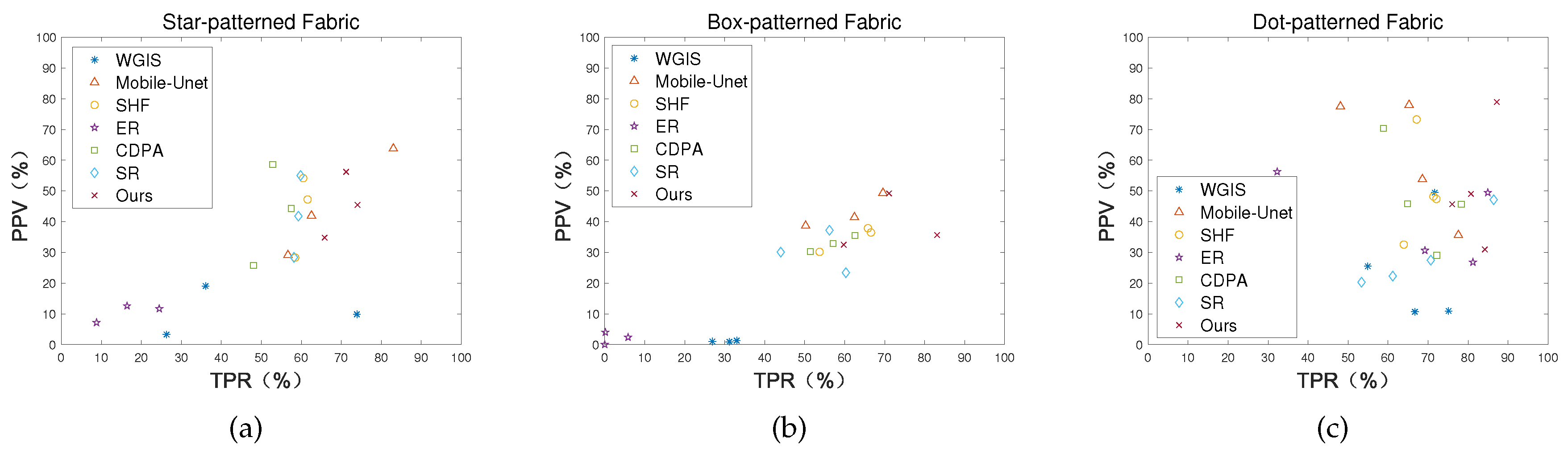

4.5. Quantitative Comparison

4.6. Running Time Comparison

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Tsai, I.; Lin, C.; Lin, J. Applying an Artificial Neural Network to Pattern Recognition in Fabric Defects. Text. Res. J. 1995, 65, 123–130.

- Chetverikov, D.; Hanbury, A. Finding Defects in Texture Using Regularity and Local Orientation. Pattern Recognit. 2002, 35, 2165–2180.

- Chan, C.; Pang, G. Fabric Defect Detection by Fourier Analysis. IEEE Trans. Ind. Appl. 2000, 36, 1267–1276.

- Yang, X.; Pang, G.; Yung, N. Discriminative Fabric Defect Detection Using Adaptive Wavelets. Opt. Eng. 2002, 41, 3116–3126.

- Mak, K.; Peng, P. An Automated Inspection System for Textile Fabrics Based on Gabor Filters. Robot. Comput. Integr. Manuf. 2008, 24, 359–369.

- Cohen, F.; Fan, Z.; Attali, S. Automated Inspection of Textile Fabrics Using Textural Models. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 13, 803–808.

- Athanasios, V.; Nikolaos, D.; Anastasios, D.; Eftychios, P. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018, 1–13.

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48.

- Zhang, Y.; Lu, Z.; Li, J. Fabric Defect Classification Using Radial Basis Function Network. Pattern Recognit. Lett. 2010, 31, 2033–2042.

- Jing, J.; Wang, Z.; Matthias, R.; Zhang, H. Mobile-Unet: An efficient convolutional neural network for fabric defect detection. Text. Res. J. 2020.

- Ngan, H.; Pang, G.; Yung, S.; Ng, M. Wavelet based methods on patterned fabric defect detection. Pattern Recognit. 2005, 38, 559–576.

- Ngan, H.; Pang, G. Novel Method for Patterned Fabric Inspection Using Bollinger Bands. Opt. Eng. 2006, 45, 187–202.

- Ngan, H.; Pang, G. Regularity Analysis for Patterned Texture Inspection. IEEE Trans. Autom. Sci. Eng. 2009, 6, 131–144.

- Tsang, C.; Ngan, H.; Pang, G. Fabric inspection based on the Elo rating method. Pattern Recognit. 2016, 51, 378–394.

- Liang, J.; Gu, C.; Chang, X. Fabric Defect Detection Based on Similarity Relation. Pattern Recognit. Artif. Intell. 2017, 30, 456–464.

- Li, C.; Gao, G.; Liu, Z. Defect detection for patterned fabric images based on GHOG and low-rank decomposition. IEEE Access 2019, 7, 83962–83973.

- Shi, B.; Liang, J.; Di, L.; Chen, C.; Hou, Z. Fabric Defect Detection via Low-rank Decomposition with Gradient Information. IEEE Access 2019, 7, 130424–130437.

- Achanta, R.; Hemami, S.; Estrada, F. Frequency-tuned Salient Region Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1597–1604.

- Nevrex, I.; Lin, W.; Fang, Y. A Saliency Detection Model Using Low-Level Features Based on Wavelet Transform. IEEE Trans. Multimed. 2013, 15, 96–105.

- Hou, X.; Zhang, L. Saliency Detection: A Spectral Residual Approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007.

- Wang, H.; Li, S.; Wang, Y. Self quotient image for face recognition. In Proceedings of the 2004 International Conference on Image Processing, Singapore, 24–27 October 2004; pp. 1397–1400.

- Yugander, P.; Tejaswini, C.H.; Meenakshi, J.; Varma, B.S.; Jagannath, M. MR Image Enhancement using Adaptive Weighted Mean Filtering and Homomorphic Filtering. Procedia Comput. Sci. 2020, 167, 677–685.

- Land, E. Recent advances in Retinex theory. Vis. Res. 1986, 26, 7–21.

- Zhang, Q.; Shen, X.; Xu, L. Rolling Guidance Filter. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 815–830.

- Ying, Z.; Ren, Y.; Wang, R.; Wang, W. A New Image Contrast Enhancement Algorithm Using Exposure Fusion Framework. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Ystad, Sweden, 22–24 August 2017; pp. 36–46.

- Ying, Z.; Li, G.; Gao, W. A Bio-Inspired Multi-Exposure Fusion Framework for Low-light Image Enhancement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1–10.

- Ren, X.; Li, M.; Cheng, W.; Liu, J. Joint Enhancement and Denoising Method via Sequential Decomposition. In Proceedings of the IEEE International Symposium on Circuits and Systems, Florence, Italy, 27–30 May 2018.

- Guo, X.; Li, Y.; Ling, H. LIME: Low-Light Image Enhancement via Illumination Map Estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

- Kin, L.; Adedotun, A.; Soumik, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognit. 2017, 61, 650–662.

- Li, M.; Wan, S.; Deng, Z.; Wang, Y. Fabric defect detection based on saliency histogram features. Comput. Intell. 2019, 35, 517–534.

- Zhang, K.; Yan, Y.; Li, P.; Jing, J.; Liu, P.; Wang, Z. Fabric Defect Detection Using Salience Metric for Color Dissimilarity and Positional Aggregation. IEEE Access 2018, 6, 49170–49181.

- Guo, C.; Ma, Q.; Zhang, L. Spatio-temporal Saliency Detection Using Phase Spectrum of Quaternion Fourier Transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Yin, H.; Gong, Y.; Qiu, G. Side Window Filtering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 18–20 June 2019.

- Di, L.; Zhao, S.; He, R. Fabric defect inspection based on illumination preprocessing and feature extraction. CAAI Trans. Intell. Syst. 2019, 14, 716–724.

- Xu, L.; Lu, C.; Xu, Y.; Jia, J. Image smoothing via L0 gradient minimization. ACM Trans. Graph. 2011, 30, 1–12.

- Engel, S.; Zhang, X.; Wandell, B. Colour tuning in human visual cortex measured with functional magnetic resonance imaging. Nature 1997, 388, 68–71.

- Lazarevic-McManus, N.; Renno, J.; Jones, G. Performance evaluation in visual surveillance using the F-measure. In Proceedings of the 4th ACM International Workshop Video Surveillance and Sensor Networks, Cairo, Egypt, 1–4 October 2006; pp. 45–52.

| Star Pattern | TPR (%) | FPR (%) | PPV (%) | NPV (%) | F-measure (%) | Methods |
|---|---|---|---|---|---|---|
| Broken End (5) | 73.88 | 4.34 | 9.88 | 99.24 | 17.42 | WGIS |
| | 56.65 | 0.95 | 29.11 | 99.74 | 38.45 | Mobile-Unet |
| | 58.46 | 0.91 | 28.31 | 99.74 | 38.14 | SHF |
| | 8.79 | 1.16 | 7.17 | 99.27 | 7.89 | ER |
| | 48.05 | 0.78 | 25.82 | 99.62 | 33.59 | CDPA |
| | 58.16 | 2.67 | 28.40 | 99.64 | 38.16 | SR |
| | 65.81 | 0.79 | 34.82 | 99.75 | 45.54 | Ours |
| Hole (5) | 26.30 | 7.58 | 3.27 | 99.45 | 5.81 | WGIS |
| | 62.53 | 0.47 | 41.95 | 99.80 | 50.21 | Mobile-Unet |
| | 61.57 | 0.46 | 47.22 | 99.79 | 53.44 | SHF |
| | 24.47 | 1.23 | 11.68 | 99.54 | 15.81 | ER |
| | 57.51 | 0.47 | 44.28 | 99.78 | 50.03 | CDPA |
| | 59.26 | 4.60 | 41.80 | 99.78 | 49.02 | SR |
| | 74.06 | 0.51 | 45.48 | 99.86 | 56.35 | Ours |
| Netting Multiple (5) | 36.07 | 3.25 | 19.06 | 98.25 | 24.94 | WGIS |
| | 83.01 | 0.69 | 63.77 | 99.77 | 72.12 | Mobile-Unet |
| | 60.48 | 0.66 | 54.15 | 99.25 | 57.14 | SHF |
| | 16.42 | 0.82 | 12.61 | 98.54 | 14.26 | ER |
| | 52.84 | 0.62 | 58.63 | 99.16 | 55.58 | CDPA |
| | 59.80 | 0.79 | 55.03 | 99.43 | 57.31 | SR |
| | 71.21 | 0.57 | 56.22 | 99.17 | 62.83 | Ours |
| Overall (15) | 45.41 | 5.06 | 10.73 | 98.98 | 17.35 | WGIS |
| | 67.39 | 0.70 | 44.94 | 99.77 | 53.92 | Mobile-Unet |
| | 60.17 | 0.67 | 43.23 | 99.59 | 50.31 | SHF |
| | 16.56 | 1.07 | 10.48 | 99.12 | 12.83 | ER |
| | 52.80 | 0.62 | 42.91 | 99.52 | 47.34 | CDPA |
| | 59.07 | 2.68 | 41.74 | 99.61 | 48.91 | SR |
| | 70.36 | 0.63 | 45.51 | 99.59 | 55.27 | Ours |

| Box Pattern | TPR (%) | FPR (%) | PPV (%) | NPV (%) | F-measure (%) | Methods |
|---|---|---|---|---|---|---|
| Hole (5) | 31.17 | 25.52 | 0.92 | 99.31 | 1.78 | WGIS |
| | 62.44 | 0.76 | 41.41 | 99.75 | 49.79 | Mobile-Unet |
| | 66.57 | 1.05 | 36.49 | 99.80 | 47.14 | SHF |
| | 0 | 0.03 | 0 | 97.69 | 0 | ER |
| | 62.60 | 0.97 | 35.55 | 99.72 | 45.34 | CDPA |
| | 56.20 | 0.80 | 37.20 | 99.67 | 44.76 | SR |
| | 83.10 | 1.33 | 35.67 | 99.88 | 49.91 | Ours |
| Netting Multiple (5) | 33.00 | 25.68 | 1.28 | 98.87 | 2.46 | WGIS |
| | 50.23 | 0.91 | 38.65 | 99.38 | 43.68 | Mobile-Unet |
| | 53.72 | 1.33 | 30.17 | 99.42 | 38.63 | SHF |
| | 0.15 | 0.04 | 4.00 | 95.81 | 0.28 | ER |
| | 51.38 | 1.52 | 30.28 | 99.50 | 38.10 | CDPA |
| | 44.00 | 0.16 | 30.10 | 99.36 | 35.74 | SR |
| | 59.76 | 1.44 | 32.50 | 99.46 | 42.10 | Ours |
| Thin Bar (5) | 26.90 | 24.20 | 1.02 | 99.07 | 1.96 | WGIS |
| | 69.57 | 0.69 | 49.35 | 99.70 | 57.74 | Mobile-Unet |
| | 65.81 | 1.05 | 37.86 | 99.67 | 48.06 | SHF |
| | 5.84 | 4.51 | 2.36 | 97.68 | 3.36 | ER |
| | 57.09 | 1.13 | 32.84 | 99.60 | 41.69 | CDPA |
| | 60.30 | 1.60 | 23.40 | 99.66 | 33.71 | SR |
| | 71.10 | 0.81 | 49.19 | 99.72 | 58.14 | Ours |
| Overall (15) | 30.35 | 25.13 | 1.07 | 99.08 | 2.06 | WGIS |
| | 60.75 | 0.78 | 43.13 | 99.61 | 50.44 | Mobile-Unet |
| | 62.03 | 1.14 | 34.84 | 99.63 | 44.61 | SHF |
| | 1.99 | 1.52 | 2.12 | 97.06 | 2.05 | ER |
| | 57.02 | 1.21 | 32.89 | 99.61 | 41.71 | CDPA |
| | 53.50 | 0.85 | 30.23 | 99.56 | 38.63 | SR |
| | 71.32 | 1.19 | 39.08 | 99.68 | 50.49 | Ours |

| Dot Pattern | TPR (%) | FPR (%) | PPV (%) | NPV (%) | F-measure (%) | Methods |
|---|---|---|---|---|---|---|
| Broken End (5) | 54.93 | 0.18 | 25.51 | 93.90 | 34.84 | WGIS |
| | 68.59 | 1.87 | 53.80 | 98.11 | 60.30 | Mobile-Unet |
| | 72.09 | 4.01 | 47.41 | 98.70 | 57.20 | SHF |
| | 32.27 | 0.01 | 56.25 | 91.90 | 41.01 | ER |
| | 78.32 | 5.20 | 45.65 | 98.94 | 57.68 | CDPA |
| | 53.36 | 26.50 | 20.30 | 82.60 | 29.41 | SR |
| | 80.74 | 5.09 | 49.05 | 99.07 | 61.02 | Ours |
| Hole (5) | 75.13 | 0.17 | 10.92 | 99.15 | 19.06 | WGIS |
| | 77.58 | 4.01 | 35.63 | 99.29 | 48.83 | Mobile-Unet |
| | 63.94 | 4.07 | 32.54 | 98.97 | 43.13 | SHF |
| | 69.21 | 0.05 | 30.63 | 98.94 | 42.46 | ER |
| | 72.18 | 4.82 | 29.04 | 99.04 | 41.41 | CDPA |
| | 61.17 | 6.50 | 22.28 | 98.95 | 32.66 | SR |
| | 84.19 | 5.38 | 30.95 | 99.45 | 45.26 | Ours |
| Thick Bar (5) | 71.66 | 0.17 | 49.46 | 96.19 | 58.52 | WGIS |
| | 65.26 | 0.27 | 77.97 | 95.01 | 71.05 | Mobile-Unet |
| | 67.13 | 2.30 | 73.27 | 93.11 | 70.15 | SHF |
| | 84.94 | 0.15 | 49.46 | 96.19 | 62.51 | ER |
| | 58.85 | 3.36 | 70.43 | 93.92 | 64.12 | CDPA |
| | 70.68 | 5.49 | 27.46 | 99.23 | 39.55 | SR |
| | 87.18 | 3.61 | 78.95 | 97.74 | 82.86 | Ours |
| Thin Bar (5) | 66.69 | 0.16 | 10.66 | 98.64 | 18.38 | WGIS |
| | 48.09 | 0.17 | 77.47 | 98.63 | 59.34 | Mobile-Unet |
| | 71.34 | 1.85 | 48.20 | 99.22 | 57.53 | SHF |
| | 81.22 | 0.07 | 26.81 | 99.30 | 40.31 | ER |
| | 64.88 | 1.87 | 45.78 | 99.02 | 53.68 | CDPA |
| | 86.42 | 16.58 | 47.15 | 97.43 | 61.01 | SR |
| | 76.02 | 2.36 | 45.67 | 99.34 | 57.06 | Ours |
| Overall (20) | 67.10 | 0.17 | 20.07 | 96.81 | 30.89 | WGIS |
| | 64.88 | 1.58 | 61.22 | 97.76 | 62.99 | Mobile-Unet |
| | 68.62 | 3.06 | 50.36 | 97.50 | 58.08 | SHF |
| | 66.91 | 0.07 | 40.79 | 96.58 | 50.68 | ER |
| | 68.56 | 3.81 | 47.72 | 97.58 | 56.27 | CDPA |
| | 67.90 | 13.76 | 29.30 | 94.55 | 40.93 | SR |
| | 82.03 | 4.11 | 51.16 | 98.90 | 63.01 | Ours |
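The metric columns in the three tables above appear to follow the usual confusion-matrix definitions, with the last metric column (the F-measure, f) being the harmonic mean of TPR and PPV. The sketch below shows how such values could be computed. It assumes that TP, FP, TN, and FN are counts in whatever unit the evaluation uses (pixel-level counts are an assumption here), and the function names are hypothetical.

```python
def f_measure(tpr, ppv):
    """Harmonic mean of recall (TPR) and precision (PPV), both in percent."""
    total = tpr + ppv
    return 2.0 * tpr * ppv / total if total else 0.0


def confusion_metrics(tp, fp, tn, fn):
    """Return TPR, FPR, PPV, NPV and f in percent from raw counts."""
    def pct(num, den):
        # A zero denominator means the ratio is undefined; report 0 here.
        return 100.0 * num / den if den else 0.0

    tpr = pct(tp, tp + fn)   # true positive rate (recall)
    fpr = pct(fp, fp + tn)   # false positive rate
    ppv = pct(tp, tp + fp)   # positive predictive value (precision)
    npv = pct(tn, tn + fn)   # negative predictive value
    return {"TPR": tpr, "FPR": fpr, "PPV": ppv, "NPV": npv, "f": f_measure(tpr, ppv)}


# Cross-check against the Star Pattern "Overall (15)" row for "Ours".
overall_f = f_measure(70.36, 45.51)
print(round(overall_f, 2))  # 55.27

# Made-up counts, purely to illustrate the call.
example = confusion_metrics(tp=8, fp=2, tn=85, fn=5)
```

Recomputing f from the rounded TPR and PPV reproduces the tabulated value for that row. Other rows can differ in the last digit, which would be consistent with rounding or per-image averaging, but the source does not say which.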

| Methods | Average Running Time (s) | Hardware |
|---|---|---|
| Mobile-Unet [10] | 0.021 | One Nvidia TITAN Xp (GPU) |
| WGIS [11] | 12.99 | Intel Core i5-8300H (CPU) |
| ER [14] | 12.13 | Intel Core i5-8300H (CPU) |
| SR [15] | 3.99 | Intel Core i5-8300H (CPU) |
| SHF [30] | 16.46 | Intel Core i5-8300H (CPU) |
| CDPA [31] | 10.43 | Intel Core i5-8300H (CPU) |
| Ours | 2.18 | Intel Core i5-8300H (CPU) |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Di, L.; Long, H.; Liang, J. Fabric Defect Detection Based on Illumination Correction and Visual Salient Features. Sensors 2020, 20, 5147. https://doi.org/10.3390/s20185147
