Abstract

Vehicle re-identification plays an important role in cross-camera tracking and vehicle search in surveillance videos. The large variance in the appearance of the same vehicle captured by different cameras and the high similarity of different vehicles of the same model pose challenges for vehicle re-identification. Most existing methods use a single center proxy to represent a vehicle identity; however, intra-class variance makes it very difficult to fit images of the same identity to one center feature, and highly similar images belonging to different identities cannot be separated effectively. In this paper, we propose a sampling strategy that considers different viewpoints and a multi-proxy constraint loss function that represents each class with multiple proxies and imposes different constraints on images of the same vehicle from different viewpoints. The proposed sampling strategy uses camera information to better mine samples corresponding to different proxies in a mini-batch. The multi-proxy constraint loss function pulls an image towards the furthest proxy of its own class and pushes it away from the nearest proxy of a different class, resulting in a larger margin between decision boundaries. Extensive experiments on two large-scale vehicle datasets (VeRi and VehicleID) demonstrate that the global features learned by our single-branch network outperform those of previous works with more complicated networks, as well as methods that further re-rank with spatio-temporal information. In addition, our method is easy to plug into other classification methods to improve their performance.

1. Introduction

With growing attention to traffic surveillance and public safety, there is an ever-increasing need to retrieve the same vehicle across cameras. Given a probe vehicle image, vehicle re-identification (Re-ID) aims to identify the same vehicle in a large-scale gallery database. Some studies solve this problem through license plate recognition [1,2]; however, it is difficult to get a clear shot of the license plate from some viewpoints. Therefore, vision-based vehicle Re-ID has attracted more attention.

Compared with person Re-ID, vehicle Re-ID faces some unique challenges: (1) Different vehicles of the same type and color usually have highly similar appearances, which we refer to as inter-class similarity. (2) Images of the same vehicle captured by different cameras show large variance in appearance, because different sides of the vehicle body differ in structure and detail; we refer to this as intra-class variance.

To address inter-class similarity, methods that extract discriminative features from local regions [3,4] and methods that generate similar hard negative samples [5] have been proposed. The Region-Aware deep Model (RAM) [3] divides the global feature map evenly into three horizontal regions to obtain local features. He et al. [4] detected specific parts, including lights, brands, and windows, and combined these local features with global features to improve performance. Lou et al. [5] designed a distance adversarial scheme that generates similar hard negative samples to strengthen discriminative capability. However, these methods neglect the influence of intra-class variance and therefore cannot learn a compact feature embedding space. In addition, detecting pre-defined local regions requires additional training.

Several works [6,7,8,9,10,11] address the intra-class variance problem of vehicle re-identification by predicting key points or viewpoints. Key points can be passed as input to the feature extraction network [9], used directly as discriminative regions to aggregate orientation-invariant features [6], or trained with ID supervision to distinguish similar vehicles [10]. Although they yield local discriminative features, key points require extra labels and are only partially visible from any given viewpoint. Viewpoints are used in two ways: for feature learning [7,8] and for metric learning [11]. For feature learning, inferring multi-view features with an attention model [8] and learning transformations of vehicle images between different viewpoints [7] have been proposed. For metric learning, Chu et al. [11] adopted different metrics to evaluate the similarity of vehicle images depending on whether their viewpoints are similar. Again, these methods need additional labeling and prediction processes. Besides these methods, Bai et al. [12] performed online grouping to cluster similar viewpoints and optimize the distance metric. However, this network has a complicated training process.

In contrast to the above approaches, this paper proposes a multi-proxy constraint loss (MPCL) function that deals with both the intra-class variance and the inter-class similarity problems. We introduce a novel sampling strategy that considers viewpoints and helps mine samples corresponding to different proxies in a mini-batch. The multi-proxy constraint loss function learns multiple proxies per class end to end without additional clustering and imposes different constraints based on similarity, effectively achieving differentiated intra-class representations and a larger inter-class margin. We evaluate our approach on two large-scale vehicle Re-ID datasets, VeRi [13] and VehicleID [14], and compare it with other state-of-the-art vehicle Re-ID methods. Experimental results show that our approach outperforms multiple state-of-the-art vehicle Re-ID methods. Our major contributions are summarized as follows:

- (1) We propose a novel sampling strategy that considers different viewpoints by selecting samples captured by different cameras. This strategy helps sample images corresponding to different proxies in a mini-batch and also helps mine hard positive and negative sample pairs.

- (2) We implement a multi-proxy constraint loss function that learns multiple intra-class proxies and constrains the distance to the hardest positive proxy to be smaller than the distance to the hardest negative proxy. The feature embedding space supervised by this loss function is more compact within classes and has larger inter-class distances.

- (3) Our approach can be seamlessly plugged into existing methods to improve performance with little effort. We conduct extensive experiments on two large-scale vehicle Re-ID datasets and achieve promising results.

2. Related Works

Research on vehicle Re-ID can be divided into two categories: view-independent methods and multi-view methods. View-independent methods concentrate on obtaining more robust features by aggregating multiple attributes or partial features. Cui et al. [15] fused the classification features of color, vehicle model, and marks pasted on the windshield into the final vehicle descriptor. Some studies used a variety of attributes to identify vehicles from coarse to fine, such as Progressive Vehicle Re-identification (PROVID) [13] and RNN-based Hierarchical Attention (RNN-HA) [16]. These coarse-to-fine approaches require multiple recognition stages and cannot be trained end to end. Because the differences between similar vehicles are mainly located in local regions, some works extract partial features to improve discriminative ability. RAM [3] adopted horizontal segmentation to obtain local features. He et al. [4] introduced a detection branch to detect windows, lights, and brands, and then combined these partial features with global features to help identify subtle discrepancies. However, these local-feature methods increase network complexity and are usually difficult to train. In addition, the pre-defined regions cannot be detected in images captured from some views.

Taking into account the variance between different views of the same vehicle, some studies focus on generating multi-view features of the vehicle. Wang et al. [6] extracted features at 20 selected key points to aggregate an orientation-invariant feature. The Viewpoint-aware Attentive Multi-view Inference (VAMI) [8] model selects core regions of different viewpoints with an attention model and then infers multi-view features through adversarial training. Zhou et al. [7] trained the network with spatially concatenated multi-view images to transform a single-view image into multi-view features; they also proposed bi-directional Long Short-Term Memory (LSTM) units to learn successive transforms between adjacent views. Besides generating multi-view features, Chu et al. [11] learned two metrics, one for similar viewpoints and one for different viewpoints, and used the corresponding metric to evaluate the similarity of two images depending on whether their viewpoints are similar. Tang et al. [9] inferred vehicle pose and shape using synthetic datasets and passed this information to the attribute and feature learning network. Khorramshahi et al. [10] added a path that detects vehicle key points, using the orientation as a conditional factor, and extracted local features to distinguish similar vehicles. However, these multi-view approaches require additional key point or viewpoint labels and complex training processes.

Metric learning methods impose distance constraints between different classes directly and generally achieve good performance in face recognition [17,18,19,20,21,22,23] and person re-identification [24,25,26,27]. Therefore, some researchers also use metric learning to improve vehicle Re-ID performance. Liu et al. [14] used the cluster center instead of a randomly selected anchor sample to address the sensitivity of the triplet loss to anchor selection. Bai et al. [12] divided images of the same vehicle into different groups to characterize intra-class variance and adopted an offline strategy to generate the centers of each class and of each group within the class. However, these center-clustering methods require additional computational steps. Chen et al. [28] designed distance-based classification to keep the classification consistent with the criterion used for similarity evaluation, but it does not address intra-class variance.

3. The Proposed Method

The proposed method takes into account the impact of different viewpoints on vehicle appearance and uses a viewpoint-based sampling strategy to better mine samples corresponding to different proxies. The feature embedding space is then optimized through multi-proxy constraint classification.

3.1. Sampling Strategy Considering Viewpoints

The appearance of the same vehicle captured by different cameras varies greatly. To address this problem, we design a novel sampling strategy that considers multiple viewpoints. In vehicle Re-ID, samples with large appearance variance usually have different viewpoints. Therefore, in each sampling round we select images with different viewpoints for every identity so that the network can learn intra-class variance.

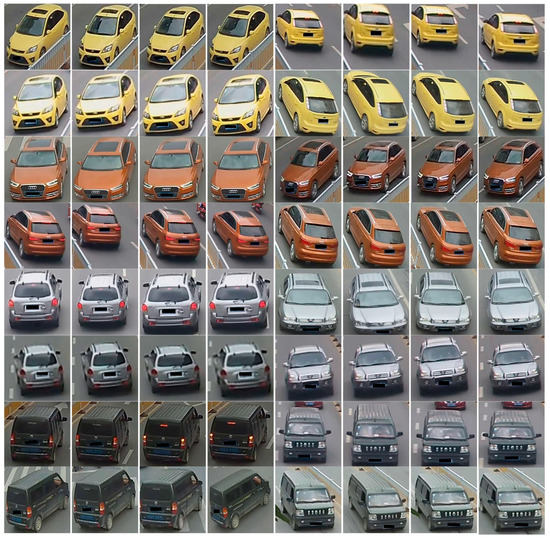

In a mini-batch, we randomly sample P vehicle identities and then randomly select V cameras for each identity. Vehicle images are then randomly sampled K times under the restriction of both the identity and the camera. This strategy results in a mini-batch of P × V × K images, as shown in Figure 1.

Figure 1.

An example mini-batch drawn with the sampling strategy considering viewpoints, with P, K, and V all set to 4. Images shot by the same camera are similar, whereas images from different viewpoints show the intra-class variance.

Normally, an epoch is completed once all vehicle identities have been sampled. However, vehicle images are not evenly distributed: some identities have many more images than others. If every identity is sampled the same number of times, many images go unused in an epoch. Therefore, we perform N iterations in an epoch, and each iteration samples all the vehicle identities according to the above sampling strategy.
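The sampling procedure above can be sketched in plain Python as follows. This is a minimal sketch, assuming each training image is given as an `(image_id, vehicle_id, camera_id)` tuple; the function name `viewpoint_aware_batches` is hypothetical. The text does not say what happens when an identity has fewer than V cameras or a camera has fewer than K images, or how leftover identities are handled when their number is not a multiple of P; this sketch assumes sampling with replacement in the first two cases and drops the leftover identities.

```python
import random
from collections import defaultdict


def viewpoint_aware_batches(samples, P=4, V=4, K=4, N=1, seed=0):
    """Build mini-batches of P identities x V cameras x K images.

    samples: iterable of (image_id, vehicle_id, camera_id) tuples.
    N: number of passes over all identities in one epoch.
    """
    rng = random.Random(seed)
    # Group images first by vehicle identity, then by camera (viewpoint proxy).
    index = defaultdict(lambda: defaultdict(list))
    for image_id, vehicle_id, camera_id in samples:
        index[vehicle_id][camera_id].append(image_id)

    def pick(pool, count):
        # Without replacement when possible, otherwise with replacement (assumption).
        if len(pool) >= count:
            return rng.sample(pool, count)
        return [rng.choice(pool) for _ in range(count)]

    batches = []
    for _ in range(N):
        ids = list(index)
        rng.shuffle(ids)
        # Identities that do not fill a complete group of P are dropped (assumption).
        for start in range(0, len(ids) - P + 1, P):
            batch = []
            for vehicle_id in ids[start:start + P]:
                for camera_id in pick(list(index[vehicle_id]), V):
                    batch.extend(pick(index[vehicle_id][camera_id], K))
            batches.append(batch)
    return batches
```

With P = V = K = 4, every mini-batch holds 64 images, and N controls how many times all identities are visited per epoch.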

3.2. Multi-Proxy Constraint Loss

Considering the intra-class variance and inter-class similarity, we design a multi-proxy constraint loss function that learns multiple proxies per class end to end. A proxy is a center vector of a class. Unlike the usual practice of representing a class with a single center vector, we represent each class with multiple center vectors. Instead of using extra clustering [12], we adopt a fully connected (FC) layer to learn the multiple proxies of each class. The weight vectors of this FC layer serve as the proxies of all classes, so the size of the layer is determined by the number of classes and the number of proxies per class. The weight matrix is expressed as $W = [w_1, w_2, \dots, w_{cm}] \in \mathbb{R}^{d \times cm}$, where there are $c$ classes in total, each class has $m$ proxies, and $d$ is the feature dimension. We compute the cosine similarity between the feature $f_i$ of the $i$-th image and each weight vector as follows:

$$\cos\theta_{i,k} = \frac{w_k^{\top} f_i}{\lVert w_k \rVert \, \lVert f_i \rVert}, \quad k = 1, \dots, cm.$$

In this way, the cosine similarity of each feature has $cm$ entries, but the labels used for supervision cover only $c$ classes. To make every $m$ adjacent weight vectors represent the proxies of the same class, the minimum over the proxies of the ground-truth class $y_i$ is used as the similarity between $f_i$ and class $y_i$, and the maximum over the proxies of every other class $j \neq y_i$ is taken as the similarity between $f_i$ and class $j$:

$$s_{i,y_i} = \min_{k=1,\dots,m} \cos\theta_{i,(y_i-1)m+k}, \qquad s_{i,j} = \max_{k=1,\dots,m} \cos\theta_{i,(j-1)m+k}, \quad j \neq y_i.$$

We then obtain the prediction probability by normalizing these class similarities with the SoftMax function. The loss function for a mini-batch of $B$ images is computed as:

$$L_{MPCL} = -\frac{1}{B}\sum_{i=1}^{B} \log \frac{\exp(s_{i,y_i})}{\sum_{j=1}^{c} \exp(s_{i,j})}.$$
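A minimal, dependency-free Python sketch of the multi-proxy constraint loss as described in this section. It assumes the c·m proxies are stored as a flat list in which the m adjacent vectors of class j (0-indexed) occupy positions j·m to j·m + m − 1. The `scale` argument is an assumption not stated in the text (normalized-softmax losses often multiply cosine similarities by a scale factor); with `scale=1.0` the sketch follows the description literally. Function names are hypothetical, and in real training the proxies would be the weights of the FC layer, with gradients supplied by an autodiff framework.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def class_similarities(feature, proxies, label, c, m):
    """Collapse the c*m proxy similarities into c class similarities."""
    cos = [cosine(feature, w) for w in proxies]
    sims = []
    for j in range(c):
        group = cos[j * m:(j + 1) * m]  # the m adjacent proxies of class j
        # Hardest (least similar) own proxy, hardest (most similar) other-class proxy.
        sims.append(min(group) if j == label else max(group))
    return sims


def mpcl_loss(features, labels, proxies, c, m, scale=1.0):
    """Mean SoftMax cross-entropy over the collapsed class similarities."""
    total = 0.0
    for f, y in zip(features, labels):
        logits = [scale * s for s in class_similarities(f, proxies, y, c, m)]
        top = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum = top + math.log(sum(math.exp(z - top) for z in logits))
        total += log_sum - logits[y]
    return total / len(features)
```

Because the own class contributes its least similar proxy and every other class its most similar one, the loss is low only when even the furthest intra-class proxy is closer than the nearest inter-class proxy.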

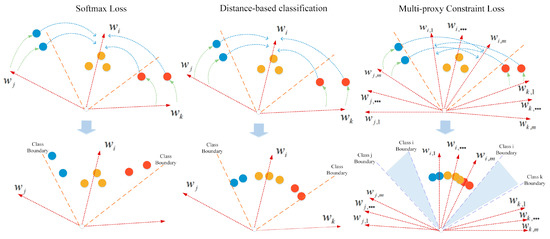

Compared with SoftMax loss and distance-based classification [28], the multi-proxy constraint loss follows a different optimization process, as illustrated in Figure 2. SoftMax loss aims to pull all positive samples within the boundaries of their class. Distance-based classification eliminates the effect of the feature vector length and makes the classification consistent with the final similarity evaluation criterion. The multi-proxy constraint loss goes one step further than distance-based classification by requiring the distance to the furthest intra-class proxy to be smaller than the distance to the closest inter-class proxy. Under the supervision of the multi-proxy constraint loss, the embedding space is more compact within classes and has larger inter-class distances.

Figure 2.

Comparison of the optimization processes supervised by SoftMax loss, distance-based classification, and multi-proxy constraint loss. Blue arcs indicate that samples are pulled closer to the weight vector of their class, and green arcs indicate that samples are pushed away from the weight vector of the neighboring class. The multi-proxy constraint loss generates a margin between adjacent classes.

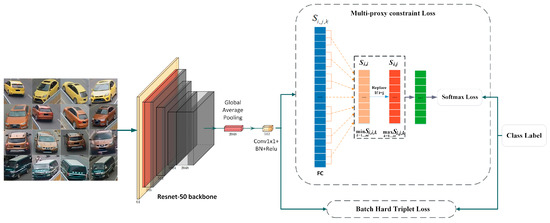

3.3. Network Architecture

As shown in Figure 3, we adopt a pre-trained network with minor modifications for the vehicle Re-ID task. ResNet-50 [29] is adopted as the backbone, as it has achieved competitive performance in several Re-ID works. The structure before the original global average pooling (GAP) layer is the same as the backbone, except that the downsampling stride of the res_conv5_1 block is changed from 2 to 1 to increase the output resolution.
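To illustrate what changing the res_conv5_1 stride does, the following Python sketch traces the spatial size through a torchvision-style ResNet-50 using the standard convolution output-size formula. The 256 × 256 input in the example is hypothetical and chosen only for illustration; the helper names are likewise hypothetical.

```python
def conv_out(n, kernel, stride, padding):
    # Output size of a convolution or pooling layer along one spatial axis.
    return (n + 2 * padding - kernel) // stride + 1


def backbone_output_shape(h, w, last_stride=1):
    """(channels, height, width) of a torchvision-style ResNet-50 before GAP."""
    dims = []
    for n in (h, w):
        n = conv_out(n, 7, 2, 3)            # conv1: 7x7 convolution, stride 2
        n = conv_out(n, 3, 2, 1)            # 3x3 max pooling, stride 2
        # res_conv2 (layer1) keeps the resolution
        n = conv_out(n, 3, 2, 1)            # res_conv3_1 (layer2): stride 2
        n = conv_out(n, 3, 2, 1)            # res_conv4_1 (layer3): stride 2
        n = conv_out(n, 3, last_stride, 1)  # res_conv5_1 (layer4): stride 2 originally, 1 here
        dims.append(n)
    return (2048, dims[0], dims[1])


print(backbone_output_shape(256, 256, last_stride=2))  # (2048, 8, 8)
print(backbone_output_shape(256, 256, last_stride=1))  # (2048, 16, 16)
```

With a last stride of 1, the final feature map has four times as many spatial positions, at the cost of more computation in the last stage; the reduction-dim block then maps the 2048 channels to 512.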

Figure 3.

The proposed network architecture. We add a block consisting of Conv1x1, BatchNorm, and Rectified Linear Unit (ReLU) layers to reduce the feature dimension from 2048 to 512. The multi-proxy constraint loss function and the batch hard triplet loss function are combined to learn the feature embedding space.

A reduction-dim block is added to force the network to learn discriminative features with fewer channels. The block consists of three layers: a 1×1 convolution layer, a batch normalization layer, and a ReLU layer, which together reduce the 2048-dim feature to a 512-dim feature.

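The multi-proxy constraint loss and the batch hard triplet loss [24] together constitute the final loss function. Based on our proposed sampling strategy, in which each of the $P$ identities in a mini-batch contributes $VK$ images, the batch hard triplet loss for a mini-batch is defined as follows:

$$L_{BH} = \sum_{i=1}^{P} \sum_{a=1}^{VK} \Big[ mg + \max_{n_1 = 1,\dots,VK} D\big(f(x_a^i), f(x_{n_1}^i)\big) - \min_{\substack{j = 1,\dots,P,\; j \neq i \\ n_2 = 1,\dots,VK}} D\big(f(x_a^i), f(x_{n_2}^j)\big) \Big]_+$$

where $mg$ is the margin, $f(\cdot)$ is the feature learned by the network, $x_a^i$ is the $a$-th (anchor) image of the $i$-th vehicle identity, $x_{n_1}^i$ and $x_{n_2}^j$ are the $n_1$-th image of the $i$-th identity and the $n_2$-th image of the $j$-th identity, $[\cdot]_+ = \max(\cdot, 0)$, and $D$ denotes the cosine distance. All input features are normalized.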
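A dependency-free Python sketch of the batch hard triplet loss as described here. It assumes the cosine distance takes the form 1 − cos(u, v) and sums the hinge terms over all anchors of the mini-batch. An anchor with no other positive in the batch gets a positive distance of zero, and an anchor with no negative is skipped; both are edge-case choices of this sketch. Function names are hypothetical.

```python
import math


def cosine_distance(u, v):
    # D(u, v) = 1 - cos(u, v) (assumed form); inputs need not be pre-normalized here.
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def batch_hard_triplet_loss(features, labels, mg=0.3):
    """Sum over anchors of [mg + hardest positive distance - hardest negative distance]_+."""
    total = 0.0
    for a, (f_a, y_a) in enumerate(zip(features, labels)):
        pos = [cosine_distance(f_a, f_b)
               for b, (f_b, y_b) in enumerate(zip(features, labels))
               if y_b == y_a and b != a]
        neg = [cosine_distance(f_a, f_b)
               for f_b, y_b in zip(features, labels) if y_b != y_a]
        if not neg:
            continue  # no negative in the batch for this anchor
        hardest_pos = max(pos, default=0.0)  # furthest image of the same identity
        hardest_neg = min(neg)               # closest image of another identity
        total += max(0.0, mg + hardest_pos - hardest_neg)
    return total
```

With the viewpoint-aware sampler, the hardest positive is typically an image of the same vehicle from another camera, which is what makes these pairs hard.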

The overall loss function is formulated as follows:

$$L = \lambda_1 L_{MPCL} + \lambda_2 L_{BH}$$

where $L_{MPCL}$ and $L_{BH}$ are the multi-proxy constraint loss and the batch hard triplet loss, and $\lambda_1$, $\lambda_2$ denote their weights. For simplicity, we set both weights to one. Because the batch hard triplet loss imposes a strong distance constraint between different vehicle identities, the multi-proxy constraint loss converges quickly. At test time, the 512-dim feature before the classification layer is used as the final descriptor of the image.

4. Experiments

In this section, we evaluate our proposed approach on two large-scale vehicle Re-ID datasets and investigate the effectiveness of the multi-proxy constraint loss function as well as the influence of the number of proxies and of the sampling strategy.

4.1. Implementation Details

Our network is implemented in the PyTorch framework. The backbone network, ResNet-50, is pre-trained on ImageNet. The input images are resized to . During training, we apply random horizontal flipping and random erasing to the training images for data augmentation. The sampling parameters P, K, and V are all set to 4, so the mini-batch size is 64. The number of iterations N per epoch is set to 10 for the VeRi-776 [30] dataset, since each vehicle in this dataset has multiple images captured by the same camera, while N is set to 1 for the VehicleID [14] dataset, since the vast majority of its vehicle identities have fewer than 16 images. The proxy number m is set to 8 and 2 for the VeRi-776 and VehicleID datasets, respectively, because images in VeRi-776 were taken from many different viewpoints, whereas images in VehicleID show only front and back views. In the batch hard triplet loss, the margin mg is set to 0.3. We adopt the SGD optimizer with a momentum of 0.9. A warm-up strategy [27] is used to help the network initialize better before a large learning rate is applied. The initial learning rate is , and the learning rate increases linearly to within 10 epochs. The learning rate at epoch 10 is set to , then decreased to at epoch 60 respectively. The total number of epochs in all experiments is 100. During evaluation, we average the features of the original image and its horizontally flipped version to obtain the final feature, a common practice for obtaining more robust features in person Re-ID.
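The warm-up schedule described above can be sketched as a function of the 0-indexed epoch. Because the concrete learning-rate values are not given in this section, they are left as parameters, and the values in the example are hypothetical. The sketch also assumes a single step decay at epoch 60 and that the rate set at epoch 10 equals the warm-up target; `warmup_lr` is a hypothetical name.

```python
def warmup_lr(epoch, lr_start, lr_peak, lr_decayed, warmup_epochs=10, decay_epoch=60):
    """Learning rate for a 0-indexed epoch: linear warm-up, then one step decay."""
    if epoch < warmup_epochs:
        # Linear interpolation from lr_start (epoch 0) towards lr_peak (epoch warmup_epochs).
        return lr_start + (lr_peak - lr_start) * epoch / warmup_epochs
    if epoch < decay_epoch:
        return lr_peak  # assumed to equal the rate set at epoch 10
    return lr_decayed


# Hypothetical values, for illustration only; 100 epochs in total.
schedule = [warmup_lr(e, lr_start=0.0, lr_peak=1.0, lr_decayed=0.1) for e in range(100)]
print(schedule[5], schedule[10], schedule[60])  # 0.5 1.0 0.1
```

In PyTorch, the same shape can be expressed with a `LambdaLR` scheduler that returns these values divided by the optimizer's base learning rate.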

4.2. Datasets and Evaluation Metrics

We evaluate our proposed approach on two large-scale vehicle Re-ID datasets, VeRi-776 [30] and VehicleID [14]. The details of these two datasets are as follows:

VeRi-776 is a dataset of multi-view vehicle images. It contains 776 vehicle identities captured by 20 cameras in a real-world traffic surveillance environment. In addition to vehicle IDs and camera IDs, colors, types, and spatio-temporal information are provided. 576 vehicles are used for training and the remaining 200 vehicles for testing, and 1678 images of the test vehicles are selected as query images.

Compared to VeRi-776, the images in the VehicleID dataset show only front and rear viewpoints. 110,178 images of 13,134 vehicles are used for training, and 111,585 images of 13,133 vehicles for testing. Three test subsets of different scales are defined, containing 800, 1600, and 2400 vehicles, respectively.

The mean average precision (mAP) and the cumulative match characteristic (CMC) are adopted to evaluate performance, following previous work. The CMC curve shows the probability that the query identity appears in search lists of different sizes. The CMC at Top-k is defined as:

$$\mathrm{CMC@}k = \frac{1}{N_q}\sum_{q=1}^{N_q} gt(q, k)$$

where $N_q$ is the number of queries and $gt(q, k)$ equals one if the ground truth of query $q$ appears in the Top-k of the ranked list, and zero otherwise. This CMC definition requires that each query has exactly one ground-truth image.

The mAP metric evaluates the accuracy of the overall predictions. The average precision $AP(q)$ of query image $q$ is calculated as:

$$AP(q) = \frac{\sum_{k=1}^{n} P(k) \times gt(k)}{N_{gt}}$$

where $n$ and $N_{gt}$ are the numbers of retrieved vehicles and true retrievals for $q$, respectively, $P(k)$ is the precision at a cut-off of $k$ images, and $gt(k)$ indicates whether the $k$-th retrieved image is correct. The mAP is calculated as:

$$mAP = \frac{1}{N_q}\sum_{q=1}^{N_q} AP(q)$$

where $N_q$ is the total number of queries.
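Both metrics can be computed from per-query ranked match lists. In this minimal Python sketch, each query is represented by a list of booleans in which entry k − 1 indicates whether the k-th ranked gallery image is a correct match; the function names are hypothetical, and any dataset-specific filtering of the gallery is omitted. The CMC function counts a query as a hit at k if any correct match is in the top k, which reduces to the definition above when each query has a single ground truth.

```python
def average_precision(matches):
    """AP for one query; matches[k] is True if the (k+1)-th ranked image is correct."""
    n_gt = sum(matches)  # number of true retrievals in the ranked list
    if n_gt == 0:
        return 0.0
    hits, total = 0, 0.0
    for k, correct in enumerate(matches, start=1):
        if correct:
            hits += 1
            total += hits / k  # precision at cut-off k, counted only where gt(k) = 1
    return total / n_gt


def cmc_at_k(all_matches, k):
    """Fraction of queries whose top-k list contains a correct match."""
    return sum(any(m[:k]) for m in all_matches) / len(all_matches)


def mean_average_precision(all_matches):
    return sum(average_precision(m) for m in all_matches) / len(all_matches)


ranked = [[True, False, True], [False, True, False]]
print(cmc_at_k(ranked, 1), cmc_at_k(ranked, 2))  # 0.5 1.0
print(mean_average_precision(ranked))            # (5/6 + 1/2) / 2, about 0.667
```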

4.3. Comparisons to the State-of-the-Art

We compare our proposed approach with state-of-the-art vehicle Re-ID methods on the two above-mentioned datasets.

4.3.1. Performance Comparisons on VeRi-776 Dataset

Recent works on vehicle Re-ID can be divided into viewpoint-independent and viewpoint-dependent methods. Viewpoint-independent methods such as PROVID [13], RNN-HA [16], RAM [3], and the Multi-Region Model (MRM) [31] mainly focus on learning robust global and local features, or on learning distance metrics (Batch Sample (BS) [32] and Feature Distance Adversarial Network (FDA-Net) [5]). Viewpoint-dependent methods are dedicated to learning orientation-invariant features (Orientation Invariant Feature Embedding (OIFE) and OIFE + ST [6]) or multi-view features (VAMI and VAMI + ST [8]). We group the compared methods according to whether they use viewpoint information.

Table 1 shows the results on the VeRi-776 dataset. This dataset provides camera IDs and shooting times, so we use the camera ID to distinguish viewpoints when sampling. In addition, some methods use spatio-temporal information to improve vehicle Re-ID performance, for example, OIFE [6], VAMI [8], and the Pose Guide Spatiotemporal model (PGST) [33]. The annotations used by these methods are also listed. Our proposed method outperforms all of these methods, even though some of them use extra attributes or non-visual cues. Compared with other methods that use viewpoints, such as VAMI [8], we simply use camera IDs to distinguish views without additional annotation, yet MPCL exceeds VAMI by 17.33% mAP. Although Pose-Aware Multi-Task Re-Identification (PAMTRI) [9] adopts a more complex backbone, DenseNet201, and trains with both real and synthetic data, our approach outperforms PAMTRI by 6.77% mAP, 3.45% Rank-1, and 1.36% Rank-5.

Table 1.

Comparison of mAP (%) and match rate (CMC) at Top-k (%) on VeRi-776 dataset.

4.3.2. Performance Comparisons on VehicleID Dataset

Following the evaluation protocol proposed by Liu et al. [14], we report results on three test subsets of different sizes (small, medium, and large, with 800, 1600, and 2400 vehicles, respectively), as shown in Table 2. Because VehicleID provides no camera IDs, we randomly select 16 images for each identity and keep the rest of the setup unchanged. The multi-proxy constraint loss function achieves the best Rank-1 and Rank-5 performance on all three test subsets compared with other state-of-the-art methods. Even without viewpoint information, our loss function yields a better feature embedding by learning multiple intra-class proxies.

Table 2.

Results of Top-k metric (%) on VehicleID dataset.

4.4. Ablation Analysis

We conduct ablation studies, mainly on the VeRi-776 dataset, to verify the effectiveness of the sampling strategy and the multi-proxy constraint loss function and to examine the influence of the number of proxies.

4.4.1. The Validation of Multi-Proxy Constraint Loss

The multi-proxy constraint loss is a multi-center classification loss based on cosine distance and SoftMax, so we compare its retrieval performance with that obtained under the supervision of SoftMax loss and of distance-based classification. As shown in Table 3, the multi-proxy constraint loss function outperforms both. All three methods are trained under the joint supervision of the batch hard triplet loss, with the same network and learning parameters. The multi-proxy constraint loss beats distance-based classification by 1.34% mAP on VeRi-776 and surpasses both distance-based classification and SoftMax loss on all three test subsets of VehicleID. This indicates that the multi-proxy constraint loss function effectively pulls features of the same class closer together and pushes features of different classes apart.

Table 3.

Performance comparison on the VeRi-776 and VehicleID datasets.

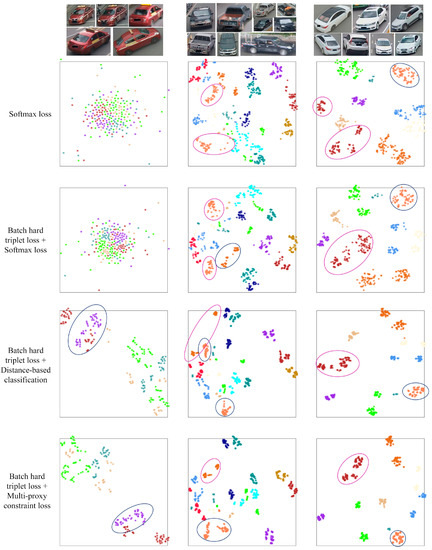

The feature distribution on the VeRi-776 test set, visualized by t-SNE, is shown in Figure 4. We compare three groups in the feature embedding space, each consisting of visually similar samples. Compared with the distribution supervised by distance-based classification, the feature embedding space learned with the multi-proxy constraint loss has larger inter-class margins and smaller intra-class distances. Under the supervision of the multi-proxy constraint loss, the purple class in the first group is clearly more compact and lies farther from the dark red class. In the second group, the intra-class distance of the light red class is greatly reduced under the multi-proxy constraint loss, whereas the same class learned by distance-based classification is split apart by other classes. All classes learned with the multi-proxy constraint loss are better separated from each other.

Figure 4.

Visualization of the feature distribution by t-SNE on the VeRi-776 test set. Different colors represent different vehicles. We randomly select three sets of similar images for comparison. The last two rows show, from top to bottom, the results of distance-based classification and multi-proxy constraint loss. Compared with distance-based classification, the multi-proxy constraint loss produces a more compact feature embedding space.

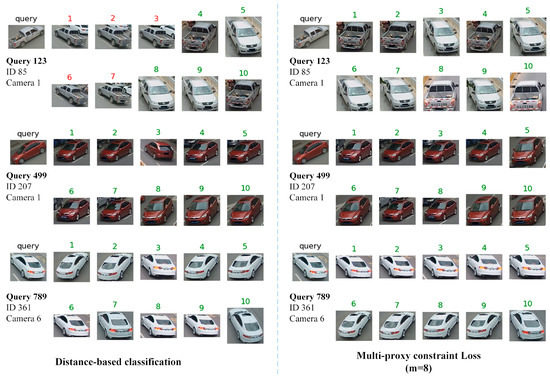

Figure 5 visualizes the Top-10 retrieval results of distance-based classification and multi-proxy constraint loss for three query images. For query 123, all of the top 10 images retrieved with the multi-proxy constraint loss are positive, whereas in the list obtained by distance-based classification, similar-looking negative images are ranked higher. The rank lists also show that, under the supervision of the multi-proxy constraint loss, the features of images with similar viewpoints cluster together, while the features learned by distance-based classification show no such pattern. Although the top 10 images retrieved for IDs 499 and 789 are all positive for both methods, the rankings produced by the multi-proxy constraint loss are more consistent with human judgment, i.e., images with similar viewpoints receive higher similarity. This also shows that the multi-proxy constraint loss effectively achieves intra-class clustering, which makes the learned feature representation better able to handle large intra-class variance.

Figure 5.

The Top-10 retrieval results on the VeRi-776 dataset. The left-most images labeled ‘query’ are the query images. The two columns show the results of distance-based classification and multi-proxy constraint loss (m = 8). Ground-truth matches are labeled with green numbers, and wrong matches with red numbers.

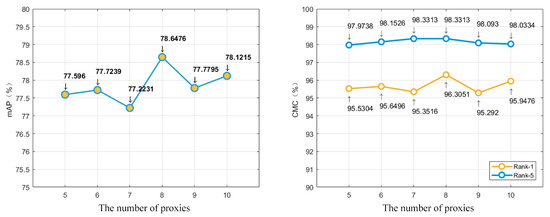

4.4.2. The Influence of the Number of Proxies

The only hyper-parameter introduced by the multi-proxy constraint loss function is m, the number of proxies per class, so determining the number of proxies is a key step. Too few proxies may lead to poor intra-class clustering. However, the larger m is, the more parameters the fully connected layer holding the proxies of all classes has, which makes the network more difficult to converge. To verify the effect of the number of proxies, we train the proposed method with different values of m and compare the evaluation results on the VeRi-776 dataset.
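Because the proxies are the columns of the FC weight matrix, its size grows linearly with m. A quick Python check of the weight count d · c · m, assuming d = 512 (the descriptor size used in this paper), no bias term, and the training identities of each dataset (576 for VeRi-776 and 13,134 for VehicleID); `proxy_layer_params` is a hypothetical helper.

```python
def proxy_layer_params(feature_dim, num_classes, num_proxies):
    # W has feature_dim rows and num_classes * num_proxies columns; no bias term assumed.
    return feature_dim * num_classes * num_proxies


for m in (1, 2, 4, 8):
    print(m, proxy_layer_params(512, 576, m))  # VeRi-776: 576 training identities
print(proxy_layer_params(512, 13134, 2))       # VehicleID: 13,134 training identities, m = 2
```

For VeRi-776 this gives 294,912 weights with a single proxy and 2,359,296 with m = 8, while VehicleID with m = 2 already needs 13,449,216 weights because of its much larger number of identities.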

As shown in Figure 6, the best Rank-1, Rank-5, and mAP scores are obtained with m = 8. We infer that 8 proxies allow better intra-class clustering, possibly because the shooting angles of the same vehicle can be roughly divided into 8 directions: front, left front, left, left rear, rear, right rear, right, and right front. In addition, networks with an even number of proxies perform better than those with the adjacent odd numbers of proxies.

Figure 6.

Comparison of different numbers of proxies on the VeRi-776 dataset. The left chart shows the mAP scores, and the right chart shows the Rank-1 and Rank-5 scores.

4.4.3. The Influence of Sampling Strategy

To verify whether our proposed sampling strategy helps learn multiple intra-class proxies, we compare the performance of two sampling strategies, as shown in Table 4. Both strategies sample 4 vehicle identities per mini-batch with 16 images each. The only difference is whether camera information is taken into account when sampling images for each vehicle. The sampling strategy considering viewpoints achieves better performance. This indicates that selecting samples from different viewpoints effectively enriches sample diversity in a mini-batch and helps the network learn multiple intra-class proxies. Moreover, sampling with viewpoints helps mine hard positive and negative sample pairs in a mini-batch.

Table 4.

Performance of different sampling strategies on the VeRi-776 dataset. The best performance is shown in bold.

5. Conclusions

In this paper, we propose a sampling strategy considering viewpoints and a multi-proxy constraint loss function that together deal with the intra-class variance and inter-class similarity problems in vehicle Re-ID. The sampling strategy helps the network learn multiple intra-class proxies. With this sampling strategy, the multi-proxy constraint loss function uses the hardest positive and negative proxies to impose stronger constraints on samples, leading to large inter-class margins and small intra-class distances. Experiments on two large-scale vehicle datasets demonstrate the superiority of our method. In particular, our approach achieves state-of-the-art performance on the VeRi-776 dataset, with 78.65% mAP, 96.31% Rank-1, and 98.33% Rank-5. In addition, the proposed multi-proxy constraint loss function is applicable to other classification tasks and is easy to plug into other frameworks to improve performance.

However, our method neglects the relationships between samples of the same identity captured from different viewpoints, which could help identify vehicles accurately under large appearance variance. In future work, we will consider using graph convolutional neural networks to learn the relationships between samples and obtain more robust features.

Author Contributions

All authors made significant contributions to this work. X.C. designed and performed the experiments; C.W. and M.Z. helped with the experiments; X.C. prepared the original draft; J.F. and H.S. reviewed and edited the paper. All authors have read and approved the final manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant No. 41771457 and by the China Postdoctoral Science Foundation (No. 2018M642875).

Acknowledgments

The authors thank the providers of VeRi-776 and VehicleID datasets.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jain, V.; Sasindran, Z.; Rajagopal, A.; Biswas, S.; Bharadwaj, H.S.; Ramakrishnan, K.R. Deep automatic license plate recognition system. In Proceedings of the Tenth Indian Conference on Computer Vision, Graphics and Image Processing, Guwahati, Assam, India, 18–22 December 2016; pp. 1–8.

- Spanhel, J.; Sochor, J.; Juranek, R.; Herout, A.; Marsik, L.; Zemcik, P. Holistic recognition of low quality license plates by CNN using track annotated data. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017.

- Liu, X.; Zhang, S.; Huang, Q.; Gao, W. RAM: A Region-Aware Deep Model for Vehicle Re-Identification. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018.

- He, B.; Li, J.; Zhao, Y.; Tian, Y. Part-regularized Near-duplicate Vehicle Re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; Volume 1, pp. 3997–4005.

- Lou, Y.; Bai, Y.; Liu, J.; Wang, S. VERI-Wild: A Large Dataset and a New Method for Vehicle Re-Identification in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3235–3243.

- Wang, Z.; Tang, L.; Liu, X.; Yao, Z.; Yi, S.; Shao, J.; Yan, J.; Wang, S.; Li, H.; Wang, X. Orientation Invariant Feature Embedding and Spatial Temporal Regularization for Vehicle Re-identification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 379–387.

- Zhou, Y.; Liu, L.; Shao, L. Vehicle Re-Identification by Deep Hidden Multi-View Inference. IEEE Trans. Image Process. 2018, 27, 3275–3287.

- Zhou, Y.; Shao, L. Viewpoint-aware Attentive Multi-view Inference for Vehicle Re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 6489–6498.

- Tang, Z.; Naphade, M.; Birchfield, S.; Tremblay, J.; Hodge, W.; Kumar, R.; Wang, S.; Yang, X. PAMTRI: Pose-aware multi-task learning for vehicle re-identification using highly randomized synthetic data. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 211–220.

- Khorramshahi, P.; Kumar, A.; Peri, N.; Rambhatla, S.S.; Chen, J.; Chellappa, R. A Dual-Path Model With Adaptive Attention For Vehicle Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019.

- Chu, R.; Sun, Y.; Li, Y.; Liu, Z.; Zhang, C.; Wei, Y. Vehicle Re-identification with Viewpoint-aware Metric Learning. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019.

- Bai, Y.; Lou, Y.; Gao, F.; Wang, S.; Wu, Y.; Duan, L. Group Sensitive Triplet Embedding for Vehicle Re-identification. IEEE Trans. Multimed. 2018, 20, 2385–2399.

- Liu, X.; Liu, W.; Mei, T.; Ma, H. PROVID: Progressive and Multimodal Vehicle Reidentification for Large-Scale Urban Surveillance. IEEE Trans. Multimed. 2018, 20, 645–658.

- Liu, H.; Tian, Y.; Wang, Y.; Pang, L.; Huang, T. Deep Relative Distance Learning: Tell the Difference between Similar Vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2167–2175.

- Cui, C.; Sang, N.; Gao, C.; Zou, L. Vehicle re-identification by fusing multiple deep neural networks. In Proceedings of the 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), Montreal, QC, Canada, 28 November–1 December 2017.

- Wei, X.-S.; Zhang, C.-L.; Liu, L.; Shen, C.; Wu, J. Coarse-to-fine: A RNN-based hierarchical attention model for vehicle re-identification. arXiv 2018, arXiv:1812.04239.

- Liu, W.; Wen, Y.; Yu, Z.; Yang, M. Large-Margin Softmax Loss for Convolutional Neural Networks. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016.

- Ranjan, R.; Castillo, C.D.; Chellappa, R. L2-constrained Softmax Loss for Discriminative Face Verification. arXiv 2017, arXiv:1703.09507.

- Wang, F.; Xiang, X.; Cheng, J.; Yuille, A.L. NormFace: L2 Hypersphere Embedding for Face Verification. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017.

- Liu, W.; Wen, Y.; Yu, Z.; Li, M.; Raj, B.; Song, L. SphereFace: Deep hypersphere embedding for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6738–6746.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Wang, H.; Wang, Y.; Zhou, Z.; Ji, X.; Gong, D.; Zhou, J.; Li, Z.; Liu, W. CosFace: Large Margin Cosine Loss for Deep Face Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 5265–5274.

- Wang, F.; Cheng, J.; Liu, W.; Liu, H. Additive Margin Softmax for Face Verification. IEEE Signal Process. Lett. 2018, 25, 926–930.

- Hermans, A.; Beyer, L.; Leibe, B. In Defense of the Triplet Loss for Person Re-Identification. arXiv 2017, arXiv:1703.07737.

- Ye, M.; Liang, C.; Yu, Y.; Wang, Z.; Leng, Q.; Xiao, C.; Chen, J.; Hu, R. Person Reidentification via Ranking Aggregation of Similarity Pulling and Dissimilarity Pushing. IEEE Trans. Multimed. 2016, 18, 2553–2566.

- Cheng, D.; Gong, Y.; Zhou, S.; Wang, J.; Zheng, N. Person re-identification by multi-channel parts-based CNN with improved triplet loss function. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1335–1344.

- Fan, X.; Jiang, W.; Luo, H.; Fei, M. SphereReID: Deep hypersphere manifold embedding for person re-identification. J. Vis. Commun. Image Represent. 2019, 60, 51–58.

- Chen, X.; Sui, H.; Fang, J.; Feng, W.; Zhou, M. Vehicle Re-Identification Using Distance-Based Global and Partial Multi-Regional Feature Learning. IEEE Trans. Intell. Transp. Syst. 2020, 1–11.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Liu, X.; Liu, W.; Ma, H.; Fu, H. Large-scale vehicle re-identification in urban surveillance videos. In Proceedings of the IEEE International Conference on Multimedia and Expo, Seattle, WA, USA, 11–15 July 2016; pp. 1–6.

- Peng, J.; Wang, H.; Zhao, T.; Fu, X. Learning multi-region features for vehicle re-identification with context-based ranking method. Neurocomputing 2019, 359, 427–437.

- Kumar, R.; Weill, E.; Aghdasi, F.; Sriram, P. Vehicle Re-Identification: An Efficient Baseline Using Triplet Embedding. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019.

- Zhong, X.; Feng, M.; Huang, W.; Wang, Z.; Satoh, S. Poses Guide Spatiotemporal Model for Vehicle Re-identification. In MultiMedia Modeling; Springer International Publishing: Cham, Switzerland, 2019; pp. 426–439.

- Shen, Y.; Xiao, T.; Li, H.; Yi, S.; Wang, X. Learning Deep Neural Networks for Vehicle Re-ID with Visual-Spatio-Temporal Path Proposals. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1918–1927.

- Wu, C.; Liu, C.; Chiang, C.; Tu, W.; Chien, S. Vehicle Re-Identification with the Space-Time Prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Guo, H.; Zhao, C.; Liu, Z.; Wang, J.; Lu, H. Learning Coarse-to-Fine Structured Feature Embedding for Vehicle Re-Identification. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence; McIlraith, S.A., Weinberger, K.Q., Eds.; AAAI Press: Palo Alto, CA, USA, 2018; pp. 6853–6860.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).