1. Introduction

Human gait is an important indicator related to different markers of health (e.g., age-related diseases or early mortality). More specifically, gait speed has been denoted as the sixth vital sense because it is associated with daily function, late-life mobility, independence, falls, fear of falls, fractures, mental health, cognitive function, adverse clinical events, hospitalization, institutionalization, and survival [

1]. Accordingly, gait analysis is indispensable for the description of underlying gait patterns, especially in patients with neurological diseases such as Parkinson’s disease [

2]. Spatio-temporal parameters help to describe the gait pattern of patients quantitatively and objectively. For instance, reduced step length, prolonged double-stance phases, increased step width can often be found in Parkinsonian patients [

3]. An established gold standard for gait analysis are multi-camera motion capturing systems that track retro-reflective markers. Based on the marker positions in the individual camera images, their 3D positions can be reconstructed using triangulation. In general, motion capturing systems, such as the well-known Vicon system (Vicon Motion Systems, Oxford, UK), offer high accuracy [

4]. However, these camera systems are expensive and require a large setup in specialized gait analysis laboratories as well as a time-consuming marker placement on the participants. In order to be able to take measurements outside the laboratory, the Microsoft Kinect sensor has been identified as a portable and cost-effective device for gait assessment [

5].

The Kinect v1, launched by Microsoft in 2010, has an integrated RGB and an infrared (IR) camera, capable of tracking users in 3D. This sensor was designed as a gaming controller for the Xbox, allowing users to interact with games without the use of a controller device. For depth estimation, the Kinect v1 uses the principle of structured light, in which an IR projector sequentially illuminates the scene with a known and fixed dot pattern. By observing the dot pattern with an IR camera, the depth information can be estimated using triangulation techniques, where the baseline between camera and projector is known [

6]. The Artificial Intelligence (AI)-based skeleton tracking uses randomized decision forests trained on a large data set of labeled depth images [

7].

The Kinect v2 was launched in 2014, with improved hardware and skeleton tracking. The depth estimation method has changed to the time-of-flight (ToF) principle, in which the distance to an object is determined by the time it takes for the emitted light to reach the object and return to the sensor [

8]. The Kinect for Windows SDK 2.0 allows for tracking the 3D positions and orientations of 25 joints of six users simultaneously [

9]. However, Microsoft has stopped the production of the Kinect v2 [

10].

In 2019, Microsoft launched the Azure Kinect DK (Developer Kit). It can easily be integrated with Azure Cognitive Services, a library of various AI applications, such as speech and image analysis [

11]. In a 2019 review, Clark et al. [

12] were not able to determine whether the Azure Kinect could be used as a standalone device or only as part of a web platform. However, it turned out, this camera can still be used as a local peripheral device. The Azure Kinect camera also consists of an RGB camera and an IR camera, which utilizes the ToF principle, but offers significantly higher accuracy than other commercially available cameras [

13]. In addition, the Kinect camera has an integrated inertial measurement unit (IMU) and a 7-microphone array, extending the range of possible applications to other areas. Microsoft has also developed a new body tracking SDK for the Azure Kinect which is based on Deep Learning (DL) and Convolutional Neural Networks (CNN).

Notably, depth sensors other than the Microsoft Kinect are commercially available, such as the Intel Realsense, Stereolabs ZED, or Orbbec [

14]. By using either the manufacturer’s skeleton tracking software solutions or other available human pose estimation methods that also work on 2D RGB images, such as OpenPose [

15], DeepPose [

16], or VNect [

17], these depth cameras also have the potential to be used for healthcare applications. However, according to Clark et al. [

12], the accuracy of other depth cameras in comparison to the Kinect cameras has not yet been established.

Against this background, the present study investigates whether the Azure Kinect camera can be used for accurate gait assessment on a treadmill by comparing the collected data to a gold standard (Vicon 3D camera system). We also evaluate whether its improved hardware and DL-based skeleton tracking algorithm performs better than its predecessor, the Kinect v2. The structure of this paper is as follows:

Section 2 gives an overview of the related literature and presents applications in which the Kinect v1 and Kinect v2 cameras were evaluated with respect to clinical use cases.

Section 3 introduces the hardware used and the methods developed. The evaluation results of the human pose estimation performance and concurrent comparison between both Kinect cameras is presented in

Section 4 and concludes with a discussion in

Section 5.

2. Related Work

In recent years, Kinect v1 and Kinect v2 were extensively studied by the research community to see if these sensors can be used as alternative measurement devices for assessing human motion. Among these studies, several constellations and configurations were used, such as performing physical exercises at a static location, walking along a walkway, or walking on a treadmill. In addition, some studies used multiple Kinect sensors simultaneously and integrated the resulting data into a global coordinate system, resulting in more robust tracking and extended camera range [

18]. However, since only a single Kinect sensor was used in this study to evaluate the gait, the focus lies on related literature that studied gait with a single camera.

Wang et al. [

19] examined the differences in human pose assessment between Kinect v1 and Kinect v2 in relation to twelve different rehabilitation exercises. They also evaluated the quality changes when filming from three different viewing angles. Ultimately, they concluded that Kinect v2 has overall better accuracy in joint estimation and is also more robust to occlusion and body rotation than Kinect v1. The study by Galna et al. [

20] examined the accuracy of the Microsoft Kinect sensor for measuring movement in people with Parkinson’s disease. Movement tasks included quiet standing, multidirectional grasping and various tasks from the Unified Parkinson’s Disease Rating Scale such as hand gripping and finger tapping. These tasks were performed by nine Parkinsonian patients and 10 healthy controls. It was found that Kinect performs well for temporal parameters, but lacks in accuracy for smaller spatial exercises such as toe tapping.

Capecci et al. [

21] used the Kinect v2 sensor to evaluate rehabilitation exercises for lower back pain, such as lifting the arms and performing a squat. Angles and joint positions were defined as clinically relevant features and the deviation was analyzed with respect to a reference motion capture system. It was concluded that the Kinect v2 captured the timing of exercises well and provided comparable results for joint angles. Overground walking recorded with Kinect v2 was evaluated by Mentiplay et al. [

22] by studying spatio-temporal and kinematic walking conditions. There was excellent relative agreement on the measurement of walking speed, ground contact time, vertical displacement range of the center of the pelvis, foot swing speed as well as step time, length and width at both speeds, comfortable and fast. However, the relative agreement for kinematic parameters was described as being poor to moderate.

Xu et al. [

23] investigated whether the Kinect v2 sensor could be used to measure spatio-temporal gait parameters on the treadmill. Using 20 healthy volunteers, they validated gait parameters such as step time, step time, swing phase, stance phase, and double limb support time as well as kinematic angles for walking at three different speeds. Their results showed that gait parameters based solely on heel strikes had lower errors than those based on toe off events. This could be caused by the camera angle, i.e., test subjects were being filmed from the frontal plane, so that the corresponding joints were closer to the camera during heel strike. Macpherson et al. [

24] evaluated the tracking quality of a pointcloud-based Kinect system for measuring pelvic and trunk kinematics for treadmill walking. The three-dimensional linear and angular range of motion (ROM) of the pelvis and trunk was evaluated and compared with a 6-camera motion capturing system (Vicon). They obtained very large to perfect within subjects correlation coefficients (

r = 0.87–1.00) for almost all linear ROMs of the trunk and pelvis. However, the authors report less consistent correlations for the angular ROM, ranging from moderate to large.

3. Materials and Methods

This chapter introduces the hardware used and the methods developed in order to compare the human pose estimation performance between both Kinect cameras and the Vicon high resolution motion capturing system.

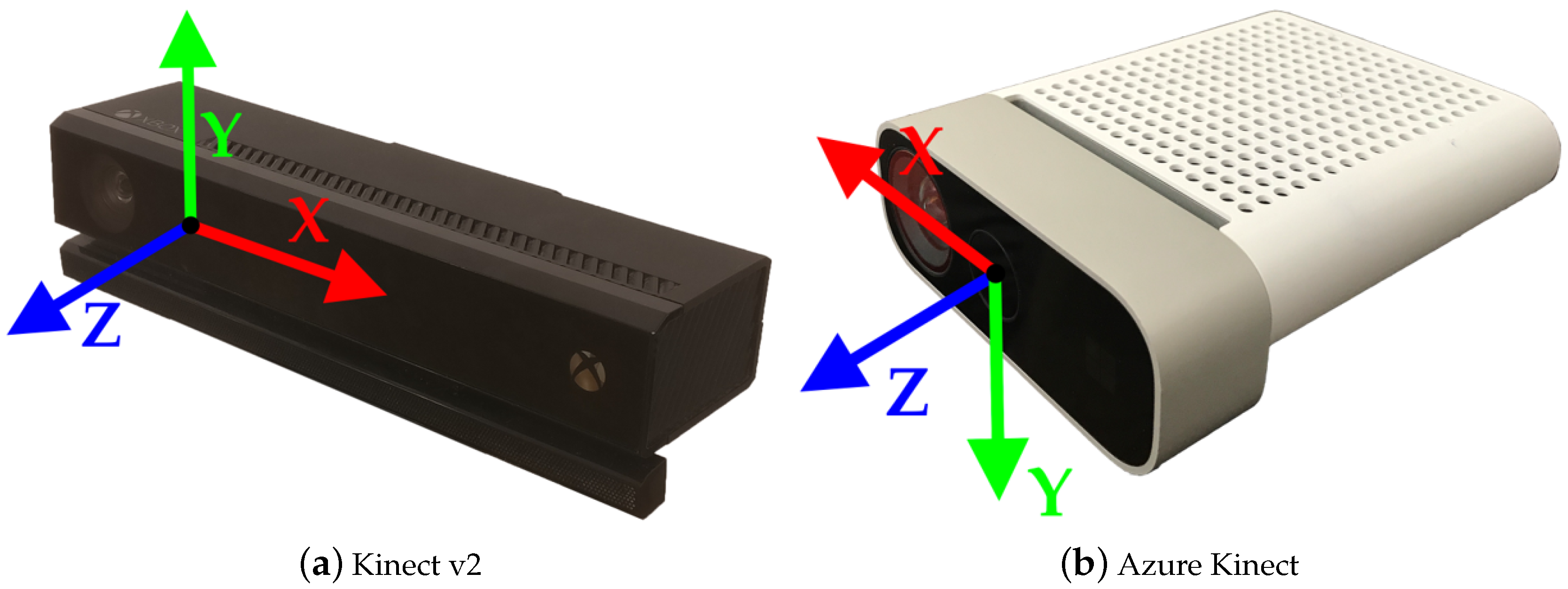

3.1. Microsoft Kinect v2

The Microsoft Kinect v2 camera consists of a RGB and an IR camera as well as three IR projectors. The sensor is utilizing the ToF principle for depth estimation. The RGB camera has a resolution of 1920 × 1080 px and the IR camera has a resolution of 512 × 424 px while both cameras deliver frames at 30 Hz. Data from the sensor can be accessed using the Kinect for Windows SDK 2.0, which allows for tracking up to 6 users simultaneously with 25 joints each. For each joint, the three-dimensional position is provided as well as the orientation as quaternion. The center of the IR camera lense represents the origin of the 3D coordinate system, in which the skeletons are also tracked, as shown in

Figure 1a.

Table 1 provides a more detailed overview of the technical specifications of the Kinect v2 compared to the Azure Kinect camera.

3.2. Microsoft Azure Kinect

The Azure Kinect camera also consists of a RGB camera and an IR camera. The color camera offers different resolutions, the highest resolution being 3840 × 2160 px at 30 Hz. The IR camera has a highest resolution of 1 MP, with 1024 × 1024 px. This Kinect sensor also utilizes the ToF principle. In addition, both the RGB and IR cameras support different fields of view. The Azure Kinect also has an IMU sensor, consisting of a triaxial accelerometer and a gyroscope, which can estimate its own position in space. For skeletal tracking, Microsoft offers a Body Tracking SDK, which is available for Windows and Linux and the programming languages C and C++. This SDK is able to track multiple users with 32 joints each. In contrast to the skeleton definition of the former Kinect generation, the current definition includes more joints in the face, such as ears and eyes. As its older generations, the SDK provides the joint orientations in quaternions representing local coordinate systems, as well as three-dimensional position data. The origin of the 3D coordinate system is also the center of the IR camera, but having some axes expand into opposite directions, as presented in

Figure 1b.

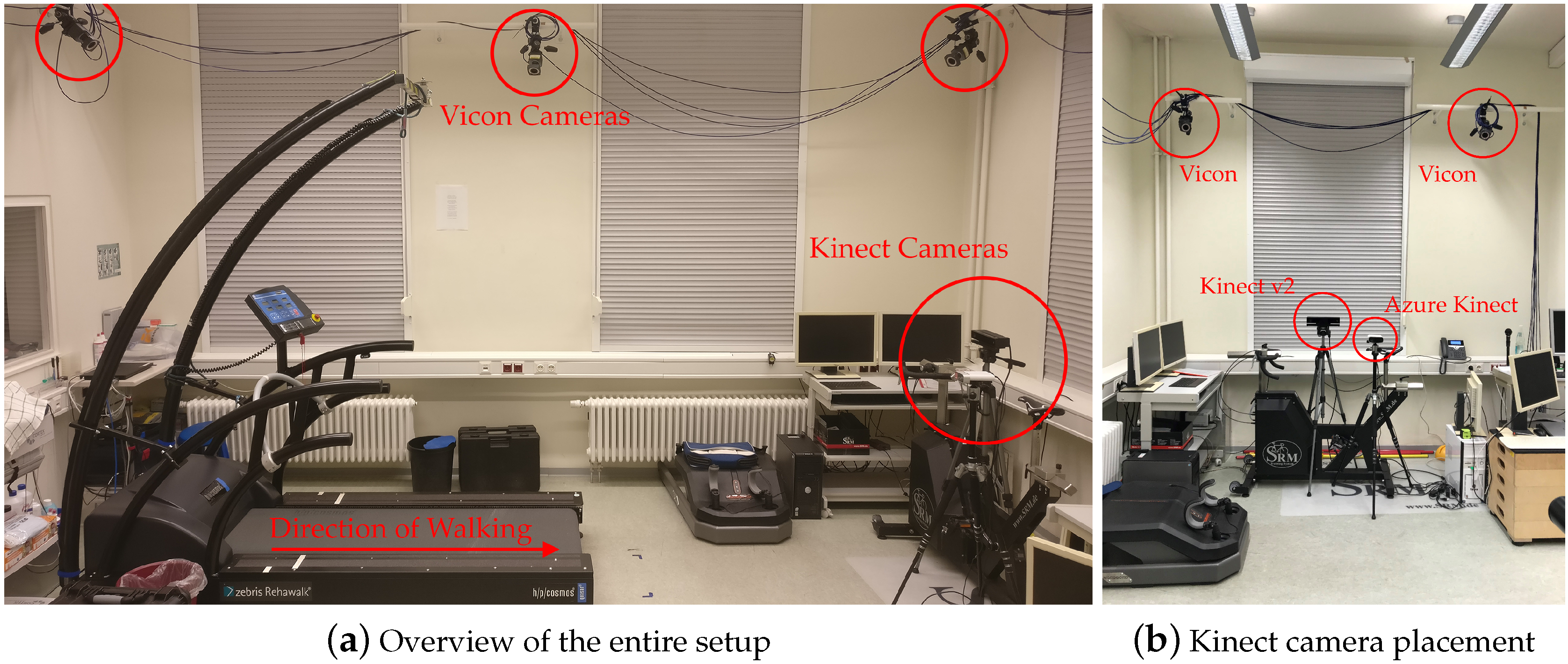

3.3. Experimental Setup and Data Collection

This study was approved by the Ethics Committee of the University of Potsdam (Application 29/2020). Before the data collection, informed consent was given by the participants. Five young and healthy adults (average age of 28.4 ± 4.2 years, body mass of 73.4 ± 10.6 kg, and height of 178.8 ± 7.0 cm) walked on a treadmill (h/p/cosmos, Nussdorf-Traunstein, Germany) at three different speeds. The experiments were conducted at the lab of the Division of Training and Movement Science at the University of Potsdam. Speeds were selected according to the study presented by Xu et al. [

23], starting at 3 kmh

≈ 0.85 ms

followed by 3.9 kmh

≈ 1.07 ms

and finally 4.7 kmh

≈ 1.3 ms

. Each subject was asked to perform each trial of a certain speed twice and to walk 100 steps per side. The participants were equipped with the 39 retro-reflective marker full-body Plug-in Gait model [

25] with markers corresponding to anatomical landmarks, as shown in

Figure 2a. In addition, the participants walked barefoot, firstly to be able to attach the foot markers precisely and secondly to avoid movement artifacts through the fabric of the shoes. 3D marker kinematics were captured at 100 Hz using ten IR cameras (Vicon, Bonita 10 [

26]), as shown in

Figure 3a. Vicon Nexus 2.10.1. software was used for data recording and post-processing of the data.

The joints tracked by both Kinect cameras are approximate and anatomically incorrect. As mentioned above, the Kinect v2 and Azure Kinect cameras track 25 joints and 32 joints, respectively. Most joints between the Kinect systems are similar. However, the Azure Kinect model contains additional markers, e.g., for ears, eyes, and clavicles.

Figure 2b shows the marker definitions from Kinect v2 and Azure Kinect together.

Both Kinect cameras were placed at a distance of 3–4 meters in front of the treadmill to stay within the recommended range for body tracking. Each camera was mounted on a tripod at approximately one meter above the floor, as shown in

Figure 3b. During a pilot experiment, it was found that the Azure Kinect skeleton tracking generated artifacts such as skeleton jitter and frame loss. These artifacts disappeared after the Azure Kinect camera was moved closer to the treadmill and the subject. However, the reason for these initial problems is still unknown. Due to the different distances to the treadmill of both Kinect cameras, the height of the Azure Kinect was slightly lowered (also noticeable in the figure) in order to have aligned visible treadmill areas in both camera images. Each Kinect camera was connected to its own laptop running a customized recording software. The laptop used to run the Azure Kinect was equipped with a Nvidia

TM GeForce RTX 2060 GPU with 6 GB of graphics memory, in order to have sufficient power to run the DL model for body tracking, as well as 16 GB of RAM and an Intel

® i7-8750H 2.20 GHz CPU. The software for storing the pose and image data of the Azure Kinect was implemented using the programming language C++ and the Body Tracking SDK. At the time this study was conducted, the Body Tracking SDK was available in version 1.0.1.

The Microsoft Kinect v2 camera data was recorded with custom software using the PyKinect2 library for the PythonTM programming language, which is based on the Kinect for Windows 2.0 SDK. The recording laptop had 16 GB of RAM installed and was running with an Intel® i7-8565U 2.20 GHz CPU.

3.4. Signal Processing and Synchronization

Data post-processing included the reconstruction of the model and the labeling of the markers. Present gaps in the data were filled manually within the Vicon Nexus software. Data were filtered using a 4th-order Butterworth filter with a cut-off frequency of 5 Hz.

The Kinect v2 camera has a target sampling rate of 30 Hz, but, during the experiments, it was found that the frequency was not constant. This was shown by calculating the time difference between consecutive examples across all experiments, resulting in a sampling rate of 37.73 ± 25.80 ms. Since the Kinect v2 data were not sampled constantly, the signal was resampled at a constant sampling frequency as proposed by Scano et al. [

27]. Furthermore, the signal was upsampled to 100 Hz in order to match the Vicon sampling frequency.

Similar to the Kinect v2, the Azure Kinect also provides a sampling frequency of 30 Hz. The calculated differences over all measured frames gave a mean value of 34.23 ± 14.92 ms. However, the recorded time stamps in the data showed that the time differences of the measurements were constant, but some gaps were present. These gaps were identified in the developed software and filled using quadratic interpolation. After filling the gaps, the pose data were also upsampled to 100 Hz to match the Vicon sampling frequency.

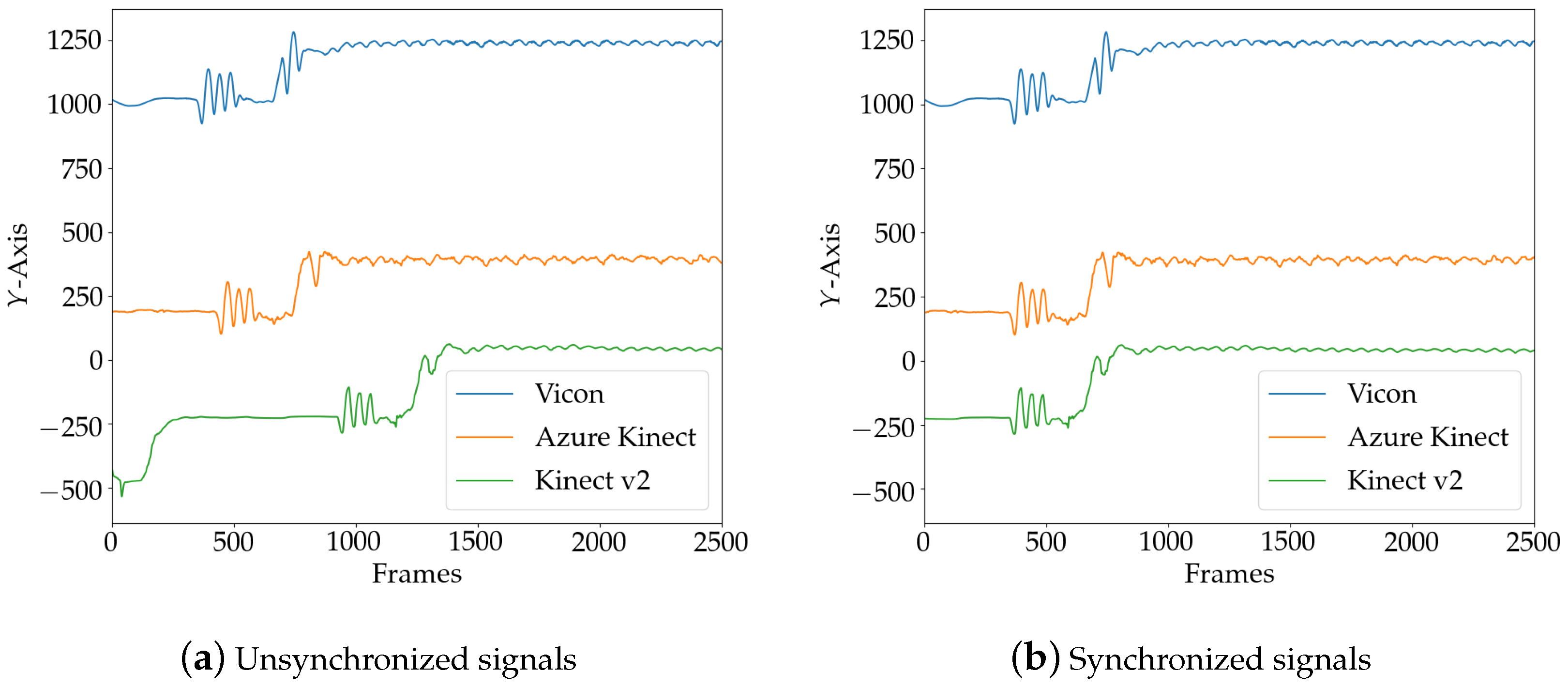

The participants were asked to perform three consecutive jumps before and after each trial to obtain significant peaks in the pose data. During post-processing, the data of both Kinect sensors were synchronized with the Vicon reference system using cross-correlation of the second derivative of a central marker in the vertical axes. Therefore, the Sternum marker (STRN) of the Plug-in Gait model was used, and the Pelvis marker for Kinect v2 and Azure Kinect.

Figure 4a shows the unsynchronized signals from the three camera systems, with both Kinect signals upsampled to 100 Hz and the peaks from the jumping protocol visible. The final synchronization result using the cross-correlation shift method is shown in

Figure 4b.

3.5. Spatial Alignment of Skeleton Data

To calculate the spatial deviation of the two Kinect cameras and the ground truth data from the Vicon system, the kinematic data from all three systems were transformed into a global coordinate system. For both Kinect cameras, the origin of the coordinate system is the center of the IR camera. The

y-axis of the Kinect v2 camera points upwards, while the same axis points downwards in the Azure Kinect. The

x-axes also point in the opposite direction along the camera. The

z-axis extends in the direction of view in both systems (as shown in

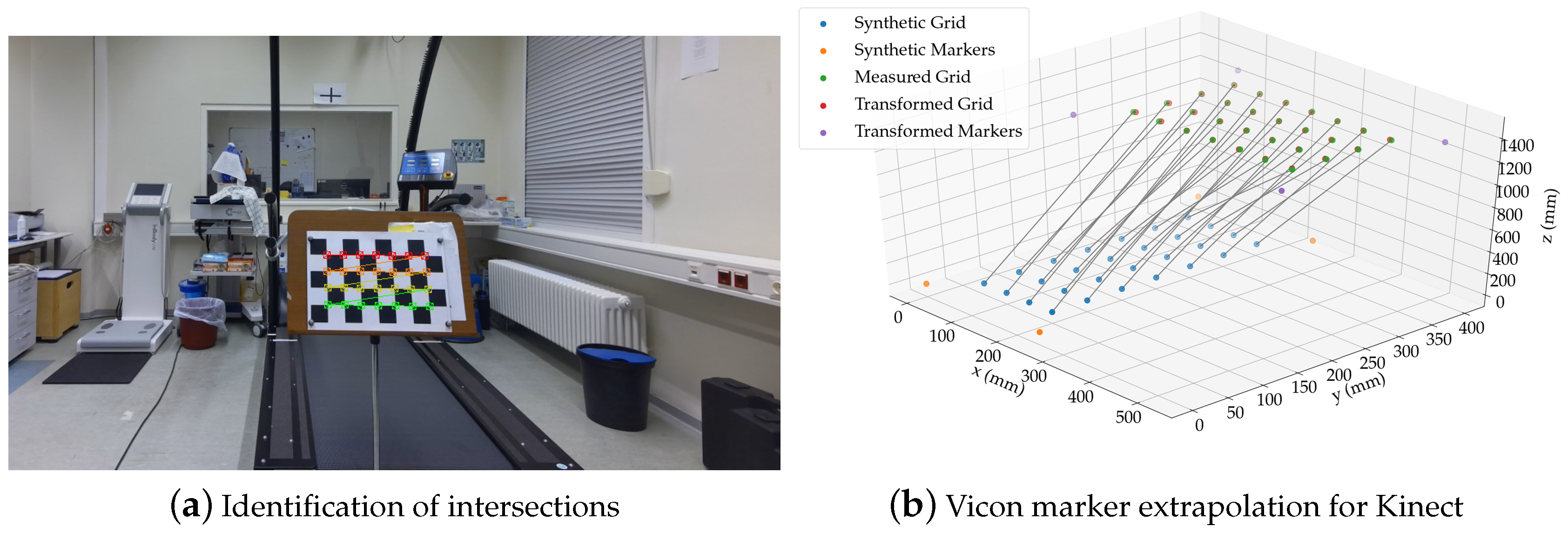

Figure 1a,b). The Vicon coordinate system was defined by the triangular calibration wand, which was placed parallel to the treadmill. To transform each Kinect coordinate system into the global system, the Vicon system, an 8 × 5 checkerboard pattern with a length of

mm per square side was used. Four retro-reflective 14 mm markers were placed at the outer corners of the checkerboard, as shown in

Figure 5a. The goal of the calibration method was to find a transformation to map simultaneously recorded points from one system to another system.

As mentioned by Naeemabadi et al. [

28], retro-reflective markers, like those of the Vicon system, appear as noise in the IR images, so their 3D position is unknown to the Kinect sensors. To determine the 3D position of the Vicon markers, nevertheless, the intermediate intersection points on the chessboard were identified in the 2D color images using the OpenCV library, followed by the coordinate mapper functions provided by the Kinect SDKs to obtain the actual 3D positions from the depth camera space, denoted as points

in the point set

P. A transformation was then found by fitting a grid with the same dimensions into the point set and using this transformation to extrapolate the known Vicon marker positions in 3D space. The grid

Q consisting of points

with known distances was generated as shown in Equation (

1), where

h and

w denote the dimensions of the used checkerboard:

To register the two point sets

P and

Q with known point correspondences, a rigid transformation must be found, which consists of a rotation matrix

and a translation vector

. Therefore, a method based on singular value decomposition (SVD) was used [

29]. Since the checkerboard data contained measurement errors, the objective of the algorithm was to find a rigid transformation such that the least squares error in Equation (

2) was minimized:

To minimize the objective, both point sets of size

n were moved to their respective origin by subtracting for each point set its centroid

and

as shown in Formulas (

3) and (

4):

The next step was to calculate the covariance matrix

, where

X and

Y are matrices that hold the centered vectors

and

in their columns, as shown in Equation (

5):

Subsequently, the rotation matrix was obtained by performing a SVD on the covariance matrix

. The rotation matrix was then calculated as shown in Equation (

6), and the final translation vector was calculated as shown in Formula (

7):

The obtained transformation with

R and

t was then applied to the Vicon marker locations of the synthetically generated grid to obtain the real locations with respect to the Kinect coordinate system. Then, the above algorithm from Formulas (

2) to (

7) was applied to the extrapolated Vicon markers for Kinect and the measured Vicon points

V to obtain a transformation that maps the Kinect points into the Vicon coordinate system. Throughout the calibration procedure, the checkerboard was moved several times in the area to scan enough calibration points. The calibration algorithm was applied for both Kinect cameras separately.

3.6. Gait Parameter Calculation

Since the aim of this study was to evaluate spatio-temporal gait parameters, i.e., step length, step time, step width and stride time, these parameters were calculated based on the method presented by Zeni et al. [

30]. The authors proposed an algorithm to automatically detect heel strike and toe off events in kinematic data and to derive swing and stance phases from it. It was shown that this method determines the timing of gait events with an error of

s compared to a vertical ground reaction force (GRF).

In this method, the anterio-posterior direction (e.g., the

x-axis) of the foot marker trajectory is plotted over time which results in a sinusoidal curve as the treadmill belt constantly pulls back the foot after every heel strike [

30]. Thus, the heel strikes at a given time

were determined by finding the maximum peaks within the 1D heel marker signal

, while toe off events at a given time

were identified as the valleys of the toe markers

, also in the anterio-posterior direction. In addition, the foot markers were normalized with respect to the sacrum marker

, resulting in the signal being centered around the origin, as shown in Equations (

8) and (

9):

As thresholds for the peak detection algorithm, the mean value of the anterio-posterior marker signals were used. Since the Plug-in Gait marker model does not include a marker at the sacrum, the average position of the right and left posterior superior iliac markers (RPSI and LPSI) was calculated to represent a virtual sacrum marker. For the Kinect cameras, the Spine-Base and Pelvis markers were used for Kinect v2 and Azure Kinect, respectively.

Based on the identified gait events, the spatio-temporal gait parameters were calculated as proposed in the study by Eltoukhy et al. [

31]. The description of the gait parameter calculation for the Vicon and Kinect systems is presented in

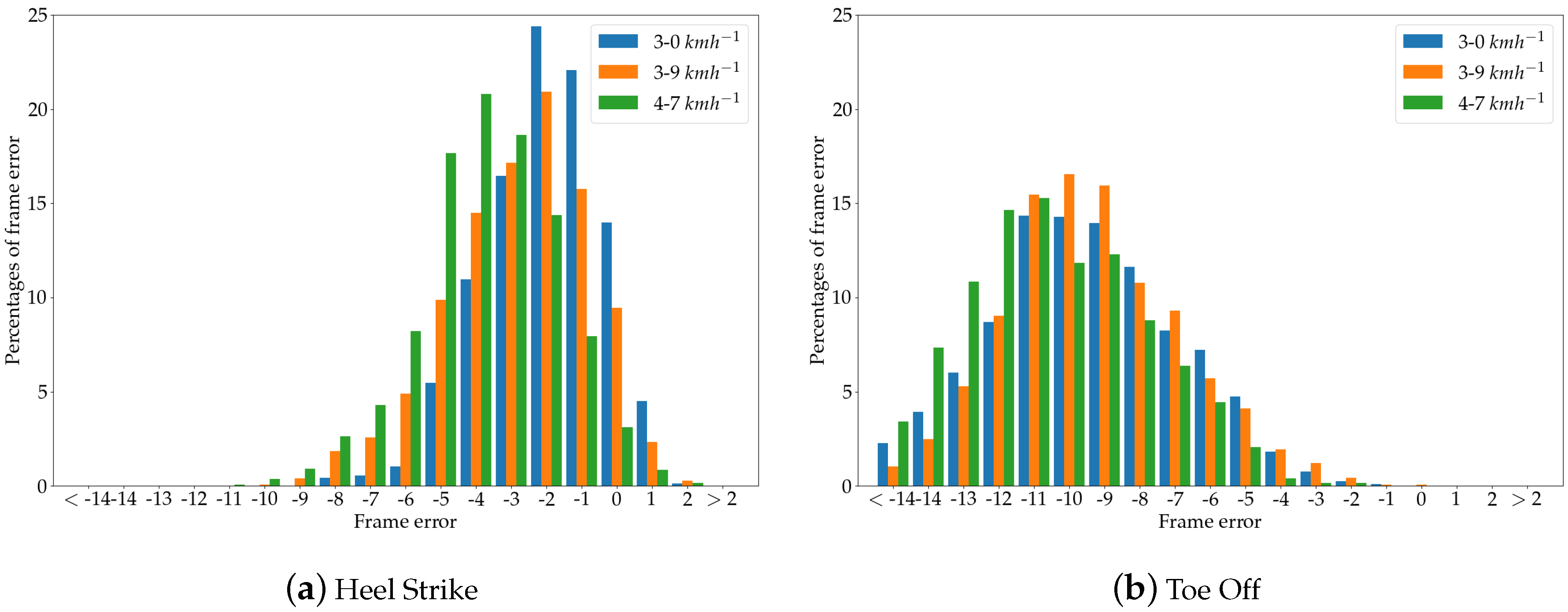

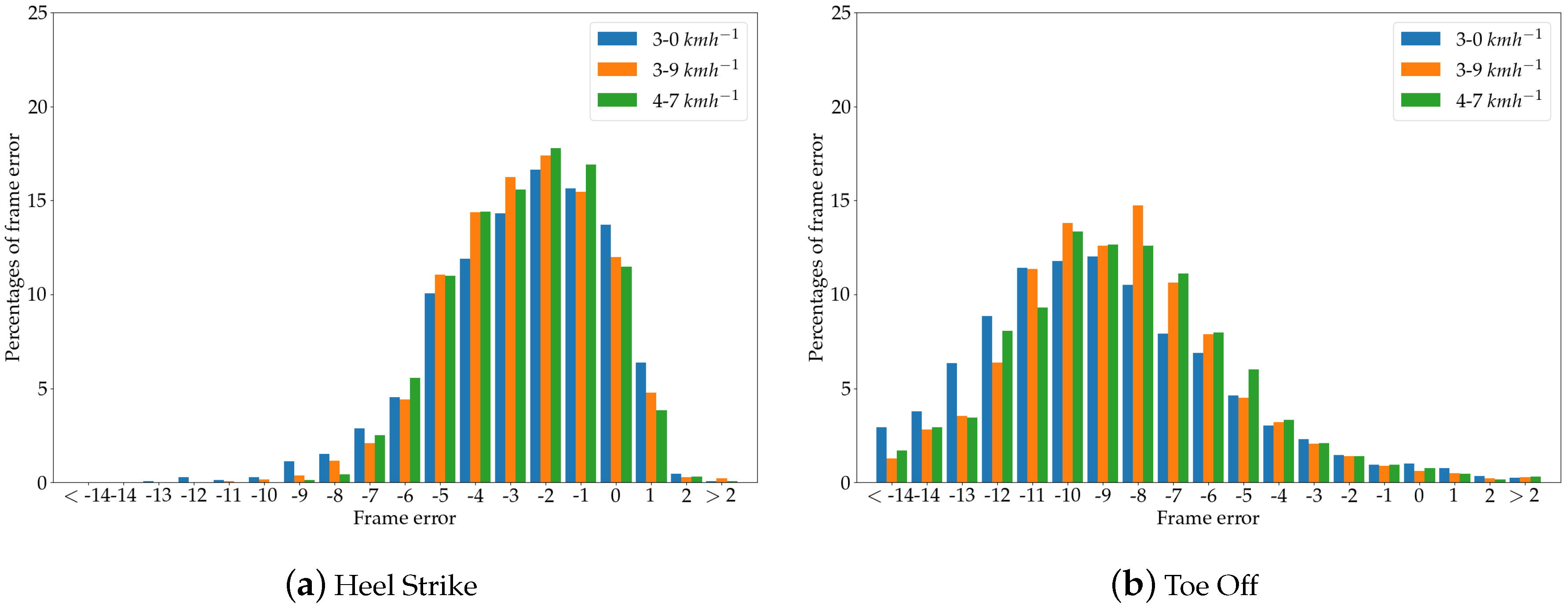

Table 2. Since the timing of the gait events is crucial for the accuracy of the calculated spatio-temporal gait parameters, the frame differences of the identified peaks between the Kinect and Vicon signals were evaluated at 100 Hz.

3.7. Statistical Analysis

The first goal of our experiment was to investigate spatial agreement of the corresponding joint coordinates between both Kinect cameras relative to the Vicon reference system. In order to calculate the accuracy of the Kinect markers, the three different marker models have to be mapped. Using the approach proposed by Otte et al. [

32], a subset of the Vicon retro-reflective markers was mapped to the corresponding markers of the respective Kinect skeleton. This was achieved either by assigning individual Vicon markers that were closest to the corresponding Kinect landmark or, if several Vicon markers were present, by averaging the markers.

Table A1 in the

Appendix A presents the complete marker mapping in detail. Since the Vicon markers were applied to the participant’s skin while both Kinect cameras track the joints approximately within the user’s body (as shown in

Figure 2a,b), an offset of the corresponding marker positions would cause a systematic bias when directly comparing the joint positions. In order to compare the joint trajectories in all three directions, this systematic bias was removed by subtracting the mean value of each axis of each joint [

32]. These signals are referred to as zero-mean shifted signals, where similar movements should now have a high degree of agreement when moving in a certain direction.

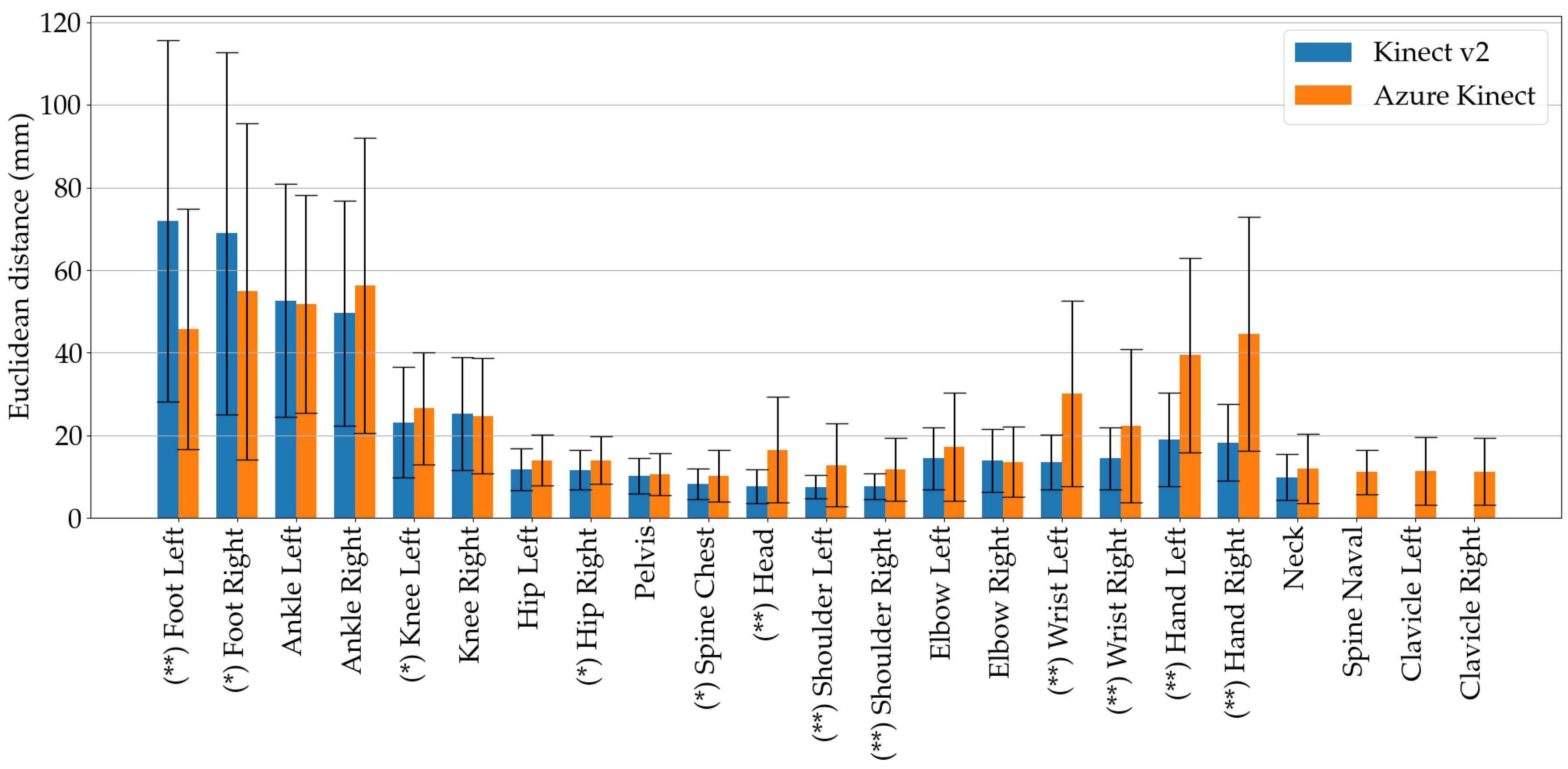

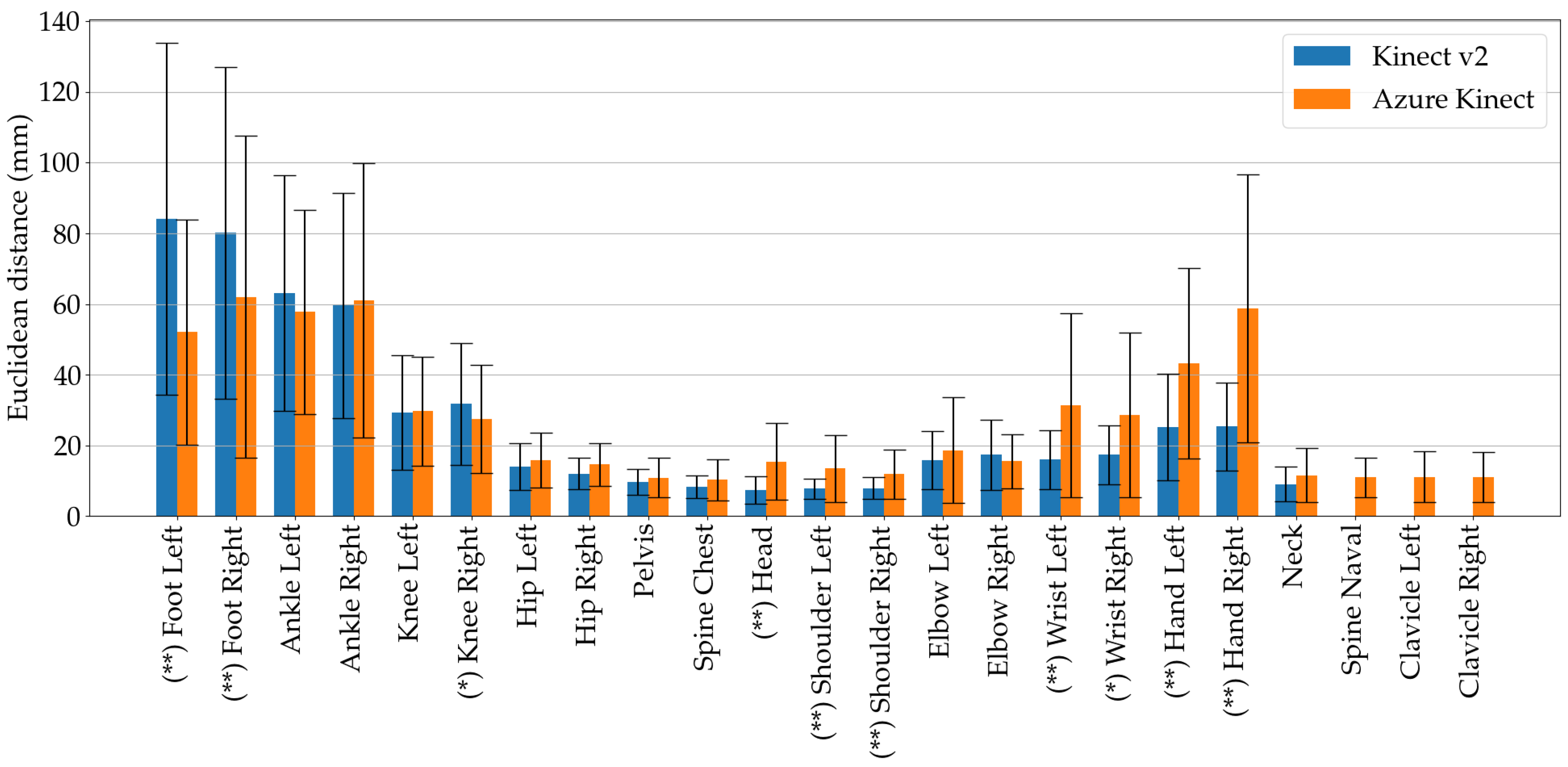

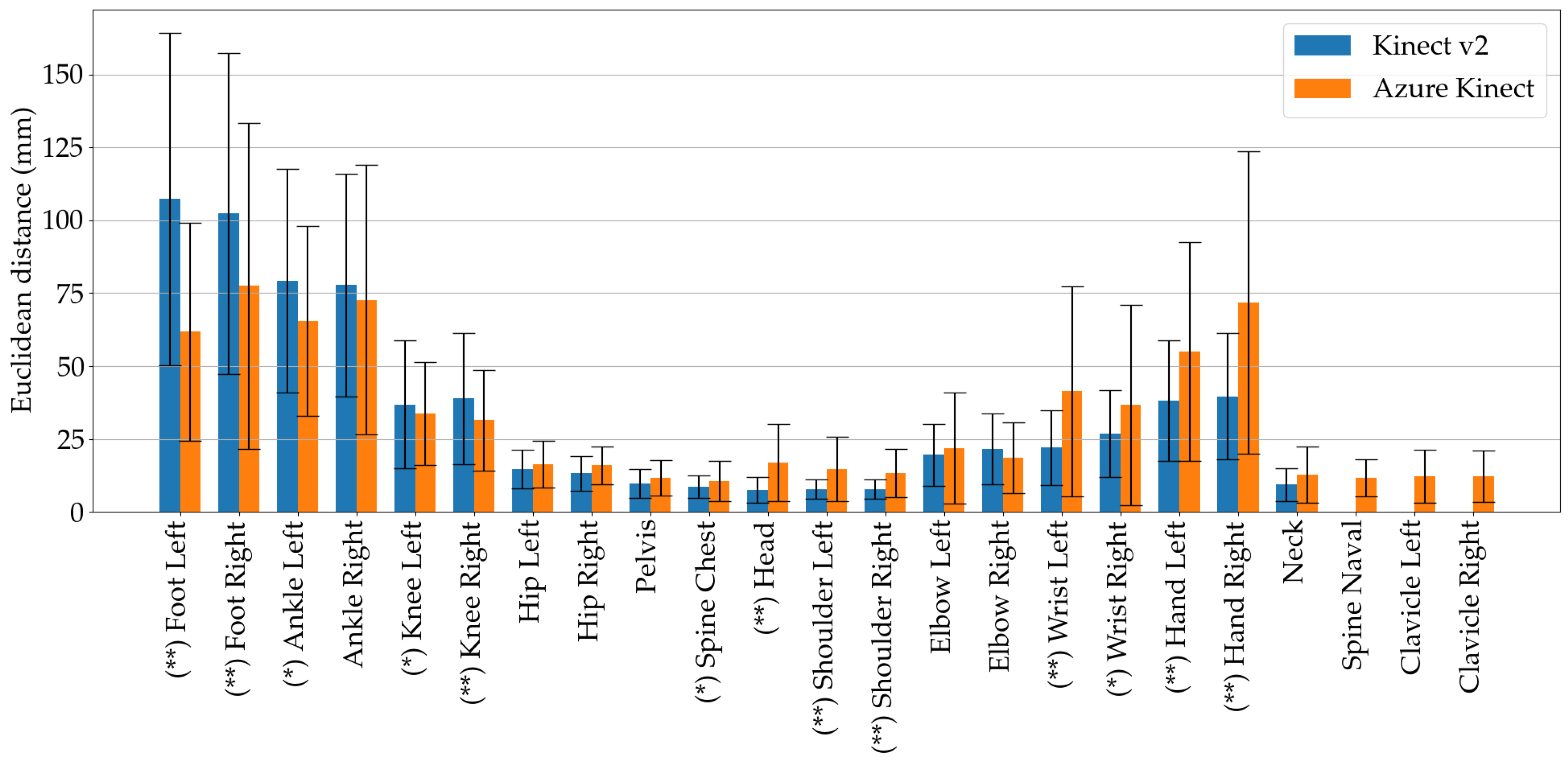

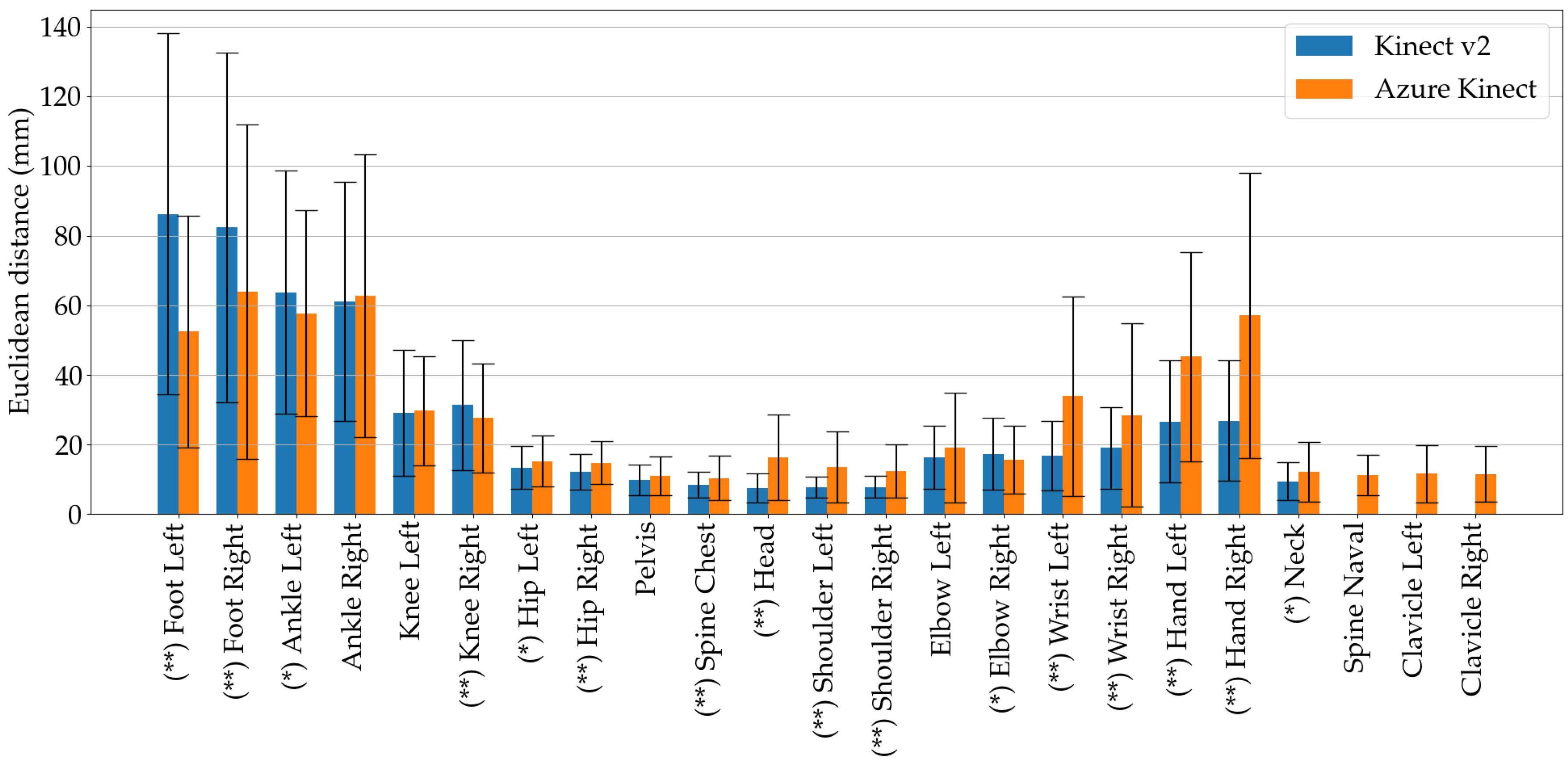

After the marker models were mapped, the 3D Euclidean distance between the zero-mean shifted signals of corresponding joints were calculated over all

N captured frames of all participants and trials and for each Kinect camera individually. The overall tracking accuracy was then assessed as mean and standard deviation of the Euclidean distances [

32]. As this study included five participants, the non-parametric paired Wilcoxon test was performed using the mean values of the distances of all trials. Differences in tracking quality were found to be significant for

p-values smaller than

and

, respectively.

In addition to the paired Wilcoxon test, Pearson’s correlation coefficients were used to assess reliability [

33]. The correlation was assessed of zero-mean shifted signals over all

N captured frames of all subjects and trails. Pearson’s

r-values were calculated for the three axes separately, in the following referred to as the anterio-posterior (AP), medio-lateral (ML), and vertical (V) axes [

32,

34]. The levels of agreement were set to poor (

), moderate (

), good (

) and excellent (

) [

32,

35].

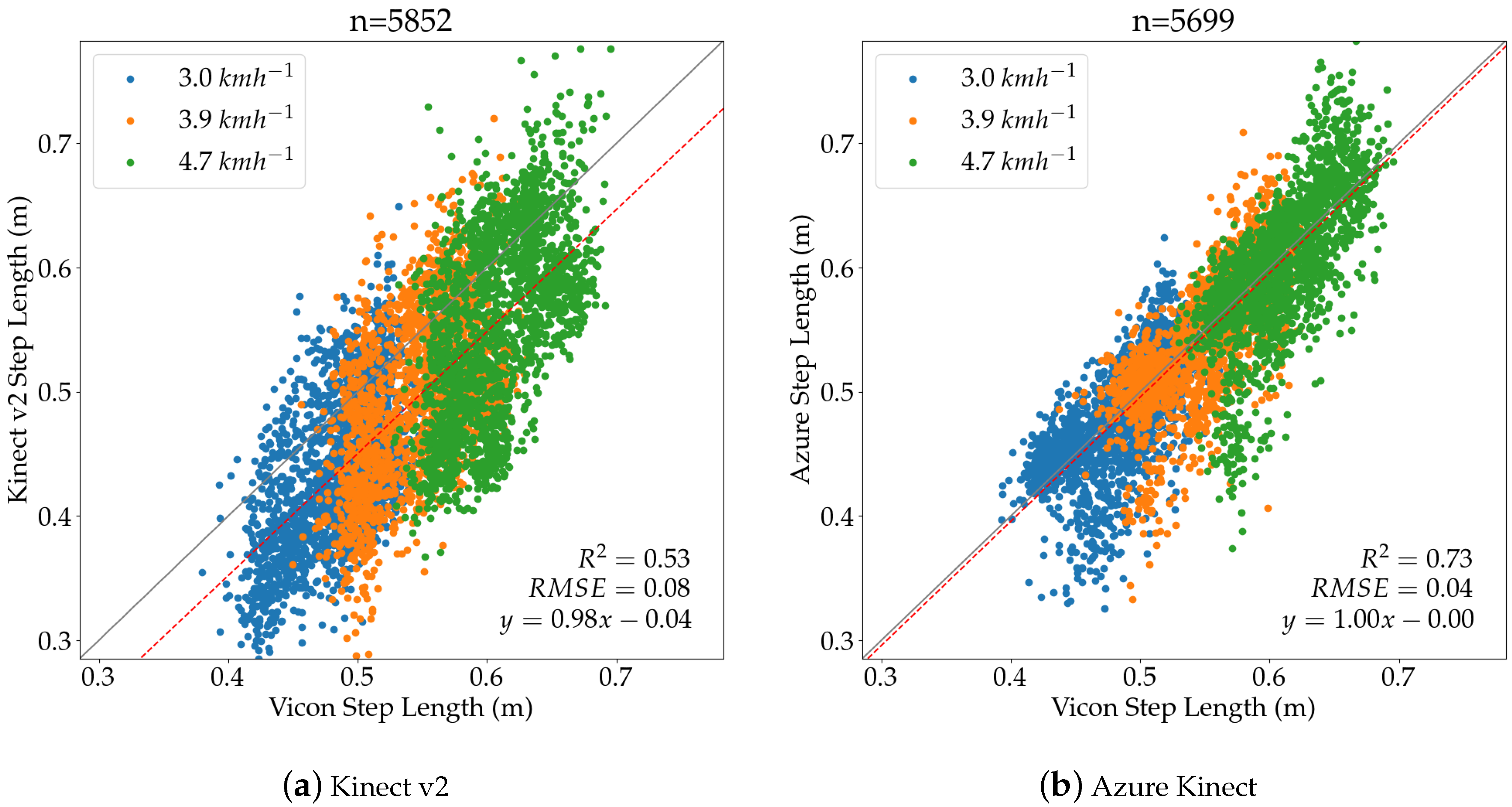

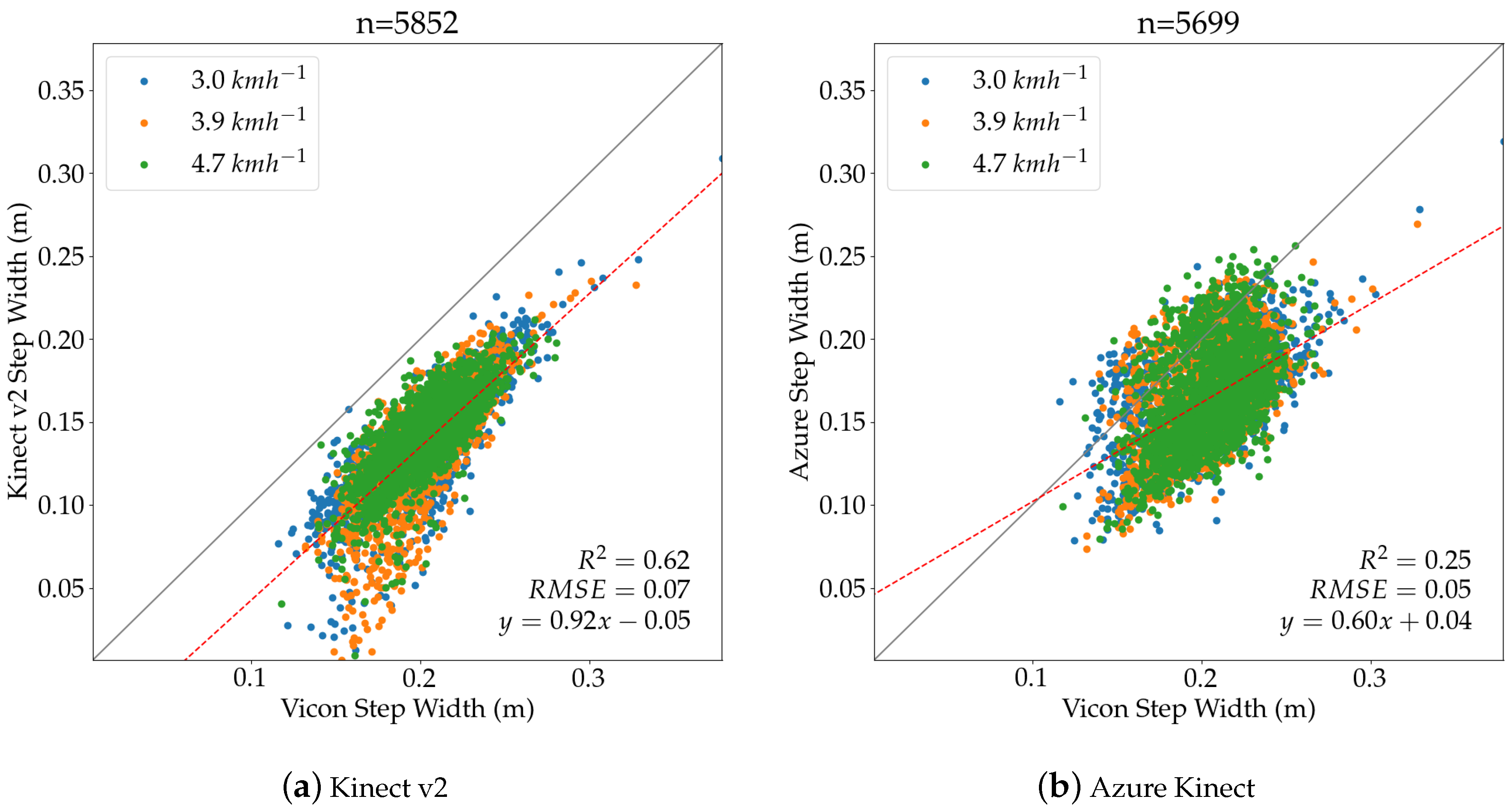

The agreement of the spatio-temporal gait parameters generated by the three camera systems was compared and evaluated over a range of individual treadmill velocities. Therefore, the average values of the Vicon gait parameters and the two Kinect sensors were calculated first.

Absolute

(shown in Equation (

10)) and relative errors

(shown in Equation (

11)) were assessed for each spatio-temporal gait parameter in order to obtain an average absolute magnitude of the differences between the systems as well as a directional magnitude of the differences [

31]. Additionally, the root mean squared error (RMSE) between gait parameters calculated from the Vicon system and those of the Kinect sensors was determined:

To assess the statistical significance between the three camera systems, we performed the non-parametric paired Wilcoxon test on the the pooled data of five subjects by using the mean values of the according gait parameter of individual trials. A significant difference for gait parameters was defined for p-values with .

5. Discussion

In this study, the human pose estimation performance of the Azure Kinect camera was evaluated and compared to its predecessor model Kinect v2 in terms of gait analysis on a treadmill. The evaluation subject was the spatial agreement of joint locations as well as the quality of spatio-temporal gait parameters. A Vicon motion capturing system and the 39 marker full-body Plug-in Gait model were used to evaluate the parameters coming from both Kinect systems.

5.1. Results

The results of this study show that the tracking accuracy of the foot marker trajectories is significantly higher for the Azure Kinect camera over all treadmill velocities (3.0 kmh, 3.9 kmh, and 4.7 kmh) compared to the previous model Kinect v2. However, the Kinect v2 performed better than the Azure Kinect in the mid and upper body region, especially in the upper extremities. The newly added joints (clavicles, spine chest) of the Azure Kinect camera achieved a reasonable tracking error of about 11.5 mm. Regarding the gait parameters, it was shown that, for spatial parameters, i.e., step length and step width, the Azure Kinect camera delivered a significantly higher accuracy than Kinect v2. One possible reason for this could be the improved tracking quality of the foot markers of the Azure Kinect. However, for temporal gait parameters, i.e., step length and stride length, no significant differences were found between the two Kinect camera systems. Furthermore, the calibration method presented here is a tool that can be used in future similar studies for further evaluation of the cameras.

5.2. Study Limitations

Our results are specific to a population of young and healthy participants with normal, i.e., unpathological, gait patterns who walked at average walking speeds. The main objective of this pilot study was to demonstrate the technical feasibility of using the novel skeleton tracking algorithm of the Azure Kinect camera and to compare it with the previous model, Kinect v2. Therefore, no clinical research question was addressed in this study and a smaller sample size was regarded as sufficient.

Although a multi-camera motion capture system was considered the gold standard for evaluating human motion in this study, it is important to note that these systems have potential sources of error. The main drawback of retro-reflective marker-based systems, such as the Vicon system, is that each marker must be seen by at least two cameras at any time in order to be correctly interpolated. The study conducted by Merriaux et al. [

36] examined the accuracy of the Vicon system for both static and dynamic setups with a 4-axis motor arm and a fast-moving rotor, respectively. These mechanical setups provided accurate ground truth data that was compared to the tracked Vicon data. They found that the Vicon system for the static mechanical setup had an average absolute positioning error of 0.15 mm and a variability of 0.015 mm, indicating high accuracy and precision. For fast dynamic movements of the markers, a mean position error of less than 2 mm was determined, whereas the variability in the static setup was lower.

Unlike passive tracking systems that use markers with reflective material, active marker systems use light emitting diodes (LED) that can be tracked by the individual cameras. These LEDs can be assigned to individual identifiers so that the system can easily identify markers after they have been lost, for instance due to occlusion. In passive marker systems, marker confusion in the tracking software is a common problem, especially if the markers move at higher speeds [

37]. The confusion of markers can lead to more holes in the data and therefore requires manual effort to clean up the data in the software with interpolation strategies that introduce artificial artifacts into the data. In our study, we have used a 10-camera Vicon system and resulting holes in the data were filled using the Vicon Nexus software, which eventually introduced noise in the reference data.

With marker-based motion capture systems, the correct placement of active or passive markers on the subject is crucial for the quality of the kinematic data. The study conducted by Tsushima et al. [

38] investigated the test–retest and inter-test reliability of a Vicon system. Kinematic data of the lower extremities were recorded using a repeated measurement protocol of two test sessions on two different days. Two trained experts placed 15 markers on the pelvis and lower body according to a predefined marker model. Across both testers and six unimpaired participants, the authors received high coefficients of multiple correlation (CMC) values for the sagittal plane (

= 0.971–0.994), the frontal plane (

= 0.759–0.977) and the transverse plane (

= 0.729–0.899), excluding the pelvic tilt. From this, the authors conclude that the reduction of variability is possible if standardized marker placement methods are used.

In addition, soft tissue artifacts are a potential source of error in motion capture systems. These artifacts are caused by the movement of markers on the skin, which therefore no longer match the underlying anatomical bone landmarks. Lin et al. [

39] quantified the effect of soft tissue artifacts in the posterior extremity of canines using a fluoroscopic computed tomography system with simultaneous acquisition of Vicon data using retro-reflective markers. It was found that the thigh markers and crus markers had a large peak amplitude of 27.4 mm and 28.7 mm, respectively. In addition, flexion angles were underestimated, but adduction and internal rotation were overestimated once the knee was flexed more than 90 degrees. Given this, it is likely that the recorded data in our study were also susceptible to soft tissue artifacts that led to more unstable data and results.

In gait analysis, the accurate detection of heel strike and toe off events is important as timing information is needed for the exact calculation of the temporal gait parameters and for the normalization of the data per gait cycle. The common gold standard method for the exact determination of these events are force plates [

40]. O’Connor et al. [

40] and Zeni et al. [

30] presented algorithms for calculating these gait events based on kinematic data. However, the calculation of these events using motion capture data are flawed compared to force plate data. The so-called foot velocity algorithm for overground walking, as presented by O’Connor et al. [

40], has an error of 16 ± 15 ms for heel strike and 9 ± 15 ms for toe off events and was verified on a data set containing gait of 54 normal children recorded with a force plate and a motion capture system. The method proposed by Zeni et al. [

30] for detecting gait events on the treadmill was verified on a data set that included seven healthy and unimpaired participants as well as seven multiple sclerosis and four stroke patients. In healthy participants, the algorithm correctly identified 94% of gait events with a 16 ms error. In impaired participants, 89% of treadmill events were identified with a 33 ms error. Since we used the algorithm presented by Zeni et al. [

30] for our study, it can be assumed that the error of their method is also reflected in our results, since we used the temporal gait parameters obtained from Vicon as a reference.

5.3. Practical Application

The experimental setup of this camera evaluation study presented here offers many advantages that could also be transferred to a real-life scenario. Since the two Kinect cameras do not require time-consuming placement of markers, systems using these cameras could be operated by individuals/patients living in remote or rural areas who do not have access to medical services. Findings from our study appear to be relevant for this type of tele medicine or tele rehabilitation approach, particularly for patients with neurological disorders, such as in stroke survivors who are in need of gait retraining. In addition, walking on a treadmill allows data recording even in homes with limited space, as well as recording a large amount of data in just one session. Von Schroeder et al. [

41] have investigated how the gait of stroke patients can change over time. Therefore, data were collected from 49 stroke patients and 24 control subjects using a portable step analyzer (B & L Engineering, Santa Fe Springs, CA, USA). They found that the evaluated gait parameters improved over time, with the greatest change occurring within the first 12 months. With a home setup using tele medicine, similar to the setup shown in

Figure 3a, which consists of only one treadmill and a single Kinect camera, it would be possible to record gait parameters that provide important insights into changes in gait performance. The algorithm described in

Section 3.6 could serve as a basis to obtain more important gait parameters.

Besides the above presented concrete use case, such single-based Kinect camera systems could also be used in different environments, where the use of more complex systems, e.g., multi-camera 3D motion capturing systems, is not feasible. These include physiotherapy, rehabilitation clinics, field test conditions, and fitness centers.

5.4. Future Work

At the time of conducting this pilot study, the Azure Kinect Body Tracking SDK was in its most recent version (version 1.0.1.) and will most likely be subject to further changes in the future, which could lead to improvements in the tracking quality. At this stage, however, a pilot evaluation study was necessary to assess the tracking quality that can be achieved with the improved hardware and DL-based skeleton tracking algorithm to date.

Future research should validate that the Azure Kinect camera could also be used for measurements among different age groups and individuals with gait abnormalities (Parkinsonian gait, stroke, etc.), using a larger number of participants. Various aspects of human movement such as body weight exercises or overground walking should also be further investigated with the Azure Kinect camera.