Evaluation of the Severity of Major Depression Using a Voice Index for Emotional Arousal

Abstract

1. Introduction

2. Materials and Methods

2.1. Acquisition of Data

2.1.1. Data about Arousal Level

2.1.2. Data about the Severity of Depression

2.2. Proposed Method

3. Results

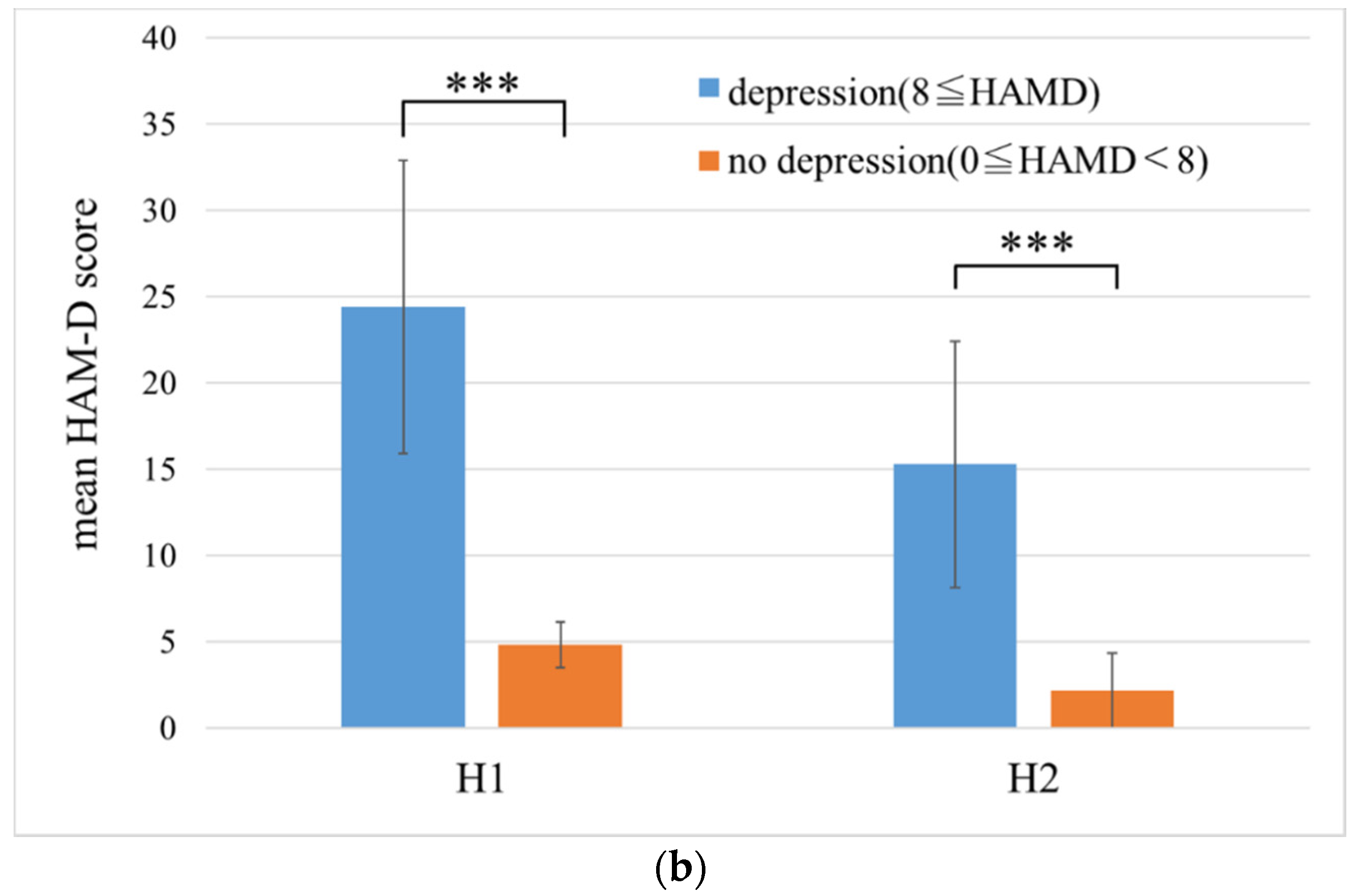

3.1. HAM-D Score

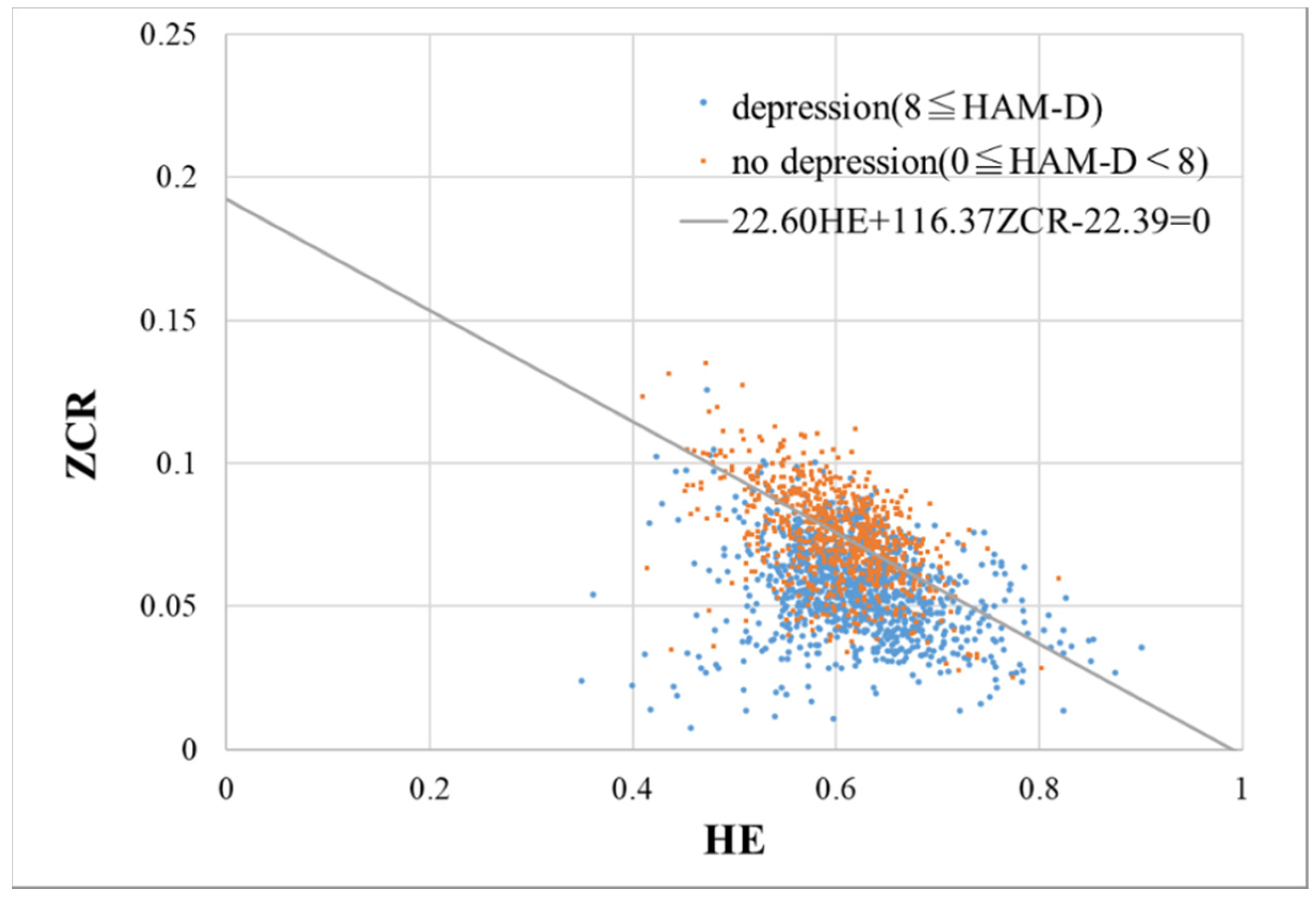

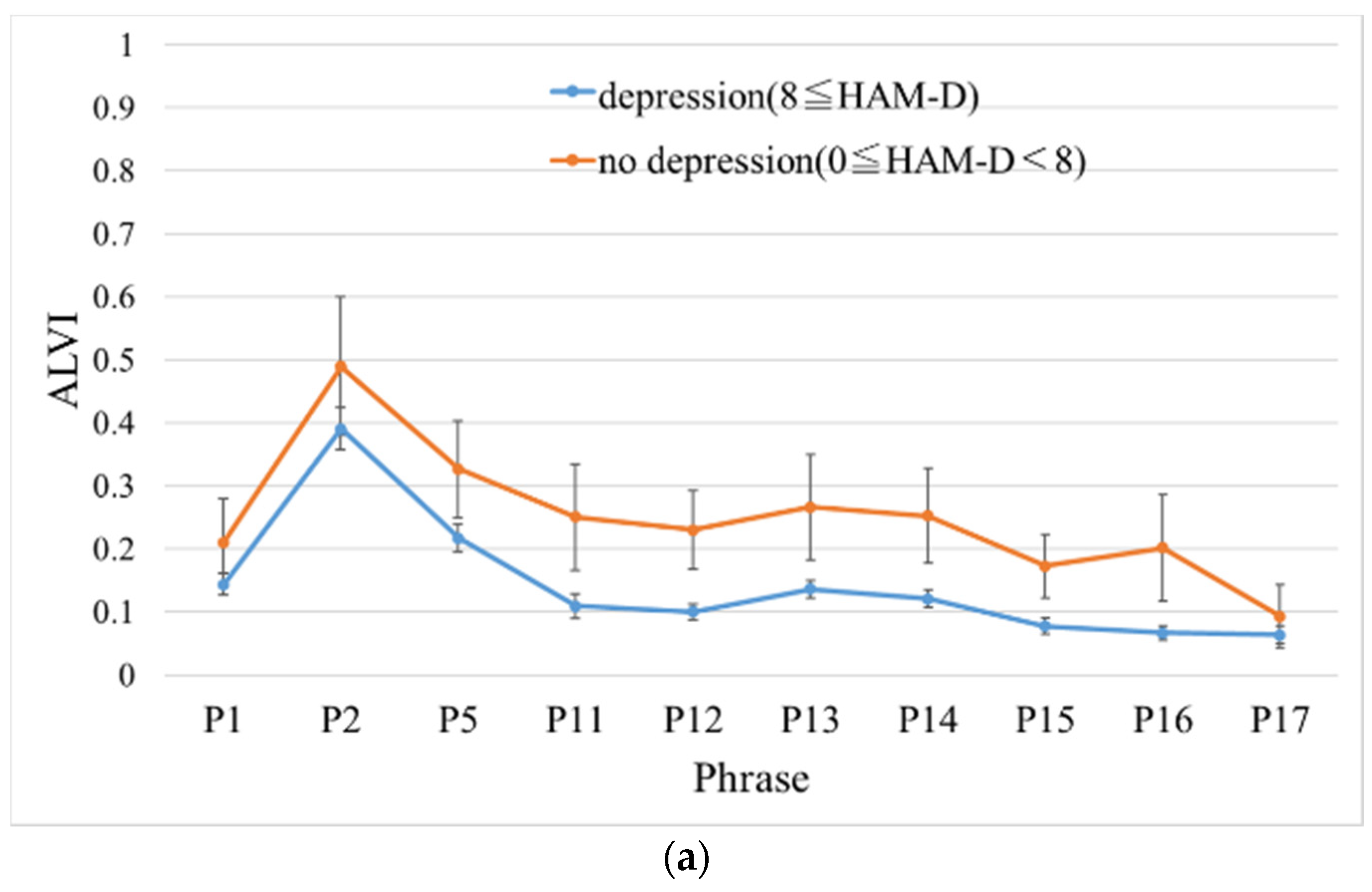

3.2. Performance Evaluation of ALVI

4. Discussion

Author Contributions

Funding

Conflicts of Interest


| Phrase | Phrase in Japanese | Purpose (Meaning) |
|---|---|---|
| P1 | I-ro-ha-ni-ho-he-to | Non-emotional (no meaning, similar to “a-b-c”) |
| P2 | Honjitsu ha seiten nari | Non-emotional (It is fine today) |
| P5 | Mukashi aru tokoro ni | Non-emotional (Once upon a time, there lived) |
| P11 | Garapagosu shotou | Check pronunciation (Galápagos Islands) |
| P12 | Tsukarete guttari shiteimasu | Emotional (I am tired/dead tired) |
| P13 | Totemo genki desu | Emotional (I am very cheerful) |
| P14 | Kinou ha yoku nemuremashita | Emotional (I was able to sleep well yesterday) |
| P15 | Shokuyoku ga arimasu | Emotional (I have an appetite) |
| P16 | Okorippoi desu | Emotional (I am irritable) |
| P17 | Kokoro ga odayaka desu | Emotional (My heart is calm) |

| Hospital | Sex | Number of Subjects | Mean Age ± SD (years) |
|---|---|---|---|
| H1 | Female | 55 | 31.6 ± 8.6 |
| H1 | Male | 33 | 32.5 ± 6.5 |
| H1 | Total | 88 | 32.0 ± 7.9 |
| H2 | Female | 44 | 62.0 ± 13.1 |
| H2 | Male | 46 | 48.8 ± 13.5 |
| H2 | Total | 90 | 55.2 ± 14.8 |

| Hospital | Group | Number of Subjects | Mean HAM-D Score ± SD |
|---|---|---|---|
| H1 | No depression (HAM-D < 8) | 10 | 4.8 ± 1.3 |
| H1 | Depression (HAM-D ≥ 8) | 78 | 24.4 ± 8.5 |
| H1 | Total | 88 | 22.2 ± 10.1 |
| H2 | No depression (HAM-D < 8) | 65 | 2.2 ± 2.2 |
| H2 | Depression (HAM-D ≥ 8) | 25 | 15.3 ± 7.2 |
| H2 | Total | 90 | 5.8 ± 7.2 |
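As a quick consistency check on the table above, each Total row can be reproduced as the size-weighted mean of the two group means, and the grouping follows the HAM-D cutoff of 8 given in the Group column. The sketch below is illustrative only; the names `hamd_group` and `pooled_mean` are hypothetical helpers and are not taken from the article.

```python
HAMD_CUTOFF = 8  # cutoff from the table: HAM-D < 8 is labeled "No depression"


def hamd_group(score: float) -> str:
    """Label a HAM-D total score using the cutoff shown in the table."""
    return "Depression" if score >= HAMD_CUTOFF else "No depression"


def pooled_mean(groups):
    """Size-weighted mean of (number of subjects, group mean) pairs."""
    total_n = sum(n for n, _ in groups)
    return sum(n * mean for n, mean in groups) / total_n


# (number of subjects, mean HAM-D score) per group, copied from the table
h1_groups = [(10, 4.8), (78, 24.4)]
h2_groups = [(65, 2.2), (25, 15.3)]

print(hamd_group(7), "/", hamd_group(8))   # No depression / Depression
print(round(pooled_mean(h1_groups), 1))    # 22.2, the H1 Total row
print(round(pooled_mean(h2_groups), 1))    # 5.8, the H2 Total row
```

The standard deviations in the Total rows cannot be recovered this way, because they also depend on how far the group means lie from the pooled mean.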

| Phrase | p-Value a (H1) | p-Value a (H2) | AUC (H1) | AUC (H2) |
|---|---|---|---|---|
| P1 | 0.33 | 0.027 * | 0.60 | 0.65 |
| P2 | 0.30 | 0.0092 ** | 0.60 | 0.68 |
| P5 | 0.20 | 0.00060 *** | 0.63 | 0.74 |
| P11 | 0.096 * | 0.29 | 0.66 | 0.57 |
| P12 | 0.040 * | 0.016 * | 0.70 | 0.67 |
| P13 | 0.17 | 0.0047 ** | 0.63 | 0.69 |
| P14 | 0.096 * | 0.0062 ** | 0.66 | 0.69 |
| P15 | 0.099 * | 0.040 * | 0.66 | 0.64 |
| P16 | 0.19 | 0.022 * | 0.63 | 0.68 |
| P17 | 0.28 | 0.028 * | 0.61 | 0.65 |
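For readers unfamiliar with the AUC column, the following is a generic sketch of how an area under the ROC curve can be computed for a single index that separates two groups. It uses the standard pairwise (Mann-Whitney) formulation and is not claimed to be the procedure the authors used. The function name `auc_from_scores` and all numeric values are hypothetical, chosen only for illustration.

```python
def auc_from_scores(group_a, group_b):
    """Probability that a random value from group_a exceeds one from group_b.

    Ties count as half a win. This equals the area under the ROC curve when
    the index is used to separate group_a (higher values) from group_b.
    """
    wins = 0.0
    for a in group_a:
        for b in group_b:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(group_a) * len(group_b))


# Hypothetical index values for two groups (not data from the article)
group_a = [0.2, 0.4, 0.6]
group_b = [0.1, 0.3, 0.5]

auc = auc_from_scores(group_a, group_b)
print(round(auc, 3))  # 0.667: 6 of the 9 pairs favor group_a
```

An AUC of 0.5 corresponds to chance-level separation and 1.0 to perfect separation, which gives a scale for reading the values in the table above.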

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shinohara, S.; Toda, H.; Nakamura, M.; Omiya, Y.; Higuchi, M.; Takano, T.; Saito, T.; Tanichi, M.; Boku, S.; Mitsuyoshi, S.; So, M.; Yoshino, A.; Tokuno, S. Evaluation of the Severity of Major Depression Using a Voice Index for Emotional Arousal. Sensors 2020, 20, 5041. https://doi.org/10.3390/s20185041