Abstract

Automated and high-accuracy three-dimensional (3D) shape measurement is required for the quality control of large-size components in the aerospace industry. To reconcile global measurement with local precision measurement in the 3D digitization of the key local features of large-size components, a combined measurement method is proposed that integrates a 3D scanner, a laser tracker, and an industrial robot serving as an orienting device. To improve the overall measurement accuracy, an accurate calibration method based on the coordinate optimization of common points (COCP) and the coordinate optimization of global control points (COGP) is proposed to determine the relationships between the coordinate systems. First, COCP is used to optimize the coordinates of the common points measured by both instruments. Then, COGP, based on an angular constraint, is used to minimize the measurement errors and improve the accuracy of the measured position and orientation of the 3D scanner. Finally, a combined measurement system is established, and validation experiments are carried out in the laboratory within a distance of 4 m. The calibration experiments show that the maximum and mean errors of the coordinate transformation are reduced from 0.037 and 0.022 mm to 0.021 and 0.0122 mm, respectively. The measurement experiments further show that the combined measurement system achieves high accuracy.

1. Introduction

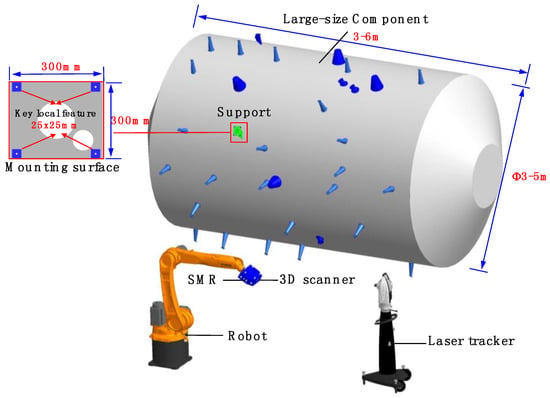

Large-size components carrying many supports are common in modern advanced manufacturing, especially in the aerospace industry. The machining quality of the key local features on the support mounting surfaces directly affects the quality of the assembly between the large-size component and external instruments. On-line machining is necessary because high-precision, high-efficiency machining cannot currently be achieved through off-line processing. Therefore, to ensure on-line machining accuracy, the key local features must be measured on-line in the product design coordinate system. The key 3D geometric information derived from the measurement includes the position and orientation of the component, its 3D shape, and the locations of the key local features on the supports. Furthermore, the measuring range (3 × 3 m to 5 × 6 m) is large, which makes it challenging both to locate each support with high accuracy and to acquire the 3D information of the key local features (25 × 25 mm) with high accuracy (±0.035 mm). A single measuring device usually cannot meet the requirements of measuring key local features in large-scale on-site machining. Additionally, all information needs to be obtained at the same time and transformed into a unified coordinate system, which makes large-scale 3D measurement even more challenging.

Various methods and systems have been proposed for large-scale 3D measurement [1,2]. Coordinate measuring machines (CMMs) have been extensively applied in 3D measurement because of their high accuracy and excellent stability. With the development of noncontact optical measuring equipment and computer vision techniques, visual sensors have been integrated with traditional CMMs [3,4,5]. However, the limited measurement efficiency of CMMs allows only a small percentage of products to be sampled and inspected. Furthermore, because of their structure, CMMs are rarely used for on-site and on-line measurement. When the 3D shape of large-size components needs to be measured, systems that combine photogrammetry and fringe projection [6] are widely used. However, reflective markers must be attached to the target before the measurement, which interferes with the measured surface and reduces measurement efficiency. Recently, industrial robots have been extensively applied in manufacturing as economical and flexible orienting devices, and an increasing number of visual sensors are therefore being integrated with robots. Laser scanning, a technology for large-scale 3D shape measurement [7,8,9,10], is widely available and economical. However, a laser scanner collects data along only a limited number of lines in each measurement, so robotic scanning results may contain ripples. Thus, it remains difficult to measure the key local features with high accuracy. To extend the measuring range of the laser scanner at designated measurement positions, movement mechanisms [11,12], such as a linear track or a rotary system, have been integrated into laser scanning systems. However, such a mechanism inevitably introduces errors that reduce the measurement accuracy, so it must be calibrated to ensure measurement accuracy.
Compared with laser scanning, structured light profilometry [13,14,15,16,17,18,19,20] is well developed and widely used to scan object surfaces rapidly, acquiring a high-density, high-quality point cloud of a region in each measurement. If the calibration of the visual sensor is well designed and implemented, its measurement accuracy can be guaranteed [21,22]. Furthermore, compared with line scanning, structured light profilometry covers a much larger area per scan and is therefore more efficient. However, because of the large size of the component and the finite measuring range of a single station, it is difficult to guarantee the overall measurement accuracy with a structured light sensor alone.

To further expand the measurement range for large-size objects and guarantee the overall measurement accuracy, external measurement devices such as indoor GPS (iGPS) systems, total stations, and laser trackers are increasingly being integrated into 3D shape measurement systems [23,24,25,26]. Du, F. et al. [23] developed a large-scale 3D measurement system that combines iGPS, a robot, and a portable scanner; however, its overall measurement accuracy is limited by the measurement performance of the iGPS. Paoli, A. et al. [24] developed a 3D measurement system that combines a 3D scanner, a total station, and a robot to automate the measurement of yacht hull shapes. Several optical corner cube reflectors are mounted on the robot base and tracked by the total station. However, the robot positioning error is inevitably introduced, so the measurement accuracy is restricted by the robot positioning accuracy. Leica developed a large-scale 3D shape measurement system [25] that combines a laser tracker, a T-Scan, and a robot, but it is too expensive for wide adoption. Du, H. et al. [26] proposed a robot-integrated 3D scanning system that combines a 3D scanner, a laser tracker, and a robot. During operation, the robot carries the scanner to the planned measurement positions, and the end coordinate system is established by rotating the 3D scanner while it is tracked by the laser tracker. However, the laser tracker cannot detect and control the measurement errors during the measurement process.

As accuracy requirements continue to increase, the methods and systems mentioned above cannot meet the current requirements for high-accuracy on-line measurement of key local features. Moreover, error control in such measurement systems has received limited study. Therefore, a combined measurement method that integrates a 3D scanner, a laser tracker, and an industrial robot is proposed for the large-scale 3D shape measurement of key local features, and on this basis, a novel calibration method is developed.

The remainder of the paper is structured as follows: Section 2 introduces the combined measurement method in detail. The calibration of the measurement system is described in Section 3. In Section 4, the proposed method is verified through calibration experiments and measurement experiments, and concluding remarks are provided in Section 5.

2. Measurement Principle

2.1. Combined Measurement Method

The proposed combined measurement system consists mainly of a laser tracker, a 3D scanner, and a robot, as shown in Figure 1. Based on the fringe projection technique, the 3D scanner captures local 3D shape information with high accuracy. A laser tracker is used for global measurement so that the overall and local measurement accuracies are consistent. Additionally, the industrial robot is introduced as an orienting device to improve efficiency: it carries the 3D scanner to the discrete regions to be measured. The laser tracker then measures the spherically mounted reflectors (SMRs) rigidly mounted on the base of the 3D scanner, from which the position and orientation of the 3D scanner are acquired. In this way, all acquired data are unified in the world coordinate system defined by the laser tracker.

Figure 1.

Hardware architecture and the combined measurement scheme.

A calibration method is proposed to improve the overall measurement accuracy of the system. It determines the relationship between the intermediate coordinate system attached to the 3D scanner and the measurement coordinate system of the 3D scanner, and it is based on the coordinate optimization of common points (COCP) and the coordinate optimization of global control points (COGP). In the calibration process, the 3D scanner and the laser tracker measure the common points from different positions to acquire redundant data, which are first refined by COCP. Then, the 3D data of the target are obtained with the 3D scanner from multiple views, while the laser tracker acquires the position and orientation of the 3D scanner at each position. Finally, COGP, based on an angular constraint, is applied to control the measurement errors and improve the accuracy of the measured position and orientation of the 3D scanner.

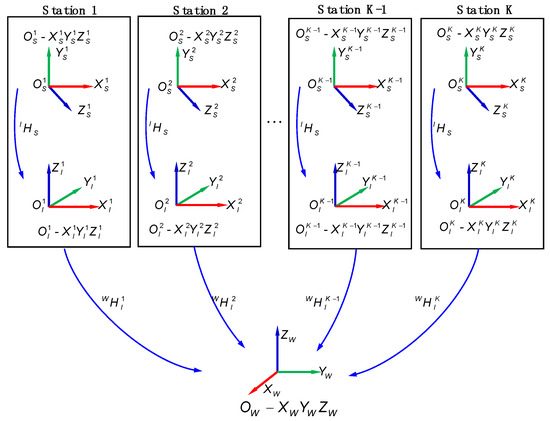

2.2. Measurement Model

The coordinate systems of the proposed system comprise the 3D scanner measurement coordinate system (SCS), the intermediate coordinate system (ICS), and the world coordinate system (WCS). ICS is created on the 3D scanner frame and serves as a fixed reference frame for SCS, allowing the laser tracker to determine the position and orientation of the 3D scanner. To obtain complete data for the key local features on the support mounting surface, measurements are taken at several stations, and the acquired multiview data are then converted into WCS. The schematic of the combined measurement model is shown in Figure 2.

Figure 2.

Schematic of the combined measurement model.

With $\mathbf{P}$ being a visual point in the workspace, the coordinate mapping model between WCS and SCS is expressed as follows:

$$\mathbf{P}_W = \mathbf{T}_{WI}\,\mathbf{T}_{IS}\,\mathbf{P}_S \tag{1}$$

where $\mathbf{P}_W$ and $\mathbf{P}_S$ are the homogeneous coordinates of the visual point $\mathbf{P}$ in WCS and SCS, respectively, and $\mathbf{T}_{WI}$ and $\mathbf{T}_{IS}$ are 4 × 4 homogeneous transformation matrices. $\mathbf{T}_{WI}$ maps ICS coordinates into WCS and changes with every scanner pose, whereas $\mathbf{T}_{IS}$, which maps SCS coordinates into ICS, remains invariant during the measurement process. Because determining $\mathbf{T}_{IS}$ is an extrinsic parameter calibration problem, an accurate calibration method is introduced in the following section.
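Equation (1) can be illustrated with a short NumPy sketch that maps scanner-frame points into the world frame and merges several stations. The function names `scanner_to_world` and `merge_views`, the argument names `t_wi` (ICS to WCS, one per station) and `t_is` (the fixed SCS-to-ICS extrinsic matrix), and all numeric values are hypothetical and only for illustration.

```python
import numpy as np


def to_homogeneous(points: np.ndarray) -> np.ndarray:
    """Append a column of ones to an (N, 3) array, giving (N, 4) homogeneous points."""
    return np.hstack([points, np.ones((points.shape[0], 1))])


def scanner_to_world(points_s: np.ndarray, t_wi: np.ndarray, t_is: np.ndarray) -> np.ndarray:
    """Apply P_W = T_WI @ T_IS @ P_S to every row of an (N, 3) scanner-frame array."""
    p_w = (t_wi @ t_is @ to_homogeneous(points_s).T).T
    return p_w[:, :3]


def merge_views(views, t_is: np.ndarray) -> np.ndarray:
    """Unify (points_s, t_wi) pairs from several stations; T_IS is shared by all of them."""
    return np.vstack([scanner_to_world(p, t_wi, t_is) for p, t_wi in views])


# Hypothetical example: T_IS is a 100 mm offset along z,
# and the second station is shifted by 500 mm along x.
t_is = np.eye(4)
t_is[2, 3] = 100.0
t_wi_a = np.eye(4)
t_wi_b = np.eye(4)
t_wi_b[0, 3] = 500.0
cloud = np.array([[0.0, 0.0, 0.0], [1.0, 2.0, 3.0]])
merged = merge_views([(cloud, t_wi_a), (cloud, t_wi_b)], t_is)
print(merged)
```

Only the ICS-to-WCS matrix changes from station to station, which is why the extrinsic matrix is passed once and reused for every view.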

3. Calibration of the Measurement System

Extrinsic parameter calibration establishes a high-precision model of the coordinate transformation between SCS and ICS. This calibration must be performed before the combined measurement system is applied to large-scale metrology, and it is not repeated during the measurement process. Therefore, an accurate extrinsic calibration result is critical to the overall measurement accuracy of the proposed system.

To improve the overall measurement accuracy and minimize the measurement errors, an extrinsic parameter calibration method based on COCP and COGP is proposed. First, COCP is used to optimize the coordinates of the common points. Then, COGP, based on an angular constraint, is used to minimize the measurement errors and improve the accuracy of the measured position and orientation of the 3D scanner.

3.1. Calibration Principle

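Let $\mathbf{P}_A$ and $\mathbf{P}_B$ denote the homogeneous coordinates of the same point in two coordinate systems A and B. The relationship between them can be expressed as follows:

$$\mathbf{P}_B = \mathbf{T}\,\mathbf{P}_A, \qquad \mathbf{T} = \begin{bmatrix} \mathbf{R}(\alpha,\beta,\gamma) & \mathbf{t} \\ \mathbf{0}^{\mathsf T} & 1 \end{bmatrix} \tag{2}$$

where $\mathbf{T}$ is the homogeneous transformation matrix, $\mathbf{R}$ is a 3 × 3 rotation matrix parameterized by the Cardan angles $\alpha$, $\beta$, and $\gamma$, and $\mathbf{t}$ is a 3 × 1 translation vector.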
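The text does not state the rotation order of its Cardan angles. The sketch below assumes, purely for illustration, the common x-y-z convention R = Rz(γ)·Ry(β)·Rx(α) with angles in radians, assembles the homogeneous matrix of Equation (2), and recovers the angles again. The function names are hypothetical.

```python
import numpy as np


def cardan_to_rotation(alpha: float, beta: float, gamma: float) -> np.ndarray:
    """Assumed convention: R = Rz(gamma) @ Ry(beta) @ Rx(alpha), angles in radians."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    cb, sb = np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, ca, -sa], [0.0, sa, ca]])
    ry = np.array([[cb, 0.0, sb], [0.0, 1.0, 0.0], [-sb, 0.0, cb]])
    rz = np.array([[cg, -sg, 0.0], [sg, cg, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def homogeneous(alpha: float, beta: float, gamma: float, t) -> np.ndarray:
    """Assemble the 4 x 4 matrix T = [[R, t], [0, 1]] of Equation (2)."""
    T = np.eye(4)
    T[:3, :3] = cardan_to_rotation(alpha, beta, gamma)
    T[:3, 3] = t
    return T


def rotation_to_cardan(R: np.ndarray):
    """Inverse of cardan_to_rotation for the same convention, valid when |beta| < pi/2."""
    beta = -np.arcsin(R[2, 0])
    alpha = np.arctan2(R[2, 1], R[2, 2])
    gamma = np.arctan2(R[1, 0], R[0, 0])
    return alpha, beta, gamma


# Hypothetical angles (rad) and translation (mm).
T = homogeneous(0.10, -0.20, 0.30, [10.0, 20.0, 30.0])
R = T[:3, :3]
print(np.allclose(R.T @ R, np.eye(3)), np.isclose(np.linalg.det(R), 1.0))
print(rotation_to_cardan(R))
```

With another rotation order, only `cardan_to_rotation` and `rotation_to_cardan` would change; the six parameters (three angles, three translations) are the same either way.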

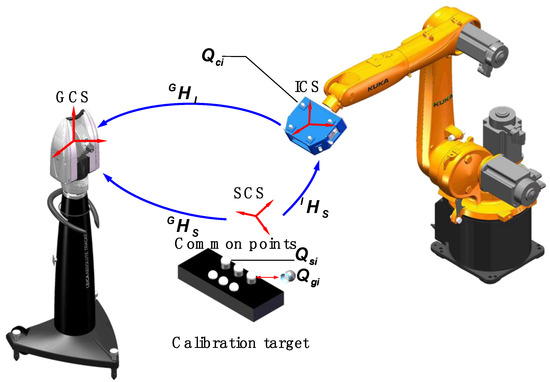

The combined calibration system consists of a 3D scanner, a laser tracker, an industrial robot, and a calibration target. The principle of extrinsic parameter calibration is shown in Figure 3. First, to establish the transformation between SCS and the laser tracker measurement coordinate system (GCS), the laser tracker and the 3D scanner both measure the common points arranged on the calibration target. Then, the laser tracker locates and tracks the position and orientation of the 3D scanner by measuring the coordinates of the global control points, which establishes the transformation matrix between GCS and ICS. Finally, the extrinsic parameter matrix is calculated from these two transformation matrices.

Figure 3.

Principle of extrinsic parameter calibration.

Four or more noncollinear SMRs mounted on the 3D scanner, known as global control points, are denoted as $Q_j$ ($j = 1, \ldots, n$, $n \ge 4$), and their homogeneous coordinates in ICS are denoted as $\mathbf{Q}_{I,j}$. To ensure the accuracy of extrinsic parameter calibration, two interchangeable groups of observation targets are arranged on the calibration target: SMRs for the laser tracker and standard ceramic spheres for the 3D scanner. Because a standard ceramic sphere takes the place of an SMR in the same nest, the center of the SMR essentially coincides with the center of the standard ceramic sphere. The homogeneous coordinates of these common points are denoted as $\mathbf{P}_{G,i}$ in the laser tracker measurement coordinate system (GCS) and as $\mathbf{P}_{S,i}$ in SCS, and the following relationship exists between them:

$$\mathbf{P}_{G,i} = \mathbf{T}_{GS}\,\mathbf{P}_{S,i} \tag{3}$$

where $\mathbf{T}_{GS}$ is the transformation matrix from SCS to GCS.

The homogeneous coordinates of $Q_j$ in GCS are denoted as $\mathbf{Q}_{G,j}$, and the relationship between $\mathbf{Q}_{I,j}$ in ICS and $\mathbf{Q}_{G,j}$ in GCS is expressed as follows:

$$\mathbf{Q}_{G,j} = \mathbf{T}_{GI}\,\mathbf{Q}_{I,j} \tag{4}$$

where $\mathbf{T}_{GI}$ is the transformation matrix from ICS to GCS.

A point in SCS can be mapped into GCS either directly or through ICS, so $\mathbf{T}_{GS} = \mathbf{T}_{GI}\mathbf{T}_{IS}$, where $\mathbf{T}_{IS}$ is the extrinsic parameter matrix from SCS to ICS. According to Equations (2)–(4), $\mathbf{T}_{IS}$ can be calculated as follows:

$$\mathbf{T}_{IS} = \mathbf{T}_{GI}^{-1}\,\mathbf{T}_{GS} \tag{5}$$

Improving the accuracy of $\mathbf{T}_{GS}$ and $\mathbf{T}_{GI}$ is thus the key to improving the accuracy of $\mathbf{T}_{IS}$. However, the measurement errors of the laser tracker and the 3D scanner propagate into errors in the transformation parameters. Therefore, to improve the accuracy of extrinsic parameter calibration by minimizing the measurement errors, the coordinate optimization method of the common points is proposed in Section 3.2, and the coordinate optimization method of the global control points is proposed in Section 3.3.
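Equation (5) only requires inverting a rigid transformation, which can be done in closed form without a general matrix inverse. The NumPy sketch below uses the hypothetical names `t_gi` (ICS to GCS) and `t_gs` (SCS to GCS) with synthetic values, and checks that the recovered extrinsic matrix reproduces the known one.

```python
import numpy as np


def invert_rigid(T: np.ndarray) -> np.ndarray:
    """Closed-form inverse of [[R, t], [0, 1]]: [[R^T, -R^T t], [0, 1]]."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def extrinsic_from_transforms(t_gi: np.ndarray, t_gs: np.ndarray) -> np.ndarray:
    """Equation (5): T_IS = T_GI^-1 @ T_GS."""
    return invert_rigid(t_gi) @ t_gs


def rigid(angle_z: float, t) -> np.ndarray:
    """Synthetic rigid transform: rotation about z by angle_z (rad) plus translation t (mm)."""
    c, s = np.cos(angle_z), np.sin(angle_z)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = t
    return T


# Hypothetical ground truth: choose T_GI and T_IS, then form T_GS = T_GI @ T_IS.
t_gi = rigid(0.7, [1500.0, -200.0, 300.0])
t_is_true = rigid(-0.2, [35.0, 10.0, -80.0])
t_gs = t_gi @ t_is_true

t_is = extrinsic_from_transforms(t_gi, t_gs)
p_s = np.array([5.0, -3.0, 120.0, 1.0])  # homogeneous scanner point
print(np.allclose(t_is, t_is_true), np.allclose(t_gs @ p_s, t_gi @ t_is @ p_s))
```

In practice the two input matrices would be estimated from measured points rather than known exactly, which is why their accuracy dominates that of the extrinsic result.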

3.2. Optimization of the Coordinates of Common Points

The common points arranged on the calibration target are measured by both the laser tracker and the 3D scanner. COCP is proposed to minimize the resulting measurement errors and thereby optimize the six parameters of the transformation between SCS and GCS: the three Cardan angles of the rotation matrix and the three components of the translation vector.

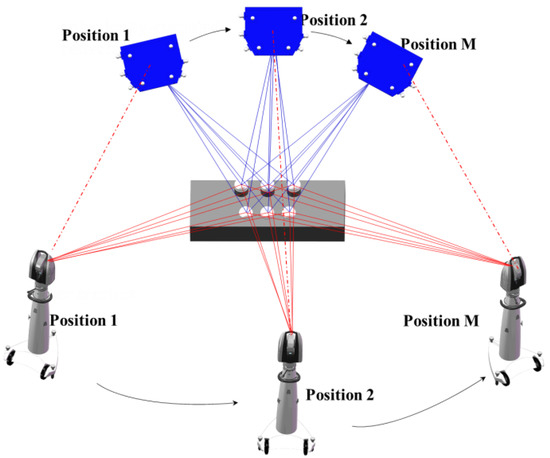

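Figure 4 shows the common points measured at M positions. The coordinate system of the first position is taken as the reference coordinate system. At position $m$ ($m = 1, \ldots, M$), the Cartesian coordinates of a common point obtained by the laser tracker and the 3D scanner are denoted as $\mathbf{X}_G^{m}$ and $\mathbf{X}_S^{m}$, respectively. If measurement errors are considered, Equation (2) can be rewritten as follows:

$$\mathbf{X}_G^{m} + \mathbf{V}_G^{m} = \mathbf{R}_m\left(\mathbf{X}_S^{m} + \mathbf{V}_S^{m}\right) + \mathbf{t}_m \tag{6}$$

where $\mathbf{V}_G^{m}$ and $\mathbf{V}_S^{m}$ represent the correction values for the common points measured by the laser tracker and the 3D scanner, respectively, and $\mathbf{R}_m$ and $\mathbf{t}_m$ are the rotation matrix and translation vector between the two instruments at position $m$. The simultaneous equations of the measurement errors are as follows:

$$\mathbf{V}_G^{m} - \mathbf{R}_m\mathbf{V}_S^{m} = \mathbf{R}_m\mathbf{X}_S^{m} + \mathbf{t}_m - \mathbf{X}_G^{m}, \qquad m = 1, \ldots, M \tag{7}$$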

Figure 4.

Schematic of the adjustment optimization.

The rotation matrix and translation vector between the laser tracker and the 3D scanner at each position can be computed by the Procrustes method [27]. On this basis, the correction values of the coordinates of the common points can be calculated by the rank-defect network adjustment algorithm [28]. As a result, the coordinate values of the common points are optimized, and the accuracy of the transformation parameters between SCS and GCS is improved.
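Reference [27] is cited for the Procrustes step, but its exact variant is not given here. As an illustration only, the sketch below uses the standard SVD-based orthogonal Procrustes (Kabsch) solution for a rotation and translation without scale, applied to six synthetic common points that mimic the six nests of the calibration target. It does not implement the rank-defect network adjustment [28]; the coordinates, noise level, and function names are hypothetical.

```python
import numpy as np


def fit_rigid(src: np.ndarray, dst: np.ndarray):
    """Least-squares R, t with dst ~= src @ R.T + t for matched (N, 3) arrays (N >= 3, noncollinear)."""
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)      # 3 x 3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_dst - R @ c_src
    return R, t


def residuals(src, dst, R, t):
    """Per-point distance between the transformed src points and dst."""
    return np.linalg.norm(src @ R.T + t - dst, axis=1)


# Hypothetical common points (mm) in SCS and a known SCS -> GCS transform.
p_s = np.array([[0, 0, 0], [150, 0, 0], [300, 0, 10],
                [0, 120, 5], [150, 120, 0], [300, 120, 15]], dtype=float)
c, s = np.cos(0.4), np.sin(0.4)
R_true = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([2000.0, -350.0, 120.0])

rng = np.random.default_rng(1)
p_g = p_s @ R_true.T + t_true + rng.normal(scale=0.005, size=p_s.shape)  # ~5 um noise

R, t = fit_rigid(p_s, p_g)
err = residuals(p_s, p_g, R, t)
print(f"max {err.max():.4f} mm, mean {err.mean():.4f} mm")
```

The per-point residuals computed here correspond to coordinate transformation errors of the kind reported later for the calibration, although the values in this example are synthetic.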

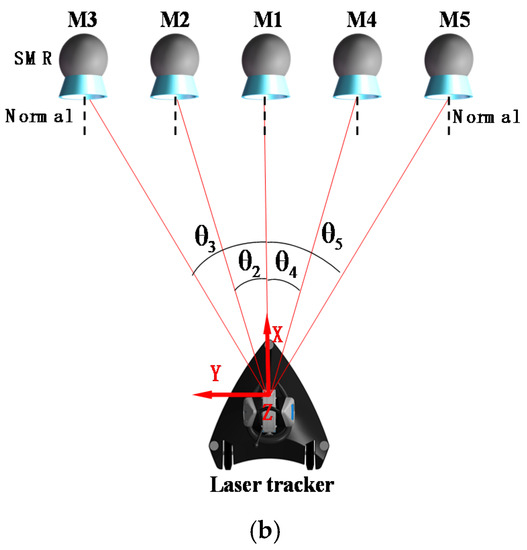

3.3. Optimization of the Coordinates of Global Control Points

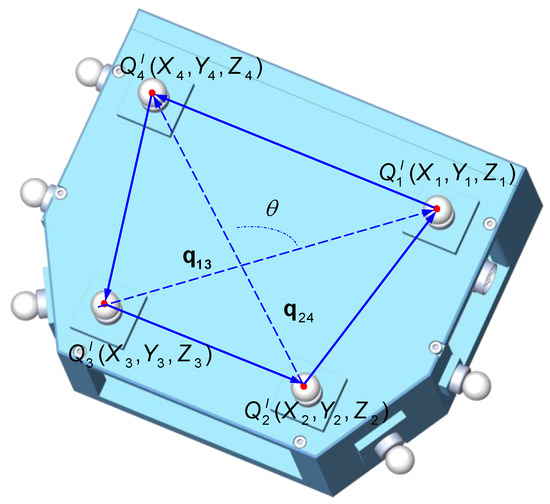

Because of environmental uncertainties and instrument instability, errors in measuring the global control points are unavoidable. To address these unknown and uncontrollable errors and to optimize the transformation parameters between GCS and ICS, COGP based on the angular constraint is proposed to obtain the correction values of the coordinates of the global control points. Because the angle between two vectors in Euclidean space is independent of the coordinate system [29], the fixed geometry of the global control points on the 3D scanner can be fully exploited. Among the four global control points, any two points define a vector, and any two such vectors (for example, $\mathbf{a}$ and $\mathbf{b}$) form an angle $\theta$, as shown in Figure 5. The four points therefore define six vectors, which form 15 angles.

Figure 5.

Schematic of the spatial angle.
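The count of six vectors and 15 angles, and the coordinate-system independence the method relies on, can be checked numerically. The sketch below builds a vector from every pair of four hypothetical control points, evaluates each angle with the arc cosine of the normalized dot product, and confirms that the angles do not change under an arbitrary rigid motion. The point coordinates and function name are illustrative assumptions.

```python
import itertools

import numpy as np


def control_point_angles(points: np.ndarray) -> np.ndarray:
    """Angles (rad) between every pair of vectors formed by pairs of control points."""
    vectors = [points[j] - points[i]
               for i, j in itertools.combinations(range(len(points)), 2)]
    angles = []
    for a, b in itertools.combinations(vectors, 2):
        cos_theta = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
        angles.append(np.arccos(np.clip(cos_theta, -1.0, 1.0)))  # clip guards rounding
    return np.array(angles)


# Four hypothetical, noncoplanar control points on the scanner (mm).
q = np.array([[0.0, 0.0, 0.0], [120.0, 0.0, 0.0], [0.0, 90.0, 0.0], [40.0, 30.0, 60.0]])

# An arbitrary rigid motion: rotation about x by 0.5 rad plus a translation.
c, s = np.cos(0.5), np.sin(0.5)
R = np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
q_moved = q @ R.T + np.array([800.0, -40.0, 250.0])

theta = control_point_angles(q)
print(len(theta), np.allclose(theta, control_point_angles(q_moved)))
```

Because the angles are the same in any frame, the CMM-calibrated values and the values measured by the laser tracker can be compared directly without first registering the two frames.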

A CMM is used to calibrate the angle values, which serve as the nominal angles. The angle error equations are obtained from the differences between the actual angles measured on site and the nominal angles, and the normal equation is then obtained by the least squares method.

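For two nonzero vectors $\mathbf{a}$ and $\mathbf{b}$, each formed by two global control points, the angle $\theta$ between them satisfies

$$\cos\theta = \frac{\mathbf{a}\cdot\mathbf{b}}{\lVert\mathbf{a}\rVert\,\lVert\mathbf{b}\rVert} \tag{8}$$

Therefore, the angle can be obtained with the arc cosine function as follows:

$$\theta = \arccos\left(\frac{\mathbf{a}\cdot\mathbf{b}}{\lVert\mathbf{a}\rVert\,\lVert\mathbf{b}\rVert}\right) \tag{9}$$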

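Equation (9) is expanded in a Taylor series about the measured coordinates of the global control points, and the second- and higher-order terms are ignored. The linearized angular constraint is therefore expressed as follows:

$$\theta_k = \theta_k^{0} + \sum_{j=1}^{4}\left(\frac{\partial\theta_k}{\partial x_j}\,\delta x_j + \frac{\partial\theta_k}{\partial y_j}\,\delta y_j + \frac{\partial\theta_k}{\partial z_j}\,\delta z_j\right) = \theta_k^{0} + \mathbf{b}_k^{\mathsf T}\hat{\mathbf{x}}, \qquad k = 1, \ldots, 15 \tag{10}$$

where $\theta_k^{0}$ is the $k$-th angle computed from the measured coordinates, $\delta x_j$, $\delta y_j$, and $\delta z_j$ are the optimized correction values of the coordinates of the $j$-th global control point, $\hat{\mathbf{x}} = [\delta x_1, \delta y_1, \delta z_1, \ldots, \delta x_4, \delta y_4, \delta z_4]^{\mathsf T}$ is the vector of correction values, and $\mathbf{b}_k$ contains the partial derivatives evaluated at the measured coordinates.

The angle error equation is expressed as follows:

$$v_k = \mathbf{b}_k^{\mathsf T}\hat{\mathbf{x}} - \left(\bar{\theta}_k - \theta_k^{0}\right) \tag{11}$$

where $\bar{\theta}_k$ are the nominal angles and $\theta_k^{0}$ are the actual (measured) angles.

Combining Equations (10) and (11) for all 15 angles, the error equations can be rewritten in matrix form as follows:

$$\mathbf{V} = \mathbf{B}\hat{\mathbf{x}} - \mathbf{l} \tag{12}$$

where $\mathbf{V}$ is the residual vector, $\mathbf{B}$ is the 15 × 12 coefficient matrix whose rows are $\mathbf{b}_k^{\mathsf T}$, and $\mathbf{l}$, with elements $\bar{\theta}_k - \theta_k^{0}$, is the angle error vector.

Then, the normal equation is as follows:

$$\mathbf{B}^{\mathsf T}\mathbf{P}\mathbf{B}\,\hat{\mathbf{x}} = \mathbf{B}^{\mathsf T}\mathbf{P}\,\mathbf{l} \tag{13}$$

where $\mathbf{P}$ is the weight matrix.

The objective function for finding the best coordinate estimates can be expressed as follows:

$$\mathbf{V}^{\mathsf T}\mathbf{P}\mathbf{V} = \min \tag{14}$$

The angle adjustment obtains the optimal estimate by solving the error equations in the least squares sense. However, the traditional least squares method requires the coefficient matrix to be nonsingular, that is, of full rank. The coefficient matrix $\mathbf{N} = \mathbf{B}^{\mathsf T}\mathbf{P}\mathbf{B}$ in Equation (13) is ill-conditioned, with a very large condition number $\operatorname{cond}(\mathbf{N}) = \lambda_{\max}/\lambda_{\min}$, where $\lambda_{\max}$ and $\lambda_{\min}$ are the maximum and minimum eigenvalues of $\mathbf{N}$, so the ordinary least squares solution is extremely unstable. This is to be expected: the angles do not change when all four control points undergo a common rotation, translation, or uniform scaling, so these seven degrees of freedom are not constrained by the angle observations.

To obtain a stable optimal solution, a two-objective optimization formula can be constructed as follows:

$$\mathbf{V}^{\mathsf T}\mathbf{P}\mathbf{V} = \min, \qquad \hat{\mathbf{x}}^{\mathsf T}\hat{\mathbf{x}} = \min \tag{15}$$

According to Tikhonov's regularization method, the objective function of Equation (15) based on the ridge estimation algorithm is given as follows:

$$\Phi(\hat{\mathbf{x}}) = \mathbf{V}^{\mathsf T}\mathbf{P}\mathbf{V} + k\,\hat{\mathbf{x}}^{\mathsf T}\mathbf{I}\,\hat{\mathbf{x}} \tag{16}$$

where the non-negative parameter $k$ is the ridge estimation parameter and $\mathbf{I}$ is the identity matrix. Taking the gradient of $\Phi$ with respect to $\hat{\mathbf{x}}$ and substituting Equation (12), we have

$$\frac{\partial\Phi}{\partial\hat{\mathbf{x}}} = 2\mathbf{B}^{\mathsf T}\mathbf{P}\left(\mathbf{B}\hat{\mathbf{x}} - \mathbf{l}\right) + 2k\,\hat{\mathbf{x}} \tag{17}$$

According to the extremum condition, Equation (17) is set equal to 0. Therefore, the final solution can be expressed as follows:

$$\hat{\mathbf{x}} = \left(\mathbf{B}^{\mathsf T}\mathbf{P}\mathbf{B} + k\,\mathbf{I}\right)^{-1}\mathbf{B}^{\mathsf T}\mathbf{P}\,\mathbf{l} \tag{18}$$

where the damping term $k\mathbf{I}$ added to the main diagonal of the coefficient matrix in Equation (18) overcomes the ill-conditioning of the coefficient matrix, so a stable solution can be obtained.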

The ridge estimation method turns a singular coefficient matrix into a nonsingular one and ensures a stable solution of the ill-conditioned equation. An appropriate ridge parameter, which reduces the condition number and turns the ill-conditioned equation into a well-conditioned one, can be selected by the L-curve method [30]. As a result, the coordinate values of the global control points are optimized, and the accuracy of the transformation parameters between GCS and ICS is improved.
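The angle adjustment with ridge estimation (Equations (10) to (18)) can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: the partial derivatives are taken by central finite differences instead of analytic formulas, the weight matrix is the identity, only one linearized step is performed, and the ridge parameter `k` is fixed by hand rather than chosen with the L-curve method. Coordinates, noise level, and function names are hypothetical.

```python
import itertools

import numpy as np


def angles(points: np.ndarray) -> np.ndarray:
    """The 15 angles (rad) between the 6 vectors defined by 4 control points."""
    vecs = [points[j] - points[i] for i, j in itertools.combinations(range(4), 2)]
    out = []
    for a, b in itertools.combinations(vecs, 2):
        c = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
        out.append(np.arccos(np.clip(c, -1.0, 1.0)))
    return np.array(out)


def jacobian(points: np.ndarray, h: float = 1e-5) -> np.ndarray:
    """15 x 12 coefficient matrix B of partial derivatives, by central differences."""
    x0 = points.ravel()
    B = np.zeros((15, x0.size))
    for m in range(x0.size):
        step = np.zeros_like(x0)
        step[m] = h
        B[:, m] = (angles((x0 + step).reshape(4, 3))
                   - angles((x0 - step).reshape(4, 3))) / (2 * h)
    return B


def ridge_correction(measured: np.ndarray, nominal_angles: np.ndarray, k: float):
    """One ridge step, Eq. (18) with P = I: x = (B^T B + k I)^-1 B^T l."""
    B = jacobian(measured)
    l = nominal_angles - angles(measured)  # angle error vector
    N = B.T @ B                            # coefficient matrix of the normal equation
    x = np.linalg.solve(N + k * np.eye(N.shape[0]), B.T @ l)
    return measured + x.reshape(4, 3), N


# Hypothetical CMM-calibrated geometry and a noisy on-site measurement (mm).
nominal = np.array([[0.0, 0.0, 0.0], [120.0, 0.0, 0.0], [0.0, 90.0, 0.0], [40.0, 30.0, 60.0]])
rng = np.random.default_rng(0)
measured = nominal + rng.normal(scale=0.01, size=nominal.shape)

k = 1e-8  # hand-picked ridge parameter (assumption; the text uses the L-curve method)
corrected, N = ridge_correction(measured, angles(nominal), k)
print(f"cond(N) = {np.linalg.cond(N):.1e}, cond(N + kI) = {np.linalg.cond(N + k * np.eye(12)):.1e}")
print("max angle error before:", np.abs(angles(measured) - angles(nominal)).max())
print("max angle error after: ", np.abs(angles(corrected) - angles(nominal)).max())
```

Because angles only constrain the shape of the control-point group, the ridge term selects the smallest correction that restores the nominal angles and leaves the rigid-body and scale components essentially untouched.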

4. Experiments and Discussion

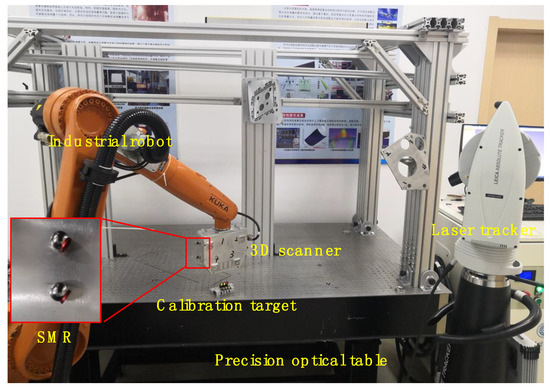

The extrinsic parameter calibration was carried out according to the principle detailed in Section 3.1, and the calibration method of Section 3 was verified through calibration experiments and measurement experiments. The experimental setup of the combined measurement system is shown in Figure 6. The 3D scanner was mounted on a KUKA KR10R1420 6-DOF industrial robot, and several SMRs mounted on the 3D scanner allowed the laser tracker to track its position and orientation. The laser tracker was a Leica AT960 with an accuracy of ±(0.015 mm + 0.006 mm/m), connected to the computer via Gigabit Ethernet (GbE). The visual sensor was an off-the-shelf binocular structured light scanner with a precision of 0.012 mm, a resolution of up to 0.020 mm, and a scan range of 30 × 40 × 25 mm.
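The laser tracker accuracy quoted above grows linearly with distance. The small helper below (a hypothetical name, using the constant and per-metre values quoted above) evaluates the bound at a few ranges, including the 4 m working distance of the validation experiments.

```python
def tracker_bound_mm(distance_m: float, constant_mm: float = 0.015,
                     per_metre_mm: float = 0.006) -> float:
    """Distance-dependent accuracy bound: constant + per-metre term times distance (mm)."""
    return constant_mm + per_metre_mm * distance_m


for d in (1.0, 2.0, 4.0):
    print(f"{d:.0f} m: ±{tracker_bound_mm(d):.3f} mm")
```

At 4 m the bound is 0.015 + 0.006 × 4 = 0.039 mm, which illustrates why the distance-dependent term matters over the working volume.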

Figure 6.

Overview of the experimental setup of the system.

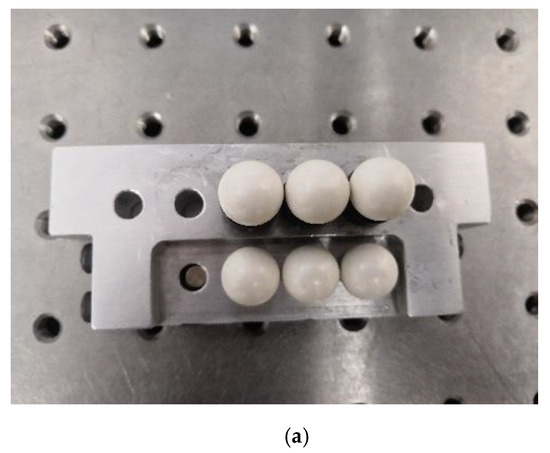

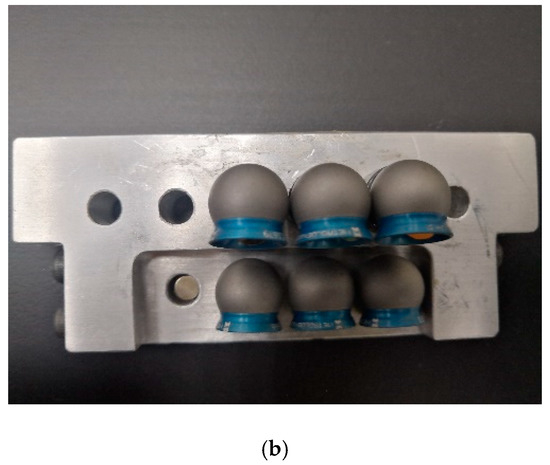

Figure 7 shows the calibration target designed for extrinsic parameter calibration and the experiments. Six sophisticated magnetic nests (SMNs), which hold either the SMRs of the laser tracker or the standard ceramic spheres of the 3D scanner, were rigidly mounted on an aluminum plate. The standard ceramic spheres and the SMRs were first inspected on a CMM, and the maximum deviation of the sphere diameters was found to be about 0.003 mm. Therefore, when a standard ceramic sphere replaces an SMR in the same nest, its center can be assumed to coincide with the SMR center. All experiments were performed in a stable laboratory environment, with the temperature between 22 and 23 °C and the relative humidity between 55% and 60%.
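The text does not specify how the scanner determines the centers of the standard ceramic spheres. One common approach, assumed here only for illustration, is an algebraic least-squares sphere fit to the scanned surface points, which works even when only a cap of the sphere is visible. The function name, sphere radius, and sample points are hypothetical.

```python
import numpy as np


def fit_sphere(points: np.ndarray):
    """Algebraic least-squares sphere fit to (N, 3) points, N >= 4 and not coplanar.

    Solves x^2 + y^2 + z^2 = 2ax + 2by + 2cz + d for (a, b, c, d);
    the center is (a, b, c) and the radius is sqrt(d + a^2 + b^2 + c^2).
    """
    A = np.hstack([2.0 * points, np.ones((points.shape[0], 1))])
    f = np.sum(points ** 2, axis=1)
    (a, b, c, d), *_ = np.linalg.lstsq(A, f, rcond=None)
    center = np.array([a, b, c])
    return center, np.sqrt(d + center @ center)


# Hypothetical partial scan: 200 exact points on the upper half of a 6.35 mm sphere.
rng = np.random.default_rng(2)
true_center = np.array([10.0, 20.0, 30.0])
true_radius = 6.35
dirs = rng.normal(size=(200, 3))
dirs[:, 2] = np.abs(dirs[:, 2])                      # keep only the upper hemisphere
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)  # unit directions
cap = true_center + true_radius * dirs
center, radius = fit_sphere(cap)
print(center, radius)
```

With real scan data, noise and outliers would make a robust or geometric (distance-based) refinement worthwhile; the algebraic fit is a convenient closed-form starting point.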

Figure 7.

Calibration target: (a) standard ceramic spheres; (b) spherically mounted reflectors.

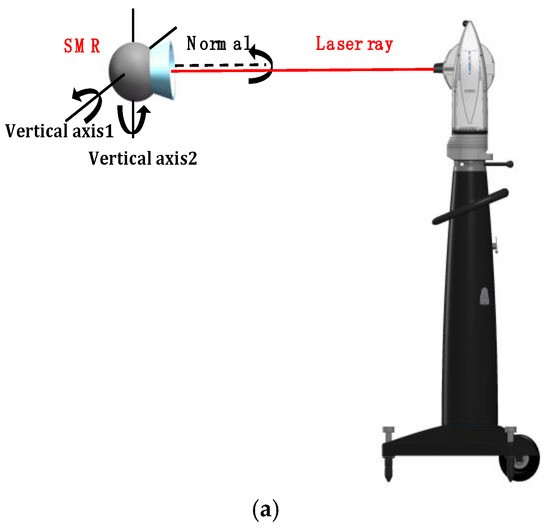

4.1. Incidence Angle Experiment

To minimize measurement errors, the influence of the incidence angle of the laser beam on the measurement accuracy of the laser tracker was analyzed under different conditions. In Figure 8a, the SMR is installed at the same height as the laser tracker. The SMR was rotated about its normal, vertical axis 1, and vertical axis 2, and for each orientation, 100 measurements were collected and averaged. The results in Table 1 show that the error can reach 0.008 mm as the SMR pose changes. In Figure 8b, the SMR is also installed at the same height as the laser tracker, and five measuring points are distributed along a straight line parallel to the XOY plane of the laser tracker measurement coordinate system. Each point was measured 100 times within 2 m, and the average value was computed. The results of the analysis are shown in Table 2.

Figure 8.

Schematic of the incident angle of the laser ray: (a) incident angle of SMR; (b) incident angle of laser tracker.

Table 1.

Errors of the incident angle of the laser ray.

Table 2.

Results of the analysis of the angle measurement of the laser tracker.

In Table 2, the standard deviation of the X coordinate, $\sigma_X$, is close to the standard deviation of the length measurement, $\sigma_L$. However, the standard deviations of the Y and Z coordinates, $\sigma_Y$ and $\sigma_Z$, are relatively large. This indicates that the X coordinate is governed mainly by the distance measurement, whereas the Y and Z coordinates are governed mainly by the angle measurements; because the angle measurement accuracy of the laser tracker is relatively low, larger angles result in lower measurement accuracy. Angle measurement error is therefore the main factor affecting the measurement accuracy of the laser tracker, and measures that reduce the incidence angle error should be adopted to minimize the measurement errors during the measurement process.
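The reasoning above can be illustrated with a small Monte Carlo simulation of how range and angle noise propagate into Cartesian coordinates for a point lying on the tracker's X axis. The noise levels, distance, and function name are hypothetical and not taken from Table 2; the sketch only shows that the coordinate along the beam follows the range noise, while the transverse coordinates scale with distance times angular noise.

```python
import numpy as np


def simulate_xyz_std(distance_mm, sigma_range_mm, sigma_angle_rad, n=200_000, seed=0):
    """Sample spherical measurements of a point on the X axis and return (std_x, std_y, std_z)."""
    rng = np.random.default_rng(seed)
    d = distance_mm + rng.normal(0.0, sigma_range_mm, n)  # range (distance) measurement
    az = rng.normal(0.0, sigma_angle_rad, n)              # horizontal angle, nominally 0
    el = rng.normal(0.0, sigma_angle_rad, n)              # vertical angle, nominally 0
    x = d * np.cos(el) * np.cos(az)
    y = d * np.cos(el) * np.sin(az)
    z = d * np.sin(el)
    return x.std(), y.std(), z.std()


# Hypothetical values: 2 m range, 3 um range noise, 5 urad angle noise.
sx, sy, sz = simulate_xyz_std(2000.0, 0.003, 5e-6)
print(f"std X {sx:.4f} mm, std Y {sy:.4f} mm, std Z {sz:.4f} mm")
```

To first order, the transverse standard deviation is the distance times the angular noise (2000 mm × 5 µrad = 0.010 mm here), so it grows with range, whereas the along-beam term does not.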

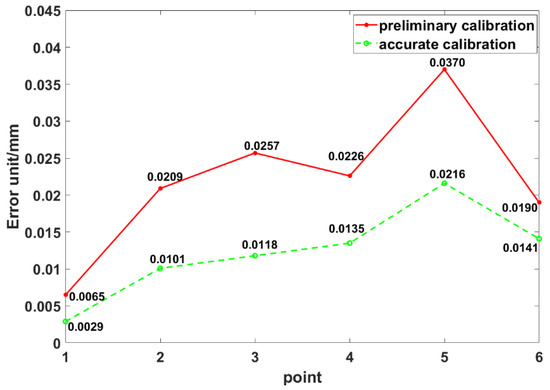

4.2. Calibration Experiment

Figure 6 shows the combined calibration system, which consists of a 3D scanner, a laser tracker, an industrial robot, and the calibration target. The common points were measured by the laser tracker and the 3D scanner at eight positions. First, SMRs were mounted in the SMNs of the calibration target and on the 3D scanner, and their centers were measured by the laser tracker. Then, the 3D scanner measured the standard ceramic spheres mounted in the SMNs of the calibration target. Finally, the optimized data and correction data of the common points for the laser tracker and the 3D scanner were obtained with the method introduced in Section 3.2, as shown in Tables 3 and 4.

Table 3.

Optimization data and correction data of common points of the laser tracker (unit: mm).

Table 4.

Optimization data and correction data of common points of the 3D scanner (unit: mm).

Table 5 shows the optimization data and correction data of global control points obtained according to the method introduced in Section 3.3.

Table 5.

Optimization data and correction data of global control points (unit: mm).

The distance errors between the measured and nominal distances of the global control points were calculated from the coordinates optimized by COGP. The results are shown in Table 6. The maximum deviation and the RMS error were reduced from 0.0179 and 0.0111 mm to 0.0115 and 0.0074 mm, respectively. This demonstrates that the angular constraint method improves the measurement accuracy of the global control points.
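The distance check can be written as a short NumPy routine that compares every pairwise distance between measured control points with the corresponding nominal distance and reports the maximum deviation and RMS error. The function names and example coordinates are hypothetical; they do not reproduce Table 6.

```python
import itertools

import numpy as np


def distance_errors(measured: np.ndarray, nominal: np.ndarray) -> np.ndarray:
    """Measured minus nominal distance for every pair of control points."""
    errors = []
    for i, j in itertools.combinations(range(len(measured)), 2):
        d_meas = np.linalg.norm(measured[i] - measured[j])
        d_nom = np.linalg.norm(nominal[i] - nominal[j])
        errors.append(d_meas - d_nom)
    return np.array(errors)


def max_and_rms(errors: np.ndarray):
    """Maximum absolute deviation and root-mean-square error."""
    return np.abs(errors).max(), np.sqrt(np.mean(errors ** 2))


# Hypothetical example: one of four points is displaced by 0.01 mm along x.
nominal = np.array([[0.0, 0.0, 0.0], [100.0, 0.0, 0.0], [0.0, 100.0, 0.0], [0.0, 0.0, 100.0]])
measured = nominal.copy()
measured[1, 0] += 0.01
max_dev, rms = max_and_rms(distance_errors(measured, nominal))
print(f"max {max_dev:.4f} mm, RMS {rms:.4f} mm")
```

Four points give six distances, so a single displaced point affects three of them, which is why the RMS value is smaller than the maximum deviation.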

Table 6.

Distance errors between global control points with angle optimization (unit: mm).

With the optimized data of the global control points, an accurate result of the transformation matrix between GCS and ICS is obtained as follows:

With all the data above, the accurate result of the extrinsic parameter calibration can be calculated according to Equation (5) as follows:

As shown in Figure 9, with the accurate calibration result, the maximum and mean errors of the coordinate transformation were reduced from 0.037 and 0.022 mm to 0.021 and 0.0122 mm, respectively, that is, by about 43% and 45%. These results demonstrate that the proposed calibration method significantly improves the calibration accuracy.

Figure 9.

Errors of the coordinate transformation after preliminary and accurate calibration.

4.3. Measurement Experiment

After the extrinsic parameter calibration, further experiments were conducted to verify the performance and accuracy of the proposed system. Section 4.3.1 describes how the performance of the system was assessed with a support measurement test, and Section 4.3.2 describes the verification of the measurement accuracy.

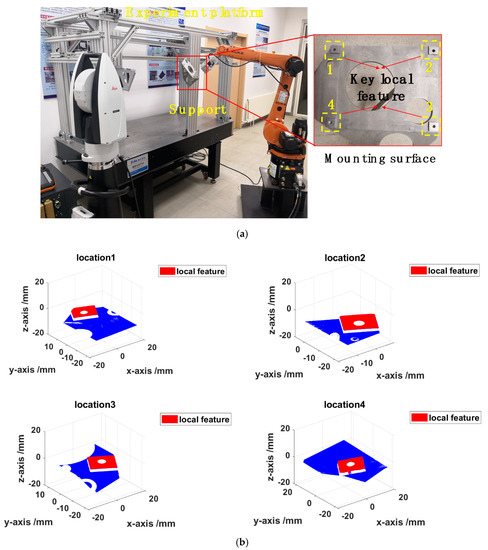

4.3.1. Support Measurement Test

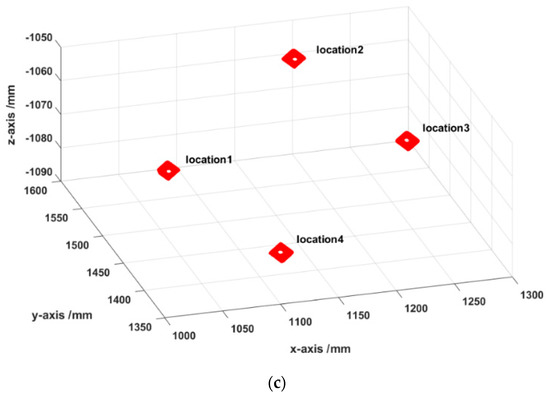

To measure the key local features of the support mounting surface, with a size of 300 × 300 × 100 mm, the proposed combined measurement system was applied to a support fixed on the experimental platform in the laboratory, as shown in Figure 10a. First, the robot moved the 3D scanner to each discrete region to be measured, and the 3D scanner scanned the region. Meanwhile, the laser tracker measured the SMRs fixed on the 3D scanner to obtain its position and orientation. In this way, the 3D information of the key local features was obtained by the 3D scanner at different robot positions. Figure 10b shows the 3D point clouds obtained at each measurement position. The 3D point clouds of the key local features were then preprocessed and unified into the world frame according to Equation (1), as shown in Figure 10c. This test demonstrates that the combined measurement system can be applied to measure the key local features of the support mounting surface.

Figure 10.

(a) Experimental setup for measuring the key local features. (b) Surface point clouds from four measurements. (c) Reconstructed point clouds of the key local features.

4.3.2. Accuracy Verification

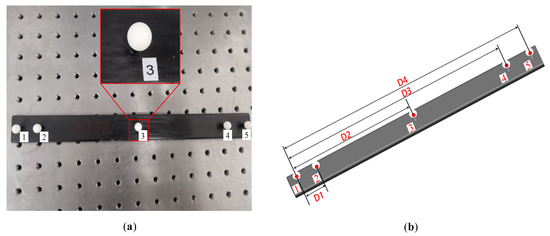

To verify the measurement accuracy of the system, a metric tool that consisted of five homogeneous and standard ceramic spheres with approximate diameter was designed and adopted, as shown in Figure 11. The distance between the centers of two spheres was measured on the CMM, and the results are as follows: D1 = 24.7581 mm, D2 = 149.8429 mm, D3 = 269.7165 mm, and D4 = 299.5461 mm.

Figure 11.

Metric tool for accuracy verification: (a) standard ruler; (b) distance between the centers of each pair of spheres.

The distance errors between the measured and nominal values were computed based on the model in Equation (1). Table 7 presents the errors of the distances between the centers of the five spheres measured by the combined measurement system within 4 m, together with the mean value, standard deviation, and root-mean-square (RMS) error of these errors. These quantitative results verify the proposed method: the system achieves relatively high 3D measurement accuracy, with a spatial length measurement error of less than 0.03%.
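The summary statistics for the metric tool can be computed as in the sketch below. The nominal distances D1 to D4 are the CMM values given above, but the "measured" distances are invented placeholders for illustration, not the paper's data. The population standard deviation (ddof = 0) is an assumption, since the text does not state which estimator was used.

```python
import numpy as np

# CMM-calibrated nominal distances from the text (mm).
nominal = np.array([24.7581, 149.8429, 269.7165, 299.5461])
# Placeholder "measured" distances, for illustration only (not the paper's data).
measured = np.array([24.7601, 149.8501, 269.7249, 299.5390])


def length_statistics(measured: np.ndarray, nominal: np.ndarray):
    """Mean, standard deviation (ddof=0), RMS of the errors, and relative errors in percent."""
    errors = measured - nominal
    mean = errors.mean()
    std = errors.std(ddof=0)
    rms = np.sqrt(np.mean(errors ** 2))
    relative_pct = 100.0 * np.abs(errors) / nominal
    return mean, std, rms, relative_pct


mean, std, rms, rel = length_statistics(measured, nominal)
print(f"mean {mean:.4f} mm, std {std:.4f} mm, RMS {rms:.4f} mm, max rel {rel.max():.4f} %")
```

With the population standard deviation, the three statistics satisfy RMS² = mean² + std², which is a quick consistency check on a results table.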

Table 7.

Statistical results (unit: mm).

5. Conclusions

This paper has proposed a combined measurement method for high-accuracy large-scale 3D metrology, in which a 3D scanner and a laser tracker are combined to measure key local features with high accuracy, and an industrial robot serves as the orienting device. The measurement principle has been described in detail. To improve the overall measurement accuracy, an accurate calibration method based on COCP and COGP has been proposed to determine the relationships between the coordinate systems. First, COCP optimizes the coordinates of the common points. Then, COGP, based on an angular constraint, minimizes the measurement errors and improves the accuracy of the measured position and orientation of the 3D scanner. The calibration experiments show that the maximum and mean errors of the coordinate transformation are reduced from 0.037 and 0.022 mm to 0.021 and 0.0122 mm, respectively. A support measurement application demonstrates that the measurement process is simple and efficient. Finally, a metric tool was developed to verify the measurement accuracy of the proposed system. Future work will focus on improving the measurement accuracy over larger measurement ranges.

Author Contributions

All authors contributed to this work. Conceptualization, W.L., and Z.Z.; methodology, Z.Z.; software, Q.W. and Y.W.; validation, J.Z. and Y.Y.; writing—original draft preparation, Z.Z.; writing—review and editing, W.L. and B.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Science and Technology Major Project of China (No. 2018ZX04018001-005).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, F.; Brown, G.M.; Song, M. Overview of 3-D shape measurement using optical methods. Opt. Eng. 2000, 39, 10–22.

- Sun, B.; Zhu, J.; Yang, L.; Yang, S.; Guo, Y. Sensor for In-Motion Continuous 3D Shape Measurement Based on Dual Line-Scan Cameras. Sensors 2016, 16, 1949.

- Van Gestel, N.; Cuypers, S.; Bleys, P.; Kruth, J.P. A performance evaluation test for laser line scanners on CMMs. Opt. Lasers Eng. 2008, 47, 336–342.

- Bi, C.; Fang, J.; Li, K.; Guo, Z. Extrinsic calibration of a laser displacement sensor in a non-contact coordinate measuring machine. Chin. J. Aeronaut. 2017, 30, 1528–1537.

- Xie, Z.; Wang, X.; Chi, S. Simultaneous calibration of the intrinsic and extrinsic parameters of structured-light sensors. Opt. Lasers Eng. 2014, 58, 9–18.

- Reich, C. 3-D shape measurement of complex objects by combining photogrammetry and fringe projection. Opt. Eng. 2000, 39, 224.

- Mu, N.; Wang, K.; Xie, Z.; Ren, P. Calibration of a flexible measurement system based on industrial articulated robot and structured light sensor. Opt. Eng. 2017, 56, 054103.

- Ren, Y.J.; Yin, S.; Zhu, J. Calibration technology in application of robot-laser scanning system. Opt. Eng. 2012, 51, 114204.

- Wu, D.; Chen, T.; Li, A. A High Precision Approach to Calibrate a Structured Light Vision Sensor in a Robot-Based Three-Dimensional Measurement System. Sensors 2016, 16, 1388.

- Isheil, A.; Gonnet, J.P.; Joannic, D.; Fontaine, J.F. Systematic error correction of a 3D laser scanning measurement device. Opt. Lasers Eng. 2011, 49, 16–24.

- Yin, S.; Ren, Y.; Guo, Y.; Zhu, J.; Yang, S.; Ye, S. Development and calibration of an integrated 3D scanning system for high-accuracy large-scale metrology. Measurement 2014, 54, 65–76.

- Li, J.; Chen, M.; Jin, X.; Chen, Y.; Dai, Z.; Ou, Z.; Tang, Q. Calibration of a multiple axes 3-D laser scanning system consisting of robot, portable laser scanner and turntable. Optik 2011, 122, 324–329.

- Van der Jeught, S.; Dirckx, J.J. Real-time structured light profilometry: A review. Opt. Lasers Eng. 2016, 87, 18–31.

- Yin, X.; Zhao, H.; Zeng, J.; Qu, Y. Acoustic grating fringe projector for high-speed and high-precision three-dimensional shape measurements. Appl. Opt. 2007, 46, 3046.

- Jiang, C.; Lim, B.; Zhang, S. Three-dimensional shape measurement using a structured light system with dual projectors. Appl. Opt. 2018, 57, 3983.

- Huang, J.; Xue, Q.; Wang, Z.; Gao, J. Analysis and Compensation for Lateral Chromatic Aberration in a Color Coding Structured Light 3D Measurement System. Sensors 2016, 16, 1426.

- He, Y.; Cao, Y. A composite-structured-light 3D measurement method based on fringe parameter calibration. Opt. Lasers Eng. 2011, 49, 773–779.

- Atif, M.; Lee, S. FPGA Based Adaptive Rate and Manifold Pattern Projection for Structured Light 3D Camera System. Sensors 2018, 18, 1139.

- Cheng, X.; Liu, X.; Li, Z.; Zhong, K.; Han, L.; He, W.; Gan, W.; Xi, G.; Wang, C.; Shi, Y. High-Accuracy Globally Consistent Surface Reconstruction Using Fringe Projection Profilometry. Sensors 2019, 19, 668.

- Paul Kumar, U.; Somasundaram, U.; Kothiyal, M.P.; Krishna Mohan, N. Single frame digital fringe projection profilometry for 3-D surface shape measurement. Optik 2013, 124, 166–169.

- Wang, P.; Wang, J.; Xu, J.; Guan, Y.; Zhang, G.; Chen, K. Calibration method for a large-scale structured light measurement system. Appl. Opt. 2017, 56, 3995–4002.

- Yang, S.; Liu, M.; Song, J.; Yin, S.; Guo, Y.; Ren, Y.; Zhu, J. Flexible digital projector calibration method based on per-pixel distortion measurement and correction. Opt. Lasers Eng. 2017, 92, 29–38.

- Chen, Z.; Du, F. Measuring principle and uncertainty analysis of a large volume measurement network based on the combination of iGPS and portable scanner. Measurement 2017, 104, 263–277.

- Paoli, A.; Razionale, A.V. Large yacht hull measurement by integrating optical scanning with mechanical tracking-based methodologies. Robot. Comput. Integr. Manuf. 2012, 28, 592–601.

- Leica-Geosystems. Available online: https://www.exactmetrology.com/metrology-equipment/leica-geosystems/t-scan (accessed on 9 April 2020).

- Du, H.; Chen, X.; Xi, J.; Yu, C.; Zhao, B. Development and Verification of a Novel Robot-Integrated Fringe Projection 3D Scanning System for Large-Scale Metrology. Sensors 2017, 17, 2886.

- Wang, D.; Tang, W.; Zhang, X.; Qiang, M.; Wang, J.; Sun, H.; Shao, J. A universal precision positioning equipment in coordinate unified for large scale metrology. In Proceedings of the 2015 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 2–5 August 2015.

- Chang, Q.S. An Analysis of the Method of Rank-Deficiency Free Network Adjustment. Acta Geod. Cartogr. Sin. 1986, 4, 297–302.

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004; pp. 405–410.

- Hansen, P.C. Analysis of Discrete Ill-Posed Problems by Means of the L-Curve. SIAM Rev. 1992, 34, 561–580.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).